package com.njbdqn.mydataset

import org.apache.spark.sql.SparkSession

import org.apache.spark.sql.functions._

object MyThirdSpark1 {

  // Row types for the four CSV files; every column is read as a String.
  case class Customers(userid: String, fname: String, lname: String, tel: String, email: String, addr: String, city: String, state: String, zip: String)

  case class Orders(ordid: String, orddate: String, userid: String, ordstatus: String)

  case class OrderItems(id: String, ordid: String, proid: String, buynum: String, countPrice: String, price: String)

  case class Products(proid: String, protype: String, title: String, price: String, img: String)

  def main(args: Array[String]): Unit = {
    // Local session with two worker threads.
    val spark = SparkSession.builder().master("local[2]").appName("myshops").getOrCreate()

    // Load each CSV file as an RDD of raw text lines.
    val customers = spark.sparkContext.textFile("file:///d:/datas/dataset/customers.csv").cache()
    val orders = spark.sparkContext.textFile("file:///d:/datas/dataset/orders.csv")
    val orderItems = spark.sparkContext.textFile("file:///d:/datas/dataset/order_items.csv")
    val products = spark.sparkContext.textFile("file:///d:/datas/dataset/products.csv")

    // Brings toDS() and the case-class encoders into scope.
    import spark.implicits._

    // Strip the double quotes, split on commas, and map each line to a Customers row.
    val cus = customers.map(line => {
      val e = line.replace("\"", "").split(",")
      Customers(e(0), e(1), e(2), e(3), e(4), e(5), e(6), e(7), e(8))
    }).toDS()

    // Same parsing for orders.
    val ords = orders.map(line => {
      val e = line.replace("\"", "").split(",")
      Orders(e(0), e(1), e(2), e(3))
    }).toDS()

    // Same parsing for order items.
    val orditms = orderItems.map(line => {
      val e = line.replace("\"", "").split(",")
      OrderItems(e(0), e(1), e(2), e(3), e(4), e(5))
    }).toDS()

    // For products, ",," is collapsed into "," before splitting, which drops an empty column.
    val pro = products.map(line => {
      val e = line.replace("\"", "").replace(",,", ",").split(",")
      Products(e(0), e(1), e(2), e(3), e(4))
    }).toDS()

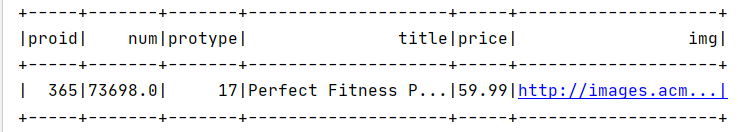

pro.show()

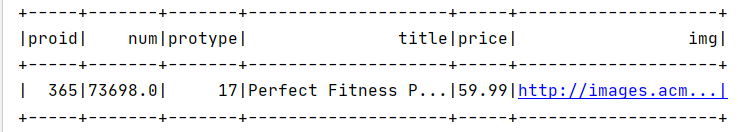

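    // Best-selling product: total the quantity bought per product,
    // keep the top one, then join in its product details.
    orditms
      .groupBy("proid")
      // buynum is stored as a String, so cast it explicitly before summing.
      .agg(sum(col("buynum").cast("int")).alias("num"))
      .orderBy(desc("num"))
      .limit(1)
      .join(pro, "proid")
      .show()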

}

}
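
A side note on the parsing step: `split(",")` silently drops trailing empty strings, so a line whose last columns are empty yields fewer elements than the case class expects, and `e(n)` then throws an `ArrayIndexOutOfBoundsException`. The following is only a minimal sketch of a more defensive line parser in plain Scala. The object `CsvLineSketch`, the helper `parseLine`, and the sample lines are hypothetical and not part of the program above. It still assumes that no field contains a comma inside its quotes.

```scala
object CsvLineSketch {

  // Hypothetical helper: strip the quotes, split on commas, and return the
  // fields only when the line has exactly the expected number of columns.
  def parseLine(line: String, expectedFields: Int): Option[Array[String]] = {
    // A limit of -1 tells split to keep trailing empty strings.
    val fields = line.replace("\"", "").split(",", -1)
    if (fields.length == expectedFields) Some(fields) else None
  }

  def main(args: Array[String]): Unit = {
    // Made-up sample lines, used only to illustrate the behaviour.
    val complete = "\"1\",\"2\",\"Widget\",\"9.99\",\"\""
    val tooShort = "\"1\",\"2\""

    // The default split loses the empty last column; the -1 limit keeps it.
    println(complete.replace("\"", "").split(",").length)     // 4
    println(complete.replace("\"", "").split(",", -1).length) // 5

    println(parseLine(complete, 5).map(_.mkString("|")))
    println(parseLine(tooShort, 5)) // None: malformed line is rejected
  }
}
```

In the Spark job, a helper like this could be applied with `flatMap` instead of `map`, so that malformed lines are skipped rather than failing the whole job. Whether skipping is the right policy depends on the data.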

Query result of the Spark job above: