lightGBM实战

文章目录

-

- 一、使用LGBMClassifier对iris进行训练

-

- 1.1 使用lgb.LGBMClassifier

-

- 1.1.2使用pickle进行保存模型,然后加载预测

- 1.1.3 使用txt和json保存模型并加载

- 1.2使用原生的API进行模型训练和预测

-

- 1.2.2 使用txt/json格式保存模型

- 1.2.3 使用pickle进行保存模型

- 三、任务3 分类、回归和排序任务

-

- 3.1使用 make_classification生成二分类数据进行训练

-

- 3.1.1 sklearn接口

- 3.1.2 原生train接口

- 3.2使用 make_classification生成多分类数据进行训练

-

- 3.2.1 sklearn接口

- 3.2.2 原生train接口

- 3.3使用 make_regression生成回归数据

-

- 3.3.1 sklearn接口

- 3.3.2 原生train接口

- 四、graphviz可视化

- 五、模型调参(网格、随机、贝叶斯)

-

- 5.1 加载数据集

- 5.2:步骤2 设置树模型深度分别为[3,5,6,9],记录下验证集AUC精度。

- 5.3 步骤3:category变量设置为categorical_feature

- 5.4 步骤4:超参搜索

-

- 5.4.1 GridSearchCV

- 5.4.3 随机搜索

- 5.4.4 贝叶斯搜索

- 六、模型微调与参数衰减

-

- 6.2 学习率衰减

- 七、特征筛选方法

-

- 7.1 筛选最重要的3个特征

- 7.2 利用PermutationImportance排列特征重要性

- 7.3 Null Importances进行特征选择

-

- 7.3.1 主要思想:

- 7.3.2实现步骤

- 7.3.3 读取数据集,计算Real Targe和shuffle Target下的特征重要度

- 7.3.4计算Score

- 八、自定义损失函数和评测函数

- 九 模型部署与加速

- 九 模型部署与加速

LightGBM有两种接口:

- sklearn接口接口文档

- 原生train接口文档

- Python API(包括Scikit-learn API)

- 阿水知乎贴:《你应该知道的LightGBM各种操作》

- 《Coggle 30 Days of ML(22年1&2月)》

学习内容:

LightGBM(Light Gradient Boosting Machine)是微软开源的一个实现 GBDT 算法的框架,支持高效率的并行训练。LightGBM 提出的主要原因是为了解决 GBDT 在海量数据遇到的问题。本次学习内容包括使用LightGBM完成各种操作,包括竞赛和数据挖掘中的模型训练、验证和调参过程。

打卡汇总:

| 任务名称 | 难度、分数 | 所需技能 |

|---|---|---|

| 任务1模型训练与预测 | 低、1 | LightGBM |

| 任务2:模型保存与加载 | 低、1 | LightGBM |

| 任务3:分类、回归和排序任务 | 高、3 | LightGBM |

| 任务4:模型可视化 | 低、1 | graphviz |

| 任务5:模型调参(网格、随机、贝叶斯) | 中、2 | 模型调参 |

| 任务6:模型微调与参数衰减 | 中、2 | LightGBM |

| 任务7:特征筛选方法 | 高、3 | 特征筛选方法 |

| 任务8:自定义损失函数 | 中、2 | 损失函数&评价函数 |

| 任务9:模型部署与加速 | 高、3 | Treelite |

一、使用LGBMClassifier对iris进行训练

from sklearn.datasets import load_iris

from matplotlib import pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

import lightgbm as lgb

from lightgbm import LGBMClassifier

import numpy as np

import pandas as pd

from sklearn import metrics

import warnings

warnings.filterwarnings("ignore")

iris = load_iris()

X,y = iris.data,iris.target

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=2022) # 数据集分割

1.1 使用lgb.LGBMClassifier

gbm = lgb.LGBMClassifier(max_depth=10,

learning_rate=0.01,

n_estimators=2000,#提升迭代次数

objective='multi:softmax',#默认regression,用于设置损失函数

num_class=3 ,

nthread=-1,#LightGBM 的线程数

min_child_weight=1,

max_delta_step=0,

subsample=0.85,

colsample_bytree=0.7,

reg_alpha=0,#L1正则化系数

reg_lambda=1,#L2正则化系数

scale_pos_weight=1,

seed=0,

missing=None)

gbm.fit(X_train, y_train)

y_pred = gbm.predict(X_test)

# 计算准确率

accuracy = accuracy_score(y_test,y_pred)

print("accuarcy: %.2f%%" % (accuracy*100.0))

[LightGBM] [Warning] num_threads is set with n_jobs=-1, nthread=-1 will be ignored. Current value: num_threads=-1

accuarcy: 93.33%

1.1.2使用pickle进行保存模型,然后加载预测

import pickle

with open('model.pkl', 'wb') as fout:

pickle.dump(gbm, fout)

# load model with pickle to predict

with open('model.pkl', 'rb') as fin:

pkl_bst = pickle.load(fin)

# can predict with any iteration when loaded in pickle way

y_pred = pkl_bst.predict(X_test)

accuracy = accuracy_score(y_test,y_pred)

print("accuarcy: %.2f%%" % (accuracy*100.0))

accuarcy: 93.33%

1.1.3 使用txt和json保存模型并加载

# txt格式

gbm.booster_.save_model("skmodel.txt")

clf_loads = lgb.Booster(model_file='skmodel.txt')

y_pred = clf_loads.predict(X_test)

y_pred=np.argmax(y_pred,axis=-1)

# 计算准确率

accuracy = accuracy_score(y_test,y_pred)

print("accuarcy: %.2f%%" % (accuracy*100.0))

accuarcy: 93.33%

#json格式

gbm.booster_.save_model("skmodel.json")

clf_loads = lgb.Booster(model_file='skmodel.json')

y_pred = clf_loads.predict(X_test)

y_pred=np.argmax(y_pred,axis=-1)

# 计算准确率

accuracy = accuracy_score(y_test,y_pred)

print("accuarcy: %.2f%%" % (accuracy*100.0))

accuarcy: 93.33%

1.2使用原生的API进行模型训练和预测

import numpy as np

lgb_train = lgb.Dataset(X_train, y_train)

#reference:如果这是用于验证的数据集,则应使用训练数据作为参考

#weight : list每个实例的权重

#free_raw_data:default=True,构建内部 Dataset 后释放原始数据,节省内存。

#silent:布尔类型,default=False。是否在构建过程中打印消息。

#init_score:数据集初始分数

#feature_name:设为 'auto' 时,如果 data 是 pandas DataFrame,则使用数据列名称。

lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train)

#设置参数

#多分类的objective为multiclass或者别名softmax

params = {

'boosting_type': 'gbdt',

'objective': 'softmax',

'num_class': 3,

'max_depth': 6,

'lambda_l2': 1,

'subsample': 0.85,

'colsample_bytree': 0.7,

'min_child_weight': 1,

'learning_rate':0.01,

"verbosity":-1}

gbm = lgb.train(params,

lgb_train,

num_boost_round=10,

valid_sets=lgb_eval,

callbacks=[lgb.early_stopping(stopping_rounds=5)])

y_pred = gbm.predict(X_test,num_iteration=gbm.best_iteration)

y_pred=np.argmax(y_pred,axis=-1)

# 计算准确率

accuracy = accuracy_score(y_test,y_pred)

print("accuarcy: %.2f%%" % (accuracy*100.0))

Training until validation scores don't improve for 5 rounds

Did not meet early stopping. Best iteration is:

[10] valid_0's multi_logloss: 0.96537

accuarcy: 93.33%

1.2.2 使用txt/json格式保存模型

#使用txt保存模型

gbm.save_model('model.txt')

bst = lgb.Booster(model_file='model.txt')

y_pred = bst.predict(X_test, num_iteration=gbm.best_iteration)

y_pred=np.argmax(y_pred,axis=-1)

# 计算准确率

accuracy = accuracy_score(y_test,y_pred)

print("accuarcy: %.2f%%" % (accuracy*100.0))

#使用json格式保存模型

gbm.save_model('model.json')

bst = lgb.Booster(model_file='model.json')

y_pred = bst.predict(X_test, num_iteration=gbm.best_iteration)

y_pred=np.argmax(y_pred,axis=-1)

# 计算准确率

accuracy = accuracy_score(y_test,y_pred)

print("accuarcy: %.2f%%" % (accuracy*100.0))

accuarcy: 93.33%

accuarcy: 93.33%

1.2.3 使用pickle进行保存模型

import pickle

with open('model.pkl', 'wb') as fout:

pickle.dump(gbm, fout)

# load model with pickle to predict

with open('model.pkl', 'rb') as fin:

pkl_bst = pickle.load(fin)

# can predict with any iteration when loaded in pickle way

y_pred = pkl_bst.predict(X_test)

y_pred=np.argmax(y_pred,axis=-1)

accuracy = accuracy_score(y_test,y_pred)

print("accuarcy: %.2f%%" % (accuracy*100.0))

accuarcy: 93.33%

三、任务3 分类、回归和排序任务

3.1使用 make_classification生成二分类数据进行训练

from sklearn.datasets import make_classification

import numpy as np

import pandas as pd

from scipy import stats

import matplotlib.pyplot as plt

#n_samples:样本数量,默认100

#n_features:特征数,默认20

#n_informative:有效特征数量,默认2

#n_redundant:冗余特征,默认2

#n_repeated :重复的特征个数,默认0

#n_clusters_per_class:每个类别中cluster数量,默认2

#weight:各个类的占比

#n_classes* n_clusters_per_class 必须≤ 2**有效特征数

data, target = make_classification(n_samples=1000,n_features=3,n_informative=3,n_redundant=0,n_classes=2)

df = pd.DataFrame(data)

df['target'] = target

df1 = df[df['target']==0]

df2 = df[df['target']==1]

df1.index = range(len(df1))

df2.index = range(len(df2))

# 画出数据集的数据分布

plt.figure(figsize=(3,3))

plt.scatter(df1[0],df1[1],color='red')

plt.scatter(df2[0],df2[1],color='green')

plt.figure(figsize=(6,2))

df1[0].hist()

df1[0].plot(kind = 'kde', secondary_y=True)

mean_ = df1[0].mean()

std_ = df1[0].std()

stats.kstest(df1[0], 'norm', (mean_, std_))

KstestResult(statistic=0.03723785150172143, pvalue=0.4930944895472954)

[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-3jpNhDmW-1642190201168)(lightGBM_files/lightGBM_17_1.png)]

[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-QBs17PgZ-1642190201170)(lightGBM_files/lightGBM_17_2.png)]

3.1.1 sklearn接口

sklearn接口lgb分类器参考文档

注意:每次产生的数据都不一样,所以

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

import lightgbm as lgb

from lightgbm import LGBMClassifier

X,y = data,target

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=2022) # 数据集分割

gbm = lgb.LGBMClassifier()

gbm.fit(X_train, y_train,

eval_set=[(X_test, y_test)],

eval_metric='binary_logloss',

callbacks=[lgb.early_stopping(5)])

#eval_metric默认值:LGBMRegressor 为“l2”,LGBMClassifier 为“logloss”,LGBMRanker 为“ndcg”。

#使用binary_logloss或者logloss准确率都是一样的。默认logloss

y_pred = gbm.predict(X_test)

# 计算准确率

accuracy = accuracy_score(y_test,y_pred)

print("accuarcy: %.2f%%" % (accuracy*100.0))

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[39] valid_0's binary_logloss: 0.255538

accuarcy: 88.50%

3.1.2 原生train接口

import numpy as np

lgb_train = lgb.Dataset(X_train, y_train)

lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train)

#设置参数

params = {

'boosting_type': 'gbdt',

'objective': 'binary',

'max_depth': 10,

'metric': 'binary_logloss',

"verbosity":-1}

gbm2 = lgb.train(params,

lgb_train,

num_boost_round=10,

valid_sets=lgb_eval,

callbacks=[lgb.early_stopping(stopping_rounds=5)])

y_pred = gbm2.predict(X_test,num_iteration=gbm2.best_iteration)#结果是0-1之间的概率值,是一维数组

y_pred =[1 if x >0.5 else 0 for x in y_pred]

accuracy = accuracy_score(y_test,y_pred)

print("accuarcy: %.2f%%" % (accuracy*100.0))

Training until validation scores don't improve for 5 rounds

Did not meet early stopping. Best iteration is:

[10] valid_0's binary_logloss: 0.371903

accuarcy: 88.00%

3.2使用 make_classification生成多分类数据进行训练

3.2.1 sklearn接口

from sklearn.datasets import make_classification

import numpy as np

import pandas as pd

from scipy import stats

import matplotlib.pyplot as plt

data, target = make_classification(n_samples=1000,n_features=3,n_informative=3,n_redundant=0,n_classes=4)

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

import lightgbm as lgb

from lightgbm import LGBMClassifier

X,y = data,target

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=2022) # 数据集分割

gbm = lgb.LGBMClassifier()

gbm.fit(X_train, y_train,

eval_set=[(X_test, y_test)],

eval_metric='logloss',

callbacks=[lgb.early_stopping(5)])

#eval_metric默认值:LGBMRegressor 为“l2”,LGBMClassifier 为“logloss”,LGBMRanker 为“ndcg”。

#使用binary_logloss或者logloss准确率都是一样的。默认logloss

y_pred = gbm.predict(X_test)

# 计算准确率

accuracy = accuracy_score(y_test,y_pred)

print("accuarcy: %.2f%%" % (accuracy*100.0))

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[39] valid_0's binary_logloss: 0.255538

accuarcy: 88.50%

3.2.2 原生train接口

import numpy as np

lgb_train = lgb.Dataset(X_train, y_train)

lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train)

#设置参数

params = {

'boosting_type': 'gbdt',

'objective': 'softmax',

'num_class': 4,

'max_depth': 10,

'metric': 'softmax',

"verbosity":-1}

gbm2 = lgb.train(params,

lgb_train,

num_boost_round=10,

valid_sets=lgb_eval,

callbacks=[lgb.early_stopping(stopping_rounds=5)])

y_pred = gbm2.predict(X_test,num_iteration=gbm2.best_iteration)#结果是0-1之间的概率值,是一维数组

y_pred=np.argmax(y_pred,axis=-1)

accuracy = accuracy_score(y_test,y_pred)

print("accuarcy: %.2f%%" % (accuracy*100.0))

Training until validation scores don't improve for 5 rounds

Did not meet early stopping. Best iteration is:

[10] valid_0's multi_logloss: 0.322024

accuarcy: 87.50%

3.3使用 make_regression生成回归数据

3.3.1 sklearn接口

make_regression(n_samples=100, n_features=100, n_informative=10, n_targets=1, bias=0.0,

effective_rank=None, tail_strength=0.5, noise=0.0, shuffle=True, coef=False, random_state=None)

- n_samples:样本数

- n_features:特征数(自变量个数)

- n_informative:参与建模特征数

- n_targets:因变量个数

- noise:噪音

- bias:偏差(截距)

- coef:是否输出coef标识

- random_state:随机状态若为固定值则每次产生的数据都一样

from sklearn.datasets import make_regression

import numpy as np

import pandas as pd

from scipy import stats

import matplotlib.pyplot as plt

data, target = make_regression(n_samples=1000, n_features=5,n_targets=1,noise=1.5,random_state=1)

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error

import lightgbm as lgb

from lightgbm import LGBMClassifier

X,y = data,target

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=2022) # 数据集分割

gbm = lgb.LGBMRegressor()#直接使用默认参数,mse较小。

gbm.fit(X_train, y_train,

eval_set=[(X_test, y_test)],

eval_metric='l1',

callbacks=[lgb.early_stopping(5)])

#eval_metric默认值:LGBMRegressor 为“l2”,LGBMClassifier 为“logloss”,LGBMRanker 为“ndcg”。

#使用binary_logloss或者logloss准确率都是一样的。默认logloss

y_pred = gbm.predict(X_test)

# 计算准确率

mse= mean_squared_error(y_test,y_pred)

print("mse: %.2f" % (mse))

Training until validation scores don't improve for 5 rounds

Did not meet early stopping. Best iteration is:

[99] valid_0's l1: 8.13036 valid_0's l2: 119.246

mse: 119.25

3.3.2 原生train接口

lgb_train = lgb.Dataset(X_train, y_train)

lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train)

#设置参数为lgb.LGBMRegressor的默认参数。

params = {

'boosting_type': 'gbdt',

'objective': 'regression',

"num_leaves":31,

"learning_rate": 0.1,

"n_estimators": 100,

"min_child_samples": 20,

"verbosity":-1}

gbm2 = lgb.train(params,

lgb_train,

num_boost_round=5,

valid_sets=lgb_eval,

callbacks=[lgb.early_stopping(stopping_rounds=5)])

y_pred = gbm2.predict(X_test,num_iteration=gbm2.best_iteration)#结果是0-1之间的概率值,是一维数组

mse= mean_squared_error(y_test,y_pred)

print("mse: %.2f" % (mse))

Training until validation scores don't improve for 5 rounds

Did not meet early stopping. Best iteration is:

[99] valid_0's l2: 119.246

mse: 119.25

四、graphviz可视化

参考文档:《lightgbm 决策树 可视化 graphviz》、 graphviz参考文档 、《xgboost 可视化API文档》、《lightgbm可视化API》

from sklearn.datasets import load_iris

from matplotlib import pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

import lightgbm as lgb

from lightgbm import LGBMClassifier

import graphviz

iris = load_iris()

X,y = iris.data,iris.target

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=2022) # 数据集分割

lgb_clf = lgb.LGBMClassifier()

lgb_clf.fit(X_train, y_train)

lgb.create_tree_digraph(lgb_clf, tree_index=1)

#设置参数

#多分类的objective为multiclass或者别名softmax

[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-1QMxh4Kb-1642190201171)(lightGBM_files/lightGBM_33_0.svg)]

#lgb没有to_graphviz,无法这样保存图片通

digraph = lgb.to_graphviz(lgb_clf , num_trees=1)#报错module 'lightgbm' has no attribute 'to_graphviz'

digraph.format = 'png'

digraph.view('./iris_lgb')

---------------------------------------------------------------------------

AttributeError Traceback (most recent call last)

in

1 #lgb没有to_graphviz,无法这样保存图片通

----> 2 digraph = lgb.to_graphviz(lgb_clf , num_trees=1)#报错module 'lightgbm' has no attribute 'to_graphviz'

3 digraph.format = 'png'

4 digraph.view('./iris_lgb')

AttributeError: module 'lightgbm' has no attribute 'to_graphviz'

import xgboost as xgb

from sklearn.datasets import load_iris

iris = load_iris()

xgb_clf = xgb.XGBClassifier()

xgb_clf.fit(iris.data, iris.target)

xgb.to_graphviz(xgb_clf, num_trees=1)

[02:55:18] WARNING: C:/Users/Administrator/workspace/xgboost-win64_release_1.5.1/src/learner.cc:1115: Starting in XGBoost 1.3.0, the default evaluation metric used with the objective 'multi:softprob' was changed from 'merror' to 'mlogloss'. Explicitly set eval_metric if you'd like to restore the old behavior.

[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-CK4h5BDz-1642190201171)(lightGBM_files/lightGBM_35_1.svg)]

#通过Digraph对象可以将保存文件并查看

digraph = xgb.to_graphviz(xgb_clf, num_trees=1)

digraph.format = 'png'#将图像保存为png图片

digraph.view('./iris_xgb')

'iris_xgb.png'

### 步骤3:读取任务2的json格式模型文件

bst = lgb.Booster(model_file='model.json')

lgb.create_tree_digraph(bst, tree_index=1)

[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-rAEbwKaU-1642190201172)(lightGBM_files/lightGBM_37_0.svg)]

五、模型调参(网格、随机、贝叶斯)

5.1 加载数据集

import pandas as pd, numpy as np, time

data= pd.read_csv("https://cdn.coggle.club/kaggle-flight-delays/flights_10k.csv.zip")

# 提取有用的列

data= data[["MONTH","DAY","DAY_OF_WEEK","AIRLINE","FLIGHT_NUMBER","DESTINATION_AIRPORT",

"ORIGIN_AIRPORT","AIR_TIME", "DEPARTURE_TIME","DISTANCE","ARRIVAL_DELAY"]]

data.dropna(inplace=True)

from sklearn.model_selection import train_test_split

# 筛选出部分数据

data["ARRIVAL_DELAY"] = (data["ARRIVAL_DELAY"]>10)*1

# 以下四列数据转换为类别

cols = ["AIRLINE","FLIGHT_NUMBER","DESTINATION_AIRPORT","ORIGIN_AIRPORT"]

for item in cols:

data[item] = data[item].astype("category").cat.codes +1

# 划分训练集和测试集

train, test, y_train, y_test = train_test_split(data.drop(["ARRIVAL_DELAY"], axis=1), data["ARRIVAL_DELAY"], random_state=10, test_size=0.25)

data

| MONTH | DAY | DAY_OF_WEEK | AIRLINE | FLIGHT_NUMBER | DESTINATION_AIRPORT | ORIGIN_AIRPORT | AIR_TIME | DEPARTURE_TIME | DISTANCE | ARRIVAL_DELAY | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 1 | 4 | 2 | 88 | 253 | 13 | 169.0 | 2354.0 | 1448 | 0 |

| 1 | 1 | 1 | 4 | 1 | 2120 | 213 | 164 | 263.0 | 2.0 | 2330 | 0 |

| 2 | 1 | 1 | 4 | 12 | 803 | 60 | 262 | 266.0 | 18.0 | 2296 | 0 |

| 3 | 1 | 1 | 4 | 1 | 238 | 185 | 164 | 258.0 | 15.0 | 2342 | 0 |

| 4 | 1 | 1 | 4 | 2 | 122 | 14 | 261 | 199.0 | 24.0 | 1448 | 0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 9994 | 1 | 1 | 4 | 8 | 2399 | 44 | 215 | 62.0 | 1710.0 | 473 | 0 |

| 9995 | 1 | 1 | 4 | 7 | 149 | 128 | 210 | 28.0 | 1716.0 | 100 | 1 |

| 9996 | 1 | 1 | 4 | 8 | 2510 | 208 | 76 | 29.0 | 1653.0 | 147 | 0 |

| 9997 | 1 | 1 | 4 | 8 | 2512 | 62 | 215 | 28.0 | 1721.0 | 135 | 1 |

| 9998 | 1 | 1 | 4 | 8 | 2541 | 208 | 182 | 103.0 | 2000.0 | 594 | 1 |

9592 rows × 11 columns

from sklearn.datasets import load_iris

from matplotlib import pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

import lightgbm as lgb

from lightgbm import LGBMClassifier

5.2:步骤2 设置树模型深度分别为[3,5,6,9],记录下验证集AUC精度。

- predict:lgb.LGBMClassifier()等sklearn接口中是返回预测的类别

- predict_proba:klearn接口中是返回预测的概率。重点是求auc时,我们必须用predict_proba。因为roc曲线的阀值是根据其正样本的概率求的。

参考《数据挖掘竞赛lightgbm通过求最大auc调参》

#sklearn接口

def test_depth(max_depth):

gbm = lgb.LGBMClassifier(max_depth=max_depth)

gbm.fit(train, y_train,

eval_set=[(test, y_test)],

eval_metric='binary_logloss',

callbacks=[lgb.early_stopping(5)])

#eval_metric默认值:LGBMRegressor 为“l2”,LGBMClassifier 为“logloss”,LGBMRanker 为“ndcg”。

#使用binary_logloss或者logloss准确率都是一样的。默认logloss

y_pred = gbm.predict(test)

# 计算准确率

accuracy = accuracy_score(y_test,y_pred)

auc_score=metrics.roc_auc_score(y_test,gbm.predict_proba(test)[:,1])#predict_proba输出正负样本概率值,取第二列为正样本概率值

print("max_depth=",max_depth,"accuarcy: %.2f%%" % (accuracy*100.0),"auc_score: %.2f%%" % (auc_score*100.0))

test_depth(3)

test_depth(5)

test_depth(6)

test_depth(9)

Training until validation scores don't improve for 5 rounds

Did not meet early stopping. Best iteration is:

[98] valid_0's binary_logloss: 0.429334

max_depth= 3 accuarcy: 81.90% auc_score: 76.32%

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[60] valid_0's binary_logloss: 0.430826

max_depth= 5 accuarcy: 81.98% auc_score: 75.54%

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[65] valid_0's binary_logloss: 0.429341

max_depth= 6 accuarcy: 81.69% auc_score: 75.63%

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[52] valid_0's binary_logloss: 0.429146

max_depth= 9 accuarcy: 81.94% auc_score: 76.07%

#原生train接口

import numpy as np

from sklearn import metrics

lgb_train = lgb.Dataset(train, y_train)

lgb_eval = lgb.Dataset(test, y_test, reference=lgb_train)

#设置参数

def test_depth(max_depth):

params = {

'boosting_type': 'gbdt',

'objective': 'binary',

'max_depth': 10,

'metric': 'binary_logloss',

"learning_rate":0.1,

"min_child_samples":20,

"num_leaves":31,

"max_depth":max_depth}

gbm2 = lgb.train(params,

lgb_train,

num_boost_round=10,

valid_sets=lgb_eval,

callbacks=[lgb.early_stopping(stopping_rounds=5)])

y_pred = gbm2.predict(test,num_iteration=gbm2.best_iteration)#结果是0-1之间的概率值,是一维数组

pred =[1 if x >0.5 else 0 for x in y_pred]

accuracy = accuracy_score(y_test,pred)

auc_score=metrics.roc_auc_score(y_test,y_pred)

print("max_depth=",max_depth,"accuarcy: %.2f%%" % (accuracy*100.0),"auc_score: %.2f%%" % (auc_score*100.0))

test_depth(3)

print('-------------------------------------------------------------------------')

test_depth(5)

print('-------------------------------------------------------------------------')

test_depth(6)

print('-------------------------------------------------------------------------')

test_depth(9)

5.3 步骤3:category变量设置为categorical_feature

参考《Lightgbm如何处理类别特征?》

参考kaggle教程《Feature Selection with Null Importances》中的代码。

lightGBM比XGBoost的1个改进之处在于对类别特征的处理, 不再需要将类别特征转为one-hot形式。这一步通过设置categorical_feature来实现。

唯一疑惑的是真正的object特征只有’AIRLINE’, ‘DESTINATION_AIRPORT’, ‘ORIGIN_AIRPORT’,但是’FLIGHT_NUMBER’也设置成类别特征效果更好。

- 'FLIGHT_NUMBER’也为类别特征:accuarcy: 81.82% auc_score: 77.52%

- 'FLIGHT_NUMBER’不是类别特征:accuarcy: 81.69% auc_score: 76.48%

估计跟数据集有关系,没有仔细研究数据集。

import pandas as pd, numpy as np, time

data= pd.read_csv("https://cdn.coggle.club/kaggle-flight-delays/flights_10k.csv.zip")

# 提取有用的列

data= data[["MONTH","DAY","DAY_OF_WEEK","AIRLINE","FLIGHT_NUMBER","DESTINATION_AIRPORT",

"ORIGIN_AIRPORT","AIR_TIME", "DEPARTURE_TIME","DISTANCE","ARRIVAL_DELAY"]]

data.dropna(inplace=True)

from sklearn.model_selection import train_test_split

# 筛选出部分数据

data["ARRIVAL_DELAY"] = (data["ARRIVAL_DELAY"]>10)*1

categorical_feats = ["AIRLINE","FLIGHT_NUMBER","DESTINATION_AIRPORT","ORIGIN_AIRPORT"]

#categorical_feats = [f for f in data.columns if data[f].dtype == 'object']

#将上面四列特征转为类别特征,但不是one-hot编码

for f_ in categorical_feats:

data[f_], _ = pd.factorize(data[f_])

# Set feature type as categorical

data[f_] = data[f_].astype('category')

# 划分训练集和测试集

X_train, X_test, y_train, y_test = train_test_split(data.drop(["ARRIVAL_DELAY"], axis=1), data["ARRIVAL_DELAY"], random_state=10, test_size=0.25)

categorical_feats

['AIRLINE', 'FLIGHT_NUMBER', 'DESTINATION_AIRPORT', 'ORIGIN_AIRPORT']

import numpy as np

from sklearn import metrics

lgb_train = lgb.Dataset(X_train, y_train,free_raw_data=False)

lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train,free_raw_data=False)

params = {

'boosting_type': 'gbdt',

'objective': 'binary',

'metric': 'binary_logloss',

'num_leaves': 16,

'learning_rate': 0.3,

'feature_fraction': 0.5,

'lambda_l1': 0.0,

'lambda_l2': 2.9,

'max_depth': 15,

'min_data_in_leaf': 12,

'min_gain_to_split': 1.0,

'min_sum_hessian_in_leaf': 0.0038,

"verbosity":-1}

# 特征命名

#num_train, num_feature = X_train.shape#X_train是7194行10列的数据集,num_feature=10表示特征数量

#feature_name = ['feature_' + str(col) for col in range(num_feature)]#feature_0到9

gbm = lgb.train(params,

lgb_train,

num_boost_round=10,

valid_sets=lgb_eval, #验证集设置

#feature_name=feature_name, #特征命名

categorical_feature=categorical_feats,

callbacks=[lgb.early_stopping(stopping_rounds=5)]) #设置分类变量

y_pred = gbm.predict(X_test,num_iteration=gbm.best_iteration)#结果是0-1之间的概率值,是一维数组

pred =[1 if x >0.5 else 0 for x in y_pred]

accuracy = accuracy_score(y_test,pred)

auc_score=metrics.roc_auc_score(y_test,y_pred)

print("accuarcy: %.2f%%" % (accuracy*100.0),"auc_score: %.2f%%" % (auc_score*100.0))

Training until validation scores don't improve for 5 rounds

Did not meet early stopping. Best iteration is:

[10] valid_0's binary_logloss: 0.424384

accuarcy: 81.82% auc_score: 77.52%

#不设置categorical_feature结果一样啊,不知道为何?

# 进行one-hot编码

cols = ["AIRLINE","FLIGHT_NUMBER","DESTINATION_AIRPORT","ORIGIN_AIRPORT"]

for item in cols:

data[item] = data[item].astype("category").cat.codes +1

# 划分训练集和测试集

train, test, y_train, y_test = train_test_split(data.drop(["ARRIVAL_DELAY"], axis=1), data["ARRIVAL_DELAY"], random_state=10, test_size=0.25)

gbm2 = lgb.train(params,

lgb_train,

num_boost_round=10,

valid_sets=lgb_eval,

callbacks=[lgb.early_stopping(stopping_rounds=5)])

y_pred2 = gbm2.predict(X_test,num_iteration=gbm2.best_iteration)#结果是0-1之间的概率值,是一维数组

pred2 =[1 if x >0.5 else 0 for x in y_pred2]

accuracy2 = accuracy_score(y_test,pred2)

auc_score2=metrics.roc_auc_score(y_test,y_pred2)

print("accuarcy: %.2f%%" % (accuracy2*100.0),"auc_score: %.2f%%" % (auc_score2*100.0))

Training until validation scores don't improve for 5 rounds

Did not meet early stopping. Best iteration is:

[10] valid_0's binary_logloss: 0.424384

accuarcy: 81.82% auc_score: 77.52%

5.4 步骤4:超参搜索

5.4.1 GridSearchCV

GridSearchCV参考文档

sklearn.model_selection.GridSearchCV(estimator, param_grid, *, scoring=None, n_jobs=None, refit=True, cv=None, verbose=0, pre_dispatch='2*n_jobs', error_score=nan, return_train_score=False)

其中 scoring是字符串格式或者str列表、字典。具体的参数列表参考文档scoring-parameter

from sklearn.datasets import load_iris

from matplotlib import pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

import lightgbm as lgb

from lightgbm import LGBMClassifier

import numpy as np

from sklearn import metrics

from sklearn.model_selection import GridSearchCV

import pandas as pd, numpy as np, time

# 读取数据

data = pd.read_csv("https://cdn.coggle.club/kaggle-flight-delays/flights_10k.csv.zip")

# 提取有用的列

data = data[["MONTH","DAY","DAY_OF_WEEK","AIRLINE","FLIGHT_NUMBER","DESTINATION_AIRPORT",

"ORIGIN_AIRPORT","AIR_TIME", "DEPARTURE_TIME","DISTANCE","ARRIVAL_DELAY"]]

data.dropna(inplace=True)

# 筛选出部分数据

data["ARRIVAL_DELAY"] = (data["ARRIVAL_DELAY"]>10)*1

# 进行编码

cols = ["AIRLINE","FLIGHT_NUMBER","DESTINATION_AIRPORT","ORIGIN_AIRPORT"]

for item in cols:

data[item] = data[item].astype("category").cat.codes +1

# 划分训练集和测试集

X_train, X_test, y_train, y_test = train_test_split(data.drop(["ARRIVAL_DELAY"], axis=1), data["ARRIVAL_DELAY"], random_state=10, test_size=0.25)

parameters = {

'max_depth': [8, 10, 12],

'learning_rate': [0.05, 0.1, 0.15],

'n_estimators': [100, 200,500],

"num_leaves":[25,31,36]}

gbm = lgb.LGBMClassifier(max_depth=10,#构建树的深度,越大越容易过拟合

learning_rate=0.01,

n_estimators=100,

seed=0,

missing=None)

gs = GridSearchCV(gbm, param_grid=parameters, scoring='accuracy', cv=3)

gs.fit(X_train, y_train)

print("Best score: %0.3f" % gs.best_score_)

print("Best parameters set: %s" % gs.best_params_ )

Best score: 0.805

Best parameters set: {'learning_rate': 0.05, 'max_depth': 8, 'n_estimators': 100, 'num_leaves': 36}

#使用最优参数预测验证集

y_pred = gs.predict(X_test)

# 计算准确率和auc

accuracy = accuracy_score(y_test,y_pred)

auc_score=metrics.roc_auc_score(y_test,gs.predict_proba(X_test)[:,1])#predict_proba输出正负样本概率值,取第二列为正样本概率值

print("accuarcy: %.2f%%" % (accuracy*100.0),"auc_score: %.2f%%" % (auc_score*100.0))

accuarcy: 82.07% auc_score: 75.92%

5.4.3 随机搜索

参考文档

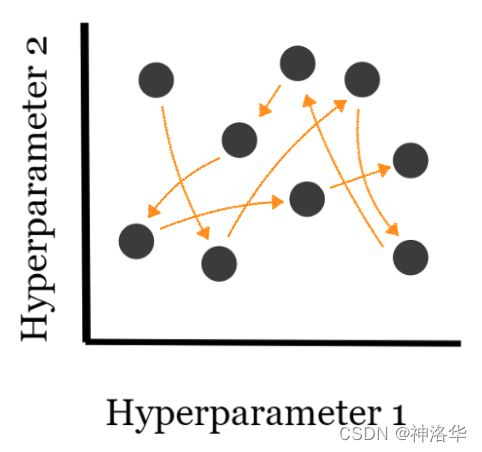

网格搜索尝试超参数的所有组合,因此增加了计算的时间复杂度,在数据量较大,或者模型较为复杂等等情况下,可能导致不可行的计算成本,这样网格搜索调参方法就不适用了。然而,随机搜索提供更便利的替代方案,该方法只测试你选择的超参数组成的元组,并且超参数值的选择是完全随机的,如下图所示。

from sklearn.model_selection import RandomizedSearchCV

param = dict(n_estimators=[80,100, 200],

max_depth=[6,8,10],

learning_rate= [0.02,0.05, 0.1],

num_leaves=[25,31,36])

grid = RandomizedSearchCV(estimator=lgb.LGBMClassifier(),

param_distributions=param,scoring='accuracy',cv=3)

grid.fit(X_train, y_train)

print("Best score: %0.3f" % grid.best_score_)

print("Best parameters set: %s" % grid.best_params_ )

# 找到最好的模型

grid.best_estimator_

Best score: 0.806

Best parameters set: {'num_leaves': 36, 'n_estimators': 80, 'max_depth': 6, 'learning_rate': 0.1}

LGBMClassifier(max_depth=6, n_estimators=80, num_leaves=36)

最优模型直接用grid或者rid.best_estimator_都行

#使用最优参数预测验证集

y_pred = grid .predict(X_test)

# 计算准确率和auc

accuracy = accuracy_score(y_test,y_pred)

auc_score=metrics.roc_auc_score(y_test,grid .predict_proba(X_test)[:,1])#predict_proba输出正负样本概率值,取第二列为正样本概率值

print("accuarcy: %.2f%%" % (accuracy*100.0),"auc_score: %.2f%%" % (auc_score*100.0))

# 找到最好的模型

gd=grid.best_estimator_

y_pred = gd .predict(X_test)

# 计算准确率和auc

accuracy = accuracy_score(y_test,y_pred)

auc_score=metrics.roc_auc_score(y_test,gd .predict_proba(X_test)[:,1])#predict_proba输出正负样本概率值,取第二列为正样本概率值

print("accuarcy: %.2f%%" % (accuracy*100.0),"auc_score: %.2f%%" % (auc_score*100.0))

accuarcy: 81.69% auc_score: 75.62%

accuarcy: 81.69% auc_score: 75.62%

5.4.4 贝叶斯搜索

参考《贝叶斯全局优化(LightGBM调参)》

贝叶斯搜索使用贝叶斯优化技术对搜索空间进行建模,以尽快获得优化的参数值。它使用搜索空间的结构来优化搜索时间。贝叶斯搜索方法使用过去的评估结果来采样最有可能提供更好结果的新候选参数(如下图所示):

#设定贝叶斯优化的黑盒函数LGB_bayesian

def LGB_bayesian(

num_leaves, # int

min_data_in_leaf, # int

learning_rate,

min_sum_hessian_in_leaf, # int

feature_fraction,

lambda_l1,

lambda_l2,

min_gain_to_split,

max_depth):

# LightGBM expects next three parameters need to be integer. So we make them integer

num_leaves = int(num_leaves)

min_data_in_leaf = int(min_data_in_leaf)

max_depth = int(max_depth)

assert type(num_leaves) == int

assert type(min_data_in_leaf) == int

assert type(max_depth) == int

param = {

'num_leaves': num_leaves,

'max_bin': 63,

'min_data_in_leaf': min_data_in_leaf,

'learning_rate': learning_rate,

'min_sum_hessian_in_leaf': min_sum_hessian_in_leaf,

'bagging_fraction': 1.0,

'bagging_freq': 5,

'feature_fraction': feature_fraction,

'lambda_l1': lambda_l1,

'lambda_l2': lambda_l2,

'min_gain_to_split': min_gain_to_split,

'max_depth': max_depth,

'save_binary': True,

'seed': 1337,

'feature_fraction_seed': 1337,

'bagging_seed': 1337,

'drop_seed': 1337,

'data_random_seed': 1337,

'objective': 'binary',

'boosting_type': 'gbdt',

'verbose': 1,

'metric': 'auc',

'is_unbalance': True,

'boost_from_average': False,

"verbosity":-1

}

lgb_train = lgb.Dataset(X_train,

label=y_train)

lgb_valid = lgb.Dataset(X_test,label=y_test,reference=lgb_train)

num_round = 500

gbm= lgb.train(param, lgb_train, num_round, valid_sets = [lgb_valid],callbacks=[lgb.early_stopping(stopping_rounds=5)])

predictions = gbm.predict(X_test,num_iteration=gbm.best_iteration)

score = metrics.roc_auc_score(y_test, predictions)

return score

LGB_bayesian函数从贝叶斯优化框架获取num_leaves,min_data_in_leaf,learning_rate,min_sum_hessian_in_leaf,feature_fraction,lambda_l1,lambda_l2,min_gain_to_split,max_depth的值。 请记住,对于LightGBM,num_leaves,min_data_in_leaf和max_depth应该是整数。 但贝叶斯优化会发送连续的函数。 所以我强制它们是整数。 我只会找到它们的最佳参数值。 读者可以增加或减少要优化的参数数量。

现在需要为这些参数提供边界,以便贝叶斯优化仅在边界内搜索

bounds_LGB = {

'num_leaves': (5, 20),

'min_data_in_leaf': (5, 20),

'learning_rate': (0.01, 0.3),

'min_sum_hessian_in_leaf': (0.00001, 0.01),

'feature_fraction': (0.05, 0.5),

'lambda_l1': (0, 5.0),

'lambda_l2': (0, 5.0),

'min_gain_to_split': (0, 1.0),

'max_depth':(3,15),

}

#将它们全部放在BayesianOptimization对象中

from bayes_opt import BayesianOptimization

LGB_BO = BayesianOptimization(LGB_bayesian, bounds_LGB, random_state=13)

print(LGB_BO.space.keys)#显示要优化的参数

['feature_fraction', 'lambda_l1', 'lambda_l2', 'learning_rate', 'max_depth', 'min_data_in_leaf', 'min_gain_to_split', 'min_sum_hessian_in_leaf', 'num_leaves']

调用maximize方法LGB_BO才会开始搜索。

- init_points:我们想要执行的随机探索的初始随机运行次数。 在我们的例子中,LGB_bayesian将被运行n_iter次。

- n_iter:运行init_points数后,我们要执行多少次贝叶斯优化运行。

import warnings

import gc

pd.set_option('display.max_columns', 200)

init_points = 5

n_iter = 5

print('-' * 130)

with warnings.catch_warnings():

warnings.filterwarnings('ignore')

LGB_BO.maximize(init_points=init_points, n_iter=n_iter, acq='ucb', xi=0.0, alpha=1e-6)

----------------------------------------------------------------------------------------------------------------------------------

| iter | target | featur... | lambda_l1 | lambda_l2 | learni... | max_depth | min_da... | min_ga... | min_su... | num_le... |

-------------------------------------------------------------------------------------------------------------------------------------

[LightGBM] [Warning] verbosity is set=-1, verbose=1 will be ignored. Current value: verbosity=-1

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[22] valid_0's auc: 0.770384

| [0m 1 [0m | [0m 0.7704 [0m | [0m 0.4 [0m | [0m 1.188 [0m | [0m 4.121 [0m | [0m 0.2901 [0m | [0m 14.67 [0m | [0m 11.8 [0m | [0m 0.609 [0m | [0m 0.007758[0m | [0m 14.62 [0m |

[LightGBM] [Warning] verbosity is set=-1, verbose=1 will be ignored. Current value: verbosity=-1

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[5] valid_0's auc: 0.737399

| [0m 2 [0m | [0m 0.7374 [0m | [0m 0.3749 [0m | [0m 0.1752 [0m | [0m 1.492 [0m | [0m 0.02697 [0m | [0m 13.28 [0m | [0m 10.59 [0m | [0m 0.6798 [0m | [0m 0.00257 [0m | [0m 10.21 [0m |

[LightGBM] [Warning] verbosity is set=-1, verbose=1 will be ignored. Current value: verbosity=-1

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[8] valid_0's auc: 0.719501

| [0m 3 [0m | [0m 0.7195 [0m | [0m 0.05424 [0m | [0m 1.792 [0m | [0m 4.745 [0m | [0m 0.07319 [0m | [0m 6.833 [0m | [0m 18.77 [0m | [0m 0.0319 [0m | [0m 0.000660[0m | [0m 14.45 [0m |

[LightGBM] [Warning] verbosity is set=-1, verbose=1 will be ignored. Current value: verbosity=-1

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[19] valid_0's auc: 0.760323

| [0m 4 [0m | [0m 0.7603 [0m | [0m 0.4432 [0m | [0m 0.04358 [0m | [0m 3.733 [0m | [0m 0.2457 [0m | [0m 3.909 [0m | [0m 14.85 [0m | [0m 0.5093 [0m | [0m 0.004804[0m | [0m 19.33 [0m |

[LightGBM] [Warning] verbosity is set=-1, verbose=1 will be ignored. Current value: verbosity=-1

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[8] valid_0's auc: 0.719412

| [0m 5 [0m | [0m 0.7194 [0m | [0m 0.05001 [0m | [0m 1.235 [0m | [0m 3.561 [0m | [0m 0.1041 [0m | [0m 6.324 [0m | [0m 15.43 [0m | [0m 0.9186 [0m | [0m 0.002452[0m | [0m 11.87 [0m |

[LightGBM] [Warning] verbosity is set=-1, verbose=1 will be ignored. Current value: verbosity=-1

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[11] valid_0's auc: 0.761779

| [0m 6 [0m | [0m 0.7618 [0m | [0m 0.5 [0m | [0m 1.457 [0m | [0m 5.0 [0m | [0m 0.3 [0m | [0m 15.0 [0m | [0m 11.16 [0m | [0m 0.5786 [0m | [0m 0.01 [0m | [0m 17.3 [0m |

[LightGBM] [Warning] verbosity is set=-1, verbose=1 will be ignored. Current value: verbosity=-1

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[8] valid_0's auc: 0.723696

| [0m 7 [0m | [0m 0.7237 [0m | [0m 0.05 [0m | [0m 5.0 [0m | [0m 5.0 [0m | [0m 0.3 [0m | [0m 15.0 [0m | [0m 12.07 [0m | [0m 0.0 [0m | [0m 0.01 [0m | [0m 14.22 [0m |

[LightGBM] [Warning] verbosity is set=-1, verbose=1 will be ignored. Current value: verbosity=-1

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[17] valid_0's auc: 0.770585

| [95m 8 [0m | [95m 0.7706 [0m | [95m 0.5 [0m | [95m 0.0 [0m | [95m 2.9 [0m | [95m 0.3 [0m | [95m 14.75 [0m | [95m 11.78 [0m | [95m 1.0 [0m | [95m 0.003764[0m | [95m 16.12 [0m |

[LightGBM] [Warning] verbosity is set=-1, verbose=1 will be ignored. Current value: verbosity=-1

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[19] valid_0's auc: 0.769728

| [0m 9 [0m | [0m 0.7697 [0m | [0m 0.5 [0m | [0m 0.0 [0m | [0m 4.272 [0m | [0m 0.3 [0m | [0m 15.0 [0m | [0m 8.6 [0m | [0m 1.0 [0m | [0m 0.009985[0m | [0m 15.0 [0m |

[LightGBM] [Warning] verbosity is set=-1, verbose=1 will be ignored. Current value: verbosity=-1

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[28] valid_0's auc: 0.770488

| [0m 10 [0m | [0m 0.7705 [0m | [0m 0.5 [0m | [0m 0.0 [0m | [0m 4.457 [0m | [0m 0.3 [0m | [0m 10.79 [0m | [0m 9.347 [0m | [0m 1.0 [0m | [0m 0.01 [0m | [0m 16.83 [0m |

=====================================================================================================================================

print(LGB_BO.max['target'])#最佳的auc值

LGB_BO.max['params']#最佳模型参数

0.7705848546741305

{'feature_fraction': 0.5,

'lambda_l1': 0.0,

'lambda_l2': 2.899605369776912,

'learning_rate': 0.3,

'max_depth': 14.752822601781512,

'min_data_in_leaf': 11.782200828907708,

'min_gain_to_split': 1.0,

'min_sum_hessian_in_leaf': 0.0037639771497955552,

'num_leaves': 16.11909067874899}

#将这些参数用于我们的最终模型

LGB_BO.probe(

params={'feature_fraction': 0.5,

'lambda_l1': 0.0,

'lambda_l2': 2.9,

'learning_rate': 0.3,

'max_depth': 15,

'min_data_in_leaf': 12,

'min_gain_to_split': 1.0,

'min_sum_hessian_in_leaf': 0.0038,

'num_leaves': 16},

lazy=True)

#对LGB_BO对象进行最大化调用。

LGB_BO.maximize(init_points=0, n_iter=0)

| iter | target | featur... | lambda_l1 | lambda_l2 | learni... | max_depth | min_da... | min_ga... | min_su... | num_le... |

-------------------------------------------------------------------------------------------------------------------------------------

[LightGBM] [Warning] verbosity is set=-1, verbose=1 will be ignored. Current value: verbosity=-1

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[17] valid_0's auc: 0.770586

| [95m 11 [0m | [95m 0.7706 [0m | [95m 0.5 [0m | [95m 0.0 [0m | [95m 2.9 [0m | [95m 0.3 [0m | [95m 15.0 [0m | [95m 12.0 [0m | [95m 1.0 [0m | [95m 0.0038 [0m | [95m 16.0 [0m |

=====================================================================================================================================

#通过属性LGB_BO.res可以获得探测的所有参数列表及其相应的目标值。

for i, res in enumerate(LGB_BO.res):

print("Iteration {}: \n\t{}".format(i, res))

将LGB_BO的最佳参数保存到param_lgb字典中,然后进行5折交叉训练

from sklearn.model_selection import StratifiedKFold

from scipy.stats import rankdata

param_lgb = {

'num_leaves': int(LGB_BO.max['params']['num_leaves']), # remember to int here

'max_bin': 63,

'min_data_in_leaf': int(LGB_BO.max['params']['min_data_in_leaf']), # remember to int here

'learning_rate': LGB_BO.max['params']['learning_rate'],

'min_sum_hessian_in_leaf': LGB_BO.max['params']['min_sum_hessian_in_leaf'],

'bagging_fraction': 1.0,

'bagging_freq': 5,

'feature_fraction': LGB_BO.max['params']['feature_fraction'],

'lambda_l1': LGB_BO.max['params']['lambda_l1'],

'lambda_l2': LGB_BO.max['params']['lambda_l2'],

'min_gain_to_split': LGB_BO.max['params']['min_gain_to_split'],

'max_depth': int(LGB_BO.max['params']['max_depth']), # remember to int here

'save_binary': True,

'seed': 1337,

'feature_fraction_seed': 1337,

'bagging_seed': 1337,

'drop_seed': 1337,

'data_random_seed': 1337,

'objective': 'binary',

'boosting_type': 'gbdt',

'verbose': 1,

'metric': 'auc',

'is_unbalance': True,

'boost_from_average': False,

}

nfold = 5

gc.collect()

skf = StratifiedKFold(n_splits=nfold, shuffle=True, random_state=2019)

oof = np.zeros(len(y_train))

predictions = np.zeros((len(X_test),nfold))

i = 1

for train_index, valid_index in skf.split(X_train, y_train):

print("\nfold {}".format(i))

lgb_train = lgb.Dataset(X_train,label=y_train)

lgb_valid = lgb.Dataset(X_test,label=y_test,reference=lgb_train)

clf = lgb.train(param_lgb, lgb_train,500, valid_sets = [lgb_valid ], verbose_eval=250, callbacks=[lgb.early_stopping(stopping_rounds=5)])

print(clf.predict(X_train, num_iteration=clf.best_iteration) )

oof[valid_index] = clf.predict(X_train.iloc[valid_index].values, num_iteration=clf.best_iteration)

predictions[:,i-1] += clf.predict(X_test, num_iteration=clf.best_iteration)

i = i + 1

print("\n\nCV AUC: {:<0.2f}".format(metrics.roc_auc_score(y_train, oof)))

fold 1

[LightGBM] [Info] Number of positive: 1600, number of negative: 5594

[LightGBM] [Warning] Auto-choosing col-wise multi-threading, the overhead of testing was 0.000288 seconds.

You can set `force_col_wise=true` to remove the overhead.

[LightGBM] [Info] Total Bins 393

[LightGBM] [Info] Number of data points in the train set: 7194, number of used features: 7

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[17] valid_0's auc: 0.770586

[0.52559221 0.40000825 0.43907974 ... 0.40122056 0.46515425 0.56678622]

fold 2

[LightGBM] [Info] Number of positive: 1600, number of negative: 5594

[LightGBM] [Warning] Auto-choosing col-wise multi-threading, the overhead of testing was 0.000330 seconds.

You can set `force_col_wise=true` to remove the overhead.

[LightGBM] [Info] Total Bins 393

[LightGBM] [Info] Number of data points in the train set: 7194, number of used features: 7

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[17] valid_0's auc: 0.770586

[0.52559221 0.40000825 0.43907974 ... 0.40122056 0.46515425 0.56678622]

fold 3

[LightGBM] [Info] Number of positive: 1600, number of negative: 5594

[LightGBM] [Warning] Auto-choosing col-wise multi-threading, the overhead of testing was 0.000292 seconds.

You can set `force_col_wise=true` to remove the overhead.

[LightGBM] [Info] Total Bins 393

[LightGBM] [Info] Number of data points in the train set: 7194, number of used features: 7

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[17] valid_0's auc: 0.770586

[0.52559221 0.40000825 0.43907974 ... 0.40122056 0.46515425 0.56678622]

fold 4

[LightGBM] [Info] Number of positive: 1600, number of negative: 5594

[LightGBM] [Warning] Auto-choosing col-wise multi-threading, the overhead of testing was 0.000302 seconds.

You can set `force_col_wise=true` to remove the overhead.

[LightGBM] [Info] Total Bins 393

[LightGBM] [Info] Number of data points in the train set: 7194, number of used features: 7

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[17] valid_0's auc: 0.770586

[0.52559221 0.40000825 0.43907974 ... 0.40122056 0.46515425 0.56678622]

fold 5

[LightGBM] [Info] Number of positive: 1600, number of negative: 5594

[LightGBM] [Warning] Auto-choosing col-wise multi-threading, the overhead of testing was 0.000300 seconds.

You can set `force_col_wise=true` to remove the overhead.

[LightGBM] [Info] Total Bins 393

[LightGBM] [Info] Number of data points in the train set: 7194, number of used features: 7

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[17] valid_0's auc: 0.770586

[0.52559221 0.40000825 0.43907974 ... 0.40122056 0.46515425 0.56678622]

CV AUC: 0.81

另一种贝叶斯搜索,参考:《网格搜索、随机搜索和贝叶斯搜索实用教程》

#还没有写完,并不能正确运行

from skopt import BayesSearchCV

# 参数范围由下面的一个指定

from skopt.space import Real, Categorical, Integer

search_spaces = {

'C': Real(0.1, 1e+4),

'gamma': Real(1e-6, 1e+1, 'log-uniform')}

#接下来创建一个RandomizedSearchCV带参数n_iter_search的对象,并将使用训练数据来训练模型。

n_iter_search = 20

bayes_search = BayesSearchCV(

lgb.LGBMClassifier(),

search_spaces,

n_iter=n_iter_search,

cv=3,

verbose=3

)

bayes_search.fit(X_train, y_train)

bayes_search.best_params_

六、模型微调与参数衰减

6.2 学习率衰减

参考《python实现LightGBM(进阶)python实现LightGBM(进阶)》

import pandas as pd, numpy as np, time

from sklearn.model_selection import train_test_split

# 读取数据

data = pd.read_csv("https://cdn.coggle.club/kaggle-flight-delays/flights_10k.csv.zip")

# 提取有用的列

data = data[["MONTH","DAY","DAY_OF_WEEK","AIRLINE","FLIGHT_NUMBER","DESTINATION_AIRPORT",

"ORIGIN_AIRPORT","AIR_TIME", "DEPARTURE_TIME","DISTANCE","ARRIVAL_DELAY"]]

data.dropna(inplace=True)

# 筛选出部分数据

data["ARRIVAL_DELAY"] = (data["ARRIVAL_DELAY"]>10)*1

# 进行编码

cols = ["AIRLINE","FLIGHT_NUMBER","DESTINATION_AIRPORT","ORIGIN_AIRPORT"]

for item in cols:

data[item] = data[item].astype("category").cat.codes +1

# 划分训练集和测试集

X_train, X_test, y_train, y_test = train_test_split(data.drop(["ARRIVAL_DELAY"], axis=1), data["ARRIVAL_DELAY"], random_state=10, test_size=0.25)

lgb_train = lgb.Dataset(X_train, y_train,free_raw_data=False)

lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train,free_raw_data=False)

params = {

'boosting_type': 'gbdt',

'objective': 'binary',

'metric': 'binary_logloss',

'num_leaves': 16,

'learning_rate': 0.3,

'feature_fraction': 0.5,

'lambda_l1': 0.0,

'lambda_l2': 2.9,

'max_depth': 15,

'min_data_in_leaf': 12,

'min_gain_to_split': 1.0,

'min_sum_hessian_in_leaf': 0.0038,

"verbosity":-1}

# 学习率指数衰减,learning_rates弃用了

gbm = lgb.train(params,

lgb_train,

num_boost_round=10,

learning_rates=lambda iter: 0.3 * (0.99 ** iter),# 学习率衰减

valid_sets=lgb_eval)

#设置learning_rates结果是accuarcy: 82.07% auc_score: 75.40%

#不设置learning_rates结果是accuarcy: 81.61% auc_score: 75.74%,还是不一样

# 学习率指数衰减

gbm2 = lgb.train(params,

lgb_train,

num_boost_round=10,

init_model=gbm,

valid_sets=lgb_eval,

callbacks=[lgb.reset_parameter(bagging_fraction=lambda iter: 0.3 * (0.99 ** iter))])

#不设置init_model,结果是accuarcy: 81.69% auc_score: 75.25%

# 设置init_model,结果是accuarcy: 81.94% auc_score: 76.32%

#lgb.reset_parameter参数可以是列表或者衰减函数,不知道为啥bagging_fraction设置不同值结果是一样的

y_pred1,y_pred2 = gbm.predict(X_test,num_iteration=gbm.best_iteration),gbm2.predict(X_test,num_iteration=gbm2.best_iteration)

pred1,pred2 =[1 if x >0.5 else 0 for x in y_pred1],[1 if x >0.5 else 0 for x in y_pred2]

accuracy1,accuracy2 = accuracy_score(y_test,pred1),accuracy_score(y_test,pred2)

auc_score1,auc_score2=metrics.roc_auc_score(y_test,y_pred1),metrics.roc_auc_score(y_test,y_pred2)

print("accuarcy: %.2f%%" % (accuracy1*100.0),"auc_score: %.2f%%" % (auc_score1*100.0))

print("accuarcy: %.2f%%" % (accuracy2*100.0),"auc_score: %.2f%%" % (auc_score2*100.0))

[1] valid_0's binary_logloss: 0.48425

[2] valid_0's binary_logloss: 0.471031

[3] valid_0's binary_logloss: 0.46278

[4] valid_0's binary_logloss: 0.456369

[5] valid_0's binary_logloss: 0.449357

[6] valid_0's binary_logloss: 0.444377

[7] valid_0's binary_logloss: 0.440908

[8] valid_0's binary_logloss: 0.438597

[9] valid_0's binary_logloss: 0.435632

[10] valid_0's binary_logloss: 0.434647

accuarcy: 82.07% auc_score: 75.40%

accuarcy: 82.19% auc_score: 76.08%

# 学习率阶梯衰减,bagging_fraction'如果使用列表,列表元素数量要和 'num_boost_round'值一样

gbm3 = lgb.train(params,

lgb_train,

num_boost_round=10,

init_model=gbm,#

valid_sets=lgb_eval,

callbacks=[lgb.reset_parameter(bagging_fraction=[0.6]*5+[0.2]*3+[0.1]*2)])

y_pred = gbm3.predict(X_test,num_iteration=gbm3.best_iteration)#结果是0-1之间的概率值,是一维数组

pred =[1 if x >0.5 else 0 for x in y_pred]

accuracy = accuracy_score(y_test,pred)

auc_score=metrics.roc_auc_score(y_test,y_pred)

print("accuarcy: %.2f%%" % (accuracy*100.0),"auc_score: %.2f%%" % (auc_score*100.0))

accuarcy: 81.94% auc_score: 76.30%

七、特征筛选方法

7.1 筛选最重要的3个特征

#通过feature_importances_方法得到特征重要性,值越高越重要

gbm = lgb.LGBMClassifier(max_depth=9)

gbm.fit(train, y_train,

eval_set=[(test, y_test)],

eval_metric='binary_logloss',

callbacks=[lgb.early_stopping(5)])

df=pd.DataFrame(gbm.feature_importances_,gbm.feature_name_,columns=['value'])

df.sort_values('value',inplace=True,ascending=False)

df

Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[52] valid_0's binary_logloss: 0.429146

| value | |

|---|---|

| DEPARTURE_TIME | 276 |

| ORIGIN_AIRPORT | 262 |

| DESTINATION_AIRPORT | 250 |

| FLIGHT_NUMBER | 236 |

| AIR_TIME | 227 |

| DISTANCE | 184 |

| AIRLINE | 124 |

| MONTH | 0 |

| DAY | 0 |

| DAY_OF_WEEK | 0 |

上图看出,最重要的是DEPARTURE_TIME、ORIGIN_AIRPORT、DESTINATION_AIRPORT

7.2 利用PermutationImportance排列特征重要性

- eli5文档

- 利用PermutationImportance挑选变量

- kaggle教程

import eli5

from eli5.sklearn import PermutationImportance

perm = PermutationImportance(gbm, random_state=1).fit(test,y_test)

eli5.show_weights(perm, feature_names =gbm.feature_name_)

Training until validation scores don’t improve for 5 rounds

Early stopping, best iteration is:

[52] valid_0’s binary_logloss: 0.429146

| Weight | Feature |

|---|

± 0.0096

DEPARTURE_TIME

± 0.0057

DESTINATION_AIRPORT

± 0.0043

ORIGIN_AIRPORT

± 0.0067

AIR_TIME

± 0.0041

AIRLINE

± 0.0045

DISTANCE

± 0.0029

FLIGHT_NUMBER

± 0.0000

DAY_OF_WEEK

± 0.0000

DAY

± 0.0000

MONTH

所以前三重要的特征是DEPARTURE_TIME、DESTINATION_AIRPORT、ORIGIN_AIRPORT

7.3 Null Importances进行特征选择

参考kaggkle教程

《数据竞赛】99%情况下都有效的特征筛选策略–Null Importance》

特征筛选策略 – Null Importance 特征筛选

7.3.1 主要思想:

通过利用跑树模型得到特征的importance来判断特征的稳定性和好坏。

-

将构建好的特征和正确的标签扔进树模型中,此时可以得到每个特征的重要性(split 和 gain)

-

将数据的标签打乱,再扔进模型中,得到打乱标签后,每个特征的重要性(split和gain);重复n次;取n次特征重要性的平均值。

-

将1中正确标签跑的特征的重要性和2中打乱标签的特征中重要性进行比较;具体比较方式可以参考上面的kernel

- 当一个特征非常work,那它在正确标签的树模型中的importance应该很高,但它在打乱标签的树模型中的importance将很低(无法识别随机标签);反之,一个垃圾特征,那它在正确标签的模型中importance很一般,打乱标签的树模型中importance将大于等于正确标签模型的importance。所以通过同时判断每个特征在正确标签的模型和打乱标签的模型中的importance(split和gain),可以选择特征稳定和work的特征。

- 思想大概就是这样吧,importance受到特征相关性的影响,特征的重要性会被相关特征的重要性稀释,看importance也不一定准,用这个来对暴力特征进行筛选还是可以的。

7.3.2实现步骤

Null Importance算法的实现步骤为:

- 在原始数据集上运行模型并且记录每个特征重要性。以此作为基准;

- 构建Null importances分布:对我们的标签进行随机Shuffle,并且计算shuffle之后的特征的重要性;

- 对2进行多循环操作,得到多个不同shuffle之后的特征重要性;

- 设计score函数,得到未shuffle的特征重要性与shuffle之后特征重要性的偏离度,并以此设计特征筛选策略;

- 计算不同筛选情况下的模型的分数,并进行记录;

- 将分数最好的几个分数对应的特征进行返回。实现步骤

7.3.3 读取数据集,计算Real Targe和shuffle Target下的特征重要度

import pandas as pd

import numpy as np

from sklearn.metrics import roc_auc_score

from sklearn.model_selection import KFold

import time

from lightgbm import LGBMClassifier

import lightgbm as lgb

import matplotlib.pyplot as plt

import matplotlib.gridspec as gridspec

import seaborn as sns

%matplotlib inline

import warnings

warnings.simplefilter('ignore', UserWarning)

import gc

gc.enable()

import pandas as pd, numpy as np, time

data= pd.read_csv("https://cdn.coggle.club/kaggle-flight-delays/flights_10k.csv.zip")

# 提取有用的列

data= data[["AIRLINE","FLIGHT_NUMBER","DESTINATION_AIRPORT",

"ORIGIN_AIRPORT","AIR_TIME", "DEPARTURE_TIME","DISTANCE","ARRIVAL_DELAY"]]

data.dropna(inplace=True)

from sklearn.model_selection import train_test_split

# 筛选出部分数据

data["ARRIVAL_DELAY"] = (data["ARRIVAL_DELAY"]>10)*1

#categorical_feats = ["AIRLINE","FLIGHT_NUMBER","DESTINATION_AIRPORT","ORIGIN_AIRPORT"]

categorical_feats = [f for f in data.columns if data[f].dtype == 'object']

#将上面四列特征转为类别特征,但不是one-hot编码

for f_ in categorical_feats:

data[f_], _ = pd.factorize(data[f_])

# Set feature type as categorical

data[f_] = data[f_].astype('category')

# 划分训练集和测试集

X_train, X_test, y_train, y_test = train_test_split(data.drop(["ARRIVAL_DELAY"], axis=1), data["ARRIVAL_DELAY"], random_state=10, test_size=0.25)

创建评分函数

feature_importances_ :特征重要性的类型。default=‘split’。

- 如果是split,则结果包含该特征在模型中使用的次数。

- 如果为“gain”,则结果包含使用该特征的分割的总增益。

def get_feature_importances(X_train, X_test, y_train, y_test,shuffle, seed=None):

# 获取特征

train_features = list(X_train.columns)

# 判断是否shuffle TARGET

y_train,y_test= y_train.copy(),y_test.copy()

if shuffle:

# Here you could as well use a binomial distribution

y_train,y_test= y_train.copy().sample(frac=1.0),y_test.copy().sample(frac=1.0)

lgb_train = lgb.Dataset(X_train, y_train,free_raw_data=False,silent=True)

lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train,free_raw_data=False,silent=True)

# 在 RF 模式下安装 LightGBM,它比 sklearn RandomForest 更快

lgb_params = {

'boosting_type': 'gbdt',

'objective': 'binary',

'metric': 'binary_logloss',

'num_leaves': 16,

'learning_rate': 0.3,

'feature_fraction': 0.5,

'lambda_l1': 0.0,

'lambda_l2': 2.9,

'max_depth': 15,

'min_data_in_leaf': 12,

'min_gain_to_split': 1.0,

'min_sum_hessian_in_leaf': 0.0038}

# 训练模型

clf = lgb.train(params=lgb_params,train_set=lgb_train,valid_sets=lgb_eval,num_boost_round=10, categorical_feature=categorical_feats)#将object特征设置为分类特征,但是并不需要进行one-hot编码

#得到特征重要性

imp_df = pd.DataFrame()

imp_df["feature"] = list(train_features)

imp_df["importance_gain"] = clf.feature_importance(importance_type='gain')

imp_df["importance_split"] = clf.feature_importance(importance_type='split')

imp_df['trn_score'] = roc_auc_score(y_test, clf.predict( X_test))

return imp_df

np.random.seed(123)

# 获得市实际的特征重要性,即没有shuffletarget

actual_imp_df = get_feature_importances(X_train, X_test, y_train, y_test, shuffle=False)

actual_imp_df

[LightGBM] [Info] Number of positive: 1600, number of negative: 5594

[LightGBM] [Warning] Auto-choosing col-wise multi-threading, the overhead of testing was 0.000416 seconds.

You can set `force_col_wise=true` to remove the overhead.

[LightGBM] [Info] Total Bins 1406

[LightGBM] [Info] Number of data points in the train set: 7194, number of used features: 7

[1] valid_0's binary_logloss: 0.479157

[2] valid_0's binary_logloss: 0.46882

[3] valid_0's binary_logloss: 0.454724

[4] valid_0's binary_logloss: 0.445913

[5] valid_0's binary_logloss: 0.440924

[6] valid_0's binary_logloss: 0.438309

[7] valid_0's binary_logloss: 0.433886

[8] valid_0's binary_logloss: 0.432747

[9] valid_0's binary_logloss: 0.431001

[10] valid_0's binary_logloss: 0.429621

| feature | importance_gain | importance_split | trn_score | |

|---|---|---|---|---|

| 0 | AIRLINE | 153.229680 | 15 | 0.764829 |

| 1 | FLIGHT_NUMBER | 189.481180 | 23 | 0.764829 |

| 2 | DESTINATION_AIRPORT | 1036.401096 | 23 | 0.764829 |

| 3 | ORIGIN_AIRPORT | 650.938854 | 22 | 0.764829 |

| 4 | AIR_TIME | 119.763649 | 17 | 0.764829 |

| 5 | DEPARTURE_TIME | 994.109417 | 37 | 0.764829 |

| 6 | DISTANCE | 93.170790 | 13 | 0.764829 |

null_imp_df = pd.DataFrame()

nb_runs = 10

import time

start = time.time()

dsp = ''

for i in range(nb_runs):

# 获取当前的特征重要性

imp_df = get_feature_importances(X_train, X_test, y_train, y_test, shuffle=True)

imp_df['run'] = i + 1

# 将特征重要性连起来

null_imp_df = pd.concat([null_imp_df, imp_df], axis=0)

# 删除上一条信息

for l in range(len(dsp)):

print('\b', end='', flush=True)

# Display current run and time used

spent = (time.time() - start) / 60

dsp = 'Done with %4d of %4d (Spent %5.1f min)' % (i + 1, nb_runs, spent)

print(dsp, end='', flush=True)

null_imp_df

| feature | importance_gain | importance_split | trn_score | run | |

|---|---|---|---|---|---|

| 0 | AIRLINE | 26.436000 | 8 | 0.525050 | 1 |

| 1 | FLIGHT_NUMBER | 142.159161 | 35 | 0.525050 | 1 |

| 2 | DESTINATION_AIRPORT | 231.459383 | 20 | 0.525050 | 1 |

| 3 | ORIGIN_AIRPORT | 319.862975 | 26 | 0.525050 | 1 |

| 4 | AIR_TIME | 97.764902 | 24 | 0.525050 | 1 |

| ... | ... | ... | ... | ... | ... |

| 2 | DESTINATION_AIRPORT | 254.016771 | 20 | 0.509197 | 10 |

| 3 | ORIGIN_AIRPORT | 271.220462 | 20 | 0.509197 | 10 |

| 4 | AIR_TIME | 82.260759 | 17 | 0.509197 | 10 |

| 5 | DEPARTURE_TIME | 137.511192 | 25 | 0.509197 | 10 |

| 6 | DISTANCE | 73.353821 | 19 | 0.509197 | 10 |

70 rows × 5 columns

可视化演示

def display_distributions(actual_imp_df_, null_imp_df_, feature_):

plt.figure(figsize=(13, 6))

gs = gridspec.GridSpec(1, 2)

# 画出 Split importances

ax = plt.subplot(gs[0, 0])

a = ax.hist(null_imp_df_.loc[null_imp_df_['feature'] == feature_, 'importance_split'].values, label='Null importances')

ax.vlines(x=actual_imp_df_.loc[actual_imp_df_['feature'] == feature_, 'importance_split'].mean(),

ymin=0, ymax=np.max(a[0]), color='r',linewidth=10, label='Real Target')

ax.legend()

ax.set_title('Split Importance of %s' % feature_.upper(), fontweight='bold')

plt.xlabel('Null Importance (split) Distribution for %s ' % feature_.upper())

# 画出 Gain importances

ax = plt.subplot(gs[0, 1])

a = ax.hist(null_imp_df_.loc[null_imp_df_['feature'] == feature_, 'importance_gain'].values, label='Null importances')

ax.vlines(x=actual_imp_df_.loc[actual_imp_df_['feature'] == feature_, 'importance_gain'].mean(),

ymin=0, ymax=np.max(a[0]), color='r',linewidth=10, label='Real Target')

ax.legend()

ax.set_title('Gain Importance of %s' % feature_.upper(), fontweight='bold')

plt.xlabel('Null Importance (gain) Distribution for %s ' % feature_.upper())

#画出“DESTINATION_AIRPORT”的特征重要性

display_distributions(actual_imp_df_=actual_imp_df, null_imp_df_=null_imp_df, feature_='DESTINATION_AIRPORT')

[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-irMW8jCB-1642190201174)(lightGBM_files/lightGBM_91_0.png)]

7.3.4计算Score

- 以未进行特征shuffle的特征重要性除以shuffle之后的0.75分位数作为我们的score

因为’MONTH’,‘DAY’,'DAY_OF_WEEK’三个特征没有什么用,画的的图结果不好看,所以把这三个去掉了。

feature_scores = []

for _f in actual_imp_df['feature'].unique():

f_null_imps_gain = null_imp_df.loc[null_imp_df['feature'] == _f, 'importance_gain'].values

f_act_imps_gain = actual_imp_df.loc[actual_imp_df['feature'] == _f, 'importance_gain'].mean()

gain_score = np.log(1e-10 + f_act_imps_gain / (1 + np.percentile(f_null_imps_gain, 75))) # Avoid didvide by zero

f_null_imps_split = null_imp_df.loc[null_imp_df['feature'] == _f, 'importance_split'].values

f_act_imps_split = actual_imp_df.loc[actual_imp_df['feature'] == _f, 'importance_split'].mean()

split_score = np.log(1e-10 + f_act_imps_split / (1 + np.percentile(f_null_imps_split, 75))) # Avoid didvide by zero

feature_scores.append((_f, split_score, gain_score))

scores_df = pd.DataFrame(feature_scores, columns=['feature', 'split_score', 'gain_score'])

plt.figure(figsize=(16, 16))

gs = gridspec.GridSpec(1, 2)

# Plot Split importances

ax = plt.subplot(gs[0, 0])

sns.barplot(x='split_score', y='feature', data=scores_df.sort_values('split_score', ascending=False).iloc[0:70], ax=ax)

ax.set_title('Feature scores wrt split importances', fontweight='bold', fontsize=14)

# Plot Gain importances

ax = plt.subplot(gs[0, 1])

sns.barplot(x='gain_score', y='feature', data=scores_df.sort_values('gain_score', ascending=False).iloc[0:70], ax=ax)

ax.set_title('Feature scores wrt gain importances', fontweight='bold', fontsize=14)

plt.tight_layout()

null_imp_df.to_csv('null_importances_distribution_rf.csv')

actual_imp_df.to_csv('actual_importances_ditribution_rf.csv')

[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-MbfvGl3f-1642190201175)(lightGBM_files/lightGBM_93_0.png)]

- shuffle target之后特征重要性低于实际target对应特征的重要性0.25分位数的次数百分比。

correlation_scores = []

for _f in actual_imp_df['feature'].unique():

f_null_imps = null_imp_df.loc[null_imp_df['feature'] == _f, 'importance_gain'].values

f_act_imps = actual_imp_df.loc[actual_imp_df['feature'] == _f, 'importance_gain'].values

gain_score = 100 * (f_null_imps < np.percentile(f_act_imps, 25)).sum() / f_null_imps.size

f_null_imps = null_imp_df.loc[null_imp_df['feature'] == _f, 'importance_split'].values

f_act_imps = actual_imp_df.loc[actual_imp_df['feature'] == _f, 'importance_split'].values

split_score = 100 * (f_null_imps < np.percentile(f_act_imps, 25)).sum() / f_null_imps.size

correlation_scores.append((_f, split_score, gain_score))

corr_scores_df = pd.DataFrame(correlation_scores, columns=['feature', 'split_score', 'gain_score'])

fig = plt.figure(figsize=(16, 16))

gs = gridspec.GridSpec(1, 2)

# Plot Split importances

ax = plt.subplot(gs[0, 0])

sns.barplot(x='split_score', y='feature', data=corr_scores_df.sort_values('split_score', ascending=False).iloc[0:70], ax=ax)

ax.set_title('Feature scores wrt split importances', fontweight='bold', fontsize=14)

# Plot Gain importances

ax = plt.subplot(gs[0, 1])

sns.barplot(x='gain_score', y='feature', data=corr_scores_df.sort_values('gain_score', ascending=False).iloc[0:70], ax=ax)

ax.set_title('Feature scores wrt gain importances', fontweight='bold', fontsize=14)

plt.tight_layout()

plt.suptitle("Features' split and gain scores", fontweight='bold', fontsize=16)

fig.subplots_adjust(top=0.93)

[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-aDm9kLIm-1642190201175)(lightGBM_files/lightGBM_95_0.png)]

correlation_scores

[('AIRLINE', 100.0, 100.0),

('FLIGHT_NUMBER', 0.0, 60.0),

('DESTINATION_AIRPORT', 100.0, 100.0),

('ORIGIN_AIRPORT', 50.0, 100.0),

('AIR_TIME', 10.0, 90.0),

('DEPARTURE_TIME', 100.0, 100.0),

('DISTANCE', 30.0, 100.0)]

- 计算特征筛选之后的最佳分数并记录相应特征

通过运行下面的代码,train_features选择不同的特征来拟合模型,最终Results for threshold 20/30效果最好。此时的模型特征为

split_feats = [_f for _f, _score, _ in correlation_scores if _score >=20]

split_feats

['AIRLINE',

'DESTINATION_AIRPORT',

'ORIGIN_AIRPORT',

'DEPARTURE_TIME',

'DISTANCE']

#此时的特征为

def score_feature_selection(data,train_features=None, cat_feats=None):

# Fit LightGBM

lgb_train = lgb.Dataset(data[train_features], data["ARRIVAL_DELAY"],free_raw_data=False,silent=True)

# 在 RF 模式下安装 LightGBM,它比 sklearn RandomForest 更快

lgb_params = {

'boosting_type': 'gbdt',

'objective': 'binary',

'metric': 'binary_logloss',

'num_leaves': 16,

'learning_rate': 0.3,

'feature_fraction': 0.5,

'lambda_l1': 0.0,

'lambda_l2': 2.9,

'max_depth': 15,

'min_data_in_leaf': 12,

'min_gain_to_split': 1.0,

'metric': 'auc',

'min_sum_hessian_in_leaf': 0.0038,

"verbosity":-1}

#"force_col_wise":true}

# 训练模型

hist = lgb.cv(params=lgb_params,train_set=lgb_train,

num_boost_round=10, categorical_feature=cat_feats,

nfold=5,stratified=True,shuffle=True,early_stopping_rounds=5,seed=17)

# Return the last mean / std values

return hist['auc-mean'][-1], hist['auc-stdv'][-1]

# features = [f for f in data.columns if f not in ['SK_ID_CURR', 'TARGET']]

# score_feature_selection(df=data[features], train_features=features, target=data['TARGET'])

for threshold in [0, 10, 20, 30 , 40, 50 ,60 , 70, 80 , 90, 99]:

split_feats = [_f for _f, _score, _ in correlation_scores if _score >= threshold]

split_cat_feats = [_f for _f, _score, _ in correlation_scores if (_score >= threshold) & (_f in categorical_feats)]

gain_feats = [_f for _f, _, _score in correlation_scores if _score >= threshold]

gain_cat_feats = [_f for _f, _, _score in correlation_scores if (_score >= threshold) & (_f in categorical_feats)]

print('Results for threshold %3d' % threshold)

split_results = score_feature_selection(data,train_features=split_feats, cat_feats=split_cat_feats)

print('\t SPLIT : %.6f +/- %.6f' % (split_results[0], split_results[1]))

gain_results = score_feature_selection(data,train_features=gain_feats, cat_feats=gain_cat_feats)

print('\t GAIN : %.6f +/- %.6f' % (gain_results[0], gain_results[1]))

Results for threshold 0

SPLIT : 0.757882 +/- 0.012114

GAIN : 0.757882 +/- 0.012114

Results for threshold 10

SPLIT : 0.756999 +/- 0.011506

GAIN : 0.757882 +/- 0.012114

Results for threshold 20

SPLIT : 0.757959 +/- 0.012558

GAIN : 0.757882 +/- 0.012114

Results for threshold 30

SPLIT : 0.757959 +/- 0.012558

GAIN : 0.757882 +/- 0.012114

Results for threshold 40

SPLIT : 0.745729 +/- 0.013217

GAIN : 0.757882 +/- 0.012114

Results for threshold 50

SPLIT : 0.745729 +/- 0.013217

GAIN : 0.757882 +/- 0.012114

Results for threshold 60

SPLIT : 0.727063 +/- 0.006758

GAIN : 0.757882 +/- 0.012114

Results for threshold 70

SPLIT : 0.727063 +/- 0.006758

GAIN : 0.756999 +/- 0.011506

Results for threshold 80

SPLIT : 0.727063 +/- 0.006758

GAIN : 0.756999 +/- 0.011506

Results for threshold 90

SPLIT : 0.727063 +/- 0.006758

GAIN : 0.756999 +/- 0.011506

Results for threshold 99

SPLIT : 0.727063 +/- 0.006758

GAIN : 0.757959 +/- 0.012558

八、自定义损失函数和评测函数

参考XGB/LGB—自定义损失函数与评价函数

参考示例

- 自定义损失函数,预测概率小于0.1的正样本(标签为正样本,但模型预测概率小于0.1),梯度增加一倍。

- 自定义评价函数,阈值大于0.8视为正样本(标签为正样本,但模型预测概率大于0.8)。

#正常模型效果

import warnings

warnings.filterwarnings("ignore")

#特征去掉'MONTH','DAY','DAY_OF_WEEK'三个没用的之后,正常模型阈值0.5时accuarcy: 83.03% auc_score: 83.67%

#acc阈值 0.8时accuarcy: 80.40% auc_score: 83.67%

lgb_train = lgb.Dataset(X_train, y_train,free_raw_data=False,silent=True)

lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train,free_raw_data=False,silent=True)

# 在 RF 模式下安装 LightGBM,它比 sklearn RandomForest 更快

lgb_params = {

'boosting_type': 'gbdt',

'objective': 'binary',

'metric': 'binary_logloss',#别名binary_error

'num_leaves': 16,

'learning_rate': 0.3,

'feature_fraction': 0.5,

'lambda_l1': 0.0,

'lambda_l2': 2.9,

'max_depth': 15,

'min_data_in_leaf': 12,

'min_gain_to_split': 1.0,

'min_sum_hessian_in_leaf': 0.0038,

"verbosity":-5}

# 训练模型

clf2 = lgb.train(params=lgb_params,train_set=lgb_train,valid_sets=lgb_eval,num_boost_round=10,

categorical_feature=categorical_feats)

y_pred = clf2.predict(X_test,num_iteration=clf2.best_iteration)#结果是0-1之间的概率值,是一维数组

pred =[1 if x >0.5 else 0 for x in y_pred]

accuracy = accuracy_score(y_test,pred)

auc_score=metrics.roc_auc_score(y_test,y_pred)

print("accuarcy: %.2f%%" % (accuracy*100.0),"auc_score: %.2f%%" % (auc_score*100.0))

[1] valid_0's binary_logloss: 0.479157

[2] valid_0's binary_logloss: 0.46882

[3] valid_0's binary_logloss: 0.454724

[4] valid_0's binary_logloss: 0.445913

[5] valid_0's binary_logloss: 0.440924

[6] valid_0's binary_logloss: 0.438309

[7] valid_0's binary_logloss: 0.433886

[8] valid_0's binary_logloss: 0.432747

[9] valid_0's binary_logloss: 0.431001

[10] valid_0's binary_logloss: 0.429621

accuarcy: 81.69% auc_score: 76.48%

# 自定义目标函数,预测概率小于0.1的正样本(标签为正样本,但模型预测概率小于0.1),梯度增加一倍。

def loglikelihood(preds, train_data):

labels=train_data.get_label()

preds=1./(1.+np.exp(-preds))

grad=[(p-l) if p>=0.1 else 2*(p-l) for (p,l) in zip(preds,labels) ]

hess=[p*(1.-p) if p>=0.1 else 2*p*(1.-p) for p in preds ]

return grad, hess

# 自定义评价指标binary_error,阈值大于0.8视为正样本

def binary_error(preds, train_data):

labels = train_data.get_label()

preds = 1. / (1. + np.exp(-preds))

return 'error', np.mean(labels != (preds > 0.8)), False

clf3 = lgb.train(lgb_params,

lgb_train,

num_boost_round=10,

init_model=clf2,

fobj=loglikelihood, # 目标函数

feval=binary_error, # 评价指标

valid_sets=lgb_eval)

y_pred = clf3.predict(X_test,num_iteration=clf3.best_iteration)#结果是0-1之间的概率值,是一维数组

pred =[1 if x >0.8 else 0 for x in y_pred]

accuracy2 = accuracy_score(y_test,pred)

auc_score2=metrics.roc_auc_score(y_test,y_pred)

print("accuarcy: %.2f%%" % (accuracy2*100.0),"auc_score: %.2f%%" % (auc_score2*100.0))

[11] valid_0's binary_logloss: 4.68218 valid_0's error: 0.196414

[12] valid_0's binary_logloss: 4.51541 valid_0's error: 0.196414

[13] valid_0's binary_logloss: 4.4647 valid_0's error: 0.19558

[14] valid_0's binary_logloss: 4.5248 valid_0's error: 0.196414

[15] valid_0's binary_logloss: 4.51904 valid_0's error: 0.196414

[16] valid_0's binary_logloss: 4.52481 valid_0's error: 0.196414

[17] valid_0's binary_logloss: 4.4928 valid_0's error: 0.196414

[18] valid_0's binary_logloss: 4.43027 valid_0's error: 0.196414

[19] valid_0's binary_logloss: 4.4285 valid_0's error: 0.196414

[20] valid_0's binary_logloss: 4.42314 valid_0's error: 0.196831

accuarcy: 81.19% auc_score: 76.47%

九 模型部署与加速

参考文档

python+Treelite:Sklearn树模型训练迁移到c、java部署

由于 Treelite 的范围仅限于预测,因此必须使用其他机器学习包来训练决策树集成模型。在本文档中,我们将展示如何导入已在其他地方训练过的集成模型。

import treelite失败,无法导入,不知道为什么

gbm9 = lgb.LGBMClassifier()

gbm9.fit(X_train, y_train)

y_pred = gbm9.predict(X_test)

accuracy = accuracy_score(y_test,y_pred)

auc_score=metrics.roc_auc_score(y_test,gbm9.predict_proba(X_test)[:,1])#predict_proba输出正负样本概率值,取第二列为正样本概率值

print("accuarcy: %.2f%%" % (accuracy*100.0),"auc_score: %.2f%%" % (auc_score*100.0))

gbm9.booster_.save_model("model9.txt")#保存模型为txt格式

import treelite

import treelite.sklearn

model = treelite.sklearn.import_model(gbm9)#导入 scikit-learn 模型

y_pred = model.predict(X_test)

accuracy2 = accuracy_score(y_test,y_pred)

auc_score2=metrics.roc_auc_score(y_test,model.predict_proba(X_test)[:,1])

print("accuarcy: %.2f%%" % (accuracy2*100.0),"auc_score: %.2f%%" % (auc_score2*100.0))

lgb.train(lgb_params,

lgb_train,

num_boost_round=10,

init_model=clf2,

fobj=loglikelihood, # 目标函数

feval=binary_error, # 评价指标

valid_sets=lgb_eval)

y_pred = clf3.predict(X_test,num_iteration=clf3.best_iteration)#结果是0-1之间的概率值,是一维数组

pred =[1 if x >0.8 else 0 for x in y_pred]

accuracy2 = accuracy_score(y_test,pred)

auc_score2=metrics.roc_auc_score(y_test,y_pred)

print("accuarcy: %.2f%%" % (accuracy2*100.0),"auc_score: %.2f%%" % (auc_score2*100.0))

[11] valid_0's binary_logloss: 4.68218 valid_0's error: 0.196414

[12] valid_0's binary_logloss: 4.51541 valid_0's error: 0.196414

[13] valid_0's binary_logloss: 4.4647 valid_0's error: 0.19558

[14] valid_0's binary_logloss: 4.5248 valid_0's error: 0.196414

[15] valid_0's binary_logloss: 4.51904 valid_0's error: 0.196414

[16] valid_0's binary_logloss: 4.52481 valid_0's error: 0.196414

[17] valid_0's binary_logloss: 4.4928 valid_0's error: 0.196414

[18] valid_0's binary_logloss: 4.43027 valid_0's error: 0.196414

[19] valid_0's binary_logloss: 4.4285 valid_0's error: 0.196414

[20] valid_0's binary_logloss: 4.42314 valid_0's error: 0.196831

accuarcy: 81.19% auc_score: 76.47%

九 模型部署与加速

参考文档

python+Treelite:Sklearn树模型训练迁移到c、java部署

由于 Treelite 的范围仅限于预测,因此必须使用其他机器学习包来训练决策树集成模型。在本文档中,我们将展示如何导入已在其他地方训练过的集成模型。

import treelite失败,无法导入,不知道为什么

gbm9 = lgb.LGBMClassifier()

gbm9.fit(X_train, y_train)

y_pred = gbm9.predict(X_test)

accuracy = accuracy_score(y_test,y_pred)

auc_score=metrics.roc_auc_score(y_test,gbm9.predict_proba(X_test)[:,1])#predict_proba输出正负样本概率值,取第二列为正样本概率值

print("accuarcy: %.2f%%" % (accuracy*100.0),"auc_score: %.2f%%" % (auc_score*100.0))

gbm9.booster_.save_model("model9.txt")#保存模型为txt格式

import treelite

import treelite.sklearn

model = treelite.sklearn.import_model(gbm9)#导入 scikit-learn 模型

y_pred = model.predict(X_test)

accuracy2 = accuracy_score(y_test,y_pred)

auc_score2=metrics.roc_auc_score(y_test,model.predict_proba(X_test)[:,1])

print("accuarcy: %.2f%%" % (accuracy2*100.0),"auc_score: %.2f%%" % (auc_score2*100.0))