Machine Learning: Implementing SOM Clustering

SOM

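SOM (self-organizing map) is an unsupervised neural-network algorithm. The network consists of an input layer and a competition layer (the output layer). SOM is used here as a clustering method.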

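Algorithm steps

- Initialize the competition layer; each row of the competition layer is the coordinate (x, y) of one node.

- Initialize the weight matrix to match the size of the competition layer; each row holds the weight vector of one competition-layer node, and each column corresponds to one feature of the samples.

- Normalize the sample set.

- Pick one sample from the sample set as the input vector, then find the weight vector in the weight matrix that is closest to it (Euclidean distance).

- The competition-layer node that owns the closest weight vector is the winner. The winner's coordinates in the competition layer and the neighborhood radius determine which other nodes lie in its neighborhood.

- Update the weights of the nodes in the neighborhood (the winner included) with $W_{j}(t+1)=W_{j}(t)+\alpha(t)\,[X_{i}(t)-W_{j}(t)]$, where t is the current iteration count, α(t) is the learning rate at iteration t, and $X_{i}(t)-W_{j}(t)$ is the difference between the current sample and the weight vector of a node in the winning neighborhood.

- Repeat steps 4 to 6 (sample selection, winner search, weight update) until the iteration count is reached.

- Feed in all samples and store them grouped by cluster.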
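The normalization, winner search, and weight update described above can be sketched for a single sample as follows. This is a minimal illustration, not the article's implementation: the class name `SomStepSketch`, its method names, the toy weights, and the fixed learning rate 0.5 are all assumptions made for the example.

```java
import java.util.Arrays;

// Illustrative sketch only: one normalization + winner search + update for a single sample.
class SomStepSketch {

    // Scale a vector to unit length.
    static double[] normalize(double[] v) {
        double sumSq = 0;
        for (double x : v) sumSq += x * x;
        double norm = Math.sqrt(sumSq);
        double[] out = new double[v.length];
        for (int i = 0; i < v.length; i++) out[i] = v[i] / norm;
        return out;
    }

    // Index of the weight row closest to the sample.
    // Squared distances are compared; this gives the same winner as Euclidean distance.
    static int winner(double[] sample, double[][] weights) {
        int best = 0;
        double bestDist = Double.MAX_VALUE;
        for (int j = 0; j < weights.length; j++) {
            double sumSq = 0;
            for (int i = 0; i < sample.length; i++) {
                double d = sample[i] - weights[j][i];
                sumSq += d * d;
            }
            if (sumSq < bestDist) { bestDist = sumSq; best = j; }
        }
        return best;
    }

    // W_j(t+1) = W_j(t) + alpha * (X_i - W_j(t)), applied in place to one weight row.
    static void update(double[] weightRow, double[] sample, double alpha) {
        for (int i = 0; i < weightRow.length; i++) {
            weightRow[i] += alpha * (sample[i] - weightRow[i]);
        }
    }

    public static void main(String[] args) {
        double[] x = normalize(new double[]{3.0, 4.0});   // roughly [0.6, 0.8]
        double[][] w = {{1.0, 0.0}, {0.5, 1.0}};          // toy weights (assumed values)
        int j = winner(x, w);
        update(w[j], x, 0.5);                              // assumed fixed learning rate
        System.out.println("winner=" + j + ", updated row=" + Arrays.toString(w[j]));
    }
}
```

With these toy values the second weight row wins, and the update moves it halfway toward the sample.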

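Code implementation

Parameters

- Shape of the competition-layer array = number of clusters × 2; each row of the array is one node, so there are 2 columns (x, y).

For example, the competition layer for 4 clusters:

[
[0,0],
[0,1],
[1,0],
[1,1]
]

- Shape of the weight matrix = number of clusters (the number of rows in the competition-layer array) × number of features (the attribute columns of the samples)

- Dataset: the first 50 samples of the Iris dataset

- Number of clusters: 4 (a 2×2 competition layer)

- Iterations: 10000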
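As a quick check of the shapes listed above, here is a small sketch that builds the grid coordinates for a 2×2 competition layer and allocates a matching weight matrix for 4 features. The class name `SomShapeSketch` and the helper `grid` are assumptions for illustration; the logic mirrors what `initCompetitionLayer` does in the full code.

```java
// Illustrative sketch only: shapes of the competition layer and the weight matrix.
class SomShapeSketch {

    // Build a (rows * cols) x 2 array of grid coordinates, one node per row.
    static int[][] grid(int rows, int cols) {
        int[][] layer = new int[rows * cols][2];
        int k = 0;
        for (int i = 0; i < rows; i++) {
            for (int j = 0; j < cols; j++) {
                layer[k++] = new int[]{i, j};
            }
        }
        return layer;
    }

    public static void main(String[] args) {
        int rows = 2, cols = 2, features = 4;   // 4 clusters, 4 Iris features
        int[][] layer = grid(rows, cols);
        // One weight row per competition-layer node, one column per feature.
        double[][] weights = new double[layer.length][features];
        System.out.println("competition layer: " + layer.length + " x " + layer[0].length);
        System.out.println("weight matrix: " + weights.length + " x " + weights[0].length);
    }
}
```

For the settings used in this article both arrays have 4 rows: the competition layer is 4 × 2 and the weight matrix is 4 × 4.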

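Method definitions

// Constructor: normalizes the dataset and passes it to train
public SOM(double[][] dataset,int M,int N,int iterationNum)

// Normalizes the dataset
public double[][] normalizedDataset(double[][] dataset)

// Computes the norm (length) of a vector; called by normalizedDataset
public double calMod(double[] rowArr)

// Initializes the competition layer
public int[][] initCompetitionLayer(int row,int col)

// Initializes the weight matrix
public double[][] initWeigthMatrix(int competitionLayerSize,int feature)

// Trains the network; M and N are the rows and columns of the competition layer
public void train(double[][] dataset,int M,int N,int iterationNum)

// Called after train to assign each sample to its winning node
public HashMap<Integer,ArrayList<double[]>> classify(double[][] dataset)

// Learning rate; distance is the node's distance to the winner
public double learning(int iterationNum,double distance)

// Returns the indices (not the x,y coordinates) of the nodes in the winning neighborhood and their distances to the winner
public HashMap<Integer,Double> getArea(int winnerIndex,double area,int[][] competitionLayer)

// Returns the index of the winning node
public int getWinner(double[] selectedSample,double[][] weigthMatrix)

// Computes the distance between two vectors; called by getWinner
public double calDistance(double[] selectedSample,double[] weight)

// Computes the distance between two nodes of the competition layer
public double calDistanceForNode(int[] otherNode,int[] winnerNode)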
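To make the `getArea` description concrete, the sketch below lists which nodes of a 2×2 competition layer fall inside a neighborhood radius of 1.2 (the value used in the full code) when node 0 is the winner. The class name `NeighborhoodSketch` and the method `neighbors` are hypothetical; a `LinkedHashMap` is used here only so that the printed order is predictable.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Illustrative sketch only: neighborhood lookup on a small grid.
class NeighborhoodSketch {

    // Map from node index to grid distance, for nodes strictly closer than radius.
    static Map<Integer, Double> neighbors(int winner, double radius, int[][] layer) {
        Map<Integer, Double> result = new LinkedHashMap<>();
        for (int i = 0; i < layer.length; i++) {
            double dx = layer[i][0] - layer[winner][0];
            double dy = layer[i][1] - layer[winner][1];
            double dist = Math.sqrt(dx * dx + dy * dy);
            if (dist < radius) {
                result.put(i, dist);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        int[][] layer = {{0, 0}, {0, 1}, {1, 0}, {1, 1}};
        // Node 3 is diagonal to node 0 (distance sqrt(2), about 1.414), so it is excluded.
        System.out.println(neighbors(0, 1.2, layer));   // prints {0=0.0, 1=1.0, 2=1.0}
    }
}
```

With a radius of 1.2, the winner and its two edge-adjacent neighbors are updated, while the diagonal node is not.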

Full code

import java.util.ArrayList;
import java.util.HashMap;

public class SOM {

    // Weight matrix saved by train() and used by classify()
    private double[][] trainedWeightMatrix;

    // Constructor: normalizes the dataset, then trains an M x N competition layer
    public SOM(double[][] dataset,int M,int N,int iterationNum){
        double[][] normalizeddataset=normalizedDataset(dataset);
        train(normalizeddataset,M,N,iterationNum);
    }

    public void train(double[][] dataset,int M,int N,int iterationNum){
        int featureNum= dataset[0].length;
        int sampleNum= dataset.length;
        int[][] competitionLayer = initCompetitionLayer(M, N);
        double[][] weigthMatrix = initWeigthMatrix(M * N, featureNum);
        double area=1.2; // neighborhood radius, in grid units
        for(int t=0;t<iterationNum;t++){
            for (int z = 0; z < sampleNum; z++) {
                double[] selectedSample = dataset[z];
                int winnerIndex=getWinner(selectedSample,weigthMatrix);
                // nodes inside the winning neighborhood, mapped to their distance from the winner
                HashMap<Integer,Double> inAreaList = getArea(winnerIndex, area, competitionLayer);
                // update every node in the neighborhood, including the winner itself
                for(Integer index:inAreaList.keySet()){
                    for (int i = 0; i < featureNum; i++) {
                        weigthMatrix[index][i]=weigthMatrix[index][i]+learning(t,inAreaList.get(index))*(selectedSample[i]-weigthMatrix[index][i]);
                    }
                }
            }
        }
        trainedWeightMatrix=weigthMatrix;
    }

    // Call this after train() to assign each sample of the dataset to its winning node
    public HashMap<Integer,ArrayList<double[]>> classify(double[][] dataset){
        double[][] normalized=normalizedDataset(dataset);
        HashMap<Integer,ArrayList<double[]>> result=new HashMap<>();
        for (int i = 0; i < normalized.length; i++) {
            int winnerIndex=getWinner(normalized[i],trainedWeightMatrix);
            // create the list for this cluster on first use, then append the sample
            result.computeIfAbsent(winnerIndex, k -> new ArrayList<>()).add(normalized[i]);
        }
        return result;
    }

    // Learning rate. distance is the distance from this node to the winner;
    // the rate decays with both the iteration count and that distance
    public double learning(int iterationNum,double distance){
        return (0.3/(iterationNum+1))*(Math.exp(-distance));
    }

    // Returns the indices (not the x,y coordinates) of the nodes in the winning neighborhood
    // and their distances to the winner
    public HashMap<Integer,Double> getArea(int winnerIndex,double area,int[][] competitionLayer){
        HashMap<Integer,Double> inArea=new HashMap<>();
        for (int i = 0; i < competitionLayer.length; i++) {
            double distance=calDistanceForNode(competitionLayer[i],competitionLayer[winnerIndex]);
            if (distance<area){
                inArea.put(i,distance);
            }
        }
        return inArea;
    }

    // Returns the index of the winning competition-layer node (not its x,y coordinates)
    public int getWinner(double[] selectedSample,double[][] weigthMatrix){
        // start from the largest double so the first node always becomes the initial candidate
        double Min_Distance=Double.MAX_VALUE;
        int winnerIndex=0;
        for (int i = 0; i < weigthMatrix.length; i++) {
            double distance=calDistance(selectedSample,weigthMatrix[i]);
            if (distance<Min_Distance){
                Min_Distance=distance;
                winnerIndex=i;
            }
        }
        return winnerIndex;
    }

    // Euclidean distance between two nodes of the competition layer (grid coordinates)
    public double calDistanceForNode(int[] otherNode,int[] winnerNode){
        double sum=0;
        for (int i = 0; i < winnerNode.length; i++) {
            double subtraction=winnerNode[i]-otherNode[i];
            sum+=subtraction*subtraction;
        }
        return Math.sqrt(sum);
    }

    // Euclidean distance between a sample and a weight vector
    public double calDistance(double[] selectedSample,double[] weight){
        double sum=0;
        for (int i = 0; i < selectedSample.length; i++) {
            double subtraction=selectedSample[i]-weight[i];
            sum+=subtraction*subtraction;
        }
        return Math.sqrt(sum);
    }

    // Initializes the weight matrix with random values in [0, 1); competitionLayerSize = M*N
    public double[][] initWeigthMatrix(int competitionLayerSize,int feature){
        double[][] weightMatrix=new double[competitionLayerSize][feature];
        for (int i = 0; i < competitionLayerSize; i++) {
            for (int j = 0; j < feature; j++) {
                weightMatrix[i][j]=Math.random();
            }
        }
        return weightMatrix;
    }

    // Normalizes the dataset: each row is scaled to unit length.
    // Note: the input array is modified in place and returned.
    public double[][] normalizedDataset(double[][] dataset){
        for (int j = 0, datasetLength = dataset.length; j < datasetLength; j++) {
            double mod = calMod(dataset[j]);
            for (int i = 0; i < dataset[0].length; i++) {
                dataset[j][i]=dataset[j][i]/mod;
            }
        }
        return dataset;
    }

    // Computes the norm (length) of a vector
    public double calMod(double[] rowArr){
        double temp=0;
        for (double num:rowArr){
            temp+=num*num;
        }
        return Math.sqrt(temp);
    }

    // Initializes the competition layer; this array is used to compute node-to-node distances
    public int[][] initCompetitionLayer(int row,int col){
        int[][] competitionLayer=new int[row*col][2]; // always 2 columns: the x,y coordinates
        int count=0;
        for (int i = 0; i < row; i++) {
            for (int j = 0; j < col; j++) {
                competitionLayer[count]= new int[]{i, j};
                count++;
            }
        }
        return competitionLayer;
    }

public static void main(String[] args) {

double[][] dataset=new double[][]{

{5.1,3.5,1.4,0.2},

{4.9,3.0,1.4,0.2},

{4.7,3.2,1.3,0.2},

{4.6,3.1,1.5,0.2},

{5.0,3.6,1.4,0.2},

{5.4,3.9,1.7,0.4},

{4.6,3.4,1.4,0.3},

{5.0,3.4,1.5,0.2},

{4.4,2.9,1.4,0.2},

{4.9,3.1,1.5,0.1},

{5.4,3.7,1.5,0.2},

{4.8,3.4,1.6,0.2},

{4.8,3.0,1.4,0.1},

{4.3,3.0,1.1,0.1},

{5.8,4.0,1.2,0.2},

{5.7,4.4,1.5,0.4},

{5.4,3.9,1.3,0.4},

{5.1,3.5,1.4,0.3},

{5.7,3.8,1.7,0.3},

{5.1,3.8,1.5,0.3},

{5.4,3.4,1.7,0.2},

{5.1,3.7,1.5,0.4},

{4.6,3.6,1.0,0.2},

{5.1,3.3,1.7,0.5},

{4.8,3.4,1.9,0.2},

{5.0,3.0,1.6,0.2},

{5.0,3.4,1.6,0.4},

{5.2,3.5,1.5,0.2},

{5.2,3.4,1.4,0.2},

{4.7,3.2,1.6,0.2},

{4.8,3.1,1.6,0.2},

{5.4,3.4,1.5,0.4},

{5.2,4.1,1.5,0.1},

{5.5,4.2,1.4,0.2},

{4.9,3.1,1.5,0.2},

{5.0,3.2,1.2,0.2},

{5.5,3.5,1.3,0.2},

{4.9,3.6,1.4,0.1},

{4.4,3.0,1.3,0.2},

{5.1,3.4,1.5,0.2},

{5.0,3.5,1.3,0.3},

{4.5,2.3,1.3,0.3},

{4.4,3.2,1.3,0.2},

{5.0,3.5,1.6,0.6},

{5.1,3.8,1.9,0.4},

{4.8,3.0,1.4,0.3},

{5.1,3.8,1.6,0.2},

{4.6,3.2,1.4,0.2},

{5.3,3.7,1.5,0.2},

{5.0,3.3,1.4,0.2}};

        // the constructor normalizes the dataset in place and trains a 2 x 2 map
        SOM s=new SOM(dataset,2,2,10000);
        // classify() normalizes its input itself, so no separate normalizedDataset call is needed
        HashMap<Integer, ArrayList<double[]>> outc = s.classify(dataset);
        for(Integer index: outc.keySet()){
            System.out.println("*********************Cluster "+index+"*********************");
            for(double[] doubles: outc.get(index)){
                System.out.print("[");
                for (int i = 0; i < dataset[0].length; i++) {
                    System.out.print(doubles[i]+",");
                }
                System.out.print("]");
                System.out.println("");
            }
            System.out.println("");
        }
    }

}
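The learning rate in the code above, `(0.3/(iterationNum+1))*Math.exp(-distance)`, shrinks with both the iteration count and the node's grid distance from the winner. The sketch below simply evaluates that same expression at a few points to show how fast it decays; the class name `LearningRateSketch` and the method name `rate` are assumptions for illustration.

```java
// Illustrative sketch only: evaluates the article's learning-rate expression at a few points.
class LearningRateSketch {

    // Same expression as learning(iterationNum, distance) in the SOM class.
    static double rate(int t, double distance) {
        return (0.3 / (t + 1)) * Math.exp(-distance);
    }

    public static void main(String[] args) {
        int[] iterations = {0, 9, 99, 9999};
        for (int t : iterations) {
            // distance 0 is the winner itself; distance 1 is an edge-adjacent grid neighbor
            System.out.println("t=" + t
                    + "  winner=" + rate(t, 0.0)
                    + "  neighbor=" + rate(t, 1.0));
        }
    }
}
```

At t = 0 the winner is updated with a rate of 0.3, and by t = 99 the rate is already about 0.003. Under this schedule most of the 10000 iterations therefore change the weights only slightly, which suggests the iteration count could probably be reduced, or a slower decay tried, without changing the approach.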

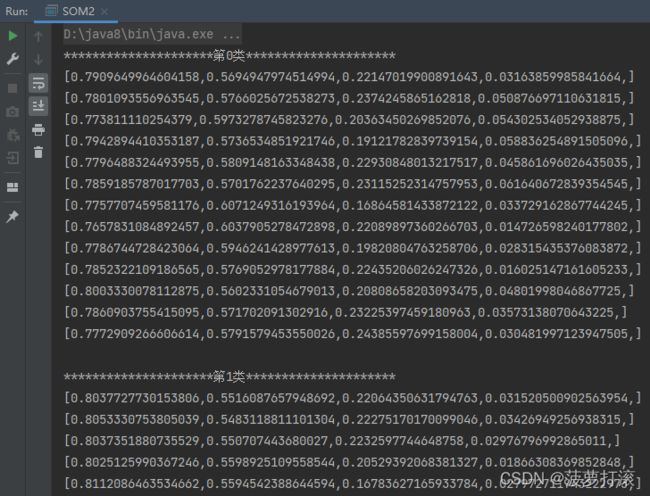

Result screenshot

Reference:

https://blog.csdn.net/chenge_j/article/details/72537568