5. PyTorch Study Notes: Non-linear Activations

Contents

ReLU()

Sigmoid()

Viewing the effect on a dataset

ReLU()

Official documentation:

ReLU (PyTorch 1.10 documentation): https://pytorch.org/docs/1.10/generated/torch.nn.ReLU.html#torch.nn.ReLU

Apply the ReLU activation function to a tensor.

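import torch
import torch.nn as nn

# Random input shaped (batch, channels, height, width)
input = torch.randn(1, 3, 5, 5)


class ZiDingYi(nn.Module):
    def __init__(self):
        super(ZiDingYi, self).__init__()
        # inplace=False returns a new tensor and leaves the input unchanged
        self.relu1 = nn.ReLU(inplace=False)

    def forward(self, x):
        # Use the argument x rather than the global `input`
        output = self.relu1(x)
        return output


zidingyi = ZiDingYi()
output = zidingyi(input)
print(input)
print(output)

Result: compare the two tensors against the definition ReLU(x) = max(0, x). Every negative entry of the input becomes 0 in the output, and every non-negative entry passes through unchanged.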

tensor([[[[ 0.4775, 0.5198, -0.9001, 2.0372, 1.1024],

[ 0.4123, 0.4447, -2.2582, -0.9545, 1.5849],

[ 0.3350, -1.4441, 1.0662, -0.3530, -0.6260],

[ 1.5861, -0.1249, 0.4190, 1.4067, 1.4868],

[-2.0234, -1.1176, -2.6588, 0.1786, 0.8254]],

[[ 1.5229, 0.4838, -1.0335, 0.0907, -0.0862],

[-1.1347, 1.0359, 0.8005, 0.0480, 0.3533],

[-1.6377, -0.8304, 1.1285, -1.6594, 2.0554],

[-0.1789, 1.2895, -0.2744, -1.3726, 0.8518],

[-0.8479, 0.4450, 0.1576, -1.0171, 0.1124]],

[[ 0.1418, 0.1456, -0.4504, 0.6623, -1.0393],

[-0.8508, 0.6686, 1.1152, -0.2147, 0.9810],

[ 0.4100, -0.9736, 0.2510, 0.6155, 0.2945],

[-0.2406, 0.8804, 0.8310, -0.3539, 0.2641],

[-1.3649, 0.7333, -0.1349, -0.2516, -1.5076]]]])

tensor([[[[0.4775, 0.5198, 0.0000, 2.0372, 1.1024],

[0.4123, 0.4447, 0.0000, 0.0000, 1.5849],

[0.3350, 0.0000, 1.0662, 0.0000, 0.0000],

[1.5861, 0.0000, 0.4190, 1.4067, 1.4868],

[0.0000, 0.0000, 0.0000, 0.1786, 0.8254]],

[[1.5229, 0.4838, 0.0000, 0.0907, 0.0000],

[0.0000, 1.0359, 0.8005, 0.0480, 0.3533],

[0.0000, 0.0000, 1.1285, 0.0000, 2.0554],

[0.0000, 1.2895, 0.0000, 0.0000, 0.8518],

[0.0000, 0.4450, 0.1576, 0.0000, 0.1124]],

[[0.1418, 0.1456, 0.0000, 0.6623, 0.0000],

[0.0000, 0.6686, 1.1152, 0.0000, 0.9810],

[0.4100, 0.0000, 0.2510, 0.6155, 0.2945],

[0.0000, 0.8804, 0.8310, 0.0000, 0.2641],

[0.0000, 0.7333, 0.0000, 0.0000, 0.0000]]]])

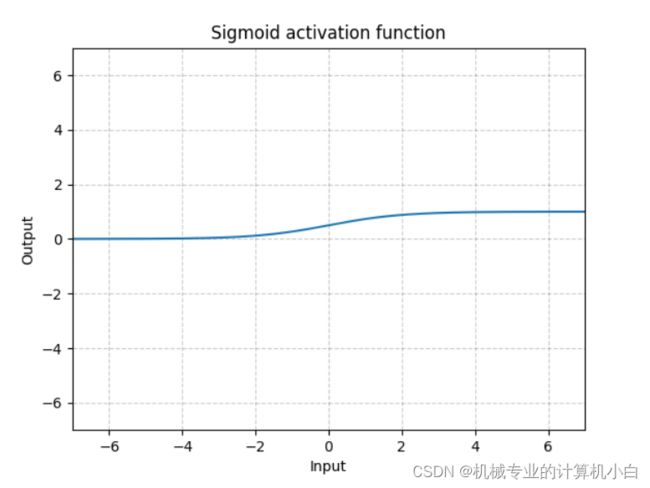
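The comparison can also be done by hand. The sketch below is a minimal pure-Python check and is not part of the original post: the helper name `relu` is hypothetical, and the values are the first row of the input tensor printed above. It applies max(0, x) to each element, which should reproduce the first row of the output tensor.

```python
def relu(x: float) -> float:
    """Element-wise ReLU: max(0, x). Hypothetical helper for illustration."""
    return max(0.0, x)


# First row of the input tensor printed above.
row_in = [0.4775, 0.5198, -0.9001, 2.0372, 1.1024]

# Negative values are clamped to 0; the rest are returned unchanged.
row_out = [relu(v) for v in row_in]
print(row_out)
```

Only the third value is negative, so it is the only one replaced by 0.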

Sigmoid()

Official documentation:

Sigmoid (PyTorch 1.10 documentation): https://pytorch.org/docs/1.10/generated/torch.nn.Sigmoid.html#torch.nn.Sigmoid

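import torch
import torch.nn as nn

# Random input shaped (batch, channels, height, width)
input = torch.randn(1, 3, 5, 5)


class ZiDingYi(nn.Module):
    def __init__(self):
        super(ZiDingYi, self).__init__()
        # self.relu1 = nn.ReLU(inplace=False)
        self.sigmoid1 = nn.Sigmoid()

    def forward(self, x):
        # output = self.relu1(x)
        # Use the argument x rather than the global `input`
        output = self.sigmoid1(x)
        return output


zidingyi = ZiDingYi()
output = zidingyi(input)
print(input)
print(output)

Result: compare the two tensors against the definition Sigmoid(x) = 1 / (1 + exp(-x)). Every entry is squashed into the open interval (0, 1): negative inputs map below 0.5 and positive inputs map above 0.5.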

tensor([[[[ 0.8320, 0.3261, 0.5211, 0.8005, 0.2384],

[ 1.4506, -2.1106, -0.2674, 1.3780, -0.0732],

[ 0.7145, 0.8867, 0.1283, 1.1789, -0.0598],

[ 0.9552, -1.3852, 1.2036, 0.2603, 0.3854],

[-1.5885, -2.0675, -1.3327, 0.6447, 1.9366]],

[[-0.4702, 0.0669, 1.1686, 0.1894, 2.5503],

[ 1.9532, -0.2793, 0.7396, -0.7552, 1.3760],

[-0.1123, 1.0337, -0.5930, 0.0893, -0.6253],

[ 0.6927, -0.2818, 0.5810, -0.2303, -1.0985],

[ 0.5814, -0.6694, 0.1969, -0.0221, -0.8571]],

[[-0.3682, 0.1004, 2.4205, -1.7189, -1.0245],

[-0.3605, -1.0505, 0.1035, 0.6609, -0.3137],

[ 0.0182, 1.1047, 1.5027, 0.5151, 0.2891],

[ 0.7648, 1.3163, -0.1095, -1.2283, 0.5483],

[ 0.6259, 0.2017, 0.9407, -0.7334, -1.2847]]]])

tensor([[[[0.6968, 0.5808, 0.6274, 0.6901, 0.5593],

[0.8101, 0.1081, 0.4335, 0.7987, 0.4817],

[0.6714, 0.7082, 0.5320, 0.7648, 0.4851],

[0.7222, 0.2002, 0.7692, 0.5647, 0.5952],

[0.1696, 0.1123, 0.2087, 0.6558, 0.8740]],

[[0.3846, 0.5167, 0.7629, 0.5472, 0.9276],

[0.8758, 0.4306, 0.6769, 0.3197, 0.7983],

[0.4719, 0.7376, 0.3559, 0.5223, 0.3486],

[0.6666, 0.4300, 0.6413, 0.4427, 0.2500],

[0.6414, 0.3386, 0.5491, 0.4945, 0.2979]],

[[0.4090, 0.5251, 0.9184, 0.1520, 0.2641],

[0.4108, 0.2591, 0.5259, 0.6595, 0.4222],

[0.5045, 0.7511, 0.8180, 0.6260, 0.5718],

[0.6824, 0.7886, 0.4727, 0.2265, 0.6337],

[0.6516, 0.5502, 0.7192, 0.3244, 0.2168]]]])
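To check a few values by hand, here is a minimal pure-Python sketch that is not part of the original post. The helper name `sigmoid` is hypothetical, and the inputs are the first row of the input tensor printed above. Because the printed inputs are already rounded to four decimals, the results should agree with the first row of the output tensor only up to rounding.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid: 1 / (1 + exp(-x)). Hypothetical helper for illustration."""
    return 1.0 / (1.0 + math.exp(-x))


# First row of the input tensor printed above.
row_in = [0.8320, 0.3261, 0.5211, 0.8005, 0.2384]

# Round to four decimals to match the precision of the printed tensor.
row_out = [round(sigmoid(v), 4) for v in row_in]
print(row_out)
```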

Viewing the effect on a dataset

import torch
import torch.nn as nn
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# input = torch.randn(1, 3, 5, 5)


class ZiDingYi(nn.Module):
    def __init__(self):
        super(ZiDingYi, self).__init__()
        # self.relu1 = nn.ReLU(inplace=False)
        self.sigmoid1 = nn.Sigmoid()

    def forward(self, x):
        # output = self.relu1(x)
        x = self.sigmoid1(x)
        return x


# CIFAR10 training set; ToTensor converts each PIL image to a float tensor in [0, 1]
train_dataset = torchvision.datasets.CIFAR10(root="./dataset", train=True, download=True,
                                             transform=torchvision.transforms.ToTensor())
train_dataloader = DataLoader(train_dataset, batch_size=64)

zidingyi = ZiDingYi()
# output = zidingyi(input)
# print(input)
# print(output)

# Log each input batch and its sigmoid output to TensorBoard
writer = SummaryWriter("./logs_relu")
step = 0
for data in train_dataloader:
    imgs, targets = data
    writer.add_images("input", imgs, global_step=step)
    output = zidingyi(imgs)
    writer.add_images("output", output, global_step=step)
    step += 1

writer.close()

Start TensorBoard to view the logged images:

tensorboard --logdir=logs_relu

With the shape of the sigmoid() curve in mind, compare the input images (tensors produced by ToTensor) with the output images (the same tensors after the sigmoid).
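As a rough guide to what the comparison should show: ToTensor scales pixel values into [0, 1], and the sigmoid is increasing, so the outputs can only fall between sigmoid(0) and sigmoid(1). The sketch below is a minimal pure-Python illustration, not part of the original post; the helper name `sigmoid` and the sample pixel values are assumptions chosen for the example.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid: 1 / (1 + exp(-x)). Hypothetical helper for illustration."""
    return 1.0 / (1.0 + math.exp(-x))


# Sample pixel intensities across the [0, 1] range produced by ToTensor.
pixels = [0.0, 0.25, 0.5, 0.75, 1.0]
mapped = [sigmoid(p) for p in pixels]
for p, m in zip(pixels, mapped):
    print(f"pixel {p:.2f} -> sigmoid {m:.4f}")

# The darkest and brightest possible inputs bound the output range.
low, high = sigmoid(0.0), sigmoid(1.0)
print(f"output range: [{low:.4f}, {high:.4f}]")
```

Black pixels (0) map to 0.5 and white pixels (1) map to about 0.73, so the output images should look like brighter, lower-contrast versions of the inputs. ReLU, by contrast, would leave these inputs unchanged, because none of them are negative.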