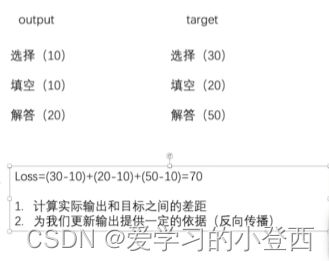

Loss Functions and Backpropagation

Loss functions (Loss)

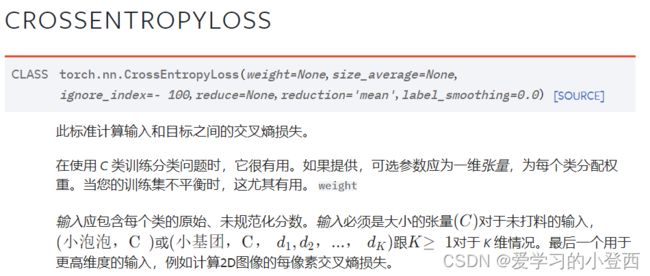

2. Official documentation

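Using the loss functions is not hard, but understanding how each loss is actually computed takes some mathematical background.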

(1) L1Loss and MSELoss

X: 1, 2, 3

Y: 1, 2, 5

L1Loss = (|1-1| + |2-2| + |3-5|) / 3 = (0 + 0 + 2) / 3 ≈ 0.667

MSELoss = (0^2 + 0^2 + 2^2) / 3 = 4 / 3 ≈ 1.333
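The same arithmetic can be checked directly with tensor operations. A minimal sketch, assuming nothing beyond `torch`: the mean of the absolute differences and the mean of the squared differences reproduce the L1 and MSE values above.

```python
import torch

# The worked example above: predictions X and targets Y
x = torch.tensor([1.0, 2.0, 3.0])
y = torch.tensor([1.0, 2.0, 5.0])

# L1 loss: mean of the absolute differences |x_i - y_i|
l1_manual = (x - y).abs().mean()

# MSE loss: mean of the squared differences (x_i - y_i)^2
mse_manual = ((x - y) ** 2).mean()

print(l1_manual)   # tensor(0.6667)
print(mse_manual)  # tensor(1.3333)
```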

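```python
import torch
from torch import nn
from torch.nn import L1Loss

inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
targets = torch.tensor([1, 2, 5], dtype=torch.float32)
print(inputs.shape)  # torch.Size([3])

# Reshape to a 4-D shape (1, 1, 1, 3); both losses average over all
# elements, so the loss value is the same before and after the reshape
inputs = torch.reshape(inputs, (1, 1, 1, 3))
targets = torch.reshape(targets, (1, 1, 1, 3))
print(inputs.shape)  # torch.Size([1, 1, 1, 3])

# L1Loss: mean absolute error
loss = L1Loss(reduction='mean')
result = loss(inputs, targets)
print(result)  # tensor(0.6667)

# MSELoss: mean squared error
loss_mse = nn.MSELoss()
result2 = loss_mse(inputs, targets)
print(result2)  # tensor(1.3333)
```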
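Besides the default `reduction='mean'` used above, `L1Loss` (like `MSELoss`) also accepts `reduction='sum'` and `reduction='none'`. A short sketch comparing the three modes on the same data:

```python
import torch
from torch.nn import L1Loss

inputs = torch.tensor([1.0, 2.0, 3.0])
targets = torch.tensor([1.0, 2.0, 5.0])

# 'none' keeps one loss value per element
per_element = L1Loss(reduction='none')(inputs, targets)  # tensor([0., 0., 2.])
# 'sum' adds the per-element values together
total = L1Loss(reduction='sum')(inputs, targets)          # tensor(2.)
# 'mean' (the default) divides that sum by the number of elements
avg = L1Loss(reduction='mean')(inputs, targets)           # tensor(0.6667)

print(per_element, total, avg)
```

'none' is handy when per-sample losses need to be weighted or inspected before averaging.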

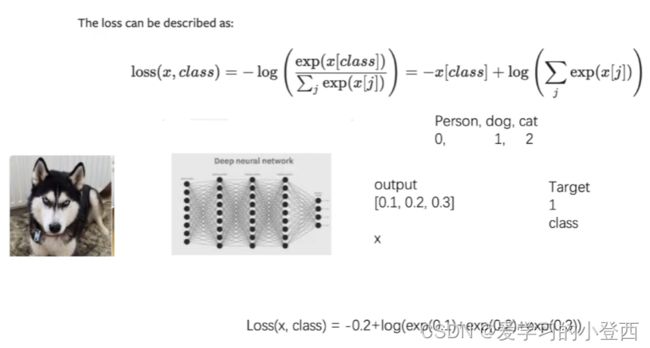

x = torch.tensor([0.1, 0.2, 0.3])

y = torch.tensor([1])

x = torch.reshape(x, (1, 3))

loss_cross = nn.CrossEntropyLoss()

result3 = loss_cross(x,y)

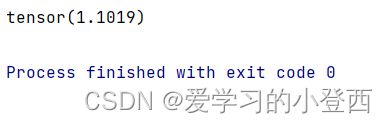

print(result3)
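For a single sample, the PyTorch documentation gives the unweighted cross-entropy as loss(x, class) = -x[class] + log(Σ_j exp(x[j])), where x holds the raw (unnormalized) class scores. A minimal sketch that evaluates this formula by hand for the example above and compares it with `nn.CrossEntropyLoss`:

```python
import torch
from torch import nn

x = torch.tensor([[0.1, 0.2, 0.3]])  # shape (N=1, C=3): raw class scores
y = torch.tensor([1])                # target class index of the single sample

# Manual formula: -x[class] + log(sum_j exp(x[j]))
scores = x[0]
manual = -scores[y.item()] + torch.log(torch.exp(scores).sum())

builtin = nn.CrossEntropyLoss()(x, y)

print(manual)   # tensor(1.1019)
print(builtin)  # tensor(1.1019)
```

Here -0.2 + log(e^0.1 + e^0.2 + e^0.3) ≈ -0.2 + 1.3019 ≈ 1.1019.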

Using the loss with a convolutional network

```python
import torchvision
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear
from torch.utils.data import DataLoader

# CIFAR-10 test split: 32x32 RGB images, 10 classes
dataset = torchvision.datasets.CIFAR10("data", train=False, download=True,
                                       transform=torchvision.transforms.ToTensor())
dataloader = DataLoader(dataset, batch_size=1)


class Peipei(nn.Module):
    def __init__(self) -> None:
        super(Peipei, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2, stride=1),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),  # 64 channels * 4 * 4 after three 2x poolings
            Linear(64, 10)     # one score per CIFAR-10 class
        )

    def forward(self, x):
        x = self.model1(x)
        return x


peipei = Peipei()
# Cross-entropy loss for the 10-class classification output
loss = nn.CrossEntropyLoss()
```

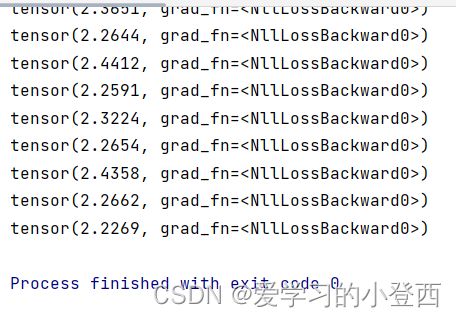
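Before looping over the whole dataset, a quick shape check can confirm that the network produces the (N, 10) scores that `CrossEntropyLoss` expects and that `Linear(1024, 64)` matches the flattened size. A sketch, assuming an all-ones dummy batch of 64 images and random targets in place of real CIFAR-10 data; the layer stack is copied from `Peipei` so the snippet runs on its own:

```python
import torch
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear

# Same layer stack as Peipei, rebuilt here so the snippet is self-contained
model1 = Sequential(
    Conv2d(3, 32, 5, padding=2, stride=1),  # 3x32x32 -> 32x32x32
    MaxPool2d(2),                           # -> 32x16x16
    Conv2d(32, 32, 5, padding=2),           # -> 32x16x16
    MaxPool2d(2),                           # -> 32x8x8
    Conv2d(32, 64, 5, padding=2),           # -> 64x8x8
    MaxPool2d(2),                           # -> 64x4x4
    Flatten(),                              # -> 64 * 4 * 4 = 1024
    Linear(1024, 64),
    Linear(64, 10),
)

# Dummy batch of 64 CIFAR-10-sized images (all ones, not real data)
dummy = torch.ones((64, 3, 32, 32))
out = model1(dummy)
print(out.shape)  # torch.Size([64, 10])

# CrossEntropyLoss takes (N, C) scores and N class indices in [0, C)
targets = torch.randint(0, 10, (64,))
loss = nn.CrossEntropyLoss()(out, targets)
print(loss.shape)  # torch.Size([]), i.e. a scalar
```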

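```python
for data in dataloader:
    imgs, targets = data
    outputs = peipei(imgs)
    result_loss = loss(outputs, targets)
    print(result_loss)
    # Backpropagation: compute the gradient of each node (parameter), so that
    # a suitable optimizer can later use these gradients to update the model.
    # Nothing here zeroes the gradients, so they accumulate across iterations.
    result_loss.backward()
```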
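`backward()` only computes gradients; an optimizer is what uses them to change the parameters. A minimal sketch of that hand-off, assuming a tiny stand-in model (`nn.Linear(4, 3)`) and random data instead of the CIFAR-10 network, with plain `torch.optim.SGD` chosen only for illustration:

```python
import torch
from torch import nn

torch.manual_seed(0)

# Stand-in model and fake batch (not the Peipei network or CIFAR-10)
model = nn.Linear(4, 3)
inputs = torch.randn(8, 4)
targets = torch.randint(0, 3, (8,))
loss_fn = nn.CrossEntropyLoss()

# No gradient exists before backward() has been called
print(model.weight.grad)  # None

loss = loss_fn(model(inputs), targets)
loss.backward()  # fills .grad of every parameter with d(loss)/d(param)
grad = model.weight.grad.clone()
print(grad.shape)  # torch.Size([3, 4]), same shape as the weight

# The optimizer uses the stored gradients to update the parameters
optim = torch.optim.SGD(model.parameters(), lr=0.01)
before = model.weight.detach().clone()
optim.step()
expected = before - 0.01 * grad
print(torch.allclose(model.weight.detach(), expected))  # True

# Clear gradients so the next backward() does not accumulate into them
optim.zero_grad()
```

With its default settings (no momentum, no weight decay), `SGD.step()` applies w ← w - lr · grad, which is what the final check compares against.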