【gstreamer opencv::Mat】Converting OpenCV cv::Mat data into an MP4 video

Contents

- Preface
- Implementation
  - VideoWriter.h
  - VideoWriter.cc
  - WriterDemo.cc
  - CMakeLists.txt
- References

Preface

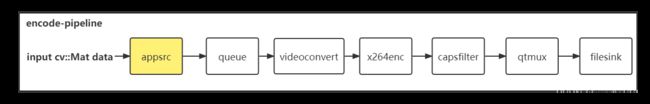

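- Problem: after the previous post, which used GStreamer to read every frame of a video into a cv::Mat, a new requirement came in: save the processed cv::Mat data as a video. This means setting up data transfer from the application into the pipeline. In a GStreamer pipeline the usual input elements are filesrc for local files, souphttpsrc for files over HTTP, and appsrc for data produced by the application, so appsrc is clearly the input element needed here.
- Analysis: use appsrc to push the cv::Mat frames into the pipeline, convert and encode them to H.264, mux them into an MP4 container, and let filesink write the result to disk: appsrc → queue → videoconvert → x264enc → capsfilter → qtmux → filesink.
- GitHub repository: VideoReaderWriteOfRB5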
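The same chain can also be expressed as one gst-launch-style description. The sketch below is a hypothetical helper (BuildWriterPipeline is our own name, not part of the project) that only builds the description string for the elements and caps that VideoWriter::Open() links one by one; it does not call GStreamer itself.

```cpp
#include <cassert>
#include <sstream>
#include <string>

// Hypothetical helper: describes appsrc -> queue -> videoconvert -> x264enc
// -> capsfilter -> qtmux -> filesink in gst-launch syntax.
std::string BuildWriterPipeline(int width, int height, int fpsNum, int fpsDen,
                                const std::string& location) {
  assert(width > 0 && height > 0 && fpsNum > 0 && fpsDen > 0);
  std::ostringstream desc;
  // appsrc: raw BGR frames (the cv::Mat layout), timestamps in time format
  desc << "appsrc name=src format=time caps=\"video/x-raw,format=BGR,width="
       << width << ",height=" << height << ",framerate=" << fpsNum << "/"
       << fpsDen << "\"";
  // encode to AVC-format H.264 (main profile), mux to MP4, write to a file
  desc << " ! queue ! videoconvert ! x264enc"
       << " ! video/x-h264,stream-format=avc,profile=main"
       << " ! qtmux ! filesink location=" << location;
  return desc.str();
}
```

Under these assumptions, the string could be passed to gst_parse_launch() and the appsrc fetched with gst_bin_get_by_name(GST_BIN(pipeline), "src"); a location containing spaces would additionally need quoting. Building the elements by hand, as the post does, keeps each element pointer available without a lookup.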

Implementation

- Note: the test demo here also uses the VideoReader code.

- The directory tree now looks like this:

```
.
|-- CMakeLists.txt
|-- ReaderDemo.cc
|-- VideoReader.cc
|-- VideoReader.h
|-- VideoWriter.cc
|-- VideoWriter.h
|-- WriterDemo.cc
`-- build
```

- The program has been tested and can be run directly:

```bash
sudo ./VideoWriterDome ./input.mp4 ./out.mp4
```

Here is the code:

VideoWriter.h

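```cpp
#ifndef VIDEO_WRITER_H_
#define VIDEO_WRITER_H_

#include <gst/gst.h>

#include <string>
#include <utility>

#include "opencv2/opencv.hpp"

class VideoWriter {
 public:
  VideoWriter() = default;
  ~VideoWriter();

  // The writer owns GStreamer objects, so copying is disabled
  VideoWriter(const VideoWriter&) = delete;
  VideoWriter& operator=(const VideoWriter&) = delete;

  /**
   * @brief Open the output; url is the path of the MP4 file to write
   *        (filesink writes to a local file). Call the setters first.
   */
  int Open(const std::string url);

  /**
   * @brief Set the video frame rate
   * @param framerate fps = framerate.first / framerate.second
   */
  void SetFramerate(std::pair<int, int> framerate) { framerate_ = framerate; }

  /**
   * @brief Set the video resolution
   */
  void SetSize(int width, int height) {
    width_ = width;
    height_ = height;
  }

  /**
   * @brief Set the video bitrate
   * @param bitrate in bit/s
   */
  void SetBitrate(int bitrate) { bitrate_ = bitrate; }

  /**
   * @brief Write one video frame (8-bit, 3-channel BGR cv::Mat)
   * @param timestamp in seconds
   */
  int Write(const cv::Mat& frame, double timestamp);

 private:
  int PushData2Pipeline(const cv::Mat& frame, double timestamp);

  // Initialized so the destructor is safe even if Open() was never called
  GstElement* pipeline_ = nullptr;
  GstElement* appSrc_ = nullptr;
  GstElement* queue_ = nullptr;
  GstElement* videoConvert_ = nullptr;
  GstElement* encoder_ = nullptr;
  GstElement* capsFilter_ = nullptr;
  GstElement* mux_ = nullptr;
  GstElement* sink_ = nullptr;

  int width_ = 0;
  int height_ = 0;
  int bitrate_ = 0;
  std::pair<int, int> framerate_{30, 1};
};

#endif  // VIDEO_WRITER_H_
```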
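The setters above mix three kinds of units: a frame-rate fraction, a bitrate in bit/s, and a resolution that fixes how many bytes each appsrc buffer must hold. The sketch below gathers the conversions in hypothetical helpers (FramerateToFps, BitrateToX264Kbps, GstBgrStride and BgrFrameBytes are our names, not the project's). GstBgrStride encodes our understanding of GStreamer's default layout for packed 24-bit BGR, where each row is padded to a multiple of 4 bytes; a tightly packed cv::Mat row (width * 3 bytes) matches it only when width * 3 is divisible by 4, as it is for 1920.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>

// fps as a real number from the {numerator, denominator} pair,
// e.g. {30000, 1001} is about 29.97 fps
double FramerateToFps(const std::pair<int, int>& framerate) {
  assert(framerate.first > 0 && framerate.second > 0);
  return static_cast<double>(framerate.first) / framerate.second;
}

// SetBitrate() takes bit/s; x264enc's "bitrate" property is in kbit/s
unsigned int BitrateToX264Kbps(int bitsPerSecond) {
  assert(bitsPerSecond >= 1000);
  return static_cast<unsigned int>(bitsPerSecond / 1000);
}

// Assumed default row stride of a packed BGR frame: width * 3 rounded up to 4
std::size_t GstBgrStride(int width) {
  assert(width > 0);
  return (static_cast<std::size_t>(width) * 3 + 3) & ~static_cast<std::size_t>(3);
}

// Bytes one appsrc buffer must contain for caps BGR, width x height
std::size_t BgrFrameBytes(int width, int height) {
  assert(height > 0);
  return GstBgrStride(width) * static_cast<std::size_t>(height);
}
```

For 1920x1080 the stride is 5760 bytes and a frame is 6220800 bytes, exactly what frame.total() * frame.elemSize() gives for a continuous CV_8UC3 Mat. For a width such as 641 the padded stride (1924) differs from the packed row (1923), so a plain memcpy would no longer line up.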

VideoWriter.cc

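```cpp
#include "VideoWriter.h"

#include <cstring>
#include <iostream>

VideoWriter::~VideoWriter() {
  if (pipeline_ && appSrc_) {
    // Send EOS so that qtmux can finalize the MP4 (it writes the index on EOS)
    GstFlowReturn retflow = GST_FLOW_OK;
    g_signal_emit_by_name(appSrc_, "end-of-stream", &retflow);
    if (retflow != GST_FLOW_OK) {
      std::cerr << "We got some error when sending eos!" << std::endl;
    }
    std::cout << "EOS sent. Writing the last few frames..." << std::endl;

    // Block until EOS (or an error) reaches the bus, i.e. until everything has
    // been written; the timeout avoids hanging forever if it never arrives
    GstBus* bus = gst_element_get_bus(pipeline_);
    GstMessage* msg = gst_bus_timed_pop_filtered(
        bus, 10 * GST_SECOND,
        static_cast<GstMessageType>(GST_MESSAGE_EOS | GST_MESSAGE_ERROR));
    if (msg == nullptr || GST_MESSAGE_TYPE(msg) == GST_MESSAGE_ERROR) {
      std::cerr << "The output file may be incomplete" << std::endl;
    } else {
      std::cout << "Writing Done!" << std::endl;
    }
    if (msg) gst_message_unref(msg);
    gst_object_unref(bus);
  }

  if (pipeline_) {
    gst_element_set_state(pipeline_, GST_STATE_NULL);
    gst_object_unref(pipeline_);  // also releases the elements added to the bin
    pipeline_ = nullptr;
  }
}

int VideoWriter::Open(const std::string url) {
  if (width_ <= 0 || height_ <= 0) {
    std::cerr << "Call SetSize() before Open()" << std::endl;
    return -1;
  }

  appSrc_ = gst_element_factory_make("appsrc", "AppSrc");
  queue_ = gst_element_factory_make("queue", "QueueWrite");
  videoConvert_ = gst_element_factory_make("videoconvert", "Videoconvert");
  encoder_ = gst_element_factory_make("x264enc", "X264enc");
  capsFilter_ = gst_element_factory_make("capsfilter", "Capsfilter");
  mux_ = gst_element_factory_make("qtmux", "Qtmux");
  sink_ = gst_element_factory_make("filesink", "OutputFile");

  // Create the empty pipeline
  pipeline_ = gst_pipeline_new("encode-pipeline");

  if (!pipeline_ || !appSrc_ || !queue_ || !videoConvert_ || !encoder_ ||
      !capsFilter_ || !mux_ || !sink_) {
    std::cerr << "Not all elements could be created" << std::endl;
    return -1;
  }

  // appsrc delivers packed BGR, the layout of an 8-bit 3-channel cv::Mat
  const std::string srcFmt = "BGR";

  // stream-type 0 = GST_APP_STREAM_TYPE_STREAM; timestamps are in time format
  g_object_set(G_OBJECT(appSrc_), "stream-type", 0, "format", GST_FORMAT_TIME, nullptr);

  GstCaps* srcCaps = gst_caps_new_simple(
      "video/x-raw", "format", G_TYPE_STRING, srcFmt.c_str(),
      "width", G_TYPE_INT, width_, "height", G_TYPE_INT, height_,
      "framerate", GST_TYPE_FRACTION, framerate_.first, framerate_.second, nullptr);
  g_object_set(G_OBJECT(appSrc_), "caps", srcCaps, nullptr);
  gst_caps_unref(srcCaps);  // the element keeps its own reference

  // Ask x264enc for AVC-format H.264, main profile, which qtmux can mux
  GstCaps* h264Caps = gst_caps_new_simple(
      "video/x-h264", "stream-format", G_TYPE_STRING, "avc",
      "profile", G_TYPE_STRING, "main", nullptr);
  g_object_set(G_OBJECT(capsFilter_), "caps", h264Caps, nullptr);
  gst_caps_unref(h264Caps);

  g_object_set(G_OBJECT(sink_), "location", url.c_str(), nullptr);

  // Video bitrate: x264enc's "bitrate" property is in kbit/s, bitrate_ in bit/s;
  // default to 1000 kbit/s when SetBitrate() was not called
  const guint kbps = bitrate_ >= 1000 ? static_cast<guint>(bitrate_ / 1000) : 1000;
  g_object_set(G_OBJECT(encoder_), "bitrate", kbps, nullptr);

  // Build the pipeline
  gst_bin_add_many(GST_BIN(pipeline_), appSrc_, queue_, videoConvert_, encoder_,
                   capsFilter_, mux_, sink_, nullptr);
  if (gst_element_link_many(appSrc_, queue_, videoConvert_, encoder_, capsFilter_,
                            mux_, sink_, nullptr) != TRUE) {
    std::cerr << "appSrc, queue, videoConvert, encoder, capsFilter, mux and sink "
                 "could not be linked" << std::endl;
    return -1;
  }

  // Start playing
  auto ret = gst_element_set_state(pipeline_, GST_STATE_PLAYING);
  if (ret == GST_STATE_CHANGE_FAILURE) {
    std::cerr << "Unable to set the pipeline to the playing state" << std::endl;
    return -1;
  }
  return 0;
}

int VideoWriter::PushData2Pipeline(const cv::Mat& frame, double timestamp) {
  // The appsrc caps promise 8-bit BGR frames of width_ x height_
  if (frame.type() != CV_8UC3 || frame.cols != width_ || frame.rows != height_) {
    std::cerr << "Frame does not match the configured size/format" << std::endl;
    return -1;
  }
  // ROI views are not contiguous in memory; copy them into a packed Mat first.
  // GStreamer pads BGR rows to a multiple of 4 bytes by default, so this packed
  // copy assumes width * 3 is a multiple of 4 (true for 1920)
  const cv::Mat packed = frame.isContinuous() ? frame : frame.clone();

  // Create a new buffer and copy the pixels into it
  const gsize size = packed.total() * packed.elemSize();
  GstBuffer* buffer = gst_buffer_new_and_alloc(size);
  GstMapInfo map;
  if (!gst_buffer_map(buffer, &map, GST_MAP_WRITE)) {
    gst_buffer_unref(buffer);
    return -1;
  }
  std::memcpy(map.data, packed.data, size);
  gst_buffer_unmap(buffer, &map);

  // The timestamp and per-frame duration must be set on every buffer;
  // duration = GST_SECOND * den / num, which avoids truncating num / den
  GST_BUFFER_PTS(buffer) = static_cast<GstClockTime>(timestamp * GST_SECOND + 0.5);
  GST_BUFFER_DURATION(buffer) =
      gst_util_uint64_scale_int(GST_SECOND, framerate_.second, framerate_.first);

  // debug
  std::cout << "wrote size:" << size << std::endl;
  std::cout << "GST_BUFFER_DURATION(buffer):" << GST_BUFFER_DURATION(buffer) << std::endl;
  std::cout << "timestamp:" << GST_BUFFER_PTS(buffer) << std::endl;

  // Push the buffer into the appsrc; "push-buffer" does not take ownership
  GstFlowReturn ret;
  g_signal_emit_by_name(appSrc_, "push-buffer", buffer, &ret);
  gst_buffer_unref(buffer);

  if (ret != GST_FLOW_OK) {
    // We got some error, stop sending data
    std::cerr << "We got some error, stop sending data" << std::endl;
    return -1;
  }
  return 0;
}

int VideoWriter::Write(const cv::Mat& frame, double timestamp) {
  return PushData2Pipeline(frame, timestamp);
}
```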
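PushData2Pipeline() has to turn the reader's timestamp (seconds, as a double) into a nanosecond PTS and derive a per-frame duration from the frame-rate fraction. The sketch below reproduces that arithmetic in plain C++ with hypothetical helper names (SecondsToPts, FrameDurationNs) so it can be checked without GStreamer; kNsPerSecond stands in for GST_SECOND. Computing the duration as 1e9 * den / num matters for fractional rates: an expression of the form GST_SECOND / (num / den) with integer division turns 30000/1001 into 29 and yields about 34.48 ms per frame instead of about 33.37 ms.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Nanoseconds per second, the same value as GStreamer's GST_SECOND
constexpr std::uint64_t kNsPerSecond = 1000000000ULL;

// Seconds (double) -> nanosecond PTS, rounded to the nearest nanosecond
std::uint64_t SecondsToPts(double seconds) {
  assert(seconds >= 0.0);
  return static_cast<std::uint64_t>(std::llround(seconds * kNsPerSecond));
}

// Duration of one frame at fpsNum/fpsDen fps: 1e9 * den / num (rounded down)
std::uint64_t FrameDurationNs(int fpsNum, int fpsDen) {
  assert(fpsNum > 0 && fpsDen > 0);
  return kNsPerSecond * static_cast<std::uint64_t>(fpsDen) /
         static_cast<std::uint64_t>(fpsNum);
}
```

With GStreamer available, gst_util_uint64_scale_int(GST_SECOND, den, num) computes the same value while guarding against overflow, which is why the writer uses it.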

WriterDemo.cc

```cpp
#include <gst/gst.h>

#include <iostream>
#include <string>

#include "VideoReader.h"
#include "VideoWriter.h"

void TestVideoReadWrite(const std::string& url, const std::string& outUrl, int count) {
  std::cout << "video:" << url << std::endl;
  int width = 1920, height = 1080;

  VideoReader reader;
  reader.InputOriginSize(width, height);
  if (reader.Open(url) < 0) return;

  VideoWriter writer;
  writer.SetSize(width, height);
  writer.SetFramerate(reader.Framerate());
  if (writer.Open(outUrl) < 0) return;

  cv::Mat frame;
  int seq = 0;
  double timestamp = .0;
  while (seq++ < count) {
    if (reader.Read(frame, timestamp) < 0) break;
    // std::string filename = "./bin/" + std::to_string(seq) + ".jpg";
    // cv::imwrite(filename, frame);
    if (writer.Write(frame, timestamp) < 0) break;
  }
  std::cout << "video read write test exit" << std::endl;
  // writer's destructor sends EOS and finalizes the MP4 file on return
}

int main(int argc, char* argv[]) {
  gst_init(&argc, &argv);
  if (argc < 3) {
    std::cerr << "Usage: " << argv[0] << " <input.mp4> <output.mp4>" << std::endl;
    return -1;
  }
  std::string inputUrl(argv[1]);
  std::string outputUrl(argv[2]);
  TestVideoReadWrite(inputUrl, outputUrl, 100);  // write the first 100 frames
  return 0;
}
```
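The demo copies each frame's timestamp from VideoReader. If frames instead come from a source without timestamps, one option (our assumption, not what the project does) is to derive the PTS from the frame index for a constant frame rate. The hypothetical helper PtsForFrameIndex below computes it directly from the index rather than summing rounded per-frame durations, so the error does not accumulate.

```cpp
#include <cassert>
#include <cstdint>

// Nanoseconds per second, the same value as GStreamer's GST_SECOND
constexpr std::uint64_t kNsPerSecond = 1000000000ULL;

// PTS (ns) of frame `index` at a constant rate of fpsNum/fpsDen fps:
// index * den * 1e9 / num, evaluated in one step to avoid drift.
// Note: the product overflows after roughly 1.8e7 frames when den = 1001.
std::uint64_t PtsForFrameIndex(std::uint64_t index, int fpsNum, int fpsDen) {
  assert(fpsNum > 0 && fpsDen > 0);
  return index * static_cast<std::uint64_t>(fpsDen) * kNsPerSecond /
         static_cast<std::uint64_t>(fpsNum);
}
```

At 30000/1001 fps, frame 30000 lands exactly at 1001 s, whereas adding up 30000 truncated durations of 33366666 ns falls 20 µs short. In GStreamer code, gst_util_uint64_scale(index * GST_SECOND, den, num) performs the same scaling with overflow protection.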

CMakeLists.txt

For setting up OpenCV with CMake, see: Configuring OpenCV with CMake

```cmake
cmake_minimum_required(VERSION 3.6)
PROJECT(sample)

# If OpenCV is installed in a non-standard prefix, point OpenCV_DIR at the
# directory containing OpenCVConfig.cmake (e.g. .../lib/cmake/opencv4)
find_package(OpenCV REQUIRED)

# Let pkg-config supply the GStreamer/GLib include and library paths
# (glibconfig.h, for example, lives under lib/<arch>/glib-2.0/include)
find_package(PkgConfig REQUIRED)
pkg_check_modules(GST REQUIRED IMPORTED_TARGET gstreamer-1.0)

# Reader demo
add_executable(VideoReaderDome ReaderDemo.cc VideoReader.cc VideoReader.h)
target_include_directories(VideoReaderDome PUBLIC ${OpenCV_INCLUDE_DIRS})
target_link_libraries(VideoReaderDome PUBLIC ${OpenCV_LIBS} PkgConfig::GST)

# Writer demo
add_executable(VideoWriterDome WriterDemo.cc VideoWriter.cc VideoWriter.h VideoReader.cc VideoReader.h)
target_include_directories(VideoWriterDome PUBLIC ${OpenCV_INCLUDE_DIRS})
target_link_libraries(VideoWriterDome PUBLIC ${OpenCV_LIBS} PkgConfig::GST)
```

References

【Converting data to and from cv::Mat with appsink and appsrc in GStreamer】