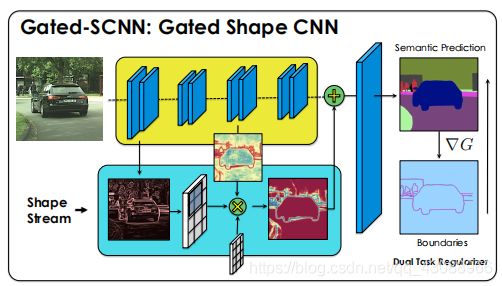

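Paper notes: Gated-SCNN: Gated Shape CNNs for Semantic Segmentation

- This paper was published at ICCV 2019 in the area of semantic segmentation. Its core idea is a two-stream architecture (a regular stream and a shape stream) whose outputs are fused with ASPP to produce the segmentation.

Paper: https://arxiv.org/abs/1907.05740

Code: https://nv-tlabs.github.io/GSCNN/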

1. The regular stream uses a standard CNN such as ResNet, VGGNet, or WideResNet as its backbone.

2. The feature maps produced by the regular stream and the shape stream are fused with Atrous Spatial Pyramid Pooling (ASPP).

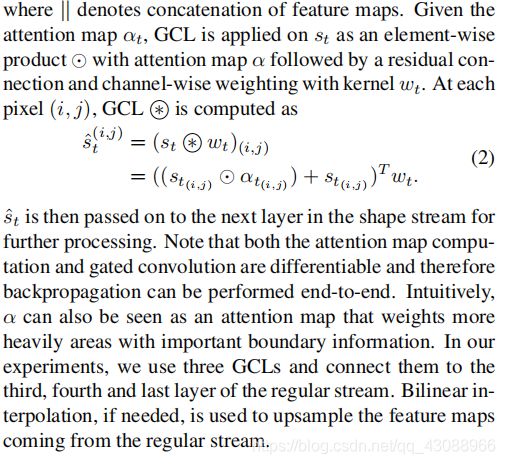

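3. The two streams are connected through the Gated Convolutional Layer (GCL) proposed in the paper. Higher-level features from the regular stream act as an attention-like gate on the shape stream, so that the shape stream keeps only boundary-related information.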

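**Gated Convolutional Layer (GCL)**

We first obtain an attention map $\alpha_t \in \mathbb{R}^{H \times W}$ by concatenating $r_t$ and $s_t$, followed by a normalized $1 \times 1$ convolutional layer $C_{1 \times 1}$, which in turn is followed by a sigmoid function $\sigma$:

$$\alpha_t = \sigma\big(C_{1 \times 1}(s_t \,\|\, r_t)\big)$$

where $\|$ denotes channel-wise concatenation.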
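To make the gating concrete, here is a minimal framework-free NumPy sketch of one gate. It is not the repository's `GatedSpatialConv2d`: the normalization around the 1×1 convolution is omitted, the residual form `s * alpha + s` reflects my reading of the paper rather than anything stated in these notes, and the names `conv1x1`, `gated_conv`, `w_attn`, `b_attn`, and `w_out` are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def conv1x1(x, weight, bias=None):
    """1x1 convolution on an (N, C, H, W) array: a per-pixel linear map over channels."""
    out = np.einsum("oc,nchw->nohw", weight, x)
    if bias is not None:
        out = out + bias[None, :, None, None]
    return out


def gated_conv(s, r, w_attn, b_attn, w_out):
    """Gate shape-stream features s with regular-stream features r."""
    # alpha_t = sigma(C_1x1(s_t || r_t)): one attention value per pixel.
    alpha = sigmoid(conv1x1(np.concatenate([s, r], axis=1), w_attn, b_attn))
    # Assumed residual form: keep s and add its attention-weighted copy.
    gated = s * alpha + s
    # Channel-wise 1x1 weighting of the gated features.
    return conv1x1(gated, w_out), alpha


rng = np.random.default_rng(0)
s = rng.standard_normal((2, 32, 8, 8))       # shape-stream features
r = rng.standard_normal((2, 1, 8, 8))        # 1-channel map from the regular stream
w_attn = rng.standard_normal((1, 33)) * 0.1  # maps 32 + 1 channels to 1 attention channel
b_attn = np.zeros(1)
w_out = rng.standard_normal((32, 32)) * 0.1

s_hat, alpha = gated_conv(s, r, w_attn, b_attn, w_out)
print(s_hat.shape, alpha.shape)
```

The attention map has a single channel, so the same per-pixel weight is broadcast across all 32 shape-stream channels.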

**Gated-SCNN source code:**

    import cv2
    import numpy as np
    import torch
    import torch.nn.functional as F
    from torch import nn

    # wider_resnet38_a2, Resnet, gsc, Norm2d, initialize_weights and
    # _AtrousSpatialPyramidPoolingModule are defined in the GSCNN repository.


    class GSCNN(nn.Module):
        '''
        Wide_resnet version of DeepLabV3
        mod1
        pool2
        mod2 str2
        pool3
        mod3-7

          structure: [3, 3, 6, 3, 1, 1]
          channels = [(128, 128), (256, 256), (512, 512), (512, 1024), (512, 1024, 2048),
                      (1024, 2048, 4096)]
        '''

        def __init__(self, num_classes, trunk=None, criterion=None):
            super(GSCNN, self).__init__()
            self.criterion = criterion
            self.num_classes = num_classes

            # Regular stream: WideResNet-38 backbone with dilated convolutions.
            wide_resnet = wider_resnet38_a2(classes=1000, dilation=True)
            # Wrapping and immediately unwrapping is a no-op as written here.
            wide_resnet = torch.nn.DataParallel(wide_resnet)
            wide_resnet = wide_resnet.module
            self.mod1 = wide_resnet.mod1
            self.mod2 = wide_resnet.mod2
            self.mod3 = wide_resnet.mod3
            self.mod4 = wide_resnet.mod4
            self.mod5 = wide_resnet.mod5
            self.mod6 = wide_resnet.mod6
            self.mod7 = wide_resnet.mod7
            self.pool2 = wide_resnet.pool2
            self.pool3 = wide_resnet.pool3
            self.interpolate = F.interpolate
            del wide_resnet

            # 1x1 convs that reduce regular-stream features to 1-channel side outputs.
            self.dsn1 = nn.Conv2d(64, 1, 1)
            self.dsn3 = nn.Conv2d(256, 1, 1)
            self.dsn4 = nn.Conv2d(512, 1, 1)
            self.dsn7 = nn.Conv2d(4096, 1, 1)

            # Shape stream: residual blocks with channel reduction 64 -> 32 -> 16 -> 8 -> 1.
            self.res1 = Resnet.BasicBlock(64, 64, stride=1, downsample=None)
            self.d1 = nn.Conv2d(64, 32, 1)
            self.res2 = Resnet.BasicBlock(32, 32, stride=1, downsample=None)
            self.d2 = nn.Conv2d(32, 16, 1)
            self.res3 = Resnet.BasicBlock(16, 16, stride=1, downsample=None)
            self.d3 = nn.Conv2d(16, 8, 1)
            self.fuse = nn.Conv2d(8, 1, kernel_size=1, padding=0, bias=False)

            # Fuses the predicted edge map with the Canny edge map (2 channels -> 1).
            self.cw = nn.Conv2d(2, 1, kernel_size=1, padding=0, bias=False)

            # Gated convolutional layers connecting the two streams.
            self.gate1 = gsc.GatedSpatialConv2d(32, 32)
            self.gate2 = gsc.GatedSpatialConv2d(16, 16)
            self.gate3 = gsc.GatedSpatialConv2d(8, 8)

            self.aspp = _AtrousSpatialPyramidPoolingModule(4096, 256,
                                                           output_stride=8)

            # Decoder: low-level features from m2 plus the reduced ASPP output.
            self.bot_fine = nn.Conv2d(128, 48, kernel_size=1, bias=False)
            self.bot_aspp = nn.Conv2d(1280 + 256, 256, kernel_size=1, bias=False)

            self.final_seg = nn.Sequential(
                nn.Conv2d(256 + 48, 256, kernel_size=3, padding=1, bias=False),
                Norm2d(256),
                nn.ReLU(inplace=True),
                nn.Conv2d(256, 256, kernel_size=3, padding=1, bias=False),
                Norm2d(256),
                nn.ReLU(inplace=True),
                nn.Conv2d(256, num_classes, kernel_size=1, bias=False))

            self.sigmoid = nn.Sigmoid()
            initialize_weights(self.final_seg)

        def forward(self, inp, gts=None):
            x_size = inp.size()

            # res 1
            m1 = self.mod1(inp)

            # res 2
            m2 = self.mod2(self.pool2(m1))

            # res 3
            m3 = self.mod3(self.pool3(m2))

            # res 4-7
            m4 = self.mod4(m3)
            m5 = self.mod5(m4)
            m6 = self.mod6(m5)
            m7 = self.mod7(m6)

            # 1-channel side outputs of the regular stream, upsampled to the input size.
            s3 = F.interpolate(self.dsn3(m3), x_size[2:],
                               mode='bilinear', align_corners=True)
            s4 = F.interpolate(self.dsn4(m4), x_size[2:],
                               mode='bilinear', align_corners=True)
            s7 = F.interpolate(self.dsn7(m7), x_size[2:],
                               mode='bilinear', align_corners=True)

            m1f = F.interpolate(m1, x_size[2:], mode='bilinear', align_corners=True)

            # Canny edges of the input image, computed on the CPU without gradients.
            im_arr = inp.cpu().numpy().transpose((0, 2, 3, 1)).astype(np.uint8)
            canny = np.zeros((x_size[0], 1, x_size[2], x_size[3]))
            for i in range(x_size[0]):
                canny[i] = cv2.Canny(im_arr[i], 10, 100)
            # Follow the input's device instead of hard-coding .cuda().
            canny = torch.from_numpy(canny).to(inp.device).float()

            # Shape stream: each stage is gated by a regular-stream side output.
            cs = self.res1(m1f)
            cs = F.interpolate(cs, x_size[2:],
                               mode='bilinear', align_corners=True)
            cs = self.d1(cs)
            cs = self.gate1(cs, s3)
            cs = self.res2(cs)
            cs = F.interpolate(cs, x_size[2:],
                               mode='bilinear', align_corners=True)
            cs = self.d2(cs)
            cs = self.gate2(cs, s4)
            cs = self.res3(cs)
            cs = F.interpolate(cs, x_size[2:],
                               mode='bilinear', align_corners=True)
            cs = self.d3(cs)
            cs = self.gate3(cs, s7)
            cs = self.fuse(cs)
            cs = F.interpolate(cs, x_size[2:],
                               mode='bilinear', align_corners=True)
            edge_out = self.sigmoid(cs)

            # Combine the predicted edges with the Canny edges into one boundary map.
            cat = torch.cat((edge_out, canny), dim=1)
            acts = self.cw(cat)
            acts = self.sigmoid(acts)

            # aspp
            x = self.aspp(m7, acts)
            dec0_up = self.bot_aspp(x)

            # Decoder: concatenate low-level features with the upsampled ASPP output.
            dec0_fine = self.bot_fine(m2)
            dec0_up = self.interpolate(dec0_up, m2.size()[2:], mode='bilinear', align_corners=True)
            dec0 = [dec0_fine, dec0_up]
            dec0 = torch.cat(dec0, 1)

            dec1 = self.final_seg(dec0)
            seg_out = self.interpolate(dec1, x_size[2:], mode='bilinear')

            if self.training:
                return self.criterion((seg_out, edge_out), gts)
            else:
                return seg_out, edge_out
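The step that merges the predicted edge map with the Canny map is a bias-free 1×1 convolution over two channels followed by a sigmoid, so at each pixel it reduces to a weighted sum of two numbers. The sketch below shows that per-pixel view in plain Python. The weights are hypothetical values picked for illustration, not trained ones, and `fuse_edges` is not a function from the repository.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def fuse_edges(edge_prob, canny_value, w_edge, w_canny):
    """Per-pixel view of a bias-free 1x1 conv over 2 channels, then a sigmoid."""
    return sigmoid(w_edge * edge_prob + w_canny * canny_value)


# Hypothetical weights, chosen only for illustration.
w_edge, w_canny = 2.0, 0.01

# cv2.Canny returns 0 for non-edge pixels and 255 for edge pixels.
for edge_prob, canny_value in [(0.1, 0.0), (0.9, 0.0), (0.1, 255.0), (0.9, 255.0)]:
    fused = fuse_edges(edge_prob, canny_value, w_edge, w_canny)
    print(f"edge={edge_prob:.1f} canny={canny_value:5.1f} -> {fused:.3f}")
```

Note that the forward pass above casts the Canny output to float without rescaling, so that channel takes the values 0 and 255 while the predicted edge channel lies in [0, 1]; the learned weight on the Canny channel has to absorb the difference in scale.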

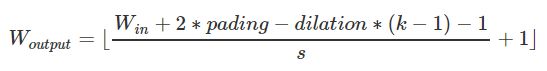

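Below is the implementation of the ASPP module, which in essence extracts features at multiple scales. ASPP was introduced in DeepLab V2; the five-branch form used here follows DeepLab V3: a 1x1 convolution, three 3x3 atrous convolutions with dilation rates 6, 12, and 18 (doubled to 12, 24, and 36 when output_stride is 8, as in GSCNN), and a global adaptive average pooling branch, all run in parallel and then concatenated. The ASPP in this paper additionally concatenates features computed from the boundary map produced by the shape stream.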
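A short arithmetic sketch may help explain why every atrous branch can be concatenated with the others: with a 3x3 kernel, setting `padding` equal to `dilation` keeps the spatial size unchanged while the effective kernel grows. The output-size formula is the one given in the PyTorch `Conv2d` documentation; the function names and the feature-map size of 128 are assumptions made for illustration.

```python
def effective_kernel(kernel_size, dilation):
    """Span covered by a dilated kernel along one axis."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def conv_out_size(size, kernel_size, dilation, padding, stride=1):
    """Output length along one axis, following the PyTorch Conv2d formula."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


feature_size = 128  # hypothetical feature-map height
for rate in [6, 12, 18, 24, 36]:
    k_eff = effective_kernel(3, rate)
    out = conv_out_size(feature_size, kernel_size=3, dilation=rate, padding=rate)
    print(f"rate={rate:2d}  effective kernel={k_eff:2d}  output size={out}")
```

For example, a rate of 6 gives an effective 13x13 kernel and a rate of 36 gives 73x73, yet each branch still outputs a 128-pixel axis, so the branches differ only in how much context they see.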

    # Requires torch, torch.nn as nn and torch.nn.functional as F;
    # Norm2d is the normalization helper from the GSCNN repository.
    class _AtrousSpatialPyramidPoolingModule(nn.Module):
        '''
        operations performed:
          1x1 x depth
          3x3 x depth dilation 6
          3x3 x depth dilation 12
          3x3 x depth dilation 18
          image pooling
          concatenate all together
          Final 1x1 conv
        '''

        def __init__(self, in_dim, reduction_dim=256, output_stride=16, rates=[6, 12, 18]):
            super(_AtrousSpatialPyramidPoolingModule, self).__init__()

            # The default rates assume output_stride 16; at output_stride 8
            # the feature map is twice as large, so the rates are doubled.
            if output_stride == 8:
                rates = [2 * r for r in rates]
            elif output_stride == 16:
                pass
            else:
                # Raising a plain string is invalid in Python 3; raise an exception.
                raise ValueError('output stride of {} not supported'.format(output_stride))

            self.features = []
            # 1x1
            self.features.append(
                nn.Sequential(nn.Conv2d(in_dim, reduction_dim, kernel_size=1, bias=False),
                              Norm2d(reduction_dim),
                              nn.ReLU(inplace=True)))
            # other rates
            for r in rates:
                self.features.append(nn.Sequential(
                    nn.Conv2d(in_dim, reduction_dim, kernel_size=3,
                              dilation=r, padding=r, bias=False),
                    Norm2d(reduction_dim),
                    nn.ReLU(inplace=True)
                ))
            self.features = torch.nn.ModuleList(self.features)

            # img level features
            self.img_pooling = nn.AdaptiveAvgPool2d(1)
            self.img_conv = nn.Sequential(
                nn.Conv2d(in_dim, reduction_dim, kernel_size=1, bias=False),
                Norm2d(reduction_dim),
                nn.ReLU(inplace=True))

            # Lifts the 1-channel boundary map to reduction_dim channels.
            self.edge_conv = nn.Sequential(
                nn.Conv2d(1, reduction_dim, kernel_size=1, bias=False),
                Norm2d(reduction_dim),
                nn.ReLU(inplace=True))

        def forward(self, x, edge):
            x_size = x.size()

            # Global image-level branch, broadcast back to the feature-map size.
            img_features = self.img_pooling(x)
            img_features = self.img_conv(img_features)
            img_features = F.interpolate(img_features, x_size[2:],
                                         mode='bilinear', align_corners=True)
            out = img_features

            # Boundary branch: resize the edge map to the feature-map size first.
            edge_features = F.interpolate(edge, x_size[2:],
                                          mode='bilinear', align_corners=True)
            edge_features = self.edge_conv(edge_features)
            out = torch.cat((out, edge_features), 1)

            # 1x1 branch and the three atrous branches.
            for f in self.features:
                y = f(x)
                out = torch.cat((out, y), 1)
            # The final 1x1 convolution is applied by the caller (bot_aspp in GSCNN).
            return out
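As a quick sanity check on the two listings, the channel counts can be tallied by hand: the ASPP module concatenates six groups of `reduction_dim` channels, which should equal the `1280 + 256` input channels of `bot_aspp` in `GSCNN`. The sketch below does that bookkeeping in plain Python; `aspp_out_channels` is a hypothetical helper, not part of the repository.

```python
def aspp_out_channels(reduction_dim=256, num_rates=3):
    """Channels produced by each ASPP branch and their total after concatenation."""
    branches = {
        "image pooling": reduction_dim,
        "edge": reduction_dim,
        "1x1 conv": reduction_dim,
        "atrous 3x3": num_rates * reduction_dim,
    }
    return sum(branches.values()), branches


total, branches = aspp_out_channels()
bot_aspp_in = 1280 + 256   # in_channels of bot_aspp in GSCNN
final_seg_in = 256 + 48    # bot_aspp output + bot_fine output

for name, channels in branches.items():
    print(f"{name:14s} {channels}")
print("ASPP total:", total)             # 1536
print("matches bot_aspp:", total == bot_aspp_in)
print("final_seg input:", final_seg_in)
```

The standard five-branch ASPP would give 1280 channels; the extra 256 come from the edge branch, which is why the source writes the sum as `1280 + 256`.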

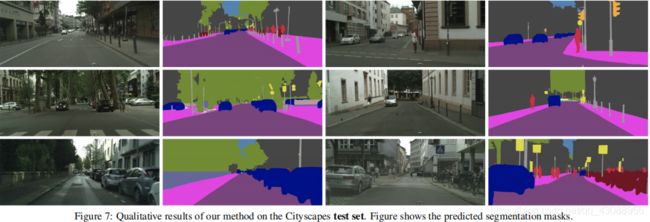

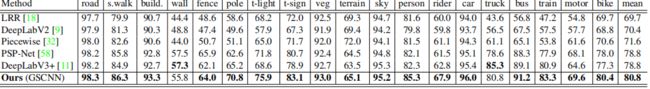

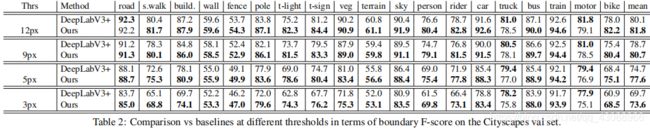

In the experiments, the dataset is Cityscapes and the baseline is DeepLab V3+.

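Segmentation results:

Conclusion:

This paper proposes Gated-SCNN (GSCNN), a new two-stream CNN architecture for semantic segmentation. We use a new gating mechanism to connect the intermediate layers, and propose a new loss function that exploits the duality between the tasks of semantic segmentation and semantic boundary prediction. Our experiments show that this leads to a highly effective architecture that produces sharper predictions around object boundaries and significantly boosts performance on thinner and smaller objects. Our architecture achieves state-of-the-art results on the challenging Cityscapes dataset, significantly improving over strong baselines.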