体素CVPR2019(二)DeepSDF: Learning Continuous Signed Distance Functions for Shape Representation

《DeepSDF: Learning Continuous Signed Distance Functions for Shape Representation》论文解读

- Abstract

- 1. Modeling SDFs with Neural Networks

- 2. Learning the Latent Space of Shapes

原文:DeepSDF: Learning Continuous Signed Distance Functions for Shape Representation

代码:Github

Abstract

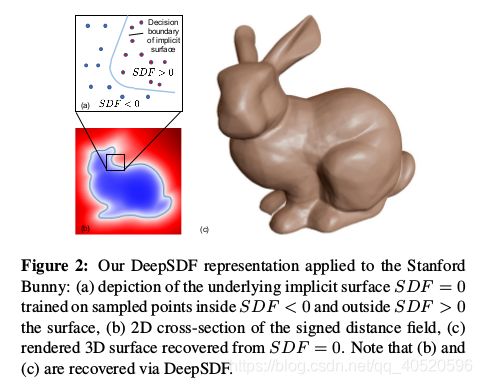

计算机图形学已经提出多种方法来表示3D几何图形。本文提出 DeepSDF (一个可学习有符号的连续距离函数SDF) 来表示3D形状,它能从部分和有噪声的3D输入数据中生成高质量的shape。

原理:通过连续的体积场表示形状的表面,场内点的大小即幅值表示其到表面的距离,符号表示在场外(+) 还是场内(-);解析形式或离散的体素形式的传统SDF通常只能表示某个形状,而DeepSDF可以表示一类形状,即:拿手来举例,SDF只能表示某个手的某个形态;而DeepSDF可以将手的所有形态都能表示出来。

1. Modeling SDFs with Neural Networks

该章节通过神经网络来得到SDF,由于SDF本身是一个把离散位置的点映射到一个距离值上的函数,该值的符号表示了该点在表面的内部还是外部. 一般用SDF = 0处表示surface。 因此该方法主要是通过深度网络来生成一个从离散点到 连续有符号距离函数(SDF) 的映射函数,没有voxelization的过程。由于离散化受 卷积参数大小 和 grid精度 所约束, 相比之下DeepSDF方法更加灵活。

S D F ( x ) = s : x ∈ R 3 , s ∈ R S D F(x)=s: x \in \mathbb{R}^{3}, s \in \mathbb{R} SDF(x)=s:x∈R3,s∈R

关键思想则是利用深度神经网络从点直接回归到连续SDF,输出给定位置的SDF值,表面的值为0。

理论上说,深度前馈神经网络能学到任意精度的完全连续函数,如Fig. 3a 所示:

给定目标形状,用3D点位置 x \boldsymbol{x} x 以及SDF值 s s s 来组成 X X X:

X = { ( x , s ) : S D F ( x ) = s } X=\{(\boldsymbol{x}, s): S D F(\boldsymbol{x})=s\} X={(x,s):SDF(x)=s}

在训练集 S 上训练多层全连接神经网络 f θ f_θ fθ 的参数 θ θ θ,使 f θ f_θ fθ 成为目标域Ω中给定 SDF 的良好逼近器,即:

f θ ( x ) ≈ SDF ( x ) , ∀ x ∈ Ω f_{\theta}(\boldsymbol{x}) \approx \operatorname{SDF}(\boldsymbol{x}), \forall \boldsymbol{x} \in \Omega fθ(x)≈SDF(x),∀x∈Ω

用L1损失函数来进行:

L ( f θ ( x ) , s ) = ∣ clamp ( f θ ( x ) , δ ) − clamp ( s , δ ) ∣ \mathcal{L}\left(f_{\theta}(\boldsymbol{x}), s\right)=\left|\operatorname{clamp}\left(f_{\theta}(\boldsymbol{x}), \delta\right)-\operatorname{clamp}(s, \delta)\right| L(fθ(x),s)=∣clamp(fθ(x),δ)−clamp(s,δ)∣,其中 clamp ( x , δ ) : = min ( δ , max ( − δ , x ) ) \operatorname{clamp}(x, \delta):=\min (\delta, \max (-\delta, x)) clamp(x,δ):=min(δ,max(−δ,x))

2. Learning the Latent Space of Shapes

该章节则是学习形状隐式空间,即 c o d e code code,由于针对一个shape来学习一个网络是很不实用的,因此本文引入隐式向量 z z z 代表目标形状的隐式密码,此时如Fig. 3b所示:用3D点位置 x \boldsymbol{x} x 以及 隐式密码 z z z 作为输入,此处与上面同理,使得:

f θ ( z i , x ) ≈ SDF i ( x ) f_{\theta}\left(\boldsymbol{z}_{i}, \boldsymbol{x}\right) \approx \operatorname{SDF}^{i}(\boldsymbol{x}) fθ(zi,x)≈SDFi(x),其中 i i i 指的是第 i i i个目标形状

这一部分的隐式密码 z i \boldsymbol{z}_{i} zi 采用自解码器来得到,给定N个shape,每个shape有K个点,输入的为: X i = { ( x j , s j ) : s j = S D F i ( x j ) } X_{i}=\left\{\left(\boldsymbol{x}_{j}, s_{j}\right): s_{j}=S D F^{i}\left(\boldsymbol{x}_{j}\right)\right\} Xi={(xj,sj):sj=SDFi(xj)},那么根据概率论则有:

p θ ( z i ∣ X i ) = p ( z i ) ∏ ( x j , s j ) ∈ X i p θ ( s j ∣ z i ; x j ) p_{\theta}\left(\boldsymbol{z}_{i} \mid X_{i}\right)=p\left(\boldsymbol{z}_{i}\right) \prod_{\left(\boldsymbol{x}_{j}, \boldsymbol{s}_{j}\right) \in X_{i}} p_{\theta}\left(\boldsymbol{s}_{j} \mid z_{i} ; \boldsymbol{x}_{j}\right) pθ(zi∣Xi)=p(zi)∏(xj,sj)∈Xipθ(sj∣zi;xj)

p θ ( s j ∣ z i ; x j ) = exp ( − L ( f θ ( z i , x j ) , s j ) ) p_{\theta}\left(\boldsymbol{s}_{j} \mid z_{i} ; \boldsymbol{x}_{j}\right)=\exp \left(-\mathcal{L}\left(f_{\theta}\left(\boldsymbol{z}_{i}, \boldsymbol{x}_{j}\right), s_{j}\right)\right) pθ(sj∣zi;xj)=exp(−L(fθ(zi,xj),sj))

在训练阶段,通过下式来优化 θ \theta θ 以及 z i \boldsymbol{z}_{i} zi:

arg min θ , { z i } i = 1 N ∑ i = 1 N ( ∑ j = 1 K L ( f θ ( z i , x j ) , s j ) + 1 σ 2 ∥ z i ∥ 2 2 ) \underset{\theta,\left\{\boldsymbol{z}_{i}\right\}_{i=1}^{N}}{\arg \min } \sum_{i=1}^{N}\left(\sum_{j=1}^{K} \mathcal{L}\left(f_{\theta}\left(\boldsymbol{z}_{i}, \boldsymbol{x}_{j}\right), \boldsymbol{s}_{j}\right)+\frac{1}{\sigma^{2}}\left\|\boldsymbol{z}_{i}\right\|_{2}^{2}\right) θ,{zi}i=1Nargmin∑i=1N(∑j=1KL(fθ(zi,xj),sj)+σ21∥zi∥22)

在推理阶段则是固定 θ \theta θ , z i \boldsymbol{z}_{i} zi可以通过 Maximum-aPosterior (MAP) estimation来得到,即:

z ^ = arg min z ∑ ( x j , s j ) ∈ X L ( f θ ( z , x j ) , s j ) + 1 σ 2 ∥ z ∥ 2 2 \hat{\boldsymbol{z}}=\underset{\boldsymbol{z}}{\arg \min } \sum_{\left(\boldsymbol{x}_{j}, \boldsymbol{s}_{j}\right) \in X} \mathcal{L}\left(f_{\theta}\left(\boldsymbol{z}, \boldsymbol{x}_{j}\right), s_{j}\right)+\frac{1}{\sigma^{2}}\|\boldsymbol{z}\|_{2}^{2} z^=zargmin∑(xj,sj)∈XL(fθ(z,xj),sj)+σ21∥z∥22

import torch.nn as nn

import torch

import torch.nn.functional as F

class Decoder(nn.Module):

def __init__(

self,

latent_size,

dims,

dropout=None,

dropout_prob=0.0,

norm_layers=(),

latent_in=(),

weight_norm=False,

xyz_in_all=None,

use_tanh=False,

latent_dropout=False,

):

super(Decoder, self).__init__()

def make_sequence():

return []

dims = [latent_size + 3] + dims + [1]

self.num_layers = len(dims)

self.norm_layers = norm_layers

self.latent_in = latent_in

self.latent_dropout = latent_dropout

if self.latent_dropout:

self.lat_dp = nn.Dropout(0.2)

self.xyz_in_all = xyz_in_all

self.weight_norm = weight_norm

for layer in range(0, self.num_layers - 1):

if layer + 1 in latent_in:

out_dim = dims[layer + 1] - dims[0]

else:

out_dim = dims[layer + 1]

if self.xyz_in_all and layer != self.num_layers - 2:

out_dim -= 3

if weight_norm and layer in self.norm_layers:

setattr(

self,

"lin" + str(layer),

nn.utils.weight_norm(nn.Linear(dims[layer], out_dim)),

)

else:

setattr(self, "lin" + str(layer), nn.Linear(dims[layer], out_dim))

if (

(not weight_norm)

and self.norm_layers is not None

and layer in self.norm_layers

):

setattr(self, "bn" + str(layer), nn.LayerNorm(out_dim))

self.use_tanh = use_tanh

if use_tanh:

self.tanh = nn.Tanh()

self.relu = nn.ReLU()

self.dropout_prob = dropout_prob

self.dropout = dropout

self.th = nn.Tanh()

# input: N x (L+3)

def forward(self, input):

xyz = input[:, -3:]

if input.shape[1] > 3 and self.latent_dropout:

latent_vecs = input[:, :-3]

latent_vecs = F.dropout(latent_vecs, p=0.2, training=self.training)

x = torch.cat([latent_vecs, xyz], 1)

else:

x = input

for layer in range(0, self.num_layers - 1):

lin = getattr(self, "lin" + str(layer))

if layer in self.latent_in:

x = torch.cat([x, input], 1)

elif layer != 0 and self.xyz_in_all:

x = torch.cat([x, xyz], 1)

x = lin(x)

# last layer Tanh

if layer == self.num_layers - 2 and self.use_tanh:

x = self.tanh(x)

if layer < self.num_layers - 2:

if (

self.norm_layers is not None

and layer in self.norm_layers

and not self.weight_norm

):

bn = getattr(self, "bn" + str(layer))

x = bn(x)

x = self.relu(x)

if self.dropout is not None and layer in self.dropout:

x = F.dropout(x, p=self.dropout_prob, training=self.training)

if hasattr(self, "th"):

x = self.th(x)

return x