Zed-Unity Plugin Code Annotations: ZEDCommon.cs

Update

Omitted (2020-09-01 14.31)

Introduction

Omitted (2020-9-01 14.31)

Environment

Omitted (2020-09-01 14.31)

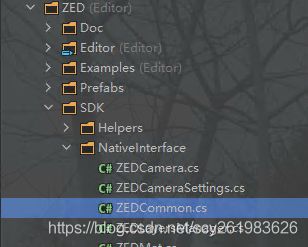

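ZEDCommon.cs script overview

- Script location:

- Script purpose:

This script mainly defines a large number of enum types and data structures, which are used when exchanging data between Unity and the underlying native library.

These enums and structs are defined in the sl namespace.

- Script usage:

- It is used by the other files in the plugin. The script contains almost no functions, only two simple classes, so it is not described in detail here.

- Namespace: sl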

- Code structure:

Omitted (2020-09-01 14.29)
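As a rough illustration of what exchanging data with a native library involves, the sketch below copies a struct shaped like this file's Matrix3x3 into unmanaged memory and back with System.Runtime.InteropServices.Marshal, and casts a raw integer to an enum the way a return code from the DLL might be interpreted. It runs without Unity or the ZED SDK. The MarshalSketch class, its method names, and the two-member DemoErrorCode enum are hypothetical stand-ins for illustration, not part of the plugin.

```csharp
using System;
using System.Runtime.InteropServices;

// Same shape as sl.Matrix3x3 in ZEDCommon.cs: nine floats stored inline.
[StructLayout(LayoutKind.Sequential)]
public struct Matrix3x3
{
    [MarshalAs(UnmanagedType.ByValArray, SizeConst = 9)]
    public float[] m;
}

// Hypothetical stand-in for an SDK enum; values are implicit (0, 1, ...).
public enum DemoErrorCode
{
    SUCCESS,
    FAILURE
}

// Hypothetical helper class, not part of the ZED plugin.
public static class MarshalSketch
{
    // Unmanaged size of the struct: 9 floats * 4 bytes each.
    public static int NativeSize()
    {
        return Marshal.SizeOf(typeof(Matrix3x3));
    }

    // Copies the struct into unmanaged memory and reads it back, which is
    // roughly the copy that happens when such a struct crosses into a DLL.
    public static Matrix3x3 RoundTrip(Matrix3x3 managed)
    {
        IntPtr buffer = Marshal.AllocHGlobal(NativeSize());
        try
        {
            Marshal.StructureToPtr(managed, buffer, false);
            return (Matrix3x3)Marshal.PtrToStructure(buffer, typeof(Matrix3x3));
        }
        finally
        {
            Marshal.FreeHGlobal(buffer);
        }
    }

    // Interprets a raw integer (as a native function might return) as an enum.
    public static DemoErrorCode FromNative(int raw)
    {
        return (DemoErrorCode)raw;
    }

    public static void Main()
    {
        var identity = new Matrix3x3 { m = new float[] { 1, 0, 0, 0, 1, 0, 0, 0, 1 } };
        Matrix3x3 copy = RoundTrip(identity);
        Console.WriteLine(NativeSize());   // 36
        Console.WriteLine(copy.m[4]);      // 1
        Console.WriteLine(FromNative(0));  // SUCCESS
    }
}
```

The 36-byte size follows from SizeConst = 9 with 4-byte floats. The enum cast only gives the right answer when the C# members are declared in the same order as on the native side, which is presumably why the enums in this file mirror the SDK's definitions.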

Code (annotated)

//======= Copyright (c) Stereolabs Corporation, All rights reserved. ===============

using System.Runtime.InteropServices;

using UnityEngine;

// This file holds classes built to be exchanged between the ZED wrapper DLL (sl_unitywrapper.dll)

// and C# scripts within Unity.

// Most have parity with a structure within the ZED C++ SDK.

// Find more info at https://www.stereolabs.com/developers/documentation/API/latest/.

namespace sl

{

///

/// Defines the ZEDCommon class.

///

public class ZEDCommon

{

public const string NameDLL = "sl_unitywrapper"; // Name of the native dynamic-link library (DLL)

}

// Enum of camera IDs

public enum ZED_CAMERA_ID

{

CAMERA_ID_01,

CAMERA_ID_02,

CAMERA_ID_03,

CAMERA_ID_04,

};

// Enum of input types

public enum INPUT_TYPE

{

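INPUT_TYPE_USB, // USB input

INPUT_TYPE_SVO, // SVO file input; SVO is a recording format defined by the ZED vendor (Stereolabs) and includes a timestamp for every frame

INPUT_TYPE_STREAM // Stream input; I am not sure about this one yet, possibly data arriving through a pipe or over the network?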

};

///

/// Constant for plugin. Should not be changed

///

public enum Constant

{

MAX_CAMERA_PLUGIN = 4, // Maximum number of cameras allowed: 4

PLANE_DISTANCE = 10, // Maximum plane detection distance: 10 meters

MAX_OBJECTS = 200 // Maximum number of detected objects

};

///

/// Holds a 3x3 matrix that can be marshaled between the ZED

/// Unity wrapper and C# scripts.

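/// Lance: the issue this addresses is that managed and unmanaged code handle memory differently.

/// Managed code does not have to manage memory itself, but unmanaged code does.

/// The runtime has to prepare unmanaged memory before calling into the DLL, because an unmanaged DLL cannot work with managed memory.

/// In effect, the data held in managed memory is copied into unmanaged memory so that the unmanaged code can access and process it.

/// For details see: https://bbs.csdn.net/topics/390419351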

///

public struct Matrix3x3

{

[MarshalAs(UnmanagedType.ByValArray, SizeConst = 9)] // Marshaled as an inline fixed-length array in unmanaged memory; SizeConst = 9 is the element count, i.e. 9 floats (36 bytes)

public float[] m; //3x3 matrix.

};

///

/// Holds a camera resolution as two pointers (for height and width) for easy

/// passing back and forth to the ZED Unity wrapper.

///

public struct Resolution

{

///

/// Resolution.

///

///

///

public Resolution(uint width, uint height)

{

this.width = (System.UIntPtr)width; // System.UIntPtr is a pointer-sized unsigned integer

this.height = (System.UIntPtr)height;

}

public System.UIntPtr width;

public System.UIntPtr height;

};

///

/// Pose structure with data on timing and validity in addition to

/// position and rotation.

///

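/// On struct layout, see: https://blog.csdn.net/u013472838/article/details/45574933

[StructLayout(LayoutKind.Sequential)] // Fields are laid out in memory in declaration order, each after the previous one (padding may still be added for alignment)

public struct Pose // Presumably the pose of the physical ZED camera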

{

public bool valid;

public ulong timestap;

public Quaternion rotation;

public Vector3 translation;

public int pose_confidence;

};

///

/// Rect structure to define a rectangle or a ROI in pixels

/// Use to set ROI target for AEC/AGC

///

[StructLayout(LayoutKind.Sequential)]

public struct iRect // A rectangle within the image

{

public int x;

public int y;

public int width;

public int height;

};

#region Structs for IMU, barometer, magnetometer and temperature sensor data

///

/// Full IMU data structure.

///

[StructLayout(LayoutKind.Sequential)]

public struct ImuData

{

///

/// Indicates if imu data is available

///

public bool available;

///

/// IMU Data timestamp in ns

///

public ulong timestamp;

///

/// Gyroscope calibrated data in degrees/second.

///

public Vector3 angularVelocity;

///

/// Accelerometer calibrated data in m/s².

///

public Vector3 linearAcceleration;

///

/// Gyroscope raw/uncalibrated data in degrees/second.

///

public Vector3 angularVelocityUncalibrated;

///

/// Accelerometer raw/uncalibrated data in m/s².

///

public Vector3 linearAccelerationUncalibrated;

///

/// Orientation from gyro/accelerator fusion.

///

public Quaternion fusedOrientation;

///

/// Covariance matrix of the quaternion.

///

public Matrix3x3 orientationCovariance;

///

/// Gyroscope raw data covariance matrix.

///

public Matrix3x3 angularVelocityCovariance;

///

/// Accelerometer raw data covariance matrix.

///

public Matrix3x3 linearAccelerationCovariance;

};

///

/// Barometer data; it seems this is rarely needed.

///

[StructLayout(LayoutKind.Sequential)]

public struct BarometerData

{

///

/// Indicates if barometer data is available

///

public bool available;

///

/// Barometer data timestamp in ns

///

public ulong timestamp;

///

/// Barometer ambient air pressure in hPa

///

public float pressure;

///

/// Relative altitude from first camera position

///

public float relativeAltitude;

};

///

/// Magnetometer data; it seems this is rarely needed as well.

///

[StructLayout(LayoutKind.Sequential)]

public struct MagnetometerData

{

///

/// Indicates if mag data is available

///

public bool available;

///

/// mag Data timestamp in ns

///

public ulong timestamp;

///

/// Magnetic field calibrated values in uT

///

///

public Vector3 magneticField;

///

/// Magnetic field raw values in uT

///

public Vector3 magneticFieldUncalibrated;

};

///

/// Temperature sensor data.

///

[StructLayout(LayoutKind.Sequential)]

public struct TemperatureSensorData

{

///

/// Temperature from IMU device ( -100 if not available)

///

public float imu_temp;

///

/// Temperature from Barometer device ( -100 if not available)

///

public float barometer_temp;

///

/// Temperature from Onboard left analog temperature sensor ( -100 if not available)

///

public float onboard_left_temp;

///

/// Temperature from Onboard right analog temperature sensor ( -100 if not available)

///

public float onboard_right_temp;

};

///

/// Sensor data.

/// Bundles the IMU, barometer, magnetometer and temperature sensor data defined above.

///

[StructLayout(LayoutKind.Sequential)]

public struct SensorsData

{

///

/// Contains Imu Data

///

public ImuData imu;

///

/// Contains Barometer Data

///

public BarometerData barometer;

///

/// Contains Mag Data

///

public MagnetometerData magnetometer;

///

/// Contains Temperature Data

///

public TemperatureSensorData temperatureSensor;

///

/// Indicates if the camera is:

/// -> Static : 0

/// -> Moving : 1

/// -> Falling : 2

///

public int camera_moving_state;

///

/// Indicates if the current sensors data is sync to the current image (>=1). Otherwise, value will be 0.

///

public int image_sync_val;

};

# endregion

/*******************************************************************************************************************************

*******************************************************************************************************************************/

#region Camera parameter and calibration parameter structs

// ---------- Camera sensor information below ---------- //

///

/// Calibration information for an individual sensor on the ZED (left or right).

/// For more information, see:

/// https://www.stereolabs.com/developers/documentation/API/v2.5.1/structsl_1_1CameraParameters.html

[StructLayout(LayoutKind.Sequential)]

public struct CameraParameters

{

///

/// Focal X.

///

public float fx; // Focal length along x

///

/// Focal Y.

///

public float fy; // Focal length along y

///

/// Optical center X.

///

public float cx; // Principal point (optical center) x

///

/// Optical center Y.

///

///

public float cy; // Principal point (optical center) y

///

/// Distortion coefficients.

///

[MarshalAs(UnmanagedType.ByValArray, ArraySubType = UnmanagedType.U8, SizeConst = 5)]

public double[] disto;

///

/// Vertical field of view after stereo rectification.

///

public float vFOV;

///

/// Horizontal field of view after stereo rectification.

///

public float hFOV;

///

/// Diagonal field of view after stereo rectification.

///

public float dFOV;

///

/// Camera's current resolution.

///

public Resolution resolution;

};

///

/// Holds calibration information about the current ZED's hardware, including per-sensor

/// calibration and offsets between the two sensors.

/// Lance: the offset presumably refers to the baseline between the two cameras.

/// For more info, see:

/// https://www.stereolabs.com/developers/documentation/API/v2.5.1/structsl_1_1CalibrationParameters.html

[StructLayout(LayoutKind.Sequential)]

public struct CalibrationParameters

{

///

/// Parameters of the left sensor.

///

public CameraParameters leftCam;

///

/// Parameters of the right sensor.

///

public CameraParameters rightCam;

///

/// Rotation (using Rodrigues' transformation) between the two sensors. Defined as 'tilt', 'convergence' and 'roll'.

///

public Quaternion Rot;

///

/// Translation between the two sensors. T[0] is the distance between the two cameras in meters.

///

public Vector3 Trans;

};

#endregion

///

/// Container for information about the current SVO recording process.

///

[StructLayout(LayoutKind.Sequential)]

public struct Recording_state

{

///

/// Status of the current frame. True if recording was successful, false if frame could not be written.

///

public bool status;

///

/// Compression time for the current frame in milliseconds.

///

public double current_compression_time;

///

/// Compression ratio (% of raw size) for the current frame.

///

public double current_compression_ratio;

///

/// Average compression time in millisecond since beginning of recording.

///

public double average_compression_time;

///

/// Compression ratio (% of raw size) since recording was started.

///

public double average_compression_ratio;

}

///

/// Status of the ZED's self-calibration. Since v0.9.3, self-calibration is done in the background and

/// starts in the sl.ZEDCamera.Init or Reset functions.

///

public enum ZED_SELF_CALIBRATION_STATE

{

///

/// Self-calibration has not yet been called (no Init() called).

///

SELF_CALIBRATION_NOT_CALLED,

///

/// Self-calibration is currently running.

///

SELF_CALIBRATION_RUNNING,

///

/// Self-calibration has finished running but did not manage to get coherent values. Old Parameters are used instead.

///

SELF_CALIBRATION_FAILED,

///

/// Self Calibration has finished running and successfully produces coherent values.

///

SELF_CALIBRATION_SUCCESS

};

///

/// Lists available depth computation modes. Each mode offers better accuracy than the

/// mode before it, but at a performance cost.

///

public enum DEPTH_MODE

{

///

/// Does not compute any depth map. Only rectified stereo images will be available.

///

NONE,

///

/// Fastest mode for depth computation.

///

PERFORMANCE,

///

/// Balanced quality mode. Depth map is robust in most environment and requires medium compute power.

///

QUALITY,

///

/// Native depth. Very accurate, but at a large performance cost.

///

ULTRA

};

///

/// Types of Image view modes, for creating human-viewable textures.

/// Used only in ZEDRenderingPlane as a simplified version of sl.VIEW, which has more detailed options.

///

public enum VIEW_MODE

{

///

/// Displays regular color images.

///

VIEW_IMAGE,

///

/// Displays a greyscale depth map.

/// Lance: the values have presumably been compressed into the 0-255 range.

///

VIEW_DEPTH,

///

/// Displays a normal map.

///

VIEW_NORMALS

};

///

/// List of error codes in the ZED SDK.

///

public enum ERROR_CODE

{

///

/// Operation was successful.

///

SUCCESS,

///

/// Standard, generic code for unsuccessful behavior when no other code is more appropriate.

///

FAILURE,

///

/// No GPU found, or CUDA capability of the device is not supported.

///

NO_GPU_COMPATIBLE,

///

/// Not enough GPU memory for this depth mode. Try a different mode (such as PERFORMANCE).

///

NOT_ENOUGH_GPUMEM,

///

/// The ZED camera is not plugged in or detected.

///

CAMERA_NOT_DETECTED,

///

/// A ZED Mini is detected but the inertial sensor cannot be opened. (Never called for original ZED)

///

SENSOR_NOT_DETECTED,

///

/// For Nvidia Jetson X1 only - resolution not yet supported (USB3.0 bandwidth).

///

INVALID_RESOLUTION,

///

/// USB communication issues. Occurs when the camera FPS cannot be reached, due to a lot of corrupted frames.

/// Try changing the USB port.

///

LOW_USB_BANDWIDTH,

///

/// ZED calibration file is not found on the host machine. Use ZED Explorer or ZED Calibration to get one.

///

CALIBRATION_FILE_NOT_AVAILABLE,

///

/// ZED calibration file is not valid. Try downloading the factory one or recalibrating using the ZED Calibration tool.

///

INVALID_CALIBRATION_FILE,

///

/// The provided SVO file is not valid.

///

INVALID_SVO_FILE,

///

/// An SVO recorder-related error occurred (such as not enough free storage or an invalid file path).

///

SVO_RECORDING_ERROR,

///

/// An SVO related error when NVIDIA based compression cannot be loaded

///

SVO_UNSUPPORTED_COMPRESSION,

///

/// The requested coordinate system is not available.

///

INVALID_COORDINATE_SYSTEM,

///

/// The firmware of the ZED is out of date. Update to the latest version.

///

INVALID_FIRMWARE,

///

/// An invalid parameter has been set for the function.

///

INVALID_FUNCTION_PARAMETERS,

///

/// In grab() only, the current call returns the same frame as the last call. Not a new frame.

///

NOT_A_NEW_FRAME,

///

/// In grab() only, a CUDA error has been detected in the process. Activate wrapperVerbose in ZEDManager.cs for more info.

///

CUDA_ERROR,

///

/// In grab() only, ZED SDK is not initialized. Probably a missing call to sl::Camera::open.

///

CAMERA_NOT_INITIALIZED,

///

/// Your NVIDIA driver is too old and not compatible with your current CUDA version.

///

NVIDIA_DRIVER_OUT_OF_DATE,

///

/// The function call is not valid in the current context. Could be a missing call to sl::Camera::open.

///

INVALID_FUNCTION_CALL,

///

/// The SDK wasn't able to load its dependencies, the installer should be launched.

///

CORRUPTED_SDK_INSTALLATION,

///

/// The installed SDK is not the SDK used to compile the program.

///

INCOMPATIBLE_SDK_VERSION,

///

/// The given area file does not exist. Check the file path.

///

INVALID_AREA_FILE,

///

/// The area file does not contain enough data to be used, or the sl::DEPTH_MODE used during the creation of the

/// area file is different from the one currently set.

///

INCOMPATIBLE_AREA_FILE,

///

/// Camera failed to set up.

///

CAMERA_FAILED_TO_SETUP,

///

/// Your ZED cannot be opened. Try replugging it to another USB port or flipping the USB-C connector (if using ZED Mini).

///

CAMERA_DETECTION_ISSUE,

///

/// The Camera is already in use by another process.

///

CAMERA_ALREADY_IN_USE,

///

/// No GPU found or CUDA is unable to list it. Can be a driver/reboot issue.

///

NO_GPU_DETECTED,

///

/// Plane not found. Either no plane is detected in the scene, at the location or corresponding to the floor,

/// or the floor plane doesn't match the prior given.

///

PLANE_NOT_FOUND,

///

/// Missing or corrupted AI module resources.

/// Please reinstall the ZED SDK with the AI (object detection) module to fix this issue.

///

AI_MODULE_NOT_AVAILABLE,

///

/// The cuDNN library cannot be loaded, or is not compatible with this version of the ZED SDK

///

INCOMPATIBLE_CUDNN_VERSION,

///

/// Internal SDK timestamp is not valid.

///

AI_INVALID_TIMESTAMP,

///

/// An error occurred while tracking objects.

///

AI_UNKNOWN_ERROR,

///

/// End of ERROR_CODE

///

ERROR_CODE_LAST

};

///

/// Represents the available resolution options.

///

public enum RESOLUTION

{

///

/// 2208*1242. Supported frame rate: 15 FPS.

///

HD2K,

///

/// 1920*1080. Supported frame rates: 15, 30 FPS.

///

HD1080,

///

/// 1280*720. Supported frame rates: 15, 30, 60 FPS.

///

HD720,

///

/// 672*376. Supported frame rates: 15, 30, 60, 100 FPS.

///

VGA

};

///

/// Types of compatible ZED cameras.

///

public enum MODEL

{

///

/// ZED(1)

///

ZED,

///

/// ZED Mini.

///

ZED_M,

///

/// ZED2.

///

ZED2

};

///

/// Lists available sensing modes - whether to produce the original depth map (STANDARD) or one with

/// smoothing and other effects added to fill gaps and roughness (FILL).

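/// Lance: this chooses between the raw depth data and the filtered depth data.

/// The raw data usually contains many black patches (holes) caused by the parallax between the two cameras, but the points that were matched successfully are more accurate. The filtered depth image is smoother and more robust, at the cost of lower accuracy at individual points.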

///

public enum SENSING_MODE

{

///

/// This mode outputs the standard ZED depth map that preserves edges and depth accuracy.

/// However, there will be missing data where a depth measurement couldn't be taken, such as from

/// a surface being occluded from one sensor but not the other.

/// Better for: Obstacle detection, autonomous navigation, people detection, 3D reconstruction.

///

STANDARD,

///

/// This mode outputs a smooth and fully dense depth map. It doesn't have gaps in the data

/// like STANDARD where depth can't be calculated directly, but the values it fills them with

/// are less accurate than a real measurement.

/// Better for: AR/VR, mixed-reality capture, image post-processing.

///

FILL

};

///

/// Lists available view types retrieved from the camera, used for creating human-viewable (Image-type) textures.

///

public enum VIEW

{

///

/// Left RGBA image. As a ZEDMat, MAT_TYPE is set to MAT_TYPE_8U_C4.

///

LEFT,

///

/// Right RGBA image. As a ZEDMat, MAT_TYPE is set to sl::MAT_TYPE_8U_C4.

///

RIGHT,

///

/// Left GRAY image. As a ZEDMat, MAT_TYPE is set to sl::MAT_TYPE_8U_C1.

///

LEFT_GREY,

///

/// Right GRAY image. As a ZEDMat, MAT_TYPE is set to sl::MAT_TYPE_8U_C1.

///

RIGHT_GREY,

///

/// Left RGBA unrectified image. As a ZEDMat, MAT_TYPE is set to sl::MAT_TYPE_8U_C4.

///

LEFT_UNRECTIFIED,

///

/// Right RGBA unrectified image. As a ZEDMat, MAT_TYPE is set to sl::MAT_TYPE_8U_C4.

///

RIGHT_UNRECTIFIED,

///

/// Left GRAY unrectified image. As a ZEDMat, MAT_TYPE is set to sl::MAT_TYPE_8U_C1.

///

LEFT_UNRECTIFIED_GREY,

///

/// Right GRAY unrectified image. As a ZEDMat, MAT_TYPE is set to sl::MAT_TYPE_8U_C1.

///

RIGHT_UNRECTIFIED_GREY,

///

/// Left and right image. Will be double the width to hold both. As a ZEDMat, MAT_TYPE is set to MAT_8U_C4.

///

SIDE_BY_SIDE,

///

/// Normalized depth image. As a ZEDMat, MAT_TYPE is set to sl::MAT_TYPE_8U_C4.

/// Use an Image texture for viewing only. For measurements, use a Measure type instead

/// (ZEDCamera.RetrieveMeasure()) to preserve accuracy.

///

DEPTH,

///

/// Normalized confidence image. As a ZEDMat, MAT_TYPE is set to MAT_8U_C4.

/// Use an Image texture for viewing only. For measurements, use a Measure type instead

/// (ZEDCamera.RetrieveMeasure()) to preserve accuracy.

///

CONFIDENCE,

///

/// Color rendering of the normals. As a ZEDMat, MAT_TYPE is set to MAT_8U_C4.

/// Use an Image texture for viewing only. For measurements, use a Measure type instead

/// (ZEDCamera.RetrieveMeasure()) to preserve accuracy.

///

NORMALS,

///

/// Color rendering of the right depth mapped on right sensor. As a ZEDMat, MAT_TYPE is set to MAT_8U_C4.

/// Use an Image texture for viewing only. For measurements, use a Measure type instead

/// (ZEDCamera.RetrieveMeasure()) to preserve accuracy.

///

DEPTH_RIGHT,

///

/// Color rendering of the normals mapped on right sensor. As a ZEDMat, MAT_TYPE is set to MAT_8U_C4.

/// Use an Image texture for viewing only. For measurements, use a Measure type instead

/// (ZEDCamera.RetrieveMeasure()) to preserve accuracy.

///

NORMALS_RIGHT

};

///

/// Lists available camera settings for the ZED camera (contrast, hue, saturation, gain, etc.)

///

public enum CAMERA_SETTINGS

{

///

/// Brightness control. Value should be between 0 and 8.

///

BRIGHTNESS,

///

/// Contrast control. Value should be between 0 and 8.

///

CONTRAST,

///

/// Hue control. Value should be between 0 and 11.

///

HUE,

///

/// Saturation control. Value should be between 0 and 8.

///

SATURATION,

///

/// Sharpness control. Value should be between 0 and 8.

///

SHARPNESS,

///

/// Gamma control. Value should be between 1 and 9

///

GAMMA,

///

/// Gain control. Value should be between 0 and 100 for manual control.

/// If ZED_EXPOSURE is set to -1 (automatic mode), then gain will be automatic as well.

/// Lance: I do not fully understand this one.

///

GAIN,

///

/// Exposure control. Value can be between 0 and 100.

/// Setting to -1 enables auto exposure and auto gain.

/// Setting to 0 disables auto exposure but doesn't change the last applied automatic values.

/// Setting to 1-100 disables auto mode and sets exposure to the chosen value.

///

EXPOSURE,

///

/// Auto-exposure and auto gain. Setting this to true switches on both. Assigning a specific value to GAIN or EXPOSURE will set this to 0.

///

AEC_AGC,

///

/// ROI for auto exposure/gain. ROI defines the target where the AEC/AGC will be calculated

/// Use overloaded function for this enum

///

AEC_AGC_ROI,

///

/// Color temperature control. Value should be between 2800 and 6500 with a step of 100.

///

WHITEBALANCE,

///

/// Defines if the white balance is in automatic mode or not.

///

AUTO_WHITEBALANCE,

///

/// front LED status (1==enable, 0 == disable)

///

LED_STATUS

};

///

/// Lists available measure types retrieved from the camera, used for creating precise measurement maps

/// (Measure-type textures).

/// Based on the MEASURE enum in the ZED C++ SDK. For more info, see:

/// https://www.stereolabs.com/developers/documentation/API/v2.5.1/group__Depth__group.html#ga798a8eed10c573d759ef7e5a5bcd545d

///

public enum MEASURE

{

///

/// Disparity map. As a ZEDMat, MAT_TYPE is set to MAT_32F_C1.

///

DISPARITY,

///

/// Depth map. As a ZEDMat, MAT_TYPE is set to MAT_32F_C1.

///

DEPTH,

///

/// Certainty/confidence of the disparity map. As a ZEDMat, MAT_TYPE is set to MAT_32F_C1.

///

CONFIDENCE,

///

/// 3D coordinates of the image points. Used for point clouds in ZEDPointCloudManager.

/// As a ZEDMat, MAT_TYPE is set to MAT_32F_C4. The 4th channel may contain the colors.

///

XYZ,

///

/// 3D coordinates and color of the image. As a ZEDMat, MAT_TYPE is set to MAT_32F_C4.

/// The 4th channel encodes 4 UCHARs for colors in R-G-B-A order.

///

XYZRGBA,

///

/// 3D coordinates and color of the image. As a ZEDMat, MAT_TYPE is set to MAT_32F_C4.

/// The 4th channel encode 4 UCHARs for colors in B-G-R-A order.

///

XYZBGRA,

///

/// 3D coordinates and color of the image. As a ZEDMat, MAT_TYPE is set to MAT_32F_C4.

/// The 4th channel encodes 4 UCHARs for color in A-R-G-B order.

///

XYZARGB,

///

/// 3D coordinates and color of the image. As a ZEDMat, MAT_TYPE is set to MAT_32F_C4.

/// Channel 4 contains color in A-B-G-R order.

///

XYZABGR,

///

/// Normals vector. As a ZEDMat, MAT_TYPE is set to MAT_32F_C4.

/// Channel 4 is empty (set to 0).

///

NORMALS,

///

/// Disparity map for the right sensor. As a ZEDMat, MAT_TYPE is set to MAT_32F_C1.

/// Lance: on disparity maps, see https://blog.csdn.net/kissgoodbye2012/article/details/79432771

///

DISPARITY_RIGHT,

///

/// Depth map for right sensor. As a ZEDMat, MAT_TYPE is set to MAT_32F_C1.

///

DEPTH_RIGHT,

///

/// Point cloud for right sensor. As a ZEDMat, MAT_TYPE is set to MAT_32F_C4. Channel 4 is empty.

///

XYZ_RIGHT,

///

/// Colored point cloud for right sensor. As a ZEDMat, MAT_TYPE is set to MAT_32F_C4.

/// Channel 4 contains colors in R-G-B-A order.

///

XYZRGBA_RIGHT,

///

/// Colored point cloud for right sensor. As a ZEDMat, MAT_TYPE is set to MAT_32F_C4.

/// Channel 4 contains colors in B-G-R-A order.

///

XYZBGRA_RIGHT,

///

/// Colored point cloud for right sensor. As a ZEDMat, MAT_TYPE is set to MAT_32F_C4.

/// Channel 4 contains colors in A-R-G-B order.

///

XYZARGB_RIGHT,

///

/// Colored point cloud for right sensor. As a ZEDMat, MAT_TYPE is set to MAT_32F_C4.

/// Channel 4 contains colors in A-B-G-R order.

///

XYZABGR_RIGHT,

///

/// Normals vector for right view. As a ZEDMat, MAT_TYPE is set to MAT_32F_C4.

/// Channel 4 is empty (set to 0).

///

NORMALS_RIGHT

};

///

/// Categories indicating when a timestamp is captured.

///

public enum TIME_REFERENCE

{

///

/// Timestamp from when the image was received over USB from the camera, defined

/// by when the entire image was available in memory.

/// Lance: in other words, the time at which a new frame read over USB has been fully stored in CPU or GPU memory; is this the timestamp closest to the new image?

///

IMAGE,

///

/// Timestamp from when the relevant function was called.

///

CURRENT

};

///

/// Reference frame (world or camera) for tracking and depth sensing.

///

public enum REFERENCE_FRAME

{

///

/// Matrix contains the total displacement from the world origin/the first tracked point.

/// Lance: this is absolute motion; the reference frame is the external world coordinate system.

///

WORLD,

///

/// Matrix contains the displacement from the previous camera position to the current one.

/// Lance: this is local (relative) motion; the previous moment is taken as the reference frame.

///

CAMERA

};

///

/// Possible states of the ZED's Tracking system.

///

public enum TRACKING_STATE

{

///

/// Tracking is searching for a match from the database to relocate to a previously known position.

/// Lance: the environment was scanned earlier and the matched feature points were saved to an offline file; this state indicates that the file is currently being searched.

///

TRACKING_SEARCH,

///

/// Tracking is operating normally; tracking data should be correct.

///

TRACKING_OK,

///

/// Tracking is not enabled.

///

TRACKING_OFF

}

///

/// SVO compression modes.

///

public enum SVO_COMPRESSION_MODE

{

///

/// Lossless compression based on png/zstd. Average size = 42% of RAW.

///

LOSSLESS_BASED,

///

/// AVCHD Based compression (H264). Available since ZED SDK 2.7

///

H264_BASED,

///

/// HEVC Based compression (H265). Available since ZED SDK 2.7

///

H265_BASED,

}

///

/// Streaming codecs

///

public enum STREAMING_CODEC

{

///

/// AVCHD Based compression (H264)

///

AVCHD_BASED,

///

/// HEVC Based compression (H265)

///

HEVC_BASED

}

///

/// Mesh formats that can be saved/loaded with spatial mapping.

///

public enum MESH_FILE_FORMAT

{

///

/// Contains only vertices and faces.

///

PLY,

///

/// Contains only vertices and faces, encoded in binary.

///

BIN,

///

/// Contains vertices, normals, faces, and texture information (if possible).

///

OBJ

}

///

/// Presets for filtering meshes scanned with spatial mapping. Higher values reduce total face count by more.

///

public enum FILTER

{

///

/// Soft decimation and smoothing.

///

LOW,

///

/// Decimate the number of faces and apply a soft smooth.

///

MEDIUM,

///

/// Drastically reduce the number of faces.

///

HIGH,

}

///

/// Possible states of the ZED's Spatial Mapping system.

///

public enum SPATIAL_MAPPING_STATE

{

///

/// Spatial mapping is initializing.

///

SPATIAL_MAPPING_STATE_INITIALIZING,

///

/// Depth and tracking data were correctly integrated into the fusion algorithm.

///

SPATIAL_MAPPING_STATE_OK,

///

/// Maximum memory dedicated to scanning has been reached; the mesh will no longer be updated.

///

SPATIAL_MAPPING_STATE_NOT_ENOUGH_MEMORY,

///

/// EnableSpatialMapping() wasn't called (or the scanning was stopped and not relaunched).

///

SPATIAL_MAPPING_STATE_NOT_ENABLED,

///

/// Effective FPS is too low to give proper results for spatial mapping.

/// Consider using performance-friendly parameters (DEPTH_MODE_PERFORMANCE, VGA or HD720 camera resolution,

/// and LOW spatial mapping resolution).

///

SPATIAL_MAPPING_STATE_FPS_TOO_LOW

}

///

/// Units used by the SDK for measurements and tracking. METER is recommended, since it stays consistent with Unity.

///

public enum UNIT

{

///

/// International System, 1/1000 meters (millimeter).

///

MILLIMETER,

///

/// International System, 1/100 meters (centimeter).

///

CENTIMETER,

///

/// International System, 1/1 meters (meter).

///

METER,

///

/// Imperial Unit, 1/12 feet (inch).

///

INCH,

///

/// Imperial Unit, 1/1 feet (foot).

///

FOOT

}

///

/// Class containing all parameters passed to the SDK when initializing the ZED.

/// These parameters are fixed for the whole execution lifetime of the camera.

/// For more details, see the InitParameters class in the SDK API documentation:

/// https://www.stereolabs.com/developers/documentation/API/v2.5.1/structsl_1_1InitParameters.html

///

public class InitParameters

{

public sl.INPUT_TYPE inputType;

///

/// Resolution the ZED will be set to.

///

public sl.RESOLUTION resolution;

///

/// Requested FPS for this resolution. Setting it to 0 will choose the default FPS for this resolution.

///

public int cameraFPS;

///

/// ID for identifying which of multiple connected ZEDs to use.

///

public int cameraDeviceID;

///

/// Path to a recorded SVO file to play, including filename.

///

public string pathSVO = "";

///

/// In SVO playback, this mode simulates a live camera and consequently skips frames if the computation framerate is too slow.

///

public bool svoRealTimeMode;

///

/// Define a unit for all metric values (depth, point clouds, tracking, meshes, etc.) Meters are recommended for Unity.

///

public UNIT coordinateUnit;

///

/// This defines the order and the direction of the axis of the coordinate system.

/// LEFT_HANDED_Y_UP is recommended to match Unity's coordinates.

///

public COORDINATE_SYSTEM coordinateSystem;

///

/// Quality level of depth calculations. Higher settings improve accuracy but cost performance.

///

public sl.DEPTH_MODE depthMode;

///

/// Minimum distance from the camera from which depth will be computed, in the defined coordinateUnit.

///

public float depthMinimumDistance;

///

/// Maximum distance up to which depth will be computed, in the defined coordinateUnit. The SDK turns depth values beyond this limit into TOO_FAR.

/// Changing this value has no impact on performance. It affects only the depth, point cloud, and normals, not positional tracking or spatial mapping.

///

public float depthMaximumDistance;

///

/// Defines if images are horizontally flipped.

///

public bool cameraImageFlip;

///

/// Defines if measures relative to the right sensor should be computed (needed for the *_RIGHT values of MEASURE).

///

public bool enableRightSideMeasure;

///

/// True to disable self-calibration and use the optional calibration parameters without optimizing them.

/// False is recommended, so that calibration parameters can be optimized.

///

public bool cameraDisableSelfCalib;

///

/// True for the SDK to provide text feedback.

///

public bool sdkVerbose;

///

/// ID of the graphics card on which the ZED's computations will be performed.

///

public int sdkGPUId;

///

/// If set to verbose, the filename of the log file into which the SDK will store its text output.

///

public string sdkVerboseLogFile = "";

///

/// True to stabilize the depth map. Recommended.

///

public bool depthStabilization;

///

/// Optional path for searching configuration (calibration) file SNxxxx.conf. (introduced in ZED SDK 2.6)

///

public string optionalSettingsPath = "";

///

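/// True to require the motion sensors (such as the IMU) to open for the camera to open. If false, the camera can still open when its sensors fail to.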

///

public bool sensorsRequired;

///

/// IP address of the streaming sender to connect to when inputType is INPUT_TYPE_STREAM.

///

public string ipStream = "";

///

/// Port of the streaming sender to connect to when inputType is INPUT_TYPE_STREAM.

///

public ushort portStream = 30000;

///

/// Whether to enable improved color/gamma curves added in ZED SDK 3.0.

///

public bool enableImageEnhancement = true;

///

/// Constructor. Sets default initialization parameters recommended for Unity.

/// Editor lances: these are the initialization values recommended for typical use in Unity. 2020-09-01 10.09

/// Editor lances: a constructor sets a class's default initial values and must have the same name as the class.

/// What is a constructor: https://www.cnblogs.com/chinarbolg/p/9601402.html

///

public InitParameters()

{

this.inputType = sl.INPUT_TYPE.INPUT_TYPE_USB;

this.resolution = RESOLUTION.HD720;

this.cameraFPS = 60;

this.cameraDeviceID = 0;

this.pathSVO = "";

this.svoRealTimeMode = false;

this.coordinateUnit = UNIT.METER;

this.coordinateSystem = COORDINATE_SYSTEM.IMAGE;

this.depthMode = DEPTH_MODE.PERFORMANCE;

this.depthMinimumDistance = -1;

this.depthMaximumDistance = -1;

this.cameraImageFlip = false;

this.cameraDisableSelfCalib = false;

this.sdkVerbose = false;

this.sdkGPUId = -1;

this.sdkVerboseLogFile = "";

this.enableRightSideMeasure = false;

this.depthStabilization = true;

this.optionalSettingsPath = "";

this.sensorsRequired = false;

this.ipStream = "";

this.portStream = 30000;

this.enableImageEnhancement = true;

}

}

///

/// List of available coordinate systems. Left-handed, Y-up is recommended to stay consistent with Unity.

///

public enum COORDINATE_SYSTEM

{

///

/// Standard coordinate system used in computer vision.

/// Used in OpenCV. See: http://docs.opencv.org/2.4/modules/calib3d/doc/camera_calibration_and_3d_reconstruction.html

///

IMAGE,

///

/// Left-Handed with Y up and Z forward. Recommended. Used in Unity with DirectX.

/// Editor lances: this is the one we normally use.

///

LEFT_HANDED_Y_UP,

///

/// Right-Handed with Y pointing up and Z backward. Used in OpenGL.

///

RIGHT_HANDED_Y_UP,

///

/// Right-Handed with Z pointing up and Y forward. Used in 3DSMax.

///

RIGHT_HANDED_Z_UP,

///

/// Left-Handed with Z axis pointing up and X forward. Used in Unreal Engine.

///

LEFT_HANDED_Z_UP

}

///

/// Possible states of the ZED's spatial memory area export, for saving 3D features used

/// by the tracking system to relocalize the camera. This is used when saving a mesh generated

/// by spatial mapping when Save Mesh is enabled: a .area file is saved as well.

///

public enum AREA_EXPORT_STATE

{

///

/// Spatial memory file has been successfully created.

///

AREA_EXPORT_STATE_SUCCESS,

///

/// Spatial memory file is currently being written to.

///

AREA_EXPORT_STATE_RUNNING,

///

/// Spatial memory file export has not been called.

///

AREA_EXPORT_STATE_NOT_STARTED,

///

/// Spatial memory contains no data; the file is empty.

///

AREA_EXPORT_STATE_FILE_EMPTY,

///

/// Spatial memory file has not been written to because of a bad file name.

///

AREA_EXPORT_STATE_FILE_ERROR,

///

/// Spatial memory has been disabled, so no file can be created.

///

AREA_EXPORT_STATE_SPATIAL_MEMORY_DISABLED

};

///

/// Runtime parameters used by the ZEDCamera.Grab() function, and its Camera::grab() counterpart in the SDK.

/// Editor lances: this struct appears to be the input to Grab(). It sets how image data is captured once the camera's low-level initialization has finished.

///

[StructLayout(LayoutKind.Sequential)]

public struct RuntimeParameters {

///

/// Defines the algorithm used for depth map computation. See the SENSING_MODE definition for more info.

///

public sl.SENSING_MODE sensingMode;

///

/// Provides 3D measures (point cloud and normals) in the desired reference frame (default is REFERENCE_FRAME_CAMERA).

///

public sl.REFERENCE_FRAME measure3DReferenceFrame;

///

/// Defines whether the depth map should be computed.

///

[MarshalAs(UnmanagedType.U1)]

public bool enableDepth;

///

/// Defines the confidence threshold for the depth. Based on the stereo matching score.

///

public int confidenceThreshold;

///

/// Defines the texture confidence threshold for the depth. Based on textureness confidence.

///

public int textureConfidenceThreshold;

}

///

/// Part of the ZED (left/right sensor, center) that's considered its center for tracking purposes.

/// Editor lances: that is, which part of the camera should be treated as its origin in Unity.

///

public enum TRACKING_FRAME

{

///

/// Camera's center is at the left sensor.

///

LEFT_EYE,

///

/// Camera's center is at the camera's physical center, between the sensors.

///

CENTER_EYE,

///

/// Camera's center is at the right sensor.

///

RIGHT_EYE

};

///

/// Types of USB device brands.

///

public enum USB_DEVICE

{

///

/// Oculus device, e.g. Oculus Rift VR headset.

///

USB_DEVICE_OCULUS,

///

/// HTC device, e.g. HTC Vive.

///

USB_DEVICE_HTC,

///

/// Stereolabs device, e.g. ZED/ZED Mini.

///

USB_DEVICE_STEREOLABS

};

#region Object detection definitions

// Object Detection

[StructLayout(LayoutKind.Sequential)]

public struct dll_ObjectDetectionParameters

{

///

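/// Defines if the object detection is synchronized to the image or runs in a separate thread.

/// Editor lances: "image sync" here refers to the underlying SDK, i.e. whether object detection runs in the same thread as image capture.

/// When synchronized, images and detection results stay closely aligned, so detected positions do not drift much,

/// but image capture may slow down and stutter. Not recommended in AR mode.

/// When not synchronized and the camera moves quickly, detection results may be noticeably offset from the current image.

/// Choose according to your use case; asynchronous mode is the preferred default.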

///

[MarshalAs(UnmanagedType.U1)]

public bool imageSync;

///

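/// Defines if the object detection will track objects across multiple images, instead of an image-by-image basis.

/// Editor lances: i.e. whether a detected object is tracked through the following images, or detection runs from scratch on every frame regardless of the previous result.

/// Editor lances: with tracking enabled, real-time performance should in principle be better, so tracking mode is the preferred choice.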

///

[MarshalAs(UnmanagedType.U1)]

public bool enableObjectTracking;

///

/// Defines if the SDK will calculate 2D masks for each object. Requires more performance, so don't enable if you don't need these masks.

/// Editor lances: the 2D mask makes it easy to extract per-object segmentation data, but computing it may cost time and performance.

///

[MarshalAs(UnmanagedType.U1)]

public bool enable2DMask;

};

[StructLayout(LayoutKind.Sequential)]

public struct dll_ObjectDetectionRuntimeParameters

{

///

/// The detection confidence threshold between 1 and 99.

/// A confidence of 1 means a low threshold that reports more, less certain objects; 99 reports very few but very precise objects.

/// Ex: If set to 80, then the SDK must be at least 80% sure that a given object exists before reporting it in the list of detected objects.

/// If the scene contains a lot of objects, increasing the confidence can slightly speed up the process, since every object instance is tracked.

///

public float detectionConfidenceThreshold;

///

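/// Per-class detection filter with one entry per OBJECT_CLASS value (PERSON, VEHICLE); presumably a nonzero entry enables that class.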

///

[MarshalAs(UnmanagedType.ByValArray, SizeConst =2)]

public int[] objectClassFilter;

};

///

/// Object data structure directly from the SDK. Represents a single object detection.

/// See DetectedObject for an abstracted version with helper functions that make this data easier to use in Unity.

/// Editor lances: it seems the current SDK can only detect people?

///

[StructLayout(LayoutKind.Sequential)]

public struct ObjectDataSDK

{

//public int valid; //is Data Valid

public int id; //person ID

public sl.OBJECT_CLASS obj_type;

public sl.OBJECT_TRACK_STATE obj_track_state;

public float confidence;

public System.IntPtr mask;

///

/// Image data.

/// Note that Y in these values is relative to the top of the image, whereas the opposite is true

/// in most related Unity functions. If using this raw value, subtract Y from the

/// image height to get the height relative to the bottom.

///

/// 0 ------- 1

/// |   obj   |

/// 3-------- 2

[MarshalAs(UnmanagedType.ByValArray, SizeConst = 4)]

public Vector2[] imageBoundingBox;

///

/// 3D space data (in the camera frame, since this is what is used in Unity).

///

public Vector3 rootWorldPosition; //object root position

public Vector3 rootWorldVelocity; //object root velocity

///

/// The 3D space bounding box, given as an array of vertices.

///

///    1 ---------2

///   /|         /|

///  0 |--------3 |

///  | |        | |

///  | 5--------|-6

///  |/         |/

///  4 ---------7

///

[MarshalAs(UnmanagedType.ByValArray, SizeConst = 8)]

public Vector3[] worldBoundingBox; //positions of the eight vertices

};

///

/// Object Scene data directly from the ZED SDK. Represents all detections given during a single image frame.

/// See DetectionFrame for an abstracted version with helper functions that make this data easier to use in Unity.

/// Contains the number of objects in the scene and the objectData structure for each object.

/// Since the data is transmitted from C++ to C#, the size of the structure must be constant. Therefore, there is a limit of 200 objects (the Constant.MAX_OBJECTS constant) in the image.

/// This number cannot be changed.

///

[StructLayout(LayoutKind.Sequential)]

public struct ObjectsFrameSDK

{

///

/// How many objects were detected this frame. Use this to iterate through the top of objectData; entries at index numObject and above are empty.

///

public int numObject;

///

/// Timestamp of the image where these objects were detected.

///

public ulong timestamp;

///

/// Array of objects.

///

[MarshalAs(UnmanagedType.ByValArray, SizeConst = (int)(Constant.MAX_OBJECTS))]

public ObjectDataSDK[] objectData;

};

///

/// Types of detected objects.

///

public enum OBJECT_CLASS

{

PERSON = 0,

VEHICLE = 1,

LAST = 2

};

///

/// Tracking state of an individual object.

///

public enum OBJECT_TRACK_STATE

{

OFF, //tracking has not been started

OK, //tracking is working

SEARCHING //searching for the object

};

#endregion

}// end namespace sl
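
The comments on ObjectsFrameSDK say the structure must have a constant size and that only the first numObject entries of objectData are meaningful. The following is a minimal sketch of that idea, not code from the plugin: DemoObject, DemoFrame, MarshalDemo, and the capacity of 4 are hypothetical stand-ins (the plugin uses Constant.MAX_OBJECTS = 200), and the round trip through unmanaged memory only imitates a frame filled in by native code.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical, trimmed-down stand-in for sl.ObjectDataSDK.
[StructLayout(LayoutKind.Sequential)]
public struct DemoObject
{
    public int id;
    public float confidence;
}

// Hypothetical stand-in for sl.ObjectsFrameSDK.
[StructLayout(LayoutKind.Sequential)]
public struct DemoFrame
{
    // Illustrative capacity only; the plugin uses Constant.MAX_OBJECTS (200).
    public const int MaxObjects = 4;

    public int numObject;
    public ulong timestamp;

    // ByValArray embeds exactly SizeConst elements inline,
    // which is what keeps the marshaled size of the struct constant.
    [MarshalAs(UnmanagedType.ByValArray, SizeConst = MaxObjects)]
    public DemoObject[] objectData;
}

public static class MarshalDemo
{
    // Copies a frame into unmanaged memory and reads it back,
    // imitating a frame that was written by native code.
    public static DemoFrame RoundTrip(DemoFrame frame)
    {
        IntPtr buffer = Marshal.AllocHGlobal(Marshal.SizeOf(typeof(DemoFrame)));
        try
        {
            Marshal.StructureToPtr(frame, buffer, false);
            return (DemoFrame)Marshal.PtrToStructure(buffer, typeof(DemoFrame));
        }
        finally
        {
            Marshal.FreeHGlobal(buffer);
        }
    }

    // Counts detections at or above a confidence threshold, visiting only
    // the first numObject entries; the remaining slots are unused.
    public static int CountConfident(DemoFrame frame, float threshold)
    {
        int count = 0;
        for (int i = 0; i < frame.numObject; i++)
        {
            if (frame.objectData[i].confidence >= threshold)
            {
                count++;
            }
        }
        return count;
    }
}
```

When filling such a struct on the managed side, the array should be allocated with exactly MaxObjects elements before marshaling, because the marshaler expects the embedded array to match the declared SizeConst.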