PyTorch [8]: PyTorch Visualization Tools

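Visualization in deep learning covers two things: visualizing the network structure and visualizing the training process. With the right tools, we can easily inspect a network's structure and follow how training progresses.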

Data preparation

Taking the MNIST handwritten digits as an example, the processed data has the following shapes:

train_data: shape (60000, 1, 28, 28); values in [0, 1]

test_data: shape (10000, 1, 28, 28); values in [0, 1]

```python
import torch
import torchvision
from torch.utils.data import DataLoader

train_data = torchvision.datasets.MNIST(
    root='data/MNIST',
    transform=torchvision.transforms.ToTensor(),  # PIL image -> float tensor (1, 28, 28) in [0, 1]
    train=True,
    download=True
)

test_data = torchvision.datasets.MNIST(
    root='data/MNIST',
    train=False,
    transform=torchvision.transforms.ToTensor(),
    download=True
)

train_loader = DataLoader(dataset=train_data, batch_size=128, shuffle=True, drop_last=True)

# Take the raw test-set features (uint8, N x H x W) -> scale values to [0, 1] -> expand NHW to NCHW
test_data_x = test_data.data / 255.0
test_data_x = torch.unsqueeze(test_data_x, dim=1)
test_data_y = test_data.targets
```
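The scaling and reshaping applied to the raw test features can be checked on a small stand-in tensor. This is a minimal sketch assuming only that `torch` is installed; `fake_raw` is a hypothetical substitute for `test_data.data`, which stores the images as a `uint8` tensor of shape (N, 28, 28).

```python
import torch

# Hypothetical stand-in for test_data.data: 4 uint8 images in (N, H, W) layout
fake_raw = torch.randint(0, 256, (4, 28, 28), dtype=torch.uint8)

# Dividing a uint8 tensor by a Python float yields a float32 tensor in [0, 1]
x = fake_raw / 255.0

# Insert a channel dimension at dim=1: (N, H, W) -> (N, C, H, W) with C = 1
x = torch.unsqueeze(x, dim=1)

print(x.shape, x.dtype)  # torch.Size([4, 1, 28, 28]) torch.float32
```

The result has the same layout as one batch from `train_loader`, so the model can consume both without further reshaping.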

Building the network

```python
import torch.nn as nn

class MyModel(nn.Module):
    def __init__(self):
        super(MyModel, self).__init__()
        self.conv1 = nn.Sequential(
            nn.Conv2d(1, 16, 3, padding=1),
            nn.ReLU(),
            nn.AvgPool2d(2, 2)
        )
        self.conv2 = nn.Sequential(
            nn.Conv2d(16, 32, 3, padding=1),
            nn.ReLU(),
            nn.AvgPool2d(2, 2)
        )
        self.fc = nn.Sequential(
            nn.Linear(32*7*7, 128),
            nn.ReLU(),
            nn.Linear(128, 64),
            nn.ReLU()
        )
        self.out = nn.Linear(64, 10)

    def forward(self, x):
        x = self.conv1(x)           # bs,1,28,28 -> bs,16,14,14
        x = self.conv2(x)           # bs,16,14,14 -> bs,32,7,7
        x = x.view(x.shape[0], -1)  # bs,32,7,7 -> bs,32*7*7
        x = self.fc(x)              # bs,32*7*7 -> bs,128 -> bs,64
        out = self.out(x)           # bs,64 -> bs,10
        return out

mymodel = MyModel()
```
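The shape comments in `forward` follow from the standard output-size formula for convolution and pooling, floor((n + 2p - k) / s) + 1. The pure-Python sketch below, with hypothetical helper names `conv2d_out` and `pool2d_out`, traces a 28×28 input through both convolution blocks and recovers the 32*7*7 input size of the first `nn.Linear`; it assumes dilation 1 and the default `ceil_mode=False`.

```python
def conv2d_out(n, kernel, padding=0, stride=1):
    """Spatial output size of a 2D convolution (dilation = 1)."""
    return (n + 2 * padding - kernel) // stride + 1


def pool2d_out(n, kernel, stride):
    """Spatial output size of 2D pooling with padding 0 and ceil_mode=False."""
    return (n - kernel) // stride + 1


size = 28
trace = [size]
for _ in range(2):  # conv1 and conv2 follow the same pattern
    size = conv2d_out(size, kernel=3, padding=1)  # 3x3 conv with padding 1 keeps the size
    trace.append(size)
    size = pool2d_out(size, kernel=2, stride=2)   # 2x2 pooling with stride 2 halves it
    trace.append(size)

flat_features = 32 * size * size  # conv2 produces 32 channels
print(trace, flat_features)  # [28, 28, 14, 14, 7] 1568
```

If you change the kernel size or padding of either convolution, rerunning this calculation tells you the new `in_features` for the first linear layer.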

I. Visualizing the network structure

1. The torchsummary library

(1). Install: pip install torchsummary

(2). Print the network structure, parameter count, and model size

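```python
from torchsummary import summary

# summary() defaults to device='cuda'; passing device='cpu' keeps mymodel on the CPU,
# which matches the CPU-based training loop later in this article
summary(mymodel, (1, 28, 28), device='cpu')
```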

```text
----------------------------------------------------------------
        Layer (type)               Output Shape         Param #
================================================================
            Conv2d-1           [-1, 16, 28, 28]             160
              ReLU-2           [-1, 16, 28, 28]               0
         AvgPool2d-3           [-1, 16, 14, 14]               0
            Conv2d-4           [-1, 32, 14, 14]           4,640
              ReLU-5           [-1, 32, 14, 14]               0
         AvgPool2d-6             [-1, 32, 7, 7]               0
            Linear-7                  [-1, 128]         200,832
              ReLU-8                  [-1, 128]               0
            Linear-9                   [-1, 64]           8,256
             ReLU-10                   [-1, 64]               0
           Linear-11                   [-1, 10]             650
================================================================
Total params: 214,538
Trainable params: 214,538
Non-trainable params: 0
----------------------------------------------------------------
Input size (MB): 0.00
Forward/backward pass size (MB): 0.33
Params size (MB): 0.82
Estimated Total Size (MB): 1.15
----------------------------------------------------------------
```

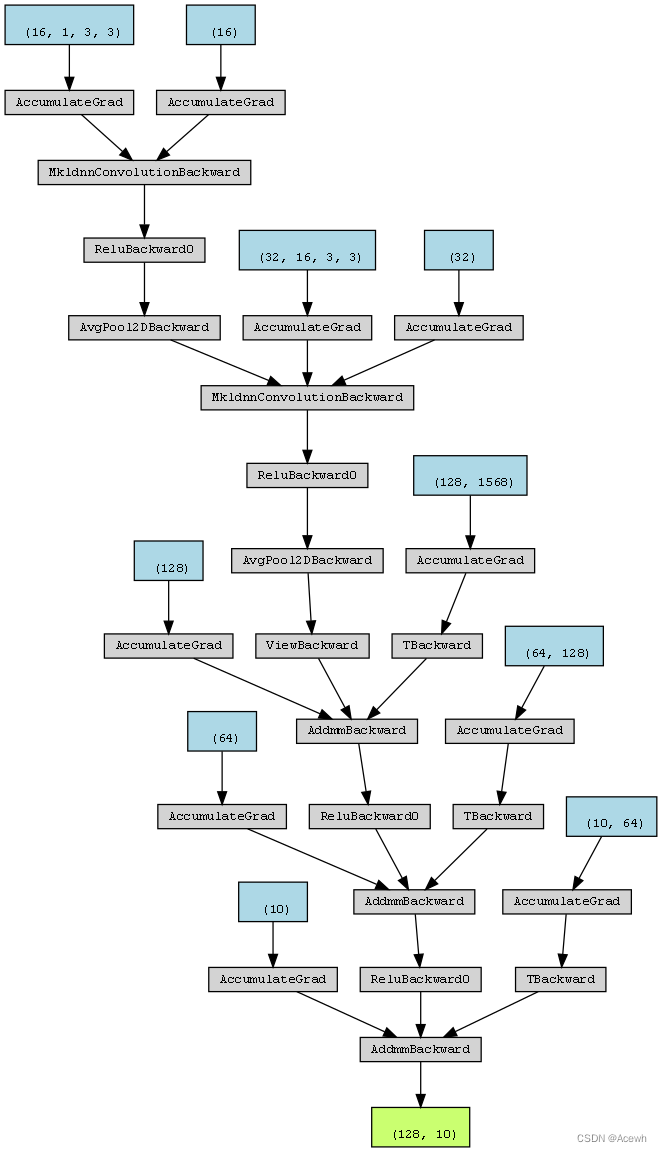
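The Param # column can be reproduced by hand: a convolution has out·in·k·k weights plus one bias per output channel, and a linear layer has out·in weights plus one bias per output feature. The pure-Python sketch below (helper names are hypothetical) recomputes the summary table; the 4-bytes-per-parameter figure assumes float32 weights, which is what the "Params size (MB)" line reflects.

```python
def conv2d_params(in_ch, out_ch, kernel):
    """Weights (out_ch * in_ch * k * k) plus one bias per output channel."""
    return out_ch * in_ch * kernel * kernel + out_ch


def linear_params(in_features, out_features):
    """Weight matrix (out * in) plus one bias per output feature."""
    return out_features * in_features + out_features


layers = {
    "Conv2d-1": conv2d_params(1, 16, 3),
    "Conv2d-4": conv2d_params(16, 32, 3),
    "Linear-7": linear_params(32 * 7 * 7, 128),
    "Linear-9": linear_params(128, 64),
    "Linear-11": linear_params(64, 10),
}
total = sum(layers.values())

# Each float32 parameter takes 4 bytes; sizes are reported in MiB (1024**2 bytes)
params_mb = total * 4 / 1024 ** 2

for name, count in layers.items():
    print(f"{name:<10} {count:>8,}")
print(f"Total params: {total:,}")            # Total params: 214,538
print(f"Params size (MB): {params_mb:.2f}")  # Params size (MB): 0.82
```

Almost all parameters sit in Linear-7 (200,832 of 214,538), which is typical when a flattened feature map feeds a fully connected layer.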

2. The PyTorchViz library

(1). Install: pip install graphviz torchviz (rendering also requires the Graphviz system package, which provides the dot executable)

(2). Output the network structure

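```python
import torch
from torchviz import make_dot

x = torch.randn(128, 1, 28, 28)
y = mymodel(x)

# params labels each weight node with its parameter name
g = make_dot(y, params=dict(mymodel.named_parameters()))
g.format = 'png'    # output format; the default is pdf
g.directory = './'  # output directory
g.view()            # render the graph to a file and open it in the system viewer
```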

3. The HiddenLayer library

(1). Install: pip install hiddenlayer

(2). Display the network structure

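```python
import torch
import hiddenlayer as hl

# build_graph traces the model with a dummy input of the expected shape
g = hl.build_graph(mymodel, torch.zeros(128, 1, 28, 28))
g.save('mymodel_hl.png', format='png')
```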

II. Visualizing the training process

The tensorboardX library

tensorboardX is a library that lets PyTorch use the TensorBoard tool for visualization. It provides a variety of functions for adding events to TensorBoard logs. The basic workflow is as follows:

- Create a writer that saves the logs

- Add the variables to visualize

- View the results

(1). Create a writer and save logs

```python
from tensorboardX import SummaryWriter

SumWriter = SummaryWriter(logdir='./trainlog')  # directory for the log files
```

(2). Add variables to visualize

First, the standard training loop:

```python
optimizer = torch.optim.Adam(mymodel.parameters(), lr=0.0002)
loss_func = torch.nn.CrossEntropyLoss()
train_loss = 0
print_step = 16

for epoch in range(10):
    for i, (imgs, labels) in enumerate(train_loader):
        optimizer.zero_grad()
        output = mymodel(imgs)
        loss = loss_func(output, labels)
        loss.backward()
        optimizer.step()
        train_loss += loss.item()
```

Then add whatever you want to visualize, such as the training loss, the test accuracy, and sample images:

```python
import torchvision.utils as vutils
from sklearn.metrics import accuracy_score

train_loss = 0
for epoch in range(10):
    for i, (imgs, labels) in enumerate(train_loader):
        optimizer.zero_grad()
        output = mymodel(imgs)
        loss = loss_func(output, labels)
        loss.backward()
        optimizer.step()
        train_loss += loss.item()

        # Number of batches processed so far, counted across epochs
        batchdone = epoch * len(train_loader) + i + 1

        # Log variables once every print_step batches
        if batchdone % print_step == 0:
            # Training loss (tag, value, step): mean over the last print_step batches
            SumWriter.add_scalar(tag='train loss', scalar_value=train_loss / print_step,
                                 global_step=batchdone)
            train_loss = 0

            # Accuracy on the test set (no gradients are needed for evaluation)
            with torch.no_grad():
                output = mymodel(test_data_x)
            pre_lab = torch.argmax(output, dim=1)
            acc = accuracy_score(test_data_y, pre_lab)
            SumWriter.add_scalar('test acc', acc, batchdone)

            # Training images: tile the current batch (batch_size images) into one grid image
            imgs_mg = vutils.make_grid(imgs, nrow=16)
            SumWriter.add_image('train images sample', imgs_mg, batchdone)

            # Histograms showing the distribution of each network parameter
            for name, value in mymodel.named_parameters():
                # value is a Parameter; detach it and convert it to a NumPy array
                SumWriter.add_histogram(name, value.detach().cpu().numpy(), batchdone)

SumWriter.close()
```
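Because `batchdone` keeps counting across epochs, the logged points form one continuous x-axis in TensorBoard. The pure-Python sketch below (the helper names are hypothetical) computes how many points the loop writes: with 60,000 training images, `batch_size=128` and `drop_last=True`, each epoch has 468 batches, so 10 epochs with `print_step=16` give 292 logged steps. Each of those steps also runs a forward pass over all 10,000 test images, which is the main cost of this logging scheme.

```python
def batches_per_epoch(num_samples, batch_size, drop_last=True):
    """Number of batches a DataLoader yields per epoch."""
    if drop_last:
        return num_samples // batch_size
    return -(-num_samples // batch_size)  # ceiling division keeps the last partial batch


def global_step(epoch, i, n_batches):
    """1-based batch counter across epochs, matching `batchdone` in the loop."""
    return epoch * n_batches + i + 1


n_batches = batches_per_epoch(60000, 128)  # train_loader uses drop_last=True
print_step = 16
epochs = 10

logged_steps = [
    global_step(epoch, i, n_batches)
    for epoch in range(epochs)
    for i in range(n_batches)
    if global_step(epoch, i, n_batches) % print_step == 0
]
print(n_batches, len(logged_steps), logged_steps[:3], logged_steps[-1])
# 468 292 [16, 32, 48] 4672
```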

Common tensorboardX functions (from tensorboardX import SummaryWriter)

| Function | Purpose | Usage |
|---|---|---|
| SummaryWriter() | Create a log writer | writer = SummaryWriter() |
| writer.add_scalar() | Add a scalar | add_scalar('tagname', value, global_step) |
| writer.add_image() | Add an image | add_image('tagname', value, global_step) |
| writer.add_histogram() | Add a histogram | add_histogram('tagname', value, global_step) |
| writer.add_graph() | Add the network graph | add_graph(model, input_to_model=None, verbose=False) |
| writer.add_audio() | Add audio | add_audio('tagname', value, global_step) |
| writer.add_text() | Add text | add_text('tagname', value, global_step) |

Make sure the arguments passed to each function are valid and sensible; otherwise the visualization will not be displayed.

(3). View the results

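During or after training, follow the steps below to view the results; keep the terminal open while viewing:

- Open a terminal and change to the directory that contains the logdir given in SummaryWriter(logdir='mydir') (that is, its parent directory, not the log directory itself)

- Run tensorboard --logdir=mydir

- After a moment a URL such as http://localhost:6006/ is printed; open it in a browser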