How to use a BERT model to predict a movie's sentiment over the coming days

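The goal is to predict a movie's sentiment over the next few days from its past reviews. To do this, classify the sentiment of every review posted during the past few days and compare the number of positive reviews with the number of negative ones. If most reviews are positive, the model predicts that the movie's reviews over the coming days will also be positive, and vice versa.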
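The decision rule in the paragraph above is a majority vote over per-review predictions. Below is a minimal sketch, assuming each review has already been classified as 1 (positive) or 0 (negative). The function name `forecast_sentiment` is hypothetical. Ties count as positive, to match the `p >= n` comparison used later in `valid()`.

```python
from collections import Counter


def forecast_sentiment(review_labels):
    """Predict a movie's sentiment for the coming days from per-review labels.

    review_labels: iterable of 0/1 predictions (1 = positive, 0 = negative),
    one per past review. Ties are counted as positive.
    """
    counts = Counter(review_labels)
    positive, negative = counts[1], counts[0]
    if positive + negative == 0:
        raise ValueError("need at least one classified review")
    return 1 if positive >= negative else 0


print(forecast_sentiment([1, 1, 0, 1, 0]))  # 3 positive vs 2 negative -> 1
print(forecast_sentiment([0, 0, 1]))        # 1 positive vs 2 negative -> 0
```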

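The prediction process described above requires classifying the sentiment of every review of every movie, so we train the review classifier with BERT. BERT suits this kind of context-dependent text representation learning because it is pretrained as a masked language model (MLM), which makes it an autoencoding (AE) model.

The main difference between an AE model and an autoregressive (AR) model is that an AE model can use the context on both sides of a token. Take the sentence 我爱吃饭 ("I love to eat"):

- An AR model estimates the probability of this sentence in one direction, following the order of the sentence. It first predicts the probability of 我, then the probability of 爱 given 我, and so on.
- An AE model instead masks single characters in the sentence. These are characters, not words: bert-base-chinese splits Chinese text into individual characters. If 吃 is masked, the quantity being modeled is no longer the probability of the whole sentence 我爱吃饭. It becomes the probability of 吃 given 我爱[MASK]饭.

Having masked part of the text, an AE model reconstructs it from the surrounding context. Fine-tuning the pretrained BERT architecture on your own downstream task (here, review sentiment classification) usually reaches high accuracy.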
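The contrast between the two objectives can be written out for the example sentence, with $x_1x_2x_3x_4$ = 我, 爱, 吃, 饭. This is the standard textbook formulation, not a formula from the original post:

$$
\begin{aligned}
\text{AR:}\quad & p(\text{我爱吃饭}) = p(\text{我})\,p(\text{爱}\mid\text{我})\,p(\text{吃}\mid\text{我爱})\,p(\text{饭}\mid\text{我爱吃}) = \prod_{t=1}^{4} p(x_t \mid x_{<t}) \\
\text{AE (MLM):}\quad & p(x_3 = \text{吃} \mid \text{我},\ \text{爱},\ [\text{MASK}],\ \text{饭}), \qquad \text{objective: } \max_\theta \sum_{t \in \mathcal{M}} \log p_\theta(x_t \mid \hat{x})
\end{aligned}
$$

Here $\mathcal{M}$ is the set of masked positions and $\hat{x}$ is the sentence with those positions replaced by [MASK]. The AE term conditions on tokens on both sides of position $t$, whereas the AR term conditions only on $x_{<t}$.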

Understanding BERT

https://blog.csdn.net/qq_42316533/article/details/116270095

Training corpus source

https://gitee.com/Jade1998/ChineseSentimentAnalysis/tree/master/data/input

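Download the dataset from this repository, then convert it into one file of positive reviews and one file of negative reviews with the following script.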
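Before running the conversion, note how it reads each line. The label is taken from the first character, and the review text from index 4 onward. That layout is inferred from the slicing in the script, not checked against the actual file. Because `line[0]` is a string, the label must be compared with `'1'`, not with the integer `1`. A small sketch follows; `parse_line` and the sample line are hypothetical.

```python
def parse_line(line):
    """Split one corpus line into (label, text).

    Assumption (inferred from the conversion code, not checked against the
    real file): the label character sits at index 0 and the text starts at
    index 4.
    """
    label = 1 if line[0] == '1' else 0  # compare with the str '1'
    text = line[4:].replace(' ', '').replace('\n', '')
    return label, text


sample = "1 | 这部 电影 很好看\n"  # hypothetical line in the assumed layout
print(parse_line(sample))  # (1, '这部电影很好看')
print(sample[0] == 1)      # False: a str never equals the int 1
```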

```python
import numpy as np

path = 'training_set.txt'
pos = []
neg = []
with open(path, 'r', encoding='utf-8') as f:
    for line in f:
        if not line.strip():
            continue  # skip blank lines
        # The review text starts at index 4; strip the line ending and remove spaces
        sentence = line[4:].strip().replace(' ', '')
        # line[0] is the label character, a str: compare with '1', not the int 1
        if line[0] == '1':
            pos.append(sentence)
        else:
            neg.append(sentence)

# Write one review per line
np.savetxt('nnneg.txt', neg, encoding='utf-8', delimiter=',', fmt='%s')
np.savetxt('pppos.txt', pos, encoding='utf-8', delimiter=',', fmt='%s')
```

Preparing the environment

PyTorch (Anaconda, CUDA, cuDNN, and the GPU build of PyTorch)

https://blog.csdn.net/Mind_programmonkey/article/details/99688839/

The transformers library (scikit-learn is also needed, for train_test_split)

pip install transformers scikit-learn
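Before running the code, you can confirm that the environment is ready. Check that the main libraries import and whether PyTorch can see a GPU. Below is a small sketch. `check_environment` is a hypothetical helper, and the package list is simply the libraries the code in this article imports.

```python
import importlib.util


def check_environment(packages=("torch", "transformers", "sklearn", "numpy")):
    """Return {package_name: True/False} depending on whether it can be imported."""
    return {name: importlib.util.find_spec(name) is not None for name in packages}


if __name__ == "__main__":
    status = check_environment()
    for name, ok in status.items():
        print(f"{name:12s} {'ok' if ok else 'MISSING'}")
    if status["torch"]:
        import torch
        print("CUDA available:", torch.cuda.is_available())
```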

Code details

Imports and hyperparameters

```python
import os
import random
import time
import datetime

import numpy as np
import torch
from torch.nn.utils import clip_grad_norm_
from torch.optim import AdamW  # transformers.AdamW is deprecated in recent versions; torch's AdamW takes the same lr/eps arguments
from torch.utils.data import TensorDataset, DataLoader, RandomSampler, SequentialSampler
from transformers import BertTokenizer, BertForSequenceClassification
from transformers import get_linear_schedule_with_warmup
from sklearn.model_selection import train_test_split

SEED = 123
BATCH_SIZE = 16
LEARNING_RATE = 2e-5
WEIGHT_DECAY = 1e-2
EPSILON = 1e-8

# Fix the random seeds for reproducibility
random.seed(SEED)
np.random.seed(SEED)
torch.manual_seed(SEED)
```

Data processing
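The truncation, padding and mask rules of `convert_text_to_token` and `attention_masks` can be checked without downloading a tokenizer. The sketch below works on ids that have already been mapped from characters. It assumes the usual BERT vocabulary layout ([PAD] = 0, [CLS] = 101, [SEP] = 102). The character ids in the example are arbitrary placeholders, and `pad_and_mask` is a hypothetical helper.

```python
CLS_ID, SEP_ID, PAD_ID = 101, 102, 0  # assumed special-token ids


def pad_and_mask(char_ids, limit_size=126):
    """Return (input_ids, attention_mask), both of length limit_size + 2.

    char_ids: token ids of one sentence without special tokens.
    """
    ids = [CLS_ID] + list(char_ids[:limit_size]) + [SEP_ID]  # truncate, then wrap
    pad_len = limit_size + 2 - len(ids)
    input_ids = ids + [PAD_ID] * pad_len
    attention_mask = [1.0] * len(ids) + [0.0] * pad_len  # 1.0 = real token, 0.0 = padding
    return input_ids, attention_mask


ids, mask = pad_and_mask([5, 6, 7, 8], limit_size=6)
print(ids)   # [101, 5, 6, 7, 8, 102, 0, 0]
print(mask)  # [1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 0.0, 0.0]
```

This sketch builds the mask from positions, whereas `attention_masks` uses `id > 0`. The two give the same result as long as no real token has id 0, which holds when 0 is reserved for [PAD].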

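```python
def readfile(filename):
    # One sentence per line; drop line endings and blank lines
    with open(filename, encoding="utf-8") as f:
        content = [line.strip() for line in f if line.strip()]
    return content


# Convert each sentence to token ids: truncate to at most 126 tokens, add [CLS] and [SEP]
# (126 + 2 = 128), and pad shorter sequences so every sequence has exactly 128 ids
def convert_text_to_token(tokenizer, sentence, limit_size=126):
    # encode() adds [CLS]/[SEP]; truncation keeps [SEP] even for long sentences
    tokens = tokenizer.encode(sentence, max_length=limit_size + 2, truncation=True)
    if len(tokens) < limit_size + 2:  # pad (the [PAD] id of bert-base-chinese is 0)
        tokens.extend([tokenizer.pad_token_id] * (limit_size + 2 - len(tokens)))
    return tokens


# Build the attention masks: 1.0 for real tokens, 0.0 for padding
def attention_masks(input_ids):
    atten_masks = []
    for seq in input_ids:
        seq_mask = [float(i > 0) for i in seq]
        atten_masks.append(seq_mask)
    return atten_masks


# Fraction of samples whose arg-max logit equals the label
def binary_acc(preds, labels):
    correct = torch.eq(torch.max(preds, dim=1)[1], labels.flatten()).float()
    acc = correct.sum().item() / len(correct)
    return acc


# Format a duration in seconds as h:mm:ss
def format_time(elapsed):
    elapsed_rounded = int(round(elapsed))
    return str(datetime.timedelta(seconds=elapsed_rounded))
```

Training and evaluation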
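Two lines inside `train()` are easy to misread:

- `scheduler.step()` applies the multiplier of a linear learning-rate schedule.
- `clip_grad_norm_` rescales all gradients together by one common factor; it does not cap each one at 1.0.

The pure-Python sketch below reproduces both formulas on plain numbers. The function names are hypothetical, and the clipping helper is simplified: it ignores numerical-stability details of the PyTorch implementation.

```python
import math


def linear_warmup_factor(step, num_warmup_steps, num_training_steps):
    """Learning-rate multiplier after `step` scheduler steps.

    Linear warmup from 0 to 1, then linear decay to 0 at num_training_steps.
    """
    if step < num_warmup_steps:
        return step / max(1, num_warmup_steps)
    return max(0.0, (num_training_steps - step) / max(1, num_training_steps - num_warmup_steps))


def clip_by_global_norm(grads, max_norm=1.0):
    """Scale all gradients by one common factor so their global L2 norm <= max_norm."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads)  # already small enough: unchanged
    return [g * max_norm / total_norm for g in grads]


# With num_warmup_steps=0 the multiplier falls linearly from 1 to 0
print([linear_warmup_factor(s, 0, 4) for s in range(5)])  # [1.0, 0.75, 0.5, 0.25, 0.0]
# Norm 5.0 is scaled down to 1.0; the gradient direction is preserved
print(clip_by_global_norm([3.0, 4.0]))  # [0.6, 0.8]
```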

```python
# train() and evaluate() use the train_dataloader, test_dataloader, scheduler and
# device globals created in the main block
def train(model, optimizer):
    t0 = time.time()
    avg_loss, avg_acc = [], []
    model.train()  # training mode (dropout enabled)
    for step, batch in enumerate(train_dataloader):
        # Print the elapsed time every 40 batches
        if step % 40 == 0 and step != 0:
            elapsed = format_time(time.time() - t0)
            print('  Batch {:>5,} of {:>5,}.  Elapsed: {:}.'.format(step, len(train_dataloader), elapsed))
        b_input_ids, b_input_mask, b_labels = batch[0].long().to(device), batch[1].long().to(device), batch[2].long().to(device)
        output = model(b_input_ids, token_type_ids=None, attention_mask=b_input_mask, labels=b_labels)
        loss, logits = output[0], output[1]  # with labels given, the model returns (loss, logits, ...)
        avg_loss.append(loss.item())
        acc = binary_acc(logits, b_labels)
        avg_acc.append(acc)
        optimizer.zero_grad()
        loss.backward()
        clip_grad_norm_(model.parameters(), 1.0)  # rescale gradients so their total L2 norm is at most 1.0, against exploding gradients
        optimizer.step()  # update the model parameters
        scheduler.step()  # update the learning rate
    avg_acc = np.array(avg_acc).mean()
    avg_loss = np.array(avg_loss).mean()
    return avg_loss, avg_acc


def evaluate(model):
    avg_acc = []
    model.eval()  # evaluation mode (dropout disabled)
    with torch.no_grad():
        for batch in test_dataloader:
            b_input_ids, b_input_mask, b_labels = batch[0].long().to(device), batch[1].long().to(device), batch[2].long().to(device)
            output = model(b_input_ids, token_type_ids=None, attention_mask=b_input_mask)
            acc = binary_acc(output[0], b_labels)  # without labels, output[0] is the logits
            avg_acc.append(acc)
    avg_acc = np.array(avg_acc).mean()
    return avg_acc
```

Validation and prediction
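`valid()` takes each movie's ground-truth label from its file name, which follows the pattern i_label.txt. Parsing the base name with `os.path` is more robust than splitting the full path on `'.'`, which breaks for a folder path such as `./movie`. `label_from_filename` is a hypothetical helper.

```python
import os


def label_from_filename(path):
    """Read the 0/1 label from a file named '<i>_<label>.txt'."""
    stem = os.path.splitext(os.path.basename(path))[0]  # 'movie/3_1.txt' -> '3_1'
    return int(stem.rsplit('_', 1)[1])                 # part after the last '_'


print(label_from_filename('movie/3_1.txt'))     # 1
print(label_from_filename('./movie/12_0.txt'))  # 0
# By contrast, './movie/12_0.txt'.split('.')[0] is '', and ''.split('_')[1] raises IndexError
```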

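```python
def predict(sen):
    input_id = convert_text_to_token(tokenizer, sen)
    input_token = torch.tensor(input_id).long().to(device)  # torch.Size([128])
    atten_mask = [float(i > 0) for i in input_id]
    attention_token = torch.tensor(atten_mask).long().to(device)  # torch.Size([128])
    model.eval()  # make sure dropout is off
    with torch.no_grad():  # no gradients are needed for inference
        # Reshape torch.Size([128]) -> torch.Size([1, 128]); the model expects a batch dimension
        output = model(input_token.view(1, -1), token_type_ids=None,
                       attention_mask=attention_token.view(1, -1))
    # output[0] holds the logits, shape [1, 2]; the arg-max is the label (1 = positive, 0 = negative)
    return torch.max(output[0], dim=1)[1].item()


def valid(path):
    q = []  # paths of all movie files
    for root, dirs, files in os.walk(path):
        for name in files:
            if name.endswith('.txt'):
                q.append(os.path.join(root, name))
    count, counter = 0, 0
    for path_i in q:
        counter += 1
        p, n = 0, 0  # numbers of positive / negative reviews for this movie
        with open(path_i, 'r', encoding='utf-8') as f:
            for sentence in f:  # assumes one review per line
                sentence = sentence.strip()
                if not sentence:
                    continue
                if predict(sentence) == 1:
                    p += 1
                else:
                    n += 1
        # Majority vote over the movie's reviews; ties count as positive
        final_s = 1 if p >= n else 0
        # File names follow i_label.txt, so the label is the part after the last '_'
        label = os.path.splitext(os.path.basename(path_i))[0].rsplit('_', 1)[1]
        if str(final_s) == label:
            count += 1
    if counter == 0:
        print('No movie files found in {}'.format(path))
        return
    print('Accuracy of the sentiment prediction for the coming days over {} movies:'.format(counter))
    print(count / counter)
```

main function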
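The code splits the inputs and the attention masks with two separate `train_test_split` calls that share `random_state=666` and `test_size=0.2`. Both calls see the same number of samples and the same seed, so they pick the same row indices. As a result, each mask stays aligned with its sentence and label. The stdlib sketch below makes that idea explicit by shuffling one index list and applying it to every array. `aligned_split` and `take` are hypothetical helpers, and they do not reproduce scikit-learn's exact indices.

```python
import math
import random


def aligned_split(n_samples, test_size=0.2, seed=666):
    """Return (train_idx, test_idx) from a single seeded shuffle of range(n_samples)."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_test = math.ceil(n_samples * test_size)
    return indices[n_test:], indices[:n_test]


def take(seq, idx):
    """Select the items of seq at positions idx, in that order."""
    return [seq[i] for i in idx]


inputs = ['s0', 's1', 's2', 's3', 's4']
masks = ['m0', 'm1', 'm2', 'm3', 'm4']
labels = [1, 1, 0, 0, 1]

train_idx, test_idx = aligned_split(len(inputs), test_size=0.4)
for x, m, y in zip(take(inputs, train_idx), take(masks, train_idx), take(labels, train_idx)):
    print(x, m, y)  # each sentence keeps its own mask and label
```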

if __name__ == '__main__':

# data process

pos_text, neg_text = readfile('pppos.txt'), readfile('nnneg.txt')

sentences = pos_text + neg_text

# 设定标签

pos_targets = np.ones((len(pos_text)))

neg_targets = np.zeros((len(neg_text)))

targets = np.concatenate((pos_targets, neg_targets), axis=0).reshape(-1, 1) # (10000, 1)

total_targets = torch.tensor(targets)

# BERTTokenizer进行编码,将每一句中的单字转换成索引值,BERT中句子开头是‘CLS’,句子结尾是‘SEP’

tokenizer = BertTokenizer.from_pretrained('bert-base-chinese', cache_dir="E:/transformer_file/")

# 固定句子长度,太长的截断,不够长的用pad填充

input_ids = [convert_text_to_token(tokenizer, sen) for sen in sentences]

input_tokens = torch.tensor(input_ids)

# 一个句子中,如果对应位置为pad,则mask值为0, 否则为1

atten_masks = attention_masks(input_ids)

attention_tokens = torch.tensor(atten_masks)

# 创建train、test dataloader,

train_data = TensorDataset(train_inputs, train_masks, train_labels)

train_sampler = RandomSampler(train_data)

train_dataloader = DataLoader(train_data, sampler=train_sampler, batch_size=BATCH_SIZE)

test_data = TensorDataset(test_inputs, test_masks, test_labels)

test_sampler = SequentialSampler(test_data)

test_dataloader = DataLoader(test_data, sampler=test_sampler, batch_size=BATCH_SIZE)

# 创建模型

model = BertForSequenceClassification.from_pretrained("bert-base-chinese", num_labels=2) # 调用模型

device = torch.device("cuda" if torch.cuda.is_available() else "cpu") # 使用GPU加速

model.to(device)

# 创建优化器

no_decay = ['bias', 'LayerNorm.weight']

optimizer_grouped_parameters = [

{'params': [p for n, p in model.named_parameters() if not any(nd in n for nd in no_decay)],

'weight_decay': WEIGHT_DECAY},

{'params': [p for n, p in model.named_parameters() if any(nd in n for nd in no_decay)], 'weight_decay': 0.0}

]

optimizer = AdamW(optimizer_grouped_parameters, lr=LEARNING_RATE, eps=EPSILON) # 优化器

epochs = 2 # 迭代次数

# training steps 的数量: [number of batches] x [number of epochs].

total_steps = len(train_dataloader) * epochs

# 设计 learning rate scheduler.

scheduler = get_linear_schedule_with_warmup(optimizer, num_warmup_steps=0, num_training_steps=total_steps)

# 开始训练

for epoch in range(epochs):

train_loss, train_acc = train(model, optimizer)

print('epoch={},训练准确率={},损失={}'.format(epoch, train_acc, train_loss))

test_acc = evaluate(model)

print("epoch={},测试准确率={}".format(epoch, test_acc))

####### 验证预测准确率

path = 'movie' # 文件夹的路径

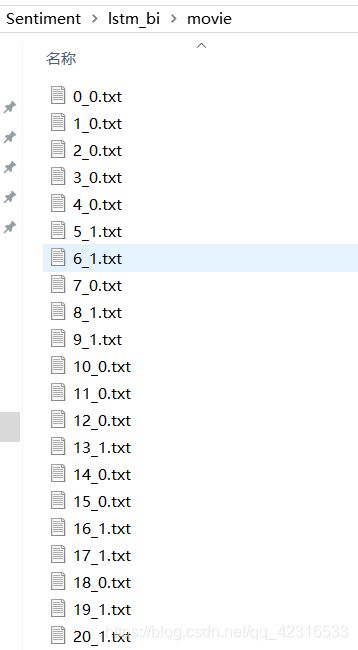

valid(path)其中:movie文件夹下是若干用来做验证的电影,每个txt文件代表一个电影的评论,命名规则是i_label,label表示该电影未来几天的情感倾向(好评表示1,差评表示0)。
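The movie-level evaluation can be exercised end to end without a trained model. The sketch below makes these assumptions:

- A hypothetical keyword rule (`keyword_stub`) stands in for the BERT `predict()` function.
- Each movie file holds one review per line.
- Two tiny movie files are written to a temporary folder.

`movie_level_accuracy` is a hypothetical helper that follows the same majority-vote and file-name logic as `valid()`.

```python
import os
import tempfile


def movie_level_accuracy(folder, predict_fn):
    """Majority vote per movie file, compared with the label in its i_label.txt name."""
    correct = total = 0
    for name in sorted(os.listdir(folder)):
        if not name.endswith('.txt'):
            continue
        with open(os.path.join(folder, name), encoding='utf-8') as f:
            votes = [predict_fn(line.strip()) for line in f if line.strip()]
        if not votes:
            continue
        positive = sum(votes)
        prediction = 1 if positive >= len(votes) - positive else 0  # ties count as positive
        truth = int(os.path.splitext(name)[0].rsplit('_', 1)[1])
        correct += prediction == truth
        total += 1
    return correct / total if total else 0.0


def keyword_stub(review):
    # Hypothetical stand-in for the BERT classifier, for illustration only
    return 0 if '难看' in review else 1


with tempfile.TemporaryDirectory() as d:
    files = {'1_1.txt': '好看\n剧情不错\n难看\n', '2_0.txt': '难看\n太难看了\n还行\n'}
    for name, text in files.items():
        with open(os.path.join(d, name), 'w', encoding='utf-8') as f:
            f.write(text)
    print(movie_level_accuracy(d, keyword_stub))  # 1.0
```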