【Transformer】《Attention is All You Need》论文笔记和pytorch代码笔记

参考自李沐读论文和pytorch代码

参数设置

## 维度

d_model = 512 # sub-layers, embedding layers and outputs的维度(为了利用残差连接,是一个加法操作)

d_inner_hid = 2048 # Feed Forward(MLP)的维度【d_ff】

d_k = 64 # key的维度

d_v = 64 # value的维度

## 其它

n_head = 8 # 多头注意力机制的数量【h】

n_layers = 6 # encoder/decoder的层数【N】

【】里是论文中的记号

模型主体

1. Encoder

1.1. 模型结构图

是由h=6个完全相同的结构构成,分为两个sub-layers:

1.2.Multi-Head Attention

1.2.1.Attention

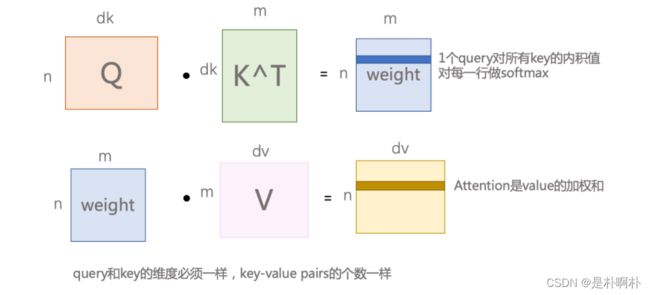

- Attention function涉及query和key-value pairs

- attention的output是value的加权和,权重来自query和key的相似度,由

compatibility function计算而来。 - 计算attention的

compatibility function有很多种,比如加性attitive attention(使用query和key不等长)或者乘性dot-product attention。本文选择了一种比较简单的Scaled Dot-Product Attention。query和key做内积,值越大表越相似。因为两个向量的长度一样,内积越大表示余弦越大,夹角越小,越相似。内积为0,则两个向量正交。

1.2.2.Scaled Dot-Product Attention

- A t t e n t i o n ( Q , K , V ) = s o f t m a x ( Q K T d k ) V Attention(Q,K,V)=softmax(\frac{QK^T}{\sqrt{d_k}})V Attention(Q,K,V)=softmax(dkQKT)V

-

矩阵乘法可以并行化,与CNN和RNN不同之处。

-

1个query与 d k d_k dk个key做点乘,计算出来 d k d_k dk个值,通过一个softmax,得到 d k d_k dk个和为1的权重。

-

Scaled体现在 1 d k \frac{1}{\sqrt{d_k}} dk1,因为 d k d_k dk很大的情况下,做softmax,权重非0即1,从而导致梯度消失。有点像蒸馏logits。使用 1 d k \frac{1}{\sqrt{d_k}} dk1的原因是:假设q和k是均值为0方差为1的iid变量,他们的点积 q ⋅ k = ∑ i = 1 d k q i k i q·k=\sum_{i=1}^{d_k}q_ik_i q⋅k=∑i=1dkqiki的均值为0方差为 d k d_k dk。所以scaled操作让方差变为1。

1.2.3.Multi-Head Attention

- M u l t i H e a d ( Q , K , V ) = C o n c a t ( h e a d 1 , . . . , h e a d h ) W O MultiHead(Q,K,V)=Concat(head_1,...,head_h)W^O MultiHead(Q,K,V)=Concat(head1,...,headh)WO

- h e a d i = A t t e n t i o n ( Q W i Q , K W i K , V W i V ) head_i=Attention(QW_i^Q,KW_i^K,VW_i^V) headi=Attention(QWiQ,KWiK,VWiV)

- 相当于将Q、K、V投影h次到不同的低维空间,相当于用不同表征子空间的pos算attention,有点像CNN的通道。

- W i Q , W i K ∈ R d m o d e l × d k W_i^Q,W_i^K\in R^{d_{model}×d_k} WiQ,WiK∈Rdmodel×dk, W i V ∈ R d m o d e l × d v W_i^V\in R^{d_{model}×d_v} WiV∈Rdmodel×dv, W O ∈ R h d v × d m o d e l W^O\in R^{hd_v×d_{model}} WO∈Rhdv×dmodel,投影的矩阵,参数可学习

- h = 8 , d k = d v = d m o d e l / h = 64 h=8,d_k=d_v=d_{model}/h=64 h=8,dk=dv=dmodel/h=64

- 输入的时候Q、K、V其实是一样的,第一层都是

input_embedding+positional encoding

1.3.Feed Forward

-

简单的全连接层,表示为 F F N ( x ) = m a x ( 0 , x W 1 + b 1 ) W 2 + b 2 FFN(x)=max(0,xW_1+b_1)W_2+b_2 FFN(x)=max(0,xW1+b1)W2+b2

max为Relu的激活函数- W 1 W_1 W1的维度是 ( d m o d e l , d f f ) (d_{model},d_{ff}) (dmodel,dff) , W 2 W_2 W2的维度是 ( d f f , d m o d e l ) (d_{ff},d_{model}) (dff,dmodel), d f f d_{ff} dff刚好是 d m o d e l d_{model} dmodel,相当于维度先放大四倍再复原

- 也相当于两个卷积核大小为1的卷积层

-

6个layer的FF参数不同,但是同一层的FF是一样的,对每一个词都要作用一次

1.4.其它部分

-

Input Embedding:输入为word序列,转换为词嵌入tensor

-

Positional Encoding:因为attention计算是对整体做,打乱也是一样的,所以要加上相对或者绝对的位置信息。是和

input embedding直接相加的,所以维度也是 d m o d e l d_{model} dmodel,位置信息的计算有很多方式,本文用的sin和cos函数。-

P E ( p o s , 2 i ) = s i n ( p o s / 1000 0 2 i / d m o d e l ) PE(pos,2i)=sin(pos/10000^{2i/d_{model}}) PE(pos,2i)=sin(pos/100002i/dmodel)

-

P E ( p o s , 2 i + 1 ) = c o s ( p o s / 1000 0 2 i / d m o d e l ) PE(pos,2i+1)=cos(pos/10000^{2i/d_{model}}) PE(pos,2i+1)=cos(pos/100002i/dmodel)

- pos代表词的位置;i代表第几维,取值[0,d_model),就是将三角函数缩放成d_model个不同的函数

-

选择三角函数的原因是可以很好的表示相对位置关系,因为 P E p o s + k PE_{pos+k} PEpos+k可以用 P E p o s PE_{pos} PEpos的线性方程所表示

-

正弦两角和公式 s i n ( p o s + k ) = s i n ( p o s ) c o s ( k ) + c o s ( p o s ) s i n ( k ) sin(pos+k)=sin(pos)cos(k)+cos(pos)sin(k) sin(pos+k)=sin(pos)cos(k)+cos(pos)sin(k),

即 P E ( p o s + k , 2 i ) = P E ( p o s , 2 i ) c o s ( k ) + P E ( p o s , 2 i + 1 ) s i n ( k ) PE(pos+k,2i)=PE(pos,2i)cos(k)+PE(pos,2i+1)sin(k) PE(pos+k,2i)=PE(pos,2i)cos(k)+PE(pos,2i+1)sin(k),省略维度

当k是一个常数时, s i n ( k ) sin(k) sin(k)和 c o s ( k ) cos(k) cos(k)也是常数

-

-

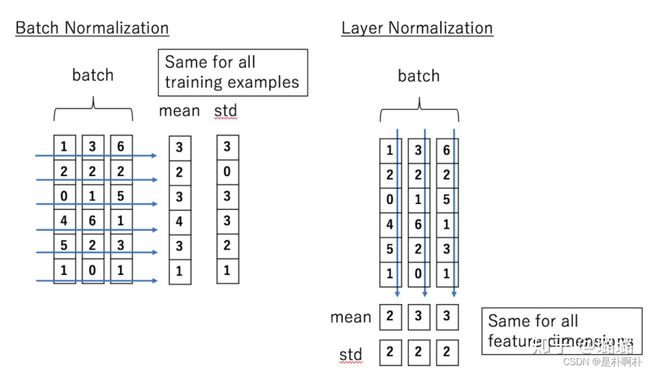

residual connection和layer normalization:接在每个sub-layers之后,表示为 L a y e r N o r m ( x + S u b l a y e r ( x ) ) LayerNorm(x+Sublayer(x)) LayerNorm(x+Sublayer(x))

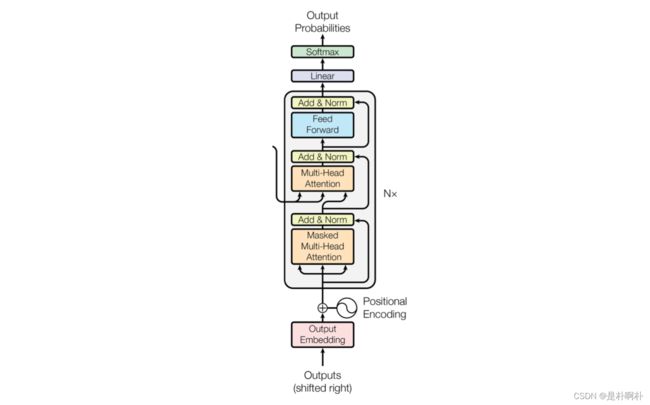

2.Decoder

2.1.模型结构图

2.2.Masked Multi-Head Attention(self-attention)

decoder增加的部分,在预测的时候只能看到之前位置,不能看到之后的位置。在计算attention时softmax之前,使用一个很大的负数替换mask位置的attention,使其经过softmax的结果很接近0。

2.3.Multi-Head Attention(encoder-attention)

- Q:decoder_output

- K:encoder_output

- V:encoder_output

2.4 输入

以翻译为例:

输入:我爱中国

输出: I Love China

Decoder执行步骤

- Time Step 1

- 初始输入: 起始符 + Positional Encoding(位置编码)

- 中间输入:(我爱中国)Encoder Embedding

- 最终输出:产生预测“I”

- Time Step 2

- 初始输入:起始符 + “I”+ Positonal Encoding

- 中间输入:(我爱中国)Encoder Embedding

- 最终输出:产生预测“Love”

- Time Step 3

- 初始输入:起始符 + “I”+ “Love”+ Positonal Encoding

- 中间输入:(我爱中国)Encoder Embedding

- 最终输出:产生预测“China”

参考自知乎

3.代码

3.1.Encoder总体架构

class Encoder(nn.Module):

''' A encoder model with self attention mechanism. '''

def __init__(

self, n_src_vocab, d_word_vec, n_layers, n_head, d_k, d_v,

d_model, d_inner, pad_idx, dropout=0.1, n_position=200, scale_emb=False):

super().__init__()

self.src_word_emb = nn.Embedding(n_src_vocab, d_word_vec, padding_idx=pad_idx) # 【Input Embedding层】

self.position_enc = PositionalEncoding(d_word_vec, n_position=n_position) # 【Positional Encoding】

self.dropout = nn.Dropout(p=dropout)

self.layer_stack = nn.ModuleList([

EncoderLayer(d_model, d_inner, n_head, d_k, d_v, dropout=dropout)

for _ in range(n_layers)]) # 【Encoder Layer】*n_layers

self.layer_norm = nn.LayerNorm(d_model, eps=1e-6) # 【layer norm】

self.scale_emb = scale_emb # boolean, 是否scaled embed

self.d_model = d_model

def forward(self, src_seq, src_mask, return_attns=False):

enc_slf_attn_list = []

# -- Forward

enc_output = self.src_word_emb(src_seq)

if self.scale_emb:

enc_output *= self.d_model ** 0.5 # 不懂为什么这样做

enc_output = self.dropout(self.position_enc(enc_output))

enc_output = self.layer_norm(enc_output)

for enc_layer in self.layer_stack:

enc_output, enc_slf_attn = enc_layer(enc_output, slf_attn_mask=src_mask)

enc_slf_attn_list += [enc_slf_attn] if return_attns else []

if return_attns:

return enc_output, enc_slf_attn_list

return enc_output,

3.2.Positional Encoding

class PositionalEncoding(nn.Module):

def __init__(self, d_hid, n_position=200):

super(PositionalEncoding, self).__init__()

# Not a parameter

self.register_buffer('pos_table', self._get_sinusoid_encoding_table(n_position, d_hid))

def _get_sinusoid_encoding_table(self, n_position, d_hid):

''' Sinusoid position encoding table '''

# TODO: make it with torch instead of numpy

def get_position_angle_vec(position):

return [position / np.power(10000, 2 * (hid_j // 2) / d_hid) for hid_j in range(d_hid)] # 2*(hid_j//2)让2i和2i+1都对应2i

sinusoid_table = np.array([get_position_angle_vec(pos_i) for pos_i in range(n_position)]) # pos(0,200)

sinusoid_table[:, 0::2] = np.sin(sinusoid_table[:, 0::2]) # dim 2i (0::2表示从0开始,每跳2个截取1个)

sinusoid_table[:, 1::2] = np.cos(sinusoid_table[:, 1::2]) # dim 2i+1

return torch.FloatTensor(sinusoid_table).unsqueeze(0)

def forward(self, x):

return x + self.pos_table[:, :x.size(1)].clone().detach() # x + pos_encoding(x) 截取了x维度的pos_encoding

3.3.EncoderLayer

包含两个sub_layers

class EncoderLayer(nn.Module):

''' Compose with two layers '''

def __init__(self, d_model, d_inner, n_head, d_k, d_v, dropout=0.1):

super(EncoderLayer, self).__init__()

self.slf_attn = MultiHeadAttention(n_head, d_model, d_k, d_v, dropout=dropout) # 包含了residual network&layer norm

self.pos_ffn = PositionwiseFeedForward(d_model, d_inner, dropout=dropout)

def forward(self, enc_input, slf_attn_mask=None):

enc_output, enc_slf_attn = self.slf_attn(

enc_input, enc_input, enc_input, mask=slf_attn_mask) # 输入都是enc_input

enc_output = self.pos_ffn(enc_output)

return enc_output, enc_slf_attn

MultiHeadAttention

class MultiHeadAttention(nn.Module):

''' Multi-Head Attention module '''

def __init__(self, n_head, d_model, d_k, d_v, dropout=0.1):

super().__init__()

# d_k=d_v=d_model/n_head = 512/8 = 64

self.n_head = n_head # 8

self.d_k = d_k # 64

self.d_v = d_v # 64

# 投影矩阵w,Linear一起表示,之后通过view重构shape

self.w_qs = nn.Linear(d_model, n_head * d_k, bias=False)

self.w_ks = nn.Linear(d_model, n_head * d_k, bias=False)

self.w_vs = nn.Linear(d_model, n_head * d_v, bias=False)

self.fc = nn.Linear(n_head * d_v, d_model, bias=False) # concat后接一个linear

self.attention = ScaledDotProductAttention(temperature=d_k ** 0.5)

self.dropout = nn.Dropout(dropout)

self.layer_norm = nn.LayerNorm(d_model, eps=1e-6)

def forward(self, q, k, v, mask=None):

# q,k,v其实是相同的

d_k, d_v, n_head = self.d_k, self.d_v, self.n_head

sz_b, len_q, len_k, len_v = q.size(0), q.size(1), k.size(1), v.size(1)

residual = q # residual是其中一个输入

# Pass through the pre-attention projection: b x lq x (n*dv)

# Separate different heads: b x lq x n x dv

q = self.w_qs(q).view(sz_b, len_q, n_head, d_k)

k = self.w_ks(k).view(sz_b, len_k, n_head, d_k)

v = self.w_vs(v).view(sz_b, len_v, n_head, d_v)

# Transpose for attention dot product: b x n x lq x dv

q, k, v = q.transpose(1, 2), k.transpose(1, 2), v.transpose(1, 2)

if mask is not None:

mask = mask.unsqueeze(1) # For head axis broadcasting.

q, attn = self.attention(q, k, v, mask=mask)

# Transpose to move the head dimension back: b x lq x n x dv

# Combine the last two dimensions to concatenate all the heads together: b x lq x (n*dv)

q = q.transpose(1, 2).contiguous().view(sz_b, len_q, -1)

q = self.dropout(self.fc(q))

q += residual

q = self.layer_norm(q)

return q, attn

ScaledDotProductAttention

class ScaledDotProductAttention(nn.Module):

''' Scaled Dot-Product Attention '''

def __init__(self, temperature, attn_dropout=0.1):

super().__init__()

self.temperature = temperature

self.dropout = nn.Dropout(attn_dropout)

def forward(self, q, k, v, mask=None):

attn = torch.matmul(q / self.temperature, k.transpose(2, 3))

if mask is not None:

attn = attn.masked_fill(mask == 0, -1e9) # mask为0,用一个很大的负数代替

attn = self.dropout(F.softmax(attn, dim=-1))

output = torch.matmul(attn, v)

return output, attn

PositionWiseFeedForward

class PositionwiseFeedForward(nn.Module):

''' A two-feed-forward-layer module '''

def __init__(self, d_in, d_hid, dropout=0.1):

super().__init__()

self.w_1 = nn.Linear(d_in, d_hid) # position-wise

self.w_2 = nn.Linear(d_hid, d_in) # position-wise

self.layer_norm = nn.LayerNorm(d_in, eps=1e-6)

self.dropout = nn.Dropout(dropout)

def forward(self, x):

residual = x

x = self.w_2(F.relu(self.w_1(x)))

x = self.dropout(x)

x += residual

x = self.layer_norm(x)

return x