nanodet_ncnn_vs2019 environment setup

My environment:

- VS2019

- opencv3.4.12

- protobuf-3.4.0

- ncnn

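Installing ncnn on Windows:

Follow the "Build for Windows x64 using VS2017" instructions in ncnn's build guide.

I only use the CPU, not the GPU, so one CMake argument has to change when building ncnn. The command from the guide is: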

```
cmake -G"NMake Makefiles" -DCMAKE_BUILD_TYPE=Release -DCMAKE_INSTALL_PREFIX=%cd%/install -DProtobuf_INCLUDE_DIR=/build/install/include -DProtobuf_LIBRARIES=/build/install/lib/libprotobuf.lib -DProtobuf_PROTOC_EXECUTABLE=/build/install/bin/protoc.exe -DNCNN_VULKAN=ON ..
```

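Change `-DNCNN_VULKAN=ON` to `-DNCNN_VULKAN=OFF`. If you have a GPU, keep the default `ON`.

In either case, the three `-DProtobuf_*` paths must point at your own protobuf build. In my setup, that is `D:\life_b\protobuf\protobuf-3.4.0\build\install`.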
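It is easy to end up compiling against a different ncnn build than intended. A compile-time guard can catch that.

The sketch below is a minimal example under these assumptions:
- The installed header `ncnn/platform.h` defines `NCNN_VULKAN` as 0 or 1 from the CMake option.
- The compiler supports `__has_include` (C++17).
- If the header is not found, the build is treated as CPU-only.
- The constant name `kNcnnVulkanBuild` is hypothetical.

```cpp
// Compile-time check that the ncnn on the include path was built CPU-only.
// Assumption: ncnn's installed platform.h defines NCNN_VULKAN as 0 or 1.
#if defined(__has_include)
#if __has_include("ncnn/platform.h")
#include "ncnn/platform.h"
#endif
#endif

// Assumption: if the header was not found, treat the build as CPU-only.
#ifndef NCNN_VULKAN
#define NCNN_VULKAN 0
#endif

// Hypothetical name: true only when ncnn was configured with -DNCNN_VULKAN=ON.
constexpr bool kNcnnVulkanBuild = (NCNN_VULKAN != 0);

// Fails the build if a Vulkan-enabled ncnn is picked up by this CPU-only project.
static_assert(!kNcnnVulkanBuild,
              "this project expects ncnn built with -DNCNN_VULKAN=OFF");
```

Put it at the top of any source file, for example main.cpp. Delete the `static_assert` if you deliberately build with Vulkan.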

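Configuring VS2019:

- Create a new empty project

- View -> Other Windows -> Property Manager -> Release | x64 -> right-click -> Properties

- Add the following to VC++ Directories -> Include Directories: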

OpenCV:

D:\life_b\opencv\build\include

D:\life_b\opencv\build\include\opencv

D:\life_b\opencv\build\include\opencv2

protobuf:

D:\life_b\protobuf\protobuf-3.4.0\build\install\include

Vulkan (only needed if ncnn was built with `-DNCNN_VULKAN=ON`):

D:\life_b\Vulkan\1.2.154.1\Include

ncnn:

D:\life_b\ncnn\build\install\include

- Add the following to VC++ Directories -> Library Directories:

D:\life_b\ncnn\build\install\lib

D:\life_b\opencv\build\x64\vc15\lib

D:\life_b\Vulkan\1.2.154.1\Lib

D:\life_b\protobuf\protobuf-3.4.0\build\install\lib

- Linker -> Input -> Additional Dependencies:

ncnn.lib

libprotobuf.lib

opencv_world3412.lib

vulkan-1.lib (only needed if ncnn was built with `-DNCNN_VULKAN=ON`)

Add main.cpp under Source Files:

```cpp
#include <algorithm>  // std::min, std::max
#include <cfloat>     // DBL_MAX
#include <cmath>      // std::floor
#include <cstdio>     // fprintf, snprintf
#include <cstdlib>    // atoi
#include <cstring>    // memcpy
#include <vector>

#include <opencv2/core.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgcodecs.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/videoio.hpp>

#include "nanodet.h"
#include "ncnn/benchmark.h"  // ncnn::get_current_time

// Region of the letterboxed image that holds real pixels (the rest is padding).
struct object_rect {
    int x;
    int y;
    int width;
    int height;
};

// Resize src into dst_size while keeping its aspect ratio, padding with black.
// effect_area receives where the scaled image sits inside dst.
int resize_uniform(cv::Mat& src, cv::Mat& dst, cv::Size dst_size, object_rect& effect_area)
{
    int w = src.cols;
    int h = src.rows;
    int dst_w = dst_size.width;
    int dst_h = dst_size.height;
    dst = cv::Mat(cv::Size(dst_w, dst_h), CV_8UC3, cv::Scalar(0));

    float ratio_src = w * 1.0 / h;
    float ratio_dst = dst_w * 1.0 / dst_h;
    int tmp_w = 0;
    int tmp_h = 0;
    if (ratio_src > ratio_dst) {
        // Wider than the target: fill the width.
        tmp_w = dst_w;
        tmp_h = static_cast<int>(std::floor((dst_w * 1.0 / w) * h));
    }
    else if (ratio_src < ratio_dst) {
        // Taller than the target: fill the height.
        tmp_h = dst_h;
        tmp_w = static_cast<int>(std::floor((dst_h * 1.0 / h) * w));
    }
    else {
        // Same aspect ratio: plain resize, no padding.
        cv::resize(src, dst, dst_size);
        effect_area = { 0, 0, dst_w, dst_h };
        return 0;
    }

    cv::Mat tmp;
    cv::resize(src, tmp, cv::Size(tmp_w, tmp_h));

    if (tmp_w != dst_w) {
        // Center horizontally: copy row by row with a left offset.
        int index_w = static_cast<int>(std::floor((dst_w - tmp_w) / 2.0));
        for (int i = 0; i < dst_h; i++) {
            memcpy(dst.data + i * dst_w * 3 + index_w * 3, tmp.data + i * tmp_w * 3, tmp_w * 3);
        }
        effect_area = { index_w, 0, tmp_w, tmp_h };
    }
    else if (tmp_h != dst_h) {
        // Center vertically: rows are full width, so one contiguous copy suffices.
        int index_h = static_cast<int>(std::floor((dst_h - tmp_h) / 2.0));
        memcpy(dst.data + index_h * dst_w * 3, tmp.data, tmp_w * tmp_h * 3);
        effect_area = { 0, index_h, tmp_w, tmp_h };
    }
    else {
        fprintf(stderr, "error\n");
    }
    return 0;
}

const int color_list[80][3] =
{
    //{255 ,255 ,255}, //bg
    {216 , 82 , 24}, {236 ,176 , 31}, {125 , 46 ,141}, {118 ,171 , 47},
    { 76 ,189 ,237}, {238 , 19 , 46}, { 76 , 76 , 76}, {153 ,153 ,153},
    {255 ,  0 ,  0}, {255 ,127 ,  0}, {190 ,190 ,  0}, {  0 ,255 ,  0},
    {  0 ,  0 ,255}, {170 ,  0 ,255}, { 84 , 84 ,  0}, { 84 ,170 ,  0},
    { 84 ,255 ,  0}, {170 , 84 ,  0}, {170 ,170 ,  0}, {170 ,255 ,  0},
    {255 , 84 ,  0}, {255 ,170 ,  0}, {255 ,255 ,  0}, {  0 , 84 ,127},
    {  0 ,170 ,127}, {  0 ,255 ,127}, { 84 ,  0 ,127}, { 84 , 84 ,127},
    { 84 ,170 ,127}, { 84 ,255 ,127}, {170 ,  0 ,127}, {170 , 84 ,127},
    {170 ,170 ,127}, {170 ,255 ,127}, {255 ,  0 ,127}, {255 , 84 ,127},
    {255 ,170 ,127}, {255 ,255 ,127}, {  0 , 84 ,255}, {  0 ,170 ,255},
    {  0 ,255 ,255}, { 84 ,  0 ,255}, { 84 , 84 ,255}, { 84 ,170 ,255},
    { 84 ,255 ,255}, {170 ,  0 ,255}, {170 , 84 ,255}, {170 ,170 ,255},
    {170 ,255 ,255}, {255 ,  0 ,255}, {255 , 84 ,255}, {255 ,170 ,255},
    { 42 ,  0 ,  0}, { 84 ,  0 ,  0}, {127 ,  0 ,  0}, {170 ,  0 ,  0},
    {212 ,  0 ,  0}, {255 ,  0 ,  0}, {  0 , 42 ,  0}, {  0 , 84 ,  0},
    {  0 ,127 ,  0}, {  0 ,170 ,  0}, {  0 ,212 ,  0}, {  0 ,255 ,  0},
    {  0 ,  0 , 42}, {  0 ,  0 , 84}, {  0 ,  0 ,127}, {  0 ,  0 ,170},
    {  0 ,  0 ,212}, {  0 ,  0 ,255}, {  0 ,  0 ,  0}, { 36 , 36 , 36},
    { 72 , 72 , 72}, {109 ,109 ,109}, {145 ,145 ,145}, {182 ,182 ,182},
    {218 ,218 ,218}, {  0 ,113 ,188}, { 80 ,182 ,188}, {127 ,127 ,  0},
};

// Draw detections on a copy of the original frame. Boxes are in letterboxed
// 320x320 coordinates, so subtract the padding offset and scale back.
void draw_bboxes(const cv::Mat& bgr, const std::vector<BoxInfo>& bboxes, object_rect effect_roi)
{
    static const char* class_names[] = { "person", "bicycle", "car", "motorcycle", "airplane", "bus",
        "train", "truck", "boat", "traffic light", "fire hydrant",
        "stop sign", "parking meter", "bench", "bird", "cat", "dog",
        "horse", "sheep", "cow", "elephant", "bear", "zebra", "giraffe",
        "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",
        "skis", "snowboard", "sports ball", "kite", "baseball bat",
        "baseball glove", "skateboard", "surfboard", "tennis racket",
        "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl",
        "banana", "apple", "sandwich", "orange", "broccoli", "carrot",
        "hot dog", "pizza", "donut", "cake", "chair", "couch",
        "potted plant", "bed", "dining table", "toilet", "tv", "laptop",
        "mouse", "remote", "keyboard", "cell phone", "microwave", "oven",
        "toaster", "sink", "refrigerator", "book", "clock", "vase",
        "scissors", "teddy bear", "hair drier", "toothbrush"
    };

    cv::Mat image = bgr.clone();
    int src_w = image.cols;
    int src_h = image.rows;
    int dst_w = effect_roi.width;
    int dst_h = effect_roi.height;
    float width_ratio = (float)src_w / (float)dst_w;
    float height_ratio = (float)src_h / (float)dst_h;

    for (size_t i = 0; i < bboxes.size(); i++)
    {
        const BoxInfo& bbox = bboxes[i];
        cv::Scalar color = cv::Scalar(color_list[bbox.label][0], color_list[bbox.label][1], color_list[bbox.label][2]);

        int x1 = static_cast<int>((bbox.x1 - effect_roi.x) * width_ratio);
        int y1 = static_cast<int>((bbox.y1 - effect_roi.y) * height_ratio);
        int x2 = static_cast<int>((bbox.x2 - effect_roi.x) * width_ratio);
        int y2 = static_cast<int>((bbox.y2 - effect_roi.y) * height_ratio);
        cv::rectangle(image, cv::Rect(cv::Point(x1, y1), cv::Point(x2, y2)), color);

        char text[256];
        snprintf(text, sizeof(text), "%s %.1f%%", class_names[bbox.label], bbox.score * 100);

        // Place the label above the box, kept inside the image.
        int baseLine = 0;
        cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.4, 1, &baseLine);
        int x = x1;
        int y = y1 - label_size.height - baseLine;
        if (y < 0)
            y = 0;
        if (x + label_size.width > image.cols)
            x = image.cols - label_size.width;

        cv::rectangle(image, cv::Rect(cv::Point(x, y), cv::Size(label_size.width, label_size.height + baseLine)),
            color, -1);
        cv::putText(image, text, cv::Point(x, y + label_size.height),
            cv::FONT_HERSHEY_SIMPLEX, 0.4, cv::Scalar(255, 255, 255));
    }
    cv::imshow("image", image);
}

// Run detection on every file matching a glob pattern such as "images/*.jpg".
int image_demo(NanoDet& detector, const char* imagepath)
{
    std::vector<cv::String> filenames;
    cv::glob(imagepath, filenames, false);
    for (const auto& img_name : filenames)
    {
        cv::Mat image = cv::imread(img_name);
        if (image.empty())
        {
            fprintf(stderr, "cv::imread %s failed\n", img_name.c_str());
            return -1;
        }
        object_rect effect_roi;
        cv::Mat resized_img;
        resize_uniform(image, resized_img, cv::Size(320, 320), effect_roi);
        auto results = detector.detect(resized_img, 0.4f, 0.5f);
        draw_bboxes(image, results, effect_roi);
        cv::waitKey(0);  // wait for a key before showing the next image
    }
    return 0;
}

// Shared loop for webcam and video: detect on every frame until the stream
// ends or Esc is pressed.
static int run_capture(NanoDet& detector, cv::VideoCapture& cap)
{
    if (!cap.isOpened())
    {
        fprintf(stderr, "failed to open video source\n");
        return -1;
    }
    cv::Mat image;
    while (true)
    {
        cap >> image;
        if (image.empty())  // end of file or camera disconnected
            break;
        object_rect effect_roi;
        cv::Mat resized_img;
        resize_uniform(image, resized_img, cv::Size(320, 320), effect_roi);
        auto results = detector.detect(resized_img, 0.4f, 0.5f);
        draw_bboxes(image, results, effect_roi);
        if (cv::waitKey(1) == 27)  // Esc
            break;
    }
    return 0;
}

int webcam_demo(NanoDet& detector, int cam_id)
{
    cv::VideoCapture cap(cam_id);
    return run_capture(detector, cap);
}

int video_demo(NanoDet& detector, const char* path)
{
    cv::VideoCapture cap(path);
    return run_capture(detector, cap);
}

// Time only the network forward pass (no decoding or NMS) on a dummy 320x320 input.
int benchmark(NanoDet& detector)
{
    int loop_num = 100;
    int warm_up = 8;
    double time_min = DBL_MAX;
    double time_max = -DBL_MAX;
    double time_avg = 0;
    ncnn::Mat input = ncnn::Mat(320, 320, 3);
    input.fill(0.01f);
    for (int i = 0; i < warm_up + loop_num; i++)
    {
        double start = ncnn::get_current_time();
        ncnn::Extractor ex = detector.Net->create_extractor();
        ex.input("input.1", input);
        for (const auto& head_info : detector.heads_info)
        {
            ncnn::Mat dis_pred;
            ncnn::Mat cls_pred;
            ex.extract(head_info.dis_layer.c_str(), dis_pred);
            ex.extract(head_info.cls_layer.c_str(), cls_pred);
        }
        double end = ncnn::get_current_time();
        double time = end - start;
        if (i >= warm_up)  // skip the warm-up runs
        {
            // (std::min)/(std::max) in parentheses keep the Windows min/max macros from expanding.
            time_min = (std::min)(time_min, time);
            time_max = (std::max)(time_max, time);
            time_avg += time;
        }
    }
    time_avg /= loop_num;
    fprintf(stderr, "%20s  min = %7.2f  max = %7.2f  avg = %7.2f\n", "nanodet", time_min, time_max, time_avg);
    return 0;
}

static void print_usage(const char* prog)
{
    fprintf(stderr, "usage: %s [mode] [path].\n"
        " For webcam, mode=0, path is the camera id;\n"
        " For image demo, mode=1, path=xxx/xxx/*.jpg;\n"
        " For video, mode=2, path is the video file;\n"
        " For benchmark, mode=3, path=0.\n", prog);
}

int main(int argc, char** argv)
{
    if (argc != 3)
    {
        print_usage(argv[0]);
        return -1;
    }

    // useGPU=true has no effect in this CPU-only build: NanoDet sets hasGPU to false.
    NanoDet detector("./models/nanodet_m.param", "./models/nanodet_m.bin", true);

    int mode = atoi(argv[1]);
    switch (mode)
    {
    case 0:
        webcam_demo(detector, atoi(argv[2]));
        break;
    case 1:
        image_demo(detector, argv[2]);
        break;
    case 2:
        video_demo(detector, argv[2]);
        break;
    case 3:
        benchmark(detector);
        break;
    default:
        print_usage(argv[0]);
        break;
    }
    return 0;
}
```
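`resize_uniform` letterboxes each frame into 320x320, and `draw_bboxes` undoes that mapping. Separating the geometry from the pixel copying makes it easy to check.

The sketch below is a standalone version of just the arithmetic, so it needs neither OpenCV nor ncnn. The names `Letterbox`, `compute_letterbox` and `map_to_source` are hypothetical:

```cpp
#include <cmath>

// Hypothetical stand-in for object_rect: where the scaled image sits in the canvas.
struct Letterbox {
    int x, y, width, height;
};

// Same geometry as resize_uniform, without touching pixels.
Letterbox compute_letterbox(int src_w, int src_h, int dst_w, int dst_h) {
    float ratio_src = src_w * 1.0 / src_h;
    float ratio_dst = dst_w * 1.0 / dst_h;
    if (ratio_src > ratio_dst) {
        // Wider than the target: fill the width, pad top and bottom.
        int h = static_cast<int>(std::floor((dst_w * 1.0 / src_w) * src_h));
        return {0, static_cast<int>(std::floor((dst_h - h) / 2.0)), dst_w, h};
    }
    if (ratio_src < ratio_dst) {
        // Taller than the target: fill the height, pad left and right.
        int w = static_cast<int>(std::floor((dst_h * 1.0 / src_h) * src_w));
        return {static_cast<int>(std::floor((dst_w - w) / 2.0)), 0, w, dst_h};
    }
    return {0, 0, dst_w, dst_h};  // same aspect ratio: plain resize
}

// Map a point from network-input coordinates back to the original image,
// using the same subtraction and scaling as draw_bboxes.
void map_to_source(const Letterbox& lb, int src_w, int src_h,
                   float in_x, float in_y, float& out_x, float& out_y) {
    out_x = (in_x - lb.x) * (static_cast<float>(src_w) / lb.width);
    out_y = (in_y - lb.y) * (static_cast<float>(src_h) / lb.height);
}
```

For a 640x480 frame, the scaled image is 320x240 with 40 rows of padding above it. A detection corner at (10, 50) in the network input therefore lands at (20, 20) in the original frame.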

Add nanodet.cpp:

```cpp
//
// Create by RangiLyu
// 2020 / 10 / 2
//

#include "nanodet.h"

#include <algorithm>  // std::max_element, std::sort, std::min, std::max
#include <cstdint>    // uint32_t
#include <cstdio>     // fprintf

// Fast approximation of exp(x): writes the IEEE-754 bit pattern of 2^(x*log2(e)) directly.
inline float fast_exp(float x)
{
    // Below about -87.98 the expression goes negative, and converting a negative
    // value to uint32_t is undefined; exp(x) is effectively 0 there anyway.
    if (x < -87.0f)
        return 0.0f;
    union {
        uint32_t i;
        float f;
    } v{};
    v.i = static_cast<uint32_t>((1 << 23) * (1.4426950409 * x + 126.93490512f));
    return v.f;
}

inline float sigmoid(float x)
{
    return 1.0f / (1.0f + fast_exp(-x));
}

// Numerically stable softmax: subtract the max before exponentiating.
template <typename T>
int activation_function_softmax(const T* src, T* dst, int length)
{
    const T alpha = *std::max_element(src, src + length);
    T denominator{ 0 };
    for (int i = 0; i < length; ++i) {
        dst[i] = fast_exp(src[i] - alpha);
        denominator += dst[i];
    }
    for (int i = 0; i < length; ++i) {
        dst[i] /= denominator;
    }
    return 0;
}

bool NanoDet::hasGPU = true;
NanoDet* NanoDet::detector = nullptr;

NanoDet::NanoDet(const char* param, const char* bin, bool useGPU)
{
    this->Net = new ncnn::Net();
    // ncnn::get_gpu_count() (see the commented-out line in detect) is only declared
    // when ncnn is built with NCNN_VULKAN=ON, so this CPU-only build hard-codes false.
    this->hasGPU = false;
    this->Net->opt.use_vulkan_compute = this->hasGPU && useGPU;
    this->Net->opt.use_fp16_arithmetic = true;
    // load_param/load_model return 0 on success.
    if (this->Net->load_param(param) != 0 || this->Net->load_model(bin) != 0)
        fprintf(stderr, "failed to load model %s / %s\n", param, bin);
}

NanoDet::~NanoDet()
{
    delete this->Net;
}

// Wrap the BGR image (already letterboxed to 320x320) and normalize each channel.
void NanoDet::preprocess(cv::Mat& image, ncnn::Mat& in)
{
    int img_w = image.cols;
    int img_h = image.rows;
    in = ncnn::Mat::from_pixels(image.data, ncnn::Mat::PIXEL_BGR, img_w, img_h);
    const float mean_vals[3] = { 104.04f, 113.9f, 119.8f };
    const float norm_vals[3] = { 0.013569f, 0.014312f, 0.014106f };
    in.substract_mean_normalize(mean_vals, norm_vals);
}

std::vector<BoxInfo> NanoDet::detect(cv::Mat image, float score_threshold, float nms_threshold)
{
    ncnn::Mat input;
    preprocess(image, input);

    auto ex = this->Net->create_extractor();
    ex.set_light_mode(false);
    ex.set_num_threads(4);
    //this->hasGPU = ncnn::get_gpu_count() > 0;
    //ex.set_vulkan_compute(this->hasGPU);
    ex.input("input.1", input);

    // One bucket of candidate boxes per class.
    std::vector<std::vector<BoxInfo>> results(this->num_class);

    for (const auto& head_info : this->heads_info)
    {
        ncnn::Mat dis_pred;
        ncnn::Mat cls_pred;
        ex.extract(head_info.dis_layer.c_str(), dis_pred);
        ex.extract(head_info.cls_layer.c_str(), cls_pred);
        // Decode this head's score and distance maps into candidate boxes.
        this->decode_infer(cls_pred, dis_pred, head_info.stride, score_threshold, results);
    }

    // Class-wise NMS, then merge everything into one list.
    std::vector<BoxInfo> dets;
    for (auto& class_boxes : results)
    {
        nms(class_boxes, nms_threshold);
        dets.insert(dets.end(), class_boxes.begin(), class_boxes.end());
    }
    return dets;
}

// Scan every cell of one head's feature map, keep cells whose best class score
// beats the threshold, and decode their box.
void NanoDet::decode_infer(ncnn::Mat& cls_pred, ncnn::Mat& dis_pred, int stride, float threshold, std::vector<std::vector<BoxInfo>>& results)
{
    int feature_h = this->input_size / stride;
    int feature_w = this->input_size / stride;

    for (int idx = 0; idx < feature_h * feature_w; idx++)
    {
        const float* scores = cls_pred.row(idx);
        int row = idx / feature_w;
        int col = idx % feature_w;
        float score = 0;
        int cur_label = 0;
        for (int label = 0; label < this->num_class; label++)
        {
            if (scores[label] > score)
            {
                score = scores[label];
                cur_label = label;
            }
        }
        if (score > threshold)
        {
            const float* bbox_pred = dis_pred.row(idx);
            results[cur_label].push_back(this->disPred2Bbox(bbox_pred, cur_label, score, col, row, stride));
        }
    }
}

// Turn the 4 * (reg_max + 1) distribution logits of one cell into a box:
// softmax per side, expected bin index, scale by stride, offset from the cell center.
BoxInfo NanoDet::disPred2Bbox(const float*& dfl_det, int label, float score, int x, int y, int stride)
{
    float ct_x = (x + 0.5f) * stride;
    float ct_y = (y + 0.5f) * stride;
    std::vector<float> dis_pred(4);
    std::vector<float> dis_after_sm(this->reg_max + 1);
    for (int i = 0; i < 4; i++)
    {
        activation_function_softmax(dfl_det + i * (this->reg_max + 1), dis_after_sm.data(), this->reg_max + 1);
        float dis = 0;
        for (int j = 0; j < this->reg_max + 1; j++)
        {
            dis += j * dis_after_sm[j];
        }
        dis_pred[i] = dis * stride;
    }
    // Clamp to the network input (320x320).
    float xmin = (std::max)(ct_x - dis_pred[0], .0f);
    float ymin = (std::max)(ct_y - dis_pred[1], .0f);
    float xmax = (std::min)(ct_x + dis_pred[2], (float)this->input_size);
    float ymax = (std::min)(ct_y + dis_pred[3], (float)this->input_size);
    return BoxInfo{ xmin, ymin, xmax, ymax, score, label };
}

// Greedy NMS for one class: sort by score, then drop any lower-scoring box
// whose IoU with a kept box reaches NMS_THRESH.
void NanoDet::nms(std::vector<BoxInfo>& input_boxes, float NMS_THRESH)
{
    std::sort(input_boxes.begin(), input_boxes.end(),
        [](const BoxInfo& a, const BoxInfo& b) { return a.score > b.score; });
    std::vector<float> vArea(input_boxes.size());
    for (int i = 0; i < int(input_boxes.size()); ++i) {
        vArea[i] = (input_boxes[i].x2 - input_boxes[i].x1 + 1)
            * (input_boxes[i].y2 - input_boxes[i].y1 + 1);
    }
    for (int i = 0; i < int(input_boxes.size()); ++i) {
        for (int j = i + 1; j < int(input_boxes.size());) {
            float xx1 = (std::max)(input_boxes[i].x1, input_boxes[j].x1);
            float yy1 = (std::max)(input_boxes[i].y1, input_boxes[j].y1);
            float xx2 = (std::min)(input_boxes[i].x2, input_boxes[j].x2);
            float yy2 = (std::min)(input_boxes[i].y2, input_boxes[j].y2);
            float w = (std::max)(float(0), xx2 - xx1 + 1);
            float h = (std::max)(float(0), yy2 - yy1 + 1);
            float inter = w * h;
            float ovr = inter / (vArea[i] + vArea[j] - inter);
            if (ovr >= NMS_THRESH) {
                input_boxes.erase(input_boxes.begin() + j);
                vArea.erase(vArea.begin() + j);
            }
            else {
                j++;
            }
        }
    }
}
```
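`NanoDet::nms` is greedy non-maximum suppression, and `detect` calls it once per class. It computes IoU with a "+1 pixel" width and height convention.

Below is a standalone sketch of the same logic. It uses a hypothetical `Box` struct in place of `BoxInfo`, so it compiles without ncnn or OpenCV. The helper names `iou_plus1` and `greedy_nms` are also hypothetical. Instead of erasing from the vector in place, it marks suppressed boxes and collects the survivors, which gives the same result:

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for BoxInfo (label omitted: NMS runs within one class).
struct Box {
    float x1, y1, x2, y2, score;
};

// IoU with the same "+1 pixel" convention NanoDet::nms uses.
float iou_plus1(const Box& a, const Box& b) {
    float area_a = (a.x2 - a.x1 + 1) * (a.y2 - a.y1 + 1);
    float area_b = (b.x2 - b.x1 + 1) * (b.y2 - b.y1 + 1);
    float w = std::max(0.0f, std::min(a.x2, b.x2) - std::max(a.x1, b.x1) + 1);
    float h = std::max(0.0f, std::min(a.y2, b.y2) - std::max(a.y1, b.y1) + 1);
    float inter = w * h;
    return inter / (area_a + area_b - inter);
}

// Greedy NMS: visit boxes by descending score; each surviving box suppresses
// every later box whose IoU with it reaches the threshold.
std::vector<Box> greedy_nms(std::vector<Box> boxes, float thresh) {
    std::sort(boxes.begin(), boxes.end(),
              [](const Box& a, const Box& b) { return a.score > b.score; });
    std::vector<bool> removed(boxes.size(), false);
    std::vector<Box> kept;
    for (std::size_t i = 0; i < boxes.size(); ++i) {
        if (removed[i]) continue;
        kept.push_back(boxes[i]);
        for (std::size_t j = i + 1; j < boxes.size(); ++j) {
            if (!removed[j] && iou_plus1(boxes[i], boxes[j]) >= thresh)
                removed[j] = true;
        }
    }
    return kept;
}
```

Consider two 10x10 boxes offset by one pixel. They overlap with IoU 81/119 (about 0.68), so at the demo's threshold of 0.5 only the higher-scoring one survives. A distant box is unaffected.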

Add nanodet.h under Header Files:

```cpp
//
// Create by RangiLyu
// 2020 / 10 / 2
//

#ifndef NANODET_H
#define NANODET_H

#include <string>
#include <vector>

#include <opencv2/core.hpp>
#include "ncnn/net.h"

// Output blob names and stride of one detection head.
struct HeadInfo
{
    std::string cls_layer;
    std::string dis_layer;
    int stride;
};

// One detection, in network-input (320x320) coordinates.
struct BoxInfo
{
    float x1;
    float y1;
    float x2;
    float y2;
    float score;
    int label;
};

class NanoDet
{
public:
    NanoDet(const char* param, const char* bin, bool useGPU);
    ~NanoDet();
    // Owns Net through a raw pointer, so copying would delete it twice.
    NanoDet(const NanoDet&) = delete;
    NanoDet& operator=(const NanoDet&) = delete;

    static NanoDet* detector;
    ncnn::Net* Net;
    static bool hasGPU;

    std::vector<HeadInfo> heads_info{
        // cls_pred|dis_pred|stride
        {"792", "795", 8},
        {"814", "817", 16},
        {"836", "839", 32},
    };

    std::vector<BoxInfo> detect(cv::Mat image, float score_threshold, float nms_threshold);

    std::vector<std::string> labels{ "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light",
        "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",
        "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",
        "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard",
        "tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",
        "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch",
        "potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone",
        "microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear",
        "hair drier", "toothbrush" };

private:
    void preprocess(cv::Mat& image, ncnn::Mat& in);
    void decode_infer(ncnn::Mat& cls_pred, ncnn::Mat& dis_pred, int stride, float threshold, std::vector<std::vector<BoxInfo>>& results);
    BoxInfo disPred2Bbox(const float*& dfl_det, int label, float score, int x, int y, int stride);
    static void nms(std::vector<BoxInfo>& result, float nms_threshold);

    int input_size = 320;
    int num_class = 80;
    int reg_max = 7;
};

#endif // NANODET_H
```
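The header fixes three values: `input_size = 320`, `reg_max = 7`, and head strides of 8, 16 and 32. The standalone sketch below shows what those numbers imply:
- how many grid cells `decode_infer` visits per image;
- how `disPred2Bbox` turns `reg_max + 1 = 8` logits into one distance: a softmax, then the expected bin index, scaled by the stride.

The helper names are hypothetical. It uses `std::exp` instead of the demo's `fast_exp` approximation.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Total cells over all heads for a square input: (input_size / stride)^2 per head,
// matching feature_h * feature_w in decode_infer.
int total_grid_cells(int input_size, const std::vector<int>& strides) {
    int total = 0;
    for (int s : strides) {
        int side = input_size / s;
        total += side * side;
    }
    return total;
}

// Decode one side of a DFL distribution into a pixel distance:
// softmax over the bins, expected bin index, times the stride.
float dfl_distance(const std::vector<float>& logits, int stride) {
    float alpha = *std::max_element(logits.begin(), logits.end());
    std::vector<float> prob(logits.size());
    float denom = 0.0f;
    for (std::size_t i = 0; i < logits.size(); ++i) {
        prob[i] = std::exp(logits[i] - alpha);  // subtract max for stability
        denom += prob[i];
    }
    float expected = 0.0f;
    for (std::size_t i = 0; i < prob.size(); ++i)
        expected += static_cast<float>(i) * prob[i] / denom;
    return expected * stride;
}
```

With the values in nanodet.h, that is 40x40 + 20x20 + 10x10 = 2100 cells per image. A flat distribution over 8 bins decodes to 3.5 bins, which is 28 pixels at stride 8. `disPred2Bbox` then subtracts this from the cell center `(col + 0.5) * stride` for the left edge, clamped at 0.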

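Inside the directory of the empty C++ project you created, create two folders, images and models:

- images: the images you want to test

- models: the converted model files (nanodet_m.bin, nanodet_m.param) (model link)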
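main.cpp loads `./models/nanodet_m.param` and `./models/nanodet_m.bin` relative to the working directory. A misplaced folder only shows up once loading fails, so checking up front gives a clearer error.

The sketch below assumes C++17 `std::filesystem` (in VS2019 this needs `/std:c++17`). The helper name `nanodet_models_present` is hypothetical:

```cpp
#include <filesystem>

namespace fs = std::filesystem;

// Hypothetical helper: true if both model files main.cpp loads are present
// in models_dir (resolved relative to the current working directory).
bool nanodet_models_present(const fs::path& models_dir) {
    return fs::is_regular_file(models_dir / "nanodet_m.param") &&
           fs::is_regular_file(models_dir / "nanodet_m.bin");
}
```

You could call `if (!nanodet_models_present("./models")) return -1;` at the start of `main`, before constructing `NanoDet`. When the program is launched from VS2019, the working directory defaults to the project directory (`$(ProjectDir)`). That is why the images and models folders go there.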

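With all of this in place, go back to VS2019 -> Debug -> Start Without Debugging.

The program expects two arguments, `[mode] [path]` (see the usage message in main). Set them under the project's Properties -> Debugging -> Command Arguments. For example, `1 ./images/*.jpg` runs the image demo.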

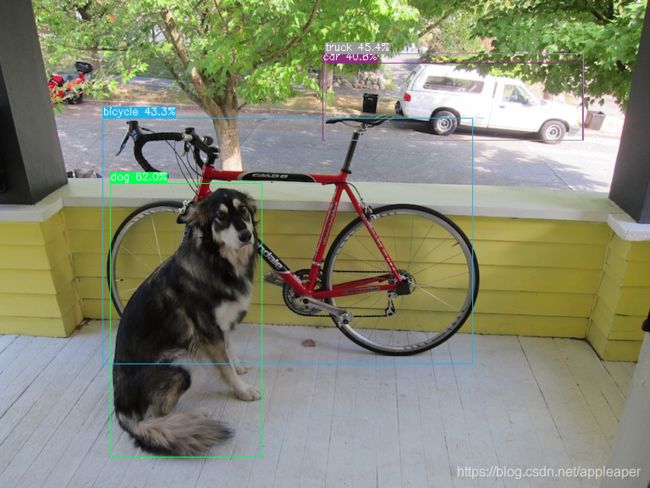

直接看结果