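GBase 8a Node Replacement

Node replacement covers replacing gcware, gcluster, and gnode nodes. The new node can be an existing freenode (the node's IP changes after the replacement) or a brand-new machine that has never had GBase installed (the node's IP stays the same after the replacement). This post replaces a gnode with a brand-new machine; the other cases will be added later when time permits.

Original cluster: 192.168.61.1 [8a-1] (gcware + gcluster + gnode)

192.168.61.2 [8a-2] (gnode), the node to be replaced

New node: 192.168.61.3 [8a-3]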

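Contents

Original state

Delete the feventlog of the replaced node

Shut down or disconnect the replaced node

Generate a new distribution

Unplug the network cable of the replaced cluster node (originally 192.168.61.2), or simply shut it down, and change the IP of the new machine (192.168.61.3) to 192.168.61.2

Configure SSH for the new node

Run the node replacement

Redistribute the data again so that it spreads onto the new node

Delete the old distribution plan

Problem log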

Original state

[gbase@8a-1 gcinstall]$ gcadmin

CLUSTER STATE: ACTIVE

====================================

| GBASE GCWARE CLUSTER INFORMATION |

====================================

| NodeName | IpAddress | gcware |

------------------------------------

| gcware1 | 192.168.61.1 | OPEN |

------------------------------------

======================================================

| GBASE COORDINATOR CLUSTER INFORMATION |

======================================================

| NodeName | IpAddress | gcluster | DataState |

------------------------------------------------------

| coordinator1 | 192.168.61.1 | OPEN | 0 |

------------------------------------------------------

=============================================================

| GBASE CLUSTER FREE DATA NODE INFORMATION |

=============================================================

| NodeName | IpAddress | gnode | syncserver | DataState |

-------------------------------------------------------------

| FreeNode1 | 192.168.61.2 | OPEN | OPEN | 0 |

-------------------------------------------------------------

| FreeNode2 | 192.168.61.1 | OPEN | OPEN | 0 |

-------------------------------------------------------------

0 virtual cluster

1 coordinator node

2 free data node

Set the replaced node's state to unavailable

Once a node's state is set to unavailable, it no longer receives SQL dispatched by gcluster.

[gbase@8a-1 gcinstall]$ gcadmin setnodestate 192.168.61.2 unavailable

[gbase@8a-1 gcinstall]$ gcadmin

CLUSTER STATE: ACTIVE

VIRTUAL CLUSTER MODE: NORMAL

====================================

| GBASE GCWARE CLUSTER INFORMATION |

====================================

| NodeName | IpAddress | gcware |

------------------------------------

| gcware1 | 192.168.61.1 | OPEN |

------------------------------------

======================================================

| GBASE COORDINATOR CLUSTER INFORMATION |

======================================================

| NodeName | IpAddress | gcluster | DataState |

------------------------------------------------------

| coordinator1 | 192.168.61.1 | OPEN | 0 |

------------------------------------------------------

===============================================================================================================

| GBASE DATA CLUSTER INFORMATION |

===============================================================================================================

| NodeName | IpAddress | DistributionId | gnode | syncserver | DataState |

---------------------------------------------------------------------------------------------------------------

| node1 | 192.168.61.2 | 1 | UNAVAILABLE | | |

---------------------------------------------------------------------------------------------------------------

| node2 | 192.168.61.1 | 1 | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------------

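Delete the feventlog of the replaced node

A feventlog is generated whenever the nodes of the cluster are in inconsistent states, and it is also the criterion for judging whether the nodes are consistent. Once the node has been set to unavailable it is already out of step with the other nodes, so its feventlog has to be removed by hand. replace.py also deletes the feventlog later during the replacement, but we delete it manually first so that the second deletion inside replace.py finishes almost instantly and the replacement is not slowed down by an oversized feventlog. As long as the feventlogs are consistent, the cluster runs normally.

If the feventlog is not deleted beforehand, replace.py still deletes it automatically, which may take a long time. During that window incoming SQL is not rejected; it is put into a waiting state and resumes once the replacement has finished. This is why the node replacement does not interrupt business traffic.

[gbase@8a-1 gcinstall]$ gcadmin rmfeventlog 192.168.61.2

Shut down or disconnect the replaced node

# on node 192.168.61.2

[root@8a-2 ~]# shutdown -h now

Generate a new distribution

Because 192.168.61.2 is going to be replaced, its data has to be moved away first. This is done by generating a new distribution plan and then redistributing the data according to it. The original plan consists of the two nodes 192.168.61.1 and 192.168.61.2; since 192.168.61.2 is being replaced, the new plan must not contain it.

Distribution plan of the original cluster (Distribution ID = 1)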

[gbase@8a-1 gcinstall]$ gcadmin showdistribution node

Distribution ID: 1 | State: new | Total segment num: 2

============================================================================================

| nodes | 192.168.61.2 | 192.168.61.1 |

--------------------------------------------------------------------------------------------

| primary | 1 | 2 |

| segments | | |

--------------------------------------------------------------------------------------------

|duplicate | 2 | 1 |

|segments 1| | |

============================================================================================

Copy the original distribution plan

[gbase@8a-1 gcinstall]$ gcadmin getdistribution 1 distribution_info_1.xml

[gbase@8a-1 gcinstall]$ cat distribution_info_1.xml

Edit the copy into the new distribution plan

[gbase@8a-1 gcinstall]$ vi distribution_info_1.xml

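If the replaced node holds the primary segment, change the IP from the replaced node to the IP of that segment's duplicate segment. Here the duplicate segment is on 192.168.61.1, so the primary segment is changed to 192.168.61.1.

Because the primary segment above has already been changed to 192.168.61.1, the duplicate segment entry here is simply deleted.

If the replaced node holds the duplicate segment, delete those lines outright.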
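The editing rule above can be sketched in a few lines of Python. This is only an illustration of the logic, not a tool that reads or writes the GBase XML file: the dictionary layout and the function name `drop_node` are assumptions made for the example, and the sample data is the Distribution ID = 1 layout shown earlier.

```python
from typing import Dict, Optional

# Hypothetical in-memory form of a distribution plan:
# segment id -> {"primary": ip, "duplicate": ip or None}
Plan = Dict[int, Dict[str, Optional[str]]]


def drop_node(plan: Plan, node: str) -> Plan:
    """Return a new plan that no longer references `node`."""
    new_plan: Plan = {}
    for seg_id, seg in plan.items():
        primary, duplicate = seg["primary"], seg["duplicate"]
        if primary == node:
            # Primary is on the replaced node: promote the duplicate
            # and leave the segment without a duplicate.
            if duplicate is None or duplicate == node:
                raise ValueError(f"segment {seg_id} has no usable duplicate")
            primary, duplicate = duplicate, None
        elif duplicate == node:
            # Duplicate is on the replaced node: just remove it.
            duplicate = None
        new_plan[seg_id] = {"primary": primary, "duplicate": duplicate}
    return new_plan


# Distribution ID = 1 from the output above
original = {
    1: {"primary": "192.168.61.2", "duplicate": "192.168.61.1"},
    2: {"primary": "192.168.61.1", "duplicate": "192.168.61.2"},
}
new_plan = drop_node(original, "192.168.61.2")
for seg_id, seg in new_plan.items():
    print(seg_id, seg["primary"], seg["duplicate"])
```

Both segments end up with 192.168.61.1 as primary and no duplicate, which is the shape the new plan needs before it is loaded with gcadmin.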

[gbase@8a-1 gcinstall]$ vi gcChangeInfo_dis1.xml

Generate the new distribution plan

[gbase@8a-1 gcinstall]$ gcadmin distribution gcChangeInfo_dis1.xml

View the new distribution plan

A distribution with ID = 2 now exists, and it does not contain the replaced node.

[gbase@8a-1 gcinstall]$ gcadmin showdistribution node

Distribution ID: 2 | State: new | Total segment num: 2

====================================================

| nodes | 192.168.61.1 |

----------------------------------------------------

| primary | 1 |

| segments | 2 |

====================================================

Distribution ID: 1 | State: old | Total segment num: 2

============================================================================================

| nodes | 192.168.61.2 | 192.168.61.1 |

--------------------------------------------------------------------------------------------

| primary | 1 | 2 |

| segments | | |

--------------------------------------------------------------------------------------------

|duplicate | 2 | 1 |

|segments 1| | |

============================================================================================

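Redistribute the data with Distribution = 2, so that no data is assigned to the replaced node

Before the redistribution, the data is laid out as follows (everything uses data_distribution_id = 1)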

gbase> select index_name,tbname,data_distribution_id,vc_id from gbase.table_distribution;

+-------------------------------+--------------------+----------------------+---------+

| index_name | tbname | data_distribution_id | vc_id |

+-------------------------------+--------------------+----------------------+---------+

| gclusterdb.rebalancing_status | rebalancing_status | 1 | vc00001 |

| gclusterdb.dual | dual | 1 | vc00001 |

| testdb.t1 | t1 | 1 | vc00001 |

+-------------------------------+--------------------+----------------------+---------+

3 rows in set (Elapsed: 00:00:00.00)

After the redistribution, the data is laid out as follows (everything now uses data_distribution_id = 2)

gbase> initnodedatamap;

Query OK, 0 rows affected, 3 warnings (Elapsed: 00:00:00.11)

gbase> rebalance instance to 2; -- "to 2" selects the distribution plan with Distribution ID = 2

Query OK, 1 row affected (Elapsed: 00:00:00.03)

gbase> select index_name,status,percentage,priority,host,distribution_id from gclusterdb.rebalancing_status;

+------------+-----------+------------+----------+--------------+-----------------+

| index_name | status | percentage | priority | host | distribution_id |

+------------+-----------+------------+----------+--------------+-----------------+

| testdb.t1 | COMPLETED | 100 | 5 | 192.168.61.1 | 2 |

+------------+-----------+------------+----------+--------------+-----------------+

1 row in set (Elapsed: 00:00:00.01)
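Before moving on it is worth confirming that every table has reached COMPLETED. The following is a minimal Python sketch, assuming the query output has been captured as text (for example from a gccli session); the helper names `parse_table` and `rebalance_finished` are made up for this illustration.

```python
from typing import Dict, List


def parse_table(text: str) -> List[Dict[str, str]]:
    """Parse a client-style ASCII table into a list of row dicts."""
    header = None
    rows: List[Dict[str, str]] = []
    for line in text.splitlines():
        line = line.strip()
        # Skip borders (+----+), prompts, blank lines and the row count
        if not (line.startswith("|") and line.endswith("|")):
            continue
        cells = [c.strip() for c in line[1:-1].split("|")]
        if header is None:
            header = cells
        else:
            rows.append(dict(zip(header, cells)))
    return rows


def rebalance_finished(text: str) -> bool:
    """True only if at least one row exists and all rows are COMPLETED."""
    rows = parse_table(text)
    # An empty result is treated as "not finished" so that a failed or
    # truncated capture is never mistaken for success (a design choice
    # of this sketch, not GBase behaviour).
    return bool(rows) and all(r["status"] == "COMPLETED" for r in rows)


sample = """
+------------+-----------+------------+----------+--------------+-----------------+
| index_name | status | percentage | priority | host | distribution_id |
+------------+-----------+------------+----------+--------------+-----------------+
| testdb.t1 | COMPLETED | 100 | 5 | 192.168.61.1 | 2 |
+------------+-----------+------------+----------+--------------+-----------------+
"""
print(rebalance_finished(sample))
```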

gbase> select index_name,tbname,data_distribution_id,vc_id from gbase.table_distribution;

+-------------------------------+--------------------+----------------------+---------+

| index_name | tbname | data_distribution_id | vc_id |

+-------------------------------+--------------------+----------------------+---------+

| gclusterdb.rebalancing_status | rebalancing_status | 2 | vc00001 |

| gclusterdb.dual | dual | 2 | vc00001 |

| test.t | t | 2 | vc00001 |

+-------------------------------+--------------------+----------------------+---------+

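3 rows in set (Elapsed: 00:00:00.00)

Unplug the network cable of the replaced cluster node (originally 192.168.61.2), or simply shut it down (this was already done above, so it can be skipped here), and change the IP of the new machine (192.168.61.3) to 192.168.61.2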

[root@8a-3 ~]# vi /etc/sysconfig/network-scripts/ifcfg-ens33

[root@8a-3 ~]# service network restart

Restarting network (via systemctl): [ OK ]

[root@8a-3 ~]# ip a

2: ens33: mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:c6:d3:8e brd ff:ff:ff:ff:ff:ff

inet 192.168.61.2/24 brd 192.168.61.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::61d5:26ac:7834:3674/64 scope link noprefixroute

[root@8a-3 ~]# cd /opt/gbase/

[root@8a-3 gbase]# ll   # initial state of the new node: the directory is empty

total 0

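When testing on virtual machines, this step is critical. If you forget it, the cluster looks exactly the same before and after the replacement. The old node must be shut down and the new node brought up first; otherwise the replacement finishes and the new machine (192.168.61.3) turns out to have nothing on it.

Configure SSH for the new node

The SSH host key that 192.168.61.1 has stored for 192.168.61.2 is still the old machine's, so it has to be refreshed for the new node. In known_hosts, remove the stale entry for 192.168.61.2, then connect once with ssh so that the new host key is recorded.

# update for the gbase user

[gbase@8a-1 ~]$ su - gbase

[gbase@8a-1 ~]$ vi /home/gbase/.ssh/known_hosts

[gbase@8a-1 ~]$ ssh 192.168.61.2

192.168.61.1 ecdsa-sha2-nistp256 AAAAE2VjZHNhLXNoYTItbmlzdHAyNTYAAAAIbmlzdHAyNTYAAABBBNZZ/4d+7gPdp9IA6JqTZ85mFjbVuPMJkHExKkPmmdMGoRjjAtnBcxw+noK3ozrzmQ19t7ThFZDS4R73tq8r64M=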

192.168.61.2 ecdsa-sha2-nistp256 AAAAE2VjZHNhLXNoYTItbmlzdHAyNTYAAAAIbmlzdHAyNTYAAABBBNU6SIPlrj10pbGzZPzB2Tr0Ssvo4Sj79Z/DjhCqsdSc969xOoj8P9sS+smIC2YW0cPWIU/1YdnV/IPtOiKx7PI=

# update for the root user

[gbase@8a-1 ~]$ su - root

[root@8a-1 ~]# vi /root/.ssh/known_hosts

[root@8a-1 ~]# ssh 192.168.61.2

192.168.61.1 ecdsa-sha2-nistp256 AAAAE2VjZHNhLXNoYTItbmlzdHAyNTYAAAAIbmlzdHAyNTYAAABBBNZZ/4d+7gPdp9IA6JqTZ85mFjbVuPMJkHExKkPmmdMGoRjjAtnBcxw+noK3ozrzmQ19t7ThFZDS4R73tq8r64M=

192.168.61.2 ecdsa-sha2-nistp256 AAAAE2VjZHNhLXNoYTItbmlzdHAyNTYAAAAIbmlzdHAyNTYAAABBBNU6SIPlrj10pbGzZPzB2Tr0Ssvo4Sj79Z/DjhCqsdSc969xOoj8P9sS+smIC2YW0cPWIU/1YdnV/IPtOiKx7PI=
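Editing known_hosts by hand works, and OpenSSH also provides `ssh-keygen -R 192.168.61.2` for the same purpose. To show what the cleanup amounts to, here is a small Python sketch that filters the file content. The function name `drop_host_entries` is hypothetical, and it only handles plain entries like the ones above: hashed host names, `[host]:port` entries and lines starting with a marker such as `@cert-authority` are left untouched.

```python
def drop_host_entries(known_hosts: str, host: str) -> str:
    """Return known_hosts content without the plain entries for `host`."""
    kept = []
    for line in known_hosts.splitlines():
        fields = line.split()
        # The first field of a plain entry is a comma-separated host list
        names = fields[0].split(",") if fields else []
        if host in names:
            continue  # stale entry for the replaced node
        kept.append(line)
    return "\n".join(kept) + ("\n" if kept else "")


# Placeholder keys, not real host keys
text = (
    "192.168.61.1 ecdsa-sha2-nistp256 KEY_OF_NODE_1\n"
    "192.168.61.2 ecdsa-sha2-nistp256 OLD_KEY_OF_NODE_2\n"
)
cleaned = drop_host_entries(text, "192.168.61.2")
print(cleaned, end="")
```

After the stale line is gone, the next `ssh 192.168.61.2` prompts to accept the new machine's key and appends it to the file.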

Run the node replacement

[root@8a-1 ~]# su - gbase

[gbase@8a-1 ~]$ cd /opt/gcinstall/

[gbase@8a-1 gcinstall]$ ./replace.py --host=192.168.61.2 --type=data --dbaUser=gbase --dbaUserPwd=gbase --generalDBUser=gbase --generalDBPwd=gbase20110531 --overwrite

Here --host is the IP of the node being replaced.

Check the distribution again (the distribution plan after the replacement is Distribution = 3)

[gbase@8a-1 gcinstall]$ gcadmin showdistribution

Distribution ID: 3 | State: new | Total segment num: 2

Primary Segment Node IP Segment ID Duplicate Segment node IP

========================================================================================================================

| 192.168.61.2 | 1 | 192.168.61.1 |

------------------------------------------------------------------------------------------------------------------------

| 192.168.61.1 | 2 | 192.168.61.2 |

========================================================================================================================

Distribution ID: 2 | State: old | Total segment num: 2

Primary Segment Node IP Segment ID Duplicate Segment node IP

========================================================================================================================

| 192.168.61.1 | 1 | |

------------------------------------------------------------------------------------------------------------------------

| 192.168.61.1 | 2 | |

========================================================================================================================

[gbase@8a-1 gcinstall]$ gcadmin

CLUSTER STATE: ACTIVE

VIRTUAL CLUSTER MODE: NORMAL

====================================

| GBASE GCWARE CLUSTER INFORMATION |

====================================

| NodeName | IpAddress | gcware |

------------------------------------

| gcware1 | 192.168.61.1 | OPEN |

------------------------------------

======================================================

| GBASE COORDINATOR CLUSTER INFORMATION |

======================================================

| NodeName | IpAddress | gcluster | DataState |

------------------------------------------------------

| coordinator1 | 192.168.61.1 | OPEN | 0 |

------------------------------------------------------

=========================================================================================================

| GBASE DATA CLUSTER INFORMATION |

=========================================================================================================

| NodeName | IpAddress | DistributionId | gnode | syncserver | DataState |

---------------------------------------------------------------------------------------------------------

| node1 | 192.168.61.2 | 3 | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

| node2 | 192.168.61.1 | 2,3 | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

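Note: once replace.py has finished, GBase has been installed on the new node. You can check this on the new node: /opt/gbase was empty before the installation and now contains the installed files.

# the new 192.168.61.2 node

# before running replace.py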

[root@8a-3 ~]# cd /opt/gbase/

[root@8a-3 gbase]# ll

total 0

# after running replace.py

[root@8a-3 gbase]# ll

total 0

drwxrwxr-x 2 gbase gbase 6 Dec 1 23:34 192.168.61.2

Redistribute the data again so that it spreads onto the new node

[gbase@8a-1 gcinstall]$ gccli -uroot -p

Enter password:

GBase client 9.5.3.27.14_patch.1b41b5c1. Copyright (c) 2004-2022, GBase. All Rights Reserved.

gbase> rebalance instance to 3;

Query OK, 1 row affected (Elapsed: 00:00:00.02)

gbase> select * from gclusterdb.rebalancing_status;

+------------+---------+------------+----------+----------------------------+----------+---------+------------+----------+--------------+-----------------+

| index_name | db_name | table_name | tmptable | start_time | end_time | status | percentage | priority | host | distribution_id |

+------------+---------+------------+----------+----------------------------+----------+---------+------------+----------+--------------+-----------------+

| test.t | test | t | | 2022-11-29 00:08:30.294000 | NULL | RUNNING | 0 | 5 | 192.168.61.1 | 3 |

+------------+---------+------------+----------+----------------------------+----------+---------+------------+----------+--------------+-----------------+

1 row in set (Elapsed: 00:00:00.00)删除旧的分布方案

[gbase@8a-1 gcinstall]$ gcadmin rmdistribution 2问题记录

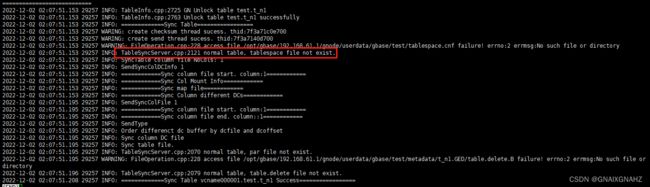

rebalance一直处于running状态 查看日志,发现表空间不存在,卧槽!官方文档少写一步吧,没有initnodedatamap(好像并不是 无法initnodedatamap 我现在怀疑无VC的不支持节点替换!明天再试试有VC的节点替换吧)

(以下解决方法是错的 不要看了! 但是我不想删 也是一种解决问题的思路)

删除旧的分布方案时无法删除,提示还在使用

[gbase@8a-1 gcinstall]$ gcadmin rmdistribution 2

cluster distribution ID [2]

it will be removed now

please ensure this is ok, input [Y,y] or [N,n]: y

select count(*) from gbase.nodedatamap where data_distribution_id=2 result is not 0

refreshnodedatamap drop 2 failed, gcluster command error: Can not drop nodedatamap 2. Some table are using it.

gcadmin remove distribution: check whether distribution [2] is using failed

gcadmin remove distribution [2] failed

gbase> select index_name,tbname,data_distribution_id,vc_id from gbase.table_distribution;

+-------------------------------+--------------------+----------------------+---------+

| index_name | tbname | data_distribution_id | vc_id |

+-------------------------------+--------------------+----------------------+---------+

| gclusterdb.rebalancing_status | rebalancing_status | 3 | vc00001 |

| test.t | t | 2 | vc00001 |

| gclusterdb.dual | dual | 3 | vc00001 |

+-------------------------------+--------------------+----------------------+---------+

3 rows in set (Elapsed: 00:00:00.00)

gbase> rebalance table test.t to 3;

ERROR 1707 (HY000): gcluster command error: target table has been rebalancing.

Checking the rebalance status shows that it is stuck in the RUNNING state

gbase> select index_name,status,percentage,priority,host,distribution_id from gclusterdb.rebalancing_status;

+------------+---------+------------+----------+--------------+-----------------+

| index_name | status | percentage | priority | host | distribution_id |

+------------+---------+------------+----------+--------------+-----------------+

| test.t | RUNNING | 0 | 1 | 192.168.61.1 | 3 |

+------------+---------+------------+----------+--------------+-----------------+

To find out whether something is locked, check the lock information; sure enough, there are locks

[gbase@8a-1 gcinstall]$ gcadmin showlock

+=====================================================================================+

| GCLUSTER LOCK |

+=====================================================================================+

+-----------------------------+------------+---------------+--------------+------+----+

| Lock name | owner | content | create time |locked|type|

+-----------------------------+------------+---------------+--------------+------+----+

| gc-event-lock |192.168.61.1| global master |20221129002719| TRUE | E |

+-----------------------------+------------+---------------+--------------+------+----+

| vc00001.hashmap_lock |192.168.61.1|3388(LWP:41885)|20221129004555| TRUE | S |

+-----------------------------+------------+---------------+--------------+------+----+

| vc00001.test.db_lock |192.168.61.1|3388(LWP:41885)|20221129004555| TRUE | S |

+-----------------------------+------------+---------------+--------------+------+----+

| vc00001.test.t.meta_lock |192.168.61.1|3388(LWP:41885)|20221129004555| TRUE | S |

+-----------------------------+------------+---------------+--------------+------+----+

|vc00001.test.t.rebalance_lock|192.168.61.1|3388(LWP:41885)|20221129004555| TRUE | E |

+-----------------------------+------------+---------------+--------------+------+----+

|vc00001.test.table_space_lock|192.168.61.1|3388(LWP:41885)|20221129004555| TRUE | S |

+-----------------------------+------------+---------------+--------------+------+----+

Total : 6

Identify the process holding the locks: its ID is 3388

gbase> show processlist;

+------+-----------------+--------------------+------+---------+------+-----------------------------+-------------------------------------------------------------------+

| Id | User | Host | db | Command | Time | State | Info |

+------+-----------------+--------------------+------+---------+------+-----------------------------+-------------------------------------------------------------------+

| 1 | event_scheduler | localhost | NULL | Daemon | 1780 | Waiting for next activation | NULL |

| 3388 | gbase | 192.168.61.1:36000 | NULL | Query | 667 | Move table's slice | REBALANCE /*+ synchronous,vcid */ TABLE "vc00001"."test"."t" TO 3 |

| 3391 | root | localhost | NULL | Query | 0 | NULL | show processlist |

+------+-----------------+--------------------+------+---------+------+-----------------------------+-------------------------------------------------------------------+

3 rows in set (Elapsed: 00:00:00.00)

Check the locks held by that process

[gbase@8a-1 gcinstall]$ gcadmin showlock | grep 3388

| vc00001.hashmap_lock |192.168.61.1|3388(LWP:41885)|20221129004555| TRUE | S |

| vc00001.test.db_lock |192.168.61.1|3388(LWP:41885)|20221129004555| TRUE | S |

| vc00001.test.t.meta_lock |192.168.61.1|3388(LWP:41885)|20221129004555| TRUE | S |

|vc00001.test.t.rebalance_lock|192.168.61.1|3388(LWP:41885)|20221129004555| TRUE | E |

|vc00001.test.table_space_lock|192.168.61.1|3388(LWP:41885)|20221129004555| TRUE | S |

What remains is to work out which process is holding the locks without releasing them, and then kill it.
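When there are many locks, grouping them by the ID in the content column makes the holder easier to spot than repeated grep calls. Below is a minimal Python sketch, assuming the `gcadmin showlock` output has been saved as text in the layout shown above; the function name `locks_by_session` is made up for the example.

```python
import re
from collections import defaultdict
from typing import Dict, List

# "content" column of a session-held lock, e.g. "3388(LWP:41885)"
SESSION = re.compile(r"^(\d+)\(LWP:\d+\)$")


def locks_by_session(showlock_output: str) -> Dict[int, List[str]]:
    """Map each session ID to the names of the locks it holds."""
    held: Dict[int, List[str]] = defaultdict(list)
    for line in showlock_output.splitlines():
        line = line.strip()
        if not (line.startswith("|") and line.endswith("|")):
            continue  # borders, "Total" line, blank lines
        cells = [c.strip() for c in line[1:-1].split("|")]
        if len(cells) != 6:
            continue  # title row such as "| GCLUSTER LOCK |"
        name, content = cells[0], cells[2]
        match = SESSION.match(content)
        if match:  # skips the header row and "global master"
            held[int(match.group(1))].append(name)
    return dict(held)


sample = """
| Lock name | owner | content | create time |locked|type|
| gc-event-lock |192.168.61.1| global master |20221129002719| TRUE | E |
| vc00001.hashmap_lock |192.168.61.1|3388(LWP:41885)|20221129004555| TRUE | S |
|vc00001.test.t.rebalance_lock|192.168.61.1|3388(LWP:41885)|20221129004555| TRUE | E |
"""
for session_id, names in locks_by_session(sample).items():
    print(session_id, names)
```

The session ID found this way is the same Id that appears in `show processlist`, which is how 3388 was matched to the REBALANCE statement above.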

Sometimes the datamap has to be dropped first

refreshnodedatamap drop 2

Reference: "GBase 8a: refreshnodedatamap drop fails after cluster expansion with 'Can not drop nodedatamap, Some table are using it.'" (老紫竹的家)