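[Capital Inflow and Outflow Forecasting] Baseline: periodic factors and LSTM

I tried two approaches: periodic factors and an LSTM.

The periodic-factor method did better, scoring 135, while the LSTM scored only 93 (probably because I did no hyperparameter tuning, and because this was my first time using one...).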

import pandas as pd

import numpy as np

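Periodic factors

1. Take the data from March 2014 onward (the history used to forecast September).

2. Average by weekday and divide by the overall mean to get the weekday factor.

3. Count how often each day of the month falls on each weekday, multiply those counts by the weekday factors, and divide by the number of times that day occurs; this gives the periodic factor for each day of the month.

4. Average the data by day of the month, then divide by that day's periodic factor to get the base.

5. Find the weekday of each day in September, look up its weekday factor, and multiply it by the base to get the forecast.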
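The five steps can also be written as formulas. The symbols are notation introduced here for illustration and do not appear in the code: $x_t$ is the daily total on date $t$, $w(t)$ is its weekday, $d(t)$ is its day of the month, and $n_{d,w}$ is the number of dates in the history whose day of the month is $d$ and whose weekday is $w$.

```latex
\begin{aligned}
f_w &= \frac{\operatorname{mean}\{x_t : w(t) = w\}}{\operatorname{mean}\{x_t\}}
    && \text{(weekday factor)} \\
r_d &= \frac{\sum_w n_{d,w}\, f_w}{\sum_w n_{d,w}}
    && \text{(periodic factor of day } d \text{ of the month)} \\
b_d &= \frac{\operatorname{mean}\{x_t : d(t) = d\}}{r_d}
    && \text{(base)} \\
\hat{x}_t &= b_{d(t)}\, f_{w(t)}
    && \text{(forecast for a September date } t\text{)}
\end{aligned}
```

The same formulas are applied separately to total_purchase_amt and total_redeem_amt.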

cols = ['total_purchase_amt','total_redeem_amt']

df = pd.read_csv('user_balance_table.csv')

Take the data from March 2014 onward

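def creat_data_1(data):
    # Sum purchases and redemptions over all users for each date
    data = data[['report_date','total_purchase_amt','total_redeem_amt']].groupby('report_date').sum()
    data.reset_index(inplace=True)
    data['date'] = pd.to_datetime(data['report_date'],format='%Y%m%d')
    # Keep 2014-03-01 onward; copy so later column assignments do not act on a slice
    data_1 = data[data['date'] >= pd.to_datetime('2014-3-1')].copy()
    return data_1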

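def add_date(data_1):
    # Calendar features derived from the date column
    data_1['month'] = data_1['date'].dt.month
    data_1['day'] = data_1['date'].dt.day
    # Series.dt.week was removed in pandas 2.0; isocalendar().week gives the ISO week number
    data_1['week'] = data_1['date'].dt.isocalendar().week
    data_1['weekday'] = data_1['date'].dt.weekday
    return data_1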

Average by weekday and divide by the overall mean to get the weekday factor

def weekday_yinzi(data_1):
    # Mean purchase and redemption for each weekday (0 = Monday)
    mean_weekday = data_1[['weekday']+cols].groupby('weekday').mean()
    mean_weekday.columns = ['purchase_mean_weekday','redeem_mean_weekday']
    # Divide by the overall mean so that a factor of 1.0 means an average day
    mean_weekday['purchase_mean_weekday'] /= np.mean(data_1['total_purchase_amt'])
    mean_weekday['redeem_mean_weekday'] /= np.mean(data_1['total_redeem_amt'])
    data_1 = pd.merge(data_1,mean_weekday, on='weekday', how='left')
    return data_1,mean_weekday
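To make the weekday factor concrete, here is a minimal sketch in plain Python that does the same arithmetic on made-up numbers. The helper name weekday_factors and the toy series are assumptions for illustration; they are not part of the original notebook.

```python
from collections import defaultdict
from datetime import date, timedelta

def weekday_factors(series):
    """Map weekday (0 = Monday) to mean-on-that-weekday / overall mean."""
    by_weekday = defaultdict(list)
    for day, value in series:
        by_weekday[day.weekday()].append(value)
    overall_mean = sum(v for _, v in series) / len(series)
    return {wd: (sum(vals) / len(vals)) / overall_mean
            for wd, vals in sorted(by_weekday.items())}

# Made-up data: two full weeks starting on Monday 2014-03-03,
# with 100 on working days and 50 on Saturday and Sunday.
start = date(2014, 3, 3)
toy = [(start + timedelta(days=i), 50.0 if (i % 7) >= 5 else 100.0)
       for i in range(14)]
factors = weekday_factors(toy)
```

In this toy series the working days come out above 1 and the weekend below 1, which is the pattern the factor is meant to capture.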

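Count how often each day of the month falls on each weekday, multiply the counts by the weekday factors, and divide by how often that day occurs to get the periodic factor for each day of the month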

def day_rat(data_1):
    # Number of times each day of the month fell on each weekday
    weekday_count = data_1[['day','weekday','date']].groupby(['day','weekday'],as_index=False).count()
    # Relies on the module-level mean_weekday returned by weekday_yinzi
    weekday_count = pd.merge(weekday_count, mean_weekday, on='weekday')
    weekday_count['purchase_mean_weekday'] *= weekday_count['date']
    weekday_count['redeem_mean_weekday'] *= weekday_count['date']
    # Count-weighted average of the weekday factors for each day of the month
    day_rate = weekday_count.drop('weekday',axis=1).groupby('day',as_index=False).sum()
    day_rate['purchase_mean_weekday'] /= day_rate['date']
    day_rate['redeem_mean_weekday'] /= day_rate['date']
    return day_rate
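The periodic factor of a day of the month is a count-weighted average of weekday factors. A small sketch with made-up numbers, where day_of_month_factor is a hypothetical helper and not a function from the notebook:

```python
def day_of_month_factor(counts, weekday_factor):
    """Count-weighted average of weekday factors for one day of the month.

    counts maps weekday -> how many times this day of the month fell on it.
    """
    total = sum(counts.values())
    weighted = sum(n * weekday_factor[wd] for wd, n in counts.items())
    return weighted / total

# Made-up numbers: in the history the 1st fell twice on a Monday (0)
# and once on a Saturday (5).
toy_factor = {0: 1.2, 5: 0.6}
factor_for_1st = day_of_month_factor({0: 2, 5: 1}, toy_factor)
```

Here the result is (2 * 1.2 + 1 * 0.6) / 3, so a day that landed mostly on strong weekdays gets a factor pulled toward those weekdays.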

Average the data by day of the month, then divide by that day's periodic factor to get the base

def day_base():
    # Relies on the module-level data_1 and day_rate assigned before this is called
    day_mean = data_1[['day'] + ['total_purchase_amt','total_redeem_amt']].groupby('day',as_index=False).mean()
    day_pre = pd.merge(day_mean, day_rate, on='day', how='left')
    # Remove the weekday effect from each day-of-month mean to obtain the base
    day_pre['total_purchase_amt'] /= day_pre['purchase_mean_weekday']
    day_pre['total_redeem_amt'] /= day_pre['redeem_mean_weekday']
    return day_pre

data_1 = creat_data_1(df)

data_1 = add_date(data_1)

data_1,mean_weekday = weekday_yinzi(data_1)

day_rate = day_rat(data_1)

day_pre = day_base()

Find the weekday of each day in September, look up its weekday factor, and multiply it by the base to get the forecast

# Row 30 holds day 31, which September does not have
pre_9 = day_pre[['total_purchase_amt','total_redeem_amt']].drop(index = 30)
pre_9['date'] = pd.date_range('2014-9-1','2014-9-30')
pre_9['weekday'] = pre_9['date'].dt.weekday
pre_9 = pre_9.merge(mean_weekday,on='weekday')
pre_9['total_purchase_amt'] *= pre_9['purchase_mean_weekday']
pre_9['total_redeem_amt'] *= pre_9['redeem_mean_weekday']
# sort_values returns a new frame, so the result has to be assigned back
pre_9 = pre_9.sort_values(by='date')
pre_9.index = pre_9['date'].dt.strftime('%Y%m%d')
pre_9.iloc[:,:2].to_csv('result_base.csv',header=False)
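The last step, base times weekday factor, can be sketched without pandas. The function forecast_month and all numbers below are made up for illustration; only the calendar (1 September 2014 was a Monday) is real.

```python
from datetime import date

def forecast_month(base_by_day, weekday_factor, year, month, n_days):
    """Return {date: base for that day of month * factor for its weekday}."""
    out = {}
    for d in range(1, n_days + 1):
        day = date(year, month, d)
        out[day] = base_by_day[d] * weekday_factor[day.weekday()]
    return out

# Made-up inputs: a flat base of 100 and weekend factors below 1.
toy_base = {d: 100.0 for d in range(1, 31)}
toy_factor = {0: 1.1, 1: 1.1, 2: 1.1, 3: 1.1, 4: 1.1, 5: 0.75, 6: 0.75}
september = forecast_month(toy_base, toy_factor, 2014, 9, 30)
```

With a flat base the forecast simply repeats the weekly pattern; in the real data the base varies by day of the month, so the two effects combine.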

LSTM approach:

Use the previous 50 days to predict the next day.

import pandas as pd

from sklearn.preprocessing import MinMaxScaler

import numpy as np

data = pd.read_csv('user_balance_table.csv')

data_1 = data.loc[:,['user_id','report_date','total_purchase_amt','total_redeem_amt']]

data_1 = data_1.groupby(by='report_date').sum()

data_1.reset_index(inplace=True)

data_1['date'] = pd.to_datetime(data_1['report_date'],format='%Y%m%d')

data = data_1[data_1['date'] >pd.to_datetime('2014-4-1')]

mm = MinMaxScaler()

data = mm.fit_transform(data.iloc[:,2:-1])
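With its default feature range of 0 to 1, MinMaxScaler maps each column to (x - min) / (max - min), and inverse_transform undoes that mapping. The sketch below reproduces the arithmetic for a single column in plain Python; the helper names and the numbers are assumptions for illustration, not scikit-learn's implementation.

```python
def minmax_fit(values):
    """Return (min, max) of the training values."""
    return min(values), max(values)

def minmax_transform(values, lo, hi):
    """Scale values so that lo maps to 0 and hi maps to 1."""
    return [(v - lo) / (hi - lo) for v in values]

def minmax_inverse(scaled, lo, hi):
    """Map scaled values back to the original units."""
    return [s * (hi - lo) + lo for s in scaled]

toy = [200.0, 300.0, 500.0, 400.0]   # made-up daily totals
lo, hi = minmax_fit(toy)
scaled = minmax_transform(toy, lo, hi)
restored = minmax_inverse(scaled, lo, hi)
```

The inverse step matters at the end of the pipeline: the model predicts in the scaled range, so forecasts have to be mapped back before they are written out.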

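def create_data(amt):
    mid_time = 50  # number of past days fed to the model
    d = []
    for i in range(len(amt)-mid_time):
        # Window of mid_time inputs followed by the one value to predict
        x = amt[i:i+mid_time+1]
        d.append((x.tolist()))
    amt_np = pd.DataFrame(d).values
    # Infer the sample count instead of hard-coding 102
    amt_np = amt_np.reshape((-1,mid_time+1,1))
    X_train ,y_train = amt_np[:,:mid_time],amt_np[:,-1]
    return X_train,y_train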

amt = data[:,0]

X_train,y_train = create_data(amt)
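The windowing idea is easier to see on a short series. This is a minimal sketch in plain Python; make_windows is a hypothetical helper and the series is made up.

```python
def make_windows(series, lookback):
    """Split a sequence into (inputs, target) pairs with a sliding window."""
    inputs, targets = [], []
    for i in range(len(series) - lookback):
        inputs.append(series[i:i + lookback])
        targets.append(series[i + lookback])
    return inputs, targets

toy = list(range(10))            # made-up series 0..9
X, y = make_windows(toy, lookback=3)

# A series of 152 values with a 50-step lookback yields 152 - 50 = 102 pairs,
# which matches the 91 + 11 samples reported in the training log.
n_pairs = len(make_windows([0.0] * 152, lookback=50)[0])
```

Each pair uses lookback consecutive values as input and the value right after them as the target, so a series of length n gives n - lookback pairs.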

from keras.models import Sequential

from keras.layers import LSTM, TimeDistributed, Dense, Activation

from keras.optimizers import Adam

Using TensorFlow backend.

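def create_model():
    model = Sequential()
    # Two stacked LSTM layers; the first returns the full sequence for the second to consume
    model.add(LSTM(units=256,input_shape=(None,1),return_sequences=True))
    model.add(LSTM(units=256))
    # Single linear output: the scaled amount for the next day
    model.add(Dense(units=1))
    model.add(Activation('linear'))
    model.compile(loss='mse',optimizer='adam')
    return model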

model = create_model()

model.fit(X_train, y_train, batch_size=20,epochs=100,validation_split=0.1)
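Keras takes the validation data from the last samples of the arrays passed to fit, before any shuffling. The sketch below shows how 102 samples end up as 91 for training and 11 for validation, assuming the split point is computed as int(n * (1 - validation_split)); split_counts is a hypothetical helper, not a Keras function.

```python
def split_counts(n_samples, validation_split):
    """Sizes of the training and validation parts for a tail split."""
    n_train = int(n_samples * (1.0 - validation_split))
    return n_train, n_samples - n_train

n_train, n_val = split_counts(102, 0.1)
```

Because the windows are in chronological order, the validation part is the most recent stretch of the series, which is a reasonable hold-out for a forecasting task.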

Train on 91 samples, validate on 11 samples

Epoch 1/100

91/91 [==============================] - 3s 36ms/step - loss: 0.0927 - val_loss: 0.0359

Epoch 2/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0535 - val_loss: 0.0361

Epoch 3/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0550 - val_loss: 0.0367

Epoch 4/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0542 - val_loss: 0.0373

Epoch 5/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0514 - val_loss: 0.0364

Epoch 6/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0582 - val_loss: 0.0354

Epoch 7/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0557 - val_loss: 0.0386

Epoch 8/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0539 - val_loss: 0.0353

Epoch 9/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0510 - val_loss: 0.0362

Epoch 10/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0520 - val_loss: 0.0359

Epoch 11/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0503 - val_loss: 0.0342

Epoch 12/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0505 - val_loss: 0.0353

Epoch 13/100

91/91 [==============================] - 1s 14ms/step - loss: 0.0508 - val_loss: 0.0336

Epoch 14/100

91/91 [==============================] - 1s 14ms/step - loss: 0.0530 - val_loss: 0.0338

Epoch 15/100

91/91 [==============================] - 1s 14ms/step - loss: 0.0495 - val_loss: 0.0376

Epoch 16/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0510 - val_loss: 0.0346

Epoch 17/100

91/91 [==============================] - 1s 15ms/step - loss: 0.0490 - val_loss: 0.0332

Epoch 18/100

91/91 [==============================] - 1s 14ms/step - loss: 0.0496 - val_loss: 0.0343

Epoch 19/100

91/91 [==============================] - 1s 14ms/step - loss: 0.0519 - val_loss: 0.0345

Epoch 20/100

91/91 [==============================] - 1s 14ms/step - loss: 0.0524 - val_loss: 0.0328

Epoch 21/100

91/91 [==============================] - 1s 14ms/step - loss: 0.0511 - val_loss: 0.0374

Epoch 22/100

91/91 [==============================] - 1s 14ms/step - loss: 0.0493 - val_loss: 0.0323

Epoch 23/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0489 - val_loss: 0.0322

Epoch 24/100

91/91 [==============================] - 1s 14ms/step - loss: 0.0478 - val_loss: 0.0348

Epoch 96/100

91/91 [==============================] - 1s 14ms/step - loss: 0.0238 - val_loss: 0.0164

Epoch 97/100

91/91 [==============================] - 1s 14ms/step - loss: 0.0233 - val_loss: 0.0218

Epoch 98/100

91/91 [==============================] - 1s 14ms/step - loss: 0.0245 - val_loss: 0.0183

Epoch 99/100

91/91 [==============================] - 1s 14ms/step - loss: 0.0239 - val_loss: 0.0173

Epoch 100/100

91/91 [==============================] - 1s 14ms/step - loss: 0.0217 - val_loss: 0.0172

import matplotlib.pyplot as plt

%matplotlib inline

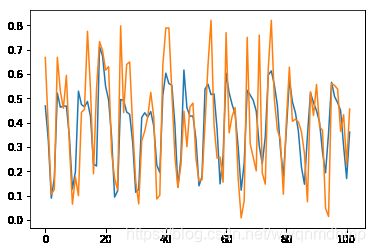

plt.plot(model.predict(X_train))

plt.plot(y_train)

[]

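# Start from the 50 most recent observations; X_train[-1:] stops one day short of the end of the series
X_test = amt[-50:].reshape(1,-1,1)
for i in range (30):
    # Predict one day ahead, append it, and keep the latest 50 values as the next input
    pre = model.predict(X_test)
    X_test = np.append(X_test,pre).reshape(1,-1,1)[:,-50:]
# The last 30 values of the window are the 30 forecast days
pre = X_test[:,-30:]
result = pd.DataFrame(pre.reshape(-1,1))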
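Forecasting 30 days with a one-step model means feeding each prediction back in as the newest input. A minimal sketch of that loop, where mean_of_window is a hypothetical stand-in for model.predict and the history is made up:

```python
def recursive_forecast(history, predict_next, lookback, horizon):
    """Forecast `horizon` steps by feeding each prediction back as input."""
    window = list(history[-lookback:])
    forecasts = []
    for _ in range(horizon):
        nxt = predict_next(window)
        forecasts.append(nxt)
        window = window[1:] + [nxt]   # drop the oldest value, append the newest
    return forecasts

def mean_of_window(window):
    # Hypothetical stand-in for the trained model's one-step prediction
    return sum(window) / len(window)

toy_history = [1.0, 2.0, 3.0, 4.0]
preds = recursive_forecast(toy_history, mean_of_window, lookback=2, horizon=3)
```

Since later steps are driven by earlier predictions rather than observations, errors can accumulate over the horizon, which may be part of why the LSTM scored lower here.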

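# Second column of the scaled data: total_redeem_amt
amt = data[:,1]

X_train,y_train = create_data(amt)

# The model is not re-created here, so training on redemptions continues from the weights fitted on purchases
model.fit(X_train, y_train, batch_size=20,epochs=100,validation_split=0.1)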

Train on 91 samples, validate on 11 samples

Epoch 1/100

91/91 [==============================] - 1s 15ms/step - loss: 0.0420 - val_loss: 0.0217

Epoch 2/100

91/91 [==============================] - 1s 14ms/step - loss: 0.0333 - val_loss: 0.0275

Epoch 3/100

91/91 [==============================] - 1s 14ms/step - loss: 0.0326 - val_loss: 0.0266

Epoch 4/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0300 - val_loss: 0.0250

Epoch 5/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0294 - val_loss: 0.0213

Epoch 6/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0256 - val_loss: 0.0267

Epoch 7/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0312 - val_loss: 0.0229

Epoch 8/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0271 - val_loss: 0.0246

Epoch 9/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0275 - val_loss: 0.0232

Epoch 10/100

91/91 [==============================] - 1s 13ms/step - loss: 0.0283 - val_loss: 0.0187

Epoch 90/100

91/91 [==============================] - 1s 15ms/step - loss: 0.0130 - val_loss: 0.0339

Epoch 91/100

91/91 [==============================] - 1s 14ms/step - loss: 0.0145 - val_loss: 0.0317

Epoch 92/100

91/91 [==============================] - 2s 17ms/step - loss: 0.0112 - val_loss: 0.0383

Epoch 93/100

91/91 [==============================] - 2s 17ms/step - loss: 0.0120 - val_loss: 0.0357

Epoch 94/100

91/91 [==============================] - 1s 16ms/step - loss: 0.0119 - val_loss: 0.0333

Epoch 95/100

91/91 [==============================] - 1s 15ms/step - loss: 0.0129 - val_loss: 0.0350

Epoch 96/100

91/91 [==============================] - 1s 16ms/step - loss: 0.0112 - val_loss: 0.0350

Epoch 97/100

91/91 [==============================] - 1s 15ms/step - loss: 0.0120 - val_loss: 0.0323

Epoch 98/100

91/91 [==============================] - 1s 15ms/step - loss: 0.0111 - val_loss: 0.0334

Epoch 99/100

91/91 [==============================] - 1s 16ms/step - loss: 0.0133 - val_loss: 0.0308

Epoch 100/100

91/91 [==============================] - 1s 14ms/step - loss: 0.0108 - val_loss: 0.0327

import matplotlib.pyplot as plt

%matplotlib inline

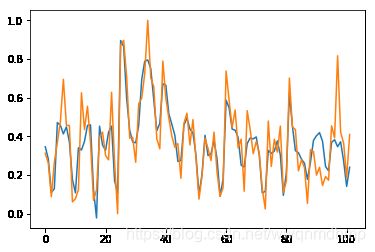

plt.plot(model.predict(X_train))

plt.plot(y_train)

[]

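# Start from the 50 most recent observations; X_train[-1:] stops one day short of the end of the series
X_test = amt[-50:].reshape(1,-1,1)
for i in range (30):
    pre = model.predict(X_test)
    X_test = np.append(X_test,pre).reshape(1,-1,1)[:,-50:]
pre = X_test[:,-30:]
result['1'] = pre.reshape(-1)
# Undo the min-max scaling; pass plain values because the column labels mix int and str
pd.DataFrame(mm.inverse_transform(result.values)).to_csv('result.csv')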