Using hyperopt

Contents

- I. Installing the package
- II. Using Bayesian optimization
  - 2.1 fmin
    - 2.1.1 fn
    - 2.1.2 space
    - 2.1.3 Capturing information with Trials
    - 2.1.4 SVM tuning example
    - 2.1.5 Visualization

I. Installing the package

```shell
pip install hyperopt
```

II. Using Bayesian optimization

```python
from hyperopt import hp, STATUS_OK, Trials, fmin, tpe
```

2.1 fmin

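fmin searches for the minimum of a function. fn is the objective to minimize (a single-parameter objective can be written as lambda x: ...), space specifies the range of parameter values to search, algo specifies the search algorithm (tpe.suggest is the Tree-structured Parzen Estimator), and max_evals is the maximum number of evaluations.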

2.1.1 fn

fn specifies the objective function and how it receives its parameters.

Start with a simple example: find the x in (0, 1) that minimizes f(x) = x. The result is x ≈ 0.000108, which approaches 0.

```python
min_best = fmin(
    fn=lambda x: x,
    space=hp.uniform('x', 0, 1),
    algo=tpe.suggest,
    max_evals=100)
print(min_best)
```

```
{'x': 0.00010838488484167519}
```

fn can equally be any other function, for example f(x) = x**2 - 2*x:

```python
min_best = fmin(
    fn=lambda x: x**2 - 2*x,
    space=hp.uniform('x', 0, 1),
    algo=tpe.suggest,
    max_evals=100)
print(min_best)
```

```
{'x': 0.9991971325808372}
```
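The result close to 1 can be checked by hand: the derivative of the objective is negative everywhere on the search interval, so the function keeps decreasing and its minimum sits at the right end of the interval.

$$
f(x) = x^2 - 2x, \qquad f'(x) = 2x - 2 < 0 \ \text{ for } x \in (0, 1) \;\Rightarrow\; \min_{x \to 1} f(x) = f(1) = -1
$$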

For a function of several variables, define fn as a regular function that receives the parameters as a dictionary:

```python
def f(param):
    result = (param['x'] + param['y'])**2 - 2*param['x']
    return result

space = {
    'x': hp.choice('x', range(1, 20)),
    'y': hp.uniform('y', 1, 5)}

min_best = fmin(
    fn=f,
    space=space,
    algo=tpe.suggest,
    max_evals=100)
print(min_best)
```

```
{'x': 0, 'y': 1.2524785791821587}
```
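fmin reports hp.choice parameters by index, so 'x': 0 here means x = range(1, 20)[0] = 1; with y ≈ 1.25 the loss is about 3.07. A short bound shows where the true minimum lies on this search space (x an integer from 1 to 19, y in [1, 5]), which is consistent with the best loss ≈ 2.12 at y ≈ 1.03 reported in 2.1.3:

$$
(x + y)^2 - 2x \;\ge\; (x + 1)^2 - 2x \;=\; x^2 + 1 \;\ge\; 2, \qquad \text{with equality at } x = 1,\ y = 1
$$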

2.1.2 space

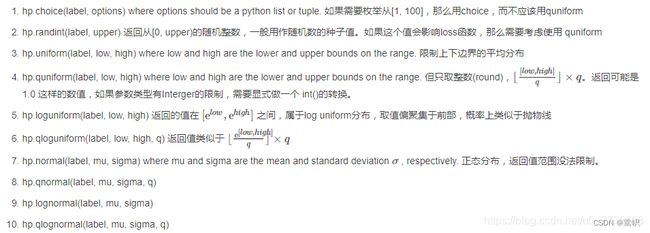

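space can take a dictionary, where each entry uses an hp expression to define the range its value is drawn from. For example, hp.uniform('x', 0, 2) searches continuous values in (0, 2), while hp.choice picks one element from a list of options, e.g. the integer points 0 and 1 when the options are range(0, 2). The figure above explains the available hp expressions in detail.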

```python
space = {
    'x': hp.choice('x', range(1, 20)),
    'y': hp.choice('y', range(1, 5)),
    'criterion': hp.choice('criterion', ["gini", "entropy"]),
}
```

2.1.3 Capturing information with Trials

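Trials helps us understand what is going on inside the hyperopt black box.

A Trials object stores information about every time step (every evaluation). After fmin finishes we can print these records and see, for each step, which parameter values were tried and what the objective function returned.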

```python
def f(param):
    result = (param['x'] + param['y'])**2 - 2*param['x']
    return result

trials = Trials()
space = {
    'x': hp.choice('x', range(1, 20)),
    'y': hp.uniform('y', 1, 5)}

min_best = fmin(
    fn=f,
    space=space,
    algo=tpe.suggest,
    max_evals=100,
    trials=trials)
print(min_best)

for trial in trials.trials[:2]:
    print(trial)
```

```
100%|██████████████████████████████████████████████| 100/100 [00:00<00:00, 226.67trial/s, best loss: 2.119808416865758]
{'x': 0, 'y': 1.0297311193519594}
{'state': 2, 'tid': 0, 'spec': None, 'result': {'loss': 26.92689737519718, 'status': 'ok'}, 'misc': {'tid': 0, 'cmd': ('domain_attachment', 'FMinIter_Domain'), 'workdir': None, 'idxs': {'x': [0], 'y': [0]}, 'vals': {'x': [3], 'y': [1.9098982542170027]}}, 'exp_key': None, 'owner': None, 'version': 0, 'book_time': datetime.datetime(2022, 9, 24, 11, 23, 0, 301000), 'refresh_time': datetime.datetime(2022, 9, 24, 11, 23, 0, 301000)}
{'state': 2, 'tid': 1, 'spec': None, 'result': {'loss': 18.028522718941552, 'status': 'ok'}, 'misc': {'tid': 1, 'cmd': ('domain_attachment', 'FMinIter_Domain'), 'workdir': None, 'idxs': {'x': [1], 'y': [1]}, 'vals': {'x': [1], 'y': [2.6934553070143914]}}, 'exp_key': None, 'owner': None, 'version': 0, 'book_time': datetime.datetime(2022, 9, 24, 11, 23, 0, 304000), 'refresh_time': datetime.datetime(2022, 9, 24, 11, 23, 0, 304000)}
```

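tid is the trial ID, i.e. the time step; it runs from 0 to max_evals - 1 and increases by one with each iteration.

'x' and 'y' are keys under 'vals' (inside 'misc'), which stores the parameter values used in each iteration. For hp.choice parameters the stored value is the index, so 'x': [3] means x = range(1, 20)[3] = 4. 'loss' is a key under 'result' and gives the objective value for that iteration.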

2.1.4 SVM tuning example

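```python
from hyperopt import hp, STATUS_OK, Trials, fmin, tpe
from sklearn import datasets
from sklearn.model_selection import cross_val_score  # needed for cross-validation
from sklearn.svm import SVC

iris = datasets.load_iris()
X = iris.data
y = iris.target

def hyperopt_train_test(params):
    clf = SVC(**params)
    # Mean accuracy over the cross-validation folds (5 folds by default in scikit-learn >= 0.22)
    return cross_val_score(clf, X, y).mean()

space4svm = {
    'C': hp.uniform('C', 0, 20),
    'kernel': hp.choice('kernel', ['linear', 'sigmoid', 'poly', 'rbf']),
    'gamma': hp.uniform('gamma', 0, 20),
}

def f(params):
    acc = hyperopt_train_test(params)
    # fmin minimizes, so the negative accuracy is used as the loss
    return {'loss': -acc, 'status': STATUS_OK}

trials = Trials()
best = fmin(f, space4svm, algo=tpe.suggest, max_evals=100, trials=trials)
print('best:')
print(best)
```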

```
100%|█████████████████████████████████████████████| 100/100 [00:08<00:00, 11.35trial/s, best loss: -0.9866666666666667]
best:
{'C': 1.6130746771125175, 'gamma': 18.56247523455796, 'kernel': 0}
```

Note that for hp.choice the returned value is an index: 'kernel': 0 refers to the element at position 0 of the options list, i.e. 'linear'.

2.1.5 Visualization

A note on colors: in a call such as plt.scatter(x, y, c=y, cmap=plt.cm.gist_rainbow, s=20), cm stands for colormap, and c=y makes the point color vary with y.

```python
import matplotlib.pyplot as plt
# Jupyter Notebook only: render figures inline
%matplotlib inline
import numpy as np

# Parameters to plot
parameters = ['C', 'kernel', 'gamma']
cols = len(parameters)

# Create the figure with one subplot per parameter
# (named fig so the objective function f above is not overwritten)
fig, axes = plt.subplots(nrows=1, ncols=cols, figsize=(20, 5))

# Colormap for the points; reference:
# https://www.baidu.com/s?wd=colormap%20python%E6%98%AF%E4%BB%80%E4%B9%88&rsv_spt=1&rsv_iqid=0x96a9df67001103cb&issp=1&f=8&rsv_bp=1&rsv_idx=2&ie=utf-8&rqlang=cn&tn=baiduhome_pg&rsv_enter=0&rsv_dl=tb&oq=colormap&rsv_btype=t&rsv_t=1842hZgKyjwYUgbmm13Gh4ejKM7vUSdb47VnotpN01ifGSLnaueUknyNmzoBA9LHmWIQ&rsv_pq=9f6a494800014b9e&inputT=3252&rsv_sug3=41&rsv_sug1=15&rsv_sug7=100&rsv_sug2=0&rsv_sug4=3727
cmap = plt.cm.jet

for i, val in enumerate(parameters):
    # Value of this parameter in each trial (for 'kernel' this is the hp.choice index)
    xs = np.array([t['misc']['vals'][val] for t in trials.trials]).ravel()
    # Accuracy of each trial (the stored loss is the negative accuracy)
    ys = [-t['result']['loss'] for t in trials.trials]
    axes[i].scatter(
        xs,
        ys,
        s=20,
        linewidth=0.02,
        alpha=0.25,
        color=cmap(float(i) / len(parameters)))
    axes[i].set_title(val)
    axes[i].set_ylim([0.9, 1.0])  # chosen from the best loss: -0.9866666666666667

plt.show()
```