tensorflow版使用uNet进行医学图像分割(Skin数据集)

tensorflow版使用uNet进行医学图像分割(Skin数据集)

深度学习、计算机视觉学习笔记、医学图像分割、uNet、Skin皮肤数据集

- tensorflow版使用uNet进行医学图像分割(Skin数据集)

- 实验环境

-

- skin皮肤数据集

- 一、uNet模型

- 二、实验过程

-

- 1. 加载skin皮肤数据集

- 2. 定义uNet模型

- 3. 训练

- 4. 预测

- 5. 结果可视化

- 三、总结

实验环境

python、tensorflow、keras、jupyter

v100

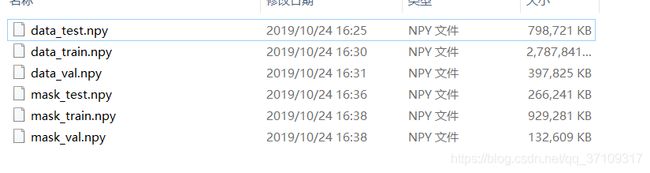

skin皮肤数据集

下载链接:链接: https://pan.baidu.com/s/1cD8WEB3yjWVvNhqhnJqGPg 提取码: xgf5

提示:以下是本篇文章正文内容

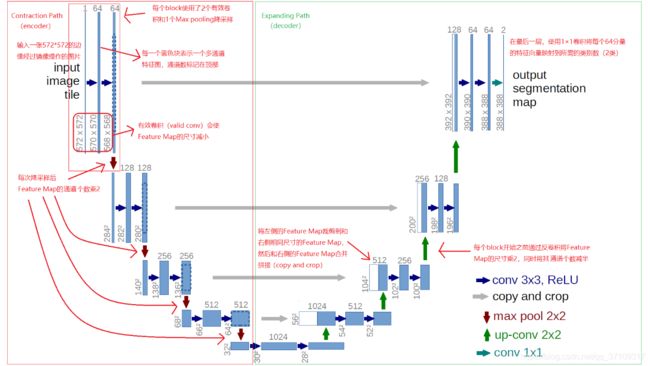

一、uNet模型

如上图,U-Net是一个经典的全卷积网络(即网络中没有全连接操作)。网络的输入是一张572×572的边缘经过镜像操作的图片,网络的左侧(即红色大方框内)是由卷积Max Pooling构成的一系列降采样操作,在原论文中将这一部分叫做压缩路径(contraction path),压缩路径由4个block组成,每个block使用了2个有效卷积层(每次卷积都会丢失边界像素)和1个Max Pooling降采样,每次采样之后Feature Map的个数都会乘以2,因此有了途中所示的Feature Map尺寸变化,最终得到了尺寸为32×32的Feature Map。

网络的右侧部分(绿色方框)在原论文中叫做扩展路径(expansive path),同样由4个block组成,每个block开始之前通过反卷积将Feature Map的尺寸乘2,同时将其个数减半(最后一层使用1×1卷积将每个64分量的特征向量映射到所需的类别数),然后和左侧对称的压缩路径的Feature Map合并拼接,由于左侧压缩路径和右侧扩展路径的Feature Map的尺寸不一样,U-Net是通过将压缩路径的Feature Map裁剪到和扩展路径相同尺寸的Feature Map进行归一化的扩展路径的卷积操作依旧使用的是有效卷积操作,最终得到的Feature Map的尺寸是388×388,由于该任务是一个二分类任务,所以网络有两个输出Feature Map。

二、实验过程

1. 加载skin皮肤数据集

from keras.models import Model

from keras.optimizers import Adam

from keras.layers import Conv2D, Input, MaxPooling2D, Dropout, concatenate, UpSampling2D

import numpy as np

from keras.callbacks import ModelCheckpoint, LearningRateScheduler

import os

from keras.preprocessing.image import array_to_img

import matplotlib.pyplot as plt

# 加载训练集

train = np.load('/shuyc_tmp/models/tensorflow/uNet/skin_dataset/data_train.npy')

# 加载训练集的mask

mask = np.load('/shuyc_tmp/models/tensorflow/uNet/skin_dataset/mask_train.npy')

# 加载测试集

test = np.load('/shuyc_tmp/models/tensorflow/uNet/skin_dataset/data_test.npy')

# 归一化处理

train = train.astype('float32')

train = train/255.

mask = mask /mask.max()

test = test.reshape((test.shape[0],256,256,3))

test = test.astype('float32')

test = test/255.

train = train.reshape(train.shape[0], 256, 256, 3)

mask = mask.reshape(mask.shape[0], 256, 256, 1)

##train = train[0]

2. 定义uNet模型

# 定义u-Net网络模型

def Unet():

# contraction path

# 输入层数据为256*256的三通道图像

inputs = Input(shape=[256, 256, 3])

# 第一个block(含两个激活函数为relu的有效卷积层 ,和一个卷积最大池化(下采样)操作)

conv1 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(inputs)

conv1 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv1)

# 最大池化

pool1 = MaxPooling2D(pool_size=(2, 2))(conv1)

# 第二个block(含两个激活函数为relu的有效卷积层 ,和一个卷积最大池化(下采样)操作)

conv2 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool1)

conv2 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv2)

pool2 = MaxPooling2D(pool_size=(2, 2))(conv2)

# 第三个block(含两个激活函数为relu的有效卷积层 ,和一个卷积最大池化(下采样)操作)

conv3 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool2)

conv3 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv3)

pool3 = MaxPooling2D(pool_size=(2, 2))(conv3)

# 第四个block(含两个激活函数为relu的有效卷积层 ,和一个卷积最大池化(下采样)操作)

conv4 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool3)

conv4 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv4)

# 将部分隐藏层神经元丢弃,防止过于细化而引起的过拟合情况

drop4 = Dropout(0.5)(conv4)

pool4 = MaxPooling2D(pool_size=(2, 2))(drop4)

conv5 = Conv2D(1024, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool4)

conv5 = Conv2D(1024, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv5)

# 将部分隐藏层神经元丢弃,防止过于细化而引起的过拟合情况

drop5 = Dropout(0.5)(conv5)

# expansive path

# 上采样

up6 = Conv2D(512, 2, activation='relu', padding='same', kernel_initializer='he_normal')(

UpSampling2D(size=(2, 2))(drop5))

# copy and crop(和contraction path 的feature map合并拼接)

merge6 = concatenate([drop4, up6], axis=3)

# 两个有效卷积层

conv6 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge6)

conv6 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv6)

# 上采样

up7 = Conv2D(256, 2, activation='relu', padding='same', kernel_initializer='he_normal')(

UpSampling2D(size=(2, 2))(conv6))

merge7 = concatenate([conv3, up7], axis=3)

conv7 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge7)

conv7 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv7)

# 上采样

up8 = Conv2D(128, 2, activation='relu', padding='same', kernel_initializer='he_normal')(

UpSampling2D(size=(2, 2))(conv7))

merge8 = concatenate([conv2, up8], axis=3)

conv8 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge8)

conv8 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv8)

# 上采样

up9 = Conv2D(64, 2, activation='relu', padding='same', kernel_initializer='he_normal')(

UpSampling2D(size=(2, 2))(conv8))

merge9 = concatenate([conv1, up9], axis=3)

conv9 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge9)

conv9 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv9)

conv9 = Conv2D(2, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv9)

conv10 = Conv2D(1, 1, activation='sigmoid')(conv9)

model = Model(inputs=inputs, outputs=conv10)

# 优化器为 Adam,损失函数为 binary_crossentropy,评价函数为 accuracy

model.compile(optimizer=Adam(lr=1e-4),

loss='binary_crossentropy',

metrics=['accuracy'])

return model

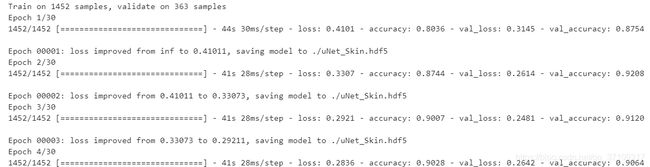

3. 训练

# 开始训练

unet = Unet()

# 每个epoch后保存模型到 uNet_Skin.hdf5

model_checkpoint = ModelCheckpoint('./uNet_Skin.hdf5',monitor='loss',verbose=1,save_best_only=True)

# 训练

history = unet.fit(train, mask, batch_size=4, epochs=30, verbose=1,

validation_split=0.2, shuffle=True, callbacks=[model_checkpoint])

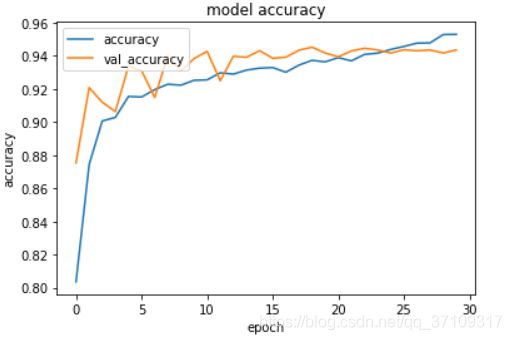

# 展示一下精确度随训练的变化图

plt.plot(history.history['accuracy'])

plt.plot(history.history['val_accuracy'])

plt.title('model accuracy')

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.legend(['accuracy', 'val_accuracy'], loc='upper left')

plt.show()

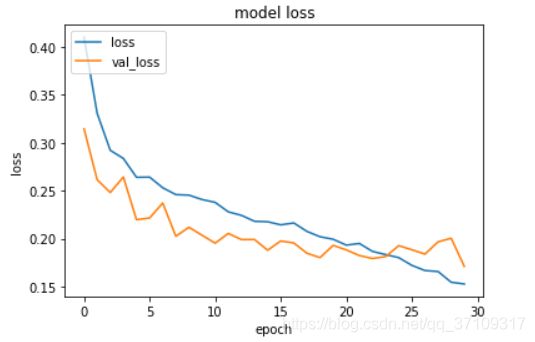

# 展示一下loss随训练的变化图

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('model loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['loss', 'val_loss'], loc='upper left')

plt.show()

4. 预测

# 预测

predict_imgs = unet.predict(test, batch_size=1, verbose=1)

# 图像还原

predict_imgs = predict_imgs * 255

# print(predict_imgs[0])

# 将像素限制在[0, 255]之间

predict_imgs = np.clip(predict_imgs,0,255)

# print(predict_imgs[0])

# 保存为predict.npy文件

if not os.path.exists('./results'):

os.makedirs('./results')

np.save('./results/predict.npy',predict_imgs)

# 保存为图像文件

def save_img():

imgs = np.load('./results/predict.npy')

for i in range(imgs.shape[0]):

img = imgs[i]

img = array_to_img(img)

img.save("./data_out/%d.jpg" % (i))

save_img()

5. 结果可视化

比较测试图像的 ground_truth_mask 和预测的 mask

import matplotlib.pyplot as plt

import numpy as np

import cv2 as cv

plt.figure(num=1, figsize=(10, 6))

for i in range(3):

for j in range(5):

if (i == 0):

img_data = cv.imread('./SKIN_data/test/images/' + str(j) + '.jpg')

b, g, r = cv.split(img_data)

img_data = cv.merge([r, g, b])

plt.subplot(3, 5, (i * 5) + (j + 1)), plt.imshow(img_data)

plt.xticks(())

plt.yticks(())

plt.title(str(j) + '.jpg')

if (j == 0):

plt.ylabel('test_img')

if (i == 1):

ground_truth = cv.imread('./SKIN_data/test/labels/' + str(j) + '.jpg')

b, g, r = cv.split(ground_truth)

ground_truth = cv.merge([r, g, b])

plt.subplot(3, 5, (i * 5) + (j + 1)), plt.imshow(ground_truth)

plt.xticks(())

plt.yticks(())

if (j == 0):

plt.ylabel('ground_truth_mask')

if (i == 2):

predict_img = cv.imread('./data_out/' + str(j) + '.jpg')

b, g, r = cv.split(predict_img)

predict_img = cv.merge([r, g, b])

plt.subplot(3, 5, (i * 5) + (j + 1)), plt.imshow(predict_img)

plt.xticks(())

plt.yticks(())

if (j == 0):

plt.ylabel('predicted')

plt.show()

三、总结

还可以使用如IoU、mIoU等评测方法对分割的结果进行测评

附源码:uNet