基于VS与OpenCV的模板匹配学习(4):手写OpenCV matchTemplate()

基于VS与OpenCV的模板匹配学习(4):手写OpenCV matchTemplate()

文章目录

- 基于VS与OpenCV的模板匹配学习(4):手写OpenCV matchTemplate()

- 前言

- 一、OpenCV templmatch源码分析

- 二、平方差度量计算

- 三、高斯金字塔

-

- 3.1 创建高斯金字塔模板

- 3.2 高斯金字塔策略

- 3.3 findMatchingPosition_GrayValueBased()

- 3.4 库函数+高斯金字塔

前言

在前文基于VS与OpenCV的模板匹配学习(1):OpenCV matchTemplate()示例中,讲解了OpenCV自带的模板匹配函数。尽管OpenCV的模板匹配带来了便利性,同时也制约了编程的灵活性,本文尝试手写OpenCV matchTemplate()函数,研究OpenCV源码,结合上文研究Mat快速遍历与高斯金字塔算法,尽量使得手写的match Template()时间上逼近OpenCV库函数。

一、OpenCV templmatch源码分析

OpenCV的模块源码基本都是开源的,这为我们研究OpenCV库函数的实现原理带来了便利性。在网上下载OpenCV sources文件夹,在OpenCV\sources\modules\imgproc\src路径下,找到了templmatch.cpp。

templmatch.cpp部分代码:

void crossCorr( const Mat& img, const Mat& _templ, Mat& corr,

Point anchor, double delta, int borderType )

{

// compute DFT of each template plane

for( k = 0; k < tcn; k++ )

{

int yofs = k*dftsize.height;

Mat src = templ;

Mat dst(dftTempl, Rect(0, yofs, dftsize.width, dftsize.height));

Mat dst1(dftTempl, Rect(0, yofs, templ.cols, templ.rows));

if( tcn > 1 )

{

src = tdepth == maxDepth ? dst1 : Mat(templ.size(), tdepth, &buf[0]);

int pairs[] = {k, 0};

mixChannels(&templ, 1, &src, 1, pairs, 1);

}

if( dst1.data != src.data )

src.convertTo(dst1, dst1.depth());

if( dst.cols > templ.cols )

{

Mat part(dst, Range(0, templ.rows), Range(templ.cols, dst.cols));

part = Scalar::all(0);

}

c->apply(dst.data, (int)dst.step, dst.data, (int)dst.step);

}

int tileCountX = (corr.cols + blocksize.width - 1)/blocksize.width;

int tileCountY = (corr.rows + blocksize.height - 1)/blocksize.height;

int tileCount = tileCountX * tileCountY;

Size wholeSize = img.size();

Point roiofs(0,0);

Mat img0 = img;

if( !(borderType & BORDER_ISOLATED) )

{

img.locateROI(wholeSize, roiofs);

img0.adjustROI(roiofs.y, wholeSize.height-img.rows-roiofs.y,

roiofs.x, wholeSize.width-img.cols-roiofs.x);

}

borderType |= BORDER_ISOLATED;

Ptr<hal::DFT2D> cF, cR;

int f = CV_HAL_DFT_IS_INPLACE;

int f_inv = f | CV_HAL_DFT_INVERSE | CV_HAL_DFT_SCALE;

cF = hal::DFT2D::create(dftsize.width, dftsize.height, maxDepth, 1, 1, f, blocksize.height + templ.rows - 1);

cR = hal::DFT2D::create(dftsize.width, dftsize.height, maxDepth, 1, 1, f_inv, blocksize.height);

// calculate correlation by blocks

for( i = 0; i < tileCount; i++ )

{

int x = (i%tileCountX)*blocksize.width;

int y = (i/tileCountX)*blocksize.height;

Size bsz(std::min(blocksize.width, corr.cols - x),

std::min(blocksize.height, corr.rows - y));

Size dsz(bsz.width + templ.cols - 1, bsz.height + templ.rows - 1);

int x0 = x - anchor.x + roiofs.x, y0 = y - anchor.y + roiofs.y;

int x1 = std::max(0, x0), y1 = std::max(0, y0);

int x2 = std::min(img0.cols, x0 + dsz.width);

int y2 = std::min(img0.rows, y0 + dsz.height);

Mat src0(img0, Range(y1, y2), Range(x1, x2));

Mat dst(dftImg, Rect(0, 0, dsz.width, dsz.height));

Mat dst1(dftImg, Rect(x1-x0, y1-y0, x2-x1, y2-y1));

Mat cdst(corr, Rect(x, y, bsz.width, bsz.height));

for( k = 0; k < cn; k++ )

{

Mat src = src0;

dftImg = Scalar::all(0);

if( cn > 1 )

{

src = depth == maxDepth ? dst1 : Mat(y2-y1, x2-x1, depth, &buf[0]);

int pairs[] = {k, 0};

mixChannels(&src0, 1, &src, 1, pairs, 1);

}

if( dst1.data != src.data )

src.convertTo(dst1, dst1.depth());

if( x2 - x1 < dsz.width || y2 - y1 < dsz.height )

copyMakeBorder(dst1, dst, y1-y0, dst.rows-dst1.rows-(y1-y0),

x1-x0, dst.cols-dst1.cols-(x1-x0), borderType);

if (bsz.height == blocksize.height)

cF->apply(dftImg.data, (int)dftImg.step, dftImg.data, (int)dftImg.step);

else

dft( dftImg, dftImg, 0, dsz.height );

Mat dftTempl1(dftTempl, Rect(0, tcn > 1 ? k*dftsize.height : 0,

dftsize.width, dftsize.height));

mulSpectrums(dftImg, dftTempl1, dftImg, 0, true);

if (bsz.height == blocksize.height)

cR->apply(dftImg.data, (int)dftImg.step, dftImg.data, (int)dftImg.step);

else

dft( dftImg, dftImg, DFT_INVERSE + DFT_SCALE, bsz.height );

src = dftImg(Rect(0, 0, bsz.width, bsz.height));

if( ccn > 1 )

{

if( cdepth != maxDepth )

{

Mat plane(bsz, cdepth, &buf[0]);

src.convertTo(plane, cdepth, 1, delta);

src = plane;

}

int pairs[] = {0, k};

mixChannels(&src, 1, &cdst, 1, pairs, 1);

}

else

{

if( k == 0 )

src.convertTo(cdst, cdepth, 1, delta);

else

{

if( maxDepth != cdepth )

{

Mat plane(bsz, cdepth, &buf[0]);

src.convertTo(plane, cdepth);

src = plane;

}

add(src, cdst, cdst);

}

}

}

}

}

上述代码中,OpenCV在进行相关性计算的时候,并不是直接在空间域操作,而在crossCorr函数中将输入图像做了一次DFT变换,将空间域的图像转换到频率域中来进行处理,这也解释了OpenCV代码的高效性原因。

if (method == CV_TM_SQDIFF)

{

Mat mask2_templ = templ.mul(mask2);

Mat corr(corrSize, CV_32F);

crossCorr( img, mask2_templ, corr, Point(0,0), 0, 0 );

crossCorr( img2, mask, result, Point(0,0), 0, 0 );

result -= corr * 2;

result += templSum2;

}

该段代码证明对于CV_TM_SQDIFF基于平方差度量的匹配方法也是在频域完成计算的,

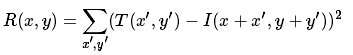

二、平方差度量计算

本文准备手写CV_TM_SQDIFF基于平方差度量的匹配方法,因为该度量方法是理论上最容易理解的。本文并不准备利用傅里叶变化,在频域计算平方差度量,而是结合OpenCV Mat快速遍历在空间域计算平方差度量,因而在算法时间复杂度本身要远高于OpenCV库函数。

(1)ptr(row)[col]直接遍历目标元素

for (int resultImageRow = 0; resultImageRow < resultImage_rows; resultImageRow++)

{

for (int resultImageCol = 0; resultImageCol < resultImage_cols; resultImageCol++)

{

int sum = 0;

short int temp = 0;

for (int templateImageRow = 0; templateImageRow < templateImage_rows; templateImageRow++)

{

for (int templateImageCol = 0; templateImageCol < templateImage_cols; templateImageCol++)

{

temp = srcImage.ptr<uchar>(resultImageRow + templateImageRow)[templateImageCol + resultImageCol] - templateImage.ptr<uchar>(templateImageRow)[templateImageCol];

sum += temp * temp;

}

}

resultImage.ptr<int>(resultImageRow)[resultImageCol] = sum;

}

}

Run Time:75.417s

与前文对应,使用ptr(row)[col]遍历目标元素,尽管代码精简,但是效率较低。

(2)ptr(row)行指针逐行遍历目标元素:

int* resultImagePtr;

uchar* srcImagePtr;

uchar* templateImagePtr;

for (int resultImageRow = 0; resultImageRow < resultImage_rows; resultImageRow++)

{

resultImagePtr = resultImage.ptr<int>(resultImageRow);

for (int resultImageCol = 0; resultImageCol < resultImage_cols; resultImageCol++)

{

int sum = 0;

short int temp = 0;

for (int templateImageRow = 0; templateImageRow < templateImage_rows; templateImageRow++)

{

srcImagePtr = srcImage.ptr<uchar>(resultImageRow + templateImageRow);

templateImagePtr = templateImage.ptr<uchar>(templateImageRow);

for (int templateImageCol = 0; templateImageCol < templateImage_cols; templateImageCol++)

{

temp=srcImagePtr[templateImageCol+ resultImageCol] - templateImagePtr[templateImageCol];

sum += temp * temp;

}

}

resultImagePtr[resultImageCol]=sum ;

}

}

Run Time:17.584s

使用ptr(row)逐行遍历目标元素,效率较高。

(3)指针按行遍历目标元素:

int* resultImagePtr;

uchar* srcImagePtr;

uchar* templateImagePtr;

for (int resultImageRow = 0; resultImageRow < resultImage_rows; resultImageRow++)

{

resultImagePtr = resultImage.ptr<int>(resultImageRow);

for (int resultImageCol = 0; resultImageCol < resultImage_cols; resultImageCol++)

{

int sum = 0;

short int temp = 0;

for (int templateImageRow = 0; templateImageRow < templateImage_rows; templateImageRow++)

{

srcImagePtr = srcImage.ptr<uchar>(resultImageRow+templateImageRow)+ resultImageCol;

templateImagePtr = templateImage.ptr<uchar>(templateImageRow);

for (int templateImageCol = 0; templateImageCol < templateImage_cols; templateImageCol++)

{

temp= *(srcImagePtr++) - *(templateImagePtr++);

sum += temp * temp;

}

}

*(resultImagePtr++) = sum;

}

}

Run Time:16.509s

使用ptr(row)逐行遍历,与(2)逐行遍历时使用指针自加,效率比(2)稍高。

(4)指针单循环遍历目标元素:

int* resultImageDataStart = (int*)resultImage.data;

uchar* srcImageDataStart = srcImage.data;

uchar* templateDataPre;

uchar* srcImageDataPre;

for (int resultImageSeq = 0; resultImageSeq < resultImage_rows* resultImage_cols; resultImageSeq++)

{

int sum = 0;

short int temp = 0;

if (resultImageSeq % resultImage_cols == 0 && resultImageSeq != 0)

srcImageDataStart += templateImage_cols - 1;

templateDataPre = templateImage.data;

srcImageDataPre = srcImageDataStart++;

for (int templateImageSeq = 0; templateImageSeq < templateImage_rows * templateImage_cols; templateImageSeq++)

{

if (templateImageSeq % templateImage_cols == 0 && templateImageSeq!=0)

srcImageDataPre += srcImage_cols - templateImage_cols;

temp = *(templateDataPre++) - *(srcImageDataPre++);

sum += temp * temp;

}

*(resultImageDataStart++) = sum;

}

Run Time:208.184s

利用OpenCV地址的连续性,srcImage.data可访问数据地址头,因而将四层循环简化为两层循环。然而,在计算某个位置的平方差度量,srcImage只是取了模板大小部分,这带来了两点困难。

其一,srcImage需要遍历部分并不是连续地址,故而每当逐行遍历结束,就需要跳到下一行首元素位置。

其二,srcImage在取模板部分的时候,需要确定当前的行列位置坐标,因为需要srcImageDataStart确定位置,并且由于srcImage与resultImage大小不一致,每当resultImage逐行结束,srcImageDataStart也需要自动跳至下一行首元素。

因而,由于逻辑复杂性的增加,循环中加入了大量的判断语句,反而降低了整体程序的速度,因而最终的时间效率非常差。

(5)OpenCV库函数:

cv::matchTemplate(srcImage, tplImage, resultImage, 0);

Run Time:0.056s

效率最高,但是灵活性较差。

(6)时间总结:

在上述前四种情况中,srcImage为2048 * 2048,tplImage为200 * 200,运行模式为release,使用指针逐行遍历的效率是最高。即便如此,在空间域的计算与OpenCV库函数在频域的计算的时间相差依然很大。考虑在空间域计算的基础上加入高斯金字塔的思想,并设置循环终止条件。

三、高斯金字塔

在基于VS与OpenCV的模板匹配学习(2):边缘匹配+图像金字塔阐述过高斯金字塔的算法思想,高斯金字塔对算法时间的加速是显著的。

3.1 创建高斯金字塔模板

bool createParamidImage(cv::Mat inputArray, std::vector<cv::Mat> &OutputArrayVector, int ¶midNums)

{

if (paramidNums <= 0)

{

std::cout << "The range of paramidNums is 1 to n ." << std::endl;

return false;

}

//After pyrdown, the outputArray must be greater than 8*8

while (true)

{

if (inputArray.cols < 8 * pow( 2, paramidNums-1 ) || inputArray.rows < 8 * pow(2, paramidNums - 1))

{

paramidNums--;

printf("ParamidNums has been set lower, Current paramidNums is %d . \n", paramidNums);

if (paramidNums - 1 == 0) break;

}

else break;

}

int paramidCount = paramidNums;

cv::Mat inputArrayPre = inputArray;

cv::Mat inputArrayTemp;

OutputArrayVector.push_back(inputArrayPre);

while (paramidCount > 1)

{

cv::pyrDown(inputArrayPre, inputArrayTemp, cv::Size(inputArrayPre.cols / 2, inputArrayPre.rows / 2));

inputArrayPre = inputArrayTemp;

OutputArrayVector.push_back(inputArrayPre);

paramidCount--;

}

return true;

}

该段代码借鉴了别人博客的经验,即高斯金字塔最高层模板大小不应小于8*8,否则往往会出现定位问题。这个经验在本场景中同样适用。

3.2 高斯金字塔策略

//Find MatchingPosition based on GrayValue

int srcImageAOI_rowMin = 0;

int srcImageAOI_rowMax = srcImage.rows - tplImage.rows + 1;

int srcImageAOI_colMin = 0;

int srcImageAOI_colMax = srcImage.cols - tplImage.cols + 1;

int matchingPointX = 0;

int matchingPointY = 0;

cv::Point matchingPoint;

for (int paramidNumsPre = paramidNums; paramidNumsPre >= 1; paramidNumsPre--)

{

cv::Mat srcImagePre = srcImageParamidVector[paramidNumsPre-1];

cv::Mat tplImagePre = tplImageParamidVector[paramidNumsPre-1];

if (paramidNumsPre - paramidNums == 0)

{

srcImageAOI_rowMin = 0;

srcImageAOI_rowMax = srcImagePre.rows - tplImagePre.rows + 1;

srcImageAOI_colMin = 0;

srcImageAOI_colMax = srcImagePre.cols - tplImagePre.cols + 1;

}

matchingPoint = findMatchingPosition_GrayValueBased(srcImagePre, tplImagePre, srcImageAOI_rowMin, srcImageAOI_rowMax, srcImageAOI_colMin, srcImageAOI_colMax);

matchingPointX = matchingPoint.x;

matchingPointY = matchingPoint.y;

srcImageAOI_rowMin = matchingPointY * 2 - 3;

srcImageAOI_rowMax = matchingPointY * 2 + 4;

srcImageAOI_colMin = matchingPointX * 2 - 3;

srcImageAOI_colMax = matchingPointX * 2 + 4;

}

从最高层金字塔图像开始,会在高层位置寻到匹配点可能存在的大致位置;

选择匹配点的7*7邻域区域,进入下一层继续匹配;

直至寻到第一层,即在原图像和原模板层进行匹配。

3.3 findMatchingPosition_GrayValueBased()

uchar* srcImagePtr;

uchar* tplImagePtr;

cv::Point matchingPoint;

short int matchingPointX=0;

short int matchingPointY=0;

int matchingScore = INT_MAX;

for (int resultImageRow = row_min; resultImageRow < row_max; resultImageRow++)

{

for (int resultImageCol = col_min; resultImageCol < col_max; resultImageCol++)

{

int sum = 0;

short int temp = 0;

for (int tplImageRow = 0; tplImageRow < tplImage.rows; tplImageRow++)

{

srcImagePtr = srcImage.ptr<uchar>(resultImageRow+tplImageRow)+ resultImageCol;

tplImagePtr = tplImage.ptr<uchar>(tplImageRow);

for (int tplImageCol = 0; tplImageCol < tplImage.cols; tplImageCol++)

{

temp= *(srcImagePtr++) - *(tplImagePtr++);

sum += temp * temp;

if (sum > matchingScore) goto label;

}

}

label:

if (sum < matchingScore)

{

matchingScore = sum;

matchingPointY = resultImageRow;

matchingPointX = resultImageCol;

}

}

}

matchingPoint.x = matchingPointX;

matchingPoint.y = matchingPointY;

Run Time:0.007s

经过高斯金字塔的加速,算法时间得到了极大的优化。

此外,值得注意的是,该算法设置度量计算停止条件,即if (sum > matchingScore) goto label,每当现有的sum已经超出matchingScore范围,便停止该点sum计算。

3.4 库函数+高斯金字塔

顾名思义,高斯金字塔的思想结合OpenCV的库函数matchTemplate()。

matchTemplate():

cv::matchTemplate(srcImage, tplImage, resultImage, 0);

Run Time:0.056s

matchTemplate()+gaussianPyramid:

cv::Point matchingPoint;

double minValue;

double maxValue;

cv::Point minLocation;

cv::Point maxLocation;

cv::Point matchLocation;

cv::Mat srcImageROI = srcImage(cv::Range(row_min, row_max + tplImage.rows - 1), cv::Range(col_min, col_max + tplImage.cols - 1)).clone();

cv::Mat resultImageROI;

int resultImageROI_rows = srcImageROI.rows - tplImage.rows + 1;

int resultImageROI_cols = srcImageROI.cols - tplImage.cols + 1;

cv::matchTemplate(srcImageROI, tplImage, resultImageROI, 0);

cv::minMaxLoc(resultImageROI, &minValue, &maxValue, &minLocation, &maxLocation, cv::Mat());

matchingPoint.x = minLocation.x + col_min;

matchingPoint.y = minLocation.y + row_min;

Run Time:0.008s

高斯金字塔对于OpenCV库函数的加速并不显著,这是由于OpenCV在频域的计算本身的算法时间复杂度为2048 * 2048,而手写的函数在空间的计算其时间复杂度为2048 * 2048 * 200 * 200。倘若在第二层金字塔位置,频域算法时间复杂度下降至1024 * 1024,而空间域算法时间复杂度为1024 * 1024 * 100 * 100,前者下降了4倍,而后者下降了16倍。