[Swin Transformer Source Code Reading, Part 1] The build_loader code

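I've recently started studying the Swin Transformer algorithm in detail. This post records the build_loader code I read today. Every step is annotated with comments, and I hope it helps others who are learning it too.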

Preface

build_loader is the first call inside main() in Swin Transformer's main.py, so this post walks through how Swin Transformer loads its data.

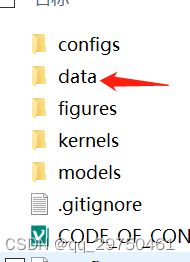

In Swin Transformer, the data loading and preprocessing code lives in the following files under the `data` folder:

build.py

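```python
import os

import numpy as np
import torch
import torch.distributed as dist
from torchvision import datasets, transforms
from timm.data import Mixup, create_transform
from timm.data.constants import IMAGENET_DEFAULT_MEAN, IMAGENET_DEFAULT_STD

from .cached_image_folder import CachedImageFolder
from .imagenet22k_dataset import IN22KDATASET
from .samplers import SubsetRandomSampler

try:
    # Newer torchvision versions expect an InterpolationMode enum instead of a PIL constant
    from torchvision.transforms import InterpolationMode

    def _pil_interp(method):
        if method == 'bicubic':
            return InterpolationMode.BICUBIC
        elif method == 'lanczos':
            return InterpolationMode.LANCZOS
        elif method == 'hamming':
            return InterpolationMode.HAMMING
        else:
            # default bilinear, do we want to allow nearest?
            return InterpolationMode.BILINEAR

    # Patch timm so that its transforms use the same enum-based mapping
    import timm.data.transforms as timm_transforms
    timm_transforms._pil_interp = _pil_interp
except ImportError:
    # Older torchvision without InterpolationMode: fall back to timm's PIL-based helper
    from timm.data.transforms import _pil_interp
```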
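The try/except above maps an interpolation name to an enum value and falls back to bilinear for anything it does not recognise. The same dispatch can be written as a dictionary lookup with a default. This is a minimal sketch that runs without torchvision; `Interp` is a hypothetical stand-in for torchvision's `InterpolationMode`, not the real class.

```python
from enum import Enum


class Interp(Enum):
    # Hypothetical stand-in for torchvision.transforms.InterpolationMode
    BILINEAR = "bilinear"
    BICUBIC = "bicubic"
    LANCZOS = "lanczos"
    HAMMING = "hamming"


# Names with their own mode; everything else (including "nearest") falls back to bilinear
_NAME_TO_MODE = {
    "bicubic": Interp.BICUBIC,
    "lanczos": Interp.LANCZOS,
    "hamming": Interp.HAMMING,
}


def pil_interp(method):
    """Dictionary version of the if/elif chain in _pil_interp."""
    return _NAME_TO_MODE.get(method, Interp.BILINEAR)


print(pil_interp("bicubic"))  # Interp.BICUBIC
print(pil_interp("nearest"))  # Interp.BILINEAR
```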

```python
# Build the data loaders
def build_loader(config):
    # Unfreeze the config so that config.MODEL.NUM_CLASSES can be overwritten below
    config.defrost()
    # Build the training set; build_dataset also returns the number of classes
    dataset_train, config.MODEL.NUM_CLASSES = build_dataset(is_train=True, config=config)
    config.freeze()  # lock the config again
    print(f"local rank {config.LOCAL_RANK} / global rank {dist.get_rank()} successfully build train dataset")
    dataset_val, _ = build_dataset(is_train=False, config=config)  # validation set
    print(f"local rank {config.LOCAL_RANK} / global rank {dist.get_rank()} successfully build val dataset")

    # Parameters needed for distributed training
    num_tasks = dist.get_world_size()
    global_rank = dist.get_rank()
    # Cache mode "part": each process only handles its own subset of the data
    if config.DATA.ZIP_MODE and config.DATA.CACHE_MODE == 'part':
        indices = np.arange(dist.get_rank(), len(dataset_train), dist.get_world_size())
        sampler_train = SubsetRandomSampler(indices)
    else:
        # Spread the dataset evenly across the GPUs,
        # e.g. GPU0: [0, 2, 4, 6, 8], GPU1: [1, 3, 5, 7, ...] (shown without shuffling)
        sampler_train = torch.utils.data.DistributedSampler(
            dataset_train, num_replicas=num_tasks, rank=global_rank, shuffle=True
        )

    # Sequential sampling at test time: indices are visited in order 0, 1, 2, ...
    if config.TEST.SEQUENTIAL:
        sampler_val = torch.utils.data.SequentialSampler(dataset_val)
    else:
        # Distributed sampling
        sampler_val = torch.utils.data.distributed.DistributedSampler(
            dataset_val, shuffle=config.TEST.SHUFFLE
        )

    # Training data loader
    data_loader_train = torch.utils.data.DataLoader(
        dataset_train, sampler=sampler_train,
        batch_size=config.DATA.BATCH_SIZE,
        num_workers=config.DATA.NUM_WORKERS,
        pin_memory=config.DATA.PIN_MEMORY,
        drop_last=True,
    )

    # Validation data loader
    data_loader_val = torch.utils.data.DataLoader(
        dataset_val, sampler=sampler_val,
        batch_size=config.DATA.BATCH_SIZE,
        shuffle=False,
        num_workers=config.DATA.NUM_WORKERS,
        pin_memory=config.DATA.PIN_MEMORY,
        drop_last=False
    )

    # setup mixup / cutmix
    mixup_fn = None
    # True with the default config.py settings
    mixup_active = config.AUG.MIXUP > 0 or config.AUG.CUTMIX > 0. or config.AUG.CUTMIX_MINMAX is not None
    if mixup_active:
        mixup_fn = Mixup(
            mixup_alpha=config.AUG.MIXUP, cutmix_alpha=config.AUG.CUTMIX, cutmix_minmax=config.AUG.CUTMIX_MINMAX,
            prob=config.AUG.MIXUP_PROB, switch_prob=config.AUG.MIXUP_SWITCH_PROB, mode=config.AUG.MIXUP_MODE,
            label_smoothing=config.MODEL.LABEL_SMOOTHING, num_classes=config.MODEL.NUM_CLASSES)

    return dataset_train, dataset_val, data_loader_train, data_loader_val, mixup_fn
```
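To see what the two training samplers in build_loader hand to each GPU, and what kind of labels Mixup produces, here is a pure-Python sketch. `distributed_indices` mimics the index split of `torch.utils.data.DistributedSampler` with `drop_last=False` (padding with repeated indices so every rank gets the same count); when shuffling it uses Python's `random` instead of `torch.randperm`, so the actual order differs from PyTorch's. `part_cache_indices` is the `np.arange(rank, N, world_size)` list used in "part" cache mode, which `SubsetRandomSampler` then draws in random order. `mixup_targets` is my reading of how timm builds soft targets: label-smoothed one-hot vectors blended with the same batch reversed. All three function names are hypothetical.

```python
import math
import random


def distributed_indices(n, num_replicas, rank, shuffle=False, seed=0, epoch=0):
    """Index split mimicking DistributedSampler with drop_last=False."""
    indices = list(range(n))
    if shuffle:
        # All ranks use the same seed + epoch, so they agree on a single permutation
        random.Random(seed + epoch).shuffle(indices)
    num_samples = math.ceil(n / num_replicas)
    total_size = num_samples * num_replicas
    # Pad by repeating indices from the start so every rank gets num_samples items
    padding = total_size - n
    indices += (indices * math.ceil(padding / n))[:padding]
    # Rank r takes every num_replicas-th index starting at r
    return indices[rank:total_size:num_replicas]


def part_cache_indices(n, num_replicas, rank):
    """Indices used when CACHE_MODE == 'part': strided, no padding."""
    return list(range(rank, n, num_replicas))


def mixup_targets(targets, num_classes, lam, smoothing=0.1):
    """Smoothed one-hot targets mixed with the batch reversed along its first axis."""
    off = smoothing / num_classes
    on = 1.0 - smoothing + off

    def one_hot(t):
        return [on if c == t else off for c in range(num_classes)]

    flipped = targets[::-1]
    return [[lam * a + (1 - lam) * b for a, b in zip(one_hot(t1), one_hot(t2))]
            for t1, t2 in zip(targets, flipped)]


for r in range(4):
    print(r, distributed_indices(10, 4, r))
# 0 [0, 4, 8]
# 1 [1, 5, 9]
# 2 [2, 6, 0]
# 3 [3, 7, 1]
print(part_cache_indices(10, 4, 2))  # [2, 6]
print(mixup_targets([0, 1], num_classes=2, lam=0.7, smoothing=0.0))
```

Note the trade-off: DistributedSampler repeats a few samples so all ranks step in lockstep, while the "part" split gives uneven but duplicate-free shares that line up with what each rank has cached.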

```python
# Build a dataset
# is_train: True -> training set, False -> validation set
def build_dataset(is_train, config):
    # Build the data transform (augmentation / preprocessing) pipeline
    transform = build_transform(is_train, config)
    # Read the data differently depending on the dataset and its storage format
    if config.DATA.DATASET == 'imagenet':
        prefix = 'train' if is_train else 'val'
        # Zip-format dataset; see the dataset example in get_started.md
        if config.DATA.ZIP_MODE:
            ann_file = prefix + "_map.txt"
            prefix = prefix + ".zip@/"
            dataset = CachedImageFolder(config.DATA.DATA_PATH, ann_file, prefix, transform,
                                        cache_mode=config.DATA.CACHE_MODE if is_train else 'part')
        else:
            root = os.path.join(config.DATA.DATA_PATH, prefix)
            dataset = datasets.ImageFolder(root, transform=transform)
        nb_classes = 1000  # number of classes in ImageNet-1K
    elif config.DATA.DATASET == 'imagenet22K':
        prefix = 'ILSVRC2011fall_whole'
        if is_train:
            ann_file = prefix + "_map_train.txt"
        else:
            ann_file = prefix + "_map_val.txt"
        dataset = IN22KDATASET(config.DATA.DATA_PATH, ann_file, transform)
        nb_classes = 21841
    else:
        raise NotImplementedError("We only support ImageNet Now.")

    return dataset, nb_classes
```
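In zip mode, build_dataset does not point at a folder of images but at two files side by side: an annotation map and a zip archive, with `.zip@/` marking where the archive path ends and the path inside the archive begins. A small sketch that reproduces the path strings; `zip_mode_paths`, the root `/data/imagenet`, and the file name are made-up examples, and the printed paths assume a POSIX system.

```python
import os


def zip_mode_paths(data_path, is_train):
    """Paths that build_dataset + DatasetFolder derive in ZIP_MODE for ImageNet-1K."""
    prefix = 'train' if is_train else 'val'
    ann_file = prefix + "_map.txt"  # e.g. train_map.txt
    img_prefix = prefix + ".zip@/"  # e.g. train.zip@/
    # DatasetFolder joins both names with the dataset root
    return os.path.join(data_path, ann_file), os.path.join(data_path, img_prefix)


ann_path, img_root = zip_mode_paths("/data/imagenet", is_train=True)
print(ann_path)  # /data/imagenet/train_map.txt
print(img_root)  # /data/imagenet/train.zip@/
# make_dataset_with_ann then joins img_root with each file name listed in the map file
print(os.path.join(img_root, "n01440764/n01440764_10026.JPEG"))
# /data/imagenet/train.zip@/n01440764/n01440764_10026.JPEG
```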

```python
# Data transforms / preprocessing
def build_transform(is_train, config):
    # Whether the input image size is larger than 32 (the setting lives in config.py)
    resize_im = config.DATA.IMG_SIZE > 32
    if is_train:
        # this should always dispatch to transforms_imagenet_train
        # the related parameters can be found in config.py
        transform = create_transform(
            input_size=config.DATA.IMG_SIZE,
            is_training=True,
            color_jitter=config.AUG.COLOR_JITTER if config.AUG.COLOR_JITTER > 0 else None,
            auto_augment=config.AUG.AUTO_AUGMENT if config.AUG.AUTO_AUGMENT != 'none' else None,
            re_prob=config.AUG.REPROB,
            re_mode=config.AUG.REMODE,
            re_count=config.AUG.RECOUNT,
            interpolation=config.DATA.INTERPOLATION,
        )
        # For very small inputs (32 px or less), use a plain random crop instead
        if not resize_im:
            # replace RandomResizedCropAndInterpolation with
            # RandomCrop
            transform.transforms[0] = transforms.RandomCrop(config.DATA.IMG_SIZE, padding=4)
        return transform

    # Transforms for the non-training (evaluation) set
    t = []
    if resize_im:
        # With center cropping at test time, first resize the shorter side to a larger size,
        # then center-crop; otherwise resize directly to IMG_SIZE x IMG_SIZE
        # with the configured interpolation
        if config.TEST.CROP:
            size = int((256 / 224) * config.DATA.IMG_SIZE)
            t.append(
                transforms.Resize(size, interpolation=_pil_interp(config.DATA.INTERPOLATION)),
                # to maintain same ratio w.r.t. 224 images
            )
            t.append(transforms.CenterCrop(config.DATA.IMG_SIZE))
        else:
            t.append(
                transforms.Resize((config.DATA.IMG_SIZE, config.DATA.IMG_SIZE),
                                  interpolation=_pil_interp(config.DATA.INTERPOLATION))
            )

    t.append(transforms.ToTensor())  # PIL image (H x W x C, 0-255) -> float tensor (C x H x W, 0-1)
    # Normalize with the ImageNet mean and standard deviation
    t.append(transforms.Normalize(IMAGENET_DEFAULT_MEAN, IMAGENET_DEFAULT_STD))
    return transforms.Compose(t)  # chain all preprocessing steps together
```
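The evaluation branch only changes image geometry before ToTensor/Normalize, so its effect can be worked out by hand. A sketch of that arithmetic, following my understanding of torchvision's rules: `Resize(int)` sets the shorter side to `size` and scales the longer side with truncation, and `CenterCrop` rounds the offsets. `eval_geometry` is a hypothetical helper; sizes are `(width, height)` and the crop box is `(left, top, right, bottom)`.

```python
def eval_geometry(width, height, img_size=224, crop=True):
    """Output size and crop box of the evaluation pipeline (geometry only)."""
    if not crop:
        # Resize((img_size, img_size)) ignores the aspect ratio
        return (img_size, img_size), None
    size = int((256 / 224) * img_size)  # 224 -> 256, 384 -> 438
    # Resize(int): the shorter side becomes `size`, the longer side keeps the aspect ratio
    short, long = (width, height) if width <= height else (height, width)
    new_short, new_long = size, int(size * long / short)
    new_w, new_h = (new_short, new_long) if width <= height else (new_long, new_short)
    # CenterCrop: take an img_size x img_size box from the middle
    left = int(round((new_w - img_size) / 2.0))
    top = int(round((new_h - img_size) / 2.0))
    return (new_w, new_h), (left, top, left + img_size, top + img_size)


print(eval_geometry(500, 375))
# ((341, 256), (58, 16, 282, 240))
```

With the default 224 input, the 256/224 factor reproduces the classic "resize to 256, crop 224" ImageNet evaluation, and the same ratio is kept for larger inputs such as 384.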

cached_image_folder.py

```python
import io
import os
import time

import torch.distributed as dist
import torch.utils.data as data
from PIL import Image

from .zipreader import is_zip_path, ZipReader


# Check whether a file has one of the allowed extensions
def has_file_allowed_extension(filename, extensions):
    """Checks if a file is an allowed extension.
    Args:
        filename (string): path to a file
        extensions (list[string]): allowed extensions, e.g. ['.jpg', '.png']
    Returns:
        bool: True if the filename ends with a known image extension
    """
    filename_lower = filename.lower()
    return any(filename_lower.endswith(ext) for ext in extensions)
```
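Because `str.endswith` also accepts a tuple of suffixes, the `any(...)` loop can be collapsed into a single call. The sketch below (`has_allowed_ext` is a hypothetical name) also shows two consequences of the check: it is case-insensitive, and it matches exact suffixes only, so `.tiff` is rejected even though `.tif` is allowed.

```python
IMG_EXTENSIONS = ['.jpg', '.jpeg', '.png', '.ppm', '.bmp', '.pgm', '.tif']


def has_allowed_ext(filename, extensions):
    # str.endswith accepts a tuple, which replaces the any(...) loop
    return filename.lower().endswith(tuple(extensions))


print(has_allowed_ext("n01440764_10026.JPEG", IMG_EXTENSIONS))  # True
print(has_allowed_ext("labels.txt", IMG_EXTENSIONS))  # False
print(has_allowed_ext("scan.tiff", IMG_EXTENSIONS))  # False: '.tiff' is not in the list
```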

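```python
# Get the classes in the dataset
def find_classes(dir):
    # Class names are the names of the sub-folders of the dataset folder
    classes = [d for d in os.listdir(dir) if os.path.isdir(os.path.join(dir, d))]
    classes.sort()  # sort class names so the mapping is deterministic
    # Map class names to class indices starting from 0: {class_name: class_index}
    class_to_idx = {classes[i]: i for i in range(len(classes))}
    # Return the list of classes and the class-to-index dict
    return classes, class_to_idx
```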
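The sort matters: `os.listdir` returns entries in an arbitrary, filesystem-dependent order, and sorting is what makes every process and every run assign the same index to the same class. A runnable sketch with a throwaway directory (the folder names are illustrative), using an equivalent `enumerate`-based version of the function:

```python
import os
import tempfile


def find_classes(dir):
    # Same logic as above, written with sorted() and enumerate()
    classes = sorted(d for d in os.listdir(dir) if os.path.isdir(os.path.join(dir, d)))
    return classes, {name: i for i, name in enumerate(classes)}


with tempfile.TemporaryDirectory() as root:
    for name in ["dog", "cat", "bird"]:
        os.makedirs(os.path.join(root, name))
    open(os.path.join(root, "README.txt"), "w").close()  # a plain file is ignored
    classes, class_to_idx = find_classes(root)

print(classes)       # ['bird', 'cat', 'dog']
print(class_to_idx)  # {'bird': 0, 'cat': 1, 'dog': 2}
```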

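```python
# Collect all image samples in the dataset
def make_dataset(dir, class_to_idx, extensions):
    images = []
    # Expand a leading "~" to the user's home directory; other paths are left unchanged
    dir = os.path.expanduser(dir)
    # Iterate over the entries of the root folder (one sub-folder per class)
    for target in sorted(os.listdir(dir)):
        d = os.path.join(dir, target)
        # Skip anything that is not a folder
        if not os.path.isdir(d):
            continue
        # Walk the class folder recursively
        for root, _, fnames in sorted(os.walk(d)):
            for fname in sorted(fnames):  # iterate over all files
                # Keep only files with an allowed extension
                if has_file_allowed_extension(fname, extensions):
                    # Full path of the image
                    path = os.path.join(root, fname)
                    # (image path, class index) tuple
                    item = (path, class_to_idx[target])
                    images.append(item)
    # images is a list of (image path, class index) tuples:
    # [("./img1.jpg", 0), ("./img2.jpg", 0), ...]
    return images
```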
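Two details of make_dataset are easy to miss: because of `os.walk`, images in nested sub-folders are collected too, and they are labelled by the top-level class folder they sit under. A condensed, self-contained copy run on a throwaway tree (file names are illustrative):

```python
import os
import tempfile


def make_dataset(dir, class_to_idx, extensions):
    # Condensed copy of the function above
    images = []
    for target in sorted(os.listdir(dir)):
        d = os.path.join(dir, target)
        if not os.path.isdir(d):
            continue
        for root, _, fnames in sorted(os.walk(d)):
            for fname in sorted(fnames):
                if fname.lower().endswith(tuple(extensions)):
                    images.append((os.path.join(root, fname), class_to_idx[target]))
    return images


with tempfile.TemporaryDirectory() as tmp:
    for rel in ["cat/a.jpg", "cat/extra/b.PNG", "dog/c.jpg", "dog/notes.txt"]:
        path = os.path.join(tmp, *rel.split("/"))
        os.makedirs(os.path.dirname(path), exist_ok=True)
        open(path, "wb").close()
    samples = make_dataset(tmp, {"cat": 0, "dog": 1}, [".jpg", ".png"])
    # Show paths relative to the temporary root for readability
    rel_samples = [(os.path.relpath(p, tmp).replace(os.sep, "/"), y) for p, y in samples]

print(rel_samples)
# [('cat/a.jpg', 0), ('cat/extra/b.PNG', 0), ('dog/c.jpg', 1)]
```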

```python
# Build the image list from an annotation file
def make_dataset_with_ann(ann_file, img_prefix, extensions):
    images = []
    # Read the annotation file
    with open(ann_file, "r") as f:
        contents = f.readlines()  # read all lines
        # Iterate over the lines
        for line_str in contents:
            # Split each line on the tab character ("\t")
            path_contents = [c for c in line_str.split('\t')]
            im_file_name = path_contents[0]  # image file name
            class_index = int(path_contents[1])  # class index of the image
            # Check that the file extension is allowed
            assert str.lower(os.path.splitext(im_file_name)[-1]) in extensions
            # (image path, class index) tuple
            item = (os.path.join(img_prefix, im_file_name), class_index)
            images.append(item)
    # images is a list of (image path, class index) tuples:
    # [("./img1.jpg", 0), ("./img2.jpg", 0), ...]
    return images
```
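From the parsing code, each line of the map file is expected to look like `<path inside the archive>\t<class index>\n`. The sketch below applies the same parsing to an in-memory list of lines; `parse_ann_lines` and the sample lines are hypothetical. It also shows why no `strip()` is needed: `int()` ignores the trailing newline.

```python
import os


def parse_ann_lines(lines, img_prefix, extensions):
    """Same parsing as make_dataset_with_ann, applied to a list of lines."""
    images = []
    for line_str in lines:
        im_file_name, class_str = line_str.split('\t')[:2]
        class_index = int(class_str)  # int() tolerates the trailing "\n"
        assert os.path.splitext(im_file_name)[-1].lower() in extensions
        images.append((os.path.join(img_prefix, im_file_name), class_index))
    return images


# Hypothetical map-file content
lines = ["n01440764/n01440764_10026.JPEG\t0\n", "n01443537/n01443537_10007.JPEG\t1\n"]
samples = parse_ann_lines(lines, "/data/imagenet/train.zip@/", [".jpeg", ".jpg"])
for path, label in samples:
    print(label, path)
```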

```python
class DatasetFolder(data.Dataset):
    """A generic data loader where the samples are arranged in this way: ::
        root/class_x/xxx.ext
        root/class_x/xxy.ext
        root/class_x/xxz.ext
        root/class_y/123.ext
        root/class_y/nsdf3.ext
        root/class_y/asd932_.ext
    Args:
        root (string): Root directory path.
        loader (callable): A function to load a sample given its path.
        extensions (list[string]): A list of allowed extensions.
        transform (callable, optional): A function/transform that takes in
            a sample and returns a transformed version.
            E.g, ``transforms.RandomCrop`` for images.
        target_transform (callable, optional): A function/transform that takes
            in the target and transforms it.
    Attributes:
        samples (list): List of (sample path, class_index) tuples
    """

    def __init__(self, root, loader, extensions, ann_file='', img_prefix='', transform=None, target_transform=None,
                 cache_mode="no"):
        # Read the sample list differently depending on the dataset format
        # image folder mode
        if ann_file == '':
            _, class_to_idx = find_classes(root)
            samples = make_dataset(root, class_to_idx, extensions)
        # zip mode
        else:
            samples = make_dataset_with_ann(os.path.join(root, ann_file),
                                            os.path.join(root, img_prefix),
                                            extensions)

        if len(samples) == 0:
            raise (RuntimeError("Found 0 files in subfolders of: " + root + "\n" +
                                "Supported extensions are: " + ",".join(extensions)))

        self.root = root
        self.loader = loader  # function that loads a sample
        self.extensions = extensions
        # samples is a list of (image path, class index) tuples:
        # [("./img1.jpg", 0), ("./img2.jpg", 0), ...]
        self.samples = samples
        self.labels = [y_1k for _, y_1k in samples]  # class index of every sample
        self.classes = list(set(self.labels))  # de-duplicated list of class indices
        self.transform = transform  # transform applied to each sample
        self.target_transform = target_transform  # transform applied to each label

        self.cache_mode = cache_mode
        if self.cache_mode != "no":
            self.init_cache()

    def init_cache(self):
        assert self.cache_mode in ["part", "full"]
        n_sample = len(self.samples)  # number of images
        # Rank and world size of the distributed job
        global_rank = dist.get_rank()
        world_size = dist.get_world_size()

        # One slot per sample, initialised to None
        samples_bytes = [None for _ in range(n_sample)]
        start_time = time.time()  # start time of the current block
        # Print progress roughly every 10% of the samples
        # (max(1, ...) avoids a zero interval when there are fewer than 10 samples)
        log_interval = max(1, n_sample // 10)
        for index in range(n_sample):
            if index % log_interval == 0:
                t = time.time() - start_time
                print(f'global_rank {dist.get_rank()} cached {index}/{n_sample} takes {t:.2f}s per block')
                start_time = time.time()
            path, target = self.samples[index]  # image path and class index
            if self.cache_mode == "full":
                # "full": every process caches the raw bytes of every image
                samples_bytes[index] = (ZipReader.read(path), target)
            elif self.cache_mode == "part" and index % world_size == global_rank:
                # "part": a process only caches the images whose index belongs to its rank
                samples_bytes[index] = (ZipReader.read(path), target)
            else:
                # Not cached: keep the path and read from the zip file on demand
                samples_bytes[index] = (path, target)
        # Each entry is now (raw image bytes, class index) or (path, class index)
        self.samples = samples_bytes

    # Return the transformed sample and its label
    def __getitem__(self, index):
        """
        Args:
            index (int): Index
        Returns:
            tuple: (sample, target) where target is class_index of the target class.
        """
        path, target = self.samples[index]
        sample = self.loader(path)
        if self.transform is not None:
            sample = self.transform(sample)
        if self.target_transform is not None:
            target = self.target_transform(target)

        return sample, target

    def __len__(self):
        return len(self.samples)

    def __repr__(self):
        fmt_str = 'Dataset ' + self.__class__.__name__ + '\n'
        fmt_str += '    Number of datapoints: {}\n'.format(self.__len__())
        fmt_str += '    Root Location: {}\n'.format(self.root)
        tmp = '    Transforms (if any): '
        fmt_str += '{0}{1}\n'.format(tmp, self.transform.__repr__().replace('\n', '\n' + ' ' * len(tmp)))
        tmp = '    Target Transforms (if any): '
        fmt_str += '{0}{1}'.format(tmp, self.target_transform.__repr__().replace('\n', '\n' + ' ' * len(tmp)))
        return fmt_str
```
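The point of the "part" cache mode becomes clearer when init_cache is put next to build_loader: rank r caches exactly the indices with `index % world_size == r`, and in "part" mode build_loader samples exactly `np.arange(r, N, world_size)` on that rank, so every image a process trains on is already in its memory. A simulation without any real I/O; `cached_owner_map` is a hypothetical helper.

```python
def cached_owner_map(n_sample, world_size, cache_mode):
    """For each rank, the sample indices init_cache would store as bytes."""
    owned = {}
    for rank in range(world_size):
        if cache_mode == "full":
            owned[rank] = list(range(n_sample))
        else:  # "part"
            owned[rank] = [i for i in range(n_sample) if i % world_size == rank]
    return owned


n, world = 10, 4
owned = cached_owner_map(n, world, "part")
for rank in range(world):
    sampler_indices = list(range(rank, n, world))  # np.arange(rank, n, world) in build_loader
    assert owned[rank] == sampler_indices  # each rank only samples what it cached
    print(rank, owned[rank])
# 0 [0, 4, 8]
# 1 [1, 5, 9]
# 2 [2, 6]
# 3 [3, 7]
```

In "full" mode each process holds the whole dataset in RAM; "part" cuts that to roughly 1/world_size per process, at the price of tying each sample to one GPU for the whole run.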

```python
IMG_EXTENSIONS = ['.jpg', '.jpeg', '.png', '.ppm', '.bmp', '.pgm', '.tif']


# Open an image (from cached bytes, a zip archive, or a plain file) and convert it to RGB
def pil_loader(path):
    # open path as file to avoid ResourceWarning (https://github.com/python-pillow/Pillow/issues/835)
    if isinstance(path, bytes):
        # Raw bytes already cached in memory by init_cache
        img = Image.open(io.BytesIO(path))
    elif is_zip_path(path):
        # Path inside a zip archive ("xxx.zip@/..."): read the bytes from the zip first
        data = ZipReader.read(path)
        img = Image.open(io.BytesIO(data))
    else:
        with open(path, 'rb') as f:
            img = Image.open(f)
            # Convert while the file is still open, since PIL decodes lazily
            return img.convert('RGB')
    return img.convert('RGB')


# Load the image with accimage (an image library that is generally faster than PIL)
def accimage_loader(path):
    import accimage
    try:
        return accimage.Image(path)
    except IOError:
        # Potentially a decoding problem, fall back to PIL.Image
        return pil_loader(path)


# Pick the loader that matches torchvision's configured image backend
def default_img_loader(path):
    from torchvision import get_image_backend
    if get_image_backend() == 'accimage':
        return accimage_loader(path)
    else:
        return pil_loader(path)
```
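The zip branch of pil_loader relies on paths of the form `archive.zip@/member/path`. ZipReader itself is not shown in this post, so the sketch below is an assumption about the idea, not its code: split the path at `@`, open the archive with the standard `zipfile` module, and return the member's raw bytes. `split_zip_path` and `read_from_zip_path` are hypothetical; a real reader would probably keep archives open rather than reopening them for every image.

```python
import io
import os
import tempfile
import zipfile


def split_zip_path(path):
    """Split 'archive.zip@/member/path' into ('archive.zip', 'member/path')."""
    pos_at = path.index('@')  # raises ValueError if there is no '@'
    return path[:pos_at], path[pos_at + 1:].strip('/')


def read_from_zip_path(path):
    # Hypothetical stand-in for ZipReader.read: return the member's raw bytes
    zip_path, member = split_zip_path(path)
    with zipfile.ZipFile(zip_path, 'r') as zf:
        return zf.read(member)


with tempfile.TemporaryDirectory() as tmp:
    archive = os.path.join(tmp, "train.zip")
    with zipfile.ZipFile(archive, "w") as zf:
        zf.writestr("n01440764/img_0001.JPEG", b"fake image bytes")
    raw = read_from_zip_path(archive + "@/n01440764/img_0001.JPEG")

print(raw)  # b'fake image bytes'
# pil_loader would now call Image.open(io.BytesIO(raw)); here the bytes are only wrapped
print(io.BytesIO(raw).read(4))  # b'fake'
```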

```python
# Subclass of DatasetFolder used for image datasets
class CachedImageFolder(DatasetFolder):
    """A generic data loader where the images are arranged in this way: ::
        root/dog/xxx.png
        root/dog/xxy.png
        root/dog/xxz.png
        root/cat/123.png
        root/cat/nsdf3.png
        root/cat/asd932_.png
    Args:
        root (string): Root directory path.
        transform (callable, optional): A function/transform that takes in an PIL image
            and returns a transformed version. E.g, ``transforms.RandomCrop``
        target_transform (callable, optional): A function/transform that takes in the
            target and transforms it.
        loader (callable, optional): A function to load an image given its path.
    Attributes:
        imgs (list): List of (image path, class_index) tuples
    """

    def __init__(self, root, ann_file='', img_prefix='', transform=None, target_transform=None,
                 loader=default_img_loader, cache_mode="no"):
        super(CachedImageFolder, self).__init__(root, loader, IMG_EXTENSIONS,
                                                ann_file=ann_file, img_prefix=img_prefix,
                                                transform=transform, target_transform=target_transform,
                                                cache_mode=cache_mode)
        self.imgs = self.samples

    def __getitem__(self, index):
        """
        Args:
            index (int): Index
        Returns:
            tuple: (image, target) where target is class_index of the target class.
        """
        path, target = self.samples[index]
        # path may be a file path, a "xxx.zip@/..." path, or cached bytes
        image = self.loader(path)
        if self.transform is not None:
            img = self.transform(image)
        else:
            img = image
        if self.target_transform is not None:
            target = self.target_transform(target)

        return img, target
```
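What the DataLoader relies on is only this small contract: `__len__`, plus a `__getitem__` that loads lazily and then applies the optional transforms. A duck-typed sketch without torch or PIL; `ToyImageFolder` and `fake_loader` are hypothetical, and the mix of a path and cached bytes in `samples` imitates what init_cache leaves behind.

```python
class ToyImageFolder:
    """Hypothetical stand-in for CachedImageFolder: lazy loading + optional transforms."""

    def __init__(self, samples, loader, transform=None, target_transform=None):
        self.samples = samples  # list of (path or bytes, class index)
        self.loader = loader
        self.transform = transform
        self.target_transform = target_transform

    def __getitem__(self, index):
        path, target = self.samples[index]
        img = self.loader(path)  # nothing is read until the item is requested
        if self.transform is not None:
            img = self.transform(img)
        if self.target_transform is not None:
            target = self.target_transform(target)
        return img, target

    def __len__(self):
        return len(self.samples)


loads = []


def fake_loader(p):
    # Records what was loaded; returns a string instead of a decoded image
    loads.append(p)
    return p.decode() if isinstance(p, bytes) else f"<image at {p}>"


ds = ToyImageFolder([("a.jpg", 0), (b"cached-bytes", 1)], fake_loader,
                    transform=str.upper, target_transform=lambda y: y + 100)
print(len(ds), loads)  # 2 []
print(ds[1])  # ('CACHED-BYTES', 101)
print(loads)  # [b'cached-bytes']
```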