chainer-目标检测-LightHeadRCNN

文章目录

- 前言

- 一、数据集的准备

-

- 1.标注工具的安装

- 2.数据集的准备

- 3.标注数据

- 4.解释xml文件的内容

- 二、基于chainer的目标检测构建-LightHeadRCNN

-

- 1.引入第三方标准库

- 2.数据加载器

- 3.模型构建

- 4.模型代码

-

- 1.LightHeadRCNN中的主体部分:

- 2.LightHeadRCNN中的ResNet部分:

- 3.LightHeadRCNN中的region_proposal部分:

- 5.整体代码构建

-

- 1.chainer初始化

- 2.数据集以及模型构建

- 3.模型训练

- 6、模型预测

- 三、训练预测代码

- 四、效果

- 总结

前言

通俗的讲就是在一张图像里边找感兴趣的物体,并且标出物体在图像上的位置,在后续很多应用中,都需要目标检测做初步识别结构后做处理,比如目标跟踪,检测数量,检测有无等。

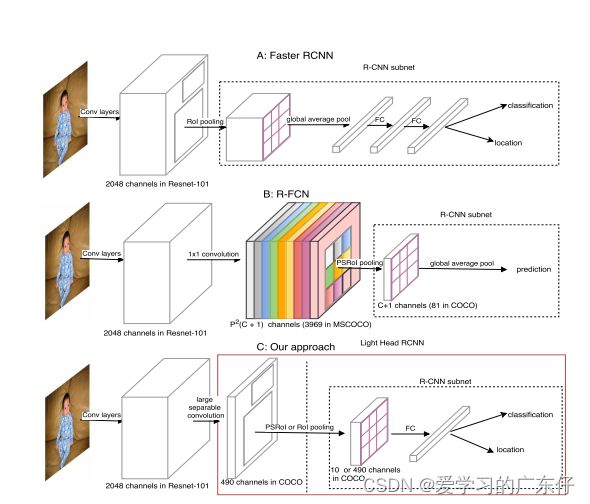

LightHeadRCNN主要是基于Faster RCNN 和R-FCN做的改进,速度都有很大的提升。

Faster R-CNN和R-FCN在RoI warping之前和之后进行密集计算。Faster R-CNN有2个全连接层,而R-FCN产生大的score maps。这些模型由于heavy-head的设计导致速度变慢。即使显著减小backbone,计算开销还是很大。

作者使用薄的feature map和简单的R-CNN子网络(池化和单个全连接组成),使得网络的head尽可能的轻。

一、数据集的准备

首先我是用的是halcon数据集里边的药片,去了前边的100张做标注,后面的300张做测试,其中100张里边选择90张做训练集,10张做验证集。

1.标注工具的安装

pip install labelimg

进入cmd,输入labelimg,会出现如图的标注工具:

![]()

2.数据集的准备

首先我们先创建3个文件夹,如图:

![]()

DataImage:100张需要标注的图像

DataLabel:空文件夹,主要是存放标注文件,这个在labelimg中生成标注文件

test:存放剩下的300张图片,不需要标注

DataImage目录下和test目录的存放样子是这样的(以DataImage为例):

![]()

3.标注数据

首先我们需要在labelimg中设置图像路径和标签存放路径,如图:

![]()

然后先记住快捷键:w:开始编辑,a:上一张,d:下一张。这个工具只需要这三个快捷键即可完成工作。

开始标注工作,首先按下键盘w,这个时候进入编辑框框的模式,然后在图像上绘制框框,输入标签(框框属于什么类别),即可完成物体1的标注,一张物体可以多个标注和多个类别,但是切记不可摸棱两可,比如这张图像对于某物体标注了,另一张图像如果出现同样的就需要标注,或者标签类别不可多个,比如这个图象A物体标注为A标签,下张图的A物体标出成了B标签,最终的效果如图:

![]()

最后标注完成会在DataLabel中看到标注文件,json格式:

![]()

4.解释xml文件的内容

![]()

xml标签文件如图,我们用到的就只有object对象,对其进行解析即可。

二、基于chainer的目标检测构建-LightHeadRCNN

1.引入第三方标准库

import functools, six, random, chainer, os, json, cv2, time

import numpy as np

from PIL import Image,ImageFont,ImageDraw

from chainer.dataset.convert import _concat_arrays

from chainer.dataset.convert import to_device

from chainer.training import extensions

from data.data_params import Create_pascol_voc_Data

from data.data_dataset import COCODataset

from data.data_transform import Transform,TransformDataset

from nets.light_head_rcnn_resnet import LightHeadRCNNResNet

from nets.light_head_rcnn_train_chain1 import LightHeadRCNNTrainChain

from nets.gradient_scaling import GradientScaling

from core.detection_coco_evaluator import DetectionCOCOEvaluator

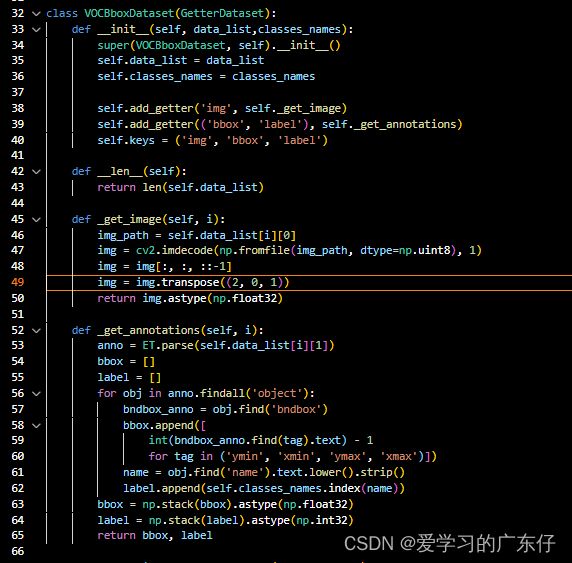

2.数据加载器

读取数据文件夹,这里为的是加载每一张图像以及对应的xml文件,并且解析成自定义格式即可,如图:

这里解释一下,IMGDir代表存放图像文件的路径,XMLDir代表存放标签文件的路径,train_split=0.9代表训练集和验证集9:1

数据格式使用yolo格式,在每次迭代的过程中才开始加载图像以及xml文件,节省内存,如图:

这里的输入data_list是前边CreateDataList_Detection函数的返回,分为训练和验证集合,这里是一个迭代器

下面这里主要是做一些数据增强的操作:

此步骤在训练的时候不是必要的。

3.模型构建

本次使用的LightHeadRCNN目标检测算法,我们先看论文上的图,这里主要是基于FasterRCNN、FCN、LightHeadRCNN进行对比,从图上即可看到几个网络结构的区别:

4.模型代码

1.LightHeadRCNN中的主体部分:

import numpy as np

import chainer

from chainer.backends import cuda

import chainer.functions as F

from nets.faster_rcnn.utils.loc2bbox import loc2bbox

from utils.image import resize

from chainercv.utils import non_maximum_suppression

class LightHeadRCNN(chainer.Chain):

def __init__(

self, extractor, rpn, head, mean,

min_size, max_size, loc_normalize_mean, loc_normalize_std,

):

super(LightHeadRCNN, self).__init__()

with self.init_scope():

self.extractor = extractor

self.rpn = rpn

self.head = head

self.mean = mean

self.min_size = min_size

self.max_size = max_size

self.loc_normalize_mean = loc_normalize_mean

self.loc_normalize_std = loc_normalize_std

self.use_preset('visualize')

@property

def n_class(self):

# Total number of classes including the background.

return self.head.n_class

def __call__(self, x, scales):

img_size = x.shape[2:]

rpn_features, roi_features = self.extractor(x)

rpn_locs, rpn_scores, rois, roi_indices, anchor = self.rpn(

rpn_features, img_size, scales)

roi_cls_locs, roi_scores = self.head(

roi_features, rois, roi_indices)

return roi_cls_locs, roi_scores, rois, roi_indices

def use_preset(self, preset):

if preset == 'visualize':

self.nms_thresh = 0.5

self.score_thresh = 0.5

self.max_n_predict = 100

elif preset == 'evaluate':

self.nms_thresh = 0.5

self.score_thresh = 0.0

self.max_n_predict = 100

else:

raise ValueError('preset must be visualize or evaluate')

def prepare(self, img):

_, H, W = img.shape

scale = 1.

scale = self.min_size / min(H, W)

if scale * max(H, W) > self.max_size:

scale = self.max_size / max(H, W)

img = resize(img, (int(H * scale), int(W * scale)))

img = (img - self.mean).astype(np.float32, copy=False)

return img

def _suppress(self, raw_cls_bbox, raw_prob):

bbox = []

label = []

prob = []

# skip cls_id = 0 because it is the background class

for l in range(1, self.n_class):

cls_bbox_l = raw_cls_bbox.reshape((-1, self.n_class, 4))[:, l, :]

prob_l = raw_prob[:, l]

mask = prob_l > self.score_thresh

cls_bbox_l = cls_bbox_l[mask]

prob_l = prob_l[mask]

keep = non_maximum_suppression(

cls_bbox_l, self.nms_thresh, prob_l)

bbox.append(cls_bbox_l[keep])

# The labels are in [0, self.n_class - 2].

label.append((l - 1) * np.ones((len(keep),)))

prob.append(prob_l[keep])

bbox = np.concatenate(bbox, axis=0).astype(np.float32)

label = np.concatenate(label, axis=0).astype(np.int32)

prob = np.concatenate(prob, axis=0).astype(np.float32)

return bbox, label, prob

def predict(self, imgs):

prepared_imgs = []

sizes = []

for img in imgs:

size = img.shape[1:]

img = self.prepare(img.astype(np.float32))

prepared_imgs.append(img)

sizes.append(size)

bboxes = []

labels = []

scores = []

for img, size in zip(prepared_imgs, sizes):

with chainer.using_config('train', False), \

chainer.function.no_backprop_mode():

img_var = chainer.Variable(self.xp.asarray(img[None]))

scale = img_var.shape[3] / size[1]

roi_cls_locs, roi_scores, rois, _ = self.__call__(

img_var, [scale])

# We are assuming that batch size is 1.

roi_cls_loc = roi_cls_locs.array

roi_score = roi_scores.array

roi = rois / scale

# Convert predictions to bounding boxes in image coordinates.

# Bounding boxes are scaled to the scale of the input images.

mean = self.xp.tile(self.xp.asarray(self.loc_normalize_mean),

self.n_class)

std = self.xp.tile(self.xp.asarray(self.loc_normalize_std),

self.n_class)

roi_cls_loc = (roi_cls_loc * std + mean).astype(np.float32)

roi_cls_loc = roi_cls_loc.reshape((-1, self.n_class, 4))

roi = self.xp.broadcast_to(roi[:, None], roi_cls_loc.shape)

cls_bbox = loc2bbox(roi.reshape((-1, 4)),

roi_cls_loc.reshape((-1, 4)))

cls_bbox = cls_bbox.reshape((-1, self.n_class * 4))

# clip bounding box

cls_bbox[:, 0::2] = self.xp.clip(cls_bbox[:, 0::2], 0, size[0])

cls_bbox[:, 1::2] = self.xp.clip(cls_bbox[:, 1::2], 0, size[1])

prob = F.softmax(roi_score).array

raw_cls_bbox = cuda.to_cpu(cls_bbox)

raw_prob = cuda.to_cpu(prob)

bbox, label, prob = self._suppress(raw_cls_bbox, raw_prob)

indices = np.argsort(prob)[::-1]

bbox = bbox[indices]

label = label[indices]

prob = prob[indices]

if len(bbox) > self.max_n_predict:

bbox = bbox[:self.max_n_predict]

label = label[:self.max_n_predict]

prob = prob[:self.max_n_predict]

bboxes.append(bbox)

labels.append(label)

scores.append(prob)

return bboxes, labels, scores

2.LightHeadRCNN中的ResNet部分:

import numpy as np

import chainer

import chainer.functions as F

import chainer.links as L

from nets.connection.conv_2d_bn_activ import Conv2DBNActiv

from nets.resblock import ResBlock

from nets.functions.psroi_max_align_2d import psroi_max_align_2d

from nets.functions.psroi_average_align_2d import psroi_average_align_2d

from nets.global_context_module import GlobalContextModule

from nets.region_proposal_network import RegionProposalNetwork

from nets.light_head_rcnn_base import LightHeadRCNN

class ResNetExtractor(chainer.Chain):

_blocks = {

50: [3, 4, 6, 3],

101: [3, 4, 23, 3],

152: [3, 8, 36, 3]

}

def __init__(self,n_layers=50,alpha=1, initialW=None):

super(ResNetExtractor, self).__init__()

self.alpha=alpha

if initialW is None:

initialW = chainer.initializers.HeNormal()

kwargs = {

'initialW': initialW,

'bn_kwargs': {'eps': 1e-5},

'stride_first': True

}

with self.init_scope():

# ResNet

self.conv1 = Conv2DBNActiv(3, 64//self.alpha, 7, 2, 3, nobias=True, initialW=initialW)

self.pool1 = lambda x: F.max_pooling_2d(x, ksize=3, stride=2)

self.res2 = ResBlock(self._blocks[n_layers][0], 64//self.alpha, 64//self.alpha, 256//self.alpha, 1, **kwargs)

self.res3 = ResBlock(self._blocks[n_layers][1], 256//self.alpha, 128//self.alpha, 512//self.alpha, 2, **kwargs)

self.res4 = ResBlock(self._blocks[n_layers][2], 512//self.alpha, 256//self.alpha, 1024//self.alpha, 2, **kwargs)

self.res5 = ResBlock(self._blocks[n_layers][3], 1024//self.alpha, 512//self.alpha, 2048//self.alpha, 1, 2, **kwargs)

def __call__(self, x):

with chainer.using_config('train', False):

h = self.pool1(self.conv1(x))

h = self.res2(h)

h = self.res3(h)

res4 = self.res4(h)

res5 = self.res5(res4)

return res4, res5

class LightHeadRCNNResNetHead(chainer.Chain):

def __init__(

self, n_class,alpha, roi_size, spatial_scale,

global_module_initialW=None,

loc_initialW=None, score_initialW=None

):

super(LightHeadRCNNResNetHead, self).__init__()

self.alpha = alpha

self.n_class = n_class

self.spatial_scale = spatial_scale

self.roi_size = roi_size

with self.init_scope():

self.global_context_module = GlobalContextModule(2048//self.alpha, 256//self.alpha, self.roi_size * self.roi_size * 10, 15, initialW=global_module_initialW)

self.fc1 = L.Linear(self.roi_size * self.roi_size * 10, 2048//self.alpha, initialW=score_initialW)

self.score = L.Linear(2048//self.alpha, n_class, initialW=score_initialW)

self.cls_loc = L.Linear(2048//self.alpha, 4 * n_class, initialW=loc_initialW)

def __call__(self, x, rois, roi_indices):

# global context module

h = self.global_context_module(x)

# psroi max align

pool = psroi_max_align_2d(

h, rois, roi_indices,

10, self.roi_size, self.roi_size,

self.spatial_scale, self.roi_size,

sampling_ratio=2.)

# fc

fc1 = F.relu(self.fc1(pool))

roi_cls_locs = self.cls_loc(fc1)

roi_scores = self.score(fc1)

return roi_cls_locs, roi_scores

class LightHeadRCNNResNet(LightHeadRCNN):

feat_stride = 16

proposal_creator_params = {

'nms_thresh': 0.7,

'n_train_pre_nms': 12000,

'n_train_post_nms': 2000,

'n_test_pre_nms': 6000,

'n_test_post_nms': 1000,

'force_cpu_nms': False,

'min_size': 0,

}

def __init__(

self,n_layers=50,

n_fg_class=None,alpha=1,

min_size=800, max_size=1333, roi_size=7,

ratios=[0.5, 1, 2], anchor_scales=[2, 4, 8, 16, 32],

loc_normalize_mean=(0., 0., 0., 0.),

loc_normalize_std=(0.1, 0.1, 0.2, 0.2),

resnet_initialW=None, rpn_initialW=None,

global_module_initialW=None,

loc_initialW=None, score_initialW=None,

proposal_creator_params=None,

):

self.alpha=alpha

if resnet_initialW is None:

resnet_initialW = chainer.initializers.HeNormal()

if rpn_initialW is None:

rpn_initialW = chainer.initializers.Normal(0.01)

if global_module_initialW is None:

global_module_initialW = chainer.initializers.Normal(0.01)

if loc_initialW is None:

loc_initialW = chainer.initializers.Normal(0.001)

if score_initialW is None:

score_initialW = chainer.initializers.Normal(0.01)

if proposal_creator_params is not None:

self.proposal_creator_params = proposal_creator_params

extractor = ResNetExtractor(n_layers=n_layers,alpha=self.alpha, initialW=resnet_initialW)

rpn = RegionProposalNetwork(

1024//self.alpha, 512//self.alpha,

ratios=ratios,

anchor_scales=anchor_scales,

feat_stride=self.feat_stride,

initialW=rpn_initialW,

proposal_creator_params=self.proposal_creator_params,

)

head = LightHeadRCNNResNetHead(

n_fg_class + 1,alpha=self.alpha,

roi_size=roi_size,

spatial_scale=1. / self.feat_stride,

global_module_initialW=global_module_initialW,

loc_initialW=loc_initialW,

score_initialW=score_initialW

)

mean = np.array([122.7717, 115.9465, 102.9801], dtype=np.float32)[:, None, None]

super(LightHeadRCNNResNet, self).__init__(

extractor, rpn, head, mean, min_size, max_size,

loc_normalize_mean, loc_normalize_std)

3.LightHeadRCNN中的region_proposal部分:

import numpy as np

import chainer

from chainer.backends import cuda

import chainer.functions as F

import chainer.links as L

from nets.utils.generate_anchor_base import generate_anchor_base

from nets.utils.proposal_creator import ProposalCreator

class RegionProposalNetwork(chainer.Chain):

def __init__(

self, in_channels=512, mid_channels=512, ratios=[0.5, 1, 2],

anchor_scales=[8, 16, 32], feat_stride=16,

initialW=None,

proposal_creator_params={},

):

self.anchor_base = generate_anchor_base(

anchor_scales=anchor_scales, ratios=ratios)

self.feat_stride = feat_stride

self.proposal_layer = ProposalCreator(**proposal_creator_params)

n_anchor = self.anchor_base.shape[0]

super(RegionProposalNetwork, self).__init__()

with self.init_scope():

self.conv1 = L.Convolution2D(

in_channels, mid_channels, 3, 1, 1, initialW=initialW)

self.score = L.Convolution2D(

mid_channels, n_anchor * 2, 1, 1, 0, initialW=initialW)

self.loc = L.Convolution2D(

mid_channels, n_anchor * 4, 1, 1, 0, initialW=initialW)

def forward(self, x, img_size, scales=None):

n, _, hh, ww = x.shape

if scales is None:

scales = [1.0] * n

if not isinstance(scales, chainer.utils.collections_abc.Iterable):

scales = [scales] * n

anchor = _enumerate_shifted_anchor(

self.xp.array(self.anchor_base), self.feat_stride, hh, ww)

n_anchor = anchor.shape[0] // (hh * ww)

h = F.relu(self.conv1(x))

rpn_locs = self.loc(h)

rpn_locs = rpn_locs.transpose((0, 2, 3, 1)).reshape((n, -1, 4))

rpn_scores = self.score(h)

rpn_scores = rpn_scores.transpose((0, 2, 3, 1))

rpn_fg_scores =\

rpn_scores.reshape((n, hh, ww, n_anchor, 2))[:, :, :, :, 1]

rpn_fg_scores = rpn_fg_scores.reshape((n, -1))

rpn_scores = rpn_scores.reshape((n, -1, 2))

rois = []

roi_indices = []

for i in range(n):

roi = self.proposal_layer(

rpn_locs[i].array, rpn_fg_scores[i].array, anchor, img_size,

scale=scales[i])

batch_index = i * self.xp.ones((len(roi),), dtype=np.int32)

rois.append(roi)

roi_indices.append(batch_index)

rois = self.xp.concatenate(rois, axis=0)

roi_indices = self.xp.concatenate(roi_indices, axis=0)

return rpn_locs, rpn_scores, rois, roi_indices, anchor

def _enumerate_shifted_anchor(anchor_base, feat_stride, height, width):

xp = cuda.get_array_module(anchor_base)

shift_y = xp.arange(0, height * feat_stride, feat_stride)

shift_x = xp.arange(0, width * feat_stride, feat_stride)

shift_x, shift_y = xp.meshgrid(shift_x, shift_y)

shift = xp.stack((shift_y.ravel(), shift_x.ravel(),

shift_y.ravel(), shift_x.ravel()), axis=1)

A = anchor_base.shape[0]

K = shift.shape[0]

anchor = anchor_base.reshape((1, A, 4)) + \

shift.reshape((1, K, 4)).transpose((1, 0, 2))

anchor = anchor.reshape((K * A, 4)).astype(np.float32)

return anchor

5.整体代码构建

1.chainer初始化

self.image_size = image_size if (image_size == 300 or image_size == 512) else 300

if USEGPU =='-1':

self.gpu_devices = -1

else:

self.gpu_devices = int(USEGPU)

chainer.cuda.get_device_from_id(self.gpu_devices).use()

2.数据集以及模型构建

train_data_list, self.val_data_list, self.classes_names = CreateDataList_Detection(os.path.join(DataDir,'DataImage'),os.path.join(DataDir,'DataLabel'),train_split)

light_head_rcnn = LightHeadRCNNResNet(n_layers=self.n_layers, n_fg_class=len(self.classes_names),alpha=self.alpha, min_size=self.min_size, max_size=self.max_size)

light_head_rcnn.use_preset('evaluate')

self.model = FasterRCNNTrainChain(faster_rcnn)

if self.gpu_devices>=0:

self.model.to_gpu()

train = TransformDataset(VOCBboxDataset(data_list=train_data_list,classes_names=self.classes_names),Detection_Transform(self.model.coder, self.model.insize, self.model.mean))

self.train_iter = chainer.iterators.SerialIterator(train, self.batch_size)

test = VOCBboxDataset(data_list=self.val_data_list,classes_names=self.classes_names)

self.test_iter = chainer.iterators.SerialIterator(test, self.batch_size, repeat=False, shuffle=False)

3.模型训练

这里与分类网络一样需要先理解chainer的工作原理:

![]() 从图中我们可以了解到,首先我们需要设置一个Trainer,这个可以理解为一个大大的训练板块,然后做一个Updater,这个从图中可以看出是把训练的数据迭代器和优化器链接到更新器中,实现对模型的正向反向传播,更新模型参数。然后还有就是Extensions,此处的功能是在训练的中途进行操作可以随时做一些回调(描述可能不太对),比如做一些模型评估,修改学习率,可视化验证集等操作。

从图中我们可以了解到,首先我们需要设置一个Trainer,这个可以理解为一个大大的训练板块,然后做一个Updater,这个从图中可以看出是把训练的数据迭代器和优化器链接到更新器中,实现对模型的正向反向传播,更新模型参数。然后还有就是Extensions,此处的功能是在训练的中途进行操作可以随时做一些回调(描述可能不太对),比如做一些模型评估,修改学习率,可视化验证集等操作。

因此我们只需要严格按照此图建设训练步骤基本上没有什么大问题,下面一步一步设置

设置优化器:

optimizer = optimizers.MomentumSGD(lr=learning_rate, momentum=0.9)

optimizer.setup(self.train_chain)

optimizer.add_hook(chainer.optimizer.WeightDecay(rate=0.0005))

设置update和trainer:

updater = StandardUpdater(self.train_iter, optimizer, device=self.gpu_devices)

trainer = chainer.training.Trainer(updater, (TrainNum, 'epoch'), out=ModelPath)

Extensions功能设置:

# 修改学习率

trainer.extend(

extensions.ExponentialShift('lr', 0.9, init=learning_rate),

trigger=chainer.training.triggers.ManualScheduleTrigger([50,80,150,200,280,350], 'epoch'))

# 每过一次迭代验证集跑一次

trainer.extend(

DetectionVOCEvaluator(self.test_iter, self.train_chain.model, use_07_metric=True, label_names=self.classes_names),

trigger=chainer.training.triggers.ManualScheduleTrigger([each for each in range(1,TrainNum)], 'epoch'))

# 可视化验证集效果

trainer.extend(Detection_VIS(

self.model,

self.val_data_list,

self.classes_names, image_size=self.image_size,

trigger=chainer.training.triggers.ManualScheduleTrigger([each for each in range(1,TrainNum)], 'epoch'),

device=self.gpu_devices,ModelPath=ModelPath,predict_score=0.5

))

# 模型保存

trainer.extend(

extensions.snapshot_object(self.model, 'Ctu_best_Model.npz'),

trigger=chainer.training.triggers.MaxValueTrigger('validation/main/map',trigger=chainer.training.triggers.ManualScheduleTrigger([each for each in range(1,TrainNum)], 'epoch')),

)

# 日志及文件输出

log_interval = 0.1, 'epoch'

trainer.extend(chainer.training.extensions.LogReport(filename='ctu_log.json',trigger=log_interval))

trainer.extend(chainer.training.extensions.observe_lr(), trigger=log_interval)

trainer.extend(extensions.dump_graph("main/loss", filename='ctu_net.net'))

最后配置完之后只需要一行代码即可开始训练

trainer.run()

6、模型预测

模型预测主要还是输入为opencv格式,在数据预处理之前与前面数据加载时做的操作一致就行,直接上代码:

三、训练预测代码

因为本代码是以对象形式编写的,因此调用起来也是很方便的,如下显示:

# ctu = Ctu_LightHeadRCNN('0',600,1000)

# ctu.InitModel(r'/home/ctu/Ctu_Project/DL_Project/DataDir/DataSet_Detection_YaoPian',train_split=0.9,batch_size=2,Pre_Model=None,alpha=2)

# ctu.train(TrainNum=500,learning_rate=0.0001,ModelPath='result_Model1')

ctu = Ctu_LightHeadRCNN(USEGPU='0')

ctu.LoadModel('./result_Model')

predictNum=1

predict_cvs = []

cv2.namedWindow("result", 0)

cv2.resizeWindow("result", 640, 480)

for root, dirs, files in os.walk(r'/home/ctu/Ctu_Project/DL_Project/DataDir/DataSet_Detection_YaoPian/test'):

for f in files:

if len(predict_cvs) >=predictNum:

predict_cvs.clear()

img_cv = ctu.read_image(os.path.join(root, f))

if img_cv is None:

continue

predict_cvs.append(img_cv)

if len(predict_cvs) == predictNum:

result = ctu.predict(predict_cvs,0.0)

print(result['time'])

for each_id in range(result['img_num']):

for each_bbox in result['bboxes_result'][each_id]:

print(each_bbox)

cv2.imshow("result", result['imges_result'][each_id])

cv2.waitKey()

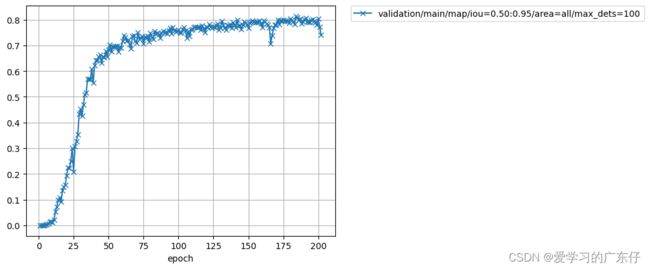

四、效果

训练时的可视化,数据是验证集数据(没有参与过训练的数据),效果 包含loss,map等指标如图:

总结

本文章主要是基于chainer的目标检测LightHeadRCNN的基本实现思路和步骤