Training LeNet on the MNIST Dataset

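This post builds a LeNet network with PyTorch and trains it on the MNIST dataset, reaching over 99% accuracy on the test set.

The implementation below is provided for reference: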

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

from torchvision import datasets, transforms

# LeNet-5 can reach 98% accuracy

print(torch.__version__)

Batch_Size = 256

EPOCH = 20

Learning_rate = 0.01

DEVICE = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')  # fall back to the CPU when no GPU is available

print(DEVICE)

# 1. Load the data

train_loader = torch.utils.data.DataLoader(
    datasets.MNIST('./dataset', train=True, download=True,
                   transform=transforms.Compose([
                       # Note: a horizontal flip does not preserve the identity of most digits
                       transforms.RandomHorizontalFlip(),
                       transforms.ToTensor(),
                       transforms.Normalize((0.1307,), (0.3081,))  # MNIST mean and std
                   ])),
    batch_size=Batch_Size, shuffle=True)

test_loader = torch.utils.data.DataLoader(
    datasets.MNIST('./dataset', train=False, transform=transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.1307,), (0.3081,))
    ])),
    batch_size=Batch_Size, shuffle=False)  # shuffling is unnecessary for evaluation

# 2. Define the network

class LeNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 6, kernel_size=5, stride=1, padding=0)
        self.conv2 = nn.Conv2d(6, 16, kernel_size=5, stride=1, padding=0)
        self.fc1 = nn.Linear(256, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):  # x: [B, 1, 28, 28]
        insize = x.size(0)  # insize = B
        x = self.conv1(x)  # x: [B, 6, 24, 24]
        x = F.relu(F.avg_pool2d(x, 2, 2))  # x: [B, 6, 12, 12]
        x = self.conv2(x)  # x: [B, 16, 8, 8]
        x = F.relu(F.avg_pool2d(x, 2, 2))  # x: [B, 16, 4, 4]
        x = x.view(insize, -1)  # x: [B, 256]
        x = F.relu(self.fc1(x))  # x: [B, 120]
        x = F.relu(self.fc2(x))  # x: [B, 84]
        x = self.fc3(x)  # x: [B, 10]; raw logits, since CrossEntropyLoss applies log-softmax itself
        return x

# 3. Create the model, optimizer, and loss function

model = LeNet().to(DEVICE)
optimizer = optim.SGD(model.parameters(), lr=Learning_rate, momentum=0.9)
criten = nn.CrossEntropyLoss().to(DEVICE)

# 4.训练模型

for epoch in range(EPOCH):

for batch_idx, (data, target) in enumerate(train_loader):

data, target = data.to(DEVICE), target.to(DEVICE)

output = model(data)

optimizer.zero_grad()

loss = criten(output, target)

loss.backward()

optimizer.step()

if (batch_idx + 1) % 30 == 0:

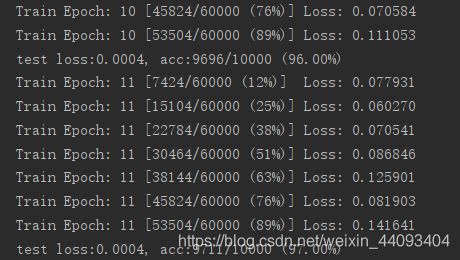

print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(

epoch, batch_idx * len(data), len(train_loader.dataset),

100. * batch_idx / len(train_loader), loss.item()))

# 每个EPOCH测试准确率

correct = 0

test_loss = 0

for data, target in test_loader:

data, target = data.to(DEVICE), target.to(DEVICE) # data: [B, 1, 32, 32] target: [B, 10]

output = model(data)

loss = criten(output, target)

test_loss += loss.item()

pred = output.argmax(dim=1) # pred: [B]

correct += pred.eq(target.data).sum()

test_loss /= len(test_loader.dataset)

print('test loss:{:.4f}, acc:{}/{} ({:.2f}%)'.format(

test_loss, correct, len(test_loader.dataset), 100 * correct // len(test_loader.dataset)

))

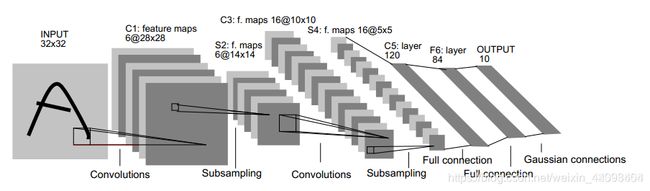

网络结论如下图所示:

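Note that when MNIST is loaded through the torchvision package, each input image is 28×28 rather than the 32×32 described in the original paper. The input to the first fully connected layer is therefore [batch_size, 16×4×4] instead of [batch_size, 16×5×5]. (I also tried setting padding=2 on the first convolutional layer to keep the spatial size unchanged, but the results were not good.)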
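
The feature-map sizes quoted above follow from the standard output-size formula for a convolution or pooling layer, out = floor((in + 2·padding − kernel) / stride) + 1. The following is a minimal sketch in plain Python (no PyTorch required) that traces these sizes through the layers used in this post. The helper names `out_size` and `flattened_features` are invented for illustration and are not part of any library.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a conv or pooling layer (uses floor division)."""
    return (size + 2 * padding - kernel) // stride + 1


def flattened_features(input_size, conv1_padding=0):
    """Trace conv1 -> pool -> conv2 -> pool and return the flattened length."""
    s = out_size(input_size, kernel=5, padding=conv1_padding)  # conv1, 5x5 kernel
    s = out_size(s, kernel=2, stride=2)                        # 2x2 average pooling
    s = out_size(s, kernel=5)                                  # conv2, 5x5 kernel
    s = out_size(s, kernel=2, stride=2)                        # 2x2 average pooling
    return 16 * s * s                                          # conv2 has 16 output channels


print(flattened_features(28))                   # 28x28 MNIST input from torchvision
print(flattened_features(32))                   # 32x32 input, as in the original paper
print(flattened_features(28, conv1_padding=2))  # 28x28 input with padding=2 on conv1
```

Under these assumptions the 28×28 input gives 256 features, which matches `nn.Linear(256, 120)`. The 32×32 input and the `padding=2` variant both give 400, so `fc1` would need 400 input features in either of those setups.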