Python: Logistic Regression on MNIST with TensorFlow 2.8

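TensorFlow 2.0 and later removed the MNIST tutorial utilities. You can still download the dataset through Keras, but the downloaded arrays have no next_batch() function, so code written for the old API does not work with them. Instead, we restore the files that `from tensorflow.examples.tutorials.mnist import input_data` depends on.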
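If you would rather not copy files into site-packages, a different route is to load the arrays with Keras and supply next_batch() yourself. The following is a minimal sketch under that assumption, not the original input_data code: `MiniBatcher` and `one_hot` are hypothetical names, and the class only imitates the behavior this post relies on (`next_batch(n)` returns an `(images, labels)` pair, and the data is reshuffled every epoch).

```python
import random
from typing import List, Sequence, Tuple


def one_hot(label: int, num_classes: int = 10) -> List[float]:
    """Turn an integer class label into a one-hot vector."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


class MiniBatcher:
    """Hypothetical stand-in for the removed DataSet.next_batch().

    Shuffles once per epoch and hands out consecutive slices; a batch that
    would run past the end of an epoch is topped up from the next epoch.
    """

    def __init__(self, images: Sequence, labels: Sequence, seed: int = 0):
        if len(images) != len(labels):
            raise ValueError("images and labels must have the same length")
        if len(images) == 0:
            raise ValueError("dataset is empty")
        self._images = images
        self._labels = labels
        self._rng = random.Random(seed)
        self._order = list(range(len(images)))
        self._rng.shuffle(self._order)
        self._pos = 0
        self.epochs_completed = 0

    def next_batch(self, batch_size: int) -> Tuple[list, list]:
        idx: List[int] = []
        while len(idx) < batch_size:
            if self._pos == len(self._order):
                # End of epoch: reshuffle and start again from the top
                self._rng.shuffle(self._order)
                self._pos = 0
                self.epochs_completed += 1
            take = min(batch_size - len(idx), len(self._order) - self._pos)
            idx.extend(self._order[self._pos:self._pos + take])
            self._pos += take
        return ([self._images[i] for i in idx],
                [self._labels[i] for i in idx])
```

With Keras you would call `tf.keras.datasets.mnist.load_data()`, reshape `x_train` to `(-1, 784)`, scale it by 1/255, and one-hot the labels with `one_hot()` before building the batcher; the two returned lists can then be passed to the placeholders through `feed_dict`. Topping up a batch across an epoch boundary is roughly what the legacy helper did as well.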

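First check whether the tensorflow package in your site-packages contains an examples folder. If it does not, copy the entire examples folder from the attachment into it. If it does, but the program reports that tutorials is missing, copy the tutorials folder from the attachment's examples folder into your tensorflow/examples directory. The folder must be named examples (plural) to match the import path tensorflow.examples.tutorials.mnist.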
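To find where your TensorFlow package lives and whether the tutorial module is already there, you can ask Python directly instead of browsing site-packages by hand. This is a sketch that assumes the attachment reproduces the TensorFlow 1.x layout `examples/tutorials/mnist/input_data.py`; `package_dir` and `has_mnist_tutorial` are hypothetical helper names.

```python
import importlib.util
import os
from typing import Optional


def package_dir(name: str) -> Optional[str]:
    """Return the directory of an installed package, or None if not found."""
    spec = importlib.util.find_spec(name)  # locates the package without importing it
    if spec is None or not spec.submodule_search_locations:
        return None
    return list(spec.submodule_search_locations)[0]


def has_mnist_tutorial(pkg_dir: str) -> bool:
    """True if pkg_dir/examples/tutorials/mnist/input_data.py exists (assumed layout)."""
    path = os.path.join(pkg_dir, "examples", "tutorials", "mnist", "input_data.py")
    return os.path.isfile(path)


if __name__ == "__main__":
    tf_dir = package_dir("tensorflow")
    if tf_dir is None:
        print("tensorflow is not installed in this environment")
    else:
        print("tensorflow lives in", tf_dir)
        print("mnist tutorial present:", has_mnist_tutorial(tf_dir))
```

Run it with the same interpreter that runs the training script: each virtual or conda environment has its own site-packages, so the folder has to go into that environment's tensorflow directory.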

1. Code Analysis

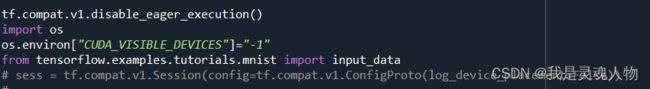

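This part selects whether computation runs on the CPU or a GPU. With os.environ["CUDA_VISIBLE_DEVICES"]="-1", TensorFlow sees no GPU; changing "-1" to 0, 1, 2, ... selects the corresponding GPU, although this example does not need one. Setting log_device_placement=True in the sess line makes TensorFlow log which device (CPU or GPU) each operation is placed on.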
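CUDA reads CUDA_VISIBLE_DEVICES when TensorFlow initializes its GPU devices, so the variable should be set before tensorflow is imported, or at the very least before any op runs. A small helper makes that ordering explicit; `select_devices` is a hypothetical name for illustration, and the warning is only a reminder, not a check TensorFlow itself performs.

```python
import os
import sys
import warnings


def select_devices(spec: str) -> None:
    """Choose which GPUs CUDA exposes to TensorFlow.

    spec: "-1" hides every GPU (CPU only); "0" selects the first GPU;
    "0,1" selects the first two.
    """
    if "tensorflow" in sys.modules:
        # TensorFlow may already have enumerated the GPUs, in which case
        # changing the variable now may have no effect.
        warnings.warn("set CUDA_VISIBLE_DEVICES before importing tensorflow")
    os.environ["CUDA_VISIBLE_DEVICES"] = spec


select_devices("-1")  # CPU only, which is enough for this example
# import tensorflow as tf  # import only after the variable is set
```

Native TensorFlow 2.x code also exposes both switches as API calls: `tf.config.set_visible_devices([], "GPU")` hides the GPUs, and `tf.debugging.set_log_device_placement(True)` corresponds to `log_device_placement=True`.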

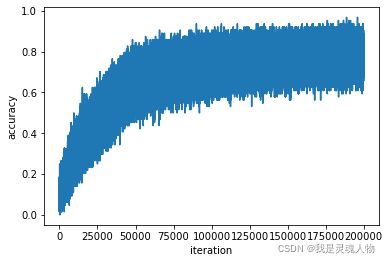

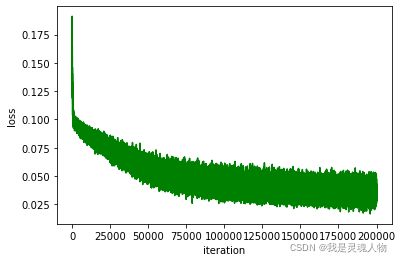

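I use two layers here because with a single layer the accuracy stayed below roughly 95%, while two layers raised it to about 99% (the accuracy the code reports is measured on training batches). The activation function is ReLU; in this example softmax gave the same result. I tried two optimizers and two loss functions (the alternatives are left commented out in the code), and the difference was small. Finally, the accuracy and loss of every iteration are saved for plotting.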
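The code's output layer is a ReLU trained with mean squared error, while the commented-out alternative feeds raw logits to softmax cross-entropy, which is the loss used by classic logistic (softmax) regression. The plain-Python sketch below computes both for a single three-class example so the difference is concrete; it is an illustration written for this post, not TensorFlow's implementation.

```python
import math
from typing import List


def relu(v: List[float]) -> List[float]:
    return [max(0.0, x) for x in v]


def softmax(v: List[float]) -> List[float]:
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]


def mse(target: List[float], pred: List[float]) -> float:
    """Mean squared error, like tf.reduce_mean(tf.square(y - y_pred))."""
    return sum((t - p) ** 2 for t, p in zip(target, pred)) / len(target)


def softmax_cross_entropy(target: List[float], logits: List[float]) -> float:
    """-sum(target * log(softmax(logits))) for one example, via log-sum-exp."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return -sum(t * (x - log_z) for t, x in zip(target, logits))


target = [0.0, 0.0, 1.0]   # one-hot label for class 2
logits = [1.0, -2.0, 3.0]  # raw scores from the last matmul
print(relu(logits))        # ReLU output head, as in the post's code
print(mse(target, relu(logits)))
print(softmax(logits))
print(softmax_cross_entropy(target, logits))
```

Softmax never changes which class has the largest score because it preserves order, which is consistent with the observation that swapping it in barely changes the argmax-based accuracy. ReLU, by contrast, maps every negative score to 0, so a row whose scores are all negative carries no information about the predicted class.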

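That is the final result. None of it is difficult, but TensorFlow 2.x differs from many older examples, so I am sharing the resources here. The complete script: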

```python
# -*- coding: utf-8 -*-
"""
Created on Mon Mar 28 22:18:35 2022

@author: peter
"""
import os

# "-1" hides all GPUs (CPU only); "0", "1", "2", ... select a GPU.
# Set this before TensorFlow initializes its devices.
os.environ["CUDA_VISIBLE_DEVICES"] = "0"

import datetime

import matplotlib.pyplot as plt
import tensorflow as tf

# Needs the examples/tutorials folder from the attachment (see above)
from tensorflow.examples.tutorials.mnist import input_data

# Use TF1-style graphs and sessions (placeholder / Session API)
tf.compat.v1.disable_eager_execution()

# =============================================================================
# Load the dataset with the legacy input_data helper (not Keras);
# one_hot=True turns the labels into one-hot vectors
# =============================================================================
mnists = input_data.read_data_sets('data/', one_hot=True)
train_examples = mnists.train.images  # one flattened 784-pixel image per row
train_labels = mnists.train.labels
test_examples = mnists.test.images
test_labels = mnists.test.labels

# =============================================================================
# Show a few sample images (uncomment to use)
# =============================================================================
nsample = 5
# for i in range(nsample):
#     cap_img = train_examples[i, :].reshape(28, 28)  # 784 pixels -> 28x28
#     plt.matshow(cap_img, cmap=plt.get_cmap('gray'))
#     plt.show()

# =============================================================================
# Build the model: 784 -> 10 (ReLU) -> 10 (ReLU)
# =============================================================================
batchSize = 64
numClass = 10
iterations = 200000
inputSize = 784

X = tf.compat.v1.placeholder(dtype=tf.float32, shape=[None, inputSize])
y = tf.compat.v1.placeholder(dtype=tf.float32, shape=[None, numClass])

# The bias shape belongs in tf.constant: the 2nd positional argument of
# Variable is `trainable`, so passing [numClass] there created a scalar bias.
W1 = tf.compat.v1.Variable(tf.compat.v1.random_normal([inputSize, numClass], stddev=0.1))
b1 = tf.compat.v1.Variable(tf.constant(0.1, shape=[numClass]))
h1 = tf.compat.v1.nn.relu(tf.matmul(X, W1) + b1)

W2 = tf.compat.v1.Variable(tf.compat.v1.random_normal([numClass, numClass], stddev=0.1))
b2 = tf.compat.v1.Variable(tf.constant(0.1, shape=[numClass]))
y_pred = tf.compat.v1.nn.relu(tf.matmul(h1, W2) + b2)

# Alternative: raw logits + softmax cross-entropy
# y_pred = tf.matmul(h1, W2) + b2
# loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=y_pred, labels=y))
loss = tf.reduce_mean(tf.square(y - y_pred))  # mean squared error

# opt = tf.compat.v1.train.AdamOptimizer().minimize(loss)
opt = tf.compat.v1.train.GradientDescentOptimizer(learning_rate=0.001).minimize(loss)

# Accuracy: fraction of rows whose predicted class (argmax) matches the label
correct_pre = tf.equal(tf.argmax(y_pred, 1), tf.argmax(y, 1))
accuracy = tf.reduce_mean(tf.cast(correct_pre, tf.float32))

# A single session; log_device_placement=True logs the device of every op
sess = tf.compat.v1.Session(config=tf.compat.v1.ConfigProto(log_device_placement=True))
sess.run(tf.compat.v1.global_variables_initializer())

result_acc = []
result_loss = []
curr1 = datetime.datetime.now()

for i in range(iterations):
    batchInput, batchLabels = mnists.train.next_batch(batchSize)
    # Accuracy on this training batch, measured before the update step
    accuracy_ = sess.run(accuracy, feed_dict={X: batchInput, y: batchLabels})
    _, loss_ = sess.run([opt, loss], feed_dict={X: batchInput, y: batchLabels})
    result_acc.append(accuracy_)
    result_loss.append(loss_)
    if i % 1000 == 0:
        print("iteration {}/{}......".format(i + 1, iterations),
              "loss {}......".format(loss_),
              "accuracy {}......".format(accuracy_))
        print("the process is {}".format(100 * i / iterations) + "%")

curr2 = datetime.datetime.now()
processing_time = curr2 - curr1
print("------------message------------")
print("the processing time is {}".format(processing_time))

# plt.figure() (lowercase) opens a new pyplot figure; plt.Figure() does not
plt.figure()
plt.plot(result_loss, color='g')
plt.xlabel("iteration")
plt.ylabel("loss")
plt.show()

plt.figure()
plt.plot(result_acc)
plt.xlabel("iteration")
plt.ylabel("accuracy")
plt.show()

print("------------message------------")
print("run successfully")
```
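The accuracy printed during training is computed on the current training batch, before that batch's update, so the roughly 99% figure is training accuracy. To check generalization, one could run `sess.run(accuracy, feed_dict={X: test_examples, y: test_labels})` once after the loop; all of those names come from the script. The plain-Python sketch below mirrors what `correct_pre` and `accuracy` compute; `argmax` and `batch_accuracy` are hypothetical names.

```python
from typing import Sequence


def argmax(row: Sequence[float]) -> int:
    """Index of the largest value; first index on ties (tf.argmax does not guarantee which)."""
    best = 0
    for k in range(1, len(row)):
        if row[k] > row[best]:
            best = k
    return best


def batch_accuracy(pred: Sequence[Sequence[float]],
                   labels: Sequence[Sequence[float]]) -> float:
    """Fraction of rows whose predicted class matches the one-hot label."""
    if len(pred) != len(labels) or not pred:
        raise ValueError("need the same, non-zero number of rows")
    hits = sum(argmax(p) == argmax(t) for p, t in zip(pred, labels))
    return hits / len(pred)


labels = [[0, 1, 0], [1, 0, 0], [0, 0, 1]]
pred = [[0.1, 2.0, 0.3],  # predicts class 1: correct
        [0.0, 0.0, 0.0],  # all-zero ReLU output: falls back to class 0
        [0.5, 0.2, 0.1]]  # predicts class 0: wrong
print(batch_accuracy(pred, labels))  # 2 of 3 rows match
```

The second row shows why an all-zero ReLU output is risky: its "prediction" is just a tie-break, and it happens to count as correct here only by luck.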

Attachment: tensorflow.example (deep learning document resources, CSDN Library): https://download.csdn.net/download/wenpeitao/85072580
