Android NDK Development: Deploying a Deep Neural Network Mask-Wearing Detection Model with JNI and NCNN

Preface

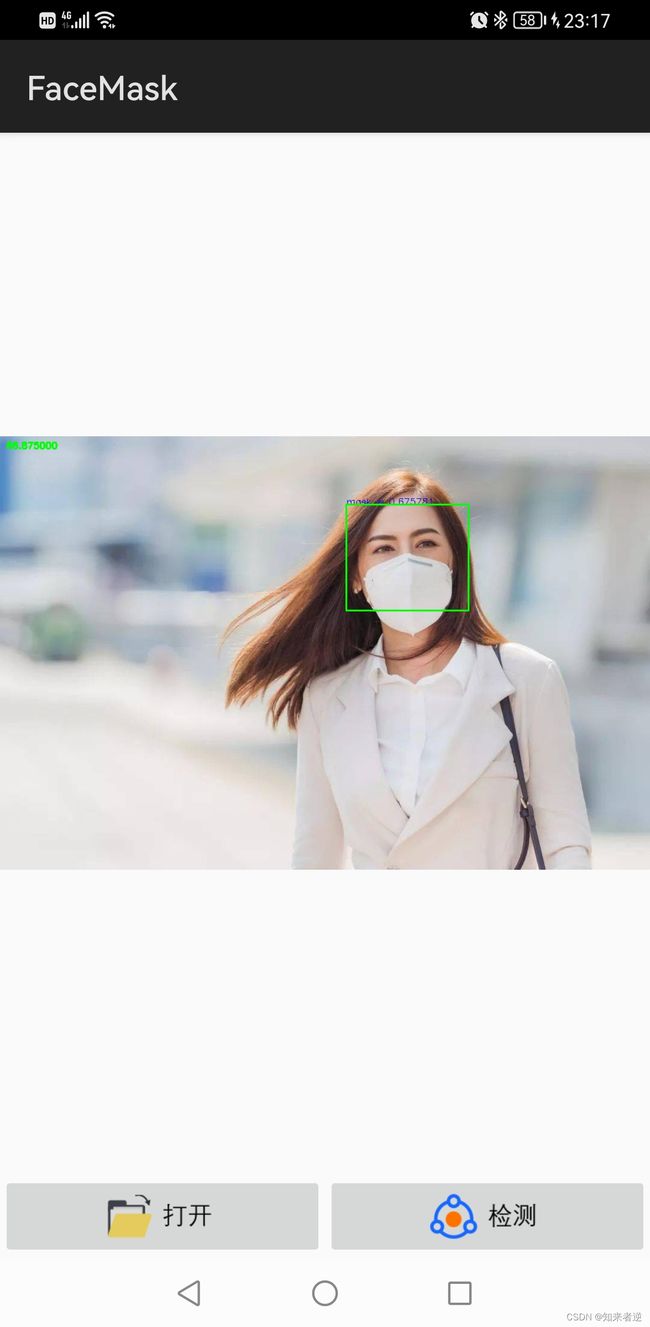

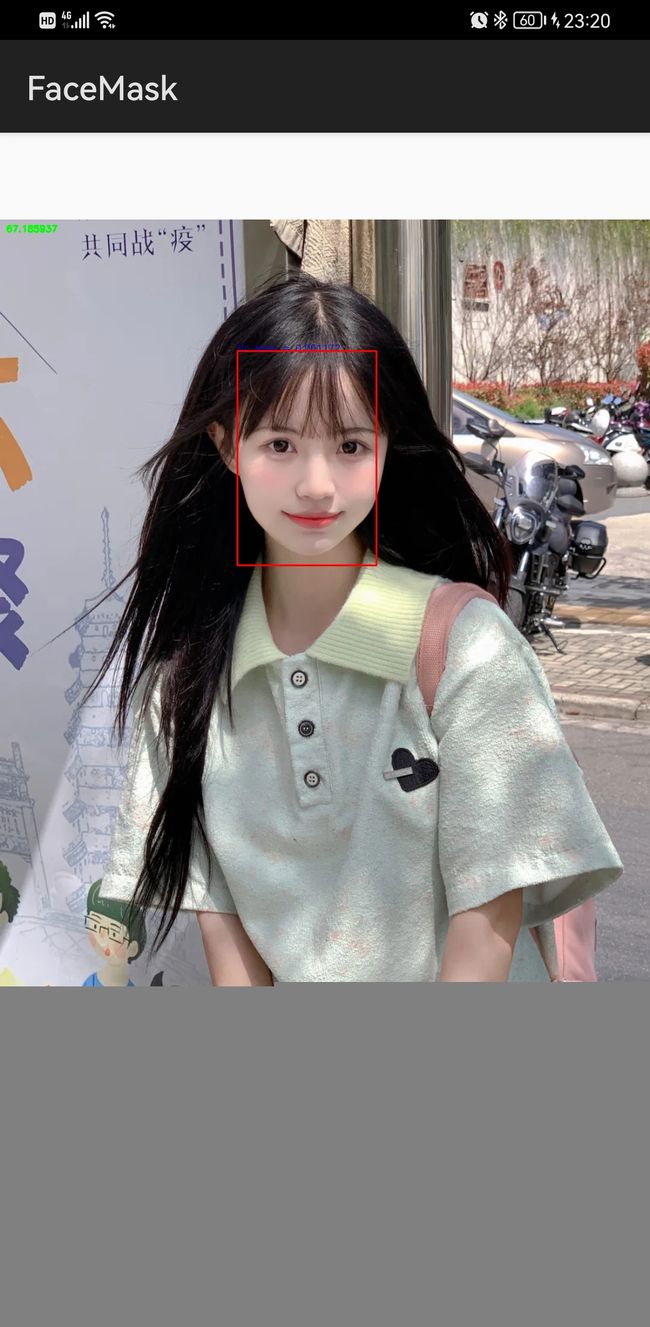

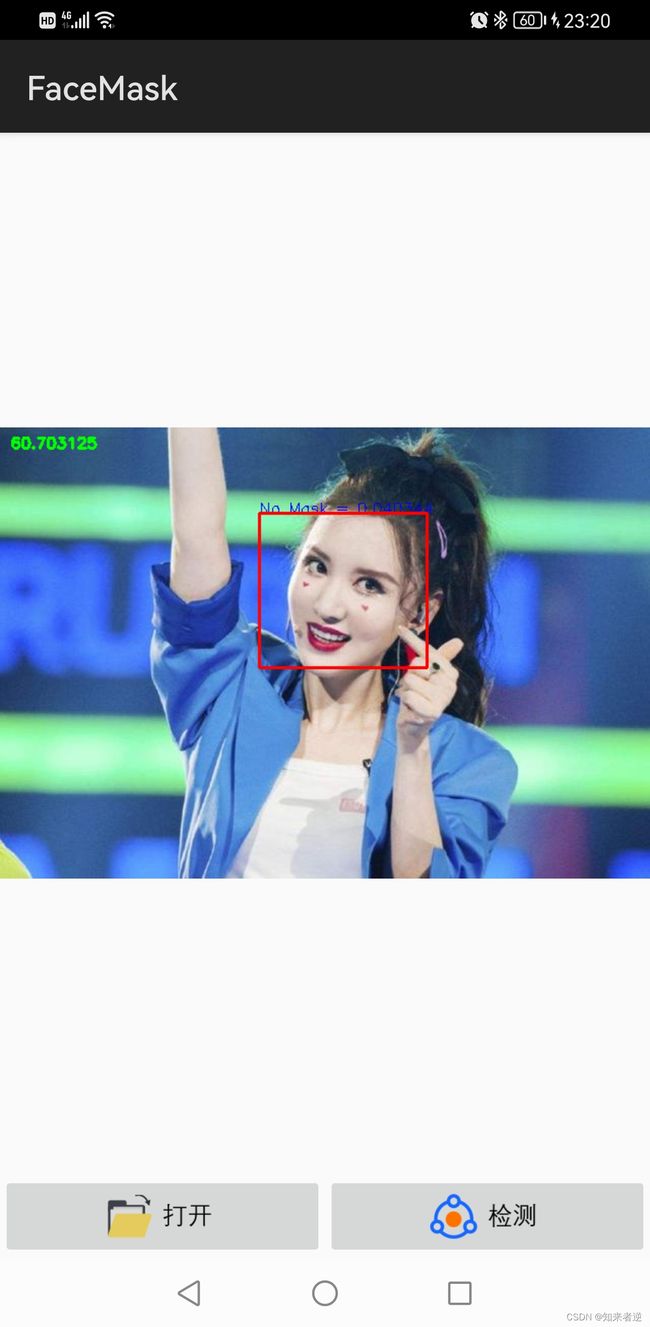

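1. This is an Android NDK project that detects whether a person is wearing a mask. It only checks whether there is a mask on the face. A model that also checks whether the mask is worn properly (for example, whether it covers both the mouth and the nose) and whether it is a standard medical mask is still being trained and optimized. Project source code: https://download.csdn.net/download/matt45m/85252239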

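2. The development environment is Windows 10, with Android Studio Arctic Fox as the IDE. The libraries used are NCNN and OpenCV. For NCNN you can use the prebuilt packages from the official releases, or build it yourself by following the official documentation. For OpenCV I use opencv-mobile, the slimmed-down build maintained by nihui, which is only a little over 10 MB; if size is not a concern, the official OpenCV release works as well. The test phone is a Mate 30 Pro, and performance was measured on both the CPU and the GPU.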

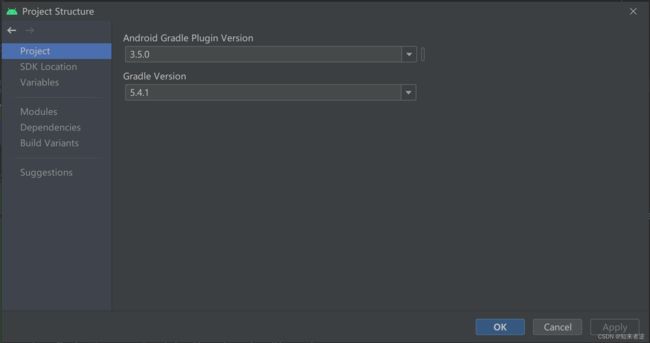

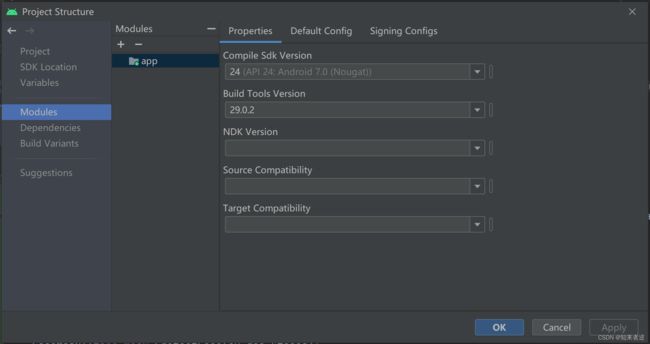

3. Dependency versions used in the project:

I. Model Training

1. The dataset is the open-source masked-face dataset at https://github.com/X-zhangyang/Real-World-Masked-Face-Dataset. Almost all of the masked samples in it show the mask worn properly.

2. The model is trained with YOLOv5-Lite. On mobile devices it keeps its accuracy, and when GPU acceleration is available it can run at more than 10 FPS.
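
A figure such as "more than 10 FPS" is easy to check on your own device with a small timing loop. The following is a minimal sketch, not code from this project: `FpsMeter`, `measureFps`, and the warm-up and run counts are hypothetical names and values chosen for illustration, and the `Runnable` stands in for one call to the detector.

```java
final class FpsMeter {
    /**
     * Runs the task `warmup` times without timing it, then `runs` times
     * with timing, and returns the average frames per second.
     */
    static double measureFps(Runnable task, int warmup, int runs) {
        if (runs <= 0) {
            throw new IllegalArgumentException("runs must be positive");
        }
        for (int i = 0; i < warmup; i++) {
            task.run(); // warm-up iterations are not timed
        }
        long start = System.nanoTime();
        for (int i = 0; i < runs; i++) {
            task.run();
        }
        // Guard against a zero interval for extremely cheap tasks.
        long elapsedNanos = Math.max(System.nanoTime() - start, 1L);
        return runs * 1e9 / elapsedNanos;
    }

    public static void main(String[] args) {
        // Placeholder workload: sleep 20 ms to imitate one inference call.
        double fps = measureFps(() -> {
            try {
                Thread.sleep(20);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, 2, 10);
        System.out.printf("%.1f FPS%n", fps);
    }
}
```

Running the same loop once with the CPU path and once with the GPU path gives comparable numbers, provided the warm-up count is large enough to absorb one-time initialization.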

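3. Export the trained model to ONNX first, simplify it, and then convert it to an NCNN model; the official NCNN documentation describes the steps. If that feels like too much trouble, you can instead convert the model with pnnx and go straight to NCNN from there.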

II. Project Deployment

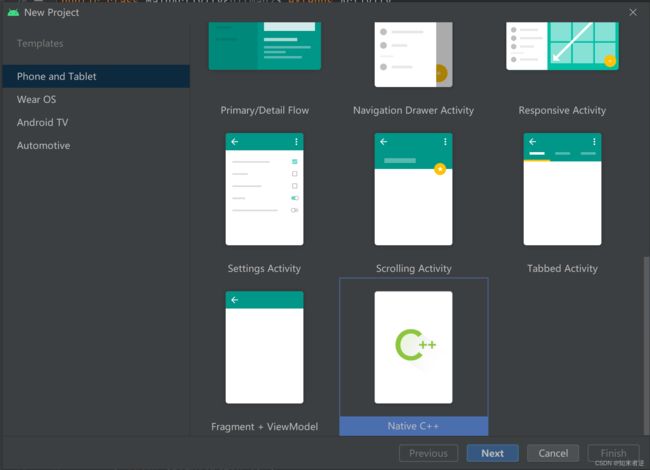

1. Create a Native C++ project

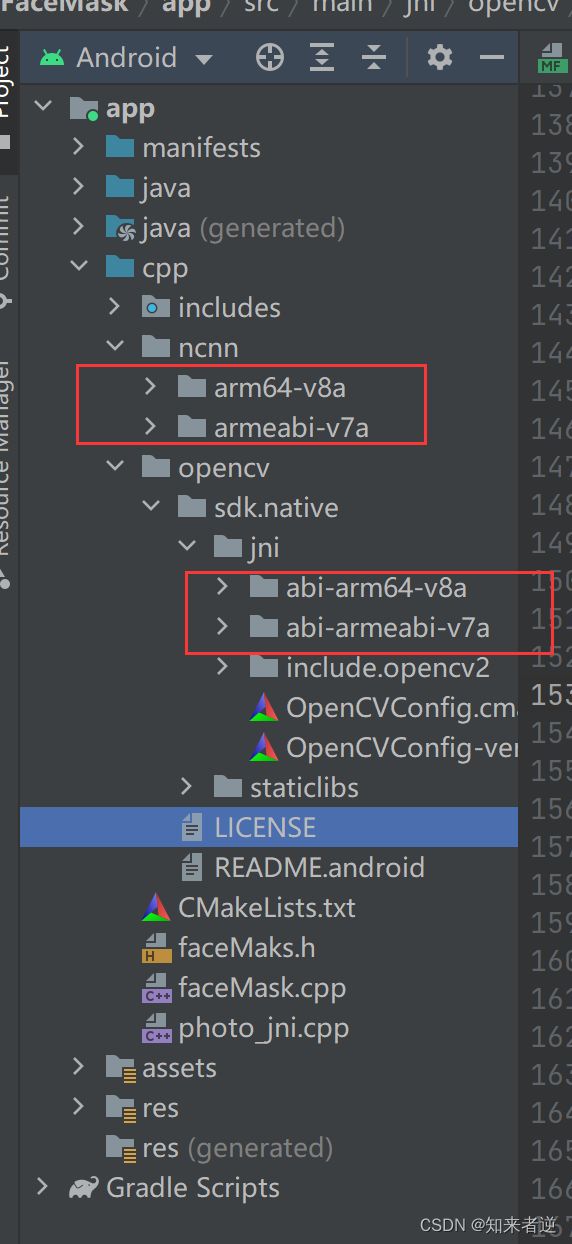

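2. Copy the downloaded NCNN and OpenCV .so libraries into the cpp directory. Only the arm64-v8a and armeabi-v7a builds are needed here, which makes the project much smaller. The trade-off is that the app cannot run on an Android emulator, but NCNN does not run well on an emulator anyway.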
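
Because only two ABIs are bundled, it can help to check at startup whether the device supports one of them before the native library is loaded. This is a minimal sketch under that assumption: `AbiCheck`, `BUNDLED_ABIS`, and `hasBundledAbi` are hypothetical names, not part of the project.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

final class AbiCheck {
    // The two ABIs this project ships native libraries for.
    static final Set<String> BUNDLED_ABIS =
            new HashSet<>(Arrays.asList("arm64-v8a", "armeabi-v7a"));

    /** Returns true if any ABI reported by the device has a bundled library. */
    static boolean hasBundledAbi(String[] deviceAbis) {
        for (String abi : deviceAbis) {
            if (BUNDLED_ABIS.contains(abi)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println(hasBundledAbi(new String[] {"arm64-v8a", "armeabi-v7a"}));
        System.out.println(hasBundledAbi(new String[] {"x86_64", "x86"}));
    }
}
```

On Android the argument would typically be `android.os.Build.SUPPORTED_ABIS`; the helper itself is plain Java so it can be tried outside an app.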

3. Add the inference code

3.1 faceMask.h

#ifndef _FACE_FACEMASK_H_
#define _FACE_FACEMASK_H_

#include "net.h"
#include

3.2 faceMask.cpp

#include "faceMask.h"
#include

3.3 Add code to the original jni.cpp file

#include

The C++ part of the code is now complete.
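
YOLO-style detectors such as the one trained above typically finish with non-maximum suppression (NMS), which keeps the highest-scoring box among heavily overlapping candidates. The following is a generic sketch of that step written in Java for readability; it is not this project's C++ code, and `Nms`, `Box`, `iou`, `suppress`, and the 0.45 threshold are hypothetical names and values. It treats all boxes as one class, which is an assumption; a per-class variant would run the same loop once per label.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

final class Nms {
    /** Axis-aligned box with corner coordinates and a confidence score. */
    static final class Box {
        final float x1, y1, x2, y2, score;

        Box(float x1, float y1, float x2, float y2, float score) {
            this.x1 = x1; this.y1 = y1; this.x2 = x2; this.y2 = y2;
            this.score = score;
        }

        float area() {
            return Math.max(0f, x2 - x1) * Math.max(0f, y2 - y1);
        }
    }

    /** Intersection over union of two boxes, in the range [0, 1]. */
    static float iou(Box a, Box b) {
        float iw = Math.max(0f, Math.min(a.x2, b.x2) - Math.max(a.x1, b.x1));
        float ih = Math.max(0f, Math.min(a.y2, b.y2) - Math.max(a.y1, b.y1));
        float inter = iw * ih;
        float union = a.area() + b.area() - inter;
        return union <= 0f ? 0f : inter / union;
    }

    /** Greedy NMS: visit boxes by descending score, drop those overlapping a kept box. */
    static List<Box> suppress(List<Box> boxes, float iouThreshold) {
        List<Box> sorted = new ArrayList<>(boxes);
        sorted.sort(Comparator.comparingDouble((Box b) -> b.score).reversed());
        List<Box> kept = new ArrayList<>();
        for (Box candidate : sorted) {
            boolean overlapsKept = false;
            for (Box k : kept) {
                if (iou(candidate, k) > iouThreshold) {
                    overlapsKept = true;
                    break;
                }
            }
            if (!overlapsKept) {
                kept.add(candidate);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        List<Box> boxes = new ArrayList<>();
        boxes.add(new Box(0, 0, 10, 10, 0.9f));
        boxes.add(new Box(1, 1, 11, 11, 0.8f));   // overlaps the first box heavily
        boxes.add(new Box(20, 20, 30, 30, 0.7f)); // separate face
        System.out.println(suppress(boxes, 0.45f).size()); // prints 2
    }
}
```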

4. Write the CMake build file (CMakeLists.txt)

cmake_minimum_required(VERSION 3.4.1)
project(photo)

# Import the ncnn library
set(ncnn_DIR ${CMAKE_SOURCE_DIR}/ncnn/${ANDROID_ABI}/lib/cmake/ncnn)
find_package(ncnn REQUIRED)

# Import the OpenCV library
set(OpenCV_DIR ${CMAKE_SOURCE_DIR}/opencv/sdk/native/jni)
find_package(OpenCV REQUIRED core imgproc)

# Add the C++ source files
add_library(${PROJECT_NAME} SHARED faceMask.cpp mask_jni.cpp)

# Link the shared library against ncnn and OpenCV
target_link_libraries(${PROJECT_NAME} ncnn ${OpenCV_LIBS})

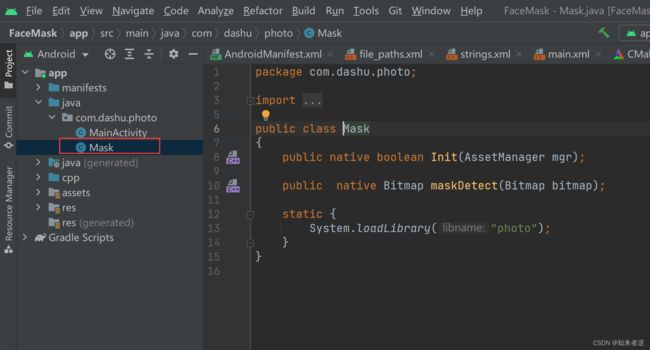

5. Add the Java class that declares the native methods

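package com.dashu.photo;

import android.content.res.AssetManager;
import android.graphics.Bitmap;

public class Mask
{
    // Loads the model from the APK assets; returns false on failure.
    public native boolean Init(AssetManager mgr);

    // Runs mask detection on the bitmap and returns the annotated result.
    public native Bitmap maskDetect(Bitmap bitmap);

    static {
        // Must match the library name produced by project(photo) in CMake.
        System.loadLibrary("photo");
    }
}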
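
Unless the native side registers its functions explicitly with `RegisterNatives`, the JVM looks up each `native` method by a symbol name derived from the package, class, and method name. The sketch below derives those names for the class above following the JNI specification's escaping rules; `JniNames`, `mangle`, and `nativeSymbol` are hypothetical helper names, and overloaded methods (which need an extra signature suffix) are not handled.

```java
final class JniNames {
    /** Escapes one name component the way JNI short symbol names require. */
    static String mangle(String name) {
        StringBuilder sb = new StringBuilder();
        for (char c : name.toCharArray()) {
            if (c == '.' || c == '/') {
                sb.append('_');            // package separator
            } else if (c == '_') {
                sb.append("_1");           // literal underscore
            } else if (c == ';') {
                sb.append("_2");
            } else if (c == '[') {
                sb.append("_3");
            } else if ((c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z')
                    || (c >= '0' && c <= '9')) {
                sb.append(c);
            } else {
                sb.append(String.format("_0%04x", (int) c)); // other characters
            }
        }
        return sb.toString();
    }

    /** Symbol the JVM searches for, given a class name and a native method name. */
    static String nativeSymbol(String className, String methodName) {
        return "Java_" + mangle(className) + "_" + mangle(methodName);
    }

    public static void main(String[] args) {
        System.out.println(nativeSymbol("com.dashu.photo.Mask", "Init"));
        System.out.println(nativeSymbol("com.dashu.photo.Mask", "maskDetect"));
    }
}
```

For this project the two printed names are `Java_com_dashu_photo_Mask_Init` and `Java_com_dashu_photo_Mask_maskDetect`; a C++ function exported under any other name is not found at call time and the call fails with `UnsatisfiedLinkError`.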

6. Java implementation code

package com.dashu.photo;

import android.app.Activity;
import android.content.Intent;
import android.graphics.Bitmap;
import android.graphics.BitmapFactory;
import android.net.Uri;
import android.os.Bundle;
import android.util.Log;
import android.view.View;
import android.widget.ImageButton;
import android.widget.ImageView;
import android.media.ExifInterface;
import android.graphics.Matrix;

import java.io.IOException;
import java.io.FileNotFoundException;

public class MainActivity extends Activity
{
    private static final int SELECT_IMAGE = 1;

    private ImageView imageView;
    private Bitmap bitmap = null;
    private Bitmap temp = null;
    private Bitmap showImage = null;
    private Bitmap bitmapCopy = null;
    private Bitmap dst = null;

    boolean useGPU = true;

    private Mask mask = new Mask();

    @Override
    public void onCreate(Bundle savedInstanceState)
    {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.main);

        imageView = (ImageView) findViewById(R.id.imageView);

        // Load the model through the native library
        boolean ret_init = mask.Init(getAssets());
        if (!ret_init)
        {
            Log.e("MainActivity", "Mask Init failed");
        }

        // Open an image
        ImageButton openFile = (ImageButton) findViewById(R.id.btn_open_images);
        openFile.setOnClickListener(new View.OnClickListener()
        {
            @Override
            public void onClick(View arg0)
            {
                Intent i = new Intent(Intent.ACTION_PICK);
                i.setType("image/*");
                startActivityForResult(i, SELECT_IMAGE);
            }
        });

        // Run detection on the image
        ImageButton image_gray = (ImageButton) findViewById(R.id.btn_detection);
        image_gray.setOnClickListener(new View.OnClickListener()
        {
            @Override
            public void onClick(View arg0)
            {
                if (showImage == null)
                {
                    return;
                }
                Bitmap bitmap = mask.maskDetect(showImage);
                imageView.setImageBitmap(bitmap);
            }
        });
    }

    @Override
    protected void onActivityResult(int requestCode, int resultCode, Intent data)
    {
        super.onActivityResult(requestCode, resultCode, data);

        if (resultCode == RESULT_OK && null != data) {
            Uri selectedImage = data.getData();
            try
            {
                if (requestCode == SELECT_IMAGE)
                {
                    bitmap = decodeUri(selectedImage);
                    showImage = bitmap.copy(Bitmap.Config.ARGB_8888, true);
                    bitmapCopy = bitmap.copy(Bitmap.Config.ARGB_8888, true);
                    imageView.setImageBitmap(bitmap);
                }
            }
            catch (FileNotFoundException e)
            {
                Log.e("MainActivity", "FileNotFoundException");
                return;
            }
        }
    }

    private Bitmap decodeUri(Uri selectedImage) throws FileNotFoundException
    {
        // Decode image size
        BitmapFactory.Options o = new BitmapFactory.Options();
        o.inJustDecodeBounds = true;
        BitmapFactory.decodeStream(getContentResolver().openInputStream(selectedImage), null, o);

        // The new size we want to scale to
        final int REQUIRED_SIZE = 640;

        // Find the correct scale value. It should be the power of 2.
        int width_tmp = o.outWidth, height_tmp = o.outHeight;
        int scale = 1;
        while (true) {
            if (width_tmp / 2 < REQUIRED_SIZE
                    || height_tmp / 2 < REQUIRED_SIZE) {
                break;
            }
            width_tmp /= 2;
            height_tmp /= 2;
            scale *= 2;
        }

        // Decode with inSampleSize
        BitmapFactory.Options o2 = new BitmapFactory.Options();
        o2.inSampleSize = scale;
        Bitmap bitmap = BitmapFactory.decodeStream(getContentResolver().openInputStream(selectedImage), null, o2);

        // Rotate according to EXIF
        int rotate = 0;
        try
        {
            ExifInterface exif = new ExifInterface(getContentResolver().openInputStream(selectedImage));
            int orientation = exif.getAttributeInt(ExifInterface.TAG_ORIENTATION, ExifInterface.ORIENTATION_NORMAL);
            switch (orientation) {
                case ExifInterface.ORIENTATION_ROTATE_270:
                    rotate = 270;
                    break;
                case ExifInterface.ORIENTATION_ROTATE_180:
                    rotate = 180;
                    break;
                case ExifInterface.ORIENTATION_ROTATE_90:
                    rotate = 90;
                    break;
            }
        }
        catch (IOException e)
        {
            Log.e("MainActivity", "ExifInterface IOException");
        }

        Matrix matrix = new Matrix();
        matrix.postRotate(rotate);
        return Bitmap.createBitmap(bitmap, 0, 0, bitmap.getWidth(), bitmap.getHeight(), matrix, true);
    }
}
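
The two pieces of arithmetic inside `decodeUri` can be tried outside Android. The sketch below restates them as plain static methods under stated assumptions: `ImageLoadMath`, `sampleSize`, and `rotationDegrees` are hypothetical names, and the orientation constants are the raw EXIF tag values, which `android.media.ExifInterface` exposes under the same `ORIENTATION_*` names.

```java
final class ImageLoadMath {
    // Raw EXIF orientation tag values (assumed equal to the ExifInterface constants).
    static final int ORIENTATION_ROTATE_180 = 3;
    static final int ORIENTATION_ROTATE_90 = 6;
    static final int ORIENTATION_ROTATE_270 = 8;

    /**
     * Largest power-of-two downsampling factor that keeps both sides
     * at or above requiredSize, mirroring the loop in decodeUri.
     */
    static int sampleSize(int width, int height, int requiredSize) {
        int scale = 1;
        while (width / 2 >= requiredSize && height / 2 >= requiredSize) {
            width /= 2;
            height /= 2;
            scale *= 2;
        }
        return scale;
    }

    /** Clockwise rotation in degrees needed to display the image upright. */
    static int rotationDegrees(int exifOrientation) {
        switch (exifOrientation) {
            case ORIENTATION_ROTATE_90:
                return 90;
            case ORIENTATION_ROTATE_180:
                return 180;
            case ORIENTATION_ROTATE_270:
                return 270;
            default:
                return 0; // normal or unsupported (mirrored) orientations
        }
    }

    public static void main(String[] args) {
        // 4000x3000 -> 2000x1500 -> 1000x750; halving again would drop below 640.
        System.out.println(sampleSize(4000, 3000, 640)); // prints 4
        System.out.println(rotationDegrees(ORIENTATION_ROTATE_90)); // prints 90
    }
}
```

With a required size of 640, a 4000x3000 photo is decoded at a quarter of its resolution, so the bitmap handed to the detector is 1000x750 rather than the full 12 megapixels.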

7. Layout file

<LinearLayout
    xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:tools="http://schemas.android.com/tools"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    android:orientation="vertical"
    tools:context=".MainActivity">

    <ImageView
        android:id="@+id/imageView"
        android:layout_width="match_parent"
        android:layout_height="0dp"
        android:layout_weight="1">
    </ImageView>

    <LinearLayout
        android:layout_width="match_parent"
        android:layout_height="wrap_content"
        android:orientation="horizontal">

        <ImageButton
            android:id="@+id/btn_open_images"
            android:layout_width="0dp"
            android:layout_height="wrap_content"
            android:layout_weight="1"
            android:src="@mipmap/open_images" />

        <ImageButton
            android:id="@+id/btn_detection"
            android:layout_width="0dp"
            android:layout_height="wrap_content"
            android:layout_weight="1"
            android:src="@mipmap/detection" />

    </LinearLayout>

</LinearLayout>