MNIST Handwritten Digit Classification with the LeNet Convolutional Neural Network

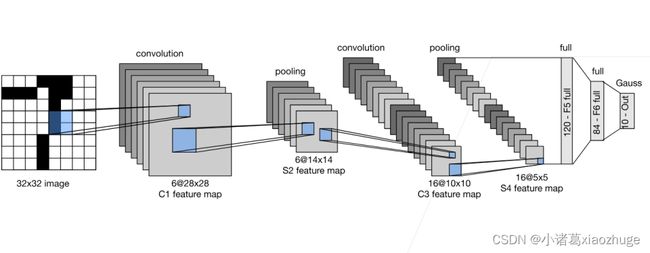

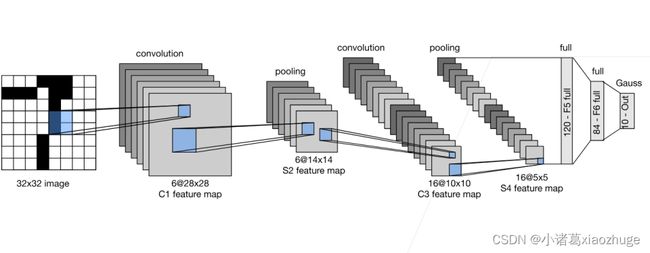

1. LeNet Model Overview

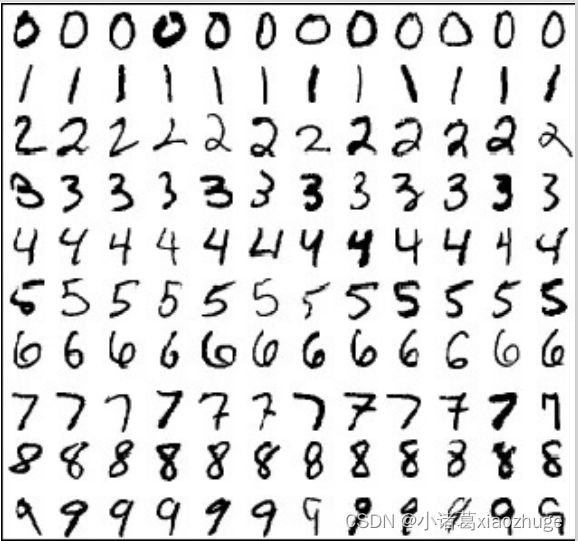

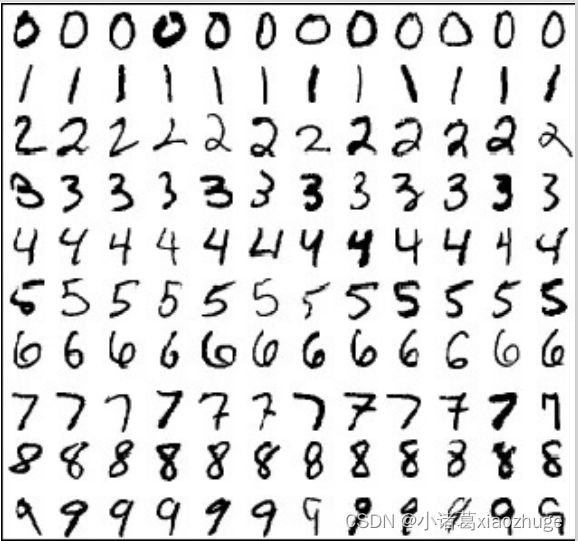

2. The MNIST Handwritten Digit Dataset

- 60,000 training images

- 10,000 test images

- Each image is 28×28 pixels, single-channel (grayscale)

3. Code

import time

import torch
import torchvision
from torch import nn
from torch.utils.data import DataLoader
from torchvision import transforms

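# Check the installed PyTorch version and how many GPUs are visible.
print(torch.__version__)
print(torch.cuda.device_count())

# Notebook shell command (Colab/Jupyter only): show GPU status.
!nvidia-smi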

# Colab only: mount Google Drive and make the project folder importable.
from google.colab import drive
drive.mount("/content/drive")

import sys
sys.path.append("/content/drive/MyDrive/mnist")

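class LeNet(nn.Module):
    """LeNet-style CNN for 1x28x28 inputs with 10 output classes."""

    def __init__(self):
        super().__init__()
        # Note: no activation functions are used between layers here;
        # the classic LeNet applies one after each conv/linear layer.
        self.model = nn.Sequential(
            nn.Conv2d(1, 6, kernel_size=5),    # 1x28x28 -> 6x24x24
            nn.MaxPool2d(kernel_size=2),       # 6x24x24 -> 6x12x12
            nn.Conv2d(6, 16, kernel_size=5),   # 6x12x12 -> 16x8x8
            nn.MaxPool2d(kernel_size=2),       # 16x8x8  -> 16x4x4
            nn.Flatten(),                      # 16x4x4  -> 256
            nn.Linear(16 * 4 * 4, 120),
            nn.Linear(120, 84),
            nn.Linear(84, 10),                 # one logit per digit class
        )

    def forward(self, x):
        return self.model(x)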
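The `16*4*4` input size of the first linear layer follows from the layer shapes. Below is a minimal sketch of that arithmetic in plain Python, assuming stride-1, unpadded convolutions and non-overlapping pooling (the PyTorch defaults the model relies on). The helper names `conv_out` and `pool_out` are illustrative and not part of any library.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output size of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel):
    """Output size of non-overlapping pooling (stride equals kernel size)."""
    return (size - kernel) // kernel + 1


size = 28                    # MNIST images are 28x28
size = conv_out(size, 5)     # Conv2d(1, 6, kernel_size=5)
size = pool_out(size, 2)     # MaxPool2d(kernel_size=2)
size = conv_out(size, 5)     # Conv2d(6, 16, kernel_size=5)
size = pool_out(size, 2)     # MaxPool2d(kernel_size=2)

# 16 output channels, each of size x size, are flattened into one vector.
flat_features = 16 * size * size
print(size, flat_features)
```

If the kernel sizes or the input resolution change, rerunning this calculation gives the value to pass to the first `nn.Linear`.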

# Training split; downloaded to ../data on first use.
train_datasets = torchvision.datasets.MNIST(
    root=r'../data',
    train=True,
    download=True,
    transform=transforms.ToTensor(),
)
# shuffle is left at its default (False).
train_dataloader = DataLoader(
    dataset=train_datasets,
    batch_size=64,
)

# Test split.
test_datasets = torchvision.datasets.MNIST(
    root=r'../data',
    train=False,
    download=True,
    transform=transforms.ToTensor(),
)
test_dataloader = DataLoader(
    dataset=test_datasets,
    batch_size=64,
)
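With a batch size of 64, the number of batches per epoch can be worked out by hand. The sketch below assumes the standard MNIST split sizes (60,000 training and 10,000 test images) and the `DataLoader` default of keeping the final partial batch; the variable names are illustrative.

```python
import math

batch_size = 64
train_size, test_size = 60_000, 10_000  # assumed standard MNIST split sizes

# The final partial batch is kept by default, so round up.
train_batches = math.ceil(train_size / batch_size)
test_batches = math.ceil(test_size / batch_size)

# Size of the last (partial) batch in each split.
last_train_batch = train_size - (train_batches - 1) * batch_size
last_test_batch = test_size - (test_batches - 1) * batch_size

print(train_batches, test_batches, last_train_batch, last_test_batch)
```

Under these assumptions, one epoch is 938 training batches, so a message printed every 200 training steps appears four or five times per epoch.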

# Colab only: enable, then disable, the custom widget manager.
from google.colab import output
output.enable_custom_widget_manager()
output.disable_custom_widget_manager()

train_datasets_size = len(train_datasets)
test_datasets_size = len(test_datasets)
print("Training set size: {}".format(train_datasets_size))
print("Test set size: {}".format(test_datasets_size))

# Use the GPU when requested and available; otherwise fall back to the CPU.
running_mode = "gpu"
if running_mode == "gpu" and torch.cuda.is_available():
    print("use cuda")
    device = torch.device("cuda")
else:
    print("use cpu")
    device = torch.device("cpu")

model = LeNet()
model.to(device)

loss_fn = nn.CrossEntropyLoss()
loss_fn.to(device)

learning_rate = 1e-2
optim = torch.optim.SGD(model.parameters(), lr=learning_rate)

epoch = 10                    # number of training epochs
train_step, test_step = 0, 0  # global batch counter, evaluation counter
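`nn.CrossEntropyLoss` takes raw logits and, for a single sample, computes the negative log-softmax probability of the target class. The plain-Python sketch below reproduces that per-sample value to make the training loss easier to interpret; `cross_entropy` is an illustrative helper, not the PyTorch implementation.

```python
import math


def cross_entropy(logits, target):
    """Negative log-softmax of the target class for one sample."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


# With 10 classes and identical logits, every class has probability 1/10.
uniform_loss = cross_entropy([0.0] * 10, target=3)

# A large logit on the correct class drives the loss toward zero.
confident_loss = cross_entropy([0.0] * 3 + [10.0] + [0.0] * 6, target=3)

print(uniform_loss, confident_loss)
```

A loss near ln(10) ≈ 2.30 therefore means the model is doing no better than guessing uniformly among the ten digits.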

for i in range(epoch):
    print("~~~~~~~~~~~~ Epoch {} training starts ~~~~~~~~~~~".format(i + 1))
    start = time.time()

    # Training phase
    model.train()
    for data in train_dataloader:
        imgs, targets = data
        imgs, targets = imgs.to(device), targets.to(device)
        logits = model(imgs)
        loss = loss_fn(logits, targets)

        optim.zero_grad()
        loss.backward()
        optim.step()

        train_step += 1
        if train_step % 200 == 0:
            print("Training step {}, loss={:.3f}".format(train_step, loss.item()))

    # Evaluation phase
    model.eval()
    with torch.no_grad():
        test_loss, true_num = 0.0, 0
        for data in test_dataloader:
            imgs, targets = data
            imgs, targets = imgs.to(device), targets.to(device)
            logits = model(imgs)
            # Sum of per-batch mean losses, not a per-sample average.
            test_loss += loss_fn(logits, targets).item()
            true_num += (logits.argmax(1) == targets).sum().item()

    end = time.time()
    print("Evaluation {}: test loss: {:.3f}, accuracy: {:.3f}%, time: {:.3f}s".format(
        test_step + 1, test_loss, 100 * true_num / test_datasets_size, end - start))
    test_step += 1
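The evaluation loop above adds up one mean loss per batch, so the reported test loss grows with the number of batches. If a per-sample average is preferred, each batch mean can be weighted by its batch size. The sketch below shows this with made-up numbers; `average_loss` and the sample values are hypothetical and are not results from this model.

```python
def average_loss(batch_mean_losses, batch_sizes):
    """Per-sample average loss from per-batch mean losses."""
    total = sum(loss * n for loss, n in zip(batch_mean_losses, batch_sizes))
    return total / sum(batch_sizes)


# Hypothetical values: two full batches of 64 and a final partial batch of 16.
batch_mean_losses = [0.5, 0.3, 0.1]
batch_sizes = [64, 64, 16]

summed = sum(batch_mean_losses)                         # what the loop above reports
averaged = average_loss(batch_mean_losses, batch_sizes)

print(summed, averaged)
```

The weighting matters only for the last partial batch; dividing the summed loss by the number of batches gives nearly the same figure when all batches but the last are full.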

4. Results