深度学习(1) 线性回归问题实战

1.步骤

(1)根据随机初始化的w,x,b,y的数值来计算Loss Function;

(2)根据当前的w,x,b,y的值来计算梯度;

(3)更新梯度,将w’赋值给w,如此循环往复;

(4)最后的w’和b’会作为模型的参数

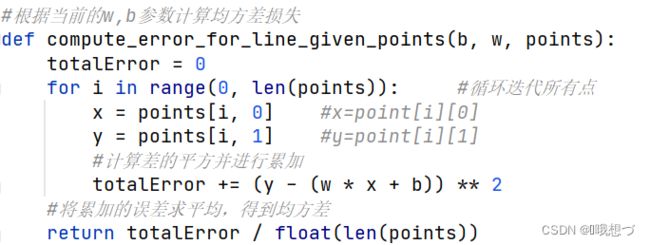

(1)计算误差

循环计算在每个点(xi,yi)处的预测值与真实值之间差的平方并累加,从而获得训练集上的均方差损失值。即Loss= ∑ i \sum \limits_{i} i∑(w*xi+b-yi) 2 ^2 2。具体实现如下:

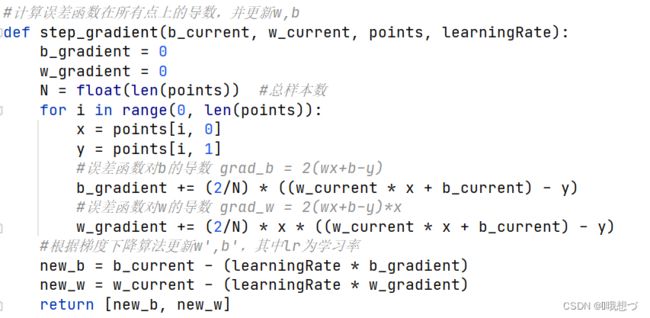

(2)计算梯度

根据梯度下降算法,我们需要计算出函数在每一个点上的梯度信息:

∂ l ∂ w = ∂ 1 n ∑ i = 1 n ( w ∗ x i + b − y i ) 2 ∂ w = 1 n ∑ i = 1 n ∂ ( w ∗ x i + b − y i ) 2 ∂ w = 2 n ∑ i = 1 n ( w ∗ x i + b − y i ) ∗ x i \dfrac{\partial l}{\partial w}=\dfrac{\partial {\dfrac{1}{n}}\sum \limits_{i=1}^n(w*x_i+b-y_i)^2}{\partial w} =\dfrac{1}{n}\sum\limits_{i=1}^n\dfrac{\partial (w*x_i+b-y_i)^2}{ \partial w}=\dfrac{2}{n}\sum\limits_{i=1}^n(w*x_i+b-y_i)*x_i ∂w∂l=∂w∂n1i=1∑n(w∗xi+b−yi)2=n1i=1∑n∂w∂(w∗xi+b−yi)2=n2i=1∑n(w∗xi+b−yi)∗xi

同理可推导出偏导数

∂ l ∂ b = 2 n ∑ i = 1 n ( w ∗ x i + b − y i ) \dfrac{\partial l}{\partial b}=\dfrac{2}{n}\sum\limits_{i=1}^n(w*x_i+b-y_i) ∂b∂l=n2i=1∑n(w∗xi+b−yi)

具体实现如下:

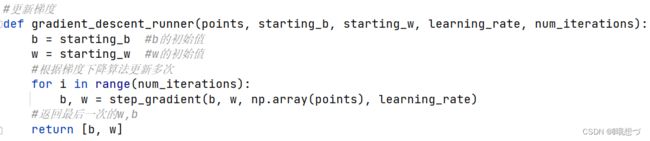

(3)梯度更新

我们把对数据集的所有样本训练一次称为一个Epoch,共循环迭代num_iterations个Epoch。具体实现如下:

计算出最终的w和b的值就可以带入模型进行预测了

计算出最终的w和b的值就可以带入模型进行预测了

2.全部代码

import numpy as np

#根据当前的w,b参数计算均方差损失

def compute_error_for_line_given_points(b, w, points):

totalError = 0

for i in range(0, len(points)): #循环迭代所有点

x = points[i, 0] #x=point[i][0]

y = points[i, 1] #y=point[i][1]

#计算差的平方并进行累加

totalError += (y - (w * x + b)) ** 2

#将累加的误差求平均,得到均方差

return totalError / float(len(points))

#计算误差函数在所有点上的导数,并更新w,b

def step_gradient(b_current, w_current, points, learningRate):

b_gradient = 0

w_gradient = 0

N = float(len(points)) #总样本数

for i in range(0, len(points)):

x = points[i, 0]

y = points[i, 1]

#误差函数对b的导数 grad_b = 2(wx+b-y)

b_gradient += (2/N) * ((w_current * x + b_current) - y)

#误差函数对w的导数 grad_w = 2(wx+b-y)*x

w_gradient += (2/N) * x * ((w_current * x + b_current) - y)

#根据梯度下降算法更新w',b',其中lr为学习率

new_b = b_current - (learningRate * b_gradient)

new_w = w_current - (learningRate * w_gradient)

return [new_b, new_w]

#更新梯度

def gradient_descent_runner(points, starting_b, starting_w, learning_rate, num_iterations):

b = starting_b #b的初始值

w = starting_w #w的初始值

#根据梯度下降算法更新多次

for i in range(num_iterations):

b, w = step_gradient(b, w, np.array(points), learning_rate)

#返回最后一次的w,b

return [b, w]

def run():

points = np.genfromtxt("data.csv", delimiter=",")

learning_rate = 0.0001 #学习率

initial_b = 0 #初始化b=0

initial_w = 0 #初始化w=0

num_iterations = 1000

#训练优化1000次,返回最优的w'和b'

print("Starting gradient descent at b = {0}, w = {1}, error = {2}"

.format(initial_b, initial_w,

compute_error_for_line_given_points(initial_b, initial_w, points))

)

print("Running...")

[b, w] = gradient_descent_runner(points, initial_b, initial_w, learning_rate, num_iterations)

print("After {0} iterations b = {1}, w = {2}, error = {3}".

format(num_iterations, b, w,

compute_error_for_line_given_points(b, w, points))

)

if __name__ == '__main__':

run()

经过1000次的迭代更新后,保存最后的w和b值,此时的w和b就是我们要找的w和b数值解。运行结果如下:

![]() 可以看到,在 w=0,b=0的时候,损失值 error≈5565.11;

可以看到,在 w=0,b=0的时候,损失值 error≈5565.11;

经过1000次迭代后, w ≈1.48,b≈0.09,损失值 error≈ 112.61,远远小于原来的损失值。