【论文总结】 Multi-source Domain Adaptation (持续更新)

提示:文章写完后,目录可以自动生成,如何生成可参考右边的帮助文档

文章目录

-

-

- Single-source DA vs Multi-source DA

- Paper I: Aligning Domain-specific Distribution and Classifier for Cross-domain Classification from Multiple Sources (AAAI'19)

-

- Problem Formulation

- Methodology

- Two-stage alignment Framework

-

- Common feature extractor

- Domain-specific feature extractor

- Domain-specific feature extractor

- Two Alignment

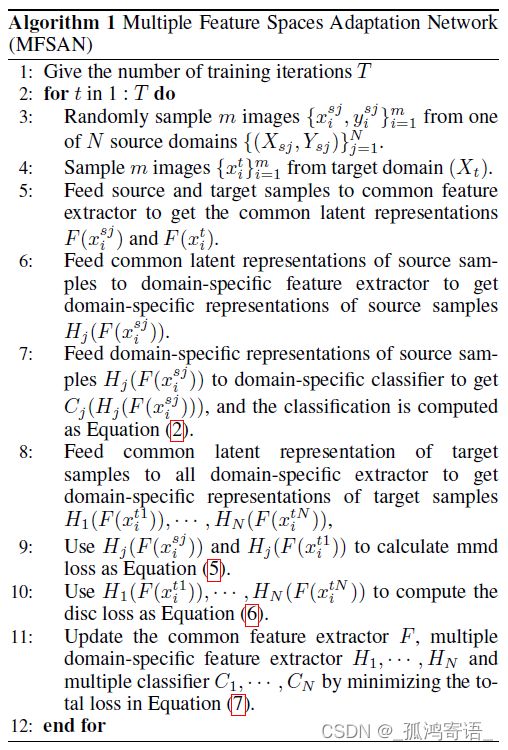

- Overall Multiple Feature Spaces Adaptation Network (MFSAN)

- Paper II: Multi-source Distilling Domain Adaptation(AAAI' 20)

-

- Motivation

- Problem Formulation

-

- Objective

- MDDA Framework

-

- Source Classifier Pre-training (Step 1)

- Adversarial Discriminative Adaptation (Step 2)

- Source Distilling (Step 3)

- Aggregated Target Prediction (Step 4)

-

Single-source DA vs Multi-source DA

SUDA

- labeled data is from one single source domain

- 常用solution: learn to map the data from source & target domains into a common feature space to learn domain-invariant representations by minimizing domain distribution discrepancy (MMD/)

MUDA

- shift between multiple source domains (hard to align)

- 有时候 domain 之间拥有的 class 范围甚至不同

- 在domain-specific decision boundary 附近的 target samples 可能会被不同的classifier 判别出不同的labels

- 常用sol:two-stage alignments

– stage I: map each pair of source and target domains data into multiple different feature spaces --> align domain-specific distributions to learn multiple domain-invariant representations --> train multiple domain-specific classifiers using multiple domains-invariant representations

– stage II: aligning domain-specific classifiers

Paper I: Aligning Domain-specific Distribution and Classifier for Cross-domain Classification from Multiple Sources (AAAI’19)

Problem Formulation

Given

- N N N different underlying source distributions { p s j ( x , y ) } j = 1 N \{p_{sj}(x,y)\}_{j=1}^{N} {psj(x,y)}j=1N and labeled source domain data { ( X s j , Y s j ) } j = 1 N \{(X_{sj}, Y_{sj})\}_{j=1}^{N} {(Xsj,Ysj)}j=1N drawn from these distributions

- target distribution { p t ( x , y ) } \{p_{t}(x,y)\} {pt(x,y)} , from which target domain data X t X_t Xt are sampled yet without label observation Y t Y_t Yt .

Objective

Methodology

Two-stage alignment Framework

Common feature extractor

A common subnetwork f ( . ) f(.) f(.) is used to extract common representations for all domains, which map the images from the original feature space into a common feature space.

Domain-specific feature extractor

Given:

- x s j x^{sj} xsj from source domain ( X s j , Y s j ) (X_{sj}, Y_{sj}) (Xsj,Ysj), x t x^{t} xt from target domain X t X^{t} Xt

- N N N unshared domain-specific subnetworks h g ( . ) h_{g}(.) hg(.) for each source domain ( X s j , Y s j ) (X_{sj}, Y_{sj}) (Xsj,Ysj), which map each pair of source and target domains into a specific feature space

- These domain-specific feature extractors receive the common features f ( x s j ) f(x^{sj}) f(xsj) and f ( x t ) f(x^{t}) f(xt) from common feature extractor f ( . ) f(.) f(.)

- Use the MMD/adversarial/CORAL loss method to reduce the distribution discrepancy between domains.

Domain-specific feature extractor

- C C C is a multi-output net composed by N N N domain-specific predictor { C j } j = 1 N \{C_j\}_{j=1}^N {Cj}j=1N.

- For each classifier, we add a classification loss using cross entropy

Two Alignment

- Domain-specific Distribution Alignment: MMD

- Domain-specific Classifier Alignment:

- Intuition: the same target sample predicted by different classifiers should get the same prediction

- Utilize the absolute values of the difference between all pairs of classifiers’ probabilistic outputs of target domain data as discrepancy loss

Overall Multiple Feature Spaces Adaptation Network (MFSAN)

注意: 计算 Domain-specific Classifier Alignment loss 用的是target samples

Paper II: Multi-source Distilling Domain Adaptation(AAAI’ 20)

Motivation

Limitations of the state-of-the-art MDA methods:

- Sacrifice the discriminative property of the extracted features for the desired task learner in order to learn domain invariant features

- Treat the multiple sources equally and fail to consider the different discrepancy among sources and target, as illustrated in Figure 1. Such treatment may lead to suboptimal performance when some sources are very different from the target (Zhao et al. 2018a).

- Treat different samples from each source equally, without distilling the source data based on the fact that different samples from the same source domain may have different similarities from the target.

- The adversarial learning based methods suffer from vanishing gradient problem when the domain classifier network can perfectly distinguish target representations from the source ones.

Problem Formulation

Given

- M M M different labeled source domains S 1 , S 2 . . . S M S_1, S_2... S_M S1,S2...SM and a fully unlabeled target domain T T T

- Homogeneity: data from different domains are observed in the same feature space but exhibit different distributions

- Close set: All the domains share their categories in class label space

Objective

To learn an adaptation model that can correctly predict a sample from the target domain based on { ( X i , Y i ) } i = 1 M \{(X_i, Y_i)\}_{i=1}^M {(Xi,Yi)}i=1M and { X T } \{X_T\} {XT}

MDDA Framework

Source Classifier Pre-training (Step 1)

- Pre-train a feature extractor F i F_i Fi and classifier C i C_i Ci for each labeled source domain S i S_i Si with unshared weights between different domains. F i F_i Fi and C i C_i Ci are optimized by minimizing the following cross-entropy loss.

- Comparing with a shared feature extractor network, the unshared feature extractor network can obtain the discriminative feature representations and accurate classifiers for each source domain.

Adversarial Discriminative Adaptation (Step 2)

- Fix feature extractor F i F_i Fi.

- Learn a separate target encoder F i T F_i^T FiT to map the target feature into the same space of source S i S_i Si.

- A discriminator D i D_i Di is trained adversarially to maximize the Wasserstein distance of correctly classifying the encoded target features from F i T F_i^T FiT and the source feature from pre-trained F i F_i Fi , while F i T F_i^T FiT tries to maximize the probability of D i D_i Di making a mistake, i.e. minimizing the Wasserstein distance(still a two-player minimax game)

Source Distilling (Step 3)

- Dig into each source domain to select the source training samples that are closer to the target based on the estimated Wasserstein distance to fine-tune the source classifiers (In this paper, N i 2 \frac{N_i}{2} 2Ni of source data is selected).

Aggregated Target Prediction (Step 4)

- Extract the features F i T ( x T ) F_i^T(x_T) FiT(xT) of the target image based on the learned target encoder from stage 2;

- Obtain source-specific prediction C i ′ ( F i T ( x T ) ) C_i'(F_i^T(x_T)) Ci′(FiT(xT)) using the distilled source classifier;

- Combine the different predictions from each source classifier to obtain the final prediction;

- The weighting strategy is based on the discrepancy between each source and target to emphasize more relevant sources

and suppress the irrelevant ones.