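[Facial Landmark Detection] PFLD: A Simple, Fast, and Highly Accurate Facial Landmark Detector

When reposting, please credit the author and source: http://blog.csdn.net/john_bh/

Paper: PFLD: A Practical Facial Landmark Detector

Authors and affiliations: Tianjin University, Wuhan University, Tencent AI Lab, and Temple University (USA)

Venue and date: arXiv, February 2019

Code: official project (cannot be downloaded from mainland China); Baidu Cloud link, extraction code: glwr

Code: PFLD_Tensorflow version

QQ discussion group: 945933636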

Table of Contents

- Paper Walkthrough
  - 1. Challenges in Facial Landmark Detection
  - 2. Solution
  - 3. Experimental Results
- Paper Translation
  - Abstract
  - 1. Introduction
  - 2. Methodology
  - 3. Experimental Evaluation
  - 4. Concluding Remarks

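Paper Walkthrough

The PFLD authors are from Tianjin University, Wuhan University, Tencent AI Lab, and Temple University (USA).

PFLD reports state-of-the-art accuracy on mainstream benchmarks, runs at 140 fps on an ARM-based Android phone, and has a model size of only 2.1 MB.

High accuracy, high speed, small model!

1. Challenges in Facial Landmark Detection

- Challenge #1 - Local Variation: facial expressions vary widely, real-world lighting is complex, and faces are often partially occluded.

- Challenge #2 - Global Variation: faces are 3D and appear in many poses; image quality also varies with the capture device and environment.

- Challenge #3 - Data Imbalance: the classes in existing training sets are unevenly represented.

- Challenge #4 - Model Efficiency: on compute-constrained devices such as mobile phones, inference speed and model file size must be taken into account.

2. Solution

To address the accuracy-related challenges above, the loss function is modified so that training pays more attention to rare samples; to improve speed and shrink the model, a lightweight network is used.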

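Overall algorithm design:

![[Facial Landmark Detection] PFLD: Simple, Fast, Highly Accurate Facial Landmark Detection, image 1](http://img.e-com-net.com/image/info8/a49063bd1a0b42bea427fcb652e81a6a.jpg)

Here, the part enclosed by the yellow curve is the main network, which predicts the landmark positions. The part enclosed by the green curve is the auxiliary network, which predicts the head pose during training (the literature suggests that adding this auxiliary task improves localization accuracy; see the original paper for details). The auxiliary branch is not needed at test time.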

Loss函数设计:

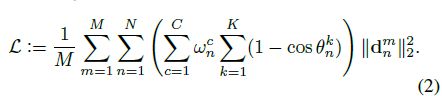

Loss函数用于神经网络在每次训练时预测的形状和标注形状的误差。考虑到样本的不平衡,作者希望能对那些稀有样本赋予更高的权重,这种加权的Loss函数被表达为:

![[人脸关键点检测] PFLD:简单、快速、超高精度人脸关键检测_第2张图片](http://img.e-com-net.com/image/info8/69b7581d57bc42cdb13affbb914ec10d.jpg)

M为样本个数,N为特征点个数, Y n Y_n Yn为不同的权重,|| * ||为特征点的距离度量( L 1 L_1 L1或 L 2 L_2 L2距离)。(以Y代替公式里的希腊字母)。进一步细化 Y n Y_n Yn:

其中 ∑ c = 1 C w n c ∑ k = 1 K ( 1 − c o s θ n k ) \sum^{C}_{c=1}w^{c}_{n}\sum^{K}_{k=1}(1-cos\theta^{k}_{n}) ∑c=1Cwnc∑k=1K(1−cosθnk),即为最终的样本权重。K=3,这一项代表着人脸姿态的三个维度,即yaw, pitch, roll 角度,可见角度越高,权重越大。C为不同的人脸类别数,作者将人脸分成多个类别,比如侧脸、正脸、抬头、低头、表情、遮挡等,w为与类别对应的给定权重,如果某类别样本少则给定权重大。

3. Experimental Results

Paper Translation

Abstract

Being accurate, efficient, and compact is essential to a facial landmark detector for practical use. To simultaneously consider the three concerns, this paper investigates a neat model with promising detection accuracy under wild environments (e.g., unconstrained pose, expression, lighting, and occlusion conditions) and super real-time speed on a mobile device. More concretely, we customize an end-to-end single stage network associated with acceleration techniques. During the training phase, for each sample, rotation information is estimated for geometrically regularizing landmark localization, which is then NOT involved in the testing phase. A novel loss is designed to, besides considering the geometrical regularization, mitigate the issue of data imbalance by adjusting weights of samples to different states, such as large pose, extreme lighting, and occlusion, in the training set. Extensive experiments are conducted to demonstrate the efficacy of our design and reveal its superior performance over state-of-the-art alternatives on widely-adopted challenging benchmarks, i.e., 300W (including iBUG, LFPW, AFW, HELEN, and XM2VTS) and AFLW. Our model can be merely 2.1Mb of size and reach over 140 fps per face on a mobile phone (Qualcomm ARM 845 processor) with high precision, making it attractive for large-scale or real-time applications. We have made our practical system based on PFLD 0.25X model publicly available at http://sites.google.com/view/xjguo/fld for encouraging comparisons and improvements from the community.

1. Introduction

Facial landmark detection a.k.a. face alignment aims to automatically localize a group of pre-defined fiducial points (e.g., eye corners, mouth corners, etc.) on human faces. As a fundamental component in a variety of face applications, such as face recognition [21, 49] and verification [27], as well as face morphing [11] and editing [28], this problem has been drawing much attention from the vision community with a great progress made over the past years. However, developing a practical facial landmark detector remains challenging, as the detection accuracy, processing speed, and model size should all be concerned.

![[Facial Landmark Detection] PFLD: Simple, Fast, Highly Accurate Facial Landmark Detection, image 8](http://img.e-com-net.com/image/info8/a33a73a6e5454520858cba6f05e5a06c.jpg)

Acquiring perfect faces is barely the case in real-world situations. In other words, human faces are often exposed in under-controlled or even unconstrained environments. The appearance has large variations of poses, expressions and shapes under various lighting conditions, sometimes with partial occlusions. Figure 1 provides several such examples. Besides, sufficient training data for data-driven approaches is also key to model performance. Although it may be viable to capture several persons' faces under different conditions with balanced consideration, this collecting manner becomes impractical especially when large-scale data is required to train (deep) models. Under the circumstances, one often comes across an imbalanced data distribution. The following summarizes issues regarding the landmark detection accuracy into three challenges.

Challenge #1 - Local Variation. Expression, local extreme lighting (e.g., highlight and shading), and occlusion bring partial changes/interferences onto face images. Landmarks of some regions may deviate from their normal positions or even disappear.

Challenge #2 - Global Variation. Pose and imaging quality are two main factors globally affecting the appearance of faces in images, which would result in poor localization of a (large) fraction of landmarks when the global structure of faces is mis-estimated.

Challenge #3 - Data Imbalance. It is not uncommon that, in both shallow learning and deep learning, an available dataset exhibits an unequal distribution between its classes/attributes. The imbalance highly likely makes an algorithm/model fail to properly represent the characteristics of the data, thus offering unsatisfactory accuracies across different attributes.

The above challenges considerably increase the difficulty of accurate detection, demanding the detector to be robust.

With the emergence of portable devices, more and more people prefer to deal with their business or get entertained anytime and anywhere. Therefore, the challenge below, aside from pursuing high accuracy of detection, should be taken into account.

Challenge #4 - Model Efficiency. Another two constraints on applicability are model size and computing requirement. Tasks like robotics, augmented reality, and video chat are expected to be executed in a timely fashion on a platform equipped with limited computation and memory resources, e.g., smart phones or embedded products.

This point particularly requires the detector to be of small model size and fast processing speed. Undoubtedly, it is desired to build accurate, efficient, and compact systems for practical landmark detection.

1.1. Previous Arts

Over the last decades, a number of classic methods have been proposed in the literature for facial landmark detection. Parameterized appearance models, with active appearance models (AAMs) [6] and constrained local models (CLMs) [7] as representatives, accomplish the job through maximizing the confidence of part locations in an image. Specifically, AAMs and their follow-ups [23, 17, 20] attempt to jointly model holistic appearance and shape, while CLMs and variants [2, 31] instead learn a group of local experts via imposing various shape constraints. In addition, the tree structure part model (TSPM) [50] utilizes a deformable part-based model for simultaneous detection, pose estimation, and landmark localization. The methods including explicit shape regression (ESR) [5] and supervised descent method (SDM) [38] try to address the problem in a regression manner. The main limitations of these methods are the inferior robustness against difficult cases, expensive computation, and/or high model complexity. A more elaborated review for the classic approaches can be found in [32].

Recently, deep learning based strategies have dominated state-of-the-art performances on this task. In what follows, we briefly introduce representative works in this category. Zhang et al. [45] built up a multi-task learning network, called TCDCN, for jointly learning landmark locations and pose attributes. TCDCN, due to its multi-task nature, is difficult to train in practice. An end-to-end recurrent convolutional model for face alignment from coarse to fine was proposed by Trigeorgis et al., termed as MDM [29]. Lv et al. [22] proposed a deep regression architecture with the two-stage re-initialization scheme, namely TSR, which divides a whole face into several parts to boost the detection accuracy. Using pose angles including pitch, yaw, and roll as attributes, [39] constructs a network to directly estimate these three angles for helping landmark detection. But the cascaded nature of [39] makes it suboptimal in the following landmark detection. Pose-invariant face alignment (PIFA for short) proposed by Jourabloo et al. [14] estimates the projection matrix from 3D to 2D via deep cascade regressors, which is followed by the work PIFA-CNN [15] using a single convolutional neural network (CNN). The work in [48] first models the face depth in a Z-buffer and then fits a 3D model for 2D images.

Most recently, Kumar and Chellappa designed a single dendritic CNN, named as pose conditioned dendritic convolution neural network (PCD-CNN) [19], which combines a classification network with a second and modular classification network, for improving the detection accuracy. Honari et al. designed a network, called sequential multi-tasking (SeqMT) net, with an equivariant landmark transformation (ELT) loss term [12]. In [30], the authors presented a facial landmark regression method based on a coarse-to-fine ensemble of regression trees (ERT) [16]. To make the facial landmark detector robust against the intrinsic variance of image styles, Dong et al. developed a style-aggregated network (SAN) [9], which accompanies the original face images with style-aggregated ones to train the landmark detector. By considering boundary information as the geometric structure of human faces, Wu et al. presented a boundary-aware face alignment algorithm, i.e. LAB, to improve the detection accuracy. LAB derives face landmarks from boundary lines. By doing so, the ambiguities in the landmark definition can be largely avoided. Other face alignment techniques include [33, 42, 47, 10, 37, 36]. Though the existing deep learning strategies have made great strides for the task, huge space still exists for improvement especially jointly taking into account the accuracy, efficiency, and model compactness of detectors for practical use.

1.2. Our Contributions

The main intention of this work is to show that a good design can save a lot of resources with the state-of-the-art performance on the target task. This work develops a practical facial landmark detector, denoted as PFLD, with high accuracy against complex situations including unconstrained poses, expressions, lightings, and occlusions. Compared with the local variation, the global one deserves more efforts, as it can greatly influence the whole set of landmarks. To boost the robustness, we employ a branch of network to estimate the geometric information for each face sample, and subsequently regularize the landmark localization. Besides, in deep learning, the data imbalance issue often limits the performance in accurate detection. For instance, a training set may contain plenty of frontal faces while lacking those with large poses. This would degrade the accuracy when dealing with large pose cases. To address this issue, we advocate to penalize more on errors corresponding to rare training samples than on those to rich ones. Considering the above two concerns, say the geometric constraint and the data imbalance, a novel loss is designed. To enlarge the receptive field and better catch the global structure on faces, a multi-scale fully-connected (MS-FC) layer is added for precisely localizing landmarks in images. As for the processing speed and model compactness, we build the backbone network of our PFLD using MobileNet blocks [13, 26]. In experiments, we evaluate the efficacy of our design, and demonstrate its superior performance over other state-of-the-art alternatives on two widely-adopted challenging datasets including 300W [25] and AFLW [18]. Our model can be adjusted to merely 2.1Mb of size and achieve over 140 fps per face on a mobile phone. All the above merits make our PFLD attractive for practical use. We have released our practical system based on PFLD 0.25X model at http://sites.google.com/view/xjguo/fld for encouraging comparisons and improvements from the community.

2. Methodology

Against the aforementioned challenges, effective measures need to be taken. In this section, we first focus on the design of loss function, which simultaneously takes care of Challenges #1, #2, and #3. Then, we detail our architecture. The whole deep network consists of a backbone subnet for predicting landmark coordinates, which specifically considers Challenge #4, as well as an auxiliary one for estimating geometric information.

2.1. Loss Function

The quality of training greatly depends on the design of loss function, especially when the scale of training data is not sufficiently large. For penalizing errors between ground-truth landmarks $X := [x_1, x_2, \ldots, x_N] \in \mathbb{R}^{2 \times N}$ and predicted ones $Y := [y_1, y_2, \ldots, y_N] \in \mathbb{R}^{2 \times N}$, the simplest losses arguably go to $l_2$ and $l_1$ losses. However, equally measuring the differences of landmark pairs is not so wise, without considering geometric/structural information. For instance, given a pair of $x_i$ and $y_i$ with their deviation $d_i := x_i - y_i$ in the image space, if two projections (poses with respect to a camera) are applied from 3D real face to 2D image, the intrinsic distances on the real face could be significantly different. Hence, integrating geometric information into penalization is helpful to mitigating this issue. For face images, the global geometric status - 3D pose - is sufficient to determine the manner of projection. Formally, let $X$ denote the concerned location of 2D landmarks, which is a projection of 3D face landmarks, i.e. $U \in \mathbb{R}^{4 \times N}$, each column of which corresponds to a 3D location $[u_i; v_i; z_i; 1]^T$. By assuming a weak perspective model as [14], a $2 \times 4$ projection matrix $P$ can connect $U$ and $X$ via $X = PU$. This projection matrix has six degrees of freedom including yaw, roll, pitch, scale, and 2D translation. In this work, the faces are supposed to be well detected, centralized, and normalized. And local variation like expression barely affects the projection. This is to say, three degrees of freedom including scale and 2D translation can be reduced, and thus only three Euler angles (yaw, roll, and pitch) are needed to be estimated.
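To make the relation $X = PU$ concrete, here is a minimal NumPy sketch of a weak-perspective projection. The helper names (`rotation_from_euler`, `weak_perspective_matrix`, `project`) and the axis convention $R = R_z(\text{roll}) R_y(\text{yaw}) R_x(\text{pitch})$ are assumptions made for illustration; the paper text does not fix a convention. The example at the end shows the point made above: the same 3D distance on the face maps to different 2D distances under different poses.

```python
import numpy as np

def rotation_from_euler(yaw, pitch, roll):
    """3x3 rotation R = Rz(roll) @ Ry(yaw) @ Rx(pitch), angles in radians.
    The axis convention is an assumption of this sketch."""
    cy, sy = np.cos(yaw), np.sin(yaw)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cr, sr = np.cos(roll), np.sin(roll)
    Rx = np.array([[1.0, 0.0, 0.0], [0.0, cp, -sp], [0.0, sp, cp]])
    Ry = np.array([[cy, 0.0, sy], [0.0, 1.0, 0.0], [-sy, 0.0, cy]])
    Rz = np.array([[cr, -sr, 0.0], [sr, cr, 0.0], [0.0, 0.0, 1.0]])
    return Rz @ Ry @ Rx

def weak_perspective_matrix(yaw, pitch, roll, scale=1.0, translation=(0.0, 0.0)):
    """Build the 2x4 matrix P = [scale * R[:2, :] | t] (six degrees of freedom)."""
    R = rotation_from_euler(yaw, pitch, roll)
    t = np.asarray(translation, dtype=float).reshape(2, 1)
    return np.hstack([scale * R[:2, :], t])

def project(P, points_3d):
    """points_3d: (N, 3) array. Returns X = P U as a (2, N) array."""
    n = points_3d.shape[0]
    U = np.vstack([points_3d.T, np.ones((1, n))])  # 4 x N homogeneous coordinates
    return P @ U

# Two 3D points one unit apart along the x axis of the face.
segment = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])

def image_gap(P):
    X = project(P, segment)
    return np.linalg.norm(X[:, 1] - X[:, 0])

frontal_gap = image_gap(weak_perspective_matrix(0.0, 0.0, 0.0))
turned_gap = image_gap(weak_perspective_matrix(np.deg2rad(60.0), 0.0, 0.0))
print(frontal_gap, turned_gap)
```

With a 60 degree yaw the one-unit segment shrinks to half its frontal length in the image, which is why an unweighted 2D error treats identical 3D errors unequally across poses.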

Moreover, in deep learning, data imbalance is another issue often limiting the performance in accurate detection. For example, a training set may contain a large number of frontal faces while lacking those with large poses. Without extra tricks, it is almost sure that the model trained by such a training set is unable to handle large pose cases well. Under the circumstances, “equally” penalizing each sample makes it unequal instead. To address this issue, we advocate to penalize more on errors corresponding to rare training samples than on those to rich ones.

Mathematically, the loss can be written in the following general form:

![[Facial Landmark Detection] PFLD: Simple, Fast, Highly Accurate Facial Landmark Detection, image 9](http://img.e-com-net.com/image/info8/e02e17c9fc5641bba8143083330fb5c0.jpg)

where $\|\cdot\|$ designates a certain metric to measure the distance/error of the $n$-th landmark of the $m$-th input. $N$ is the pre-defined number of landmarks per face to detect. $M$ denotes the number of training images in each process. Given the metric used (e.g., $l_2$ in this work), the weight $\gamma_n$ plays a key role. Consolidating the aforementioned concerns, say the geometric constraint and the data imbalance, a novel loss is designed as follows:

$$\mathcal{L} := \frac{1}{M} \sum_{m=1}^{M} \sum_{n=1}^{N} \left( \sum_{c=1}^{C} w_n^c \sum_{k=1}^{K} \left(1 - \cos\theta_n^k\right) \right) \left\| d_n^m \right\|_2^2 \qquad (2)$$

It is easy to obtain that $\sum_{c=1}^{C} w_n^c \sum_{k=1}^{K} (1 - \cos\theta_n^k)$ in Eq. (2) acts as $\gamma_n$ in Eq. (1). Let us here take a close look at the loss. In which, $\theta^1$, $\theta^2$, and $\theta^3$ ($K = 3$) represent the angles of deviation between the ground-truth and estimated yaw, pitch, and roll angles. Clearly, as the deviation angle increases, the penalization goes up. In addition, we categorize a sample into one or multiple attribute classes including profile-face, frontal-face, head-up, head-down, expression, and occlusion. The weighting parameter $w_n^c$ is adjusted according to the fraction of samples belonging to class $c$ (this work simply adopts the reciprocal of fraction). For instance, if disabling the geometry and data imbalance functionalities, our loss degenerates to a simple $l_2$ loss. No matter whether the 3D pose and/or the data imbalance bother(s) the training or not, our loss can handle the local variation by its distance measurement.
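The weighted loss described above can be sketched in NumPy as follows. This is an illustrative reading, not the authors' implementation: the function names and array layouts are hypothetical, and the weight is treated as one value per sample (class term times pose term), which is an assumption about how the subscripts are meant to be applied.

```python
import numpy as np

def class_weights(attribute_labels):
    """attribute_labels: (M, C) binary matrix, entry [m, c] = 1 if sample m
    belongs to attribute class c. Returns the reciprocal of each class's
    fraction of samples; classes with no samples get weight 0."""
    frac = attribute_labels.mean(axis=0)
    return np.where(frac > 0, 1.0 / np.maximum(frac, 1e-12), 0.0)

def pfld_style_loss(pred, gt, angle_dev, attribute_labels, w):
    """
    pred, gt:          (M, N, 2) predicted and ground-truth landmarks
    angle_dev:         (M, K) deviation angles in radians (K = 3: yaw, pitch, roll)
    attribute_labels:  (M, C) binary class membership
    w:                 (C,) per-class weights
    """
    sq_dist = np.sum((gt - pred) ** 2, axis=2)            # (M, N) squared l2 error per landmark
    pose_term = np.sum(1.0 - np.cos(angle_dev), axis=1)   # (M,) grows with angular deviation
    class_term = attribute_labels @ w                     # (M,) sum of weights of the sample's classes
    sample_weight = class_term * pose_term                # (M,) plays the role of gamma
    return np.mean(sample_weight * np.sum(sq_dist, axis=1))

# Tiny example: two samples, one landmark each, two attribute classes.
gt = np.array([[[3.0, 4.0]], [[0.0, 0.0]]])
pred = np.zeros_like(gt)
angle_dev = np.array([[np.pi / 2, 0.0, 0.0], [0.1, 0.1, 0.1]])
labels = np.array([[1, 0], [0, 1]])
w = class_weights(labels)
loss = pfld_style_loss(pred, gt, angle_dev, labels, w)
print(w, loss)
```

Replacing `sample_weight` with a constant 1 recovers the plain $l_2$ loss mentioned in the text. Note that, read literally, the pose term is zero when the estimated angles match the ground truth exactly.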

Although, in the literature, several works have considered the 3D pose information to improve the performance, our loss has following merits: 1) it plays in a coupled way between 3D pose estimation and 2D distance measurement, which is much more reasonable than simply adding two concerns [14, 15]; 2) it is intuitive and easy to be computed both forward and backward, comparing with [19]; and 3) it makes the network work in a single-stage manner instead of cascaded [39, 14], which improves the optimality. We here notice that the variable $d_n^m$ comes from the backbone net, while $\theta_n^k$ from the auxiliary one, which are coupled/connected by the loss in Eq. (2). In the next two subsections, we detail our network, which is schematically illustrated in Fig. 2.

![[Facial Landmark Detection] PFLD: Simple, Fast, Highly Accurate Facial Landmark Detection, image 10](http://img.e-com-net.com/image/info8/c2701c3f8a3841598878222614fd210e.jpg)

2.2. Backbone Network

Similar to other CNN based models, we employ several convolutional layers to extract features and predict landmarks. Considering that human faces are of strong global structures, like symmetry and spatial relationships among eyes, mouth, nose, etc., such global structures could help localize landmarks more precisely. Therefore, instead of single scale feature maps, we extend them into multi-scale maps. The extension is finished via executing convolution operations with strides, which enlarges the receptive field. Then we perform the final prediction through fully connecting the multi-scale feature maps. The detailed configuration of the backbone subnet is summarized in Table 1. From the perspective of architecture, the backbone net is simple. Our primary intention is to verify that, associated with our novel loss and the auxiliary subnet (discussed in the next subsection), even a very simple architecture can achieve state-of-the-art performance.
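The idea of "fully connecting the multi-scale feature maps" can be sketched as flattening the feature maps from several scales, concatenating them, and applying one fully connected layer. The NumPy sketch below uses hypothetical feature-map shapes and random weights purely for illustration; the actual layer sizes are given in the paper's Table 1, which is not reproduced in this text.

```python
import numpy as np

def multi_scale_fc(feature_maps, weight, bias):
    """Flatten each feature map, concatenate them, and apply one fully
    connected layer.
    feature_maps: list of (H_i, W_i, C_i) arrays from different scales
    weight:       (sum_i H_i * W_i * C_i, 2 * num_landmarks)
    bias:         (2 * num_landmarks,)"""
    flat = np.concatenate([f.reshape(-1) for f in feature_maps])
    return flat @ weight + bias

rng = np.random.default_rng(0)

# Hypothetical shapes for three scales; each coarser scale would come from a
# strided convolution applied to the previous one.
shapes = [(14, 14, 16), (7, 7, 32), (1, 1, 128)]
scales = [rng.standard_normal(s) for s in shapes]

in_dim = sum(f.size for f in scales)   # 3136 + 1568 + 128
num_landmarks = 68                     # 300W annotates 68 landmarks per face
W = rng.standard_normal((in_dim, 2 * num_landmarks)) * 0.01
b = np.zeros(2 * num_landmarks)

landmarks = multi_scale_fc(scales, W, b).reshape(num_landmarks, 2)
print(in_dim, landmarks.shape)
```

Because the coarsest map summarizes the whole face while the finer maps keep local detail, the single fully connected layer sees both global structure and local evidence when regressing each coordinate.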

![[Facial Landmark Detection] PFLD: Simple, Fast, Highly Accurate Facial Landmark Detection, image 11](http://img.e-com-net.com/image/info8/8dd8e57a31684bfba091905964270424.jpg)

The backbone network is the bottleneck in terms of processing speed and model size, as in the testing only this branch is involved. Thus, it is critical to make it fast and compact. Over the last years, several strategies including ShuffleNet [44], Binarization [3], and MobileNet [13] have been investigated to speed up networks. Due to the satisfactory performance of MobileNet techniques (depthwise separable convolutions, linear bottlenecks, and inverted residuals) [13, 26], we replace the traditional convolution operations with the MobileNet blocks. By doing so, the computational load of our backbone network is significantly reduced and the speed is thus accelerated. In addition, our network can be compressed by adjusting the width parameter of MobileNets according to demand from users, for making the model smaller and faster. This operation is based on the observation and assumption that a large amount of individual feature channels of a deep convolutional layer could lie in a lower-dimensional manifold. Thus, it is highly possible to reduce the number of feature maps without (obvious) accuracy degradation. We will show in experiments, losing 80% of the model size can still provide promising accuracy of detection. This again corroborates that a well-designed simple/small architecture can perform sufficiently well on the task of facial landmark detection. It is worth mentioning that the quantization techniques are totally compatible with ShuffleNet and MobileNet, which means the size of our model can be further reduced by quantization.
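A quick parameter count shows why depthwise separable convolutions and a width multiplier shrink the model. The sketch below is plain arithmetic on a single hypothetical layer (3 x 3 kernel, 64 input channels, 128 output channels); the layer sizes and the helper names are assumptions for illustration, not values from the paper.

```python
def conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out

def depthwise_separable_params(k, c_in, c_out):
    """Weights in a k x k depthwise convolution followed by a 1 x 1 pointwise one."""
    return k * k * c_in + c_in * c_out

def scaled(channels, alpha):
    """Apply a width multiplier alpha to a channel count (never below 1)."""
    return max(1, int(round(channels * alpha)))

k, c_in, c_out = 3, 64, 128  # hypothetical layer

standard = conv_params(k, c_in, c_out)
separable = depthwise_separable_params(k, c_in, c_out)
slim = depthwise_separable_params(k, scaled(c_in, 0.25), scaled(c_out, 0.25))

print(standard, separable, slim)
```

For this layer the separable version needs roughly 12% of the standard weights, and applying a 0.25 width multiplier on top reduces the count by another order of magnitude, since the pointwise term scales with the product of the two channel counts.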

2.3. Auxiliary Network

![[Facial Landmark Detection] PFLD: Simple, Fast, Highly Accurate Facial Landmark Detection, image 12](http://img.e-com-net.com/image/info8/406c7a8339db4025b5190f0dd701ae25.jpg)

It has been verified by previous works [48, 14, 19, 34] that a proper auxiliary constraint is beneficial to making the landmark localization stable and robust. Our auxiliary network plays this role. Different from the previous methods, like [14] learning the 3D to 2D projection matrix, [19] discovering the dendritic structure of parts, and [34] employing boundary lines, our intention is to estimate the 3D rotation information including yaw, pitch, and roll angles. Having these three Euler angles, the pose of head can be determined.

One may wonder that given predicted and ground-truth landmarks, why not directly compute the Euler angles from them? Technically, it is feasible. However, the landmark prediction may be too inaccurate especially at the beginning of training, which consequently results in a low-quality estimation of the angles. This could drag the training into dilemmas, like over-penalization and slow convergence. To decouple the estimation of rotation information from landmark localization, we bring the auxiliary subnet.

It is worth mentioning that DeTone et al. [8] proposed a deep network for estimating the homography between two related images. The yaw, roll, and pitch angles can be calculated from the estimated homography matrix. But for our task, we do not have a frontal face with respect to each training sample. Intriguingly, our auxiliary net can output the target angles without a requirement of frontal faces as input. The reason is that our task is specific to human faces that are of strong regularity and structure from the frontal view. In addition, the factors such as expressions and lightings barely affect the pose. Thus, an identical average frontal face can be considered available for different persons. In other words, there is NO extra annotation used for computing the Euler angles. The following is our way to calculate them: 1) predefine ONE standard face (averaged over a bunch of frontal faces) and fix 11 landmarks on the dominant face plane as references for ALL of training faces; 2) use the corresponding 11 landmarks of each face and the reference ones to estimate the rotation matrix; and 3) compute the Euler angles from the rotation matrix. For accuracy, the angles may not be exact for each face, as the averaged face is used for all the faces. Even so, they are sufficiently accurate for our task as verified later in experiments. Table 2 provides the configuration of our proposed auxiliary network. Please notice that the input of the auxiliary net is from the 4-th block of the backbone net (see Table 1).
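Step 3 of the procedure above, recovering Euler angles from a rotation matrix, can be sketched as follows. The decomposition depends on the axis convention, which the text does not specify; this sketch assumes $R = R_z(\text{roll}) R_y(\text{yaw}) R_x(\text{pitch})$ and a yaw magnitude below 90 degrees. Step 2 (estimating the rotation matrix from the 11 landmark correspondences) is left out because the text does not say which solver is used.

```python
import numpy as np

def euler_to_matrix(yaw, pitch, roll):
    """R = Rz(roll) @ Ry(yaw) @ Rx(pitch), angles in radians (assumed convention)."""
    cy, sy = np.cos(yaw), np.sin(yaw)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cr, sr = np.cos(roll), np.sin(roll)
    Rx = np.array([[1.0, 0.0, 0.0], [0.0, cp, -sp], [0.0, sp, cp]])
    Ry = np.array([[cy, 0.0, sy], [0.0, 1.0, 0.0], [-sy, 0.0, cy]])
    Rz = np.array([[cr, -sr, 0.0], [sr, cr, 0.0], [0.0, 0.0, 1.0]])
    return Rz @ Ry @ Rx

def matrix_to_euler(R):
    """Invert euler_to_matrix for |yaw| < 90 degrees.
    Under the assumed convention the last row of R is
    [-sin(yaw), cos(yaw) sin(pitch), cos(yaw) cos(pitch)]."""
    yaw = -np.arcsin(np.clip(R[2, 0], -1.0, 1.0))
    pitch = np.arctan2(R[2, 1], R[2, 2])
    roll = np.arctan2(R[1, 0], R[0, 0])
    return yaw, pitch, roll

# Round trip on an arbitrary pose (radians).
angles = (0.3, -0.2, 0.1)
recovered = matrix_to_euler(euler_to_matrix(*angles))
print(np.rad2deg(recovered))
```

The round trip is a cheap sanity check to run whenever the convention changes, since mixing conventions between label generation and the loss would silently corrupt the angle deviations.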

2.4. Implementation Details

During training, all faces are cropped and resized into 112 × 112 according to given bounding boxes for preprocessing. We implement the network via the Keras framework, using the batch size of 256, and employ the Adam technique for optimization with the weight decay of $10^{-6}$ and momentum of 0.9. The maximum number of iterations is 64K, and the learning rate is fixed to $10^{-4}$ throughout the training. The entire network is trained on a Nvidia GTX 1080Ti GPU. For 300W, we augment the training data by flipping each sample and rotating them from -30° to 30° with 5° interval. Further, each sample has a region of 20% face size randomly occluded. While for AFLW, we feed the original training set into the network without any data augmentation. In the testing, only the backbone network is involved, which is efficient.
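The 300W augmentation described above (flip, rotation from -30 to 30 degrees in 5 degree steps, random occlusion) can be sketched on landmark coordinates and image arrays as follows. The function names are hypothetical, and two details are assumptions: the occluded region is taken to be a square whose side is 20% of the image side, and the flip helper only mirrors coordinates (a real pipeline also has to swap left/right landmark indices and transform the image pixels accordingly).

```python
import numpy as np

def rotation_angles(max_deg=30, step_deg=5):
    """Rotation angles in degrees: -30, -25, ..., 25, 30."""
    return list(range(-max_deg, max_deg + 1, step_deg))

def rotate_landmarks(points, angle_deg, center):
    """Rotate (N, 2) landmark coordinates about `center` by angle_deg."""
    a = np.deg2rad(angle_deg)
    R = np.array([[np.cos(a), -np.sin(a)], [np.sin(a), np.cos(a)]])
    return (points - center) @ R.T + center

def flip_landmarks(points, width):
    """Mirror x coordinates for a horizontally flipped image of given width."""
    flipped = points.copy()
    flipped[:, 0] = width - 1 - flipped[:, 0]
    return flipped

def random_occlude(image, frac=0.2, rng=None):
    """Zero out a random square whose side is frac * the shorter image side."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = image.shape[:2]
    side = int(round(frac * min(h, w)))
    top = rng.integers(0, h - side + 1)
    left = rng.integers(0, w - side + 1)
    out = image.copy()
    out[top:top + side, left:left + side] = 0
    return out

angles = rotation_angles()
center = np.array([1.0, 1.0])
rotated = rotate_landmarks(np.array([[2.0, 1.0]]), 90, center)
flipped = flip_landmarks(np.array([[0.0, 5.0]]), width=112)
occluded = random_occlude(np.ones((112, 112, 3)), rng=np.random.default_rng(0))
print(len(angles), rotated, flipped, int((occluded == 0).sum()))
```

Including the unrotated copy, this scheme produces 13 rotations per sample, doubled by flipping.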

3. Experimental Evaluation

3.1. Experimental Settings

Datasets. To evaluate the performance of our proposed PFLD, we conduct experiments on two widely-adopted challenging datasets, say 300W [25] and AFLW [18].

300W. This dataset annotates five face datasets, namely LFPW, AFW, HELEN, XM2VTS and IBUG, with 68 landmarks. We follow [9, 34, 19] to utilize 3,148 images for training and 689 images for testing. The testing images are divided into two subsets: the common subset formed by 554 images from LFPW and HELEN, and the challenging subset formed by 135 images from IBUG. The common and the challenging subsets together form the full testing set.

AFLW. This dataset consists of 24,386 in-the-wild human faces, which are obtained from Flickr with extreme poses, expressions and occlusions. The faces have head poses ranging from 0° to 120° for yaw, and up to 90° for pitch and roll, respectively. AFLW offers at most 21 landmarks for each face. We use 20,000 images and 4,386 images for training and testing, respectively.

Competitors. The compared approaches include classic and recently proposed deep learning based schemes, which are RCPR (ICCV’13) [4], SDM (CVPR’13) [38], CFAN (ECCV’14) [42], CCNF (ECCV’14) [1], ESR (IJCV’14) [5], ERT (CVPR’14) [16], LBF (CVPR’14) [24], TCDCN (ECCV’14) [45], CFSS (CVPR’15) [46], 3DDFA (CVPR’16) [48], MDM (CVPR’16) [29], RAR (ECCV’16) [37], CPM (CVPR’16) [33], DVLN (CVPRW’17) [35], TSR (CVPR’17) [22], Binary-CNN (ICCV’17) [3], PIFA-CNN (ICCV’17) [15], RDR (CVPR’17) [36], DCFE (ECCV’18) [30], SeqMT (CVPR’18) [12], PCD-CNN (CVPR’18) [19], SAN (CVPR’18) [9] and LAB (CVPR’18) [34].

Evaluation Metrics. Following most previous works [5, 34, 9, 19], normalized mean error (NME) is employed to measure the accuracy, which averages normalized errors over all annotated landmarks. For 300W, we report the results using two normalizing factors. One adopts the eye-center distance as the inter-pupil normalizing factor, while the other is normalized by the outer-eye-corner distance, denoted as inter-ocular. For AFLW, due to various profile faces, we follow [19, 9, 34] to normalize the obtained error by the ground-truth bounding box size over all visible landmarks. The cumulative error distribution (CED) curve is also used to compare the methods. Besides the accuracy, the processing speed and model size are also compared.
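
As a rough illustration of the metric, the sketch below computes NME for a single face. It is not taken from the paper's code: the function names are hypothetical, and the default outer-eye-corner indices (36 and 45, zero-based in the usual 68-point markup) are an assumption. The `visible` mask covers the AFLW case, where only visible landmarks are averaged and `norm` would be the bounding box size.

```python
import math


def nme(pred, gt, norm, visible=None):
    """Mean point-to-point error over (visible) landmarks, divided by `norm`."""
    if visible is None:
        visible = [True] * len(gt)
    errors = [math.dist(p, g) for p, g, v in zip(pred, gt, visible) if v]
    return sum(errors) / (len(errors) * norm)


def inter_ocular(gt, left_outer=36, right_outer=45):
    """Outer-eye-corner distance; the default indices are an assumption."""
    return math.dist(gt[left_outer], gt[right_outer])
```

For the inter-pupil variant, `norm` would instead be the distance between the two eye centers, which have to be derived from the eye landmarks.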

3.2. Experimental Results

![[Facial Landmark Detection] PFLD: simple, fast, ultra-high-precision facial landmark detection, image 13](http://img.e-com-net.com/image/info8/ee8ad6f0f41948189773dfe2cbf89142.jpg)

Detection Accuracy. We first compare our PFLD against the state-of-the-art methods on the 300W dataset. The results are given in Table 4. Three versions of our model, PFLD 0.25X, PFLD 1X and PFLD 1X+, are reported. PFLD 1X and PFLD 0.25X respectively stand for the entire model and the one compressed by setting the width parameter (of MobileNet blocks) to 0.25, both trained using 300W training data only, while PFLD 1X+ represents the entire model additionally pre-trained on the WFLW dataset [40]. From the numerical results in Table 4, we can observe that our PFLD 1X significantly outperforms previous methods, especially on the challenging subset. Though the performance of PFLD 0.25X is slightly behind that of PFLD 1X, it still achieves better results than the other competitors, including the most recently proposed LAB [34], SAN [9] and PCD-CNN [19]. This comparison clearly shows that PFLD 0.25X is a good trade-off in practice, cutting about 80% of the model size without sacrificing much accuracy. It also corroborates the assumption that a large number of feature channels of a deep learning convolutional layer could lie in a lower-dimensional manifold. We will see shortly that PFLD 0.25X is also considerably faster than PFLD 1X. As for PFLD 1X+, it further enlarges the margin of precision over the others. This indicates that there is room for our network to improve further when fed more training data.

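![[Facial Landmark Detection] PFLD: simple, fast, ultra-high-precision facial landmark detection, image 14](http://img.e-com-net.com/image/info8/fc2ad2b17c4b4628958c130fd24dca5d.jpg)

![[Facial Landmark Detection] PFLD: simple, fast, ultra-high-precision facial landmark detection, image 15](http://img.e-com-net.com/image/info8/8188ee2ab5ab4d11bf2b48bb4339733a.jpg)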

Moreover, we provide CED curves to evaluate the accuracy difference in Fig. 3. From a more practical perspective, and different from the previous comparison (where the ground-truth bounding boxes of faces are given, constructed according to ground-truth landmarks), the faces in the testing set are detected by the 300W detector for all the involved competitors in this experiment. The performance of some compared methods, such as SAN, might be degraded compared with using GT bounding boxes, which reflects the stability of the landmark detector with respect to the face detector. From the curves, we can see that PFLD outperforms the others by a large margin.
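
For readers unfamiliar with CED curves, the following sketch shows how one could be computed from per-image NME values: each point on the curve is the fraction of test images whose error does not exceed a threshold. The function name and the sample numbers are illustrative only and are not results from the paper.

```python
def ced_curve(nmes, thresholds):
    """Fraction of images with NME <= t, for each threshold t."""
    n = len(nmes)
    return [sum(1 for e in nmes if e <= t) / n for t in thresholds]


# Made-up per-image errors, purely for illustration.
sample_nmes = [0.02, 0.04, 0.06, 0.10]
curve = ced_curve(sample_nmes, [0.03, 0.05, 0.08])
```

A method whose curve lies above another's reaches a given proportion of images at a lower error, which is how the comparison in Fig. 3 is read.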

We further evaluate the performance difference among different methods on AFLW. Table 5 reports the NME results obtained by the competitors. As can be observed from the table, the methods including TSR, CPM, SAN and our PFLDs significantly outperform the remaining competing approaches. Among TSR, CPM, SAN and PFLDs, our PFLD 1X achieves the best accuracy (NME 1.88), followed by SAN (NME 1.91). The third place is taken by our PFLD 0.25X with a competitive NME of 2.07. We again emphasize that the model size and processing speed of PFLD 0.25X are greatly superior to those of SAN; please see Table 3.

![[Facial Landmark Detection] PFLD: simple, fast, ultra-high-precision facial landmark detection, image 16](http://img.e-com-net.com/image/info8/2c4b5eaae698481da6b0828f4a599e7a.jpg)

Model Size. Table 3 compares our PFLDs with some classic and recently proposed deep learning methods in terms of model size and processing speed. As for model size, our PFLD 0.25X is merely 2.1Mb, saving more than 10Mb from PFLD 1X. PFLD 0.25X is much smaller than the other models including SDM 10.1Mb, LAB 50.7Mb and SAN about 800Mb (containing two VGG-based subnets 270.5Mb+528Mb).

Processing Speed. Further, we evaluate the efficiency of each algorithm on an i7-6700K CPU (denoted as C) and an Nvidia GTX 1080Ti GPU (denoted as G) unless otherwise stated. Since only the CPU version of SDM [38] and the GPU version of SAN [9] are publicly available, we only report the elapsed CPU time and GPU time for them, respectively. As for LAB [34], only the CPU version can be downloaded from its project page. Nevertheless, in the paper [34], the authors stated that their algorithm costs about 60ms on a TITAN X GPU (denoted as G*). As can be seen from the comparison, our PFLD 0.25X and PFLD 1X are remarkably faster than the others in both CPU and GPU times. Please note that the CPU time of LAB is in seconds instead of milliseconds. The proposed PFLD 0.25X spends the same time (1.2ms) on CPU and GPU; this is because most of the time comes from I/O operations. Moreover, PFLD 1X takes about 5 times as long on CPU and 3 times as long on GPU as PFLD 0.25X. Even so, PFLD 1X still performs much faster than the others. In addition, for PFLD 0.25X and PFLD 1X, we also perform a test on a Qualcomm ARM 845 processor (denoted as A in the table). Our PFLD 0.25X spends 7ms per face (over 140 fps) while PFLD 1X costs 26.4ms per face (over 37 fps).
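
The frame rates quoted above follow directly from the per-face latencies. A tiny sketch of the conversion (the function name is ours, not the paper's):

```python
def fps(ms_per_face):
    """Convert a per-face latency in milliseconds to frames per second."""
    return 1000.0 / ms_per_face


arm_025x = fps(7.0)    # PFLD 0.25X on the ARM 845: about 142.9 fps
arm_1x = fps(26.4)     # PFLD 1X on the ARM 845: about 37.9 fps
```

These figures count landmark localization only; a full system would also pay for face detection on each frame.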

Ablation Study. To validate the advantages of our loss, we further carry out an ablation study on both 300W and AFLW. Two typical losses, $l_2$ and $l_1$, are involved. As shown in Table 6, the difference between the $l_2$ and $l_1$ losses is not very obvious: they obtain [4.40 vs. 4.35] in terms of IPN on 300W and [2.33 vs. 2.31] in NME on AFLW, respectively. We note that our base loss is $l_2$. Three settings are considered: $l_2$ with the geometric constraint only ($w^c = 1$, denoted as ours w/o $w$), $l_2$ with the weighting strategy only ($\theta^k = 0$, disabling the auxiliary network, denoted as ours w/o $\theta$), and $l_2$ with both the geometric constraint and the weighting strategy (denoted as ours). From the numerical results, we see that both ours w/o $\theta$ and ours w/o $w$ respectively improve the base $l_2$, by relative 4.1% (IPN 4.22) and 5.9% (IPN 4.14) on 300W, and relative 4.3% (NME 2.23) and 7.3% (NME 2.16) on AFLW. By taking into account both the geometric information and the weighting trick, ours catches relative 10.2% (IPN 3.95) improvement on 300W and 19.3% (NME 1.88) on AFLW, respectively. This study verifies the effectiveness of the design of our loss.
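
The relative improvements quoted in the ablation can be checked with a one-line formula, (base − new) / base. The sketch below reproduces them from the errors stated in the text; the function and variable names are ours.

```python
def relative_improvement(base, new):
    """Relative error reduction in percent: (base - new) / base * 100."""
    return (base - new) / base * 100.0


# Base l2 errors from the text: IPN 4.40 on 300W, NME 2.33 on AFLW.
gains_300w = [round(relative_improvement(4.40, e), 1) for e in (4.22, 4.14, 3.95)]
gains_aflw = [round(relative_improvement(2.33, e), 1) for e in (2.23, 2.16, 1.88)]
```

The results match the percentages in the paragraph: 4.1, 5.9 and 10.2 on 300W, and 4.3, 7.3 and 19.3 on AFLW.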

![[Facial Landmark Detection] PFLD: simple, fast, ultra-high-precision facial landmark detection, image 17](http://img.e-com-net.com/image/info8/6ed97747eac1427693f36a3baed3b1c5.jpg)

Additional Results. Figure 4 displays a number of visual results on testing faces from 300W and AFLW. The faces are under different poses, lightings, expressions and occlusions, as well as makeup and styles. Our PFLD 0.25X obtains very pleasing landmark localization results. For completeness of the system, we simply employ MTCNN [43] to detect faces in images/video frames, and then feed the detected faces into our PFLD to localize landmarks. In Fig. 5, we give two examples containing multiple faces. The results are obtained by our system. As can be seen, in the first case of Fig. 5, all the faces are successfully detected, and the landmarks of each face are accurately localized. In the second picture, two faces in the back row are missed, because they are severely occluded and hard to detect. We emphasize that this omission comes from the face detector instead of the landmark detector. The landmarks of all the detected faces are very well computed.
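
The two-stage system described here (a face detector followed by the landmark detector) can be sketched as below. This is a schematic only: `detect_faces` and `predict_landmarks` are hypothetical stand-ins for MTCNN and PFLD, the image is a nested list, landmarks are assumed to be returned in coordinates normalized to the crop, and the resize to the network input size is left out.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]   # x, y, width, height
Point = Tuple[float, float]


def localize_all(image: Sequence[Sequence[int]],
                 detect_faces: Callable[..., List[Box]],
                 predict_landmarks: Callable[..., List[Point]]
                 ) -> List[List[Point]]:
    """Detect faces, then localize landmarks on each detected face crop."""
    results = []
    for x, y, w, h in detect_faces(image):
        crop = [row[x:x + w] for row in image[y:y + h]]
        normalized = predict_landmarks(crop)  # assumed to lie in [0, 1]
        # Map crop-relative landmarks back to full-image coordinates.
        results.append([(x + u * w, y + v * h) for u, v in normalized])
    return results
```

The structure makes the point in the text explicit: a face the detector misses never reaches the landmark model, so it simply produces no entry in the output.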

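![[Facial Landmark Detection] PFLD: simple, fast, ultra-high-precision facial landmark detection, image 18](http://img.e-com-net.com/image/info8/2f9cabb573394682bfce39c070e95134.jpg)

![[Facial Landmark Detection] PFLD: simple, fast, ultra-high-precision facial landmark detection, image 19](http://img.e-com-net.com/image/info8/a8b143dd6f06446396c75d9c56e2b493.jpg)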

4. Concluding Remarks

Three aspects of facial landmark detectors need to be considered for them to be competent on large-scale and/or real-time tasks: accuracy, efficiency, and compactness. This paper proposed a practical facial landmark detector, termed PFLD, which consists of two subnets, i.e., the backbone network and the auxiliary network. The backbone network is built from MobileNet blocks, which can largely relieve the computational pressure from convolutional layers and make the model flexible in size according to a user’s requirement by adjusting the width parameter. A multi-scale fully connected layer was introduced to enlarge the receptive field and thus enhance the ability to capture face structures. To further regularize the landmark localization, we customized another branch, namely the auxiliary network, by which the rotation information can be effectively estimated. Considering the geometric regularization and the data imbalance issue, a novel loss was designed. The extensive experimental results demonstrate the superior performance of our design over the state-of-the-art methods in terms of accuracy, model size, and processing speed, therefore verifying that our PFLD 0.25X is a good trade-off for practical use.

In the current version, PFLD only adopts the rotation information (yaw, roll and pitch angles) as the geometric constraint. It is expected that employing other geometric/structural information will help further improve the accuracy. For instance, like LAB [34], we can regularize landmarks not to deviate far from boundary lines. From another point of view, a possible attempt for boosting the performance is replacing the base loss, i.e., the $l_2$ loss, by some task-specific ones. Designing more sophisticated weighting strategies in the loss would also be beneficial, especially when training data is imbalanced and limited. We leave the above-mentioned thoughts as our future work.

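![[Facial Landmark Detection] PFLD: simple, fast, ultra-high-precision facial landmark detection, image 3](http://img.e-com-net.com/image/info8/6fa9eed18106445190487f2bf5cb7bde.jpg)

![[Facial Landmark Detection] PFLD: simple, fast, ultra-high-precision facial landmark detection, image 4](http://img.e-com-net.com/image/info8/c6e970f6045741eb8f03f80ceb0073b8.jpg)

![[Facial Landmark Detection] PFLD: simple, fast, ultra-high-precision facial landmark detection, image 5](http://img.e-com-net.com/image/info8/9334a6ea68124df5a2fac2c2bc7ea21a.jpg)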