paddle2.0高层API实现人脸关键点检测(人脸关键点检测综述_自定义网络_paddleHub_趣味ps)

paddle2.0高层API实现人脸关键点检测(人脸关键点检测综述_自定义网络_paddleHub_趣味ps)

本文包含了:

- 人脸关键点检测综述

- 人脸关键点检测数据集介绍以及数据处理实现

- 自定义网络实现关键点检测

- paddleHub实现关键点检测

- 基于关键点检测的趣味ps

『深度学习7日打卡营·day3』

零基础解锁深度学习神器飞桨框架高层API,七天时间助你掌握CV、NLP领域最火模型及应用。

-

课程地址

传送门:https://aistudio.baidu.com/aistudio/course/introduce/6771 -

目标

- 掌握深度学习常用模型基础知识

- 熟练掌握一种国产开源深度学习框架

- 具备独立完成相关深度学习任务的能力

- 能用所学为AI加一份年味

一、问题定义

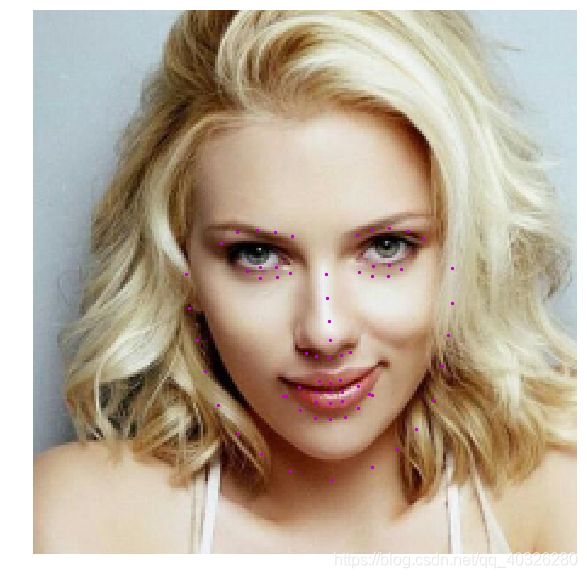

人脸关键点检测,是输入一张人脸图片,模型会返回人脸关键点的一系列坐标,从而定位到人脸的关键信息。

![]()

人脸关键点检测是人脸识别和分析领域中的关键一步,它是诸如自动人脸识别、表情分析、三维人脸重建及三维动画等其它人脸相关问题的前提和突破口。近些年来,深度学习方法由于其自动学习及持续学习能力,已被成功应用到了图像识别与分析、语音识别和自然语言处理等很多领域,且在这些方面都带来了很显著的改善。因此,本文针对深度学习方法进行了人脸关键点检测的研究。

人脸关键点检测深度学习方法综述

Deep Convolutional Network Cascade for Facial Point Detection

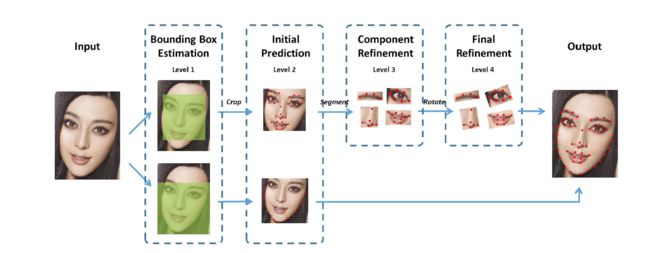

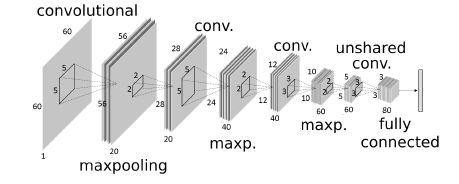

2013 年,Sun 等人首次将 CNN 应用到人脸关键点检测,提出一种级联的 CNN(拥有三个层级)——DCNN(Deep Convolutional Network),此种方法属于级联回归方法。作者通过精心设计拥有三个层级的级联卷积神经网络,不仅改善初始不当导致陷入局部最优的问题,而且借助于 CNN 强大的特征提取能力,获得更为精准的关键点检测。

如图所示,DCNN 由三个 Level 构成。Level-1 由 3 个 CNN 组成;Level-2 由 10 个 CNN 组成(每个关键点采用两个 CNN);Level-3 同样由 10 个 CNN 组成。

DCNN 采用级联回归的思想,从粗到精的逐步得到精确的关键点位置,不仅设计了三级级联的卷积神经网络,还引入局部权值共享机制,从而提升网络的定位性能。最终在数据集 BioID 和 LFPW 上均获得当时最优结果。速度方面,采用 3.3GHz 的 CPU,每 0.12 秒检测一张图片的 5 个关键点。

Extensive Facial Landmark Localization with Coarse-to-fine Convolutional Network Cascade

2013 年,Face++在 DCNN 模型上进行改进,提出从粗到精的人脸关键点检测算法,实现了 68 个人脸关键点的高精度定位。该算法将人脸关键点分为内部关键点和轮廓关键点,内部关键点包含眉毛、眼睛、鼻子、嘴巴共计 51 个关键点,轮廓关键点包含 17 个关键点。

针对内部关键点和外部关键点,该算法并行的采用两个级联的 CNN 进行关键点检测,网络结构如图所示。

算法主要创新点由以下三点:

- 把人脸的关键点定位问题,划分为内部关键点和轮廓关键点分开预测,有效的避免了 loss 不均衡问题

- 在内部关键点检测部分,并未像 DCNN 那样每个关键点采用两个 CNN 进行预测,而是每个器官采用一个 CNN 进行预测,从而减少计算量

- 相比于 DCNN,没有直接采用人脸检测器返回的结果作为输入,而是增加一个边界框检测层(Level-1),可以大大提高关键点粗定位网络的精度。

Face++版 DCNN 首次利用卷积神经网络进行 68 个人脸关键点检测,针对以往人脸关键点检测受人脸检测器影响的问题,作者设计 Level-1 卷积神经网络进一步提取人脸边界框,为人脸关键点检测获得更为准确的人脸位置信息,最终在当年 300-W 挑战赛上获得领先成绩。

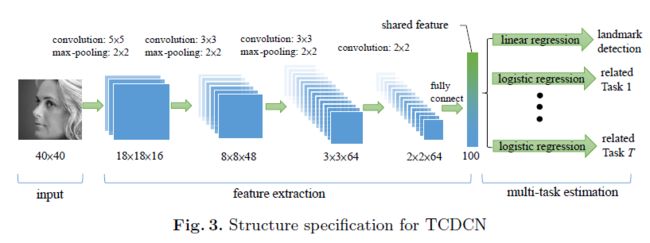

TCDCN-Facial Landmark Detection by Deep Multi-task Learning

优点是快和多任务,不仅使用简单的端到端的人脸关键点检测方法,而且能够做到去分辨人脸的喜悦、悲伤、愤怒等分类标签属性,这样跟文章的标题或者说是文章的主题贴合——多任务。

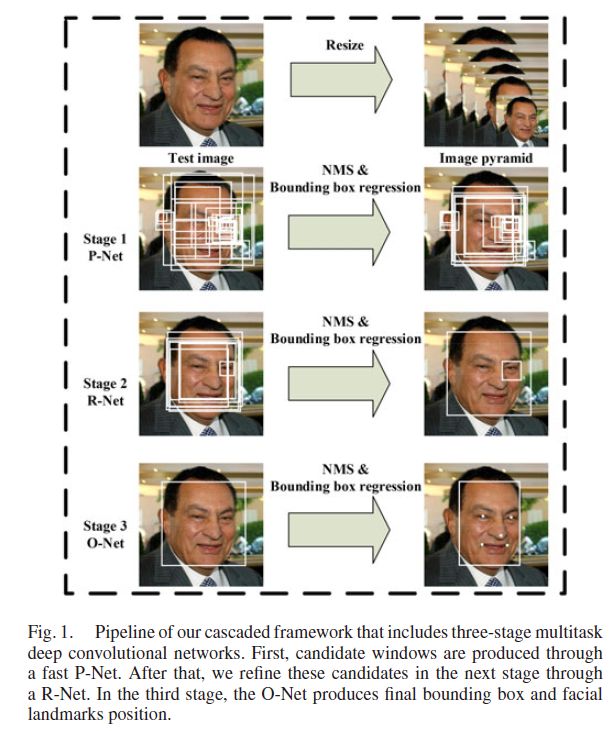

Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks

2016 年,Zhang 等人提出一种多任务级联卷积神经网络(MTCNN, Multi-task Cascaded Convolutional Networks)用以同时处理人脸检测和人脸关键点定位问题。作者认为人脸检测和人脸关键点检测两个任务之间往往存在着潜在的联系,然而大多数方法都未将两个任务有效的结合起来,本文为了充分利用两任务之间潜在的联系,提出一种多任务级联的人脸检测框架,将人脸检测和人脸关键点检测同时进行。

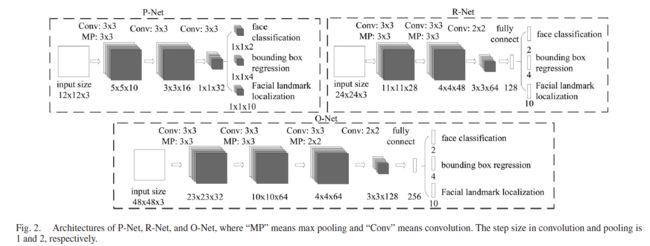

MTCNN 包含三个级联的多任务卷积神经网络,分别是 Proposal Network (P-Net)、Refine Network (R-Net)、Output Network (O-Net),每个多任务卷积神经网络均有三个学习任务,分别是人脸分类、边框回归和关键点定位。网络结构如图所示:

MTCNN 实现人脸检测和关键点定位分为三个阶段。首先由 P-Net 获得了人脸区域的候选窗口和边界框的回归向量,并用该边界框做回归,对候选窗口进行校准,然后通过非极大值抑制(NMS)来合并高度重叠的候选框。然后将 P-Net 得出的候选框作为输入,输入到 R-Net,R-Net 同样通过边界框回归和 NMS 来去掉那些 false-positive 区域,得到更为准确的候选框;最后,利用 O-Net 输出 5 个关键点的位置。

DAN(Deep Alignment Networks)

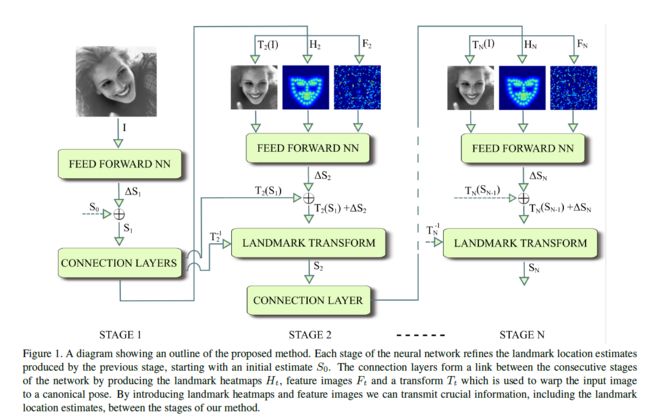

2017 年,Kowalski 等人提出一种新的级联深度神经网络——DAN(Deep Alignment Network),以往级联神经网络输入的是图像的某一部分,与以往不同,DAN 各阶段网络的输入均为整张图片。当网络均采用整张图片作为输入时,DAN 可以有效的克服头部姿态以及初始化带来的问题,从而得到更好的检测效果。之所以 DAN 能将整张图片作为输入,是因为其加入了关键点热图(Landmark Heatmaps),关键点热图的使用是本文的主要创新点。DAN 基本框架如图所示:

DAN 包含多个阶段,每一个阶段含三个输入和一个输出,输入分别是被矫正过的图片、关键点热图和由全连接层生成的特征图,输出是面部形状(Face Shape)。其中,CONNECTION LAYER 的作用是将本阶段得输出进行一系列变换,生成下一阶段所需要的三个输入

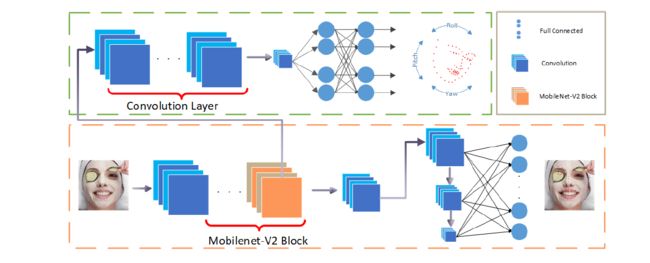

PFLD: A Practical Facial Landmark Detector

这个人脸检测算法PFLD,全文名称为《PFLD: A Practical Facial Landmark Detector》。作者分别来自天津大学、武汉大学、腾讯AI实验室、美国天普大学。该算法对嵌入式设备非常优化,在骁龙845的芯片中效率可达140fps;另外模型大小较小,仅2.1MB;此外在许多关键点检测的benchmark中也取得了相当好的结果。综上,该算法在实际的应用场景中(如低算力的端上设备)有很大的应用空间。

PFLD模型设计

在模型设计上,PFLD的模型设计上骨干网络没有采用VGG16、ResNet等大模型,但是为了增加模型的表达能力,对Mobilenet的输出特征进行了结构上的修改。

PFLD的模型训练策略

一开始我们设计的那个简单的网络,采用的损失函数为MSE,所以为了平衡各种情况的训练数据,我们只能通过增加极端情况下的训练数据、平衡各类情况下的训练数据的比例、控制数据数据的采样形式(非完全随机采样)等方式进行性能调优。

-

损失函数设计

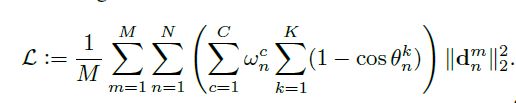

PFLD采用了一种很优雅的方式来处理上述各情况样本不均衡的问题,我们先看看其损失函数的设计:

上式中 wn为可调控的权值函数(针对不同的情况选取不同的权值,如正常情况、遮挡情况、暗光情况等等),theta为人脸姿态的三维欧拉角(K=3),d为回归的landmark和groundtrue的度量(一般情况下为MSE,也可以选L1度量)。该损失函数设计的目的是,对于样本量比较大的数据(如正脸,即欧拉角都相对较小的情况),给予一个小的权值,在进行梯度的反向传播的时候,对模型训练的贡献小一些;对于样本量比较少的数据(侧脸、低头、抬头、表情极端),给予一个较大的权值,从而使在进行梯度的反向传播的时候,对模型训练的贡献大一些。该模型的损失函数的设计,非常巧妙的解决了平衡各类情况训练样本不均衡的问题。

-

配合训练的子网络

PFLD的训练过程中引入了一个子网络,用以监督PFLD网络模型的训练。该子网络仅在训练的阶段起作用,在inference的时候不参与;该子网络的用处,是对于每一个输入的人脸样本,对该样本进行三维欧拉角的估计,其groundtruth由训练数据中的关键点信息进行估计,虽然估计的不够精确,但是作为区分数据分布的依据已经足够了,毕竟还该网络的目的是监督和辅助训练收敛,主要是为了服务关键点检测网络。有一个地方挺有意思的是,该子网络的输入不是训练数据,而是PFLD主网络的中间输出,如下图:

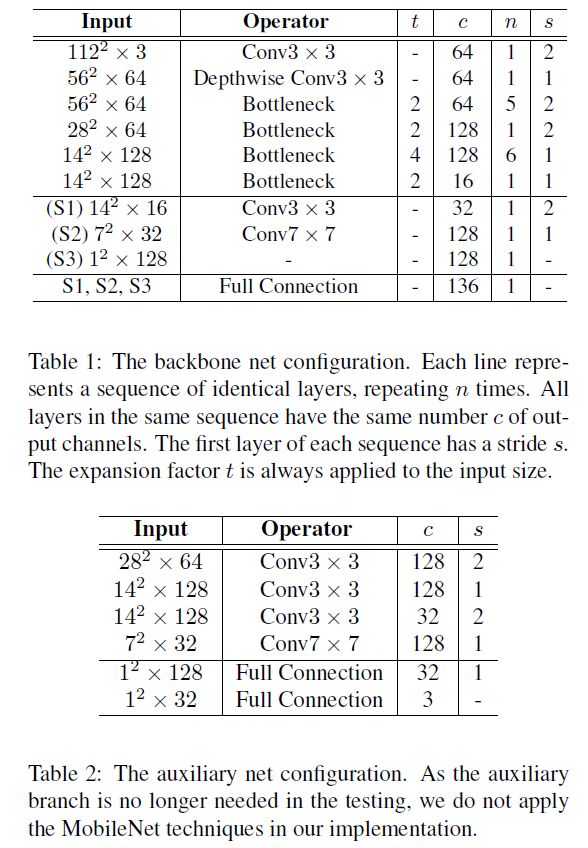

主网络和姿态估计子网络的详细配置如下表:

# 环境导入

import os

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

import cv2

import paddle

paddle.set_device('gpu') # 设置为GPU

import warnings

warnings.filterwarnings('ignore') # 忽略 warning

paddle.__version__

'2.0.0'

二、数据准备

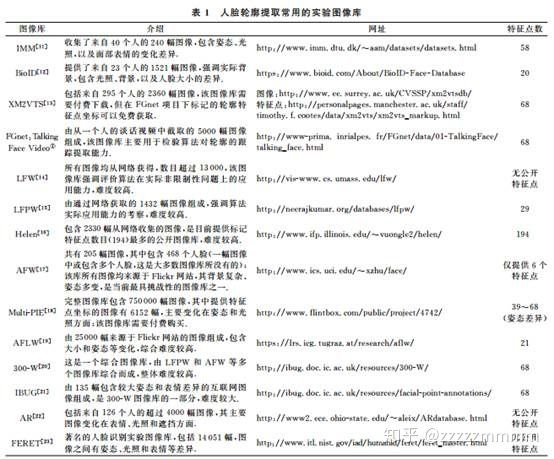

传统人脸关键点检测数据库为室内环境下采集的数据库,比如Multi-pie、Feret、Frgc、AR、BioID 等人脸数据库。而现阶段人脸关键点检测数据库通常为复杂环境下采集的数据库.LFPW 人脸数据库有 1132 幅训练人脸图像和 300 幅测试人脸图像,大部分为正面人脸图像,每个人脸标定 29 个关键点。AFLW 人脸数据库包含 25993 幅从 Flickr 采集的人脸图像,每个人脸标定 21 个关键点。COFW 人脸数据库包含 LFPW 人脸数据库训练集中的 845 幅人脸图像以及其他 500 幅遮挡人脸图像,而测试集为 507 幅严重遮挡(同时包含姿态和表情的变化)的人脸图像,每个人脸标定 29 个关键点。MVFW 人脸数据库为多视角人脸数据集,包括 2050 幅训练人脸图像和 450 幅测试人脸图像,每个人脸标定 68 个关键点。OCFW 人脸数据库包含 2951 幅训练人脸图像(均为未遮挡人脸)和 1246 幅测试人脸图像(均为遮挡人脸),每个人脸标定 68 个关键点。

2.1 下载数据集

本次实验所采用的数据集来源为github的开源项目

目前该数据集已上传到 AI Studio 人脸关键点识别,加载后可以直接使用下面的命令解压。

# 覆盖且不显示

# !unzip -o -q data/data69065/data.zip

解压后的数据集结构为

data/

|—— test

| |—— Abdel_Aziz_Al-Hakim_00.jpg

... ...

|—— test_frames_keypoints.csv

|—— training

| |—— Abdullah_Gul_10.jpg

... ...

|—— training_frames_keypoints.csv

其中,training 和 test 文件夹分别存放训练集和测试集。training_frames_keypoints.csv 和 test_frames_keypoints.csv 存放着训练集和测试集的标签。接下来,我们先来观察一下 training_frames_keypoints.csv 文件,看一下训练集的标签是如何定义的。

key_pts_frame = pd.read_csv('data/training_frames_keypoints.csv') # 读取数据集

print('Number of images: ', key_pts_frame.shape[0]) # 输出数据集大小

key_pts_frame.head(5) # 看前五条数据

Number of images: 3462

| Unnamed: 0 | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | ... | 126 | 127 | 128 | 129 | 130 | 131 | 132 | 133 | 134 | 135 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | Luis_Fonsi_21.jpg | 45.0 | 98.0 | 47.0 | 106.0 | 49.0 | 110.0 | 53.0 | 119.0 | 56.0 | ... | 83.0 | 119.0 | 90.0 | 117.0 | 83.0 | 119.0 | 81.0 | 122.0 | 77.0 | 122.0 |

| 1 | Lincoln_Chafee_52.jpg | 41.0 | 83.0 | 43.0 | 91.0 | 45.0 | 100.0 | 47.0 | 108.0 | 51.0 | ... | 85.0 | 122.0 | 94.0 | 120.0 | 85.0 | 122.0 | 83.0 | 122.0 | 79.0 | 122.0 |

| 2 | Valerie_Harper_30.jpg | 56.0 | 69.0 | 56.0 | 77.0 | 56.0 | 86.0 | 56.0 | 94.0 | 58.0 | ... | 79.0 | 105.0 | 86.0 | 108.0 | 77.0 | 105.0 | 75.0 | 105.0 | 73.0 | 105.0 |

| 3 | Angelo_Reyes_22.jpg | 61.0 | 80.0 | 58.0 | 95.0 | 58.0 | 108.0 | 58.0 | 120.0 | 58.0 | ... | 98.0 | 136.0 | 107.0 | 139.0 | 95.0 | 139.0 | 91.0 | 139.0 | 85.0 | 136.0 |

| 4 | Kristen_Breitweiser_11.jpg | 58.0 | 94.0 | 58.0 | 104.0 | 60.0 | 113.0 | 62.0 | 121.0 | 67.0 | ... | 92.0 | 117.0 | 103.0 | 118.0 | 92.0 | 120.0 | 88.0 | 122.0 | 84.0 | 122.0 |

5 rows × 137 columns

上表中每一行都代表一条数据,其中,第一列是图片的文件名,之后从第0列到第135列,就是该图的关键点信息。因为每个关键点可以用两个坐标表示,所以 136/2 = 68,就可以看出这个数据集为68点人脸关键点数据集。

Tips1: 目前常用的人脸关键点标注,有如下点数的标注

- 5点

- 21点

- 68点

- 98点

Tips2:本次所采用的68标注,标注顺序如下:

![]()

# 计算标签的均值和标准差,用于标签的归一化

key_pts_values = key_pts_frame.values[:,1:] # 取出标签信息

data_mean = key_pts_values.mean() # 计算均值

data_std = key_pts_values.std() # 计算标准差

print('标签的均值为:', data_mean)

print('标签的标准差为:', data_std)

标签的均值为: 104.4724870017331

标签的标准差为: 43.17302271754281

2.2 查看图像

def show_keypoints(image, key_pts):

"""

Args:

image: 图像信息

key_pts: 关键点信息,

展示图片和关键点信息

"""

plt.imshow(image.astype('uint8')) # 展示图片信息

for i in range(len(key_pts)//2,):

plt.scatter(key_pts[i*2], key_pts[i*2+1], s=20, marker='.', c='b') # 展示关键点信息 蓝色散点

# 展示单条数据

n = int(np.random.randint(1, 3462, size=1)) # n为数据在表格中的索引

image_name = key_pts_frame.iloc[n, 0] # 获取图像名称

key_pts = key_pts_frame.iloc[n, 1:].as_matrix() # 将图像label格式转为numpy.array的格式

key_pts = key_pts.astype('float').reshape(-1) # 获取图像关键点信息

print(key_pts.shape)

plt.figure(figsize=(10, 10)) # 展示的图像大小

show_keypoints(mpimg.imread(os.path.join('data/training/', image_name)), key_pts) # 展示图像与关键点信息

plt.show() # 展示图像

(136,)

2.3 数据集定义

使用飞桨框架高层API的 paddle.io.Dataset 自定义数据集类,具体可以参考官网文档 自定义数据集。

作业1:自定义 Dataset,完成人脸关键点数据集定义

按照 __init__ 中的定义,实现 __getitem__ 和 __len__.

# 按照Dataset的使用规范,构建人脸关键点数据集

from paddle.io import Dataset

class FacialKeypointsDataset(Dataset):

# 人脸关键点数据集

"""

步骤一:继承paddle.io.Dataset类

"""

def __init__(self, csv_file, root_dir, transform=None):

"""

步骤二:实现构造函数,定义数据集大小

Args:

csv_file (string): 带标注的csv文件路径

root_dir (string): 图片存储的文件夹路径

transform (callable, optional): 应用于图像上的数据处理方法

"""

self.key_pts_frame = pd.read_csv(csv_file) # 读取csv文件

self.root_dir = root_dir # 获取图片文件夹路径

self.transform = transform # 获取 transform 方法

def __getitem__(self, idx):

"""

步骤三:实现__getitem__方法,定义指定index时如何获取数据,并返回单条数据(训练数据,对应的标签)

"""

# 实现 __getitem__

image_dir = os.path.join(self.root_dir, self.key_pts_frame.iloc[idx, 0])

# 读取图像

image = mpimg.imread(image_dir)

# 去除图像\alpha通道

if image.shape[-1] == 4:

image = image[..., 0:3]

# 读取关键点

key_pts = self.key_pts_frame.iloc[idx, 1:].as_matrix() # 不读取name

key_pts = key_pts.astype('float').reshape(-1) # [136, 1]

# 数据增强

if self.transform:

image, key_pts = self.transform([image, key_pts])

# to_numpy

image = np.array(image, dtype='float32')

key_pts = np.array(key_pts, dtype='float32')

return image, key_pts

def __len__(self):

"""

步骤四:实现__len__方法,返回数据集总数目

"""

# 实现 __len__

return len(self.key_pts_frame)

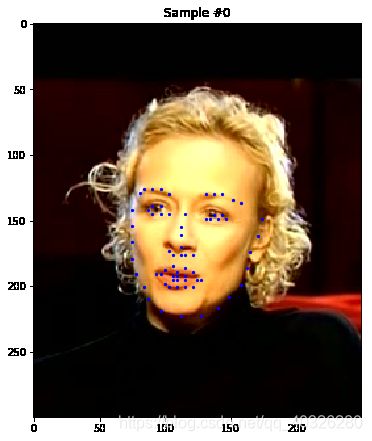

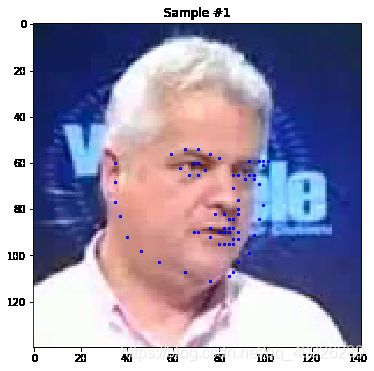

2.4 训练集可视化

实例化数据集并显示一些图像。

# 构建一个数据集类

face_dataset = FacialKeypointsDataset(csv_file='data/training_frames_keypoints.csv',

root_dir='data/training/')

# 输出数据集大小

print('数据集大小为: ', len(face_dataset))

# 根据 face_dataset 可视化数据集

num_to_display = 3

for i in range(num_to_display):

# 定义图片大小

fig = plt.figure(figsize=(20,10))

# 随机选择图片

rand_i = np.random.randint(0, len(face_dataset))

sample = face_dataset[rand_i]

# 输出图片大小和关键点的数量

print(i, sample[0].shape, sample[1].shape)

# 设置图片打印信息

ax = plt.subplot(1, num_to_display, i + 1)

ax.set_title('Sample #{}'.format(i))

# 输出图片

show_keypoints(sample[0], sample[1])

数据集大小为: 3462

0 (300, 250, 3) (136,)

1 (140, 142, 3) (136,)

2 (208, 174, 3) (136,)

上述代码虽然完成了数据集的定义,但是还有一些问题,如:

- 每张图像的大小不一样,图像大小需要统一以适配网络输入要求

- 图像格式需要适配模型的格式输入要求

- 数据量比较小,没有进行数据增强

这些问题都会影响模型最终的性能,所以需要对数据进行预处理。

2.5 Transforms

对图像进行预处理,包括灰度化、归一化、重新设置尺寸、随机裁剪,修改通道格式等等,以满足数据要求;每一类的功能如下:

- 灰度化:丢弃颜色信息,保留图像边缘信息;识别算法对于颜色的依赖性不强,加上颜色后鲁棒性会下降,而且灰度化图像维度下降(3->1),保留梯度的同时会加快计算。

- 归一化:加快收敛

- 重新设置尺寸:数据增强

- 随机裁剪:数据增强

- 修改通道格式:改为模型需要的结构

作业2:实现自定义ToCHW

实现数据预处理方法 ToCHW

# 标准化自定义 transform 方法

class TransformAPI(object):

"""

步骤一:继承 object 类

"""

def __call__(self, data):

"""

步骤二:在 __call__ 中定义数据处理方法

"""

processed_data = data

return processed_data

import paddle.vision.transforms.functional as F

class GrayNormalize(object):

# 将图片变为灰度图,并将其值放缩到[0, 1]

# 将 label 放缩到 [-1, 1] 之间

def __call__(self, data):

image = data[0] # 获取图片

key_pts = data[1] # 获取标签

image_copy = np.copy(image)

key_pts_copy = np.copy(key_pts)

# 灰度化图片

gray_scale = paddle.vision.transforms.Grayscale(num_output_channels=3)

image_copy = gray_scale(image_copy)

# 将图片值放缩到 [0, 1]

image_copy = image_copy / 255.0

# 将坐标点放缩到 [-1, 1]

mean = data_mean # 获取标签均值

std = data_std # 获取标签标准差

key_pts_copy = (key_pts_copy - mean)/std

return image_copy, key_pts_copy

class Resize(object):

# 将输入图像调整为指定大小

def __init__(self, output_size):

assert isinstance(output_size, (int, tuple))

self.output_size = output_size

def __call__(self, data):

image = data[0] # 获取图片

key_pts = data[1] # 获取标签

image_copy = np.copy(image)

key_pts_copy = np.copy(key_pts)

h, w = image_copy.shape[:2]

if isinstance(self.output_size, int):

if h > w:

new_h, new_w = self.output_size * h / w, self.output_size

else:

new_h, new_w = self.output_size, self.output_size * w / h

else:

new_h, new_w = self.output_size

new_h, new_w = int(new_h), int(new_w)

img = F.resize(image_copy, (new_h, new_w))

# scale the pts, too

key_pts_copy[::2] = key_pts_copy[::2] * new_w / w

key_pts_copy[1::2] = key_pts_copy[1::2] * new_h / h

return img, key_pts_copy

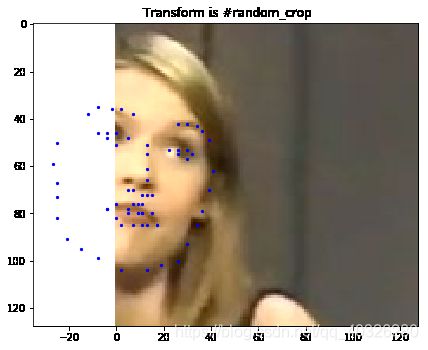

class RandomCrop(object):

# 随机位置裁剪输入的图像

def __init__(self, output_size):

assert isinstance(output_size, (int, tuple))

if isinstance(output_size, int):

self.output_size = (output_size, output_size)

else:

assert len(output_size) == 2

self.output_size = output_size

def __call__(self, data):

image = data[0]

key_pts = data[1]

image_copy = np.copy(image)

key_pts_copy = np.copy(key_pts)

h, w = image_copy.shape[:2]

new_h, new_w = self.output_size

top = np.random.randint(0, h - new_h)

left = np.random.randint(0, w - new_w)

image_copy = image_copy[top: top + new_h,

left: left + new_w]

key_pts_copy[::2] = key_pts_copy[::2] - left

key_pts_copy[1::2] = key_pts_copy[1::2] - top

return image_copy, key_pts_copy

class ToCHW(object):

# 将图像的格式由HWC改为CHW

def __call__(self, data):

# 实现ToCHW,可以使用 paddle.vision.transforms.Transpose 实现

image = data[0]

key_pts = data[1]

transpose = paddle.vision.transforms.Transpose((2, 0, 1)) # [h w c] -> [c h w]

image = transpose(image)

return image, key_pts

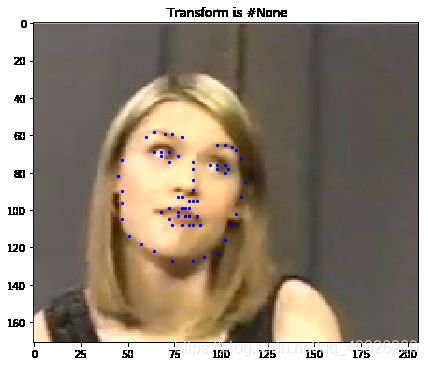

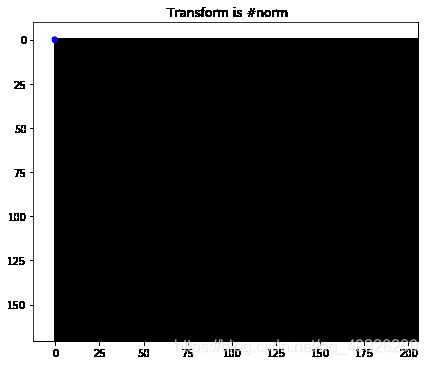

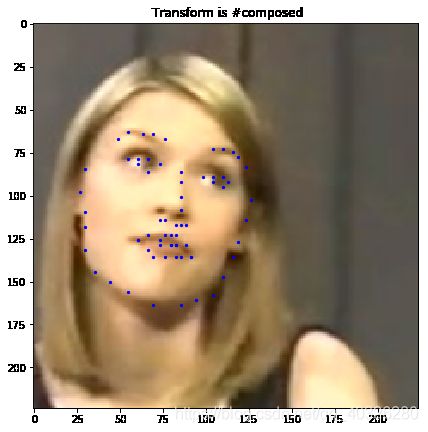

看一下每种图像预处理方法的的效果。

import paddle.vision.transforms as T

# 测试 Resize

resize = Resize(256)

# 测试 RandomCrop

random_crop = RandomCrop(128)

# 测试 GrayNormalize

norm = GrayNormalize()

# 测试 Resize + RandomCrop,图像大小变到250*250, 然后截取出224*224的图像块

composed = paddle.vision.transforms.Compose([Resize(250), RandomCrop(224)])

test_num = 800 # 测试的数据下标

data = face_dataset[test_num]

transforms = {'None': None,

'norm': norm,

'random_crop': random_crop,

'resize': resize,

'composed': composed}

for i, func_name in enumerate(transforms):

# 定义图片大小

fig = plt.figure(figsize=(40, 40))

# 处理图片

if transforms[func_name] != None:

transformed_sample = transforms[func_name](data)

else:

transformed_sample = data

# 设置图片打印信息

ax = plt.subplot(1, 5, i + 1)

ax.set_title(' Transform is #{}'.format(func_name))

# 输出图片

show_keypoints(transformed_sample[0], transformed_sample[1])

2.6 使用数据预处理的方式完成数据定义

让我们将 Resize、RandomCrop、GrayNormalize、ToCHW 应用于新的数据集

from paddle.vision.transforms import Compose

data_transform = Compose([Resize(256), RandomCrop(224), GrayNormalize(), ToCHW()])

# create the transformed dataset

train_dataset = FacialKeypointsDataset(csv_file='data/training_frames_keypoints.csv',

root_dir='data/training/',

transform=data_transform)

print('Number of train dataset images: ', len(train_dataset))

for i in range(2):

sample = train_dataset[i]

print(i, sample[0].shape, sample[1].shape)

test_dataset = FacialKeypointsDataset(csv_file='data/test_frames_keypoints.csv',

root_dir='data/test/',

transform=data_transform)

print('Number of test dataset images: ', len(test_dataset))

Number of train dataset images: 3462

0 (3, 224, 224) (136,)

1 (3, 224, 224) (136,)

Number of test dataset images: 770

3、模型组建

3.1 组网可以很简单

根据前文的分析可知,人脸关键点检测和分类,可以使用同样的网络结构,如LeNet、Resnet50等完成特征的提取,只是在原来的基础上,需要修改模型的最后部分,将输出调整为 人脸关键点的数量*2,即每个人脸关键点的横坐标与纵坐标,就可以完成人脸关键点检测任务了,具体可以见下面的代码,也可以参考官网案例:人脸关键点检测

网络结构如下:

![]()

作业3:根据上图,实现网络结构

import paddle.nn as nn

from paddle.vision.models import resnet50

# Sequential继承

class SimpleNet(paddle.nn.Sequential):

# 实现 __init__

def __init__(self, key_pts):

super(SimpleNet, self).__init__(

paddle.vision.models.resnet50(pretrained=True),

nn.Linear(1000, 512),

nn.ReLU(),

nn.Linear(512, key_pts*2)

)

3.2 网络结构可视化

使用model.summary可视化网络结构。

model = paddle.Model(SimpleNet(key_pts=68))

model.summary((-1, 3, 224, 224))

-------------------------------------------------------------------------------

Layer (type) Input Shape Output Shape Param #

===============================================================================

Conv2D-107 [[1, 3, 224, 224]] [1, 64, 112, 112] 9,408

BatchNorm2D-107 [[1, 64, 112, 112]] [1, 64, 112, 112] 256

ReLU-37 [[1, 64, 112, 112]] [1, 64, 112, 112] 0

MaxPool2D-3 [[1, 64, 112, 112]] [1, 64, 56, 56] 0

Conv2D-109 [[1, 64, 56, 56]] [1, 64, 56, 56] 4,096

BatchNorm2D-109 [[1, 64, 56, 56]] [1, 64, 56, 56] 256

ReLU-38 [[1, 256, 56, 56]] [1, 256, 56, 56] 0

Conv2D-110 [[1, 64, 56, 56]] [1, 64, 56, 56] 36,864

BatchNorm2D-110 [[1, 64, 56, 56]] [1, 64, 56, 56] 256

Conv2D-111 [[1, 64, 56, 56]] [1, 256, 56, 56] 16,384

BatchNorm2D-111 [[1, 256, 56, 56]] [1, 256, 56, 56] 1,024

Conv2D-108 [[1, 64, 56, 56]] [1, 256, 56, 56] 16,384

BatchNorm2D-108 [[1, 256, 56, 56]] [1, 256, 56, 56] 1,024

BottleneckBlock-33 [[1, 64, 56, 56]] [1, 256, 56, 56] 0

Conv2D-112 [[1, 256, 56, 56]] [1, 64, 56, 56] 16,384

BatchNorm2D-112 [[1, 64, 56, 56]] [1, 64, 56, 56] 256

ReLU-39 [[1, 256, 56, 56]] [1, 256, 56, 56] 0

Conv2D-113 [[1, 64, 56, 56]] [1, 64, 56, 56] 36,864

BatchNorm2D-113 [[1, 64, 56, 56]] [1, 64, 56, 56] 256

Conv2D-114 [[1, 64, 56, 56]] [1, 256, 56, 56] 16,384

BatchNorm2D-114 [[1, 256, 56, 56]] [1, 256, 56, 56] 1,024

BottleneckBlock-34 [[1, 256, 56, 56]] [1, 256, 56, 56] 0

Conv2D-115 [[1, 256, 56, 56]] [1, 64, 56, 56] 16,384

BatchNorm2D-115 [[1, 64, 56, 56]] [1, 64, 56, 56] 256

ReLU-40 [[1, 256, 56, 56]] [1, 256, 56, 56] 0

Conv2D-116 [[1, 64, 56, 56]] [1, 64, 56, 56] 36,864

BatchNorm2D-116 [[1, 64, 56, 56]] [1, 64, 56, 56] 256

Conv2D-117 [[1, 64, 56, 56]] [1, 256, 56, 56] 16,384

BatchNorm2D-117 [[1, 256, 56, 56]] [1, 256, 56, 56] 1,024

BottleneckBlock-35 [[1, 256, 56, 56]] [1, 256, 56, 56] 0

Conv2D-119 [[1, 256, 56, 56]] [1, 128, 56, 56] 32,768

BatchNorm2D-119 [[1, 128, 56, 56]] [1, 128, 56, 56] 512

ReLU-41 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-120 [[1, 128, 56, 56]] [1, 128, 28, 28] 147,456

BatchNorm2D-120 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

Conv2D-121 [[1, 128, 28, 28]] [1, 512, 28, 28] 65,536

BatchNorm2D-121 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048

Conv2D-118 [[1, 256, 56, 56]] [1, 512, 28, 28] 131,072

BatchNorm2D-118 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048

BottleneckBlock-36 [[1, 256, 56, 56]] [1, 512, 28, 28] 0

Conv2D-122 [[1, 512, 28, 28]] [1, 128, 28, 28] 65,536

BatchNorm2D-122 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

ReLU-42 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-123 [[1, 128, 28, 28]] [1, 128, 28, 28] 147,456

BatchNorm2D-123 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

Conv2D-124 [[1, 128, 28, 28]] [1, 512, 28, 28] 65,536

BatchNorm2D-124 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048

BottleneckBlock-37 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-125 [[1, 512, 28, 28]] [1, 128, 28, 28] 65,536

BatchNorm2D-125 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

ReLU-43 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-126 [[1, 128, 28, 28]] [1, 128, 28, 28] 147,456

BatchNorm2D-126 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

Conv2D-127 [[1, 128, 28, 28]] [1, 512, 28, 28] 65,536

BatchNorm2D-127 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048

BottleneckBlock-38 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-128 [[1, 512, 28, 28]] [1, 128, 28, 28] 65,536

BatchNorm2D-128 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

ReLU-44 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-129 [[1, 128, 28, 28]] [1, 128, 28, 28] 147,456

BatchNorm2D-129 [[1, 128, 28, 28]] [1, 128, 28, 28] 512

Conv2D-130 [[1, 128, 28, 28]] [1, 512, 28, 28] 65,536

BatchNorm2D-130 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048

BottleneckBlock-39 [[1, 512, 28, 28]] [1, 512, 28, 28] 0

Conv2D-132 [[1, 512, 28, 28]] [1, 256, 28, 28] 131,072

BatchNorm2D-132 [[1, 256, 28, 28]] [1, 256, 28, 28] 1,024

ReLU-45 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-133 [[1, 256, 28, 28]] [1, 256, 14, 14] 589,824

BatchNorm2D-133 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-134 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-134 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

Conv2D-131 [[1, 512, 28, 28]] [1, 1024, 14, 14] 524,288

BatchNorm2D-131 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-40 [[1, 512, 28, 28]] [1, 1024, 14, 14] 0

Conv2D-135 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-135 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-46 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-136 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-136 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-137 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-137 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-41 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-138 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-138 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-47 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-139 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-139 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-140 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-140 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-42 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-141 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-141 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-48 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-142 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-142 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-143 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-143 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-43 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-144 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-144 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-49 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-145 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-145 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-146 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-146 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-44 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-147 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144

BatchNorm2D-147 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

ReLU-50 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-148 [[1, 256, 14, 14]] [1, 256, 14, 14] 589,824

BatchNorm2D-148 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024

Conv2D-149 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144

BatchNorm2D-149 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096

BottleneckBlock-45 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0

Conv2D-151 [[1, 1024, 14, 14]] [1, 512, 14, 14] 524,288

BatchNorm2D-151 [[1, 512, 14, 14]] [1, 512, 14, 14] 2,048

ReLU-51 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 0

Conv2D-152 [[1, 512, 14, 14]] [1, 512, 7, 7] 2,359,296

BatchNorm2D-152 [[1, 512, 7, 7]] [1, 512, 7, 7] 2,048

Conv2D-153 [[1, 512, 7, 7]] [1, 2048, 7, 7] 1,048,576

BatchNorm2D-153 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 8,192

Conv2D-150 [[1, 1024, 14, 14]] [1, 2048, 7, 7] 2,097,152

BatchNorm2D-150 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 8,192

BottleneckBlock-46 [[1, 1024, 14, 14]] [1, 2048, 7, 7] 0

Conv2D-154 [[1, 2048, 7, 7]] [1, 512, 7, 7] 1,048,576

BatchNorm2D-154 [[1, 512, 7, 7]] [1, 512, 7, 7] 2,048

ReLU-52 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 0

Conv2D-155 [[1, 512, 7, 7]] [1, 512, 7, 7] 2,359,296

BatchNorm2D-155 [[1, 512, 7, 7]] [1, 512, 7, 7] 2,048

Conv2D-156 [[1, 512, 7, 7]] [1, 2048, 7, 7] 1,048,576

BatchNorm2D-156 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 8,192

BottleneckBlock-47 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 0

Conv2D-157 [[1, 2048, 7, 7]] [1, 512, 7, 7] 1,048,576

BatchNorm2D-157 [[1, 512, 7, 7]] [1, 512, 7, 7] 2,048

ReLU-53 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 0

Conv2D-158 [[1, 512, 7, 7]] [1, 512, 7, 7] 2,359,296

BatchNorm2D-158 [[1, 512, 7, 7]] [1, 512, 7, 7] 2,048

Conv2D-159 [[1, 512, 7, 7]] [1, 2048, 7, 7] 1,048,576

BatchNorm2D-159 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 8,192

BottleneckBlock-48 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 0

AdaptiveAvgPool2D-3 [[1, 2048, 7, 7]] [1, 2048, 1, 1] 0

Linear-7 [[1, 2048]] [1, 1000] 2,049,000

ResNet-3 [[1, 3, 224, 224]] [1, 1000] 0

Linear-8 [[1, 1000]] [1, 512] 512,512

ReLU-54 [[1, 512]] [1, 512] 0

Linear-9 [[1, 512]] [1, 136] 69,768

===============================================================================

Total params: 26,192,432

Trainable params: 26,086,192

Non-trainable params: 106,240

-------------------------------------------------------------------------------

Input size (MB): 0.57

Forward/backward pass size (MB): 261.50

Params size (MB): 99.92

Estimated Total Size (MB): 361.99

-------------------------------------------------------------------------------

{'total_params': 26192432, 'trainable_params': 26086192}

四、模型训练

4.1 模型配置

训练模型前,需要设置训练模型所需的优化器,损失函数和评估指标。

- 优化器:Adam优化器,快速收敛。

- 损失函数:SmoothL1Loss

- 评估指标:NME

4.2 自定义评估指标

特定任务的 Metric 计算方式在框架既有的 Metric接口中不存在,或算法不符合自己的需求,那么需要我们自己来进行Metric的自定义。这里介绍如何进行Metric的自定义操作,更多信息可以参考官网文档自定义Metric;首先来看下面的代码。

from paddle.metric import Metric

class NME(Metric):

"""

1. 继承paddle.metric.Metric

"""

def __init__(self, name='nme', *args, **kwargs):

"""

2. 构造函数实现,自定义参数即可

"""

super(NME, self).__init__(*args, **kwargs)

self._name = name

self.rmse = 0

self.sample_num = 0

def name(self):

"""

3. 实现name方法,返回定义的评估指标名字

"""

return self._name

def update(self, preds, labels):

"""

4. 实现update方法,用于单个batch训练时进行评估指标计算。

- 当`compute`类函数未实现时,会将模型的计算输出和标签数据的展平作为`update`的参数传入。

"""

N = preds.shape[0]

preds = preds.reshape((N, -1, 2))

labels = labels.reshape((N, -1, 2))

self.rmse = 0

for i in range(N):

pts_pred, pts_gt = preds[i, ], labels[i, ]

interocular = np.linalg.norm(pts_gt[36, ] - pts_gt[45, ])

self.rmse += np.sum(np.linalg.norm(pts_pred - pts_gt, axis=1)) / (interocular * preds.shape[1])

self.sample_num += 1

return self.rmse / N

def accumulate(self):

"""

5. 实现accumulate方法,返回历史batch训练积累后计算得到的评价指标值。

每次`update`调用时进行数据积累,`accumulate`计算时对积累的所有数据进行计算并返回。

结算结果会在`fit`接口的训练日志中呈现。

"""

return self.rmse / self.sample_num

def reset(self):

"""

6. 实现reset方法,每个Epoch结束后进行评估指标的重置,这样下个Epoch可以重新进行计算。

"""

self.rmse = 0

self.sample_num = 0

作业4:实现模型的配置和训练

# 使用 paddle.Model 封装模型

model = paddle.Model(SimpleNet(key_pts=68))

# 配置模型

model.prepare(optimizer=paddle.optimizer.Adam(learning_rate=0.001, weight_decay=5e-4, parameters=model.parameters()),

loss=paddle.nn.SmoothL1Loss(),

metrics=NME())

# 训练可视化VisualDL工具的回调函数

visualdl = paddle.callbacks.VisualDL(log_dir='visualdl_log')

# 模型训练

model.fit(train_dataset,

test_dataset,

epochs=50,

batch_size=64,

shuffle=True,

verbose=1,

save_freq=10,

save_dir='./checkpoints',

callbacks=[visualdl])

The loss value printed in the log is the current step, and the metric is the average value of previous step.

Epoch 1/50

step 55/55 [==============================] - loss: 0.0314 - nme: 3.5256e-04 - 519ms/step

save checkpoint at /home/aistudio/checkpoints/0

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.1009 - nme: 8.2678e-04 - 392ms/step

Eval samples: 770

Epoch 2/50

step 55/55 [==============================] - loss: 0.0243 - nme: 3.5679e-04 - 515ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.1283 - nme: 9.6968e-04 - 384ms/step

Eval samples: 770

Epoch 3/50

step 55/55 [==============================] - loss: 0.0842 - nme: 5.4441e-04 - 516ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.1667 - nme: 0.0011 - 384ms/step

Eval samples: 770

Epoch 4/50

step 55/55 [==============================] - loss: 0.0288 - nme: 3.6530e-04 - 508ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0784 - nme: 6.5352e-04 - 386ms/step

Eval samples: 770

Epoch 5/50

step 55/55 [==============================] - loss: 0.0151 - nme: 2.4205e-04 - 515ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.1389 - nme: 9.6945e-04 - 395ms/step

Eval samples: 770

Epoch 6/50

step 55/55 [==============================] - loss: 0.0566 - nme: 5.3502e-04 - 515ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0740 - nme: 7.7999e-04 - 387ms/step

Eval samples: 770

Epoch 7/50

step 55/55 [==============================] - loss: 0.0764 - nme: 5.0105e-04 - 527ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.1483 - nme: 0.0010 - 394ms/step

Eval samples: 770

Epoch 8/50

step 55/55 [==============================] - loss: 0.0620 - nme: 5.2153e-04 - 517ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0452 - nme: 5.5858e-04 - 388ms/step

Eval samples: 770

Epoch 9/50

step 55/55 [==============================] - loss: 0.0319 - nme: 3.1529e-04 - 548ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0614 - nme: 5.9176e-04 - 389ms/step

Eval samples: 770

Epoch 10/50

step 55/55 [==============================] - loss: 0.0278 - nme: 3.3610e-04 - 535ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0530 - nme: 5.8935e-04 - 395ms/step

Eval samples: 770

Epoch 11/50

step 55/55 [==============================] - loss: 0.0287 - nme: 3.5150e-04 - 539ms/step

save checkpoint at /home/aistudio/checkpoints/10

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0630 - nme: 6.0881e-04 - 391ms/step

Eval samples: 770

Epoch 12/50

step 55/55 [==============================] - loss: 0.0568 - nme: 5.7893e-04 - 519ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.1034 - nme: 7.6108e-04 - 391ms/step

Eval samples: 770

Epoch 13/50

step 55/55 [==============================] - loss: 0.0217 - nme: 3.2146e-04 - 516ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0812 - nme: 7.3246e-04 - 390ms/step

Eval samples: 770

Epoch 14/50

step 55/55 [==============================] - loss: 0.0303 - nme: 4.3396e-04 - 519ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0300 - nme: 4.4063e-04 - 390ms/step

Eval samples: 770

Epoch 15/50

step 55/55 [==============================] - loss: 0.0206 - nme: 3.6483e-04 - 512ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.1166 - nme: 8.2566e-04 - 384ms/step

Eval samples: 770

Epoch 16/50

step 55/55 [==============================] - loss: 0.0557 - nme: 4.9904e-04 - 518ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0294 - nme: 4.3339e-04 - 404ms/step

Eval samples: 770

Epoch 17/50

step 55/55 [==============================] - loss: 0.0132 - nme: 2.2774e-04 - 547ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0422 - nme: 4.7383e-04 - 389ms/step

Eval samples: 770

Epoch 18/50

step 55/55 [==============================] - loss: 0.0101 - nme: 2.0102e-04 - 526ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0657 - nme: 6.2621e-04 - 401ms/step

Eval samples: 770

Epoch 19/50

step 55/55 [==============================] - loss: 0.0243 - nme: 3.7471e-04 - 523ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0342 - nme: 4.0499e-04 - 388ms/step

Eval samples: 770

Epoch 20/50

step 55/55 [==============================] - loss: 0.0149 - nme: 2.2458e-04 - 518ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0324 - nme: 4.5619e-04 - 389ms/step

Eval samples: 770

Epoch 21/50

step 55/55 [==============================] - loss: 0.0239 - nme: 3.0768e-04 - 522ms/step

save checkpoint at /home/aistudio/checkpoints/20

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0590 - nme: 6.0212e-04 - 398ms/step

Eval samples: 770

Epoch 22/50

step 55/55 [==============================] - loss: 0.0136 - nme: 2.5585e-04 - 516ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0358 - nme: 4.5645e-04 - 394ms/step

Eval samples: 770

Epoch 23/50

step 55/55 [==============================] - loss: 0.0138 - nme: 2.3678e-04 - 517ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0431 - nme: 4.7841e-04 - 403ms/step

Eval samples: 770

Epoch 24/50

step 55/55 [==============================] - loss: 0.0118 - nme: 2.5309e-04 - 543ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0551 - nme: 5.4791e-04 - 394ms/step

Eval samples: 770

Epoch 25/50

step 55/55 [==============================] - loss: 0.0194 - nme: 2.6883e-04 - 523ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0606 - nme: 6.2750e-04 - 395ms/step

Eval samples: 770

Epoch 26/50

step 55/55 [==============================] - loss: 0.1156 - nme: 8.1230e-04 - 507ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0253 - nme: 4.3194e-04 - 398ms/step

Eval samples: 770

Epoch 27/50

step 55/55 [==============================] - loss: 0.0211 - nme: 2.9135e-04 - 524ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0242 - nme: 3.5315e-04 - 724ms/step

Eval samples: 770

Epoch 28/50

step 55/55 [==============================] - loss: 0.0257 - nme: 3.4604e-04 - 527ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0165 - nme: 3.0425e-04 - 389ms/step

Eval samples: 770

Epoch 29/50

step 55/55 [==============================] - loss: 0.0275 - nme: 3.3530e-04 - 571ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0216 - nme: 3.7149e-04 - 394ms/step

Eval samples: 770

Epoch 30/50

step 55/55 [==============================] - loss: 0.0183 - nme: 2.9012e-04 - 513ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0315 - nme: 4.3564e-04 - 393ms/step

Eval samples: 770

Epoch 31/50

step 55/55 [==============================] - loss: 0.0163 - nme: 2.7395e-04 - 510ms/step

save checkpoint at /home/aistudio/checkpoints/30

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.1089 - nme: 8.2030e-04 - 400ms/step

Eval samples: 770

Epoch 32/50

step 55/55 [==============================] - loss: 0.0181 - nme: 2.3484e-04 - 516ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0211 - nme: 3.9242e-04 - 401ms/step

Eval samples: 770

Epoch 33/50

step 55/55 [==============================] - loss: 0.0474 - nme: 5.8619e-04 - 531ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0372 - nme: 4.5803e-04 - 395ms/step

Eval samples: 770

Epoch 34/50

step 55/55 [==============================] - loss: 0.0225 - nme: 3.2990e-04 - 512ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0412 - nme: 4.8774e-04 - 392ms/step

Eval samples: 770

Epoch 35/50

step 55/55 [==============================] - loss: 0.0155 - nme: 2.7035e-04 - 517ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0372 - nme: 5.1617e-04 - 396ms/step

Eval samples: 770

Epoch 36/50

step 55/55 [==============================] - loss: 0.0179 - nme: 3.2274e-04 - 520ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0186 - nme: 3.1128e-04 - 396ms/step

Eval samples: 770

Epoch 37/50

step 55/55 [==============================] - loss: 0.0360 - nme: 4.2556e-04 - 581ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0138 - nme: 2.6913e-04 - 389ms/step

Eval samples: 770

Epoch 38/50

step 55/55 [==============================] - loss: 0.0098 - nme: 2.0373e-04 - 523ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0332 - nme: 5.0381e-04 - 390ms/step

Eval samples: 770

Epoch 39/50

step 55/55 [==============================] - loss: 0.0453 - nme: 4.6348e-04 - 518ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0307 - nme: 4.7557e-04 - 392ms/step

Eval samples: 770

Epoch 40/50

step 55/55 [==============================] - loss: 0.0291 - nme: 4.4760e-04 - 528ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0161 - nme: 2.9352e-04 - 395ms/step

Eval samples: 770

Epoch 41/50

step 55/55 [==============================] - loss: 0.0886 - nme: 6.5529e-04 - 562ms/step

save checkpoint at /home/aistudio/checkpoints/40

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0242 - nme: 3.5008e-04 - 392ms/step

Eval samples: 770

Epoch 42/50

step 55/55 [==============================] - loss: 0.0636 - nme: 5.7302e-04 - 553ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0232 - nme: 3.2434e-04 - 393ms/step

Eval samples: 770

Epoch 43/50

step 55/55 [==============================] - loss: 0.0363 - nme: 4.2757e-04 - 521ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0276 - nme: 4.5470e-04 - 383ms/step

Eval samples: 770

Epoch 44/50

step 55/55 [==============================] - loss: 0.0244 - nme: 3.6430e-04 - 522ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0246 - nme: 3.7649e-04 - 574ms/step

Eval samples: 770

Epoch 45/50

step 55/55 [==============================] - loss: 0.0382 - nme: 4.3469e-04 - 521ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0280 - nme: 4.2459e-04 - 409ms/step

Eval samples: 770

Epoch 46/50

step 55/55 [==============================] - loss: 0.0487 - nme: 4.3741e-04 - 523ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0316 - nme: 4.8172e-04 - 390ms/step

Eval samples: 770

Epoch 47/50

step 55/55 [==============================] - loss: 0.0146 - nme: 2.3825e-04 - 515ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0136 - nme: 2.7338e-04 - 391ms/step

Eval samples: 770

Epoch 48/50

step 55/55 [==============================] - loss: 0.0224 - nme: 2.9963e-04 - 526ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0150 - nme: 2.8412e-04 - 394ms/step

Eval samples: 770

Epoch 49/50

step 55/55 [==============================] - loss: 0.0139 - nme: 2.3343e-04 - 514ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0323 - nme: 4.2434e-04 - 388ms/step

Eval samples: 770

Epoch 50/50

step 55/55 [==============================] - loss: 0.0171 - nme: 2.8570e-04 - 515ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 13/13 [==============================] - loss: 0.0187 - nme: 3.2592e-04 - 385ms/step

Eval samples: 770

save checkpoint at /home/aistudio/checkpoints/final

损失函数的选择:L1Loss、L2Loss、SmoothL1Loss的对比

- L1Loss: 在训练后期,预测值与ground-truth差异较小时, 损失对预测值的导数的绝对值仍然为1,此时如果学习率不变,损失函数将在稳定值附近波动,难以继续收敛达到更高精度。

- L2Loss: 在训练初期,预测值与ground-truth差异较大时,损失函数对预测值的梯度十分大,导致训练不稳定。

- SmoothL1Loss: 在x较小时,对x梯度也会变小,而在x很大时,对x的梯度的绝对值达到上限 1,也不会太大以至于破坏网络参数。

模型保存

checkpoints_path = './output/models'

model.save(checkpoints_path, training=True)

五、模型预测

# 定义功能函数

def show_all_keypoints(image, predicted_key_pts):

"""

展示图像,预测关键点

Args:

image:裁剪后的图像 [224, 224, 3]

predicted_key_pts: 预测关键点的坐标

"""

# 展示图像

plt.imshow(image.astype('uint8'))

# 展示关键点

for i in range(0, len(predicted_key_pts), 2):

plt.scatter(predicted_key_pts[i], predicted_key_pts[i+1], s=20, marker='.', c='m')

def visualize_output(test_images, test_outputs, batch_size=1, h=20, w=10):

"""

展示图像,预测关键点

Args:

test_images:裁剪后的图像 [224, 224, 3]

test_outputs: 模型的输出

batch_size: 批大小

h: 展示的图像高

w: 展示的图像宽

"""

if len(test_images.shape) == 3:

test_images = np.array([test_images])

for i in range(batch_size):

plt.figure(figsize=(h, w))

ax = plt.subplot(1, batch_size, i+1)

# 随机裁剪后的图像

image = test_images[i]

# 模型的输出,未还原的预测关键点坐标值

predicted_key_pts = test_outputs[i]

# 还原后的真实的关键点坐标值

predicted_key_pts = predicted_key_pts * data_std + data_mean

# 展示图像和关键点

show_all_keypoints(np.squeeze(image), predicted_key_pts)

plt.axis('off')

plt.show()

# 读取图像

img = mpimg.imread('./test.jpeg')

print(img.shape)

# 关键点占位符

kpt = np.ones((136, 1))

# data_transform = Compose([Resize(256), RandomCrop(224), GrayNormalize(), ToCHW()])

transform = Compose([Resize(256), RandomCrop(224)])

# 对图像先重新定义大小,并裁剪到 224*224的大小

rgb_img, kpt = transform([img, kpt])

norm = GrayNormalize()

to_chw = ToCHW()

# 对图像进行归一化和格式变换

img, kpt = norm([rgb_img, kpt])

img, kpt = to_chw([img, kpt])

img = np.array([img], dtype='float32')

# 加载保存好的模型进行预测

model = paddle.Model(SimpleNet(key_pts=68))

model.load(checkpoints_path)

model.prepare()

# 预测结果

out = model.predict_batch([img])

out = out[0].reshape((out[0].shape[0], 136, -1))

# 可视化

visualize_output(rgb_img, out, batch_size=1)

(847, 700, 3)

使用PaddleHub进行测试

便捷地获取PaddlePaddle生态下的预训练模型,完成模型的管理和一键预测。配合使用Fine-tune API,可以基于大规模预训练模型快速完成迁移学习,让预训练模型能更好地服务于用户特定场景的应用

图像 - 关键点检测->face_landmark_localization

!hub install face_landmark_localization==1.0.2

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/pandas/core/tools/datetimes.py:3: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

from collections import MutableMapping

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/matplotlib/rcsetup.py:20: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

from collections import Iterable, Mapping

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/matplotlib/colors.py:53: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

from collections import Sized

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/nltk/decorators.py:68: DeprecationWarning: `formatargspec` is deprecated since Python 3.5. Use `signature` and the `Signature` object directly

regargs, varargs, varkwargs, defaults, formatvalue=lambda value: ""

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/nltk/lm/counter.py:15: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

from collections import Sequence, defaultdict

Downloading face_landmark_localization

[==================================================] 100.00%

Uncompress /home/aistudio/.paddlehub/tmp/tmp2uffqr16/face_landmark_localization

[==================================================] 100.00%

Successfully installed face_landmark_localization-1.0.2

[0m

import paddlehub as hub

import cv2

face_landmark = hub.Module(name="face_landmark_localization")

# Replace face detection module to speed up predictions but reduce performance

# face_landmark.set_face_detector_module(hub.Module(name="ultra_light_fast_generic_face_detector_1mb_320"))

result = face_landmark.keypoint_detection(images=[cv2.imread('./test.jpeg')])

# or

# result = face_landmark.keypoint_detection(paths=['/PATH/TO/IMAGE'])

[2021-02-06 01:30:35,589] [ INFO] - Installing face_landmark_localization module

[2021-02-06 01:30:35,704] [ INFO] - Module face_landmark_localization already installed in /home/aistudio/.paddlehub/modules/face_landmark_localization

[2021-02-06 01:30:35,707] [ INFO] - Installing ultra_light_fast_generic_face_detector_1mb_640 module

Downloading ultra_light_fast_generic_face_detector_1mb_640

[==================================================] 100.00%

Uncompress /home/aistudio/.paddlehub/tmp/tmp_7n8dm48/ultra_light_fast_generic_face_detector_1mb_640

[==================================================] 100.00%

[2021-02-06 01:30:40,971] [ INFO] - Successfully installed ultra_light_fast_generic_face_detector_1mb_640-1.1.2

image = cv2.imread('./test.jpeg')

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

key_pts = np.array(result[0]['data'])

key_pts = key_pts.reshape((136, -1))

key_pts.shape

(136, 1)

# 设置画幅

plt.figure(figsize=(8, 16))

# 展示图像

plt.imshow(image.astype('uint8'))

# 展示关键点

for i in range(0, len(key_pts), 2):

plt.scatter(key_pts[i], key_pts[i+1], s=20, marker='.', c='m')

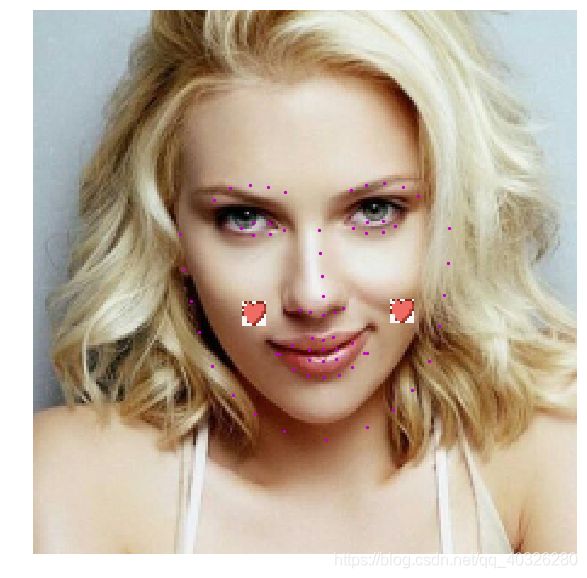

六、趣味应用

当我们得到关键点的信息后,就可以进行一些趣味的应用。

装饰预览

star_image = cv2.imread('ps.jpeg')

star_image = cv2.cvtColor(star_image, cv2.COLOR_BGR2RGB)

# star_image = cv2.resize(star_image, (40, 40))

print(star_image.shape)

plt.imshow(star_image)

(524, 650, 3)

# 定义功能函数

def show_fu(image, predicted_key_pts, size=10):

"""

展示加了贴纸的图像

Args:

image:裁剪后的图像 [224, 224, 3]

predicted_key_pts: 预测关键点的坐标

"""

# 3-32, 36-15

# 计算坐标,15 和 34点的中间值

x1 = (int(predicted_key_pts[28]) + int(predicted_key_pts[70]))//2

y1 = (int(predicted_key_pts[29]) + int(predicted_key_pts[71]))//2

x2 = (int(predicted_key_pts[4]) + int(predicted_key_pts[62]))//2

y2 = (int(predicted_key_pts[5]) + int(predicted_key_pts[63]))//2

# star_image = cv2.imread('rat.jpg')

star_image = cv2.imread('ps.jpeg')

star_image = cv2.cvtColor(star_image, cv2.COLOR_BGR2RGB)

# resize

star_image = cv2.resize(star_image, (size, size))

# 处理通道

if(star_image.shape[2] == 4):

star_image = star_image[:,:,1:4]

# 将小图放到原图上

image[y1:y1+len(star_image[0]), x1:x1+len(star_image[1]),:] = star_image

image[y2:y2+len(star_image[0]), x2:x2+len(star_image[1]),:] = star_image

# 展示处理后的图片

plt.imshow(image.astype('uint8'))

# 展示关键点信息

for i in range(len(predicted_key_pts)//2,):

plt.scatter(predicted_key_pts[i*2], predicted_key_pts[i*2+1], s=20, marker='.', c='m') # 展示关键点信息

def custom_output(test_images, test_outputs, batch_size=1, h=20, w=10):

"""

展示图像,预测关键点

Args:

test_images:裁剪后的图像 [224, 224, 3]

test_outputs: 模型的输出

batch_size: 批大小

h: 展示的图像高

w: 展示的图像宽

"""

if len(test_images.shape) == 3:

test_images = np.array([test_images])

for i in range(batch_size):

plt.figure(figsize=(h, w))

ax = plt.subplot(1, batch_size, i+1)

# 随机裁剪后的图像

image = test_images[i]

# 模型的输出,未还原的预测关键点坐标值

predicted_key_pts = test_outputs[i]

# 还原后的真实的关键点坐标值

predicted_key_pts = predicted_key_pts * data_std + data_mean

# 展示图像和关键点

show_fu(np.squeeze(image), predicted_key_pts)

plt.axis('off')

plt.show()

作业6:实现趣味PS

根据人脸检测的结果,实现趣味PS。

# 读取图像

img = mpimg.imread('./test.jpeg')

# 关键点占位符

kpt = np.ones((136, 1))

transform = Compose([Resize(256), RandomCrop(224)])

# 对图像先重新定义大小,并裁剪到 224*224的大小

rgb_img, kpt = transform([img, kpt])

norm = GrayNormalize()

to_chw = ToCHW()

# 对图像进行归一化和格式变换

img, kpt = norm([rgb_img, kpt])

img, kpt = to_chw([img, kpt])

img = np.array([img], dtype='float32')

# 加载保存好的模型进行预测

# model = paddle.Model(SimpleNet())

# model.load(checkpoints_path)

# model.prepare()

# 预测结果

out = model.predict_batch([img])

out = out[0].reshape((out[0].shape[0], 136, -1))

# 可视化1

custom_output(rgb_img, out, batch_size=1)

# paddleHub 效果可视化

# 设置画幅

plt.figure(figsize=(12, 20))

)]

```python

# paddleHub 效果可视化

# 设置画幅

plt.figure(figsize=(12, 20))

show_fu(image, key_pts, size=40)