RNN Study Notes: Time-Series Prediction of the Sine Function

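While working through the recurrent neural network example in 《Tensorflow实战Google深度学习框架》 (predicting a sin time series), I could not get the source code to run. It kept failing with the following error: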

ValueError: Trying to share variable rnn/multi_rnn_cell/cell_0/basic_lstm_cell/kernel, but specified shape (60, 120) and found shape (40, 120).

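Searching for the cause, I found that someone online had traced it to the multi-layer LSTM setup and fixed it by appending two separate cells to a list. The original [lstm_cell] * NUM_LAYERS places the same cell object in both layers, so the second layer tries to reuse the first layer's kernel variable even though its input size is different.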
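The two shapes in the error message can be checked by hand. The sketch below assumes that a BasicLSTMCell kernel has shape (input_depth + num_units, 4 * num_units), which agrees with the numbers in the traceback. The helper name `lstm_kernel_shape` is made up for illustration and is not a TensorFlow function.

```python
HIDDEN_SIZE = 30   # num_units of each LSTM cell
TIMESTEPS = 10     # depth of the input fed to the first layer


def lstm_kernel_shape(input_depth, num_units):
    """Hypothetical helper: shape of the fused LSTM kernel (assumed layout)."""
    return (input_depth + num_units, 4 * num_units)


# Layer 0 sees the raw input window, layer 1 sees layer 0's output.
layer0 = lstm_kernel_shape(TIMESTEPS, HIDDEN_SIZE)
layer1 = lstm_kernel_shape(HIDDEN_SIZE, HIDDEN_SIZE)

print(layer0)  # (40, 120)
print(layer1)  # (60, 120)
```

Under that assumption, one variable cannot hold both shapes, which is what the ValueError reports.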

- Original code

lstm_cell = tf.contrib.rnn.BasicLSTMCell(HIDDEN_SIZE, state_is_tuple=True)
cell = tf.contrib.rnn.MultiRNNCell([lstm_cell] * NUM_LAYERS)

- Modified code

lstm_cells = []
for i in range(NUM_LAYERS):
    lstm_cells.append(tf.contrib.rnn.BasicLSTMCell(HIDDEN_SIZE, state_is_tuple=True))
cell = tf.contrib.rnn.MultiRNNCell(cells=lstm_cells, state_is_tuple=True)
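The difference between the two versions comes down to how Python builds the list. A minimal sketch, using a stand-in `Cell` class (hypothetical, not a TensorFlow class) so it runs without TensorFlow:

```python
class Cell:
    """Stand-in for an RNN cell; used only to illustrate object identity."""
    pass


# List multiplication repeats a reference to ONE object.
shared = [Cell()] * 2

# Building the list in a loop (or comprehension) creates a new object each time.
separate = [Cell() for _ in range(2)]

print(shared[0] is shared[1])      # True
print(separate[0] is separate[1])  # False
```

With real cells, the first form means every layer points at the same cell and its variables, while the second gives each layer its own.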

1. Complete code

Note: this is for study purposes. The code is lifted from the book's author, so please bear with me.

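# Requires TensorFlow 1.x: tf.contrib was removed in TensorFlow 2.0.
import numpy as np
import tensorflow as tf
from tensorflow.contrib.learn.python.learn.estimators.estimator import SKCompat
from tensorflow.python.ops import array_ops as array_ops_
import matplotlib.pyplot as plt

learn = tf.contrib.learn

HIDDEN_SIZE = 30           # number of units in each LSTM cell
NUM_LAYERS = 2             # number of stacked LSTM layers
TIMESTEPS = 10             # length of each input window
TRAINING_STEPS = 10000     # number of training steps
BATCH_SIZE = 32            # batch size
TRAINING_EXAMPLES = 10000  # number of training samples
TESTING_EXAMPLES = 1000    # number of test samples
SAMPLE_GAP = 0.01          # sampling interval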

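def generate_data(seq):
    """Build (window, next value) pairs from a sine sequence."""
    X = []
    y = []
    # Each window of TIMESTEPS values is the input; the value right after it is the label.
    for i in range(len(seq) - TIMESTEPS):
        X.append([seq[i: i + TIMESTEPS]])
        y.append([seq[i + TIMESTEPS]])
    return np.array(X, dtype=np.float32), np.array(y, dtype=np.float32)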

def lstm_model(X, y):
    # Comment out the following two lines:
    # lstm_cell = tf.contrib.rnn.BasicLSTMCell(HIDDEN_SIZE, state_is_tuple=True)
    # cell = tf.contrib.rnn.MultiRNNCell([lstm_cell] * NUM_LAYERS)

    # and replace them with these four lines, which create one cell per layer:
    lstm_cells = []
    for i in range(NUM_LAYERS):
        lstm_cells.append(tf.contrib.rnn.BasicLSTMCell(HIDDEN_SIZE, state_is_tuple=True))
    cell = tf.contrib.rnn.MultiRNNCell(cells=lstm_cells, state_is_tuple=True)

    output, _ = tf.nn.dynamic_rnn(cell, X, dtype=tf.float32)
    output = tf.reshape(output, [-1, HIDDEN_SIZE])

    # Linear regression through a fully connected layer with no activation function.
    predictions = tf.contrib.layers.fully_connected(output, 1, None)

    # Flatten predictions and labels to the same 1-D shape.
    labels = tf.reshape(y, [-1])
    predictions = tf.reshape(predictions, [-1])

    loss = tf.losses.mean_squared_error(labels=labels, predictions=predictions)

    train_op = tf.contrib.layers.optimize_loss(
        loss, tf.contrib.framework.get_global_step(),
        optimizer="Adagrad", learning_rate=0.1)

    return predictions, loss, train_op

if __name__ == '__main__':

regressor = SKCompat(learn.Estimator(model_fn=lstm_model,model_dir="../src/RNN_Tensorflow/TimeSeries/Models/model_2"))

# 生成数据。

test_start = TRAINING_EXAMPLES * SAMPLE_GAP

test_end = (TRAINING_EXAMPLES + TESTING_EXAMPLES) * SAMPLE_GAP

train_X, train_y = generate_data(np.sin(np.linspace(

0, test_start, TRAINING_EXAMPLES, dtype=np.float32)))

print(train_X.shape)

print(train_y.shape)

test_X, test_y = generate_data(np.sin(np.linspace(

test_start, test_end, TESTING_EXAMPLES, dtype=np.float32)))

# 拟合数据。

regressor.fit(train_X, train_y, batch_size=BATCH_SIZE, steps=TRAINING_STEPS)

# 计算预测值。

predicted = [[pred] for pred in regressor.predict(test_X)]

# 计算MSE。

rmse = np.sqrt(((predicted - test_y) ** 2).mean(axis=0))

print ("Mean Square Error is: %f" % rmse[0])

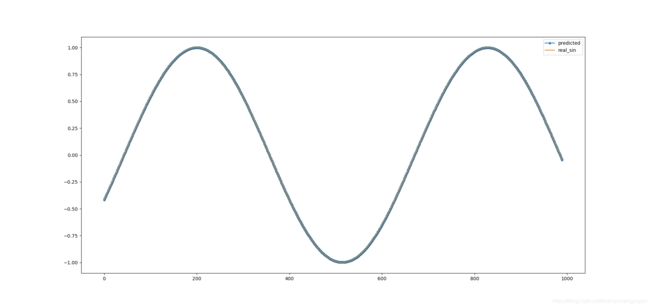

plot_predicted, = plt.plot(predicted, label='predicted', linestyle='-', marker='*')

plot_test, = plt.plot(test_y, label='real_sin')

plt.legend([plot_predicted, plot_test],['predicted', 'real_sin'])

plt.show()
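Two pieces of the listing are easy to check on toy data without TensorFlow: the sliding-window construction in generate_data and the error metric computed at the end. The sketch below is a pure-Python stand-in, not the book's code; `make_windows` and `rmse` are hypothetical names chosen for illustration.

```python
import math


def make_windows(seq, timesteps):
    """Hypothetical pure-Python counterpart of generate_data."""
    X, y = [], []
    for i in range(len(seq) - timesteps):
        X.append([seq[i:i + timesteps]])  # a single step holding one window
        y.append([seq[i + timesteps]])    # the value right after the window
    return X, y


def rmse(predicted, actual):
    """Root of the mean of squared differences."""
    squared = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared) / len(squared))


X, y = make_windows([0, 1, 2, 3, 4, 5], timesteps=3)
print(X)  # [[[0, 1, 2]], [[1, 2, 3]], [[2, 3, 4]]]
print(y)  # [[3], [4], [5]]

# Both errors are 2, so the MSE is 4 and the RMSE is 2.
print(rmse([2.0, 2.0], [0.0, 4.0]))  # 2.0
```

The extra pair of brackets around each window is why train_X in the listing has shape (samples, 1, TIMESTEPS): each sample is fed to the RNN as one step whose feature vector is the whole window.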