Text Classification with PyTorch

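THUCNews: this project builds news text classification models on the THUCNews dataset with an RNN (BiLSTM) and a CNN. The text is tokenized to build a vocabulary, and the tokenized text then goes through frequency filtering, padding or truncation to a fixed length, and conversion to index sequences. On top of the resulting vocabulary, two models are built, embedding + BiLSTM and embedding + CNN, to classify the news texts. Accuracy, loss, and other values are logged with tensorboardX and visualized in TensorBoard.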

Directory structure

text

│  run.py
│  train_eval.py
│  utils.py
│  utils_fasttext.py
│
├─models
│  │  TextCNN.py
│  │  TextRNN.py
│  │
│  └─__pycache__
│          TextCNN.cpython-36.pyc
│          TextRNN.cpython-36.pyc
│
├─THUCNews
│  ├─data
│  │      class.txt
│  │      dev.txt
│  │      embedding_SougouNews.npz
│  │      embedding_Tencent.npz
│  │      test.txt
│  │      train.txt
│  │      vocab.pkl
│  │
│  ├─log
│  │  ├─TextCNN
│  │  │  └─10-25_01.55
│  │  │          events.out.tfevents.1603562104.LAPTOP-OAK2FBND
│  │  │
│  │  └─TextRNN
│  │      └─10-25_02.30
│  │              events.out.tfevents.1603564209.LAPTOP-OAK2FBND
│  │
│  └─saved_dict
│          model.ckpt
│          TextCNN.ckpt
│          TextRNN.ckpt
│
└─__pycache__
        train_eval.cpython-36.pyc
        utils.cpython-36.pyc

Text classification with an RNN (BiLSTM)

utils.py

import os

import torch

import numpy as np

import pickle

from tqdm import tqdm

import time

from datetime import timedelta

MAX_VOCAB_SIZE = 10000  # maximum vocabulary size

UNK = '<UNK>'  # token for out-of-vocabulary words

PAD = '<PAD>'  # padding token

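def build_vocab(file_path, tokenizer, max_size, min_freq):
    """
    Build the vocabulary: a dict mapping each token to an integer index.
    :param file_path: path to the training file
    :param tokenizer: callable that splits a text into words or characters
    :param max_size: maximum number of tokens kept in the vocabulary
    :param min_freq: minimum frequency a token needs in order to be kept
    :return: dict {token: index}, with UNK and PAD appended last
    """
    vocab_dict = {}
    with open(file_path, 'r', encoding='utf-8') as f:
        for line in tqdm(f):
            line = line.strip()
            if not line:
                continue
            # Each line looks like '词汇阅读是关键 08年考研暑期英语复习全指南\t3';
            # split('\t') gives ['词汇阅读是关键 08年考研暑期英语复习全指南', '3'],
            # where [0] is the text and [1] is the label.
            content = line.split('\t')[0]
            for word in tokenizer(content):
                vocab_dict[word] = vocab_dict.get(word, 0) + 1  # count occurrences of each token
    # Drop tokens rarer than min_freq, sort by frequency (descending), keep the top max_size: [(word, count), ...]
    vocab_list = sorted([_ for _ in vocab_dict.items() if _[1] >= min_freq], key=lambda x: x[1], reverse=True)[:max_size]
    # Map each kept token to its rank, so the values are indices rather than counts
    vocab_dict = {word_count[0]: idx for idx, word_count in enumerate(vocab_list)}
    # Append UNK and PAD with indices len(vocab_dict) and len(vocab_dict) + 1
    vocab_dict.update({UNK: len(vocab_dict), PAD: len(vocab_dict) + 1})
    return vocab_dict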

def build_dataset(config, use_word):
    """
    Pad or truncate every sentence to a fixed length and convert tokens to index sequences.
    Returns the vocabulary and the training, validation, and test sets.
    :param config: model configuration object (paths, pad_size, ...)
    :param use_word: True to split on spaces (word level), False to split into single characters
    :return: vocab, train, dev, test
    """
    if use_word:
        tokenizer = lambda x: x.split(' ')  # word level: split on spaces
    else:
        tokenizer = lambda x: [i for i in x]  # character level
    # Load the saved vocabulary if it exists; otherwise build it and save it
    if os.path.exists(config.vocab_path):
        vocab = pickle.load(open(config.vocab_path, 'rb'))
    else:
        vocab = build_vocab(config.train_path, tokenizer=tokenizer, max_size=MAX_VOCAB_SIZE, min_freq=1)
        pickle.dump(vocab, open(config.vocab_path, 'wb'))
    print(f'Vocab size:{len(vocab)}')

    def load_dataset(path, pad_size=32):
        contents = []
        with open(path, 'r', encoding='utf-8') as f:
            for line in tqdm(f):
                line = line.strip()
                if not line:
                    continue
                content, label = line.split('\t')
                words_line = []
                token = tokenizer(content)
                seq_len = len(token)
                # Pad sentences shorter than pad_size with PAD tokens; truncate longer ones
                if pad_size:
                    if len(token) < pad_size:
                        token.extend([PAD] * (pad_size - seq_len))
                    else:
                        token = token[:pad_size]
                        seq_len = pad_size
                # Convert tokens to indices; tokens missing from the vocabulary map to UNK
                for word in token:
                    words_line.append(vocab.get(word, vocab.get(UNK)))
                contents.append((words_line, int(label), seq_len))
        return contents

    # Build the training, validation, and test sets
    train = load_dataset(config.train_path, config.pad_size)
    dev = load_dataset(config.dev_path, config.pad_size)
    test = load_dataset(config.test_path, config.pad_size)
    return vocab, train, dev, test

class DatasetIterator(object):
    # Iterates over the dataset in mini-batches
    def __init__(self, batches, batch_size, device):
        self.batches = batches
        self.batch_size = batch_size
        self.n_batches = len(batches) // batch_size
        self.residue = False  # True when a final, smaller batch is left over
        if len(batches) % batch_size != 0:
            self.residue = True
        self.index = 0
        self.device = device

    def _to_tensor(self, datas):
        # Convert a list of samples to tensors
        # Each sample is (token indices, label, seq_len)
        x = torch.LongTensor([_[0] for _ in datas]).to(self.device)
        y = torch.LongTensor([_[1] for _ in datas]).to(self.device)
        # Length before padding (capped at pad_size)
        seq_len = torch.LongTensor([_[2] for _ in datas]).to(self.device)
        return (x, seq_len), y

    def __next__(self):
        if self.residue and self.index == self.n_batches:
            # Last, smaller batch
            batches = self.batches[self.index * self.batch_size:len(self.batches)]
            self.index += 1
            batches = self._to_tensor(batches)
            return batches
        elif self.index >= self.n_batches:
            self.index = 0
            raise StopIteration
        else:
            batches = self.batches[self.index * self.batch_size:(self.index + 1) * self.batch_size]
            self.index += 1
            batches = self._to_tensor(batches)
            return batches

    def __iter__(self):
        return self

    def __len__(self):
        if self.residue:
            return self.n_batches + 1
        else:
            return self.n_batches

def build_iterator(dataset, config):
    iter = DatasetIterator(dataset, config.batch_size, config.device)
    return iter

def get_time_dif(start_time):
    """
    Return the time elapsed since start_time
    :param start_time: start timestamp from time.time()
    :return: elapsed time as a timedelta, rounded to whole seconds
    """
    end_time = time.time()
    time_dif = end_time - start_time
    return timedelta(seconds=int(round(time_dif)))

if __name__ == '__main__':
    # Extract the pretrained vectors for the tokens in the vocabulary
    train_dir = "./THUCNews/data/train.txt"
    vocab_dir = "./THUCNews/data/vocab.pkl"
    pretrain_dir = "./THUCNews/data/sgns.sogou.char"
    embedding_dim = 300  # dimension of each word vector
    filename_trimmed_dir = "./THUCNews/data/embedding_SougouNews"
    if os.path.exists(vocab_dir):
        word2seq = pickle.load(open(vocab_dir, 'rb'))
    else:
        # tokenizer = lambda x : x.split(' ')
        tokenizer = lambda x: [i for i in x]
        word2seq = build_vocab(train_dir, tokenizer=tokenizer, max_size=MAX_VOCAB_SIZE, min_freq=1)
        pickle.dump(word2seq, open(vocab_dir, 'wb'))
    # Tokens without a pretrained vector keep their random initialization
    embeddings = np.random.rand(len(word2seq), embedding_dim)
    with open(pretrain_dir, 'r', encoding='utf-8') as f:
        for i, line in enumerate(f.readlines()):
            # Skip the first line, assuming it is a header
            if i == 0:
                continue
            # Assumes space-separated fields, as in utils_fasttext.py
            line = line.strip().split(' ')
            if line[0] in word2seq:
                idx = word2seq[line[0]]
                emd = [float(x) for x in line[1:301]]
                embeddings[idx] = np.asarray(emd, dtype='float32')
    np.savez_compressed(filename_trimmed_dir, embeddings=embeddings)
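To make the preprocessing steps in utils.py concrete, here is a minimal, self-contained sketch that runs on a tiny in-memory corpus instead of the THUCNews files. The helper names `toy_build_vocab` and `encode` and the sample strings are made up for illustration; the logic mirrors the frequency filtering, padding or truncation, and index conversion described above, and is not the author's exact code.

```python
from collections import Counter

UNK, PAD = '<UNK>', '<PAD>'  # assumed special tokens


def toy_build_vocab(texts, tokenizer, max_size, min_freq):
    """Map the most frequent tokens to indices; UNK and PAD come last."""
    counts = Counter(tok for text in texts for tok in tokenizer(text))
    kept = [w for w, c in counts.most_common() if c >= min_freq][:max_size]
    vocab = {w: i for i, w in enumerate(kept)}
    vocab.update({UNK: len(vocab), PAD: len(vocab) + 1})
    return vocab


def encode(text, vocab, tokenizer, pad_size):
    """Pad or truncate to pad_size, then convert tokens to indices."""
    tokens = tokenizer(text)
    seq_len = min(len(tokens), pad_size)
    tokens = tokens[:pad_size] + [PAD] * (pad_size - seq_len)
    ids = [vocab.get(tok, vocab[UNK]) for tok in tokens]
    return ids, seq_len


char_tokenizer = lambda s: list(s)  # character level, as with use_word=False
corpus = ["aab", "abc"]             # made-up corpus: a appears 3 times, b twice, c once
vocab = toy_build_vocab(corpus, char_tokenizer, max_size=10, min_freq=2)
ids, seq_len = encode("abz", vocab, char_tokenizer, pad_size=5)
print(vocab)         # {'a': 0, 'b': 1, '<UNK>': 2, '<PAD>': 3}
print(ids, seq_len)  # [0, 1, 2, 3, 3] 3
```

The unseen character z maps to the UNK index and the two trailing positions map to the PAD index, while seq_len records the length before padding.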

utils_fasttext.py

# The corresponding bigram features are: 我/来到 来到/达观数据 达观数据/参观

#

# The corresponding trigram features are: 我/来到/达观数据 来到/达观数据/参观

import os

import torch

import numpy as np

import pickle as pkl

from tqdm import tqdm

import time

from datetime import timedelta

MAX_VOCAB_SIZE = 10000

UNK, PAD = '<UNK>', '<PAD>'  # out-of-vocabulary token, padding token

def build_vocab(file_path, tokenizer, max_size, min_freq):
    vocab_dic = {}
    with open(file_path, 'r', encoding='UTF-8') as f:
        for line in tqdm(f):
            lin = line.strip()
            if not lin:
                continue
            content = lin.split('\t')[0]
            for word in tokenizer(content):
                vocab_dic[word] = vocab_dic.get(word, 0) + 1
    vocab_list = sorted([_ for _ in vocab_dic.items() if _[1] >= min_freq], key=lambda x: x[1], reverse=True)[:max_size]
    vocab_dic = {word_count[0]: idx for idx, word_count in enumerate(vocab_list)}
    vocab_dic.update({UNK: len(vocab_dic), PAD: len(vocab_dic) + 1})
    return vocab_dic

def build_dataset(config, use_word):
    if use_word:
        tokenizer = lambda x: x.split(' ')  # word level: split on spaces
    else:
        tokenizer = lambda x: [y for y in x]  # char-level
    if os.path.exists(config.vocab_path):
        vocab = pkl.load(open(config.vocab_path, 'rb'))
    else:
        vocab = build_vocab(config.train_path, tokenizer=tokenizer, max_size=MAX_VOCAB_SIZE, min_freq=1)
        pkl.dump(vocab, open(config.vocab_path, 'wb'))
    print(f"Vocab size: {len(vocab)}")

    def biGramHash(sequence, t, buckets):
        t1 = sequence[t - 1] if t - 1 >= 0 else 0
        return (t1 * 14918087) % buckets

    def triGramHash(sequence, t, buckets):
        t1 = sequence[t - 1] if t - 1 >= 0 else 0
        t2 = sequence[t - 2] if t - 2 >= 0 else 0
        return (t2 * 14918087 * 18408749 + t1 * 14918087) % buckets

    def load_dataset(path, pad_size=32):
        contents = []
        with open(path, 'r', encoding='UTF-8') as f:
            for line in tqdm(f):
                lin = line.strip()
                if not lin:
                    continue
                content, label = lin.split('\t')
                words_line = []
                token = tokenizer(content)
                seq_len = len(token)
                if pad_size:
                    if len(token) < pad_size:
                        # Pad with the PAD token itself; it is mapped to its index below
                        token.extend([PAD] * (pad_size - len(token)))
                    else:
                        token = token[:pad_size]
                        seq_len = pad_size
                # word to id
                for word in token:
                    words_line.append(vocab.get(word, vocab.get(UNK)))
                # fasttext ngram
                buckets = config.n_gram_vocab
                bigram = []
                trigram = []
                # ------ngram------
                for i in range(pad_size):
                    bigram.append(biGramHash(words_line, i, buckets))
                    trigram.append(triGramHash(words_line, i, buckets))
                # -----------------
                contents.append((words_line, int(label), seq_len, bigram, trigram))
        return contents  # [([...], 0), ([...], 1), ...]

    train = load_dataset(config.train_path, config.pad_size)
    dev = load_dataset(config.dev_path, config.pad_size)
    test = load_dataset(config.test_path, config.pad_size)
    return vocab, train, dev, test

class DatasetIterater(object):
    def __init__(self, batches, batch_size, device):
        self.batch_size = batch_size
        self.batches = batches
        self.n_batches = len(batches) // batch_size
        self.residue = False  # True when a final, smaller batch is left over
        if len(batches) % batch_size != 0:
            self.residue = True
        self.index = 0
        self.device = device

    def _to_tensor(self, datas):
        # xx = [xxx[2] for xxx in datas]
        # indexx = np.argsort(xx)[::-1]
        # datas = np.array(datas)[indexx]
        x = torch.LongTensor([_[0] for _ in datas]).to(self.device)
        y = torch.LongTensor([_[1] for _ in datas]).to(self.device)
        bigram = torch.LongTensor([_[3] for _ in datas]).to(self.device)
        trigram = torch.LongTensor([_[4] for _ in datas]).to(self.device)
        # Length before padding (capped at pad_size)
        seq_len = torch.LongTensor([_[2] for _ in datas]).to(self.device)
        return (x, seq_len, bigram, trigram), y

    def __next__(self):
        if self.residue and self.index == self.n_batches:
            batches = self.batches[self.index * self.batch_size: len(self.batches)]
            self.index += 1
            batches = self._to_tensor(batches)
            return batches
        elif self.index >= self.n_batches:
            self.index = 0
            raise StopIteration
        else:
            batches = self.batches[self.index * self.batch_size: (self.index + 1) * self.batch_size]
            self.index += 1
            batches = self._to_tensor(batches)
            return batches

    def __iter__(self):
        return self

    def __len__(self):
        if self.residue:
            return self.n_batches + 1
        else:
            return self.n_batches

def build_iterator(dataset, config):
    iter = DatasetIterater(dataset, config.batch_size, config.device)
    return iter

def get_time_dif(start_time):
    """Return the time elapsed since start_time"""
    end_time = time.time()
    time_dif = end_time - start_time
    return timedelta(seconds=int(round(time_dif)))

if __name__ == "__main__":
    '''Extract the pretrained word vectors'''
    vocab_dir = "./THUCNews/data/vocab.pkl"
    pretrain_dir = "./THUCNews/data/sgns.sogou.char"
    emb_dim = 300
    filename_trimmed_dir = "./THUCNews/data/vocab.embedding.sougou"
    word_to_id = pkl.load(open(vocab_dir, 'rb'))
    embeddings = np.random.rand(len(word_to_id), emb_dim)
    f = open(pretrain_dir, "r", encoding='UTF-8')
    for i, line in enumerate(f.readlines()):
        # if i == 0:  # skip the first line if it is a header
        #     continue
        lin = line.strip().split(" ")
        if lin[0] in word_to_id:
            idx = word_to_id[lin[0]]
            emb = [float(x) for x in lin[1:301]]
            embeddings[idx] = np.asarray(emb, dtype='float32')
    f.close()
    np.savez_compressed(filename_trimmed_dir, embeddings=embeddings)
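The n-gram hashing in utils_fasttext.py can be tried in isolation. The sketch below copies the two hash functions from the listing under snake_case names and applies them to a made-up sequence of token indices with a made-up bucket count (the listing reads the real value from config.n_gram_vocab). Note that the value for position t is computed only from the indices before t, so position 0 always hashes to 0.

```python
def bigram_hash(sequence, t, buckets):
    """Hash for position t, computed from the previous token id (0 if none)."""
    t1 = sequence[t - 1] if t - 1 >= 0 else 0
    return (t1 * 14918087) % buckets


def trigram_hash(sequence, t, buckets):
    """Hash for position t, computed from the two previous token ids."""
    t1 = sequence[t - 1] if t - 1 >= 0 else 0
    t2 = sequence[t - 2] if t - 2 >= 0 else 0
    return (t2 * 14918087 * 18408749 + t1 * 14918087) % buckets


token_ids = [3, 5, 7]  # made-up token indices
buckets = 1000         # made-up number of hash buckets

bigrams = [bigram_hash(token_ids, t, buckets) for t in range(len(token_ids))]
trigrams = [trigram_hash(token_ids, t, buckets) for t in range(len(token_ids))]
print(bigrams)   # [0, 261, 435]
print(trigrams)  # [0, 261, 924]
```

Every hash falls in the range 0 to buckets - 1, so it can be used directly as a row index into an n-gram embedding table of that size.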

models/TextRNN.py

import torch

import torch.nn as nn

import numpy as np

import torch.nn.functional as F

class Config(object):
    """Configuration parameters"""
    def __init__(self, dataset, embedding):
        self.model_name = 'TextRNN'
        self.train_path = dataset + '/data/train.txt'  # training set
        self.dev_path = dataset + '/data/dev.txt'  # validation set
        self.test_path = dataset + '/data/test.txt'  # test set
        self.class_list = [x.strip() for x in open(
            dataset + '/data/class.txt').readlines()]  # class names
        self.vocab_path = dataset + '/data/vocab.pkl'  # vocabulary
        self.save_path = dataset + '/saved_dict/' + self.model_name + '.ckpt'  # trained model checkpoint
        self.log_path = dataset + '/log/' + self.model_name
        self.embedding_pretrained = torch.tensor(
            np.load(dataset + '/data/' + embedding)["embeddings"].astype('float32')) \
            if embedding != 'random' else None  # pretrained word vectors
        self.device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')  # device
        self.dropout = 0.5  # dropout rate
        self.require_improvement = 1000  # stop early if there is no improvement for more than 1000 batches
        self.num_classes = len(self.class_list)  # number of classes
        self.n_vocab = 0  # vocabulary size, assigned at run time
        self.num_epochs = 10  # number of epochs
        self.batch_size = 128  # mini-batch size
        self.pad_size = 32  # every sentence is padded or truncated to this length
        self.learning_rate = 1e-3  # learning rate
        self.embed = self.embedding_pretrained.size(1)\
            if self.embedding_pretrained is not None else 300  # embedding dimension; follows the pretrained vectors when they are used
        self.hidden_size = 128  # LSTM hidden size
        self.num_layers = 2  # number of LSTM layers

# Bi_LSTM
class Model(nn.Module):
    def __init__(self, config):
        super(Model, self).__init__()
        if config.embedding_pretrained is not None:
            # from_pretrained loads the pretrained word vectors; with freeze=True they would not be updated during training
            self.embedding = nn.Embedding.from_pretrained(config.embedding_pretrained, freeze=False)
        else:
            self.embedding = nn.Embedding(config.n_vocab, config.embed, padding_idx=config.n_vocab - 1)
        self.lstm = nn.LSTM(
            input_size=config.embed,
            hidden_size=config.hidden_size,
            num_layers=config.num_layers,
            dropout=config.dropout,
            bidirectional=True,
            batch_first=True,
        )
        self.fc = nn.Linear(config.hidden_size * 2, config.num_classes)

    def forward(self, x):
        x, _ = x
        out = self.embedding(x)  # [batch_size, seq_len, embedding_dim] -> [128, 32, 300]
        out, _ = self.lstm(out)  # [batch_size, seq_len, hidden_size * 2] -> [128, 32, 256]
        out = self.fc(out[:, -1, :])  # hidden state at the last time step
        return out
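The tensor shapes in the BiLSTM forward pass can be checked with a short standalone sketch. It uses random input in place of the embedding output and a small batch size of 4; the other sizes mirror the Config above. It assumes PyTorch is installed and is only a shape check, not part of the project code.

```python
import torch
import torch.nn as nn

batch_size, seq_len, embed, hidden = 4, 32, 300, 128  # toy batch; other sizes as in Config

lstm = nn.LSTM(input_size=embed, hidden_size=hidden, num_layers=2,
               bidirectional=True, batch_first=True)
x = torch.randn(batch_size, seq_len, embed)  # stands in for the embedding output
out, (h_n, c_n) = lstm(x)

print(out.shape)  # torch.Size([4, 32, 256]): forward and backward states concatenated
print(h_n.shape)  # torch.Size([4, 4, 128]): (num_layers * 2 directions, batch, hidden)

last_step = out[:, -1, :]         # what Model.forward passes to the fc layer
fwd_last = last_step[:, :hidden]  # forward direction after reading the whole sequence
# The forward half equals the final forward state of the top layer
assert torch.allclose(fwd_last, h_n[-2])
```

The backward half of `out[:, -1, :]` has only seen the last position, which is worth keeping in mind when choosing which time step to classify from.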

train_eval.py

Initialization methods:

Kaiming initialization

Xavier initialization

import numpy as np

import torch

import torch.nn as nn

import torch.nn.functional as F

from sklearn import metrics

import time

from utils import get_time_dif

from tensorboardX import SummaryWriter

# Weight initialization, Xavier by default
def init_network(model, method='xavier', exclude='embedding', seed=123):
    for name, w in model.named_parameters():
        if exclude not in name:
            if 'weight' in name:
                if method == 'xavier':
                    nn.init.xavier_normal_(w)
                elif method == 'kaiming':
                    nn.init.kaiming_normal_(w)
                else:
                    nn.init.normal_(w)
            elif 'bias' in name:
                nn.init.constant_(w, 0)
            else:
                pass

def train(config, model, train_iter, dev_iter, test_iter, writer):
    start_time = time.time()
    model.train()
    optimizer = torch.optim.Adam(model.parameters(), lr=config.learning_rate)
    # Exponential learning-rate decay: after every epoch, lr = gamma * lr
    # scheduler = torch.optim.lr_scheduler.ExponentialLR(optimizer, gamma=0.9)
    total_batch = 0  # number of batches processed so far
    dev_best_loss = float('inf')
    last_improve = 0  # batch index at which the validation loss last improved
    flag = False  # set when there has been no improvement for a long time
    # writer = SummaryWriter(log_dir=config.log_path + '/' + time.strftime('%m-%d_%H.%M', time.localtime()))
    for epoch in range(config.num_epochs):
        print('Epoch [{}/{}]'.format(epoch + 1, config.num_epochs))
        # scheduler.step()  # learning-rate decay
        for i, (trains, labels) in enumerate(train_iter):
            # print(trains[0].shape)
            outputs = model(trains)
            model.zero_grad()
            loss = F.cross_entropy(outputs, labels)
            loss.backward()
            optimizer.step()
            if total_batch % 100 == 0:
                # Every 100 batches, report results on the training and validation sets
                true = labels.data.cpu()
                predic = torch.max(outputs.data, 1)[1].cpu()
                train_acc = metrics.accuracy_score(true, predic)
                dev_acc, dev_loss = evaluate(config, model, dev_iter)
                if dev_loss < dev_best_loss:
                    dev_best_loss = dev_loss
                    torch.save(model.state_dict(), config.save_path)
                    improve = '*'
                    last_improve = total_batch
                else:
                    improve = ''
                time_dif = get_time_dif(start_time)
                msg = 'Iter: {0:>6}, Train Loss: {1:>5.2}, Train Acc: {2:>6.2%}, Val Loss: {3:>5.2}, Val Acc: {4:>6.2%}, Time: {5} {6}'
                print(msg.format(total_batch, loss.item(), train_acc, dev_loss, dev_acc, time_dif, improve))
                writer.add_scalar("loss/train", loss.item(), total_batch)
                writer.add_scalar("loss/dev", dev_loss, total_batch)
                writer.add_scalar("acc/train", train_acc, total_batch)
                writer.add_scalar("acc/dev", dev_acc, total_batch)
                model.train()
            total_batch += 1
            if total_batch - last_improve > config.require_improvement:
                # Validation loss has not dropped for more than 1000 batches: stop training
                print("No optimization for a long time, auto-stopping...")
                flag = True
                break
        if flag:
            break
    writer.close()
    test(config, model, test_iter)

def test(config, model, test_iter):
    # test
    model.load_state_dict(torch.load(config.save_path))
    model.eval()
    start_time = time.time()
    test_acc, test_loss, test_report, test_confusion = evaluate(config, model, test_iter, test=True)
    msg = 'Test Loss: {0:>5.2}, Test Acc: {1:>6.2%}'
    print(msg.format(test_loss, test_acc))
    print("Precision, Recall and F1-Score...")
    print(test_report)
    print("Confusion Matrix...")
    print(test_confusion)
    time_dif = get_time_dif(start_time)
    print("Time usage:", time_dif)

def evaluate(config, model, data_iter, test=False):
    model.eval()
    loss_total = 0
    predict_all = np.array([], dtype=int)
    labels_all = np.array([], dtype=int)
    with torch.no_grad():
        for texts, labels in data_iter:
            outputs = model(texts)
            loss = F.cross_entropy(outputs, labels)
            loss_total += loss.item()  # accumulate a Python float rather than a tensor
            labels = labels.data.cpu().numpy()
            predic = torch.max(outputs.data, 1)[1].cpu().numpy()
            labels_all = np.append(labels_all, labels)
            predict_all = np.append(predict_all, predic)
    acc = metrics.accuracy_score(labels_all, predict_all)
    if test:
        report = metrics.classification_report(labels_all, predict_all, target_names=config.class_list, digits=4)
        confusion = metrics.confusion_matrix(labels_all, predict_all)
        return acc, loss_total / len(data_iter), report, confusion
    return acc, loss_total / len(data_iter)
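The early-stopping rule inside train() can be isolated into a small sketch that needs no model. The function name `run_with_early_stopping` and the sequence of validation losses are made up for illustration; `eval_every` plays the role of the hard-coded 100 and `patience` the role of config.require_improvement.

```python
def run_with_early_stopping(dev_losses, eval_every, patience):
    """Return (stop_batch, best_loss) for a given sequence of validation losses.

    dev_losses[k] is the validation loss measured at batch k * eval_every.
    """
    best_loss = float('inf')
    last_improve = 0
    total_batch = 0
    n_batches = len(dev_losses) * eval_every
    while total_batch < n_batches:
        if total_batch % eval_every == 0:
            dev_loss = dev_losses[total_batch // eval_every]
            if dev_loss < best_loss:
                best_loss = dev_loss        # train() also saves a checkpoint here
                last_improve = total_batch
        total_batch += 1
        if total_batch - last_improve > patience:
            break                           # no improvement for too long
    return total_batch, best_loss


stop_batch, best = run_with_early_stopping(
    dev_losses=[1.0, 0.8, 0.9, 0.85, 0.95, 0.9], eval_every=100, patience=250)
print(stop_batch, best)  # 351 0.8
```

The best loss is reached at batch 100, so training stops as soon as more than 250 further batches pass without a lower validation loss.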

run.py

import time

import torch

import numpy as np

from train_eval import train, init_network

from importlib import import_module

import argparse

from tensorboardX import SummaryWriter

parser = argparse.ArgumentParser(description='Chinese Text Classification')

parser.add_argument('--model', type=str, required=True, help='choose a model: TextCNN, TextRNN, FastText, TextRCNN, TextRNN_Att, DPCNN, Transformer')

parser.add_argument('--embedding', default='pre_trained', type=str, help='random or pre_trained')

parser.add_argument('--word', default=False, type=bool, help='True for word, False for char')

args = parser.parse_args()

if __name__ == '__main__':
    dataset = 'THUCNews'  # dataset
    # Sogou News: embedding_SougouNews.npz, Tencent: embedding_Tencent.npz, random initialization: random
    embedding = 'embedding_SougouNews.npz'
    if args.embedding == 'random':
        embedding = 'random'
    model_name = args.model  # TextCNN, TextRNN,
    if model_name == 'FastText':
        from utils_fasttext import build_dataset, build_iterator, get_time_dif
        embedding = 'random'
    else:
        from utils import build_dataset, build_iterator, get_time_dif
    x = import_module('models.' + model_name)
    config = x.Config(dataset, embedding)
    np.random.seed(1)
    torch.manual_seed(1)
    torch.cuda.manual_seed_all(1)
    torch.backends.cudnn.deterministic = True  # make results reproducible across runs
    start_time = time.time()
    print("Loading data...")
    vocab, train_data, dev_data, test_data = build_dataset(config, args.word)
    train_iter = build_iterator(train_data, config)
    dev_iter = build_iterator(dev_data, config)
    test_iter = build_iterator(test_data, config)
    time_dif = get_time_dif(start_time)
    print("Time usage:", time_dif)
    # train
    config.n_vocab = len(vocab)
    model = x.Model(config).to(config.device)
    writer = SummaryWriter(log_dir=config.log_path + '/' + time.strftime('%m-%d_%H.%M', time.localtime()))
    if model_name != 'Transformer':
        init_network(model)
    print(model.parameters)
    train(config, model, train_iter, dev_iter, test_iter, writer)

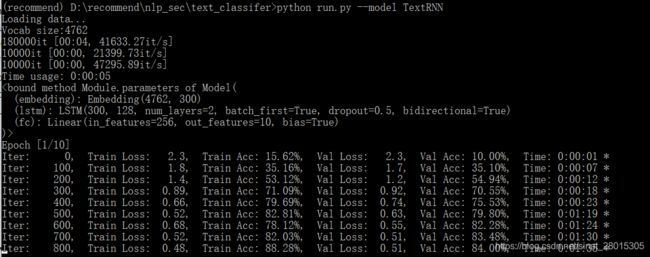

Running

python run.py --model TextRNN
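One caveat when passing the --word option defined in run.py: argparse applies type=bool by calling bool() on the raw string, and any non-empty string is truthy, so --word False still selects word-level tokenization. The sketch below reproduces this and shows a store_true flag as one possible alternative; the flag name --use-word is hypothetical and not part of the project.

```python
import argparse

parser = argparse.ArgumentParser()
parser.add_argument('--word', default=False, type=bool)  # as declared in run.py
parser.add_argument('--use-word', action='store_true')   # hypothetical alternative

args = parser.parse_args(['--word', 'False'])
print(args.word)      # True, because bool('False') is True

args = parser.parse_args([])
print(args.word)      # False: only the default gives character-level tokenization
print(args.use_word)  # False unless --use-word is present on the command line
```

With the declaration as it stands, leaving --word out is the reliable way to get character-level tokenization.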

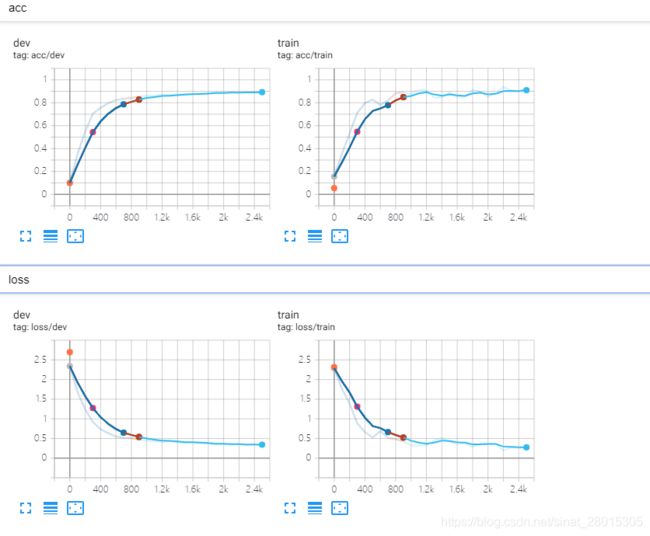

Visualizing the logged values from tensorboardX

Note: the environment needs the tensorflow dependency, which can be checked with:

pip list

tensorboard --logdir="./THUCNews/log"

(recommend) D:\recommend\nlp_sec\text_classifer>tensorboard --logdir="./THUCNews/log"

Text classification with a CNN

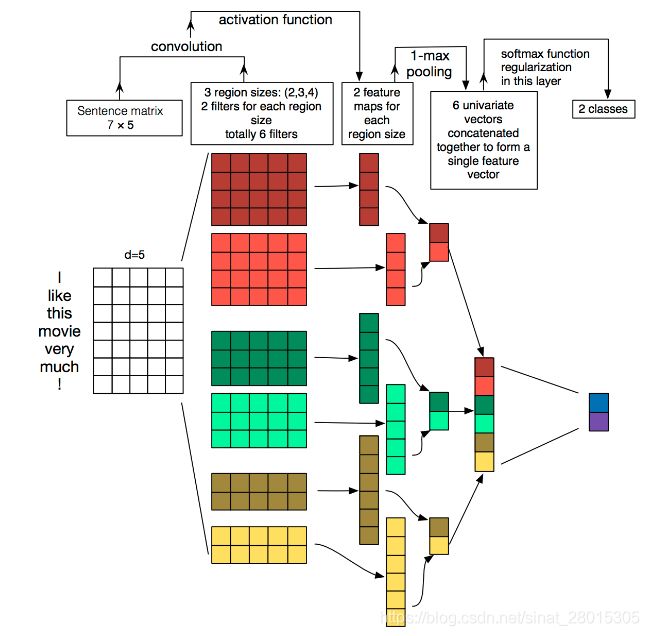

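The TextCNN network architecture is shown in the figure.

The basic workflow for text classification with TextCNN (taking sentence classification as the example):

(1) Split the sentences into words and build a dictionary from the words

(2) Convert the words to vectors (word2vec, GloVe, BERT, nn.Embedding)

(3) Pad the sentences with zeros so that they all have the same length

(4) Build the TextCNN model, then train and test it

Notes on a TextCNN example that follows this workflow:

1. The predictions are not very good, because there are too few sentences

2. No sophisticated word2vec model is used

3. With so few sentences, there is no eval function

CNNs are well suited to text classification. Because convolution and pooling discard the ordering of the characters and words inside a local window, a pure CNN is a poor fit for tasks such as sequence labeling and named entity recognition.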

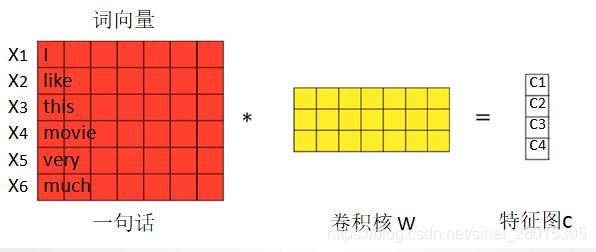

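In NLP tasks the input is no longer image pixels but a sentence or document represented as a matrix. Each row of the matrix corresponds to one word or character; that is, each row is a word vector, typically a word2vec or GloVe embedding. In image problems the kernel slides over a patch of the image, whereas in natural language the kernel usually slides over whole rows (words) of the matrix.

As in the figure above, if a sentence has 6 words and each word vector has length 7, the input matrix is 6x7. This matrix is our "image" and can be viewed as a picture with a single channel.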
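The 6x7 example can be reproduced directly in PyTorch. The sketch below, which assumes PyTorch is installed and uses random numbers in place of real word vectors, treats the sentence matrix as a single-channel image and applies one convolution whose kernel spans 2 whole rows; the choice of 2 output filters is arbitrary.

```python
import torch
import torch.nn as nn

sentence = torch.randn(6, 7)                # 6 words, each a 7-dimensional vector
image = sentence.unsqueeze(0).unsqueeze(0)  # [batch=1, channels=1, height=6, width=7]

# The kernel is as wide as the word vector, so it can only slide down the rows
conv = nn.Conv2d(in_channels=1, out_channels=2, kernel_size=(2, 7))
feature_map = conv(image)
print(feature_map.shape)  # torch.Size([1, 2, 5, 1]): 6 - 2 + 1 = 5 positions per filter
```

The last dimension collapses to 1 because the kernel covers the full embedding width, which is why the TextCNN code squeezes it away before pooling.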

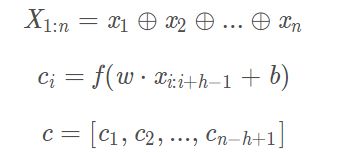

In the convolution formula, ⊕ denotes row-wise concatenation and f is a nonlinear activation function.
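Assuming the standard TextCNN formulation, the computation for a sentence of n words and a filter spanning h words can be written as follows. The symbols x_i (the i-th word vector), w (filter weights), b (bias), and c_i (feature at position i) are notation assumed here rather than taken from the text.

```latex
\begin{aligned}
x_{1:n} &= x_1 \oplus x_2 \oplus \cdots \oplus x_n \\
c_i &= f\left(w \cdot x_{i:i+h-1} + b\right), \qquad i = 1, \dots, n-h+1 \\
\hat{c} &= \max\{c_1, c_2, \dots, c_{n-h+1}\}
\end{aligned}
```

The last line is the max-over-time pooling step, which keeps one value per filter regardless of sentence length.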

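The "width" of the kernel equals the width of the input matrix (the word-vector dimension). Its "height" (the number of words it covers along the sentence) can vary, but a kernel typically spans 2 to 5 words at a time and always covers whole rows. This resembles an n-gram language model, in which the probability of a word depends only on the n-1 words before it, so the model captures relationships between neighboring words. With a large vocabulary, even a 3-gram language model is expensive to compute, let alone 4-grams or 5-grams. Because a CNN has few parameters, it handles this kind of computation easily.

Kernel size: since the convolution always covers whole rows, the only choice is how many rows each kernel spans, and that number plays the role of n in an n-gram model. In practice n is usually set to 2, 3, and 4, which matches the typical values of n in n-gram models.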
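As a quick check of the arithmetic, the sketch below computes how many positions each kernel size fits into a padded sentence and how long the concatenated feature vector is after pooling. The values are the ones used in this project's TextCNN Config (pad_size 32, kernel sizes 2, 3, 4, 256 filters each); the formula assumes stride 1 and no padding in the convolution.

```python
pad_size = 32             # sentence length after padding
filter_sizes = (2, 3, 4)  # rows covered by each kernel, i.e. the n in n-gram
num_filters = 256         # filters per kernel size

# A kernel covering n rows fits at pad_size - n + 1 positions
positions = {n: pad_size - n + 1 for n in filter_sizes}
print(positions)    # {2: 31, 3: 30, 4: 29}

# Max pooling over positions keeps one value per filter, so the pooled
# features of all kernel sizes concatenate into one fixed-length vector
feature_dim = num_filters * len(filter_sizes)
print(feature_dim)  # 768
```

This is why the final linear layer in the model takes 256 * 3 = 768 input features.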

import torch

import torch.nn as nn

import torch.nn.functional as F

import numpy as np

class Config(object):
    """Configuration parameters"""
    def __init__(self, dataset, embedding):
        self.model_name = 'TextCNN'
        self.train_path = dataset + '/data/train.txt'  # training set
        self.dev_path = dataset + '/data/dev.txt'  # validation set
        self.test_path = dataset + '/data/test.txt'  # test set
        self.class_list = [x.strip() for x in open(
            dataset + '/data/class.txt').readlines()]  # class names
        self.vocab_path = dataset + '/data/vocab.pkl'  # vocabulary
        self.save_path = dataset + '/saved_dict/' + self.model_name + '.ckpt'  # trained model checkpoint
        self.log_path = dataset + '/log/' + self.model_name
        self.embedding_pretrained = torch.tensor(
            np.load(dataset + '/data/' + embedding)["embeddings"].astype('float32'))\
            if embedding != 'random' else None  # pretrained word vectors
        self.device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')  # device
        self.dropout = 0.5  # dropout rate
        self.require_improvement = 1000  # stop early if there is no improvement for more than 1000 batches
        self.num_classes = len(self.class_list)  # number of classes
        self.n_vocab = 0  # vocabulary size, assigned at run time
        self.num_epochs = 20  # number of epochs
        self.batch_size = 128  # mini-batch size
        self.pad_size = 32  # every sentence is padded or truncated to this length
        self.learning_rate = 1e-3  # learning rate
        self.embed = self.embedding_pretrained.size(1)\
            if self.embedding_pretrained is not None else 300  # embedding dimension
        self.filter_sizes = (2, 3, 4)  # kernel sizes
        self.num_filters = 256  # number of kernels per size (output channels)

# CNN
class Model(nn.Module):
    def __init__(self, config):
        super(Model, self).__init__()
        if config.embedding_pretrained is not None:
            self.embedding = nn.Embedding.from_pretrained(config.embedding_pretrained, freeze=False)
        else:
            self.embedding = nn.Embedding(config.n_vocab, config.embed, padding_idx=config.n_vocab - 1)
        self.convs = nn.ModuleList(
            [
                nn.Conv2d(
                    in_channels=1,  # the text is treated as a grayscale image: one input channel
                    out_channels=config.num_filters,
                    kernel_size=(k, config.embed)) for k in config.filter_sizes  # 2*300 3*300 4*300
            ]
        )
        self.dropout = nn.Dropout(config.dropout)
        self.fc = nn.Linear(config.num_filters * len(config.filter_sizes), config.num_classes)  # (256*3, 10)

    def conv_and_pool(self, x, conv):
        # print(x.shape)  [128, 1, 32, 300]
        x = F.relu(conv(x)).squeeze(3)
        # print(x.shape)  [128, 256, 31] for k = 2 -> [batch_size, number of feature maps, feature map length after convolution]
        x = F.max_pool1d(x, x.size(2)).squeeze(2)
        # print(x.shape)  [128, 256]
        return x

    def forward(self, x):
        # print(x[0].shape)  [128, 32] -> [batch_size, seq_len]
        out = self.embedding(x[0])  # [128, 32, 300] -> [batch_size, seq_len, embedding_dim]
        # Add a single channel dimension, the layout expected for image features
        out = out.unsqueeze(1)  # [128, 1, 32, 300] -> [batch_size, 1, seq_len, embedding_dim]
        # Each kernel size yields [128, 256]; concatenating the three gives [128, 768] (256 * 3 = 768)
        out = torch.cat([self.conv_and_pool(out, conv) for conv in self.convs], 1)
        out = self.dropout(out)  # [128, 768]
        out = self.fc(out)  # [128, 768] -> [128, 10]
        return out