Machine Learning Project: Music Recommendation System, Music Classification with pydub, python_speech_features (MFCC), and SVM

Contents

- 1. Project Description
- 2. Code
  - 2.1 Code Structure
  - 2.2 feature
  - 2.3 svm
  - 2.4 acc
  - 2.5 class_demo
  - 2.6 features_main
  - 2.7 svm_main
- 3. Related Material

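1. Project Description

Audio-processing parameter: nfft = 2048, the FFT size the interface applies to each frame of audio data.

Package required for audio processing: python_speech_features, which provides MFCC (mel-frequency cepstral coefficients).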

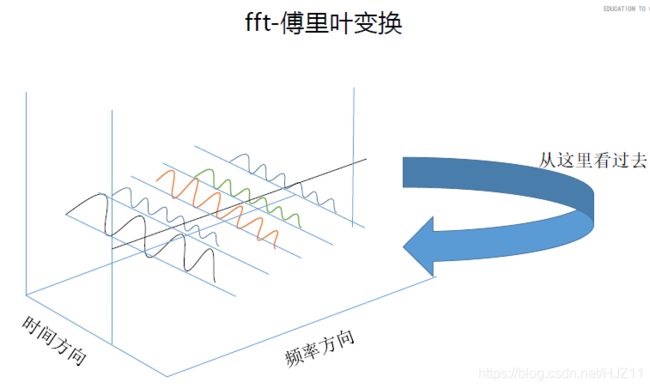

MFCC extraction has two stages: first a Fourier transform, then the mel cepstrum.
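To make the two stages concrete, here is a minimal NumPy sketch for a single frame. It is an illustration under simplifying assumptions, not the implementation inside python_speech_features: the helper names (`hz_to_mel`, `mel_to_hz`, `mel_filterbank`, `mfcc_one_frame`) and the choice of 26 filters are assumptions made for this example, and steps such as pre-emphasis, windowing, and liftering are left out.

```python
import numpy as np

def hz_to_mel(hz):
    """Convert a frequency in Hz to the mel scale."""
    return 2595.0 * np.log10(1.0 + hz / 700.0)

def mel_to_hz(mel):
    """Convert a mel value back to Hz."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

def mel_filterbank(n_filters, nfft, rate):
    """Triangular filters spaced evenly on the mel scale, shape (n_filters, nfft // 2 + 1)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(rate / 2.0), n_filters + 2)
    bins = np.floor((nfft + 1) * mel_to_hz(mel_points) / rate).astype(int)
    fbank = np.zeros((n_filters, nfft // 2 + 1))
    for j in range(n_filters):
        left, center, right = bins[j], bins[j + 1], bins[j + 2]
        for k in range(left, center):      # rising edge of the triangle
            fbank[j, k] = (k - left) / (center - left)
        for k in range(center, right):     # falling edge of the triangle
            fbank[j, k] = (right - k) / (right - center)
    return fbank

def mfcc_one_frame(frame, rate, numcep=13, n_filters=26, nfft=2048):
    """Return numcep cepstral coefficients for one frame of samples."""
    # Stage 1: Fourier transform, giving the power spectrum of the frame.
    power = np.abs(np.fft.rfft(frame, nfft)) ** 2 / nfft
    # Stage 2: mel filterbank energies, their logarithm, then a DCT-II (the cepstrum).
    energies = mel_filterbank(n_filters, nfft, rate) @ power
    log_energies = np.log(np.maximum(energies, np.finfo(float).eps))
    n = np.arange(n_filters)
    dct_basis = np.cos(np.pi * np.outer(np.arange(numcep), 2 * n + 1) / (2 * n_filters))
    return dct_basis @ log_energies

# A 25 ms frame of a 440 Hz tone sampled at 44.1 kHz (1102 samples).
frame = np.sin(2 * np.pi * 440.0 * np.arange(1102) / 44100.0)
coeffs = mfcc_one_frame(frame, 44100)
print(coeffs.shape)
```

A 25 ms frame at 44.1 kHz holds about 1102 samples, so an FFT size of 2048 is the smallest power of two that covers the whole frame.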

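The pydub package converts between MP3 and WAV. The conversion is needed because SciPy's wavfile interface can only read WAV files.

Step 1: convert the MP3 to WAV.

Step 2: read the WAV file.

Step 3: apply the MFCC transform, which yields a matrix.

Some basic knowledge of music helps here.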

How to measure the similarity of two vectors:

First, Euclidean distance.

Second, the Pearson correlation coefficient.
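Both measures can be sketched in a few lines of NumPy. The function names and the two sample vectors below are made up for illustration; they are not part of the project code.

```python
import numpy as np

def euclidean_distance(a, b):
    """Straight-line distance between two vectors (0 means identical)."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(np.sqrt(np.sum((a - b) ** 2)))

def pearson_correlation(a, b):
    """Linear correlation in [-1, 1]; it ignores differences in scale and offset."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    a_centered, b_centered = a - a.mean(), b - b.mean()
    return float(np.sum(a_centered * b_centered)
                 / np.sqrt(np.sum(a_centered ** 2) * np.sum(b_centered ** 2)))

song_a = [1.0, 2.0, 3.0, 4.0]
song_b = [2.0, 4.0, 6.0, 8.0]  # same shape as song_a, twice the scale
print(euclidean_distance(song_a, song_b))
print(pearson_correlation(song_a, song_b))
```

The two measures can disagree: here the vectors are far apart in Euclidean terms, yet perfectly correlated, because one is a scaled copy of the other.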

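1. Set up the environment. Two Python packages and ffmpeg are required:

① pydub, installed with: pip install pydub. It converts MP3 files to WAV.

② python_speech_features, installed with: pip install python_speech_features. It performs the Fourier transform and the mel cepstrum (MFCC).

③ ffmpeg: download the build that matches your machine from http://www.ffmpeg.org/download.html, unpack it locally, and add the bin directory of the unpacked folder to your PATH environment variable.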

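2. Feature engineering:

The task is to turn audio into a numeric matrix. The label column only needs a zip-based mapping from label names to indices.

Once we know how one song becomes a numeric matrix, wrapping that function in a for loop handles the whole batch of music files.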
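The zip-based label mapping and the batch loop can be sketched as follows. The rows and the helper `batch_labels` are hypothetical stand-ins for the real label file and feature extractor.

```python
# Hypothetical (name, tag) rows standing in for the real label file.
name_tag_rows = [
    ('song_a', 'happy'),
    ('song_b', 'sad'),
    ('song_c', 'happy'),
]

# Map each distinct label to an integer index with zip.
labels = sorted({tag for _, tag in name_tag_rows})
label_index = dict(zip(labels, range(len(labels))))
# Reverse mapping, used to turn a predicted index back into a label name.
index_label = {index: label for label, index in label_index.items()}

def batch_labels(rows):
    """Loop over every song, as a batch job would, returning (name, label index)."""
    return [(name, label_index[tag]) for name, tag in rows]

print(label_index)
print(batch_labels(name_tag_rows))
```

Sorting the labels before zipping keeps the indices stable from run to run, which a plain set does not guarantee.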

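Concretely: take the original m×13 MFCC matrix and average along axis 0 to get a 1×13 vector. Then compute the covariance between every pair of columns. The resulting 13×13 covariance matrix is symmetric, so only its upper triangle (diagonal included) is kept, which gives 13·14/2 = 91 values. Appending these 91 values to the 13 means produces a 1×104 vector that represents one song.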

Example [name, tag] pairs, where the tag 快乐 means "happy":

['海绵宝宝片尾曲', '快乐'], ['神奇宝贝·胖丁之歌', '快乐'], ['四季的问候', '快乐'],
['晚安喵', '快乐'], ['让她开心(童声版)', '快乐'], ['简单爱', '快乐']]
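The 104-dimensional song feature from the feature-engineering step can be sketched on random data as follows. The function name `song_feature_vector` and the random matrix are assumptions for illustration. The sketch reads the upper triangle row by row, whereas the project code in section 2.2 walks it diagonal by diagonal, so the values are the same but their order differs.

```python
import numpy as np

def song_feature_vector(mfcc_matrix):
    """Collapse an (m, 13) MFCC matrix into a single 104-dimensional vector."""
    means = mfcc_matrix.mean(axis=0)             # 13 column means
    cov = np.cov(mfcc_matrix, rowvar=False)      # 13 x 13 covariance between columns
    rows, cols = np.triu_indices(cov.shape[0])   # upper triangle, diagonal included
    return np.concatenate([means, cov[rows, cols]])

rng = np.random.default_rng(0)
fake_mfcc = rng.normal(size=(500, 13))  # stand-in for real MFCC output
vector = song_feature_vector(fake_mfcc)
print(vector.shape)  # (104,)
```

Whatever the length m of the song, the output always has 13 + 91 = 104 entries, which is what lets songs of different durations share one feature table.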

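3. Modeling and tuning:

Run a cross-validated grid search (GridSearchCV) on the chosen model, a support vector machine (SVM), and print the best parameters so that a new model can be built from them. Train the new model on the samples and persist the trained model so it can be loaded later for prediction.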
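The tune, retrain, and persist workflow can be sketched on synthetic data as follows. The data, the reduced parameter grid, and the temporary model path are assumptions chosen to keep the example small; the project's actual grid appears in section 2.3.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

# Synthetic stand-in data: 60 samples, 104 features, 3 classes.
rng = np.random.default_rng(0)
y = np.repeat([0, 1, 2], 20)
X = rng.normal(size=(60, 104)) + y[:, None]  # shift each class so the classes separate

# Cross-validated grid search over a small parameter grid.
param_grid = {'kernel': ['linear', 'rbf'], 'C': [0.1, 1]}
search = GridSearchCV(SVC(random_state=0), param_grid=param_grid, cv=5)
search.fit(X, y)
print(search.best_params_, search.best_score_)

# Build a new model from the best parameters, train it, and persist it.
best_model = SVC(random_state=0, **search.best_params_).fit(X, y)
model_path = os.path.join(tempfile.mkdtemp(), 'music_model.pkl')
joblib.dump(best_model, model_path)

# Later, at prediction time, load the model back from disk.
restored = joblib.load(model_path)
print(restored.predict(X[:3]))
```

Persisting the model separates the slow training step from prediction, so a prediction script only needs the saved file.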

2. Code

2.1 Code Structure

2.2 feature

# coding: utf-8
import glob
import os

import numpy as np
import pandas as pd
from pydub.audio_segment import AudioSegment
from python_speech_features import mfcc
from scipy.io import wavfile

music_info_csv_file_path = './data/music_info.csv'
music_audio_dir = './data/music/*.mp3'
music_index_label_path = './data/music_index_label.csv'
music_features_file_path = './data/music_features.csv'

def extract_label():
    """Read the music info CSV and keep only the song name and tag columns."""
    data = pd.read_csv(music_info_csv_file_path)
    data = data[['name', 'tag']]
    return data

def fetch_index_label():
    """
    Read the index-to-label mapping from file and return it as a dict.
    """
    data = pd.read_csv(music_index_label_path, header=None, encoding='utf-8')
    name_label_list = np.array(data).tolist()
    # Each row is [label, index]; build {index: label}.
    index_label_dict = dict(map(lambda t: (t[1], t[0]), name_label_list))
    return index_label_dict

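def extract(file):
    """Return the 104-dimensional feature vector of one audio file, or None on failure."""
    items = file.split('.')
    file_format = items[-1].lower()  # audio format, e.g. mp3
    file_name = file[: -(len(file_format) + 1)]  # path without the extension
    converted = file_format != 'wav'
    if converted:
        # wavfile can only read WAV; any non-WAV input is assumed to be MP3.
        song = AudioSegment.from_file(file, format='mp3')
        file = file_name + '.wav'
        song.export(file, format='wav')  # convert MP3 to WAV
    try:
        rate, data = wavfile.read(file)  # read the WAV samples
        # nfft is the FFT size applied to each frame.
        mfcc_feas = mfcc(data, rate, numcep=13, nfft=2048)
        mm = np.transpose(mfcc_feas)  # shape (13, m)
        mf = np.mean(mm, axis=1)  # mean of each coefficient
        mc = np.cov(mm)  # 13 x 13 covariance matrix
        result = mf
        for i in range(mm.shape[0]):
            # Diagonals 0..12 of the covariance matrix: 13 + 12 + ... + 1 = 91 values.
            result = np.append(result, np.diag(mc, i))
        return result  # 13 + 91 = 104 features
    except Exception as msg:
        print(msg)
    finally:
        # Delete only the temporary WAV created above, never an original WAV input.
        if converted and os.path.exists(file):
            os.remove(file)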

def extract_and_export():
    """Main entry point: extract features for every song and export them to CSV."""
    df = extract_label()
    name_label_list = np.array(df).tolist()
    name_label_dict = dict(map(lambda t: (t[0], t[1]), name_label_list))
    labels = set(name_label_dict.values())
    label_index_dict = dict(zip(labels, np.arange(len(labels))))
    all_music_files = glob.glob(music_audio_dir)
    all_music_files.sort()
    loop_count = 0
    flag = True
    all_mfcc = np.array([])
    for file_name in all_music_files:
        print('Processing: ' + file_name.replace('\xa0', ''))
        # The song name is the part after the last '-' of the file name, without the
        # extension. os.path handles both Windows and POSIX path separators.
        base_name = os.path.splitext(os.path.basename(file_name))[0]
        music_name = base_name.split('-')[-1].strip()
        if music_name in name_label_dict:
            label_index = label_index_dict[name_label_dict[music_name]]
            ff = extract(file_name)
            if ff is not None:  # extract() returns None when it fails
                ff = np.append(ff, label_index)
                if flag:
                    all_mfcc = ff
                    flag = False
                else:
                    all_mfcc = np.vstack([all_mfcc, ff])
        else:
            print('Cannot process: ' + file_name.replace('\xa0', '') + '; reason: no matching label found')
        print('looping-----%d' % loop_count)
        print('all_mfcc.shape:', end='')
        print(all_mfcc.shape)
        loop_count += 1
    # Save the label/index mapping and the feature matrix.
    label_index_list = []
    for k in label_index_dict:
        label_index_list.append([k, label_index_dict[k]])
    pd.DataFrame(label_index_list).to_csv(music_index_label_path, header=False,
                                          index=False, encoding='utf-8')
    pd.DataFrame(all_mfcc).to_csv(music_features_file_path, header=False,
                                  index=False, encoding='utf-8')
    return all_mfcc

2.3 svm

# coding: utf-8
import joblib  # sklearn.externals.joblib was removed from recent scikit-learn releases
import numpy as np
import pandas as pd
from sklearn import svm
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.utils import shuffle

import acc
import feature

default_music_csv_file_path = './data/music_features.csv'
default_model_file_path = './data/music_model.pkl'
index_lable_dict = feature.fetch_index_label()

def poly(X, Y):
    """Train the model and compute the accuracy of its predictions on the training set."""
    clf = svm.SVC(kernel='poly', C=0.1, probability=True,
                  decision_function_shape='ovo', random_state=0)
    clf.fit(X, Y)
    res = clf.predict(X)
    restrain = acc.get(res, Y)
    return clf, restrain

def fit_dump_model(train_percentage=0.7, fold=1, music_csv_file_path=None, model_out_f=None):
    """Train `fold` models on random splits, keep the best one, and save it to disk."""
    if not music_csv_file_path:
        music_csv_file_path = default_music_csv_file_path
    data = pd.read_csv(music_csv_file_path, sep=',', header=None, encoding='utf-8')
    max_train_score = None
    max_test_score = None
    max_score = None
    best_clf = None
    for index in range(1, int(fold) + 1):
        shuffle_data = shuffle(data)
        X = shuffle_data.iloc[:, :-1]  # every column except the last is a feature
        Y = shuffle_data.iloc[:, -1].to_numpy()  # the last column is the label index
        x_train, x_test, y_train, y_test = train_test_split(X, Y, train_size=train_percentage)
        clf, train_score = poly(x_train, y_train)
        y_predict = clf.predict(x_test)
        test_score = acc.get(y_predict, y_test)
        # Weighted score that favors test accuracy over training accuracy.
        score = 0.35 * train_score + 0.65 * test_score
        if best_clf is None or max_score < score:
            max_score = score
            max_train_score = train_score
            max_test_score = test_score
            best_clf = clf
        print('Run %d: training accuracy %0.2f, test accuracy %0.2f, weighted average accuracy %0.2f'
              % (index, train_score, test_score, score))
    print('Best model: training accuracy %0.2f, test accuracy %0.2f, weighted average accuracy %0.2f'
          % (max_train_score, max_test_score, max_score))
    print('The best model is:')
    print(best_clf)
    if not model_out_f:
        model_out_f = default_model_file_path
    joblib.dump(best_clf, model_out_f)

def load_model(model_f=None):
    """Load a persisted model, falling back to the default model path."""
    if not model_f:
        model_f = default_model_file_path
    clf = joblib.load(model_f)
    return clf

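def internal_cross_validation(X, Y):
    """Grid-search SVC parameters with 5-fold cross-validation and print the best result."""
    parameters = {
        'kernel': ('linear', 'rbf', 'poly'),
        'C': [0.1, 1],
        'probability': [True, False],
        'decision_function_shape': ['ovo', 'ovr']
    }
    # Standard GridSearchCV usage: estimator, parameter grid, number of folds.
    clf = GridSearchCV(svm.SVC(random_state=0), param_grid=parameters, cv=5)
    print('Starting cross-validation to find the best parameters')
    clf.fit(X, Y)
    print('Best parameters: ', end='')
    print(clf.best_params_)
    print('Best model accuracy: ', end='')
    print(clf.best_score_)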

def cross_validation(music_csv_file_path=None, data_percentage=0.7):
    """Run the grid search on a random sample of the feature file."""
    if not music_csv_file_path:
        music_csv_file_path = default_music_csv_file_path
    print('Reading data: ' + music_csv_file_path)
    data = pd.read_csv(music_csv_file_path, sep=',', header=None, encoding='utf-8')
    sample_fact = 0.7
    if isinstance(data_percentage, float) and 0 < data_percentage < 1:
        sample_fact = data_percentage
    data = data.sample(frac=sample_fact)
    X = data.iloc[:, :-1]  # every column except the last is a feature
    Y = data.iloc[:, -1].to_numpy()  # the last column is the label index
    internal_cross_validation(X, Y)

def fetch_predict_label(clf, X):
    """Predict the label name for a single feature vector X."""
    label_index = clf.predict([X])
    label = index_lable_dict[label_index[0]]
    return label

2.4 acc

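# encoding: utf-8
def get(res, tes):
    """Accuracy: the percentage of predictions in res that equal the labels in tes.

    Both arguments are expected to be NumPy arrays so that == compares element-wise.
    """
    n = len(res)
    truth = (res == tes)
    pre = 0
    for flag in truth:
        if flag:
            pre += 1
    return (pre * 100) / n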
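The same percentage can also be computed without an explicit loop. This is an alternative sketch, not part of the project code, and the name `accuracy_percent` is made up for the example.

```python
import numpy as np

def accuracy_percent(predicted, actual):
    """Percentage of positions where the prediction equals the label."""
    predicted, actual = np.asarray(predicted), np.asarray(actual)
    return float(np.mean(predicted == actual) * 100)

print(accuracy_percent([1, 2, 3, 4], [1, 2, 0, 4]))  # 75.0
```

Converting the inputs with `np.asarray` means plain Python lists work as well as arrays.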

2.5 class_demo

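# coding: utf-8
from pydub.audio_segment import AudioSegment
from python_speech_features.base import mfcc
from scipy.io import wavfile

# Step 1: convert MP3 to WAV
song = AudioSegment.from_file('./data/3D音效音乐/天空之城.MP3', format='mp3')
song.export('./data/3D音效音乐/红白玫瑰.wav', format='wav')

# Step 2: read the WAV file
rate, data = wavfile.read('./data/3D音效音乐/红白玫瑰.wav')

# Step 3: MFCC transform, which yields a matrix with 13 columns
mf_feat = mfcc(data, rate, numcep=13, nfft=2048)

# Next comes further dimensionality reduction so that the features suit later
# machine learning; this step relies on some empirical mathematical heuristics.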

2.6 features_main

from music_category import feature

feature.extract_and_export()

2.7 svm_main

# coding: utf-8
from music_category import svm
from music_category import feature

# svm.cross_validation(data_percentage=0.99)
svm.fit_dump_model(train_percentage=0.9, fold=100)

paths = [
    './data/test/Lasse Lindh - Run To You.mp3',
    './data/test/Maize - I Like You-浪漫.mp3',
    './data/test/孙燕姿 - 我也很想他 - 怀旧.mp3',
]
clf = svm.load_model()  # load the persisted model once, outside the loop
for path in paths:
    music_feature = feature.extract(path)
    label = svm.fetch_predict_label(clf, music_feature)
    print('Predicted label: %s' % label)

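3. Related Material

1. Extract attributes such as song title, album name, artist, release date, and genre to serve as the initial features.

2. Apply feature engineering to turn the raw attributes into vectors.

3. Build a clustering model with k-means and tune it.

4. Use the model to predict a cluster for every song, and save the results (music id, cluster id) to a database table.

5. Aggregate the predictions in the database by cluster id, and write the aggregated result to a second table.

6. The recommender then looks up a music id in the database and returns the most similar other music ids as recommendations.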
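Steps 3 to 6 can be sketched in memory as follows. Everything here is an assumption made for illustration: the feature vectors are synthetic, plain dicts stand in for the two database tables, and `recommend` returns every other song in the same cluster without ranking them by similarity.

```python
from collections import defaultdict

import numpy as np
from sklearn.cluster import KMeans

# Hypothetical data: 12 songs with 4-dimensional feature vectors in 3 loose groups.
rng = np.random.default_rng(0)
centers = np.array([[0, 0, 0, 0], [5, 5, 5, 5], [-5, 5, -5, 5]], dtype=float)
features = np.vstack([c + rng.normal(scale=0.3, size=(4, 4)) for c in centers])
music_ids = [f'song_{i}' for i in range(len(features))]

# Steps 3-4: fit k-means and record (music id, cluster id) for every song.
model = KMeans(n_clusters=3, n_init=10, random_state=0).fit(features)
song_cluster = dict(zip(music_ids, model.labels_.tolist()))

# Step 5: aggregate songs by cluster id (this dict plays the role of the second table).
cluster_songs = defaultdict(list)
for music_id, cluster_id in song_cluster.items():
    cluster_songs[cluster_id].append(music_id)

# Step 6: recommend the other songs that share the cluster of the given song.
def recommend(music_id):
    return [m for m in cluster_songs[song_cluster[music_id]] if m != music_id]

print(recommend('song_0'))
```

Because the clusters are computed ahead of time, a recommendation at request time is just a lookup, which is the point of storing the aggregated table.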