AI | Chapter 2 Machine Learning Algorithms - sklearn Classification Algorithms


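- Preface

- 1. sklearn transformers and estimators

  - 1.1 Transformers

  - 1.2 Estimators

- 2. K-nearest neighbors (KNN)

  - 2.1 Overview

  - 2.2 Application

    - *Code1 KNN example code

- 3. Model selection and tuning

  - 3.1 Cross-validation

  - 3.2 Hyperparameter search: grid search

    - *Code2 Grid search example code

- 4. Naive Bayes

  - 4.1 Joint probability, conditional probability, and independence

  - 4.2 "Naive" + Bayes' formula

  - 4.3 Naive Bayes for text classification

    - 4.3.1 Laplace smoothing coefficient

    - *Code3 Naive Bayes example code

- 5. Decision trees

  - 5.1 Overview

  - 5.2 Information entropy, information gain, and conditional entropy

  - 5.3 Decision tree overview

  - 5.4 Three decision tree algorithms

    - *Code4 Decision tree example code

  - 5.5 Visualizing decision trees

    - *Code5 Decision tree visualization example code

- 6. Ensemble learning: random forest

  - 6.1 Overview

  - 6.2 How random forest works

    - *Code6 Random forest example code

- Closing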

Preface

These notes are for reference only.

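1. sklearn transformers and estimators

1.1 Transformers

- Feature engineering steps: instantiate a transformer class (Transformer), then call its fit_transform() method;

- The feature-engineering interfaces are called transformers. A transformer can be called in the following ways:

  - fit_transform: fit followed by transform, in a single call;

  - fit: computes the per-column statistics (for standardization: the mean and the standard deviation);

  - transform: applies the final conversion (for standardization: (x - mean) / std);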

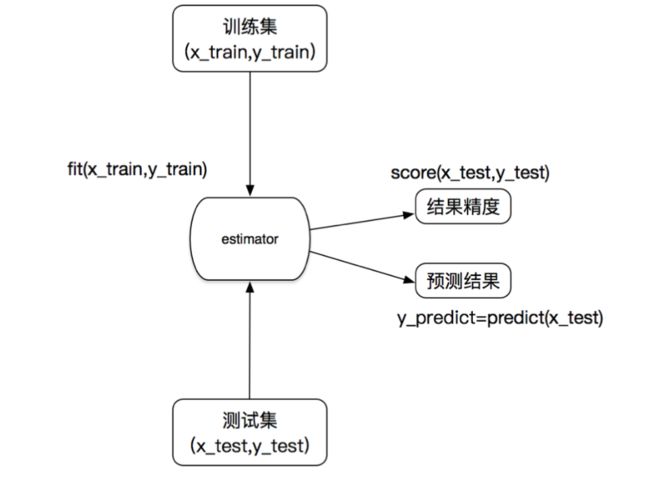
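
The three call forms can be compared on a small array. This is a minimal sketch, assuming StandardScaler as the transformer; the toy numbers are invented for illustration.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Toy data invented for this sketch: 4 training samples, 2 features.
x_train = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [4.0, 40.0]])
x_test = np.array([[2.5, 25.0]])

scaler = StandardScaler()
scaler.fit(x_train)                    # learns the per-column mean_ and scale_ (std)
x_train_a = scaler.transform(x_train)  # applies (x - mean) / std

# fit_transform does both steps in one call and gives the same result.
x_train_b = StandardScaler().fit_transform(x_train)

# The test set reuses the statistics learned from the training set.
x_test_scaled = scaler.transform(x_test)

print(scaler.mean_)
print(x_test_scaled)
```

Because the test row sits exactly at the training mean of each column, it is mapped to zeros, which shows that transform uses the training statistics rather than recomputing them.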

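1.2 Estimators

- Purpose: estimators are sklearn's implementations of machine learning algorithms;

- Estimators available in sklearn:

  - Estimators for classification:

    - sklearn.neighbors: k-nearest neighbors;

    - sklearn.naive_bayes: naive Bayes;

    - sklearn.linear_model.LogisticRegression: logistic regression;

    - sklearn.tree: decision trees (random forests live in sklearn.ensemble);

  - Estimators for regression:

    - sklearn.linear_model.LinearRegression: linear regression;

    - sklearn.linear_model.Ridge: ridge regression;

  - Estimators for unsupervised learning:

    - sklearn.cluster.KMeans: k-means clustering;

- Steps:

  - Instantiate an estimator;

  - Call estimator.fit(x_train, y_train) to train; once the call returns, the model has been built;

  - Evaluate the model: either compare the predictions with the true values directly (y_predict = estimator.predict(x_test)), or compute the accuracy with estimator.score(x_test, y_test);

- Workflow: instantiate the estimator, fit it on the training data, then predict and score on the test data.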

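2. K-nearest neighbors (KNN)

2.1 Overview

- Definition: if most of the k most similar samples to a given sample (that is, its k nearest neighbors in feature space) belong to one class, then the sample is assigned to that class as well;

- Distance formula: Euclidean distance, shown here for three features: $r=\sqrt{(a_1-b_1)^2 + (a_2-b_2)^2 + (a_3-b_3)^2}$

  - Other options: Manhattan distance (sum of absolute differences) and Minkowski distance;

- Issues:

  - If K is too small, the prediction is easily swayed by outliers;

  - If K is too large, the prediction is skewed by class imbalance in the samples;

  - The features must first be made dimensionless (standardized), otherwise features with large numeric ranges dominate the distance;

- Advantages:

  - Simple, easy to understand, easy to implement, and needs no training phase;

- Disadvantages:

  - It is a lazy algorithm: classifying a test sample is computationally expensive and memory-hungry;

  - K must be specified, and a poorly chosen K gives no guarantee on accuracy;

- Use cases: small datasets, from a few thousand to a few tens of thousands of samples; test against the concrete scenario and business need;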
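
The definition above can be sketched without sklearn. This is a minimal illustration, assuming Euclidean distance and a plain majority vote; the function name `knn_predict` and the toy points are hypothetical, not part of the original notes.

```python
from collections import Counter

import numpy as np


def knn_predict(x_train, y_train, query, k=3):
    """Classify `query` by majority vote among its k nearest training samples."""
    # Euclidean distance from the query to every training sample.
    distances = np.sqrt(((x_train - query) ** 2).sum(axis=1))
    # Indices of the k smallest distances.
    nearest = np.argsort(distances)[:k]
    # Most common class among those k neighbors.
    return Counter(y_train[nearest].tolist()).most_common(1)[0][0]


# Toy data invented for this sketch: two well-separated clusters.
x_train = np.array([[1.0, 1.0], [1.2, 0.8], [0.9, 1.1],
                    [5.0, 5.0], [5.2, 4.9], [4.8, 5.1]])
y_train = np.array([0, 0, 0, 1, 1, 1])

print(knn_predict(x_train, y_train, np.array([1.1, 1.0])))  # 0
print(knn_predict(x_train, y_train, np.array([5.1, 5.0])))  # 1
```

Every prediction scans the whole training set, which is why the method is called lazy and why it becomes costly on large datasets.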

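2.2 Application

- API: sklearn.neighbors.KNeighborsClassifier(n_neighbors=5, algorithm='auto');

  - n_neighbors: int, optional (default = 5); the number of neighbors used by default for kneighbors queries;

  - algorithm: {'auto', 'ball_tree', 'kd_tree', 'brute'}, optional; the algorithm used to compute the nearest neighbors. 'ball_tree' uses BallTree, 'kd_tree' uses KDTree, 'brute' uses a brute-force search, and 'auto' tries to pick the most suitable algorithm from the values passed to fit (the implementation chosen affects efficiency);

*Code1 KNN example code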

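    from sklearn.datasets import load_iris
    from sklearn.model_selection import train_test_split
    from sklearn.neighbors import KNeighborsClassifier
    from sklearn.preprocessing import StandardScaler


    def knn_iris():
        """Classify the iris dataset with KNN."""
        # 1. Load the data
        iris = load_iris()
        print(iris)
        # 2. Split the dataset
        x_train, x_test, y_train, y_test = train_test_split(iris.data, iris.target, random_state=6)
        print(x_train)
        print(x_test)
        print(y_train)
        print(y_test)
        # 3. Feature engineering: standardization
        transfer = StandardScaler()
        x_train = transfer.fit_transform(x_train)
        # The test set is scaled with the statistics (mean, std) learned from the training set
        x_test = transfer.transform(x_test)
        # 4. KNN estimator
        estimator = KNeighborsClassifier(n_neighbors=3)
        estimator.fit(x_train, y_train)
        # 5. Model evaluation
        # Method 1: compare the predictions with the true values directly
        y_predict = estimator.predict(x_test)
        print("y_predict:\n", y_predict)
        print("y_test:\n", y_test)
        print("Predictions vs. true values:\n", y_predict == y_test)
        # Method 2: compute the accuracy
        score = estimator.score(x_test, y_test)
        print("Accuracy:\n", score)
        return None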

3. 模型选择与调优

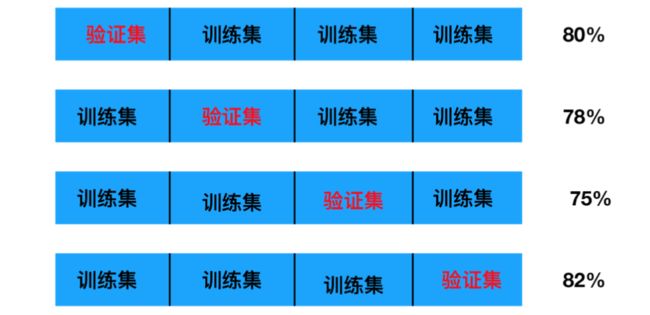

3.1 交叉验证 cross validation

- 目的:为了让被评估的模型更加准确可信;

- 定义:将拿到的训练数据,分为训练和验证集。以下图为例:将数据分成 4 份,其中一份作为验证集。然后经过 4 次(组)的测试,每次都更换不同的验证集。即得到 4 组模型的结果,取平均值作为最终结果。又称 4 折交叉验证。
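
A minimal sketch of 4-fold cross-validation follows. It assumes the iris dataset and a KNN classifier with k = 3, both chosen only for illustration, and wraps the scaler and the classifier in a pipeline so that each fold is standardized with its own training statistics.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

x, y = load_iris(return_X_y=True)

# Scaling inside the pipeline is refit on the training part of every fold.
model = make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=3))

# cv=4 splits the data into 4 folds; each fold is the validation set once.
scores = cross_val_score(model, x, y, cv=4)

print(scores)         # one accuracy per fold
print(scores.mean())  # the averaged result
```

The mean of the four fold scores is the figure the definition above calls the final result.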

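3.2 Hyperparameter search: grid search

- Hyperparameters: many parameters have to be specified by hand (such as the K value in k-nearest neighbors); these are called hyperparameters;

- Grid search: tuning by hand is tedious, so several candidate hyperparameter combinations are preset for the model. Each combination is evaluated with cross-validation, and the best combination is then used to build the model;

- Model selection and tuning:

  - API: sklearn.model_selection.GridSearchCV(estimator, param_grid, cv=None);

    - Performs an exhaustive search over the specified parameter values of an estimator;

    - estimator: the estimator object;

    - param_grid: the estimator parameters to search, as a dict, e.g. {"n_neighbors": [1, 3, 5]};

    - cv: the number of cross-validation folds;

    - fit: takes the training data;

    - score: returns the accuracy;

  - Result attributes:

    - best_score_: the best result obtained during cross-validation;

    - best_estimator_: the model with the best parameters;

    - cv_results_: the validation-set accuracy for each cross-validation run (training-set accuracy is included only when return_train_score=True);

*Code2 Grid search example code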

    import pandas as pd
    from sklearn.model_selection import GridSearchCV, train_test_split
    from sklearn.neighbors import KNeighborsClassifier
    from sklearn.preprocessing import StandardScaler


    def knncls():
        """Predict check-in places with KNN, tuned by grid search and cross-validation."""
        # 1. Read the data
        data = pd.read_csv("../resources/p01_machine_learning_sklearn/FBlocation/train.csv")
        # 2. Process the data
        # 2.1 Filter with df.query() to narrow the data range; copy to avoid SettingWithCopyWarning
        data = data.query("x > 1.0 & x < 1.25 & y > 2.5 & y < 2.75").copy()
        # 2.2 Derive time features from the timestamp (in seconds)
        time_value = pd.to_datetime(data["time"], unit="s")
        data["day"] = time_value.dt.day
        data["weekday"] = time_value.dt.weekday
        data["hour"] = time_value.dt.hour
        # 2.3 Keep only the places with more than 3 check-ins
        place_count = data.groupby('place_id').count()["row_id"]
        tf = place_count[place_count > 3].index.values
        data_final = data[data['place_id'].isin(tf)]
        # 3. Extract the feature values and the target values
        y = data_final['place_id']
        x = data_final[["x", "y", "accuracy", "day", "weekday", "hour"]]
        # 4. Split the data
        x_train, x_test, y_train, y_test = train_test_split(x, y)
        # 5. Standardize
        transfer = StandardScaler()
        x_train = transfer.fit_transform(x_train)
        # The test set is scaled with the statistics (mean, std) learned from the training set
        x_test = transfer.transform(x_test)
        # 6. KNN estimator with grid search and 3-fold cross-validation
        estimator = KNeighborsClassifier()
        param_dict = {"n_neighbors": [3, 5, 7, 9]}
        estimator = GridSearchCV(estimator, param_grid=param_dict, cv=3)
        estimator.fit(x_train, y_train)
        # 7. Model evaluation
        # Method 1: compare the predictions with the true values directly
        y_predict = estimator.predict(x_test)
        print("y_predict:\n", y_predict)
        print("y_test:\n", y_test)
        print("Predictions vs. true values:\n", y_predict == y_test)
        # Method 2: compute the accuracy
        score = estimator.score(x_test, y_test)
        print("Accuracy:\n", score)
        # Best parameters: best_params_
        print("Best parameters:\n", estimator.best_params_)
        # Best cross-validation result: best_score_
        print("Best result:\n", estimator.best_score_)
        # Best estimator: best_estimator_
        print("Best estimator:\n", estimator.best_estimator_)
        # Cross-validation results: cv_results_
        print("Cross-validation results:\n", estimator.cv_results_)
        return None

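4. Naive Bayes

4.1 Joint probability, conditional probability, and independence

- Joint probability: the probability that several conditions all hold at the same time;

  - Notation: P(A, B)

  - Property: P(A, B) = P(A)P(B), which holds only when A and B are independent; in general P(A, B) = P(A|B)P(B);

- Conditional probability: the probability that event A occurs given that another event B has already occurred;

  - Notation: P(A|B)

  - Property: P(A1, A2|B) = P(A1|B)P(A2|B), which holds only when A1 and A2 are conditionally independent given B;

- Independence: if P(A, B) = P(A)P(B), events A and B are said to be independent;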
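
These definitions can be checked by counting outcomes. The sketch below assumes two fair dice as the sample space; the events A, B and C are invented for illustration.

```python
from fractions import Fraction
from itertools import product

# Sample space: the 36 equally likely outcomes of rolling two fair dice.
outcomes = list(product(range(1, 7), repeat=2))


def prob(event):
    """Probability of an event, given as a predicate over outcomes."""
    return Fraction(sum(1 for o in outcomes if event(o)), len(outcomes))


def a(o):
    return o[0] % 2 == 0      # A: the first die is even


def b(o):
    return o[1] > 4           # B: the second die shows 5 or 6


def c(o):
    return o[0] + o[1] >= 10  # C: the sum is at least 10


p_a, p_b, p_c = prob(a), prob(b), prob(c)
p_ab = prob(lambda o: a(o) and b(o))  # joint probability P(A, B)
p_ac = prob(lambda o: a(o) and c(o))  # joint probability P(A, C)
p_a_given_c = p_ac / p_c              # conditional probability P(A|C)

print(p_ab == p_a * p_b)  # True: A and B are independent
print(p_ac == p_a * p_c)  # False: A and C are not independent
print(p_a_given_c)        # 2/3
```

A and B concern different dice, so the product rule holds; C depends on the first die, so P(A, C) has to be computed as P(A|C)P(C) instead.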

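4.2 "Naive" + Bayes' formula

- Naive: the features are assumed to be independent of one another;

- Formula, where C is the class and W (or F_1, F_2, ...) are the features:

$$P(C|W)=\frac{P(W|C)P(C)}{P(W)}$$

$$P(C|F_1, F_2,...)=\frac{P(F_1, F_2,...|C)P(C)}{P(F_1, F_2,...)}$$

- Use case: text classification;

- Advantages:

  - The naive Bayes model is rooted in classical mathematical theory and classifies with stable efficiency;

  - It is not very sensitive to missing data, and the algorithm is fairly simple; it is commonly used for text classification;

  - Classification accuracy is often high, and it is fast;

- Disadvantages:

  - Because it assumes that sample attributes are independent, it performs poorly when the features are correlated;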

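4.3 Naive Bayes for text classification

- The formula has three parts:

  - P(C): the probability of each document class (number of documents in the class / total number of documents);

  - P(W|C): the probability of the features (the words appearing in the document being predicted) given the class;

    - Computation: P(F1|C) = Ni/N (computed on the training documents);

      - Ni is the number of times the word F1 occurs across all documents of class C;

      - N is the total number of word occurrences across all documents of class C;

  - P(F1, F2, ...): the probability of the words in the document being predicted; it is the same for every class, so it can be ignored when comparing classes;

- Problem: with few samples, a probability of 0 may occur. Solution: introduce the Laplace smoothing coefficient;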

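4.3.1 Laplace smoothing coefficient

- Purpose: to prevent a computed class probability from being 0;

- Formula: α is the specified coefficient, usually 1; m is the number of distinct feature words counted in the training documents;

$$P(F_1|C)=\frac{N_i+\alpha}{N+\alpha m}$$

- API: sklearn.naive_bayes.MultinomialNB(alpha=1.0);

  - Naive Bayes classifier;

  - alpha: the Laplace smoothing coefficient;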
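
The smoothing formula can be worked through by hand. This is a minimal sketch on invented toy documents; the names `class_docs`, `vocabulary` and `word_prob` are hypothetical.

```python
from collections import Counter
from fractions import Fraction

# Toy training documents of one class C, invented for this sketch.
class_docs = [["cheap", "offer", "cheap"], ["offer", "now"]]
# Feature words over all training documents; "meeting" never occurs in class C.
vocabulary = {"cheap", "offer", "now", "meeting"}

counts = Counter(word for doc in class_docs for word in doc)
n_total = sum(counts.values())  # N: total word occurrences in class C (5)
m = len(vocabulary)             # m: number of distinct feature words (4)


def word_prob(word, alpha=1):
    """P(word | C) = (Ni + alpha) / (N + alpha * m)."""
    return Fraction(counts[word] + alpha, n_total + alpha * m)


print(word_prob("meeting", alpha=0))  # 0, the unsmoothed estimate
print(word_prob("meeting"))           # 1/9
print(word_prob("cheap"))             # 1/3
```

Without smoothing, a single unseen word would zero out the whole product of word probabilities; with α = 1 the estimates stay positive and still sum to 1 over the vocabulary.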

*Code3 Naive Bayes example code

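    from sklearn.datasets import fetch_20newsgroups
    from sklearn.feature_extraction.text import TfidfVectorizer
    from sklearn.model_selection import train_test_split
    from sklearn.naive_bayes import MultinomialNB


    def nb_cls():
        """Classify the 20 newsgroups texts with naive Bayes."""
        # 1. Fetch the news data (downloaded on first use)
        news = fetch_20newsgroups(subset='all')
        # 2. Split the dataset
        x_train, x_test, y_train, y_test = train_test_split(news.data, news.target, test_size=0.3)
        # 3. Extract features from the text data
        transfer = TfidfVectorizer()
        x_train = transfer.fit_transform(x_train)
        x_test = transfer.transform(x_test)
        # 4. Estimator workflow: naive Bayes
        mlb = MultinomialNB(alpha=1.0)
        mlb.fit(x_train, y_train)
        # 5. Predict
        y_predict = mlb.predict(x_test)
        print("Predicted class of each article:", y_predict[:100])
        print("True classes:", y_test[:100])
        print("Prediction accuracy:", mlb.score(x_test, y_test))
        return None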

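5. Decision trees

5.1 Overview

- Information exists to eliminate uncertainty;

- Information is measured by information entropy;

- Information and the elimination of uncertainty are linked;

- The unit of information entropy is the bit (when the logarithm is taken base 2);

- Information gain is the degree to which knowing feature X reduces the information entropy (the uncertainty) of class Y;

- A decision tree can choose its splits by information gain (section 5.4 lists the other criteria);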

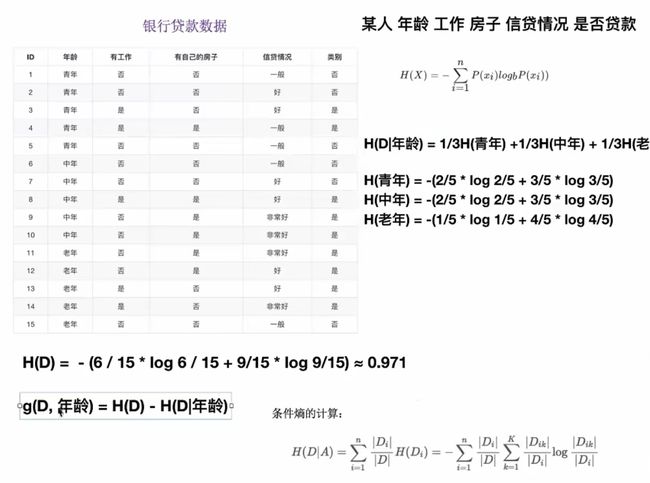

5.2 Information entropy, information gain, and conditional entropy

- Information entropy formula, where $|C_k|$ is the number of samples belonging to class k and $|D|$ is the total number of samples:

$$H(X)=-\sum_{i=1}^n P(x_i)\log_b P(x_i)$$

$$H(D)=-\sum_{k=1}^K\frac{|C_k|}{|D|}\log\frac{|C_k|}{|D|}$$

- Information gain formula (information entropy minus conditional entropy):

$$g(D,A)=H(D)-H(D|A)$$

- Conditional entropy formula, where $D_i$ is the subset of D in which feature A takes its i-th value and $D_{ik}$ is the part of $D_i$ belonging to class k:

$$H(D|A)=\sum_{i=1}^n\frac{|D_i|}{|D|}H(D_i)=-\sum_{i=1}^n\frac{|D_i|}{|D|}\sum_{k=1}^K\frac{|D_{ik}|}{|D_i|}\log\frac{|D_{ik}|}{|D_i|}$$

- Example:
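
The formulas can be evaluated on a small hypothetical dataset. This sketch assumes base-2 logarithms (so entropy is in bits); the function names and the toy feature columns are invented for illustration.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """H(D) = -sum_k |C_k|/|D| * log2(|C_k|/|D|)."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(feature_values, labels):
    """g(D, A) = H(D) - H(D|A) for one categorical feature A."""
    total = len(labels)
    conditional = 0.0
    for value in set(feature_values):
        # D_i: the labels of the samples where A takes this value.
        subset = [y for x, y in zip(feature_values, labels) if x == value]
        conditional += len(subset) / total * entropy(subset)
    return entropy(labels) - conditional


# Hypothetical toy data: four samples, two classes.
labels = ["yes", "yes", "no", "no"]
perfect = ["a", "a", "b", "b"]  # this feature separates the classes exactly
useless = ["a", "b", "a", "b"]  # this feature says nothing about the class

print(entropy(labels))
print(information_gain(perfect, labels))
print(information_gain(useless, labels))
```

The balanced labels carry 1 bit of entropy. The perfectly separating feature removes all of it (gain 1), while the uninformative feature removes none (gain 0), so a tree splitting by information gain would pick the first.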

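5.3 Decision tree overview

- Advantages:

  - Simple to understand and interpret; the tree can be visualized;

- Disadvantages:

  - A decision tree learner can build an overly complex tree that does not generalize well to new data; this is called overfitting;

- Improvements:

  - Pruning with the CART algorithm (already implemented in the decision tree API; covered under random forest parameter tuning);

  - Random forest;

- Note: because of their strong analytical ability, decision trees are widely used in important business decisions, and they can also be used to select features;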

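5.4 Three decision tree algorithms

- The three algorithms:

  - ID3: picks the split with the largest information gain;

  - C4.5: picks the split with the largest information gain ratio;

  - CART:

    - Classification tree: picks the split with the smallest Gini index, which is the default splitting criterion in sklearn;

    - Advantage: finer-grained splits;

- API: class sklearn.tree.DecisionTreeClassifier(criterion='gini', max_depth=None, random_state=None)

  - Decision tree classifier;

  - criterion: defaults to 'gini' (the Gini index); 'entropy' selects information gain instead;

  - max_depth: the maximum depth of the tree;

  - random_state: the random seed;

- Some of these are hyperparameters, for example max_depth, the maximum depth of the tree;

  - The other hyperparameters are covered together with random forest;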
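
The Gini index that CART minimizes can also be computed by hand. The sketch below assumes the standard definition Gini(D) = 1 - Σ p_k², which these notes do not spell out; the function names and toy labels are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini index of a set of labels: 1 - sum_k p_k^2."""
    total = len(labels)
    return 1.0 - sum((n / total) ** 2 for n in Counter(labels).values())


def weighted_gini(left, right):
    """Gini index of a binary split, weighted by the size of each side."""
    total = len(left) + len(right)
    return len(left) / total * gini(left) + len(right) / total * gini(right)


print(gini(["a", "a", "b", "b"]))                # 0.5, maximally mixed for two classes
print(gini(["a", "a", "a", "a"]))                # 0.0, a pure node
print(weighted_gini(["a", "a"], ["b", "b"]))     # 0.0, a perfect split
print(weighted_gini(["a", "b"], ["a", "b"]))     # 0.5, a split that does not help
```

Among candidate splits, the one with the smallest weighted Gini index is preferred, which here is the split that separates the two classes completely.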

*Code4 Decision tree example code

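    from sklearn.datasets import load_iris
    from sklearn.model_selection import train_test_split
    from sklearn.tree import DecisionTreeClassifier


    def decision_iris():
        """Classify the iris dataset with a decision tree."""
        # 1. Load the dataset
        iris = load_iris()
        # 2. Split the dataset
        x_train, x_test, y_train, y_test = train_test_split(iris.data, iris.target, random_state=6)
        # 3. Decision tree estimator, splitting by information gain
        estimator = DecisionTreeClassifier(criterion="entropy")
        estimator.fit(x_train, y_train)
        # 4. Model evaluation
        # Method 1: compare the predictions with the true values directly
        y_predict = estimator.predict(x_test)
        print("y_predict:\n", y_predict)
        print("y_test:\n", y_test)
        print("Predictions vs. true values:\n", y_predict == y_test)
        # Method 2: compute the accuracy
        score = estimator.score(x_test, y_test)
        print("Accuracy:\n", score)
        return None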

5.5 Visualizing decision trees

- API: sklearn.tree.export_graphviz() exports the tree in DOT format: tree.export_graphviz(estimator, out_file='tree.dot', feature_names=['', ''])

- Tools (to convert the dot file to pdf or png):

  - Install graphviz: on Ubuntu, sudo apt-get install graphviz; on Mac, brew install graphviz;

  - Or use a website:

    - http://webgraphviz.com/

    - http://dreampuf.github.io/GraphvizOnline/

- Then run the command: dot -Tpng tree.dot -o tree.png

*Code5 Decision tree visualization example code

    from sklearn.tree import export_graphviz
    export_graphviz(estimator, out_file="iris_tree.dot", feature_names=iris.feature_names)  # run inside decision_iris() from Code4, after estimator.fit(...)

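6. Ensemble learning: random forest

6.1 Overview

- Ensemble learning: solves a single prediction problem by combining several models. It works by building multiple classifiers/models that each learn and predict independently. Their predictions are then merged into a combined prediction, which usually outperforms the prediction of any single classifier;

- Random forest: in machine learning, a random forest is a classifier made up of multiple decision trees, whose output class is the mode of the classes output by the individual trees;

- Characteristics:

  - Its accuracy is excellent among current algorithms;

  - It runs efficiently on large datasets and handles input samples with high-dimensional features without needing dimensionality reduction;

  - It can assess the importance of each feature for the classification problem;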
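
The feature-importance point can be illustrated with the fitted model's feature_importances_ attribute. This is a minimal sketch, assuming the iris dataset and 100 trees, both chosen only for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

iris = load_iris()

# random_state is fixed so repeated runs give the same forest.
forest = RandomForestClassifier(n_estimators=100, random_state=0)
forest.fit(iris.data, iris.target)

# One importance value per input feature; the values sum to 1.
importances = forest.feature_importances_
for name, importance in zip(iris.feature_names, importances):
    print(f"{name}: {importance:.3f}")
```

Features with larger values contributed more to the impurity reduction across the trees, which gives a quick, if rough, ranking for feature selection.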

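6.2 How random forest works

- Two sources of randomness:

  - Random training set: bootstrap sampling, that is, random sampling with replacement;

    - Draw N samples at random, with replacement, from the N samples;

  - Random features:

    - Randomly pick m features out of the M features, with M >> m (this has a dimensionality-reduction effect);

- Why bootstrap sampling:

  - Why sample the training set at random:

    - Without random sampling, every tree would get the same training set, so the trained trees would all produce exactly the same classification;

  - Why sample with replacement:

    - Without replacement, every tree's training samples would be different and share no overlap, so every tree would be "biased" and thoroughly "one-sided" (though this may not be a rigorous way to put it); in other words, the trained trees would differ greatly from one another, whereas the final random forest classification depends on a vote among many trees (weak classifiers);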
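
Both kinds of randomness can be sketched with NumPy. The sample count, feature count and seed below are arbitrary choices for illustration; note also that sklearn draws the feature subset at every split rather than once per tree, so this only illustrates the sampling idea.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Training-set randomness: draw N indices from N samples, with replacement.
n_samples = 10_000
bootstrap = rng.choice(n_samples, size=n_samples, replace=True)
unique_fraction = np.unique(bootstrap).size / n_samples

# Feature randomness: pick m of the M features, without replacement.
n_features, m = 16, 4
feature_subset = rng.choice(n_features, size=m, replace=False)

print(unique_fraction)         # close to 1 - 1/e, about 0.632
print(sorted(feature_subset))  # 4 distinct feature indices
```

Each bootstrap sample contains roughly 63% of the distinct original samples, so any two trees see overlapping but different data, which is the balance the explanation above argues for.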

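- API: class sklearn.ensemble.RandomForestClassifier(n_estimators=10, criterion='gini', max_depth=None, bootstrap=True, random_state=None, min_samples_split=2)

  - Random forest classifier;

  - n_estimators: integer, optional (default = 10 in older releases, 100 since scikit-learn 0.22); the number of trees in the forest; typical values to try: 120, 200, 300, 500, 800, 1200;

  - criterion: string, optional (default = "gini"); the measure used to choose split features;

  - max_depth: integer or None, optional (default = None); the maximum depth of a tree; typical values to try: 5, 8, 15, 25, 30;

  - max_features="auto": the maximum number of features considered when looking for the best split ("auto" was removed in scikit-learn 1.3, where "sqrt" is the default);

    - If "auto", then max_features=sqrt(n_features);

    - If "sqrt", then max_features=sqrt(n_features) (same as "auto");

    - If "log2", then max_features=log2(n_features);

    - If None, then max_features=n_features.

  - bootstrap: boolean, optional (default = True); whether to sample with replacement when building the trees;

  - min_samples_split: the minimum number of samples required to split a node;

  - min_samples_leaf: the minimum number of samples required at a leaf node;

- Hyperparameters: n_estimators, max_depth, min_samples_split, min_samples_leaf

*Code6 Random forest example code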

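    from sklearn.datasets import load_iris
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.model_selection import train_test_split


    def random_iris():
        """Classify the iris dataset with a random forest."""
        # 1. Load the dataset
        iris = load_iris()
        # 2. Split the dataset
        x_train, x_test, y_train, y_test = train_test_split(iris.data, iris.target, random_state=6)
        # 3. Random forest estimator
        estimator = RandomForestClassifier(criterion="entropy", max_depth=8)
        estimator.fit(x_train, y_train)
        # 4. Model evaluation
        # Method 1: compare the predictions with the true values directly
        y_predict = estimator.predict(x_test)
        print("y_predict:\n", y_predict)
        print("y_test:\n", y_test)
        print("Predictions vs. true values:\n", y_predict == y_test)
        # Method 2: compute the accuracy
        score = estimator.score(x_test, y_test)
        print("Accuracy:\n", score)
        return None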