[Technical Documentation] HRNet Pose Estimation

Contents

- Model structure


- augment

- cfg

- loss

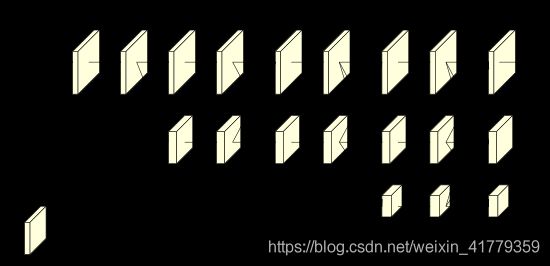

Model structure

```python
import torch.nn as nn

# Defined alongside this class in pose_hrnet.py (deep-high-resolution-net.pytorch)
# and not reproduced here: BasicBlock, Bottleneck, HighResolutionModule and
# blocks_dict = {'BASIC': BasicBlock, 'BOTTLENECK': Bottleneck}.
BN_MOMENTUM = 0.1


class PoseHighResolutionNet(nn.Module):

    def __init__(self, cfg, **kwargs):
        super(PoseHighResolutionNet, self).__init__()
        self.inplanes = 64
        extra = cfg['MODEL']['EXTRA']

        # stem net: two stride-2 3x3 convs -> 1/4 of the input resolution
        self.conv1 = nn.Conv2d(3, 64, kernel_size=3, stride=2, padding=1,
                               bias=False)
        self.bn1 = nn.BatchNorm2d(64, momentum=BN_MOMENTUM)
        self.conv2 = nn.Conv2d(64, 64, kernel_size=3, stride=2, padding=1,
                               bias=False)
        self.bn2 = nn.BatchNorm2d(64, momentum=BN_MOMENTUM)
        self.relu = nn.ReLU(inplace=True)
        # stage 1: 4 Bottleneck blocks, 64 -> 64 * expansion (256) channels
        self.layer1 = self._make_layer(Bottleneck, 64, 4)

        # stage 2: transition1 splits the 256-channel stage-1 output into branches
        self.stage2_cfg = extra['STAGE2']
        num_channels = self.stage2_cfg['NUM_CHANNELS']
        block = blocks_dict[self.stage2_cfg['BLOCK']]
        num_channels = [
            num_channels[i] * block.expansion for i in range(len(num_channels))
        ]
        self.transition1 = self._make_transition_layer([256], num_channels)
        self.stage2, pre_stage_channels = self._make_stage(
            self.stage2_cfg, num_channels)

        # stage 3: one more (lower-resolution) branch
        self.stage3_cfg = extra['STAGE3']
        num_channels = self.stage3_cfg['NUM_CHANNELS']
        block = blocks_dict[self.stage3_cfg['BLOCK']]
        num_channels = [
            num_channels[i] * block.expansion for i in range(len(num_channels))
        ]
        self.transition2 = self._make_transition_layer(
            pre_stage_channels, num_channels)
        self.stage3, pre_stage_channels = self._make_stage(
            self.stage3_cfg, num_channels)

        # stage 4: one more branch; the last module outputs only the
        # highest-resolution branch (multi_scale_output=False)
        self.stage4_cfg = extra['STAGE4']
        num_channels = self.stage4_cfg['NUM_CHANNELS']
        block = blocks_dict[self.stage4_cfg['BLOCK']]
        num_channels = [
            num_channels[i] * block.expansion for i in range(len(num_channels))
        ]
        self.transition3 = self._make_transition_layer(
            pre_stage_channels, num_channels)
        self.stage4, pre_stage_channels = self._make_stage(
            self.stage4_cfg, num_channels, multi_scale_output=False)

        # one heatmap per joint from the highest-resolution branch
        self.final_layer = nn.Conv2d(
            in_channels=pre_stage_channels[0],
            out_channels=cfg['MODEL']['NUM_JOINTS'],
            kernel_size=extra['FINAL_CONV_KERNEL'],
            stride=1,
            padding=1 if extra['FINAL_CONV_KERNEL'] == 3 else 0
        )

        # submodule names to load from the pretrained checkpoint
        # (used by init_weights, not shown here)
        self.pretrained_layers = extra['PRETRAINED_LAYERS']
```
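
The channel lists built in `__init__` are the configured `NUM_CHANNELS` multiplied by the block's `expansion`. A minimal pure-Python sketch of that bookkeeping for the w48 values in the cfg section, assuming `BasicBlock.expansion == 1` and `Bottleneck.expansion == 4` as in the reference pose_hrnet.py; `EXPANSION`, `EXTRA` and `stage_channels` are hypothetical helpers, not part of the model code:

```python
# Hypothetical stand-in for blocks_dict: only the (assumed) expansion factors matter.
EXPANSION = {"BASIC": 1, "BOTTLENECK": 4}

# Subset of MODEL.EXTRA copied from the cfg section.
EXTRA = {
    "FINAL_CONV_KERNEL": 1,
    "STAGE2": {"BLOCK": "BASIC", "NUM_CHANNELS": [48, 96]},
    "STAGE3": {"BLOCK": "BASIC", "NUM_CHANNELS": [48, 96, 192]},
    "STAGE4": {"BLOCK": "BASIC", "NUM_CHANNELS": [48, 96, 192, 384]},
}


def stage_channels(extra):
    """Branch widths per stage after scaling by the block expansion."""
    result = {}
    for name in ("STAGE2", "STAGE3", "STAGE4"):
        stage = extra[name]
        expansion = EXPANSION[stage["BLOCK"]]
        result[name] = [c * expansion for c in stage["NUM_CHANNELS"]]
    return result


channels = stage_channels(EXTRA)
layer1_out = 64 * EXPANSION["BOTTLENECK"]  # width passed to transition1 as [256]
final_in = channels["STAGE4"][0]  # final_layer reads the highest-resolution branch
final_padding = 1 if EXTRA["FINAL_CONV_KERNEL"] == 3 else 0  # same rule as __init__

print(channels["STAGE4"], layer1_out, final_in, final_padding)  # [48, 96, 192, 384] 256 48 0
```

Because every stage uses BASIC blocks here, the configured widths pass through unchanged; only `layer1` (Bottleneck) inflates 64 channels to 256.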

```python
    def _make_transition_layer(
            self, num_channels_pre_layer, num_channels_cur_layer):
        num_branches_cur = len(num_channels_cur_layer)
        num_branches_pre = len(num_channels_pre_layer)

        transition_layers = []
        for i in range(num_branches_cur):
            if i < num_branches_pre:
                # existing branch: 3x3 stride-1 conv only if the width changes,
                # otherwise None (identity)
                if num_channels_cur_layer[i] != num_channels_pre_layer[i]:
                    transition_layers.append(
                        nn.Sequential(
                            nn.Conv2d(
                                num_channels_pre_layer[i],
                                num_channels_cur_layer[i],
                                3, 1, 1, bias=False
                            ),
                            nn.BatchNorm2d(num_channels_cur_layer[i]),
                            nn.ReLU(inplace=True)
                        )
                    )
                else:
                    transition_layers.append(None)
            else:
                # new branch: a chain of 3x3 stride-2 convs from the
                # lowest-resolution previous branch; only the last conv
                # switches to the new branch width
                conv3x3s = []
                for j in range(i + 1 - num_branches_pre):
                    inchannels = num_channels_pre_layer[-1]
                    outchannels = num_channels_cur_layer[i] \
                        if j == i - num_branches_pre else inchannels
                    conv3x3s.append(
                        nn.Sequential(
                            nn.Conv2d(
                                inchannels, outchannels, 3, 2, 1, bias=False
                            ),
                            nn.BatchNorm2d(outchannels),
                            nn.ReLU(inplace=True)
                        )
                    )
                transition_layers.append(nn.Sequential(*conv3x3s))

        return nn.ModuleList(transition_layers)
```
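
To see what `_make_transition_layer` produces for the w48 widths in the cfg section, here is a hedged pure-Python mirror of its branching logic. `transition_plan` is a hypothetical helper that returns `(in_channels, out_channels, stride)` conv specs instead of modules, so it runs without PyTorch:

```python
def transition_plan(pre, cur):
    """One entry per output branch, following _make_transition_layer.

    None                       -> identity (branch passed through unchanged)
    [(in, out, stride), ...]   -> chain of 3x3 conv + BN + ReLU units
    """
    plan = []
    for i in range(len(cur)):
        if i < len(pre):
            # Existing branch: stride-1 conv only when the width changes.
            plan.append(None if cur[i] == pre[i] else [(pre[i], cur[i], 1)])
        else:
            # New branch: stride-2 convs from the last (lowest-resolution)
            # previous branch; only the final conv switches width.
            convs = []
            for j in range(i + 1 - len(pre)):
                inch = pre[-1]
                outch = cur[i] if j == i - len(pre) else inch
                convs.append((inch, outch, 2))
            plan.append(convs)
    return plan


plan1 = transition_plan([256], [48, 96])
plan2 = transition_plan([48, 96], [48, 96, 192])
plan3 = transition_plan([48, 96, 192], [48, 96, 192, 384])
print(plan1)  # [[(256, 48, 1)], [(256, 96, 2)]]
print(plan2)  # [None, None, [(96, 192, 2)]]
```

In this configuration only transition1 changes widths on an existing branch; the later transitions are identities on old branches plus a single stride-2 conv for the new one.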

```python
    def _make_layer(self, block, planes, blocks, stride=1):
        # only the first block can change stride/width, so only it may need a
        # 1x1 conv + BN projection on the shortcut
        downsample = None
        if stride != 1 or self.inplanes != planes * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(
                    self.inplanes, planes * block.expansion,
                    kernel_size=1, stride=stride, bias=False
                ),
                nn.BatchNorm2d(planes * block.expansion, momentum=BN_MOMENTUM),
            )

        layers = []
        layers.append(block(self.inplanes, planes, stride, downsample))
        self.inplanes = planes * block.expansion
        for _ in range(1, blocks):
            layers.append(block(self.inplanes, planes))

        return nn.Sequential(*layers)
```
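
`_make_layer` adds a projection shortcut only to the first block, and only when the stride or channel count changes. A hypothetical pure-Python mirror of that bookkeeping, applied to the `layer1` call `_make_layer(Bottleneck, 64, 4)` and assuming `Bottleneck.expansion == 4`:

```python
def make_layer_plan(inplanes, planes, blocks, expansion, stride=1):
    """Return ([(in_channels, planes, stride, has_projection), ...], out_channels)."""
    # Same condition as _make_layer: project the shortcut when shapes differ.
    needs_projection = stride != 1 or inplanes != planes * expansion
    specs = [(inplanes, planes, stride, needs_projection)]
    inplanes = planes * expansion  # _make_layer updates self.inplanes here
    for _ in range(1, blocks):
        # Remaining blocks see matching shapes and use identity shortcuts.
        specs.append((inplanes, planes, 1, False))
    return specs, inplanes


specs, out_channels = make_layer_plan(64, 64, 4, expansion=4)
for spec in specs:
    print(spec)
print(out_channels)  # 256
```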

```python
    def _make_stage(self, layer_config, num_inchannels,
                    multi_scale_output=True):
        num_modules = layer_config['NUM_MODULES']
        num_branches = layer_config['NUM_BRANCHES']
        num_blocks = layer_config['NUM_BLOCKS']
        num_channels = layer_config['NUM_CHANNELS']
        block = blocks_dict[layer_config['BLOCK']]
        fuse_method = layer_config['FUSE_METHOD']

        modules = []
        for i in range(num_modules):
            # multi_scale_output is only applied to the last module
            if not multi_scale_output and i == num_modules - 1:
                reset_multi_scale_output = False
            else:
                reset_multi_scale_output = True

            modules.append(
                HighResolutionModule(
                    num_branches,
                    block,
                    num_blocks,
                    num_inchannels,
                    num_channels,
                    fuse_method,
                    reset_multi_scale_output
                )
            )
            # the next module takes this module's branch widths as input
            num_inchannels = modules[-1].get_num_inchannels()

        return nn.Sequential(*modules), num_inchannels
```
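
Within a stage, `multi_scale_output=False` only affects the last `HighResolutionModule`. A small hypothetical helper that reproduces the `reset_multi_scale_output` flags for the module counts in the cfg section (`NUM_MODULES` 1, 4 and 3):

```python
def module_output_flags(num_modules, multi_scale_output=True):
    """reset_multi_scale_output for each module, following _make_stage."""
    flags = []
    for i in range(num_modules):
        if not multi_scale_output and i == num_modules - 1:
            flags.append(False)  # last module of a single-scale stage
        else:
            flags.append(True)
    return flags


print(module_output_flags(1))  # stage2: [True]
print(module_output_flags(4))  # stage3: [True, True, True, True]
print(module_output_flags(3, multi_scale_output=False))  # stage4: [True, True, False]
```

In the reference `HighResolutionModule` (not shown in this document), a `False` flag means the fuse step returns only the highest-resolution branch, which is consistent with `forward` reading `y_list[0]` after stage 4.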

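```python
    def forward(self, x):
        # stem + stage 1
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.conv2(x)
        x = self.bn2(x)
        x = self.relu(x)
        x = self.layer1(x)

        # stage 2: every transition1 entry takes the single stage-1 output
        x_list = []
        for i in range(self.stage2_cfg['NUM_BRANCHES']):
            if self.transition1[i] is not None:
                x_list.append(self.transition1[i](x))
            else:
                x_list.append(x)
        y_list = self.stage2(x_list)

        # stage 3: an existing branch is transformed from its own output;
        # the new branch is downsampled from the lowest-resolution output
        x_list = []
        for i in range(self.stage3_cfg['NUM_BRANCHES']):
            if self.transition2[i] is not None:
                src = y_list[i] if i < len(y_list) else y_list[-1]
                x_list.append(self.transition2[i](src))
            else:
                x_list.append(y_list[i])
        y_list = self.stage3(x_list)

        # stage 4: same pattern as stage 3
        x_list = []
        for i in range(self.stage4_cfg['NUM_BRANCHES']):
            if self.transition3[i] is not None:
                src = y_list[i] if i < len(y_list) else y_list[-1]
                x_list.append(self.transition3[i](src))
            else:
                x_list.append(y_list[i])
        y_list = self.stage4(x_list)

        # heatmaps from the highest-resolution branch
        x = self.final_layer(y_list[0])
        return x
```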
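
The stem's two stride-2 convs and each transition's stride-2 conv fix the resolution of every branch, while `layer1` and the stage modules are assumed to keep each branch's resolution (stride 1, as in the reference blocks). A minimal pure-Python sketch using the standard Conv2d output-size formula, assuming `IMAGE_SIZE` in the cfg is `[width, height]` (the reference repo's convention), so the input is 3x384x288:

```python
def conv_out(n, kernel=3, stride=2, padding=1):
    # Conv2d output size for dilation 1: floor((n + 2p - k) / s) + 1
    return (n + 2 * padding - kernel) // stride + 1


def branch_shapes(width, height, channels=(48, 96, 192, 384)):
    """(C, H, W) of each stage-4 branch for a width x height input image."""
    h = conv_out(conv_out(height))  # conv1 and conv2 (stem)
    w = conv_out(conv_out(width))
    shapes = []
    for i, c in enumerate(channels):
        if i > 0:
            # a new branch is a stride-2 3x3 conv of the previous lowest branch
            h, w = conv_out(h), conv_out(w)
        shapes.append((c, h, w))
    return shapes


shapes = branch_shapes(288, 384)
print(shapes)  # [(48, 96, 72), (96, 48, 36), (192, 24, 18), (384, 12, 9)]
num_joints = 14  # MODEL.NUM_JOINTS from the cfg
heatmap_shape = (num_joints,) + shapes[0][1:]  # 1x1 final_layer keeps resolution
print(heatmap_shape)  # (14, 96, 72)
```

The resulting 96x72 map matches `HEATMAP_SIZE: [72, 96]`, i.e. an output stride of 4.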

augment

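Rotation and half-body augmentation, configured by ROT_FACTOR, PROB_HALF_BODY and NUM_JOINTS_HALF_BODY under DATASET in the cfg section (FLIP and SCALE_FACTOR there also control horizontal flipping and scale jitter).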
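
A hedged sketch of how these parameters could be sampled per training sample. The clipped-Gaussian scale and rotation jitter, the rotation bound of 2 x ROT_FACTOR and the 0.6 rotation probability (`rot_prob`) are assumptions modelled on the reference JointsDataset, not taken from this document; the half-body crop itself (choosing upper- or lower-body joints and recomputing the box) is omitted:

```python
import random


def sample_augmentation(num_visible, rng, scale_factor=0.35, rot_factor=45,
                        prob_half_body=0.3, num_joints_half_body=8,
                        rot_prob=0.6):
    """Return (scale_multiplier, rotation_deg, use_half_body) for one sample.

    Defaults mirror SCALE_FACTOR, ROT_FACTOR, PROB_HALF_BODY and
    NUM_JOINTS_HALF_BODY from the cfg; rot_prob is an assumed value.
    """
    # Half-body crop only when enough joints are visible to pick a sub-body.
    use_half_body = (num_visible > num_joints_half_body
                     and rng.random() < prob_half_body)

    # Scale jitter: N(1, scale_factor^2), clipped to [1 - sf, 1 + sf].
    scale = rng.gauss(1.0, scale_factor)
    scale = min(max(scale, 1.0 - scale_factor), 1.0 + scale_factor)

    # Rotation: with probability rot_prob, N(0, rf^2) clipped to +/- 2 * rf.
    if rng.random() <= rot_prob:
        rot = rng.gauss(0.0, rot_factor)
        rot = min(max(rot, -2.0 * rot_factor), 2.0 * rot_factor)
    else:
        rot = 0.0
    return scale, rot, use_half_body


rng = random.Random(0)
samples = [sample_augmentation(num_visible=14, rng=rng) for _ in range(1000)]
half_body_rate = sum(s[2] for s in samples) / len(samples)
print(round(half_body_rate, 2))  # roughly PROB_HALF_BODY (0.3) over many samples
```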

cfg

```yaml
AUTO_RESUME: false
CUDNN:
  BENCHMARK: true
  DETERMINISTIC: false
  ENABLED: true
DATA_DIR: ''
GPUS: (0,1,2,3)
OUTPUT_DIR: 'output'
LOG_DIR: 'log'
WORKERS: 24
PRINT_FREQ: 100
DATASET:
  COLOR_RGB: true
  DATASET: 'hie'
  DATA_FORMAT: jpg
  FLIP: true
  NUM_JOINTS_HALF_BODY: 8
  PROB_HALF_BODY: 0.3
  ROOT: '/home/ubuntu/Datasets/HIE20'
  ROT_FACTOR: 45
  SCALE_FACTOR: 0.35
  TEST_SET: train
  TRAIN_SET: train
MODEL:
  INIT_WEIGHTS: true
  NAME: pose_hrnet
  NUM_JOINTS: 14
  PRETRAINED: '/home/ubuntu/Workspace/deep-high-resolution-net.pytorch/models/hrnet_w48-8ef0771d.pth'
  TARGET_TYPE: gaussian
  IMAGE_SIZE:
  - 288
  - 384
  HEATMAP_SIZE:
  - 72
  - 96
  SIGMA: 3
  EXTRA:
    PRETRAINED_LAYERS:
    - 'conv1'
    - 'bn1'
    - 'conv2'
    - 'bn2'
    - 'layer1'
    - 'transition1'
    - 'stage2'
    - 'transition2'
    - 'stage3'
    - 'transition3'
    - 'stage4'
    FINAL_CONV_KERNEL: 1
    STAGE2:
      NUM_MODULES: 1
      NUM_BRANCHES: 2
      BLOCK: BASIC
      NUM_BLOCKS:
      - 4
      - 4
      NUM_CHANNELS:
      - 48
      - 96
      FUSE_METHOD: SUM
    STAGE3:
      NUM_MODULES: 4
      NUM_BRANCHES: 3
      BLOCK: BASIC
      NUM_BLOCKS:
      - 4
      - 4
      - 4
      NUM_CHANNELS:
      - 48
      - 96
      - 192
      FUSE_METHOD: SUM
    STAGE4:
      NUM_MODULES: 3
      NUM_BRANCHES: 4
      BLOCK: BASIC
      NUM_BLOCKS:
      - 4
      - 4
      - 4
      - 4
      NUM_CHANNELS:
      - 48
      - 96
      - 192
      - 384
      FUSE_METHOD: SUM
LOSS:
  USE_TARGET_WEIGHT: true
TRAIN:
  BATCH_SIZE_PER_GPU: 24
  SHUFFLE: true
  BEGIN_EPOCH: 0
  END_EPOCH: 210
  OPTIMIZER: adam
  LR: 0.001
  LR_FACTOR: 0.1
  LR_STEP:
  - 170
  - 200
  WD: 0.0001
  GAMMA1: 0.99
  GAMMA2: 0.0
  MOMENTUM: 0.9
  NESTEROV: false
TEST:
  BATCH_SIZE_PER_GPU: 12
  COCO_BBOX_FILE: '/home/liuhao/seedland/dataset/coco2017/person_detection_results/COCO_val2017_detections_AP_H_56_person.json'
  BBOX_THRE: 1.0
  IMAGE_THRE: 0.0
  IN_VIS_THRE: 0.2
  MODEL_FILE: ''
  NMS_THRE: 1.0
  OKS_THRE: 0.9
  USE_GT_BBOX: true
  FLIP_TEST: true
  POST_PROCESS: true
  SHIFT_HEATMAP: true
DEBUG:
  DEBUG: true
  SAVE_BATCH_IMAGES_GT: true
  SAVE_BATCH_IMAGES_PRED: true
  SAVE_HEATMAPS_GT: true
  SAVE_HEATMAPS_PRED: true
```
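
A few quantities follow directly from this config. The hedged sketch below computes them in plain Python; it assumes data-parallel training where each of the four GPUs receives BATCH_SIZE_PER_GPU samples, `[width, height]` ordering for the size lists, and a MultiStepLR-style schedule (milestones LR_STEP, factor LR_FACTOR) whose exact epoch offset depends on when the training script steps the scheduler. `lr_at_epoch` is a hypothetical helper:

```python
from bisect import bisect_right

# Values copied from the cfg above.
GPUS = (0, 1, 2, 3)
BATCH_SIZE_PER_GPU = 24
IMAGE_SIZE = (288, 384)   # assumed [width, height]
HEATMAP_SIZE = (72, 96)   # assumed [width, height]
LR, LR_FACTOR, LR_STEP, END_EPOCH = 0.001, 0.1, [170, 200], 210


def lr_at_epoch(epoch):
    # Multi-step decay: multiply by LR_FACTOR once for every milestone <= epoch.
    return LR * LR_FACTOR ** bisect_right(LR_STEP, epoch)


effective_batch = BATCH_SIZE_PER_GPU * len(GPUS)
heatmap_stride = (IMAGE_SIZE[0] // HEATMAP_SIZE[0], IMAGE_SIZE[1] // HEATMAP_SIZE[1])
schedule = {e: lr_at_epoch(e) for e in (0, 169, 170, 199, 200, END_EPOCH - 1)}

print(effective_batch)  # 96
print(heatmap_stride)   # (4, 4)
print(schedule)
```

Under these assumptions the LR stays at 1e-3 for epochs 0 to 169, drops to 1e-4 for 170 to 199 and to 1e-5 for the last ten epochs.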

loss

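```python
import torch.nn as nn


class JointsMSELoss(nn.Module):
    """Heatmap MSE, optionally weighted per joint, averaged over joints."""

    def __init__(self, use_target_weight):
        super(JointsMSELoss, self).__init__()
        self.criterion = nn.MSELoss(reduction='mean')
        self.use_target_weight = use_target_weight

    def forward(self, output, target, target_weight):
        # output, target: (B, K, H, W); target_weight: (B, K, 1)
        batch_size = output.size(0)
        num_joints = output.size(1)
        # flatten each heatmap and split into K tensors of shape (B, 1, H*W)
        heatmaps_pred = output.reshape((batch_size, num_joints, -1)).split(1, 1)
        heatmaps_gt = target.reshape((batch_size, num_joints, -1)).split(1, 1)
        loss = 0

        for idx in range(num_joints):
            # (B, 1, H*W) -> (B, H*W); squeeze(1) keeps the batch axis when B == 1
            heatmap_pred = heatmaps_pred[idx].squeeze(1)
            heatmap_gt = heatmaps_gt[idx].squeeze(1)
            if self.use_target_weight:
                # target_weight[:, idx] is (B, 1) and broadcasts over pixels,
                # so joints with weight 0 contribute nothing
                loss += 0.5 * self.criterion(
                    heatmap_pred.mul(target_weight[:, idx]),
                    heatmap_gt.mul(target_weight[:, idx])
                )
            else:
                loss += 0.5 * self.criterion(heatmap_pred, heatmap_gt)

        return loss / num_joints
```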
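
The targets compared by `JointsMSELoss` are Gaussian heatmaps (`TARGET_TYPE: gaussian`, `SIGMA: 3`, one 72x96 map per joint). Written out, the loss is the mean over joints of 0.5 times the mean squared error over all batch elements and pixels, with each joint's prediction and target scaled by its `target_weight` (typically 0 for unlabelled joints). A pure-Python sketch of both pieces: `gaussian_heatmap` is a simplified target generator (the reference code may truncate the Gaussian to a local window, this one does not) and `joints_mse_loss` is a hypothetical list-based mirror of `forward`:

```python
import math


def gaussian_heatmap(width, height, cx, cy, sigma=3.0):
    """Unnormalised 2-D Gaussian (peak 1.0 at (cx, cy)), flattened row-major."""
    return [math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
            for y in range(height) for x in range(width)]


def joints_mse_loss(pred, gt, weight=None):
    """List-based mirror of JointsMSELoss.forward.

    pred, gt: [batch][joint][pixel] nested lists (heatmaps flattened to H*W)
    weight:   [batch][joint] weights, or None for use_target_weight=False
    """
    batch, joints, pixels = len(pred), len(pred[0]), len(pred[0][0])
    total = 0.0
    for k in range(joints):
        sq = 0.0
        for b in range(batch):
            w = 1.0 if weight is None else weight[b][k]
            for p in range(pixels):
                diff = w * pred[b][k][p] - w * gt[b][k][p]
                sq += diff * diff
        total += 0.5 * sq / (batch * pixels)  # 0.5 * MSELoss(reduction='mean')
    return total / joints


gt = [[gaussian_heatmap(72, 96, 36, 48), gaussian_heatmap(72, 96, 10, 20)]]  # B=1, K=2
pred = [[gt[0][0], [0.0] * (72 * 96)]]  # joint 1 predicted as an empty map
print(joints_mse_loss(pred, gt, [[1.0, 0.0]]))  # 0.0: the wrong joint has weight 0
print(joints_mse_loss(pred, gt, [[1.0, 1.0]]) > 0)  # True
```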