Machine Learning Regression Algorithms Roundup: California Housing Prices

I. Exploring the Data

%matplotlib inline

import matplotlib.pyplot as plt

import pandas as pd

import numpy as np

import seaborn as sns

plt.style.use('fivethirtyeight')

import warnings

warnings.filterwarnings('ignore')

plt.rcParams['font.sans-serif'] = ['Microsoft YaHei']

plt.rcParams['font.serif'] = ['Microsoft YaHei']

from sklearn.datasets import fetch_california_housing

housing = fetch_california_housing()

X = housing.data

y = housing.target

X.shape

(20640, 8)

y.shape

(20640,)

housing.feature_names

['MedInc',

'HouseAge',

'AveRooms',

'AveBedrms',

'Population',

'AveOccup',

'Latitude',

'Longitude']

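| Feature | Meaning |
|---|---|
| MedInc | Median income in the block group |
| HouseAge | Median house age in the block group |
| AveRooms | Average number of rooms per household |
| AveBedrms | Average number of bedrooms per household |
| Population | Block group population |
| AveOccup | Average number of household members |
| Latitude | Block group latitude |
| Longitude | Block group longitude |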

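# Replace the dataset's abbreviations with descriptive names, keeping the original column order (latitude comes before longitude)
housing.feature_names = ['median_income', 'house_age', 'avg_rooms', 'avg_bedrooms', 'population', 'avg_occupancy', 'latitude', 'longitude']

housing.feature_names

['median_income', 'house_age', 'avg_rooms', 'avg_bedrooms', 'population', 'avg_occupancy', 'latitude', 'longitude']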

X

array([[ 8.3252 , 41. , 6.98412698, ..., 2.55555556,

37.88 , -122.23 ],

[ 8.3014 , 21. , 6.23813708, ..., 2.10984183,

37.86 , -122.22 ],

[ 7.2574 , 52. , 8.28813559, ..., 2.80225989,

37.85 , -122.24 ],

...,

[ 1.7 , 17. , 5.20554273, ..., 2.3256351 ,

39.43 , -121.22 ],

[ 1.8672 , 18. , 5.32951289, ..., 2.12320917,

39.43 , -121.32 ],

[ 2.3886 , 16. , 5.25471698, ..., 2.61698113,

39.37 , -121.24 ]])

X = pd.DataFrame(X,columns=housing.feature_names)

X.head(10)

| | median_income | house_age | avg_rooms | avg_bedrooms | population | avg_occupancy | latitude | longitude |
|---|---|---|---|---|---|---|---|---|
| 0 | 8.3252 | 41.0 | 6.984127 | 1.023810 | 322.0 | 2.555556 | 37.88 | -122.23 |
| 1 | 8.3014 | 21.0 | 6.238137 | 0.971880 | 2401.0 | 2.109842 | 37.86 | -122.22 |
| 2 | 7.2574 | 52.0 | 8.288136 | 1.073446 | 496.0 | 2.802260 | 37.85 | -122.24 |
| 3 | 5.6431 | 52.0 | 5.817352 | 1.073059 | 558.0 | 2.547945 | 37.85 | -122.25 |
| 4 | 3.8462 | 52.0 | 6.281853 | 1.081081 | 565.0 | 2.181467 | 37.85 | -122.25 |
| 5 | 4.0368 | 52.0 | 4.761658 | 1.103627 | 413.0 | 2.139896 | 37.85 | -122.25 |
| 6 | 3.6591 | 52.0 | 4.931907 | 0.951362 | 1094.0 | 2.128405 | 37.84 | -122.25 |
| 7 | 3.1200 | 52.0 | 4.797527 | 1.061824 | 1157.0 | 1.788253 | 37.84 | -122.25 |
| 8 | 2.0804 | 42.0 | 4.294118 | 1.117647 | 1206.0 | 2.026891 | 37.84 | -122.26 |
| 9 | 3.6912 | 52.0 | 4.970588 | 0.990196 | 1551.0 | 2.172269 | 37.84 | -122.25 |

# Target: median house value per block group, in units of $100,000
y = pd.DataFrame(y, columns=['house_price'])

y

| | house_price |
|---|---|
| 0 | 4.526 |
| 1 | 3.585 |
| 2 | 3.521 |
| 3 | 3.413 |
| 4 | 3.422 |
| ... | ... |
| 20635 | 0.781 |
| 20636 | 0.771 |
| 20637 | 0.923 |
| 20638 | 0.847 |
| 20639 | 0.894 |

20640 rows × 1 columns

data = pd.concat([X,y],axis = 1)

data.head()

| | median_income | house_age | avg_rooms | avg_bedrooms | population | avg_occupancy | latitude | longitude | house_price |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 8.3252 | 41.0 | 6.984127 | 1.023810 | 322.0 | 2.555556 | 37.88 | -122.23 | 4.526 |
| 1 | 8.3014 | 21.0 | 6.238137 | 0.971880 | 2401.0 | 2.109842 | 37.86 | -122.22 | 3.585 |
| 2 | 7.2574 | 52.0 | 8.288136 | 1.073446 | 496.0 | 2.802260 | 37.85 | -122.24 | 3.521 |
| 3 | 5.6431 | 52.0 | 5.817352 | 1.073059 | 558.0 | 2.547945 | 37.85 | -122.25 | 3.413 |
| 4 | 3.8462 | 52.0 | 6.281853 | 1.081081 | 565.0 | 2.181467 | 37.85 | -122.25 | 3.422 |

data.info()

RangeIndex: 20640 entries, 0 to 20639

Data columns (total 9 columns):

median_income 20640 non-null float64

house_age 20640 non-null float64

avg_rooms 20640 non-null float64

avg_bedrooms 20640 non-null float64

population 20640 non-null float64

avg_occupancy 20640 non-null float64

latitude 20640 non-null float64

longitude 20640 non-null float64

house_price 20640 non-null float64

dtypes: float64(9)

memory usage: 1.4 MB

data.describe()

data.hist(bins=40,figsize=(12,12))

plt.show()

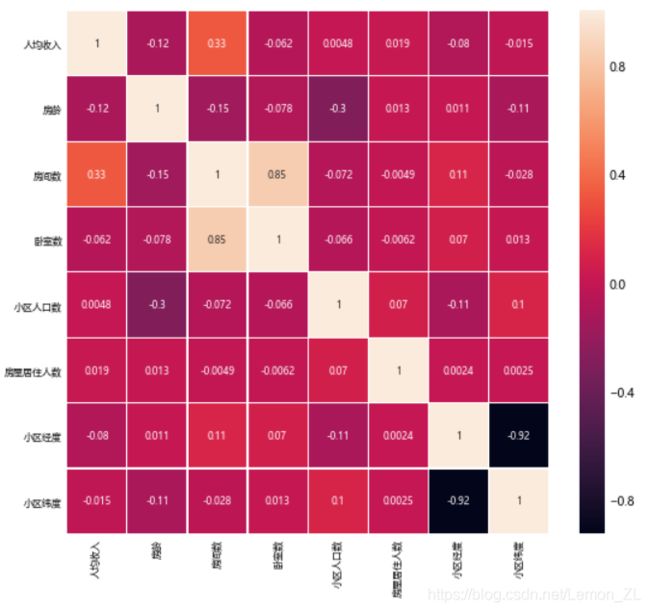

ax = sns.heatmap(X.corr(),annot=True,linewidths=0.2,annot_kws={'size':8})

fig=plt.gcf()

fig.set_size_inches(8,8)

plt.xticks(fontsize=8)

plt.yticks(fontsize=8)

bottom, top = ax.get_ylim()

ax.set_ylim(bottom + 0.5, top - 0.5)

plt.show()

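Data exploration: in this part we do not modify any data. We only use summary statistics and plots to build an intuitive picture of the dataset and to gather information for the later processing steps.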

data.isnull().sum()

median_income 0

house_age 0

avg_rooms 0

avg_bedrooms 0

population 0

avg_occupancy 0

latitude 0

longitude 0

house_price 0

dtype: int64

II. Data Preprocessing

- Missing values

- Outliers

- Duplicates

- Data transformation: standardization

- Encoding

- Principal component analysis (PCA)
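The checklist above is not worked through in this post (the missing-value check earlier shows the dataset has none). As a rough illustration only, the first four items could look like the sketch below on a small made-up array. The toy values, the median imputation, and the 1.5×IQR clipping rule are assumptions chosen for the example, not steps the original notebook performs; encoding and PCA are left out.

```python
import numpy as np

# Toy feature matrix (made-up values): two NaNs, one duplicated row, one outlier.
X_toy = np.array([
    [1.0, 10.0],
    [2.0, np.nan],
    [2.0, np.nan],   # duplicate of the previous row
    [3.0, 12.0],
    [4.0, 11.0],
    [100.0, 13.0],   # outlier in the first column
])

# 1) Missing values: fill NaNs with the column median (assumed strategy).
col_median = np.nanmedian(X_toy, axis=0)
X_filled = np.where(np.isnan(X_toy), col_median, X_toy)

# 2) Duplicates: keep the first occurrence of each distinct row.
_, first_idx = np.unique(X_filled, axis=0, return_index=True)
X_unique = X_filled[np.sort(first_idx)]

# 3) Outliers: clip each column to [Q1 - 1.5*IQR, Q3 + 1.5*IQR] (assumed rule).
q1, q3 = np.percentile(X_unique, [25, 75], axis=0)
iqr = q3 - q1
X_clipped = np.clip(X_unique, q1 - 1.5 * iqr, q3 + 1.5 * iqr)

# 4) Standardization: zero mean and unit variance per column.
X_scaled = (X_clipped - X_clipped.mean(axis=0)) / X_clipped.std(axis=0)

print(X_scaled.round(3))
```

In a real workflow the imputation and scaling statistics would normally be computed on the training split only and then applied to the test split.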

III. Modeling

Splitting into training and test sets

from sklearn.model_selection import train_test_split

Xtrain,Xtest,Ytrain,Ytest = train_test_split(X,y,test_size=0.2, random_state=40)

1. Linear regression

from sklearn.linear_model import LinearRegression

lr = LinearRegression()

lr =lr.fit(Xtrain,Ytrain)

lr.score(Xtest,Ytest)

0.6075794091011189
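For scikit-learn regressors, `score` returns the coefficient of determination R² on the data passed in, so every figure reported in this section is a test-set R². The small function below is a hypothetical helper with made-up numbers, not part of the original notebook; it only shows the calculation by hand.

```python
def r2_score_manual(y_true, y_pred):
    """R^2 = 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))   # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)           # total sum of squares
    return 1.0 - ss_res / ss_tot

y_true = [3.0, 5.0, 7.0, 9.0]

perfect = r2_score_manual(y_true, y_true)                  # exact predictions
baseline = r2_score_manual(y_true, [6.0] * 4)              # always predict the mean
worse = r2_score_manual(y_true, [9.0, 7.0, 5.0, 3.0])      # worse than predicting the mean

print(perfect, baseline, worse)  # 1.0 0.0 -3.0
```

A score of 1 means perfect predictions, 0 means no better than always predicting the mean, and negative values mean worse than that baseline.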

2. Decision tree

from sklearn.tree import DecisionTreeRegressor

dtr = DecisionTreeRegressor(random_state=40)

dtr = dtr.fit(Xtrain, Ytrain)

dtr.score(Xtest,Ytest)

0.615018515749356

3. Random forest

from sklearn.ensemble import RandomForestRegressor

rfr = RandomForestRegressor(random_state = 40)

rfr = rfr.fit(Xtrain, Ytrain)

rfr.score(Xtest, Ytest)

0.7748884071228732

4. Gradient boosted regression trees

from sklearn.ensemble import GradientBoostingRegressor

gbr = GradientBoostingRegressor(n_estimators=100,random_state=40)

gbr = gbr.fit(Xtrain, Ytrain)

gbr.score(Xtest, Ytest)

0.7835836333713624

5. AdaBoostRegressor

from sklearn.ensemble import AdaBoostRegressor

abr = AdaBoostRegressor(random_state=40,n_estimators=300,learning_rate=0.1)

abr = abr.fit(Xtrain, Ytrain)

abr.score(Xtest, Ytest)

0.4957214730873699

6. KNN

from sklearn.neighbors import KNeighborsRegressor

knn = KNeighborsRegressor()

knn = knn.fit(Xtrain, Ytrain)

knn.score(Xtest, Ytest)

0.6972888927183265

7. BaggingRegressor

from sklearn.ensemble import BaggingRegressor

bgr =BaggingRegressor()

bgr = bgr.fit(Xtrain, Ytrain)

bgr.score(Xtest, Ytest)

0.7885598734862586

8. XGBoost

import xgboost as xgb

# Use a separate name for the model so the xgboost module alias is not overwritten
xgbr = xgb.XGBRegressor(n_estimators=120, max_depth=7, learning_rate=0.3, objective='reg:squarederror')

xgbr = xgbr.fit(Xtrain, Ytrain)

xgbr.score(Xtest, Ytest)

0.8305799952865707

def try_different_method(model, method):
    # Fit the model, score it on the test set, and plot predictions against the true values
    model.fit(Xtrain, Ytrain)
    score = model.score(Xtest, Ytest)
    result = model.predict(Xtest)
    plt.figure()
    plt.plot(np.arange(len(result)), Ytest, "go-", label="True value")
    plt.plot(np.arange(len(result)), result, "ro-", label="Predicted value")
    plt.title(f"method:{method}---score:{score}")
    plt.legend(loc="best")
    plt.show()

from sklearn.linear_model import LinearRegression

lr = LinearRegression()

from sklearn.tree import DecisionTreeRegressor

dtr = DecisionTreeRegressor(random_state=40)

from sklearn.ensemble import RandomForestRegressor

rfr = RandomForestRegressor(random_state = 40)

from sklearn.ensemble import GradientBoostingRegressor

gbr = GradientBoostingRegressor(n_estimators=100,random_state=40)

from sklearn.ensemble import AdaBoostRegressor

abr = AdaBoostRegressor(random_state=40,n_estimators=300,learning_rate=0.1)

from sklearn.neighbors import KNeighborsRegressor

knn = KNeighborsRegressor(n_neighbors=6)

from sklearn.ensemble import BaggingRegressor

bgr =BaggingRegressor(random_state=40)

import xgboost as xgb

# Use a separate name for the model so the xgboost module alias is not overwritten
xgbr = xgb.XGBRegressor(n_estimators=120, max_depth=7, learning_rate=0.3, objective='reg:squarederror', random_state=40)

regressor = [lr, dtr, rfr, gbr, abr, knn, bgr, xgbr]

def different_model(model, name):
    # Fit on the training set and print the test-set R^2
    model.fit(Xtrain, Ytrain)
    score = model.score(Xtest, Ytest)
    print(f'{name}', score)

names = ['lr', 'dtr', 'rfr', 'gbr', 'abr', 'knn', 'bgr', 'xgb']

for model, label in zip(regressor, names):
    different_model(model, label)

lr 0.6075794091011189

dtr 0.615018515749356

rfr 0.7748884071228732

gbr 0.7835836333713624

abr 0.4957214730873699

knn 0.14199477279929695

bgr 0.7735241405394527

xgb 0.8305799952865707
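Each figure above comes from a single train/test split, so it depends on the particular `random_state`. One common way to get a less split-dependent comparison is k-fold cross-validation. The sketch below uses synthetic data from `make_regression` as a stand-in for the housing data (an assumption, so that the block runs without downloading anything) and only two of the models for brevity; its numbers say nothing about the housing results.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeRegressor

# Synthetic stand-in data (assumption): 300 samples, 8 features, linear signal plus noise.
X_demo, y_demo = make_regression(n_samples=300, n_features=8, noise=10.0, random_state=40)

models = {
    "lr": LinearRegression(),
    "dtr": DecisionTreeRegressor(random_state=40),
}

cv_scores = {}
for label, model in models.items():
    # For regressors the default scoring is the estimator's own score method, i.e. R^2.
    scores = cross_val_score(model, X_demo, y_demo, cv=5)
    cv_scores[label] = scores
    print(f"{label}: mean R^2 = {scores.mean():.3f}, std = {scores.std():.3f}")
```

Reporting the mean together with the spread across folds makes it easier to tell whether a gap between two models is larger than the split-to-split noise.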

Hyperparameter Tuning

from sklearn import tree

dtr = tree.DecisionTreeRegressor(random_state=40)

dtr = dtr.fit(Xtrain, Ytrain)

dtr.score(Xtest,Ytest)

score = []

C = range(1, 20)

for i in C:
    dtr = tree.DecisionTreeRegressor(max_depth=i,          # maximum depth of the tree
                                     min_samples_leaf=13,  # minimum number of samples required at a leaf
                                     random_state=40)
    dtr = dtr.fit(Xtrain, Ytrain)
    score.append(dtr.score(Xtest, Ytest))

plt.plot(C, score)

print('max score:', max(score), 'max_depth:', score.index(max(score)) + 1)

max score: 0.7269488014943908 max_depth: 12

![image: test score versus max_depth](output_42_1.png)
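Because the depth search here is scored on the test set, the best score it reports is an optimistic estimate: the same data both picks the depth and grades it. A possible alternative, sketched below on synthetic stand-in data (an assumption; the split sizes are also arbitrary choices for the example), is to choose the depth on a separate validation split and touch the test set only once at the end.

```python
from sklearn.datasets import make_regression
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic stand-in data (assumption), not the housing data.
X_demo, y_demo = make_regression(n_samples=600, n_features=8, noise=20.0, random_state=40)

# Hold out the test set first, then split the remainder into train and validation.
X_rest, X_test, y_rest, y_test = train_test_split(X_demo, y_demo, test_size=0.2, random_state=40)
X_tr, X_val, y_tr, y_val = train_test_split(X_rest, y_rest, test_size=0.25, random_state=40)

depths = range(1, 20)
val_scores = []
for depth in depths:
    model = DecisionTreeRegressor(max_depth=depth, min_samples_leaf=13, random_state=40)
    model.fit(X_tr, y_tr)
    val_scores.append(model.score(X_val, y_val))  # selection uses validation data only

best_depth = depths[val_scores.index(max(val_scores))]

# Refit with the chosen depth on train + validation, then evaluate once on the test set.
final = DecisionTreeRegressor(max_depth=best_depth, min_samples_leaf=13, random_state=40)
final.fit(X_rest, y_rest)
test_score = final.score(X_test, y_test)
print("best max_depth:", best_depth, "test R^2:", test_score)
```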

from sklearn.ensemble import RandomForestRegressor

rfr = RandomForestRegressor(random_state = 40)

rfr = rfr.fit(Xtrain, Ytrain)

rfr.score(Xtest, Ytest)

score = []

C = range(140, 240, 20)

for i in C:
    rfr = RandomForestRegressor(n_estimators=i,    # number of base estimators (trees)
                                random_state=40    # random seed
                                # , n_jobs=-1      # number of CPU cores to use; more cores train faster, and n_jobs=-1 uses all of them
                                )
    rfr = rfr.fit(Xtrain, Ytrain)
    score.append(rfr.score(Xtest, Ytest))

plt.plot(C, score)

print('max score:', max(score))

max score: 0.80334868183468

![image: test score versus n_estimators](output_43_1.png)

rfr = RandomForestRegressor(n_estimators=200,random_state = 40)

rfr = rfr.fit(Xtrain, Ytrain)

rfr.score(Xtest, Ytest)

0.80334868183468

Grid Search

from sklearn.model_selection import GridSearchCV

tree_param_grid = {'min_samples_split': [3, 6, 9], 'n_estimators': [10, 50, 100]}

grid = GridSearchCV(RandomForestRegressor(),param_grid=tree_param_grid, cv=5)

grid.fit(Xtrain, Ytrain)

GridSearchCV(cv=5, error_score='raise-deprecating',

estimator=RandomForestRegressor(bootstrap=True, criterion='mse',

max_depth=None,

max_features='auto',

max_leaf_nodes=None,

min_impurity_decrease=0.0,

min_impurity_split=None,

min_samples_leaf=1,

min_samples_split=2,

min_weight_fraction_leaf=0.0,

n_estimators='warn', n_jobs=None,

oob_score=False, random_state=None,

verbose=0, warm_start=False),

iid='warn', n_jobs=None,

param_grid={'min_samples_split': [3, 6, 9],

'n_estimators': [10, 50, 100]},

pre_dispatch='2*n_jobs', refit=True, return_train_score=False,

scoring=None, verbose=0)

grid.best_score_

0.7992116401881113

grid.best_params_

{'min_samples_split': 6, 'n_estimators': 100}
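Note that `best_score_` is the mean cross-validated R² of the best parameter combination, computed on the training data only; it is not a test-set score. With the default `refit=True`, the search object is refit on the whole training set with the best parameters and can then be scored on held-out data. The sketch below shows this on synthetic stand-in data with a smaller grid (both are assumptions made to keep the example quick and self-contained).

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in data (assumption), not the housing data.
X_demo, y_demo = make_regression(n_samples=300, n_features=8, noise=20.0, random_state=40)
X_tr, X_te, y_tr, y_te = train_test_split(X_demo, y_demo, test_size=0.2, random_state=40)

# Reduced grid (assumption) so the example runs in a few seconds.
param_grid = {"min_samples_split": [3, 6], "n_estimators": [10, 30]}
grid_demo = GridSearchCV(RandomForestRegressor(random_state=40), param_grid=param_grid, cv=3)
grid_demo.fit(X_tr, y_tr)

# One mean cross-validated score per parameter combination.
mean_scores = grid_demo.cv_results_["mean_test_score"]

print("best params:", grid_demo.best_params_)
print("mean CV R^2 of best params:", grid_demo.best_score_)
print("test R^2 of the refit model:", grid_demo.score(X_te, y_te))
```

Fixing `random_state` on the estimator inside the search keeps the comparison between parameter combinations from being blurred by forest-to-forest randomness.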

Is Standardization Always Necessary?

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

X=scaler.fit_transform(X)

X

array([[ 2.34476576, 0.98214266, 0.62855945, ..., -0.04959654,

1.05254828, -1.32783522],

[ 2.33223796, -0.60701891, 0.32704136, ..., -0.09251223,

1.04318455, -1.32284391],

[ 1.7826994 , 1.85618152, 1.15562047, ..., -0.02584253,

1.03850269, -1.33282653],

...,

[-1.14259331, -0.92485123, -0.09031802, ..., -0.0717345 ,

1.77823747, -0.8237132 ],

[-1.05458292, -0.84539315, -0.04021111, ..., -0.09122515,

1.77823747, -0.87362627],

[-0.78012947, -1.00430931, -0.07044252, ..., -0.04368215,

1.75014627, -0.83369581]])

from sklearn.model_selection import train_test_split

Xtrain,Xtest,Ytrain,Ytest = train_test_split(X,y,test_size=0.2, random_state=40)

from sklearn import tree

dtr = tree.DecisionTreeRegressor(random_state=40)

dtr = dtr.fit(Xtrain, Ytrain)

dtr.score(Xtest,Ytest)

0.6145069972322226

from sklearn.preprocessing import MinMaxScaler

X = housing.data

scaler = MinMaxScaler()

X = scaler.fit_transform(X)

from sklearn.model_selection import train_test_split

Xtrain,Xtest,Ytrain,Ytest = train_test_split(X,y,test_size=0.2, random_state=40)

from sklearn import tree

dtr = tree.DecisionTreeRegressor(random_state=40)

dtr = dtr.fit(Xtrain, Ytrain)

dtr.score(Xtest,Ytest)

0.6161125643854056
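The decision tree scores with and without scaling are nearly identical, which is what one would expect: a tree only compares a feature with a threshold, so rescaling a feature by a positive factor and shifting it does not change which samples can be separated (small differences like the ones above can come from where thresholds land and how ties are broken). Distance-based models such as KNN are different, because a feature with a large numeric range dominates the distance. The NumPy sketch below illustrates both points with made-up numbers; `best_split_mask` is a hypothetical helper written for this example, not scikit-learn's implementation.

```python
import numpy as np

def best_split_mask(x, y):
    """Boolean mask of the samples sent left by the SSE-minimising threshold on x."""
    order = np.argsort(x)
    best_sse, best_mask = np.inf, None
    for i in range(1, len(x)):
        left, right = order[:i], order[i:]
        sse = ((y[left] - y[left].mean()) ** 2).sum() + ((y[right] - y[right].mean()) ** 2).sum()
        if sse < best_sse:
            mask = np.zeros(len(x), dtype=bool)
            mask[left] = True
            best_sse, best_mask = sse, mask
    return best_mask

def standardize(a):
    return (a - a.mean(axis=0)) / a.std(axis=0)

# Tree-style split: the chosen partition is the same before and after scaling.
x = np.array([1000.0, 3000.0, 2000.0, 8000.0, 9000.0, 7000.0])
y = np.array([1.0, 1.2, 1.1, 3.0, 3.2, 3.1])
mask_raw = best_split_mask(x, y)
mask_std = best_split_mask(standardize(x), y)
same_partition = np.array_equal(mask_raw, mask_std)

# Nearest neighbour: the second column has a much larger range and dominates raw distances.
X_train = np.array([[1.0, 1000.0], [9.0, 1010.0], [2.0, 3000.0], [8.0, 5000.0]])
query = np.array([1.2, 1009.0])
nn_raw = int(np.argmin(np.linalg.norm(X_train - query, axis=1)))

mean, std = X_train.mean(axis=0), X_train.std(axis=0)
nn_scaled = int(np.argmin(np.linalg.norm((X_train - mean) / std - (query - mean) / std, axis=1)))

print("same tree partition:", same_partition)
print("nearest neighbour (raw):", nn_raw, "| nearest neighbour (scaled):", nn_scaled)
```

So scaling is not required for tree-based models, while it usually matters for KNN and other distance-based methods.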