YOLOv5 notes--A rough understanding of the RKNN inference deployment source code

1--Basics

① YOLOv5 output

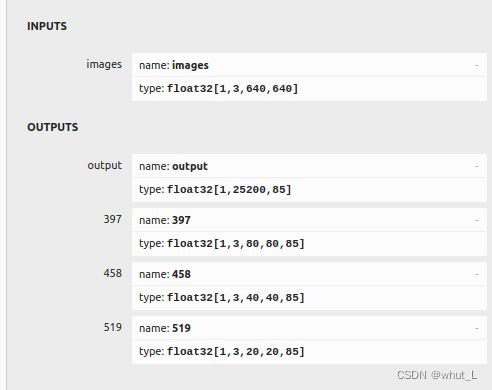

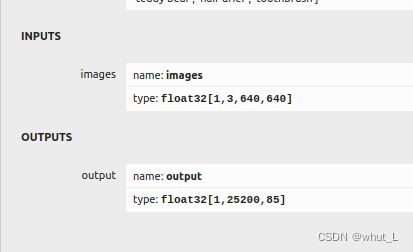

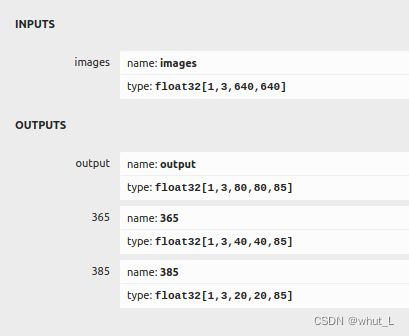

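The output generally has the shape a × b × c × 85, where a*b*c is the number of boxes and the 85 values per box cover the box position (x, y, w, h), the confidence Pc, and the predicted probabilities c1, ..., c80 of the 80 classes. The figures above show the output information of different YOLOv5 versions.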
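As a rough illustration of this layout, the sketch below builds dummy arrays, counts the boxes, and splits one 85-value vector. The head shapes are an assumption (a 640×640 input with three anchors per cell on 80×80, 40×40 and 20×20 grids), and the zero-filled arrays only stand in for real predictions.

```python
import numpy as np

NUM_CLASSES = 80
# Assumed head shapes for a 640x640 input: (anchors, grid_h, grid_w, 5 + classes).
head_shapes = [(3, 80, 80, 5 + NUM_CLASSES),
               (3, 40, 40, 5 + NUM_CLASSES),
               (3, 20, 20, 5 + NUM_CLASSES)]

# Dummy outputs standing in for real network predictions.
heads = [np.zeros(shape, dtype=np.float32) for shape in head_shapes]

# a * b * c boxes per head; every box carries 85 values.
boxes_per_head = [int(np.prod(h.shape[:-1])) for h in heads]
total_boxes = sum(boxes_per_head)

# Split one prediction vector into position, confidence and class probabilities.
pred = heads[0][0, 0, 0]
xywh, pc, class_probs = pred[:4], pred[4], pred[5:]

print(boxes_per_head, total_boxes)
print(xywh.shape, class_probs.shape)
```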

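② Filtering anchor boxes by threshold

Two ways of filtering anchor boxes, in brief:

A: Use the confidence (box_confidence, i.e. Pc) and the predicted class probabilities (box_class_probs, i.e. c1, ..., c80) to compute the anchor box scores (box_scores, typically their product). If the highest score is above the filtering threshold (threshold), the anchor box is kept; otherwise it is filtered out.

B: Pass the confidence (box_confidence, i.e. Pc) through a nonlinear activation function such as Sigmoid and compare the result with the filtering threshold: the box is kept if the result is higher and filtered out if it is lower. (This is the approach provided by RKNN.)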
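The two approaches can be sketched side by side as follows. This is only an illustration: the function names `filter_by_score` and `filter_by_confidence` and the toy numbers are made up here, and method A assumes the score is the product of confidence and class probability.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def filter_by_score(box_confidence, box_class_probs, threshold):
    """Method A: keep boxes whose best class score (Pc * class prob) reaches the threshold."""
    box_scores = box_confidence[:, None] * box_class_probs   # shape (N, num_classes)
    best_class = np.argmax(box_scores, axis=-1)
    best_score = np.max(box_scores, axis=-1)
    keep = best_score >= threshold
    return keep, best_class, best_score

def filter_by_confidence(raw_confidence, threshold):
    """Method B: keep boxes whose sigmoid-activated confidence reaches the threshold."""
    return sigmoid(raw_confidence) >= threshold

# Three toy boxes with two classes each.
conf = np.array([0.9, 0.9, 0.2])
probs = np.array([[0.8, 0.1], [0.3, 0.4], [0.9, 0.05]])
keep_a, cls_a, score_a = filter_by_score(conf, probs, threshold=0.5)

# Raw (pre-sigmoid) confidences for method B.
keep_b = filter_by_confidence(np.array([2.0, 0.5, -1.0]), threshold=0.5)
print(keep_a, cls_a, keep_b)
```

Note that method B ignores the class probabilities when deciding which boxes survive, so a box with high objectness but a weak class prediction still passes.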

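③ Non-maximum suppression (NMS)

Anchor boxes are filtered further by computing the intersection over union (IoU).

About IoU: the IoU is the ratio of the intersection area of two rectangular boxes to the area of their union.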
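A minimal sketch of this definition, assuming boxes are given as (x1, y1, x2, y2) corner coordinates (the function name `iou` is chosen here for illustration):

```python
def iou(box_a, box_b):
    """IoU of two boxes given as (x1, y1, x2, y2) corner coordinates."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# Two 2x2 squares overlapping in a 1x1 square: intersection 1, union 7.
print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
```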

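One way YOLOv5 uses NMS to filter anchor boxes, in brief: compute the IoU between the highest-scoring box and each remaining box; a box is kept if its IoU ≤ NMS_THRESH and removed otherwise.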
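The rule above can be sketched as a greedy loop. This is a simplified illustration rather than the demo's exact code: the function name `nms`, the corner-format boxes and the toy data are assumptions.

```python
import numpy as np

def nms(boxes, scores, iou_thresh):
    """Greedy NMS over (x1, y1, x2, y2) boxes; returns indices of kept boxes."""
    x1, y1, x2, y2 = boxes[:, 0], boxes[:, 1], boxes[:, 2], boxes[:, 3]
    areas = (x2 - x1) * (y2 - y1)
    order = scores.argsort()[::-1]          # highest score first
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        # Intersection of the current best box with every remaining box.
        w = np.maximum(0.0, np.minimum(x2[i], x2[rest]) - np.maximum(x1[i], x1[rest]))
        h = np.maximum(0.0, np.minimum(y2[i], y2[rest]) - np.maximum(y1[i], y1[rest]))
        inter = w * h
        iou = inter / (areas[i] + areas[rest] - inter)
        # Boxes with IoU <= threshold survive to the next round.
        order = rest[iou <= iou_thresh]
    return keep

boxes = np.array([[0, 0, 10, 10], [1, 1, 11, 11], [20, 20, 30, 30]], dtype=float)
scores = np.array([0.9, 0.8, 0.7])
kept = nms(boxes, scores, iou_thresh=0.6)
print(kept)
```

In this toy input the first two boxes overlap with an IoU of 81/119 (about 0.68), so the lower-scoring one is suppressed, while the third box does not overlap and is kept.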

2--RKNN source code for deploying YOLOv5

Project address: rknn-toolkit2

import os
import urllib
import traceback
import time
import sys
import numpy as np
import cv2
from rknn.api import RKNN

ONNX_MODEL = 'yolov5s.onnx'
RKNN_MODEL = 'yolov5s.rknn'
IMG_PATH = './bus.jpg'
DATASET = './dataset.txt'

QUANTIZE_ON = True

BOX_THESH = 0.5
NMS_THRESH = 0.6
IMG_SIZE = 640

CLASSES = ("person", "bicycle", "car", "motorbike ", "aeroplane ", "bus ", "train", "truck ", "boat", "traffic light",
           "fire hydrant", "stop sign ", "parking meter", "bench", "bird", "cat", "dog ", "horse ", "sheep", "cow", "elephant",
           "bear", "zebra ", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee", "skis", "snowboard", "sports ball", "kite",
           "baseball bat", "baseball glove", "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup", "fork", "knife ",
           "spoon", "bowl", "banana", "apple", "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza ", "donut", "cake", "chair", "sofa",
           "pottedplant", "bed", "diningtable", "toilet ", "tvmonitor", "laptop ", "mouse ", "remote ", "keyboard ", "cell phone", "microwave ",
           "oven ", "toaster", "sink", "refrigerator ", "book", "clock", "vase", "scissors ", "teddy bear ", "hair drier", "toothbrush ")

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def xywh2xyxy(x):
    # Convert [x, y, w, h] to [x1, y1, x2, y2]
    y = np.copy(x)
    y[:, 0] = x[:, 0] - x[:, 2] / 2  # top left x
    y[:, 1] = x[:, 1] - x[:, 3] / 2  # top left y
    y[:, 2] = x[:, 0] + x[:, 2] / 2  # bottom right x
    y[:, 3] = x[:, 1] + x[:, 3] / 2  # bottom right y
    return y

def process(input, mask, anchors):
    anchors = [anchors[i] for i in mask]
    grid_h, grid_w = map(int, input.shape[0:2])

    box_confidence = sigmoid(input[..., 4])
    box_confidence = np.expand_dims(box_confidence, axis=-1)

    box_class_probs = sigmoid(input[..., 5:])

    box_xy = sigmoid(input[..., :2])*2 - 0.5

    col = np.tile(np.arange(0, grid_w), grid_w).reshape(-1, grid_w)
    row = np.tile(np.arange(0, grid_h).reshape(-1, 1), grid_h)
    col = col.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)
    row = row.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)
    grid = np.concatenate((col, row), axis=-1)
    box_xy += grid
    box_xy *= int(IMG_SIZE/grid_h)

    box_wh = pow(sigmoid(input[..., 2:4])*2, 2)
    box_wh = box_wh * anchors

    box = np.concatenate((box_xy, box_wh), axis=-1)

    return box, box_confidence, box_class_probs

def filter_boxes(boxes, box_confidences, box_class_probs):
    """Filter boxes with box threshold. It's a bit different with origin yolov5 post process!

    # Arguments
        boxes: ndarray, boxes of objects.
        box_confidences: ndarray, confidences of objects.
        box_class_probs: ndarray, class_probs of objects.

    # Returns
        boxes: ndarray, filtered boxes.
        classes: ndarray, classes for boxes.
        scores: ndarray, scores for boxes.
    """
    box_classes = np.argmax(box_class_probs, axis=-1)
    box_class_scores = np.max(box_class_probs, axis=-1)
    pos = np.where(box_confidences[..., 0] >= BOX_THESH)

    boxes = boxes[pos]
    classes = box_classes[pos]
    scores = box_class_scores[pos]

    return boxes, classes, scores

def nms_boxes(boxes, scores):
    """Suppress non-maximal boxes.

    # Arguments
        boxes: ndarray, boxes of objects.
        scores: ndarray, scores of objects.

    # Returns
        keep: ndarray, index of effective boxes.
    """
    x = boxes[:, 0]
    y = boxes[:, 1]
    w = boxes[:, 2] - boxes[:, 0]
    h = boxes[:, 3] - boxes[:, 1]

    areas = w * h
    order = scores.argsort()[::-1]

    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(i)

        xx1 = np.maximum(x[i], x[order[1:]])
        yy1 = np.maximum(y[i], y[order[1:]])
        xx2 = np.minimum(x[i] + w[i], x[order[1:]] + w[order[1:]])
        yy2 = np.minimum(y[i] + h[i], y[order[1:]] + h[order[1:]])

        w1 = np.maximum(0.0, xx2 - xx1 + 0.00001)
        h1 = np.maximum(0.0, yy2 - yy1 + 0.00001)
        inter = w1 * h1

        ovr = inter / (areas[i] + areas[order[1:]] - inter)
        inds = np.where(ovr <= NMS_THRESH)[0]
        order = order[inds + 1]
    keep = np.array(keep)
    return keep

def yolov5_post_process(input_data):
    masks = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
    anchors = [[10, 13], [16, 30], [33, 23], [30, 61], [62, 45],
               [59, 119], [116, 90], [156, 198], [373, 326]]

    boxes, classes, scores = [], [], []
    for input, mask in zip(input_data, masks):
        b, c, s = process(input, mask, anchors)
        b, c, s = filter_boxes(b, c, s)
        boxes.append(b)
        classes.append(c)
        scores.append(s)

    boxes = np.concatenate(boxes)
    boxes = xywh2xyxy(boxes)
    classes = np.concatenate(classes)
    scores = np.concatenate(scores)

    nboxes, nclasses, nscores = [], [], []
    for c in set(classes):
        inds = np.where(classes == c)
        b = boxes[inds]
        c = classes[inds]
        s = scores[inds]

        keep = nms_boxes(b, s)

        nboxes.append(b[keep])
        nclasses.append(c[keep])
        nscores.append(s[keep])

    if not nclasses and not nscores:
        return None, None, None

    boxes = np.concatenate(nboxes)
    classes = np.concatenate(nclasses)
    scores = np.concatenate(nscores)

    return boxes, classes, scores

def draw(image, boxes, scores, classes):
    """Draw the boxes on the image.

    # Argument:
        image: original image.
        boxes: ndarray, boxes of objects.
        classes: ndarray, classes of objects.
        scores: ndarray, scores of objects.
        all_classes: all classes name.
    """
    for box, score, cl in zip(boxes, scores, classes):
        top, left, right, bottom = box
        print('class: {}, score: {}'.format(CLASSES[cl], score))
        print('box coordinate left,top,right,down: [{}, {}, {}, {}]'.format(top, left, right, bottom))
        top = int(top)
        left = int(left)
        right = int(right)
        bottom = int(bottom)

        cv2.rectangle(image, (top, left), (right, bottom), (255, 0, 0), 2)
        cv2.putText(image, '{0} {1:.2f}'.format(CLASSES[cl], score),
                    (top, left - 6),
                    cv2.FONT_HERSHEY_SIMPLEX,
                    0.6, (0, 0, 255), 2)

def letterbox(im, new_shape=(640, 640), color=(0, 0, 0)):
    # Resize and pad image while meeting stride-multiple constraints
    shape = im.shape[:2]  # current shape [height, width]
    if isinstance(new_shape, int):
        new_shape = (new_shape, new_shape)

    # Scale ratio (new / old)
    r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])

    # Compute padding
    ratio = r, r  # width, height ratios
    new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))
    dw, dh = new_shape[1] - new_unpad[0], new_shape[0] - new_unpad[1]  # wh padding

    dw /= 2  # divide padding into 2 sides
    dh /= 2

    if shape[::-1] != new_unpad:  # resize
        im = cv2.resize(im, new_unpad, interpolation=cv2.INTER_LINEAR)
    top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))
    left, right = int(round(dw - 0.1)), int(round(dw + 0.1))
    im = cv2.copyMakeBorder(im, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color)  # add border
    return im, ratio, (dw, dh)

if __name__ == '__main__':

    # Create RKNN object
    rknn = RKNN(verbose=True)

    # pre-process config
    print('--> Config model')
    rknn.config(mean_values=[[0, 0, 0]], std_values=[[255, 255, 255]])
    print('done')

    # Load ONNX model
    print('--> Loading model')
    ret = rknn.load_onnx(model=ONNX_MODEL, outputs=['378', '439', '500'])
    if ret != 0:
        print('Load model failed!')
        exit(ret)
    print('done')

    # Build model
    print('--> Building model')
    ret = rknn.build(do_quantization=QUANTIZE_ON, dataset=DATASET)
    if ret != 0:
        print('Build model failed!')
        exit(ret)
    print('done')

    # Export RKNN model
    print('--> Export rknn model')
    ret = rknn.export_rknn(RKNN_MODEL)
    if ret != 0:
        print('Export rknn model failed!')
        exit(ret)
    print('done')

    # Init runtime environment
    print('--> Init runtime environment')
    ret = rknn.init_runtime()
    # ret = rknn.init_runtime('rk3566')
    if ret != 0:
        print('Init runtime environment failed!')
        exit(ret)
    print('done')

    # Set inputs
    img = cv2.imread(IMG_PATH)
    # img, ratio, (dw, dh) = letterbox(img, new_shape=(IMG_SIZE, IMG_SIZE))
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    img = cv2.resize(img, (IMG_SIZE, IMG_SIZE))

    # Inference
    print('--> Running model')
    outputs = rknn.inference(inputs=[img])
    np.save('./onnx_yolov5_0.npy', outputs[0])
    np.save('./onnx_yolov5_1.npy', outputs[1])
    np.save('./onnx_yolov5_2.npy', outputs[2])
    print('done')

    # post process
    input0_data = outputs[0]
    input1_data = outputs[1]
    input2_data = outputs[2]

    input0_data = input0_data.reshape([3, -1]+list(input0_data.shape[-2:]))
    input1_data = input1_data.reshape([3, -1]+list(input1_data.shape[-2:]))
    input2_data = input2_data.reshape([3, -1]+list(input2_data.shape[-2:]))

    input_data = list()
    input_data.append(np.transpose(input0_data, (2, 3, 0, 1)))
    input_data.append(np.transpose(input1_data, (2, 3, 0, 1)))
    input_data.append(np.transpose(input2_data, (2, 3, 0, 1)))

    boxes, classes, scores = yolov5_post_process(input_data)

    img_1 = cv2.cvtColor(img, cv2.COLOR_RGB2BGR)
    if boxes is not None:
        draw(img_1, boxes, scores, classes)
    # show output
    # cv2.imshow("post process result", img_1)
    # cv2.waitKey(0)
    # cv2.destroyAllWindows()

    rknn.release()

3--A rough reading of the code

① Model and data:

ONNX_MODEL = 'yolov5s.onnx'
RKNN_MODEL = 'yolov5s.rknn'
IMG_PATH = './bus.jpg'
DATASET = './dataset.txt'

The rknn open-source project provides a yolov5s.onnx file for testing.

②模型导入

# Load ONNX model

print('--> Loading model')

ret = rknn.load_onnx(model=ONNX_MODEL, outputs=['378', '439', '500'])

if ret != 0:

print('Load model failed!')

exit(ret)

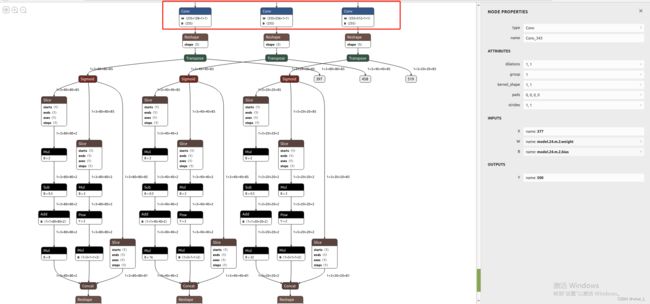

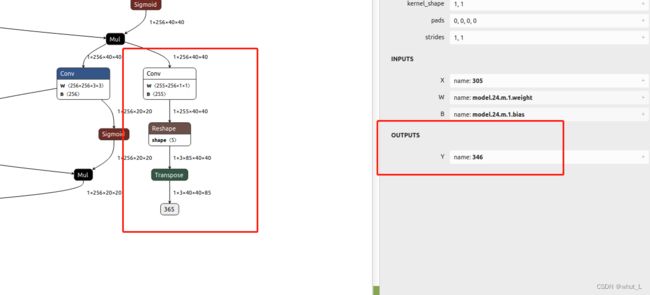

print('done')这段代码截取导入了onnx模型的三个输出,其编号为'378'、‘439’、‘500’,即上图中红框的三个输出。
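To see what the demo's main block then does with each of these three outputs, the sketch below replays its reshape and transpose steps on a dummy array. The raw shape (1, 255, 80, 80) is an assumption for the first output with a 640×640 input and should be checked against the actual model.

```python
import numpy as np

# Assumed raw shape of the first selected output for a 640x640 input:
# (batch, anchors * 85, grid_h, grid_w) = (1, 255, 80, 80).
raw = np.arange(1 * 255 * 80 * 80, dtype=np.float32).reshape(1, 255, 80, 80)

# Same two steps as the demo's main block.
per_anchor = raw.reshape([3, -1] + list(raw.shape[-2:]))   # (3, 85, 80, 80)
grid_first = np.transpose(per_anchor, (2, 3, 0, 1))        # (80, 80, 3, 85)

# Element [row, col, anchor, k] comes from channel anchor * 85 + k.
row, col, anchor, k = 5, 7, 2, 4
assert grid_first[row, col, anchor, k] == raw[0, anchor * 85 + k, row, col]
print(per_anchor.shape, grid_first.shape)
```

After this step the last axis holds the 85 values of one box, which is the layout that `process` indexes with `input[..., 4]` and `input[..., 5:]`.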

③ Post-processing module

def filter_boxes(boxes, box_confidences, box_class_probs):
    """Filter boxes with box threshold. It's a bit different with origin yolov5 post process!

    # Arguments
        boxes: ndarray, boxes of objects.
        box_confidences: ndarray, confidences of objects.
        box_class_probs: ndarray, class_probs of objects.

    # Returns
        boxes: ndarray, filtered boxes.
        classes: ndarray, classes for boxes.
        scores: ndarray, scores for boxes.
    """
    box_classes = np.argmax(box_class_probs, axis=-1)
    box_class_scores = np.max(box_class_probs, axis=-1)
    pos = np.where(box_confidences[..., 0] >= BOX_THESH)

    boxes = boxes[pos]
    classes = box_classes[pos]
    scores = box_class_scores[pos]

    return boxes, classes, scores

def nms_boxes(boxes, scores):
    """Suppress non-maximal boxes.

    # Arguments
        boxes: ndarray, boxes of objects.
        scores: ndarray, scores of objects.

    # Returns
        keep: ndarray, index of effective boxes.
    """
    x = boxes[:, 0]
    y = boxes[:, 1]
    w = boxes[:, 2] - boxes[:, 0]
    h = boxes[:, 3] - boxes[:, 1]

    areas = w * h
    order = scores.argsort()[::-1]

    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(i)

        xx1 = np.maximum(x[i], x[order[1:]])
        yy1 = np.maximum(y[i], y[order[1:]])
        xx2 = np.minimum(x[i] + w[i], x[order[1:]] + w[order[1:]])
        yy2 = np.minimum(y[i] + h[i], y[order[1:]] + h[order[1:]])

        w1 = np.maximum(0.0, xx2 - xx1 + 0.00001)
        h1 = np.maximum(0.0, yy2 - yy1 + 0.00001)
        inter = w1 * h1

        ovr = inter / (areas[i] + areas[order[1:]] - inter)
        inds = np.where(ovr <= NMS_THRESH)[0]
        order = order[inds + 1]
    keep = np.array(keep)
    return keep

def yolov5_post_process(input_data):
    masks = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
    anchors = [[10, 13], [16, 30], [33, 23], [30, 61], [62, 45],
               [59, 119], [116, 90], [156, 198], [373, 326]]

    boxes, classes, scores = [], [], []
    for input, mask in zip(input_data, masks):
        b, c, s = process(input, mask, anchors)
        b, c, s = filter_boxes(b, c, s)
        boxes.append(b)
        classes.append(c)
        scores.append(s)

    boxes = np.concatenate(boxes)
    boxes = xywh2xyxy(boxes)
    classes = np.concatenate(classes)
    scores = np.concatenate(scores)

    nboxes, nclasses, nscores = [], [], []
    for c in set(classes):
        inds = np.where(classes == c)
        b = boxes[inds]
        c = classes[inds]
        s = scores[inds]

        keep = nms_boxes(b, s)

        nboxes.append(b[keep])
        nclasses.append(c[keep])
        nscores.append(s[keep])

    if not nclasses and not nscores:
        return None, None, None

    boxes = np.concatenate(nboxes)
    classes = np.concatenate(nclasses)
    scores = np.concatenate(nscores)

    return boxes, classes, scores

The post-processing consists mainly of two parts: threshold filtering (filter_boxes) and NMS (nms_boxes), with NMS applied separately to the boxes of each class.
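Before these two filtering stages run, the demo's `process` function turns the raw outputs into pixel-space boxes. The sketch below applies the same arithmetic to a single grid cell. The helper name `decode_cell` and the sample values are illustrative only, and the stride of 8 assumes the 80×80 grid of a 640×640 input.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def decode_cell(raw_xywh, col, row, stride, anchor_wh):
    """Decode one raw (tx, ty, tw, th) prediction into a pixel-space (cx, cy, w, h) box."""
    raw_xywh = np.asarray(raw_xywh, dtype=np.float64)
    # Centre: offset in (-0.5, 1.5) around the cell index, then scaled by the stride.
    xy = (sigmoid(raw_xywh[:2]) * 2 - 0.5 + np.array([col, row])) * stride
    # Size: factor in (0, 4) applied to the anchor width and height.
    wh = (sigmoid(raw_xywh[2:4]) * 2) ** 2 * np.asarray(anchor_wh, dtype=np.float64)
    return np.concatenate((xy, wh))

# Zero logits give sigmoid = 0.5: the box sits at the cell centre with the anchor's size.
box = decode_cell([0, 0, 0, 0], col=3, row=2, stride=8, anchor_wh=(10, 13))
print(box)
```

The result is still in centre format (cx, cy, w, h), which is why `yolov5_post_process` calls `xywh2xyxy` before running NMS.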

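4--Using a self-exported yolov5.onnx model

① Export the yolov5.onnx model with the export.py script provided by yolov5

python export.py --weights yolov5s.pt --img-size 640 --include onnx --train

② Then simplify the exported yolov5.onnx model with onnxsim

Installing and using onnxsim: onnx-simplifier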

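③ To use the deployment and conversion code provided by rknn unchanged, pick the outputs of the appropriate layers in the simplified ONNX model and use them in place of '378', '439' and '500' in the code below, for example '326', '346' and '366' in the ONNX example in the figure below.

ret = rknn.load_onnx(model=ONNX_MODEL, outputs=['378', '439', '500'])

Download address of the ONNX model in the figure above: code: q2dl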
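One possible way to shortlist candidate output names without reading them off a graph viewer is sketched below. It rests on an assumption that should be verified visually (for example in Netron): that the three detection-head outputs are the outputs of the last three Conv nodes in the simplified graph. The helper names, the toy node list and the file name `yolov5s-sim.onnx` are hypothetical.

```python
from types import SimpleNamespace

def last_conv_outputs(nodes, count=3):
    """Return the output names of the last `count` Conv nodes, in graph order."""
    conv_outputs = [node.output[0] for node in nodes if node.op_type == "Conv"]
    return conv_outputs[-count:]

def load_nodes(onnx_path):
    """Load graph nodes from an ONNX file (requires the `onnx` package)."""
    import onnx  # imported lazily so the helper above works without it
    return list(onnx.load(onnx_path).graph.node)

# Toy stand-in for a graph; with a real file one could call
# last_conv_outputs(load_nodes("yolov5s-sim.onnx")) instead.
toy_nodes = [
    SimpleNamespace(op_type="Conv", output=["100"]),
    SimpleNamespace(op_type="Conv", output=["326"]),
    SimpleNamespace(op_type="Reshape", output=["327"]),
    SimpleNamespace(op_type="Conv", output=["346"]),
    SimpleNamespace(op_type="Conv", output=["366"]),
    SimpleNamespace(op_type="Reshape", output=["367"]),
]
candidates = last_conv_outputs(toy_nodes)
print(candidates)
```

The returned names would then be passed as the `outputs` argument of `rknn.load_onnx`, after confirming in the graph that they really are the three head convolutions.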

To be continued!

5--Problems encountered when running the rknn demo

① Error: E build: ImportError: /lib/x86_64-linux-gnu/libm.so.6: version `GLIBC_2.29' not found
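To confirm that an outdated glibc is really the cause, it may help to check the installed version first. The sketch below uses Python's standard `platform.libc_ver()`. The helper names `version_tuple` and `glibc_satisfies` are made up for this example.

```python
import platform

def version_tuple(text):
    """Turn a dotted version such as '2.29' into a tuple of ints for comparison."""
    return tuple(int(part) for part in text.split(".") if part.isdigit())

def glibc_satisfies(installed, required="2.29"):
    """True if the installed glibc version string is at least `required`."""
    return version_tuple(installed) >= version_tuple(required)

# libc_ver() returns a (library, version) pair; both may be empty on non-glibc systems.
lib, version = platform.libc_ver()
if lib == "glibc" and version:
    print("glibc", version, "ok" if glibc_satisfies(version) else "too old for GLIBC_2.29")
else:
    print("could not determine the glibc version")
```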

Solution:

wget http://ftp.gnu.org/gnu/glibc/glibc-2.29.tar.gz  # downloading it manually is recommended
tar -zxvf glibc-2.29.tar.gz
cd glibc-2.29
sudo apt-get install bison
sudo apt-get install
sudo apt-get install gcc build-essential
unset LD_LIBRARY_PATH
mkdir build
cd build
../configure --prefix=/usr/local/glibc-2.29
make -j8
sudo make install
cd /lib/x86_64-linux-gnu
sudo ln -sf /usr/local/glibc-2.29/lib/libm-2.29.so libm.so.6  # create the symlink

To be continued!