Hung-yi Lee Machine Learning: PM2.5 Prediction

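I'm just getting started with machine learning. After watching Hung-yi Lee's video lectures, I stumbled my way through writing my own simplified version of the code. Feedback from more experienced readers is very welcome, and I hope this helps other beginners too.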

Data files: https://pan.baidu.com/s/1TjwmtCRMVJhm8fHksKMpAA

Extraction code: xxzy


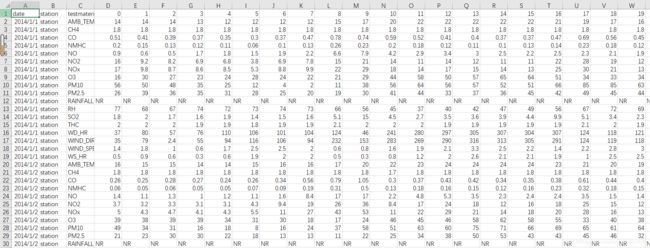

About the data: the figure shows the training set. For now I extract only each day's PM2.5 readings for training (my skills are still limited).

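# read_Data.py (the training script imports this module under that name)
import pandas as pd
import numpy as np

# Functions for reading the three data files: train, test, and answers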

def read_train2numpy():
    path = 'F:\\python_book\\machine_learning\\train.csv'
    data = pd.read_csv(path)
    # Keep only the PM2.5 rows and drop the first three columns
    # (date and other non-numeric info), leaving just the hourly values
    data = data[data['testmaterial'] == 'PM2.5'].iloc[:, 3:]
    tempxlist = []
    tempylist = []
    # Slide a window across each day: 24 hours allow 15 start positions
    for i in range(15):
        # Hours i .. i+8 (9 consecutive hours) are the features
        tempx = data.iloc[:, i:i + 9]
        # Give every window the same column names; otherwise pd.concat
        # aligns on the original hour labels and fills the gaps with NaN
        tempx.columns = np.array(range(9))
        # The 10th hour (hour i+9) is the label; it is a Series,
        # so there are no column names to reset
        tempy = data.iloc[:, i + 9]
        tempxlist.append(tempx)
        tempylist.append(tempy)
    # Stack all windows, then convert from pandas to NumPy
    xdata = pd.concat(tempxlist)  # features
    x = np.array(xdata, float)
    ydata = pd.concat(tempylist)  # labels
    y = np.array(ydata, float)
    return x, y
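To make the windowing in `read_train2numpy` easier to picture, here is a minimal sketch on a single made-up day. The helper `sliding_windows` and the toy readings 0 to 23 are my own illustration, not part of the original code. Note that it groups windows per day, whereas the function above stacks them by window offset across all days.

```python
import numpy as np

def sliding_windows(day, window=9):
    """Split one day's hourly readings into (features, label) pairs."""
    day = np.asarray(day, dtype=float)
    n = len(day) - window          # 24 - 9 = 15 windows per day
    # Each row holds `window` consecutive hours
    x = np.stack([day[i:i + window] for i in range(n)])
    # The label is the reading right after each window
    y = day[window:window + n]
    return x, y

# Hypothetical day: readings 0, 1, ..., 23 stand in for real PM2.5 values
x_demo, y_demo = sliding_windows(np.arange(24))
print(x_demo.shape, y_demo.shape)
print(x_demo[0], y_demo[0])
```

With 240 days of data this gives 240 * 15 training samples of 9 features each.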

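def read_test2numpy():
    path = 'F:\\python_book\\machine_learning\\test.csv'
    data = pd.read_csv(path)
    # Keep only the PM2.5 rows and drop the first two columns,
    # leaving the 9 hourly values of each test sample
    data = data[data['testmaterial'] == 'PM2.5'].iloc[:, 2:]
    x_test = np.array(data, float)
    return x_test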

def read_result2numpy():
    path = 'F:\\python_book\\machine_learning\\ans.csv'
    data = pd.read_csv(path)
    # Drop the first column and keep only the true values
    data = data.iloc[:, 1:]
    y = np.array(data, float)
    return y

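# linear_Regression.py (the training script imports this module under that name)
import numpy as np


class LinearRegression:
    def __init__(self, input_size):
        self.params = {}
        # One weight per input feature (the number of columns in the training set)
        self.params['w1'] = np.random.randn(input_size)

    # Linear model without a bias term
    def predict(self, x):
        return np.dot(x, self.params['w1'])

    # Despite the name, this returns the residual y - t, not a scalar loss
    def loss(self, x, t):
        y = self.predict(x)
        return y - t

    # Gradient of the weights. Multiplying x.T by the residual gives dw the
    # same shape as w. Strictly speaking the loss should be the squared
    # error, but the residual is all the update needs: with the squared-error
    # loss from the lecture, summed over the samples, dw = 2 * x.T @ (y - t),
    # which is exactly what is computed below.
    def gradient(self, x, t):
        loss = self.loss(x, t)
        grads = {}
        dw = np.dot(x.T, loss) * 2
        grads['w1'] = dw
        return grads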
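One way to convince yourself that `2 * x.T @ (y - t)` really is the gradient of the summed squared error is a finite-difference check. The sketch below is my own addition: the function names, the random data, and the step size `eps` are all assumptions made for illustration.

```python
import numpy as np

def sse(w, x, t):
    """Sum of squared errors for a linear model without bias."""
    r = x @ w - t
    return float(r @ r)

def analytic_grad(w, x, t):
    """Same expression as in gradient(): 2 * x.T @ (x @ w - t)."""
    return 2.0 * x.T @ (x @ w - t)

def numeric_grad(w, x, t, eps=1e-6):
    """Central finite differences, one weight at a time."""
    g = np.zeros_like(w)
    for i in range(w.size):
        step = np.zeros_like(w)
        step[i] = eps
        g[i] = (sse(w + step, x, t) - sse(w - step, x, t)) / (2 * eps)
    return g

# Random stand-in data with 9 features, like the PM2.5 windows
rng = np.random.default_rng(0)
x = rng.normal(size=(20, 9))
t = rng.normal(size=20)
w = rng.normal(size=9)

max_diff = np.max(np.abs(analytic_grad(w, x, t) - numeric_grad(w, x, t)))
print(max_diff)  # should be tiny if the two gradients agree
```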

class AdaGrad:
    def __init__(self, lr=0.1):
        self.lr = lr
        self.h = None  # running sum of squared gradients, one entry per parameter

    def update(self, params, grads):
        if self.h is None:
            self.h = {}
            for key, value in params.items():
                self.h[key] = np.zeros_like(value)
        for key in params.keys():
            self.h[key] += grads[key] ** 2
            # The 1e-7 term guards against division by zero
            params[key] -= self.lr * grads[key] / (np.sqrt(self.h[key]) + 1e-7)
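To see what dividing by the accumulated squared gradient buys you, here is a small sketch on a toy objective of my own choosing, not from the course. `adagrad_step` is a hypothetical stand-alone version of the update rule above. The two coordinates have gradients that differ by a factor of 100, yet the first step has nearly the same size for both.

```python
import numpy as np

def adagrad_step(w, grad, h, lr=0.1, eps=1e-7):
    """One AdaGrad update; returns the new weights and the new accumulator."""
    h = h + grad ** 2
    w = w - lr * grad / (np.sqrt(h) + eps)
    return w, h

# Toy objective f(w) = w[0]**2 + 100 * w[1]**2, gradient (2*w[0], 200*w[1])
w = np.array([1.0, 1.0])
h = np.zeros_like(w)
first_step = None
for i in range(500):
    grad = np.array([2.0 * w[0], 200.0 * w[1]])
    w_new, h = adagrad_step(w, grad, h)
    if first_step is None:
        first_step = np.abs(w_new - w)  # size of the very first update
    w = w_new

print(first_step)  # roughly lr in both coordinates
print(w)           # both coordinates have moved close to the minimum at 0
```

Plain gradient descent with a single learning rate would need a very small step to stay stable on the steep coordinate, which makes the flat one slow.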

Training code:

from read_Data import *
from linear_Regression import *
import numpy as np

x_train, y_train = read_train2numpy()
x_test = read_test2numpy()

liner = LinearRegression(x_train.shape[1])
Ada = AdaGrad(lr=0.01)

# Full-batch gradient descent with AdaGrad updates
for i in range(10000000):
    grads = liner.gradient(x_train, y_train)
    Ada.update(liner.params, grads)

print(liner.params['w1'])

# Predict on the test set
h = np.dot(x_test, liner.params['w1'])
real = read_result2numpy()
# Turn h into a column vector so it lines up with real element by element
h = h.reshape((240, -1))
erro = abs(h - real).sum() / h.shape[0]
print('Mean absolute error', erro)

The final result:

[ 0.03021916 -0.05199883 0.20457103 -0.20002777 -0.04606431 0.47595851
-0.54311636 0.01185609 1.10873472]

Mean absolute error 5.017995858417576
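The `reshape((240, -1))` before computing the error matters: subtracting a `(240, 1)` array from a `(240,)` array does not fail, it broadcasts to a `(240, 240)` matrix and gives a wrong error. Below is a minimal sketch of that pitfall. The helper `mean_absolute_error` and the three made-up values are my own illustration, not part of the original script.

```python
import numpy as np

def mean_absolute_error(pred, target):
    """MAE after flattening both inputs to 1-D, so the shapes cannot broadcast."""
    pred = np.asarray(pred, dtype=float).ravel()
    target = np.asarray(target, dtype=float).ravel()
    if pred.shape != target.shape:
        raise ValueError("pred and target must hold the same number of values")
    return float(np.mean(np.abs(pred - target)))

# Hypothetical values, not taken from the data set
pred = np.array([10.0, 20.0, 30.0])           # shape (3,), like np.dot(x_test, w)
target = np.array([[12.0], [18.0], [33.0]])   # shape (3, 1), like the ans.csv values

bad_shape = (pred - target).shape   # broadcasting silently yields a 3x3 matrix
mae = mean_absolute_error(pred, target)
print(bad_shape)
print(mae)
```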