Web Scraping: From Basics to Practice

Introduction

Articles on data collection

Getting Started

A First Program

Environment Setup

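    pip3 install beautifulsoup4

Basic Operations

    from urllib.request import urlopen
    from bs4 import BeautifulSoup

    html = urlopen("http://www.baidu.com")
    # print(html.read())  # prints the full HTML of the page
    # Name the parser explicitly so the result does not depend on which parsers are installed
    bsObj = BeautifulSoup(html.read(), "html.parser")
    # Select the first <a> tag in the full page content
    print(bsObj.a)

Result

The first `<a>` tag of the page is printed.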

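Getting Page Information by Class

As with DOM manipulation, you can navigate from a returned element to its parent node, sibling nodes, and so on. In other words, information can also be retrieved through the relationships between elements.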
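Navigation by relationship can be sketched offline as follows. This is a minimal example, assuming a small hypothetical HTML snippet (the table, its ids, and its contents are made up for illustration) and the built-in `html.parser` backend; it only shows the parent and sibling accessors mentioned above.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML snippet, made up purely for illustration.
SAMPLE_HTML = (
    '<table id="gifts">'
    '<tr id="row1"><td>Vegetable Basket</td><td>$15.00</td></tr>'
    '<tr id="row2"><td>Russian Doll</td><td>$10,000.52</td></tr>'
    '</table>'
)

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# Start from one element, then move around the tree relative to it.
first_row = soup.find("tr", {"id": "row1"})

# .parent gives the enclosing element (here the <table>).
parent_id = first_row.parent["id"]

# find_next_sibling() gives the next element at the same level.
next_row_id = first_row.find_next_sibling("tr")["id"]

# find_all() on an element searches only inside that element.
first_row_cells = [td.get_text() for td in first_row.find_all("td")]

print(parent_id, next_row_id, first_row_cells)
```

On a real page the tree is usually deeper, so it tends to be more robust to locate an element by a stable attribute first and navigate only a step or two from there.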

    from urllib.request import urlopen
    from bs4 import BeautifulSoup

    # Request the page
    html = urlopen("https://www.pythonscraping.com/pages/warandpeace.html")
    bsObj = BeautifulSoup(html, "html.parser")
    # Find every <span> element whose class is "green"
    name_list = bsObj.find_all("span", {"class": "green"})
    for name_item in name_list:
        # print(name_item)  # would print the whole tag
        # get_text() returns only the name itself, e.g. the Empress
        print(name_item.get_text())

Scraping Specific Images with Regular Expressions (Taobao as an Example)

This example does not succeed.

    from urllib.request import urlopen
    from bs4 import BeautifulSoup
    import re

    html = urlopen("https://www.taobao.com/")
    bsObj = BeautifulSoup(html, "html.parser")
    # Match <img> tags whose src contains the literal text "...webp"
    images = bsObj.find_all("img", {"src": re.compile(r"\.\.\.webp")})
    for image in images:
        print(image)

Fetching a Website
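The code in this section filters links with the pattern `^(/wiki/)((?!:).)*$`. As a quick offline check of what that pattern accepts, here is a small sketch that runs it against a few sample `href` values. The sample strings are hypothetical and chosen only to exercise the pattern.

```python
import re

# Same pattern as in this section: the href must start with /wiki/
# and must not contain a colon anywhere after that prefix.
WIKI_LINK = re.compile(r"^(/wiki/)((?!:).)*$")

# Hypothetical href values, for illustration only.
sample_hrefs = [
    "/wiki/Bernie_Madoff",                  # article link: accepted
    "/wiki/Category:Living_people",         # contains ":", rejected
    "/wiki/Kevin_Bacon#Career",             # no colon, accepted
    "//en.wikipedia.org/wiki/Kevin_Bacon",  # does not start with /wiki/
    "https://example.com/wiki/Page",        # absolute URL, rejected
]

article_links = [href for href in sample_hrefs if WIKI_LINK.search(href)]
print(article_links)
```

The negative lookahead `(?!:)` is what excludes namespace pages such as `Category:` or `Talk:` pages, whose paths contain a colon.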

    from urllib.request import urlopen
    from bs4 import BeautifulSoup
    import re

    html = urlopen("http://en.wikipedia.org/wiki/Kevin_Bacon")
    bsObj = BeautifulSoup(html, "html.parser")
    # Find the <a> tags that point to article pages and print each link
    for link in bsObj.find_all("a", href=re.compile(r"^(/wiki/)((?!:).)*$")):
        if "href" in link.attrs:
            # e.g. /wiki/Bernie_Madoff
            print(link.attrs["href"])

Scraping in Practice

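Installing the Required Software

Installing requests

    pip install requests

Verify the installation from Python:

    import requests

If the import succeeds, the installation worked.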
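The same check can be run for several packages at once. The sketch below is one possible way to do it with the standard library; the helper name `is_installed` is hypothetical, and the package list is simply the set of libraries installed in this article.

```python
from importlib.util import find_spec


def is_installed(module_name: str) -> bool:
    """Return True if a top-level module with this name can be found."""
    return find_spec(module_name) is not None


# Top-level module names of the libraries used in this article.
packages = ["requests", "selenium", "aiohttp", "lxml",
            "pyquery", "tesserocr", "tornado"]

for name in packages:
    status = "ok" if is_installed(name) else "missing"
    print(f"{name}: {status}")
```

Note that `find_spec` only reports whether the module can be located. A package with a native dependency, such as tesserocr, can still fail when it is actually imported, so a real `import` remains the final check.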

Installing Selenium

    pip install selenium

Verify the installation from Python:

    import selenium

Installing ChromeDriver

Download links

CNPM Binaries Mirror

https://chromedriver.storage.googleapis.com/index.html

Official site

https://sites.google.com/chromium.org/driver/?pli=1

https://sites.google.com/a/chromium.org/chromedriver/downloads

First, check the version number of your own browser.

Then find the ChromeDriver version that supports your browser version.

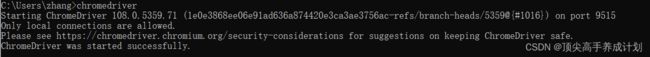
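Choosing a driver usually comes down to comparing version numbers. The sketch below assumes the common rule that the ChromeDriver major version should equal the Chrome major version; the function names and the version strings are hypothetical examples, not values taken from this article.

```python
def major_version(version: str) -> int:
    """Extract the major version from a string such as '114.0.5735.90'."""
    return int(version.strip().split(".")[0])


def versions_match(browser_version: str, driver_version: str) -> bool:
    """Assumed rule: the driver fits when both major versions are equal."""
    return major_version(browser_version) == major_version(driver_version)


# Hypothetical version strings, for illustration only.
print(versions_match("114.0.5735.199", "114.0.5735.90"))
print(versions_match("114.0.5735.199", "113.0.5672.63"))
```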

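Run the following on the command line:

    chromedriver

If you see output like that shown above, the installation succeeded.

Installing PhantomJS

Download link

Download PhantomJS

After adding it to your environment variables, open a command line and run:

    phantomjs

Because newer versions of Selenium have dropped support for PhantomJS, the following uses Chrome's headless mode instead.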

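    from selenium import webdriver

    chrome_options = webdriver.ChromeOptions()
    # Run Chrome without opening a visible window
    chrome_options.add_argument("--headless")
    # Recent Selenium versions take the options object through the "options" keyword
    browser = webdriver.Chrome(options=chrome_options)
    browser.get("https://www.baidu.com/")
    print("==============")
    print(browser.current_url)
    # End the browser session once finished
    browser.quit()

Installing aiohttp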

    pip install aiohttp

Installing lxml

    pip install lxml

Installing pyquery

Installing tesserocr

Index of /tesseract

    pip3 install tesserocr pillow

Installing tornado

    pip install tornado

Creating a Simple Request Handler

    import tornado.ioloop
    import tornado.web

    # Each handler class processes the requests for one route
    class MainHandler(tornado.web.RequestHandler):
        def get(self):
            self.write("hello, world")

    # The raw string r"/" below is the URL path that is mapped to the handler
    def make_app():
        return tornado.web.Application([
            (r"/", MainHandler)
        ])

    if __name__ == "__main__":
        app = make_app()
        app.listen(8888)
        tornado.ioloop.IOLoop.current().start()

Installing Charles

Download a Free Trial of Charles • Charles Web Debugging Proxy

Certificate Configuration

Installing mitmproxy

Installing Appium

https://github.com/appium/appium-desktop/releases

Practice

chromedriver

The following opens Baidu, finds the search box, types "python", and runs the search.

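    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.common.keys import Keys
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.wait import WebDriverWait

    browser = webdriver.Chrome()
    try:
        browser.get("https://baidu.com")
        # Find the search box by its id, type the query, and press Enter
        search_box = browser.find_element(By.ID, "kw")
        search_box.send_keys("python")
        search_box.send_keys(Keys.ENTER)
        # Wait up to 10 seconds for the results to load
        # (assumption: the results container has the id "content_left")
        wait = WebDriverWait(browser, 10)
        wait.until(EC.presence_of_element_located((By.ID, "content_left")))
        print(browser.current_url)
        # Print all cookies of the current session
        print(browser.get_cookies())
        print(browser.page_source)
    finally:
        browser.close()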
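Cookies collected in a browser session are often reused in plain HTTP requests. Selenium's `get_cookies()` returns a list of dicts that each carry at least a `name` and a `value` key, while requests accepts a simple name-to-value mapping through its `cookies` parameter. A minimal sketch of the conversion, with a hypothetical helper name and made-up cookie data:

```python
def cookies_to_dict(selenium_cookies):
    """Convert Selenium's list of cookie dicts into a {name: value} mapping."""
    return {cookie["name"]: cookie["value"] for cookie in selenium_cookies}


# Made-up cookies in the shape returned by browser.get_cookies().
sample_cookies = [
    {"name": "session_id", "value": "abc123", "domain": ".example.com", "path": "/"},
    {"name": "lang", "value": "en", "domain": ".example.com", "path": "/"},
]

cookie_dict = cookies_to_dict(sample_cookies)
print(cookie_dict)

# The mapping could then be passed along, for example:
# requests.get("https://example.com/", cookies=cookie_dict)
```

This drops attributes such as domain, path, and expiry, which is usually acceptable when all follow-up requests go to the same site.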