A Detailed Introduction to the Shuffle Mechanism

The Shuffle Mechanism

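Shuffle is the stage that runs after the Mapper (the map() method) and before the Reducer (the reduce() method). During Shuffle, map output is partitioned, sorted, optionally combined, and then copied to the ReduceTasks.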

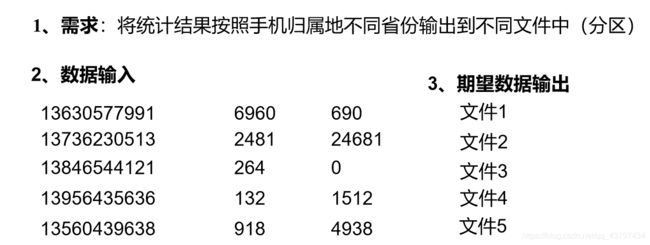

Partitioning

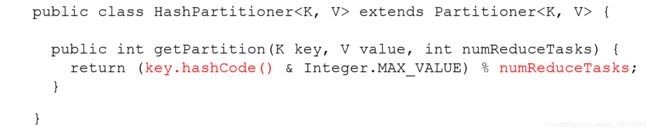

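With the default partitioner (HashPartitioner), if numReduceTasks > 1, each record's partition is computed from the hash code of its key: (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks.

The & Integer.MAX_VALUE step clears the sign bit. hashCode() can be negative, and in Java a negative number modulo numReduceTasks is negative, which is not a valid partition number. Masking guarantees a result from 0 to numReduceTasks - 1.

A custom partitioner assigns records to partitions by your own conditions. Each partition is processed by one ReduceTask, so the number of partitions determines how many ReduceTasks (and output files) the job needs.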

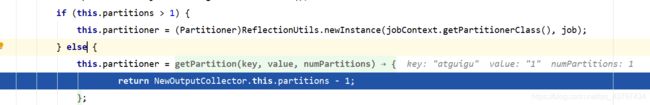

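If numReduceTasks = 1, the partitioner is not consulted: every record goes to partition 0 and a single output file, part-r-00000, is written.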
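The default partition assignment described above can be sketched in plain Java. This is a minimal sketch with hypothetical class and method names and no Hadoop dependency: hashPartition applies the same formula as Hadoop's HashPartitioner, and partitionFor models the single-ReduceTask case, where every record gets partition 0.

```java
/**
 * Plain-Java sketch of the default partition assignment (no Hadoop dependency).
 * Class and method names are hypothetical; hashPartition uses the same formula
 * as Hadoop's HashPartitioner.
 */
final class DefaultPartitionSketch {

    // Clear the sign bit, then take the modulo, so the result is always 0..numReduceTasks-1.
    static int hashPartition(Object key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    // With one ReduceTask the partitioner is skipped and every record gets partition 0.
    static int partitionFor(Object key, int numReduceTasks) {
        return numReduceTasks > 1 ? hashPartition(key, numReduceTasks) : 0;
    }

    public static void main(String[] args) {
        // A key whose hashCode() is negative: Integer.valueOf(-7).hashCode() == -7.
        Integer negativeHashKey = -7;
        System.out.println("-7 % 5 without masking = " + (-7 % 5));                // -2
        System.out.println("masked partition = " + hashPartition(negativeHashKey, 5)); // 1
        System.out.println("with 1 ReduceTask = " + partitionFor("13700000000", 1));   // 0
    }
}
```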

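Besides the FlowBean data type, a custom partitioning job needs four classes: a Partitioner, a Mapper, a Reducer, and a Driver.

When the IDE offers several classes with the same name to import, pick the one with the longer package name, which belongs to the newer API: for example, org.apache.hadoop.mapreduce.Mapper rather than the older org.apache.hadoop.mapred.Mapper.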

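```java
// Partitioner class: routes each phone number to a partition by its first three digits
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Partitioner;

public class ProvicePartitioner extends Partitioner<Text, FlowBean> {

    @Override
    public int getPartition(Text key, FlowBean value, int numPartitions) {
        // 1 Get the first three digits of the phone number
        String preNum = key.toString().substring(0, 3);

        // Prefixes not listed below go to partition 4
        int partition = 4;

        // 2 Determine which province the number belongs to
        if ("136".equals(preNum)) {
            partition = 0;
        } else if ("137".equals(preNum)) {
            partition = 1;
        } else if ("138".equals(preNum)) {
            partition = 2;
        } else if ("139".equals(preNum)) {
            partition = 3;
        }

        return partition;
    }
}
```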

```java
// FlowBean class
// Implements Writable and overrides the write and readFields methods
import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.Writable;

public class FlowBean implements Writable {

    // upFlow, downFlow and sumFlow fields
    private long upFlow;
    private long downFlow;
    private long sumFlow;

    // No-arg constructor: Hadoop creates the object by reflection when deserializing
    public FlowBean() {
    }

    // Getters and setters
    public long getUpFlow() {
        return upFlow;
    }

    public void setUpFlow(long upFlow) {
        this.upFlow = upFlow;
    }

    public long getDownFlow() {
        return downFlow;
    }

    public void setDownFlow(long downFlow) {
        this.downFlow = downFlow;
    }

    public long getSumFlow() {
        return sumFlow;
    }

    public void setSumFlow(long sumFlow) {
        this.sumFlow = sumFlow;
    }

    // Overloaded setSumFlow: computes sumFlow from upFlow and downFlow
    public void setSumFlow() {
        this.sumFlow = this.upFlow + this.downFlow;
    }

    // Serialization
    @Override
    public void write(DataOutput out) throws IOException {
        out.writeLong(this.upFlow);
        out.writeLong(this.downFlow);
        out.writeLong(this.sumFlow);
    }

    // Deserialization: fields must be read in the same order write() wrote them
    @Override
    public void readFields(DataInput in) throws IOException {
        this.upFlow = in.readLong();
        this.downFlow = in.readLong();
        this.sumFlow = in.readLong();
    }

    // Override toString to produce the tab-separated output format
    @Override
    public String toString() {
        return this.upFlow + "\t" + this.downFlow + "\t" + this.sumFlow;
    }
}
```
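Why readFields must mirror write can be checked without a cluster. The sketch below assumes a hypothetical FlowRecordRoundTrip class that copies FlowBean's three fields and its write/readFields bodies but drops the Hadoop Writable interface, and drives it through java.io's DataOutputStream and DataInputStream. The sample values are made up.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

/**
 * Hypothetical stand-in for FlowBean without the Hadoop dependency: the same
 * fields and the same write/readFields pair, serialized through java.io streams.
 */
final class FlowRecordRoundTrip {

    long upFlow;
    long downFlow;
    long sumFlow;

    // Same body as FlowBean.write: three longs, 8 bytes each.
    void write(DataOutput out) throws IOException {
        out.writeLong(upFlow);
        out.writeLong(downFlow);
        out.writeLong(sumFlow);
    }

    // Same body as FlowBean.readFields: reads back in the order write() used.
    void readFields(DataInput in) throws IOException {
        upFlow = in.readLong();
        downFlow = in.readLong();
        sumFlow = in.readLong();
    }

    static byte[] serialize(FlowRecordRoundTrip record) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            record.write(out);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    static FlowRecordRoundTrip deserialize(byte[] data) {
        // Start from an object built with the no-arg constructor, then fill it in.
        FlowRecordRoundTrip record = new FlowRecordRoundTrip();
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(data))) {
            record.readFields(in);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return record;
    }

    public static void main(String[] args) {
        FlowRecordRoundTrip original = new FlowRecordRoundTrip();
        original.upFlow = 100;
        original.downFlow = 250;
        original.sumFlow = original.upFlow + original.downFlow;

        byte[] data = serialize(original);
        FlowRecordRoundTrip copy = deserialize(data);
        // Prints: 24 bytes -> 100	250	350
        System.out.println(data.length + " bytes -> " + copy.upFlow + "\t" + copy.downFlow + "\t" + copy.sumFlow);
    }
}
```

If readFields read the fields in a different order than write wrote them, the copy would silently hold swapped values rather than failing.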

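```java
// Mapper class
// Extends Mapper and declares the input/output key and value types
import java.io.IOException;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class FlowMapper extends Mapper<LongWritable, Text, Text, FlowBean> {

    // Output objects are reused instead of allocating new ones for every record
    private Text k = new Text();
    private FlowBean v = new FlowBean();

    // Override map(); it is called once per input line
    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // Get one line of input
        String line = value.toString();

        // Split the line on tabs
        String[] fields = line.split("\t");

        // Set K and V: the phone number is field 1; upFlow and downFlow are the
        // third-to-last and second-to-last fields
        k.set(fields[1]);
        v.setUpFlow(Long.parseLong(fields[fields.length - 3]));
        v.setDownFlow(Long.parseLong(fields[fields.length - 2]));
        v.setSumFlow();

        // Emit K, V
        context.write(k, v);
    }
}
```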

```java
// Reducer class
// Extends Reducer; its input K, V types are the Mapper's output K, V types
import java.io.IOException;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class FlowReducer extends Reducer<Text, FlowBean, Text, FlowBean> {

    private long sumUpFlow;
    private long sumDownFlow;
    private FlowBean v = new FlowBean();

    // Override reduce(); it is called once per distinct key (phone number)
    @Override
    protected void reduce(Text key, Iterable<FlowBean> values, Context context) throws IOException, InterruptedException {
        // Reset the totals for each key
        sumUpFlow = 0;
        sumDownFlow = 0;

        // values holds every FlowBean for this phone number; add them up
        for (FlowBean value : values) {
            sumUpFlow += value.getUpFlow();
            sumDownFlow += value.getDownFlow();
        }

        // Set the output V
        v.setUpFlow(sumUpFlow);
        v.setDownFlow(sumDownFlow);
        v.setSumFlow();

        // Emit K, V
        context.write(key, v);
    }
}
```

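```java
// Driver class
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class FlowDriver {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        Configuration conf = new Configuration();

        // Get the Job object
        Job job = Job.getInstance(conf);

        // Locate the jar through the driver class
        job.setJarByClass(FlowDriver.class);

        // Associate the Mapper and Reducer
        job.setMapperClass(FlowMapper.class);
        job.setReducerClass(FlowReducer.class);

        // Map output K, V types
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(FlowBean.class);

        // Final output K, V types
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(FlowBean.class);

        // Associate the custom partitioner
        job.setPartitionerClass(ProvicePartitioner.class);

        // Number of ReduceTasks: one per partition (0 to 4)
        job.setNumReduceTasks(5);

        // Input and output paths (use your own; the output directory must not already exist)
        FileInputFormat.setInputPaths(job, new Path("d:/input/aaa.txt"));
        FileOutputFormat.setOutputPath(job, new Path("d:/output1"));

        // Submit the job and wait for it to finish
        boolean b = job.waitForCompletion(true);
        System.exit(b ? 0 : 1);
    }
}
```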

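If numReduceTasks is set to 1, the default partitioning is used and only one output file is produced.

If numReduceTasks is greater than 1 but less than the number of partitions (5 here), the job fails with an IOException ("Illegal partition") as soon as getPartition() returns a partition number that has no ReduceTask.

If numReduceTasks equals the number of partitions, the output is normal: one file per partition.

If numReduceTasks is greater than the number of partitions, the job runs, but only as many files as there are partitions contain data; the extra files are empty.

In other words, getPartition() must return values from 0 to numReduceTasks - 1.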
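The four cases above can be restated as a small decision function. This is a hypothetical helper, not part of Hadoop. It assumes at least one ReduceTask, a partitioner that returns partition numbers 0 to partitionCount - 1 (5 for ProvicePartitioner), and input that actually contains records for every partition, since the IOException is only thrown once an out-of-range partition number is produced.

```java
/**
 * Hypothetical helper (not a Hadoop API) restating the four cases for a
 * partitioner whose getPartition() returns 0 .. partitionCount - 1.
 */
final class ReduceTaskCountCheck {

    static String outcome(int numReduceTasks, int partitionCount) {
        if (numReduceTasks < 1) {
            // 0 ReduceTasks would be a map-only job, which this sketch does not model.
            throw new IllegalArgumentException("expected at least one ReduceTask");
        }
        if (numReduceTasks == 1) {
            // The custom partitioner is bypassed; everything lands in part-r-00000.
            return "single file";
        }
        if (numReduceTasks < partitionCount) {
            // Some record gets a partition number >= numReduceTasks: "Illegal partition".
            return "IOException";
        }
        if (numReduceTasks == partitionCount) {
            return "one file per partition";
        }
        // Files for partition numbers the partitioner never returns are created but stay empty.
        return (numReduceTasks - partitionCount) + " empty file(s)";
    }

    public static void main(String[] args) {
        for (int n : new int[] {1, 2, 5, 6}) {
            System.out.println("setNumReduceTasks(" + n + "): " + outcome(n, 5));
        }
    }
}
```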

Sorting

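In the Shuffle mechanism, sorting comes in several forms: total sort, secondary sort, sorting within partitions, and auxiliary (grouping) sort, which is rarely used in practice. Both MapTask and ReduceTask sort data by key, so whatever you want to sort on has to be part of the map output key.

To sort by a custom bean, the bean used as the key implements the WritableComparable interface and overrides its serialization (write), deserialization (readFields), and compareTo methods.

The following example uses the output file of the job above as its input.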

Total Sort

```java
// This FlowBean is essentially the same as the one above; the differences are that it
// implements WritableComparable and additionally overrides compareTo
import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.WritableComparable;

public class FlowBean implements WritableComparable<FlowBean> {

    private long upFlow;
    private long downFlow;
    private long sumFlow;

    // No-arg constructor, required for deserialization
    public FlowBean() {
    }

    public long getUpFlow() {
        return upFlow;
    }

    public void setUpFlow(long upFlow) {
        this.upFlow = upFlow;
    }

    public long getDownFlow() {
        return downFlow;
    }

    public void setDownFlow(long downFlow) {
        this.downFlow = downFlow;
    }

    public long getSumFlow() {
        return sumFlow;
    }

    public void setSumFlow(long sumFlow) {
        this.sumFlow = sumFlow;
    }

    // Computes sumFlow from upFlow and downFlow
    public void setSumFlow() {
        this.sumFlow = this.upFlow + this.downFlow;
    }

    @Override
    public void write(DataOutput out) throws IOException {
        out.writeLong(this.upFlow);
        out.writeLong(this.downFlow);
        out.writeLong(this.sumFlow);
    }

    @Override
    public void readFields(DataInput in) throws IOException {
        this.upFlow = in.readLong();
        this.downFlow = in.readLong();
        this.sumFlow = in.readLong();
    }

    @Override
    public String toString() {
        return this.upFlow + "\t" + this.downFlow + "\t" + this.sumFlow;
    }

    // Sort by sumFlow in descending order: a larger sumFlow comes first
    @Override
    public int compareTo(FlowBean o) {
        int result = 0;
        if (this.sumFlow > o.sumFlow) {
            result = -1;
        } else if (this.sumFlow < o.sumFlow) {
            result = 1;
        }
        return result;
    }
}
```
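The effect of this compareTo can be seen by sorting a few records in plain Java. The sketch uses a hypothetical FlowSortSketch class with made-up phone numbers, and writes the comparison as Long.compare(o.sumFlow, this.sumFlow), which returns the same -1, 0, 1 as the if/else above; swapping the arguments is what makes the order descending.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

/**
 * Plain-Java stand-in for the sorting FlowBean (hypothetical names, no Hadoop).
 * Only sumFlow takes part in the comparison, as in the compareTo above.
 */
final class FlowSortSketch implements Comparable<FlowSortSketch> {

    final String phone;
    final long sumFlow;

    FlowSortSketch(String phone, long sumFlow) {
        this.phone = phone;
        this.sumFlow = sumFlow;
    }

    // Equivalent to the if/else version: arguments are swapped relative to
    // ascending order, so the larger sumFlow sorts first.
    @Override
    public int compareTo(FlowSortSketch o) {
        return Long.compare(o.sumFlow, this.sumFlow);
    }

    // Sorts a copy of the records and returns their phone numbers in sorted order.
    static List<String> sortedPhones(List<FlowSortSketch> records) {
        List<FlowSortSketch> copy = new ArrayList<>(records);
        Collections.sort(copy);
        List<String> phones = new ArrayList<>();
        for (FlowSortSketch r : copy) {
            phones.add(r.phone);
        }
        return phones;
    }

    public static void main(String[] args) {
        List<FlowSortSketch> records = Arrays.asList(
                new FlowSortSketch("13600000001", 100),
                new FlowSortSketch("13700000002", 300),
                new FlowSortSketch("13800000003", 200));
        System.out.println(sortedPhones(records)); // [13700000002, 13800000003, 13600000001]
    }
}
```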

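```java
// Mapper class: records are sorted by sumFlow, so the Mapper's output K, V are FlowBean, Text
import java.io.IOException;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class FlowMapper extends Mapper<LongWritable, Text, FlowBean, Text> {

    private FlowBean k = new FlowBean();
    private Text v = new Text();

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // Get one line; each line of the previous job's output is: phone, upFlow, downFlow, sumFlow
        String line = value.toString();

        // Split the line on tabs
        String[] fields = line.split("\t");

        // Set k, v: the FlowBean becomes the key so the framework sorts on it
        k.setUpFlow(Long.parseLong(fields[1]));
        k.setDownFlow(Long.parseLong(fields[2]));
        k.setSumFlow();
        v.set(fields[0]);

        // Emit k, v
        context.write(k, v);
    }
}
```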

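```java
// Reducer class: per the requirement, the Reducer outputs Text, FlowBean
import java.io.IOException;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class FlowReducer extends Reducer<FlowBean, Text, Text, FlowBean> {

    // Records with the same sumFlow compare as equal and arrive in one reduce() call;
    // they must not be added together, each one is written out directly
    @Override
    protected void reduce(FlowBean key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
        // Iterate over the values
        for (Text value : values) {
            // Swap key and value: the phone number (value) becomes the output key
            context.write(value, key);
        }
    }
}
```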
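Because compareTo only looks at sumFlow, two records with the same total flow are treated as the same key and reach one reduce() call together, which is why the loop above writes each value instead of summing. The plain-Java simulation below (hypothetical names, made-up data, no Hadoop) mimics that grouping with a TreeMap in descending order. Within a group it keeps insertion order; Hadoop itself does not guarantee the order of values for equal keys.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/**
 * Hypothetical simulation (no Hadoop) of the reduce side of this job: keys that
 * compare as equal (same sumFlow) form one group, i.e. one reduce() call, and
 * each phone number in the group is written as its own output line.
 */
final class SortGroupingSketch {

    // phones[i] has total flow sumFlows[i]; returns "phone<TAB>sumFlow" lines in output order.
    static List<String> simulate(String[] phones, long[] sumFlows) {
        // Descending order, matching FlowBean.compareTo; equal sumFlow values share one group.
        TreeMap<Long, List<String>> groups = new TreeMap<Long, List<String>>(Collections.reverseOrder());
        for (int i = 0; i < phones.length; i++) {
            groups.computeIfAbsent(sumFlows[i], k -> new ArrayList<>()).add(phones[i]);
        }

        List<String> output = new ArrayList<>();
        for (Map.Entry<Long, List<String>> group : groups.entrySet()) {
            // One map entry corresponds to one reduce() call.
            for (String phone : group.getValue()) {
                // Like context.write(value, key): phone first, then the flow figure.
                output.add(phone + "\t" + group.getKey());
            }
        }
        return output;
    }

    public static void main(String[] args) {
        String[] phones = {"13600000001", "13700000002", "13800000003"};
        long[] sumFlows = {100, 300, 100};
        simulate(phones, sumFlows).forEach(System.out::println);
    }
}
```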

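```java
// Driver class
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class FlowDriver {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);

        job.setJarByClass(FlowDriver.class);
        job.setMapperClass(FlowMapper.class);
        job.setReducerClass(FlowReducer.class);

        // Map output: FlowBean key (sorted on), phone number value
        job.setMapOutputKeyClass(FlowBean.class);
        job.setMapOutputValueClass(Text.class);

        // Final output: phone number key, FlowBean value
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(FlowBean.class);

        // setNumReduceTasks is not called, so the default single ReduceTask
        // writes one file that is sorted as a whole (total sort)

        // The input is the previous job's output; replace with your own paths
        FileInputFormat.setInputPaths(job, new Path("D:\\input\\inputflow2"));
        FileOutputFormat.setOutputPath(job, new Path("d:/hadoop/output2"));

        boolean b = job.waitForCompletion(true);
        System.exit(b ? 0 : 1);
    }
}
```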

Secondary Sort

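Secondary sort only changes the compareTo method of FlowBean from the total-sort example: records with the same sumFlow are further ordered by upFlow. The modified code is: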

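```java
// Sort by sumFlow descending; when sumFlow is equal, sort by upFlow ascending
@Override
public int compareTo(FlowBean o) {
    int result = 0;
    if (this.sumFlow > o.sumFlow) {
        result = -1;
    } else if (this.sumFlow < o.sumFlow) {
        result = 1;
    } else {
        // Same total flow: the smaller upFlow comes first
        if (this.upFlow > o.upFlow) {
            result = 1;
        } else if (this.upFlow < o.upFlow) {
            result = -1;
        }
    }
    return result;
}
```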
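The secondary-sort rule (sumFlow descending, then upFlow ascending) can be checked against an equivalent java.util.Comparator chain. In this sketch, handWritten copies the compareTo logic above onto a hypothetical Flow class, and ORDER expresses the same ordering with Comparator.comparingLong(...).reversed().thenComparingLong(...). The names and data are illustrative only.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

/**
 * Plain-Java sketch (hypothetical names, no Hadoop) of the secondary-sort order:
 * sumFlow descending, then upFlow ascending when sumFlow ties.
 */
final class SecondarySortSketch {

    static final class Flow {
        final String phone;
        final long upFlow;
        final long sumFlow;

        Flow(String phone, long upFlow, long sumFlow) {
            this.phone = phone;
            this.upFlow = upFlow;
            this.sumFlow = sumFlow;
        }
    }

    // The hand-written compareTo logic from the article, applied to two Flow objects.
    static int handWritten(Flow a, Flow b) {
        int result = 0;
        if (a.sumFlow > b.sumFlow) {
            result = -1;
        } else if (a.sumFlow < b.sumFlow) {
            result = 1;
        } else if (a.upFlow > b.upFlow) {
            result = 1;
        } else if (a.upFlow < b.upFlow) {
            result = -1;
        }
        return result;
    }

    // The same ordering expressed with Comparator combinators.
    static final Comparator<Flow> ORDER =
            Comparator.comparingLong((Flow f) -> f.sumFlow).reversed()
                    .thenComparingLong(f -> f.upFlow);

    static List<String> sortedPhones(List<Flow> flows) {
        List<Flow> copy = new ArrayList<>(flows);
        copy.sort(ORDER);
        List<String> phones = new ArrayList<>();
        for (Flow f : copy) {
            phones.add(f.phone);
        }
        return phones;
    }

    public static void main(String[] args) {
        List<Flow> flows = Arrays.asList(
                new Flow("13600000001", 10, 100),
                new Flow("13700000002", 5, 100),
                new Flow("13800000003", 1, 200));
        System.out.println(sortedPhones(flows)); // [13800000003, 13700000002, 13600000001]
    }
}
```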

Sorting Within Partitions

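Building on secondary sort, add a custom partitioner, then register it in the driver class and set the number of ReduceTasks. Each output file is then sorted on its own; there is no ordering across files. The code is: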

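```java
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Partitioner;

// The map output is (FlowBean, Text), so the partitioner's type parameters are FlowBean, Text
public class ProvincePartitioner2 extends Partitioner<FlowBean, Text> {

    @Override
    public int getPartition(FlowBean flowBean, Text text, int numPartitions) {
        // Get the first three digits of the phone number (the value)
        String phone = text.toString();
        String prePhone = phone.substring(0, 3);

        // Partition number chosen from prePhone; unlisted prefixes go to partition 4
        int partition = 4;
        if ("136".equals(prePhone)) {
            partition = 0;
        } else if ("137".equals(prePhone)) {
            partition = 1;
        } else if ("138".equals(prePhone)) {
            partition = 2;
        } else if ("139".equals(prePhone)) {
            partition = 3;
        }

        // Return the partition number
        return partition;
    }
}
```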

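```java
// In the driver, after setting the output path and before submitting the job,
// register the custom partitioner
job.setPartitionerClass(ProvincePartitioner2.class);

// Set the matching number of ReduceTasks (partitions 0 to 4)
job.setNumReduceTasks(5);
```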
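Sorting within partitions means each ReduceTask's output file is sorted on its own. The plain-Java simulation below is a sketch under that assumption: partition() copies ProvincePartitioner2's prefix mapping, records are bucketed into five lists standing in for part-r-00000 to part-r-00004, and each list is sorted by sumFlow descending. Class names and data are hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/**
 * Plain-Java simulation (hypothetical names, no Hadoop) of sorting within partitions:
 * records are routed by phone prefix, then each partition is sorted independently.
 */
final class PartitionSortSketch {

    static final int NUM_PARTITIONS = 5;

    // Same prefix-to-partition mapping as ProvincePartitioner2.getPartition.
    static int partition(String phone) {
        switch (phone.substring(0, 3)) {
            case "136": return 0;
            case "137": return 1;
            case "138": return 2;
            case "139": return 3;
            default:    return 4;
        }
    }

    // phones[i] has total flow sumFlows[i]; returns one sorted list of phones per partition.
    static List<List<String>> partitionThenSort(String[] phones, long[] sumFlows) {
        List<List<Integer>> buckets = new ArrayList<>();
        for (int p = 0; p < NUM_PARTITIONS; p++) {
            buckets.add(new ArrayList<>());
        }
        for (int i = 0; i < phones.length; i++) {
            buckets.get(partition(phones[i])).add(i);
        }

        List<List<String>> result = new ArrayList<>();
        for (List<Integer> bucket : buckets) {
            // Each "output file" is sorted on its own; nothing orders one file against another.
            bucket.sort(Comparator.comparingLong((Integer idx) -> sumFlows[idx]).reversed());
            List<String> sorted = new ArrayList<>();
            for (int idx : bucket) {
                sorted.add(phones[idx]);
            }
            result.add(sorted);
        }
        return result;
    }

    public static void main(String[] args) {
        String[] phones = {"13600000001", "13900000002", "13600000003", "15000000004"};
        long[] sumFlows = {100, 50, 300, 10};
        List<List<String>> files = partitionThenSort(phones, sumFlows);
        for (int p = 0; p < files.size(); p++) {
            System.out.println("part-r-0000" + p + ": " + files.get(p));
        }
    }
}
```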

Combining (Combiner)

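A Combiner is an optional component that runs between the Mapper and the Reducer, on the map side.

A Combiner extends Reducer, and its code looks the same as a custom Reducer.

A Combiner summarizes the output of a single MapTask locally before that output is sent to the ReduceTasks. This shrinks the amount of data transferred during Shuffle, which improves efficiency.

The Combiner's input K, V types are the Mapper's output K, V types, and its output K, V types are the Reducer's input K, V types.

A Combiner must not change the final result. For example, it cannot be used to compute an average, because the average of per-MapTask averages is generally not the overall average.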
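Why a sum is safe for a Combiner but an average is not can be shown with a small worked example. The sketch below uses hypothetical names and made-up numbers: two MapTasks emit 3, 5, 7 and 2, 6 for the same key. Summing partial sums still gives 23, but averaging the per-MapTask averages gives (5 + 4) / 2 = 4.5 instead of the true 23 / 5 = 4.6.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/**
 * Plain-Java sketch (hypothetical names, made-up numbers, no Hadoop). Each inner
 * list holds the values one MapTask emitted for the same key.
 */
final class CombinerSafetySketch {

    static long sum(List<Long> values) {
        long total = 0;
        for (long v : values) {
            total += v;
        }
        return total;
    }

    // Reducer only: sum every value from every MapTask.
    static long sumWithoutCombiner(List<List<Long>> mapOutputs) {
        List<Long> all = new ArrayList<>();
        for (List<Long> m : mapOutputs) {
            all.addAll(m);
        }
        return sum(all);
    }

    // Combiner sums each MapTask's values first; the Reducer sums the partial sums.
    static long sumWithCombiner(List<List<Long>> mapOutputs) {
        List<Long> partials = new ArrayList<>();
        for (List<Long> m : mapOutputs) {
            partials.add(sum(m));
        }
        return sum(partials);
    }

    // Reducer only: the true average over all values.
    static double avgWithoutCombiner(List<List<Long>> mapOutputs) {
        long count = 0;
        for (List<Long> m : mapOutputs) {
            count += m.size();
        }
        return (double) sumWithoutCombiner(mapOutputs) / count;
    }

    // Combiner averages each MapTask's values; the Reducer then averages those averages (wrong).
    static double avgWithCombiner(List<List<Long>> mapOutputs) {
        double totalOfAverages = 0;
        for (List<Long> m : mapOutputs) {
            totalOfAverages += (double) sum(m) / m.size();
        }
        return totalOfAverages / mapOutputs.size();
    }

    public static void main(String[] args) {
        List<List<Long>> mapOutputs = Arrays.asList(
                Arrays.asList(3L, 5L, 7L),  // MapTask 1
                Arrays.asList(2L, 6L));     // MapTask 2
        System.out.println("sum: " + sumWithoutCombiner(mapOutputs) + " vs " + sumWithCombiner(mapOutputs)); // sum: 23 vs 23
        System.out.println("avg: " + avgWithoutCombiner(mapOutputs) + " vs " + avgWithCombiner(mapOutputs)); // avg: 4.6 vs 4.5
    }
}
```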

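To enable a Combiner, add one line to the job's driver (here, WordCountCombiner is the Combiner class from a WordCount example):

```java
job.setCombinerClass(WordCountCombiner.class);
```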
