week6&7(2021.10.23-2021.11.5)

Step1

一.RNN:递归神经网络

在输入和输出都为序列时候常用

例如:语音识别(音频到文字),翻译(汉语文字到英语文字)

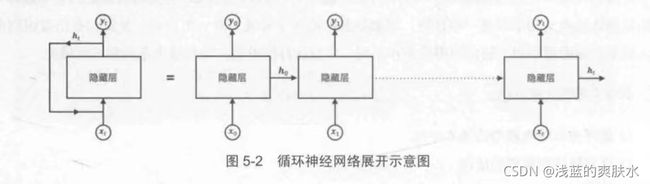

循环神经网络的数据是循环传递的,输入x经过隐含层到输出y,在隐含层的结果h也会作为下次输入的一部分,循环往复,如下图所示:

第一层x0为输入,y0为输出

第二层x1和h0为输入,y1为输出

... ... ...

h0,h1等隐藏向量的目的:提高网络的记忆力,为了重视序列信息(举个例子:图片很容易分辨出狗和长颈鹿,不需要对之前的输入有记忆能力,但是在预测文字的时候,比如:想预测“我爱吃饭”,输入了“我爱吃”,程序应该输出“饭”,这就是记忆能力的体现。反例:输入了“吃饭爱”,程序输出了“我”。所以反例是错误的)

隐藏向量不断地循环传递信息,因此叫做循环神经网络

二.LSTM的概念

LSTM(Long-short Term Memory)叫做长短期记忆网络,为RNN的改进版本,解决了RNN的“梯度消失”的问题(更好地记忆力)

在RNN中,先输入的会被遗忘的快,而LSTM弥补了这个缺点

简单来说,就是多了一个隐藏向量c 即:(h0,c0),(h1,c1)......

Step2

参考:以一个简单的RNN为例梳理神经网络的训练过程 - 简书 (jianshu.com)

一.RNN实现sin预测cos

import torch

from torch import nn

# import torch.nn.functional as F

from torch.autograd import Variable

import matplotlib.pyplot as plt

import numpy as np

torch.manual_seed(1) # reproducible

# Hyper Parameters

TIME_STEP = 10 # rnn time step

INPUT_SIZE = 1 # rnn input size

LR = 0.02 # learning rate

class RNN(nn.Module):

def __init__(self):

super(RNN, self).__init__()

self.rnn = nn.RNN(

input_size=INPUT_SIZE,

hidden_size=32,

num_layers=1, #表示多少个rnn堆在一起,1就代表只有1个

batch_first=True #把batch_size提前

)

self.out = nn.Linear(32, 1)

def forward(self, x, h_state):

# x (batch, time_step, input_size)

# h_state (n_layers, batch, hidden_size)

# r_out (batch, time_step, hidden_size)

r_out, h_state = self.rnn(x, h_state)

outs = []

for time_step in range(r_out.size(1)):

outs.append(self.out(r_out[:, time_step, :]))

return torch.stack(outs, dim=1), h_state #知识点

rnn = RNN()

# print(rnn)

optimizer = torch.optim.Adam(rnn.parameters(), lr=LR) # optimize all cnn parameters #Adam在week2&3有写

loss_func = nn.MSELoss()

h_state = None #初始化数据

plt.figure(1, figsize=(12, 5))

plt.ion()

for step in range(300):

start, end = step * np.pi, (step + 1)*np.pi # 开始和结束的范围,这里的pi代表π

# use sin predicts cos

steps = np.linspace(start, end, TIME_STEP, dtype=np.float32) # 生成 input 数据和 output 数据, TIME_STEP为10

x_np = np.sin(steps) #这里的steps指的是作图时候的横坐标

y_np = np.cos(steps)

x = torch.from_numpy(x_np[np.newaxis, :, np.newaxis]) # shape (batch, time_step, input_size) #np.newaxis是选取增加维度

y = torch.from_numpy(y_np[np.newaxis, :, np.newaxis])

prediction, h_state = rnn(x, h_state) #每个step输出的结果为prediction

# print(h_state)

h_state = h_state.data #传回去进行迭代

# print(h_state)

loss = loss_func(prediction, y)

optimizer.zero_grad()

loss.backward()

optimizer.step()

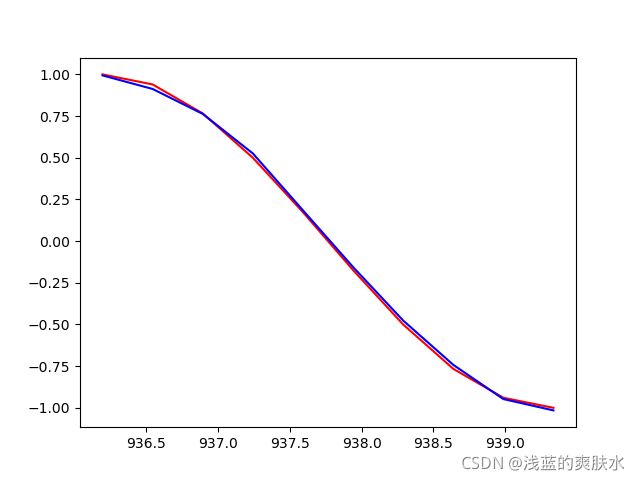

plt.plot(steps, y_np.flatten(), 'r-')

plt.plot(steps, prediction.data.numpy().flatten(), 'b-')

plt.draw()

plt.pause(0.05)

plt.ioff()

plt.show()

print('loss=%.4f' % loss.item())知识点:

1.torch.manual_seed(1)

【pytorch】torch.manual_seed()用法详解_XavierJ的博客-CSDN博客

2.batch_first=True

Pytorch的参数“batch_first”的理解 - 简书 (jianshu.com)

3.num_layers=1, #表示多少个rnn堆在一起,1就代表只有1个

pytorch中RNN参数的详细解释_lwgkzl的博客-CSDN博客_pytorch rnn

4.torch.append()

Python学习高光时刻记录1——(append,torch.zeros,torch.ones,torch.log,torch.from_numpy)_快乐的小虾米的博客-CSDN博客

5.torch.stack()

pytorch中torch.stack()函数总结_RealCoder的博客-CSDN博客_python torch.stack

6.torch.form_numpy()

pytorch中的torch.from_numpy()_tyler的博客-CSDN博客

7.np.newaxis()

关于python 中np.newaxis的用法_fei-CSDN博客_newaxis

8.flatten()

Python中flatten( )函数及函数用法详解 - yvonnes - 博客园 (cnblogs.com)

结果:

二.LSTM实现sin预测cos

1.代码

#coding=utf-8

import torch

from torch import nn

import numpy as np

import matplotlib.pyplot as plt

INPUT_SIZE=1

steps = np.linspace(0, np.pi*2, 100, dtype=np.float32) #0到100按照2π等分,开始到结束

input_x = np.sin(steps)

target_y = np.cos(steps)

plt.plot(steps, input_x, 'b-', label='input:sin')

plt.plot(steps, target_y, 'r-', label='target:cos')

plt.legend(loc='best') #设置图例位置

plt.show() #sin和cos的图像

class LSTM(nn.Module):

def __init__(self):

super(LSTM, self).__init__()

self.lstm = nn.LSTM(

input_size=INPUT_SIZE,

hidden_size=20,

batch_first=True,

)

self.out = nn.Linear(20, 1)

def forward(self, x, h_state,c_state):

r_out,(h_state,c_state) = self.lstm(x,(h_state,c_state)) #self.lstm()传入变量,然后输出r_out

outputs = self.out(r_out[0,:]).unsqueeze(0) #r_out输入线性神经网络层self.out() 得到outputs

return outputs,h_state,c_state

def InitHidden(self): #隐含单元初始化

h_state = torch.randn(1,1,20)

c_state = torch.randn(1,1,20)

return h_state,c_state

lstm = LSTM()

optimizer = torch.optim.Adam(lstm.parameters(), lr= 0.001)

loss_func = nn.MSELoss()

h_state,c_state = lstm.InitHidden()

plt.figure(1, figsize=(12, 5))

plt.ion()

for step in range(300):

start, end = step * np.pi, (step+1)*np.pi

steps = np.linspace(start, end, 100, dtype=np.float32)

x_np = np.sin(steps)

y_np = np.cos(steps)

x=torch.from_numpy(x_np).unsqueeze(0).unsqueeze(-1)

y=torch.from_numpy(y_np).unsqueeze(0).unsqueeze(-1)

prediction, h_state,c_state = lstm(x, h_state,c_state)

#防止程序自动计算隐藏向量的梯度值

h_state = h_state.data

c_state = c_state.data

loss = loss_func(prediction, y)

optimizer.zero_grad()

loss.backward()

optimizer.step()

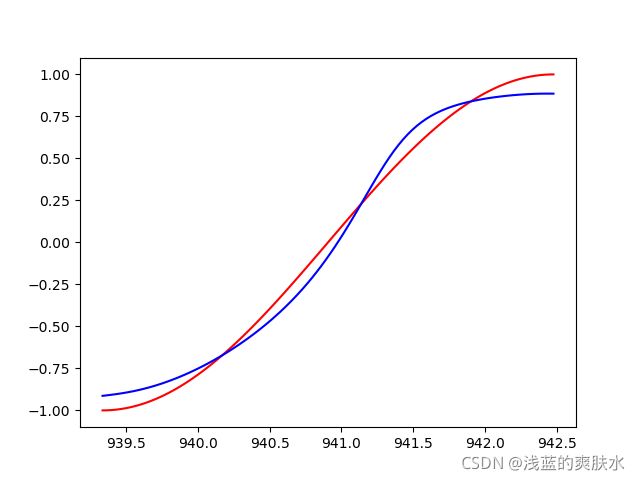

plt.plot(steps, y_np.flatten(), 'r-')

plt.plot(steps, prediction.data.numpy().flatten(), 'b-')

plt.draw(); plt.pause(0.05)

plt.ioff()

plt.show()

print('loss=%.4f' % loss.item())困惑:1.forward那里能不能改一下代码,可以看出程序运行的逻辑感?

2.def InitHidden(self): 不加可以么?

结果:

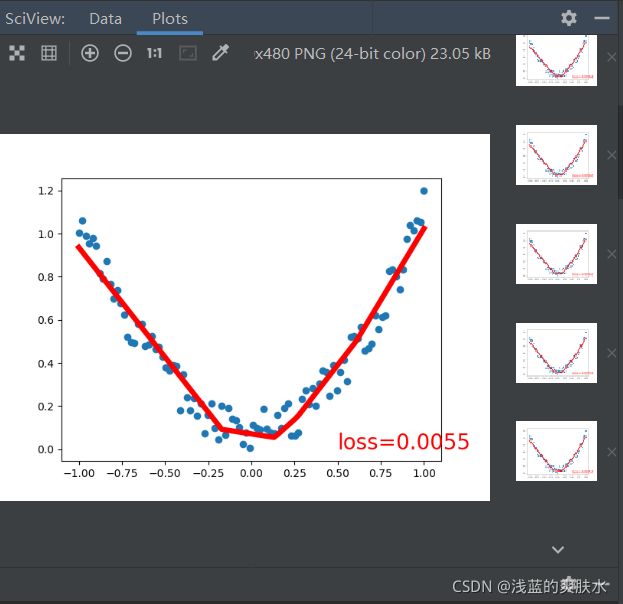

其他:线性网络示例代码:(看这个代码还挺有意思的,就拿过来了)

import torch

from torch.autograd import Variable

import torch.nn.functional as F

import matplotlib.pyplot as plt

x = torch.unsqueeze(torch.linspace(-1, 1, 100), dim=1) #input:生成-1开始,1结束,中间分为100步的一维张良,linspace代表等分 dim:在第二维(程序中的1代表第二维)增加1维

y = x.pow(2) + 0.2*torch.rand(x.size()) # 加入噪声

x, y = Variable(x), Variable(y) #tensor转变量

# plt.scatter(x.data.numpy(), y.data.numpy())

# plt.show()

# print(x)

# print(x.data)

# print(x.numpy())

# print(x.data.numpy())

class Net(torch.nn.Module):

def __init__(self, n_feature, n_hidden, n_output):

super(Net, self).__init__()

self.hidden = torch.nn.Linear(n_feature, n_hidden)

self.predict = torch.nn.Linear(n_hidden, n_output)

def forward(self, x):

x = F.relu(self.hidden(x))

x = self.predict(x)

return x

net = Net(1, 10, 1) # n_feature, n_hidden, n_output

plt.ion() # 由于程序默认阻塞模式,所以现在要打开交互模式ion

plt.show()

optimizer = torch.optim.SGD(net.parameters(), lr=0.5)

loss_func = torch.nn.MSELoss()

for t in range(100):

prediction = net(x)

loss = loss_func(prediction, y)

optimizer.zero_grad()

loss.backward()

optimizer.step()

if t % 5 == 0:

# plot and show learning process

plt.cla()

plt.scatter(x.data.numpy(), y.data.numpy())

plt.plot(x.data.numpy(), prediction.data.numpy(), 'r-', lw=5)

plt.text(0.5, 0, 'loss=%.4f' % loss.item(), fontdict={'size': 20, 'color': 'red'})

plt.pause(0.1)

plt.ioff()

plt.show()

知识点:

1.torch.linspace

PyTorch中torch.linspace的详细用法_WhiffeYF的博客-CSDN博客

2.torch.unsqueeze

python中的unsqueeze()和squeeze()函数 - OliYoung - 博客园 (cnblogs.com)

3.Variable(x)

Pytorch中Variable变量详解_h1239757443的博客-CSDN博客

4.plt.ion()

matplotlib的plt.ion()和plt.ioff()函数_yzy的博客-CSDN博客

5.plt.off():参考4

6.nn.linear():

PyTorch的nn.Linear()详解_风雪夜归人o的博客-CSDN博客_nn.linear

结果:(loss存在浮动)