PyTorch Deep Learning Quick-Start Tutorial

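- 1. Loading Data in PyTorch
- 2. Using TensorBoard
- 3. Using Transforms
- 4. Common Transforms
- 5. Using the Datasets in torchvision
- 6. Using DataLoader
- 7. The Basic Skeleton of a Neural Network: nn.Module
- 8. The Convolution Operation
- 9. Neural Networks: Convolution Layers
- 10. Neural Networks: Using Pooling Layers
- 11. Neural Networks: Non-linear Activations
- 12. Neural Networks: Linear Layers and Other Layers
- 13. Neural Networks: A Small Hands-on Build and Sequential
- 14. Loss Functions and Backpropagation
- 15. Optimizers
- 16. Using and Modifying Existing Network Models
- 17. Saving and Loading Network Models
- 18. A Complete Model Training Routine
- 19. Training on the GPU
- 20. A Complete Model Validation Routine
- Learning links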

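1. Loading Data in PyTorch

Dataset provides a way to fetch each sample together with its ground-truth label; this is the part we write ourselves. A subclass has to implement two things: 1) how to fetch each sample and its label (__getitem__), and 2) how many samples there are in total (__len__).

DataLoader packages the data from a Dataset into different forms, such as batches, for the network that follows.

from torch.utils.data import Dataset
from PIL import Image
import os


class MyData(Dataset):
    def __init__(self, root_dir, label_dir):
        # Attributes set on self act like variables shared by all methods of the class
        self.root_dir = root_dir
        self.label_dir = label_dir
        self.path = os.path.join(self.root_dir, self.label_dir)
        # File names of every image in the label folder
        self.img_path = os.listdir(self.path)

    def __getitem__(self, idx):
        img_name = self.img_path[idx]
        img_item_path = os.path.join(self.root_dir, self.label_dir, img_name)
        img = Image.open(img_item_path)
        # The folder name doubles as the label
        label = self.label_dir
        return img, label

    def __len__(self):
        return len(self.img_path)


root_dir = "dataset/train"
ants_label_dir = "ants"
ants_dataset = MyData(root_dir, ants_label_dir)
bees_label_dir = "bees"
bees_dataset = MyData(root_dir, bees_label_dir)

# Adding two datasets concatenates them
train_dataset = ants_dataset + bees_dataset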
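The same two methods can be tried without any image files on disk. The sketch below is only an illustration: ToyData and its sample strings are hypothetical names made up for this example. It also shows that adding two Dataset objects with + gives one concatenated dataset, which is what train_dataset relies on.

```python
from torch.utils.data import Dataset


class ToyData(Dataset):
    """Hypothetical in-memory dataset: every sample shares one label."""

    def __init__(self, samples, label):
        self.samples = list(samples)
        self.label = label

    def __getitem__(self, idx):
        # Return one (sample, label) pair, as MyData does for images
        return self.samples[idx], self.label

    def __len__(self):
        # Total number of samples
        return len(self.samples)


ants = ToyData(["ant_0", "ant_1", "ant_2"], "ants")
bees = ToyData(["bee_0", "bee_1"], "bees")

# Dataset.__add__ returns a torch.utils.data.ConcatDataset
combined = ants + bees

print(len(combined))  # samples in both datasets together
print(combined[0])    # first ant sample
print(combined[3])    # first bee sample: indices continue after the ants
```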

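2. Using TensorBoard

First install it with pip install tensorboard.

The TensorBoard interface can be opened in two ways: on the default port, for example tensorboard --logdir=logs, or on a port you specify, for example tensorboard --logdir=logs --port=6007.

A small TensorBoard exercise:

from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter("logs")

for i in range(100):
    # Arguments: tag, scalar value (y axis), global step (x axis)
    writer.add_scalar("y=2x", 2 * i, i)

writer.close()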

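When add_image is handed an image in a type it cannot handle, such as a PIL image, the approaches below solve the problem.

An image read with OpenCV is already a NumPy array. Install it with pip install opencv-python.

A PIL image can also be converted with NumPy.

Code for displaying an image in TensorBoard:

from torch.utils.tensorboard import SummaryWriter
import numpy as np
from PIL import Image

writer = SummaryWriter("logs")
image_path = "data/train/bees_image/16838648_415acd9e3f.jpg"
img_PIL = Image.open(image_path)
# Convert the PIL image to a NumPy array with shape (H, W, C)
img_array = np.array(img_PIL)
print(type(img_array))
print(img_array.shape)

# dataformats tells add_image that the channel axis comes last
writer.add_image("test", img_array, 2, dataformats='HWC')

for i in range(100):
    writer.add_scalar("y=2x", 2 * i, i)

writer.close()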
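add_image assumes a channel-first (C, H, W) layout unless dataformats says otherwise, while arrays built from PIL or OpenCV images are (H, W, C). A small sketch of the difference, using a made-up 4x6 array instead of a real photo:

```python
import numpy as np

# Hypothetical 4x6 RGB image laid out the way PIL/OpenCV arrays are: (H, W, C)
img_hwc = np.zeros((4, 6, 3), dtype=np.uint8)

# Moving the channel axis to the front gives the (C, H, W) layout
img_chw = img_hwc.transpose(2, 0, 1)

print(img_hwc.shape)
print(img_chw.shape)
```

Either pass the (H, W, C) array with dataformats='HWC', as the code above does, or transpose it first and use the default.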

3. Using Transforms

from PIL import Image
from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms

# Python usage -> the tensor data type
# Use transforms.ToTensor to look at two questions:
# 1. How is transforms used (in Python)?
# 2. Why do we need the Tensor data type?

# Absolute path: D:\shanda_python\2022_pytorch\Learning\dataset\train\ants_image\0013035.jpg
# Relative path: dataset/train/ants_image/0013035.jpg
img_path = "dataset/train/ants_image/0013035.jpg"
img = Image.open(img_path)

writer = SummaryWriter("logs")

# 1. How transforms is used (in Python)
tensor_trans = transforms.ToTensor()  # this returns a ToTensor object
tensor_img = tensor_trans(img)  # convert the PIL image to a Tensor
print(tensor_img.shape)

writer.add_image("Tensor_img", tensor_img)
writer.close()

4. Common Transforms

from PIL import Image
from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms

writer = SummaryWriter("logs")
img = Image.open("images/img.png")
print(img)

# ToTensor: convert the PIL image to a tensor
trans_totensor = transforms.ToTensor()
img_tensor = trans_totensor(img)
writer.add_image("ToTensor", img_tensor)

# Normalize: standardize each channel with a given mean and standard deviation
# Formula: output[channel] = (input[channel] - mean[channel]) / std[channel]
print(img_tensor[0][0][0])
trans_norm = transforms.Normalize([3, 2, 1, 5], [2, 3, 8, 7])  # the image read here has four channels (RGBA)
img_norm = trans_norm(img_tensor)
print(img_norm[0][0][0])
writer.add_image("Normalize", img_norm, 2)

# Resize
print(img.size)
trans_resize = transforms.Resize((512, 512))
# img (PIL) -> resize -> img_resize (PIL)
img_resize = trans_resize(img)
# img_resize (PIL) -> ToTensor -> img_resize (tensor)
img_resize = trans_totensor(img_resize)
writer.add_image("Resize", img_resize, 0)
print(img_resize.shape)

# Compose with a second Resize: chain two transforms together
# A single int resizes the shorter edge to 512 and keeps the aspect ratio
trans_resize_2 = transforms.Resize(512)
# PIL -> PIL -> tensor
trans_compose = transforms.Compose([trans_resize_2, trans_totensor])
img_resize_2 = trans_compose(img)
writer.add_image("Resize", img_resize_2, 1)

# RandomCrop: crop a random (500, 600) region, then convert to a tensor
trans_random = transforms.RandomCrop((500, 600))
trans_compose2 = transforms.Compose([trans_random, trans_totensor])
for i in range(10):
    img_crop = trans_compose2(img)
    writer.add_image("RandomCrop", img_crop, i)

writer.close()

Summary: learn each function mainly from the official documentation.
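The Normalize formula is easy to check by hand. The sketch below applies it to single values in plain Python; the mean and standard deviation of 0.5 are assumed example statistics, not the ones used in the code above. With them, the [0, 1] range that ToTensor produces maps to [-1, 1].

```python
def normalize(value, mean, std):
    """Apply output = (input - mean) / std to one channel value."""
    return (value - mean) / std


# Assumed example statistics for one channel
mean, std = 0.5, 0.5

lo = normalize(0.0, mean, std)   # darkest possible pixel
mid = normalize(0.5, mean, std)  # a pixel equal to the mean
hi = normalize(1.0, mean, std)   # brightest possible pixel
print(lo, mid, hi)
```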

5. Using the Datasets in torchvision

import torchvision
from torch.utils.tensorboard import SummaryWriter

# Set up the data transform; here it only converts PIL -> Tensor
dataset_transform = torchvision.transforms.Compose([
    torchvision.transforms.ToTensor()
])

# Before converting to Tensor
train_set = torchvision.datasets.CIFAR10(root="./dataset", train=True, download=True)
test_set = torchvision.datasets.CIFAR10(root="./dataset", train=False, download=True)

print(test_set[0])
print(test_set.classes)
img, target = test_set[0]
print(img)
print(target)
print(test_set.classes[target])
img.show()

# After converting to Tensor
train_set = torchvision.datasets.CIFAR10(root="./dataset", train=True, transform=dataset_transform, download=True)
test_set = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=dataset_transform, download=True)
# print(test_set[0])

writer = SummaryWriter("P14")
for i in range(10):
    img, target = test_set[i]
    writer.add_image("test_set", img, i)

writer.close()

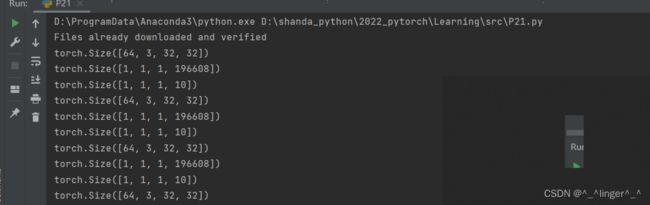

6. Using DataLoader

The main job of DataLoader is to load a dataset, such as the Dataset covered earlier, and feed it to the model in batches.

import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

test_set = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor(), download=True)

# shuffle: whether to shuffle the data (True to shuffle, False to keep the order)
# drop_last: whether to drop the final samples that cannot fill a batch (True to drop, False to keep)
test_loader = DataLoader(dataset=test_set, batch_size=64, shuffle=False, num_workers=0, drop_last=False)

# The first image in the test set and its target
img, target = test_set[0]
print(img.shape)
print(target)

writer = SummaryWriter("P15_dataloader")
for epoch in range(2):
    step = 0
    for data in test_loader:
        imgs, targets = data
        # print(imgs.shape)
        # print(targets)
        writer.add_images("Epoch: {}".format(epoch), imgs, step)
        step = step + 1

writer.close()
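The effect of drop_last can be worked out with plain arithmetic. The sketch below assumes the 10,000-image CIFAR10 test set and the batch size of 64 used above.

```python
import math

num_samples = 10000  # size of the CIFAR10 test set
batch_size = 64

# How many full batches fit, and how many samples are left over
full_batches, remainder = divmod(num_samples, batch_size)

batches_keep_last = math.ceil(num_samples / batch_size)  # drop_last=False
batches_drop_last = num_samples // batch_size            # drop_last=True

print(full_batches, remainder)
print(batches_keep_last, batches_drop_last)
```

With drop_last=False the final batch holds only the leftover samples, so any code that assumes exactly 64 images per batch has to handle it or set drop_last=True.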

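7. The Basic Skeleton of a Neural Network: nn.Module

import torch
from torch import nn


class Tudui(nn.Module):
    def __init__(self):
        super().__init__()

    def forward(self, input):
        # forward defines what happens when the module is called
        output = input + 1
        return output


tudui = Tudui()
x = torch.tensor(1.0)
output = tudui(x)  # calling the module runs forward
print(output)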

8. The Convolution Operation

import torch
import torch.nn.functional as F

input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]])

kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]])

# F.conv2d expects 4-D tensors: (batch, channels, height, width)
input = torch.reshape(input, (1, 1, 5, 5))
kernel = torch.reshape(kernel, (1, 1, 3, 3))

print(input.shape)
print(kernel.shape)

# stride=1: the kernel moves one position at a time
output = F.conv2d(input, kernel, stride=1)
print(output)

# stride=2: the kernel moves two positions at a time
output2 = F.conv2d(input, kernel, stride=2)
print(output2)

# padding=1: pad one ring of zeros around the input first
output3 = F.conv2d(input, kernel, stride=1, padding=1)
print(output3)
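To see what these calls compute, the sketch below redoes the operation in plain Python for one image and one square kernel. conv2d_single is a hypothetical helper written for this illustration, not a PyTorch function; it multiplies the kernel element-wise with each window and sums the products.

```python
def conv2d_single(image, kernel, stride=1, padding=0):
    """Slide one square 2-D kernel over one 2-D image (no flipping, as in F.conv2d)."""
    if padding:
        width = len(image[0]) + 2 * padding
        zero_row = [0] * width
        image = ([zero_row] * padding
                 + [[0] * padding + row + [0] * padding for row in image]
                 + [zero_row] * padding)
    k = len(kernel)
    out_h = (len(image) - k) // stride + 1
    out_w = (len(image[0]) - k) // stride + 1
    return [[sum(image[i * stride + a][j * stride + b] * kernel[a][b]
                 for a in range(k) for b in range(k))
             for j in range(out_w)]
            for i in range(out_h)]


image = [[1, 2, 0, 3, 1],
         [0, 1, 2, 3, 1],
         [1, 2, 1, 0, 0],
         [5, 2, 3, 1, 1],
         [2, 1, 0, 1, 1]]
kernel = [[1, 2, 1],
          [0, 1, 0],
          [2, 1, 0]]

out1 = conv2d_single(image, kernel, stride=1)
out2 = conv2d_single(image, kernel, stride=2)
out3 = conv2d_single(image, kernel, stride=1, padding=1)
print(out1)
print(out2)
print(len(out3), len(out3[0]))
```

For example, the top-left output value is 1*1 + 2*2 + 0*1 + 0*0 + 1*1 + 2*0 + 1*2 + 2*1 + 1*0 = 10.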

9. Neural Networks: Convolution Layers
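A minimal sketch of a convolution layer, offered only as an illustration: the channel counts and kernel size below are assumed values, not taken from the course. nn.Conv2d wraps the convolution operation from the previous section in a layer whose kernels are learnable weights.

```python
import torch
from torch import nn

# Assumed sizes for illustration: 3 input channels (RGB), 6 output channels
conv = nn.Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

# A fake batch of two 32x32 RGB images, shaped (N, C, H, W)
x = torch.ones((2, 3, 32, 32))
y = conv(x)

print(conv.weight.shape)  # one 3x3 kernel per (output channel, input channel) pair
print(y.shape)            # without padding, height and width shrink by kernel_size - 1
```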

10. Neural Networks: Using Pooling Layers

import torch
from torch import nn
from torch.nn import MaxPool2d
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10(root="../dataset", train=False, download=True,
                                       transform=torchvision.transforms.ToTensor())
dataloader = DataLoader(dataset, batch_size=64)

# input = torch.tensor([[1,2,0,3,1],
#                       [0,1,2,3,3],
#                       [1,2,1,0,0],
#                       [5,2,3,1,1],
#                       [2,1,0,1,1]],dtype=torch.float32)
#
# input = torch.reshape(input,(-1,1,5,5))
# print(input.shape)


# Build a neural network with a single max-pooling layer
class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        # ceil_mode=False drops windows that do not fit entirely inside the input
        self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=False)

    def forward(self, input):
        output = self.maxpool1(input)
        return output


tudui = Tudui()
# output = tudui(input)
# print(output)

writer = SummaryWriter("../logs_maxpool")
step = 0
for data in dataloader:
    imgs, targets = data
    writer.add_images("input", imgs, step)
    output = tudui(imgs)
    writer.add_images("output", output, step)
    step = step + 1

writer.close()
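The ceil_mode argument decides what happens to windows that hang over the edge of the input. The sketch below compares both settings on a small 5x5 tensor; the values are an assumed example chosen to keep the result easy to read.

```python
import torch
from torch.nn import MaxPool2d

x = torch.tensor([[1, 2, 0, 3, 1],
                  [0, 1, 2, 3, 1],
                  [1, 2, 1, 0, 0],
                  [5, 2, 3, 1, 1],
                  [2, 1, 0, 1, 1]], dtype=torch.float32).reshape(1, 1, 5, 5)

# stride defaults to kernel_size, so the 3x3 windows do not overlap
floor_out = MaxPool2d(kernel_size=3, ceil_mode=False)(x)  # incomplete windows are dropped
ceil_out = MaxPool2d(kernel_size=3, ceil_mode=True)(x)    # incomplete windows are kept

print(floor_out)
print(ceil_out)
```

With ceil_mode=False only the top-left 3x3 window fits, so the output is a single value. With ceil_mode=True the partial windows on the right and bottom edges also contribute their maxima.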

11. Neural Networks: Non-linear Activations

import torch
from torch.nn import Sigmoid
from torch import nn
from torch.nn import ReLU
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10(root="../dataset", train=False, download=True,
                                       transform=torchvision.transforms.ToTensor())
dataloader = DataLoader(dataset, batch_size=64)

# input = torch.tensor([[1,-0.5],
#                       [-1,3]])
# input = torch.reshape(input,(-1,1,2,2))
# print(input.shape)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.relu1 = ReLU()
        self.sigmoid1 = Sigmoid()

    def forward(self, input):
        # Sigmoid is applied here; swap in self.relu1 to try ReLU
        output = self.sigmoid1(input)
        return output


tudui = Tudui()
# output = tudui(input)
# print(output)

writer = SummaryWriter("../logs_relu")
step = 0
for data in dataloader:
    imgs, targets = data
    writer.add_images("input", imgs, step)
    output = tudui(imgs)
    writer.add_images("output", output, step)
    step += 1

writer.close()
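Both activations act on each element independently, which a few lines of plain Python can show. The sketch uses the same four numbers as the commented-out input above; relu and sigmoid here are hand-written stand-ins for illustration, not the PyTorch modules.

```python
import math


def relu(x):
    """ReLU keeps positive values and replaces negative ones with 0."""
    return max(0.0, x)


def sigmoid(x):
    """Sigmoid squashes any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


values = [[1.0, -0.5],
          [-1.0, 3.0]]

relu_out = [[relu(v) for v in row] for row in values]
sigmoid_out = [[sigmoid(v) for v in row] for row in values]
print(relu_out)
print(sigmoid_out)
```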

12. Neural Networks: Linear Layers and Other Layers

import torch
import torchvision
from torch import nn
from torch.nn import Linear
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10(root="../dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)
# drop_last=True: the final batch has fewer than 64 images and would not
# flatten to the 196608 features the linear layer expects
dataloader = DataLoader(dataset, batch_size=64, drop_last=True)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        # 196608 = 64 * 3 * 32 * 32, a whole batch flattened into one vector
        self.linear1 = Linear(196608, 10)

    def forward(self, input):
        output = self.linear1(input)
        return output


tudui = Tudui()

for data in dataloader:
    imgs, targets = data
    print(imgs.shape)
    # Flatten the whole batch into the last dimension
    output = torch.reshape(imgs, (1, 1, 1, -1))
    print(output.shape)
    output = tudui(output)
    print(output.shape)

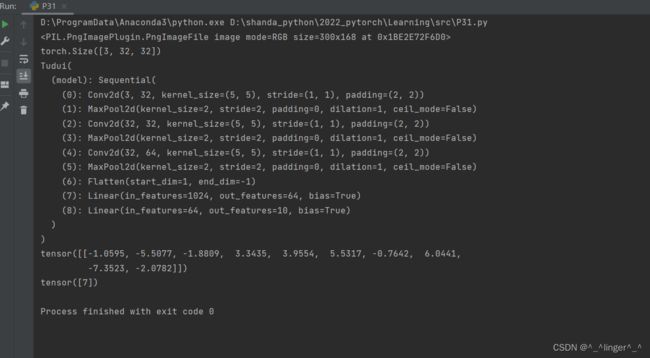

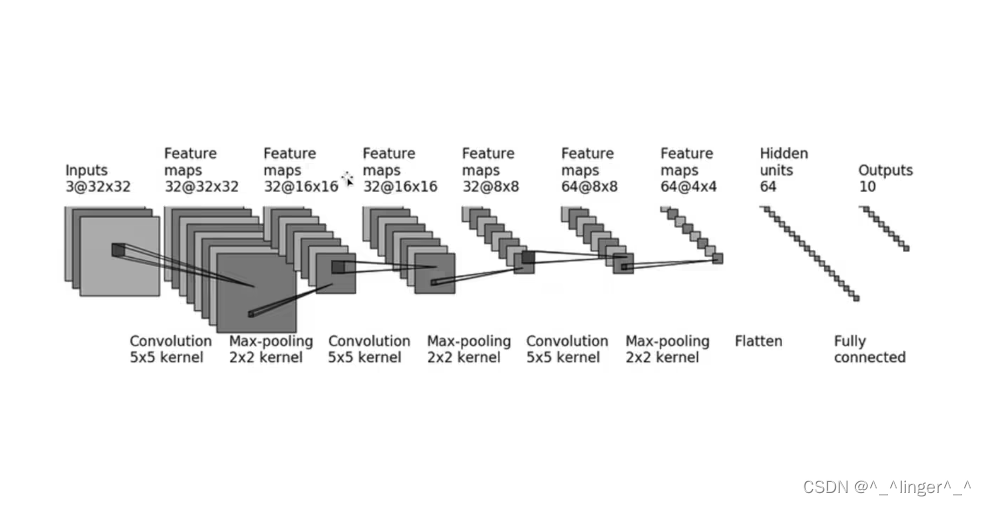

13. Neural Networks: A Small Hands-on Build and Sequential

import torch
import torchvision
from torch.utils.tensorboard import SummaryWriter
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear
import torch.nn as nn


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.conv1 = Conv2d(3, 32, 5, padding=2)
        self.maxpool1 = MaxPool2d(2)
        self.conv2 = Conv2d(32, 32, 5, padding=2)
        self.maxpool2 = MaxPool2d(2)
        self.conv3 = Conv2d(32, 64, 5, padding=2)
        self.maxpool3 = MaxPool2d(2)
        self.flatten = Flatten()
        self.linear1 = Linear(1024, 64)
        self.linear2 = Linear(64, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.maxpool1(x)
        x = self.conv2(x)
        x = self.maxpool2(x)
        x = self.conv3(x)
        x = self.maxpool3(x)
        x = self.flatten(x)
        x = self.linear1(x)
        x = self.linear2(x)
        return x


tudui = Tudui()
print(tudui)

# Check the network with a fake batch of 64 CIFAR10-sized images
input = torch.ones((64, 3, 32, 32))
output = tudui(input)
print(output.shape)

With Sequential, the code becomes more concise.

import torch
import torchvision
from torch.utils.tensorboard import SummaryWriter
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
import torch.nn as nn


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.mode1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.mode1(x)
        return x


tudui = Tudui()
print(tudui)

input = torch.ones((64, 3, 32, 32))
output = tudui(input)
print(output.shape)

# Visualize the network model
writer = SummaryWriter("../logs_seq")
writer.add_graph(tudui, input)
writer.close()

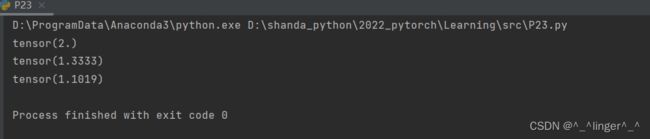
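The 1024 input features of the first linear layer follow from the layer sizes. The sketch below traces the feature-map shape through the three conv/pool pairs using the standard output-size formula; conv_out and pool_out are hypothetical helper names, and the formulas assume dilation 1 and, for pooling, the default stride with ceil_mode=False.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output height/width of a convolution."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel):
    """Output height/width of MaxPool2d(kernel) with its default stride."""
    return size // kernel


size = 32       # CIFAR10 images are 32x32
channels = 3
trace = []
for out_channels in (32, 32, 64):
    size = conv_out(size, kernel=5, padding=2)  # padding=2 keeps the size unchanged
    size = pool_out(size, kernel=2)             # pooling halves it
    channels = out_channels
    trace.append((channels, size, size))

flat_features = channels * size * size
print(trace)
print(flat_features)
```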

14. Loss Functions and Backpropagation

import torch
from torch.nn import L1Loss
from torch import nn

inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
targets = torch.tensor([1, 2, 5], dtype=torch.float32)

inputs = torch.reshape(inputs, (1, 1, 1, 3))
targets = torch.reshape(targets, (1, 1, 1, 3))

# L1 loss: absolute differences, summed because reduction='sum'
loss = L1Loss(reduction='sum')
result = loss(inputs, targets)
print(result)

# Mean squared error, averaged over all elements by default
loss_mse = nn.MSELoss()
result_mse = loss_mse(inputs, targets)
print(result_mse)

# Cross-entropy: x holds the raw scores for 3 classes, y is the target class index
x = torch.tensor([0.1, 0.2, 0.3])
y = torch.tensor([1])
x = torch.reshape(x, (1, 3))  # shape (batch, classes)
loss_cross = nn.CrossEntropyLoss()
result_cross = loss_cross(x, y)
print(result_cross)
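The three results can be reproduced by hand. The sketch below repeats the calculations in plain Python with the same numbers; for cross-entropy it uses the formula loss = -x[class] + log(sum_j exp(x[j])) for a single sample.

```python
import math

inputs = [1.0, 2.0, 3.0]
targets = [1.0, 2.0, 5.0]

# L1 loss with reduction='sum': |1-1| + |2-2| + |3-5|
l1_sum = sum(abs(a - b) for a, b in zip(inputs, targets))

# MSE with the default mean reduction: (0 + 0 + 2**2) / 3
mse_mean = sum((a - b) ** 2 for a, b in zip(inputs, targets)) / len(inputs)

# Cross-entropy for raw scores [0.1, 0.2, 0.3] and target class 1
logits = [0.1, 0.2, 0.3]
target_class = 1
cross_entropy = -logits[target_class] + math.log(sum(math.exp(v) for v in logits))

print(l1_sum, mse_mean, cross_entropy)
```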

15. Optimizers

import torch.optim
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear
import torchvision
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10(root="../dataset", train=True, transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.mode1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.mode1(x)
        return x


loss = nn.CrossEntropyLoss()
tudui = Tudui()
optim = torch.optim.SGD(tudui.parameters(), lr=0.01)

for epoch in range(20):
    running_loss = 0.0
    for data in dataloader:
        imgs, targets = data
        outputs = tudui(imgs)
        result_loss = loss(outputs, targets)
        optim.zero_grad()       # clear the gradients from the previous step
        result_loss.backward()  # compute new gradients
        optim.step()            # update the parameters
        # .item() adds a plain float, so the computation graph is not kept alive
        running_loss += result_loss.item()
        # print(outputs)
        # print(result_loss)
    print(running_loss)
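The zero_grad, backward, step cycle is easier to follow on a problem with one parameter. The sketch below is an assumed toy example, unrelated to CIFAR10: SGD minimizes (w - 3)^2, so w should move toward 3.

```python
import torch

# One learnable parameter, starting at 0
w = torch.tensor(0.0, requires_grad=True)
optimizer = torch.optim.SGD([w], lr=0.1)

for _ in range(50):
    loss = (w - 3.0) ** 2
    optimizer.zero_grad()  # clear the old gradient
    loss.backward()        # d(loss)/dw = 2 * (w - 3)
    optimizer.step()       # w <- w - lr * gradient

final_w = w.item()
print(final_w)
```

Each step shrinks the distance to 3 by a factor of 0.8 here, which is why a few dozen steps are enough.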

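16. Using and Modifying Existing Network Models

import torchvision
from torch import nn

# pretrained=False builds the architecture only; pretrained=True also downloads ImageNet weights
# (newer torchvision releases deprecate this argument in favor of weights=...)
vgg16_false = torchvision.models.vgg16(pretrained=False)
vgg16_true = torchvision.models.vgg16(pretrained=True)
print(vgg16_true)

train_data = torchvision.datasets.CIFAR10(root="../dataset", train=True,
                                          transform=torchvision.transforms.ToTensor(),
                                          download=True)

# Append a linear layer to the VGG16 classifier: 1000 ImageNet classes -> 10 CIFAR10 classes
vgg16_true.classifier.add_module('add_linear', nn.Linear(1000, 10))
print(vgg16_true)

print(vgg16_false)
# Alternatively, replace the last classifier layer so it outputs 10 classes directly
vgg16_false.classifier[6] = nn.Linear(4096, 10)
print(vgg16_false)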

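17. Saving and Loading Network Models

import torch
import torchvision

vgg16 = torchvision.models.vgg16()

# Save method 1: save the model structure together with its parameters
torch.save(vgg16, "vgg16_method1.pth")

# Save method 2: save only the model parameters (officially recommended)
torch.save(vgg16.state_dict(), "vgg16_method2.pth")

# Loading, in a separate script
import torch
import torchvision

# Method 1 -> load a model saved with save method 1
# (recent PyTorch releases default to weights_only=True, so a whole pickled
# model may need torch.load(..., weights_only=False))
model = torch.load("vgg16_method1.pth")
print(model)

# Method 2 -> load a model saved with save method 2
model = torch.load("vgg16_method2.pth")
print(model)  # the output is only the parameters

vgg16 = torchvision.models.vgg16()
vgg16.load_state_dict(torch.load("vgg16_method2.pth"))
print(vgg16)  # the parameters have been loaded into the model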
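The state_dict round trip can be tried without downloading VGG16 or writing files. The sketch below is an assumed stand-in: it uses a small nn.Linear layer and an in-memory buffer in place of a .pth file, then checks that the restored layer holds the same parameters.

```python
import io

import torch
from torch import nn

source = nn.Linear(4, 2)

# Save only the parameters, as in save method 2 (a buffer stands in for the file)
buffer = io.BytesIO()
torch.save(source.state_dict(), buffer)
buffer.seek(0)

# Rebuild the same architecture, then load the saved parameters into it
restored = nn.Linear(4, 2)
restored.load_state_dict(torch.load(buffer))

same_weights = torch.equal(source.weight, restored.weight)
same_bias = torch.equal(source.bias, restored.bias)
print(same_weights, same_bias)
```

Because only tensors are stored, the loading side must construct the model class itself before calling load_state_dict.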

18. A Complete Model Training Routine

train.py

import torch
import torchvision
from torch.utils.tensorboard import SummaryWriter
from model import *
from torch import nn
from torch.utils.data import DataLoader

'''
A complete model training routine
'''

# Prepare the datasets
train_data = torchvision.datasets.CIFAR10(root="../dataset", train=True, transform=torchvision.transforms.ToTensor(),
                                          download=True)
test_data = torchvision.datasets.CIFAR10(root="../dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                         download=True)

# Dataset lengths
train_data_size = len(train_data)
test_data_size = len(test_data)
# e.g. if train_data_size = 10, this prints "Training set size: 10"
print("Training set size: {}".format(train_data_size))
print("Test set size: {}".format(test_data_size))

# Load the datasets with DataLoader
train_dataloader = DataLoader(train_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)

# Create the network model
tudui = Tudui()

# Loss function
loss_fn = nn.CrossEntropyLoss()

# Optimizer
learning_rate = 0.01
optimizer = torch.optim.SGD(tudui.parameters(), lr=learning_rate)

# Training bookkeeping
# Number of training steps so far
total_train_step = 0
# Number of test rounds so far
total_test_step = 0
# Number of epochs
epoch = 10

# Add TensorBoard
writer = SummaryWriter("../logs_train")

for i in range(epoch):
    print("------ Epoch {} training starts ------".format(i + 1))

    # Training phase
    tudui.train()  # put the network in training mode
    for data in train_dataloader:
        imgs, targets = data
        outputs = tudui(imgs)
        loss = loss_fn(outputs, targets)

        # Let the optimizer update the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step = total_train_step + 1
        if total_train_step % 100 == 0:
            print("Training step: {}, Loss: {}".format(total_train_step, loss.item()))
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Test phase
    tudui.eval()  # put the network in evaluation mode
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            outputs = tudui(imgs)
            loss = loss_fn(outputs, targets)
            total_test_loss = total_test_loss + loss.item()
            # Count the predictions whose highest-scoring class matches the target
            accuracy = (outputs.argmax(1) == targets).sum()
            total_accuracy = total_accuracy + accuracy

    print("Total loss on the test set: {}".format(total_test_loss))
    print("Accuracy on the test set: {}".format(total_accuracy / test_data_size))
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    writer.add_scalar("test_accuracy", total_accuracy / test_data_size, total_test_step)
    total_test_step = total_test_step + 1

    # Save the whole model and, separately, only its parameters
    torch.save(tudui, "tudui_{}.pth".format(i + 1))
    torch.save(tudui.state_dict(), "tudui_{}".format(i + 1))
    print("Model saved!")

writer.close()

model.py

import torch
from torch import nn


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model = nn.Sequential(
            # Positional arguments: in_channels, out_channels, kernel_size, stride, padding
            nn.Conv2d(3, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Flatten(),
            nn.Linear(64 * 4 * 4, 64),
            nn.Linear(64, 10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


if __name__ == '__main__':
    # Quick shape check with a fake batch
    tudui = Tudui()
    input = torch.ones((64, 3, 32, 32))
    output = tudui(input)
    print(output.shape)
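The accuracy line in train.py, (outputs.argmax(1) == targets).sum(), is compact enough to deserve a closer look. The sketch below walks through it on made-up scores for three samples and two classes.

```python
import torch

# Made-up scores: one row per sample, one column per class
outputs = torch.tensor([[0.1, 0.2],
                        [0.3, 0.4],
                        [0.9, 0.1]])
targets = torch.tensor([0, 1, 0])

preds = outputs.argmax(1)                      # index of the highest score in each row
num_correct = (preds == targets).sum().item()  # True counts as 1
accuracy = num_correct / len(targets)

print(preds)
print(num_correct, accuracy)
```

Summing these counts over all test batches and dividing by the test set size gives the accuracy that train.py reports.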

19. Training on the GPU

If your computer has no GPU, you can train on one through Google Colab: create a new notebook and paste the code in.

Code 1 (call .cuda() on the model, the loss function, and each batch of data):

import torch
import torchvision
from torch.utils.tensorboard import SummaryWriter
from torch import nn
from torch.utils.data import DataLoader
import time

'''
A complete model training routine
'''


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Flatten(),
            nn.Linear(64 * 4 * 4, 64),
            nn.Linear(64, 10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


# Prepare the datasets
train_data = torchvision.datasets.CIFAR10(root="../dataset", train=True, transform=torchvision.transforms.ToTensor(),
                                          download=True)
test_data = torchvision.datasets.CIFAR10(root="../dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                         download=True)

# Dataset lengths
train_data_size = len(train_data)
test_data_size = len(test_data)
# e.g. if train_data_size = 10, this prints "Training set size: 10"
print("Training set size: {}".format(train_data_size))
print("Test set size: {}".format(test_data_size))

# Load the datasets with DataLoader
train_dataloader = DataLoader(train_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)

# Create the network model and move it to the GPU if one is available
tudui = Tudui()
if torch.cuda.is_available():
    tudui = tudui.cuda()

# Loss function
loss_fn = nn.CrossEntropyLoss()
if torch.cuda.is_available():
    loss_fn = loss_fn.cuda()

# Optimizer
learning_rate = 0.01
optimizer = torch.optim.SGD(tudui.parameters(), lr=learning_rate)

# Training bookkeeping
# Number of training steps so far
total_train_step = 0
# Number of test rounds so far
total_test_step = 0
# Number of epochs
epoch = 10

# Add TensorBoard
writer = SummaryWriter("../logs_train")
start_time = time.time()

for i in range(epoch):
    print("------ Epoch {} training starts ------".format(i + 1))

    # Training phase
    tudui.train()  # put the network in training mode
    for data in train_dataloader:
        imgs, targets = data
        if torch.cuda.is_available():
            imgs = imgs.cuda()
            targets = targets.cuda()
        outputs = tudui(imgs)
        loss = loss_fn(outputs, targets)

        # Let the optimizer update the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step = total_train_step + 1
        if total_train_step % 100 == 0:
            end_time = time.time()
            print(end_time - start_time)  # seconds elapsed since training began
            print("Training step: {}, Loss: {}".format(total_train_step, loss.item()))
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Test phase
    tudui.eval()  # put the network in evaluation mode
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            if torch.cuda.is_available():
                imgs = imgs.cuda()
                targets = targets.cuda()
            outputs = tudui(imgs)
            loss = loss_fn(outputs, targets)
            total_test_loss = total_test_loss + loss.item()
            accuracy = (outputs.argmax(1) == targets).sum()
            total_accuracy = total_accuracy + accuracy

    print("Total loss on the test set: {}".format(total_test_loss))
    print("Accuracy on the test set: {}".format(total_accuracy / test_data_size))
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    writer.add_scalar("test_accuracy", total_accuracy / test_data_size, total_test_step)
    total_test_step = total_test_step + 1

    torch.save(tudui, "tudui_{}.pth".format(i + 1))
    torch.save(tudui.state_dict(), "tudui_{}".format(i + 1))
    print("Model saved!")

writer.close()

Code 2 (define a device once and move everything to it with .to(device)):

import torch
import torchvision
from torch.utils.tensorboard import SummaryWriter
from torch import nn
from torch.utils.data import DataLoader
import time

'''
A complete model training routine
'''


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Flatten(),
            nn.Linear(64 * 4 * 4, 64),
            nn.Linear(64, 10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


# Prepare the datasets
train_data = torchvision.datasets.CIFAR10(root="../dataset", train=True, transform=torchvision.transforms.ToTensor(),
                                          download=True)
test_data = torchvision.datasets.CIFAR10(root="../dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                         download=True)

# Define the training device
# "cuda:0" requires a GPU; the last commented-out form falls back to the CPU when none is available
device = torch.device("cuda:0")
# device = torch.device("cuda")
# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(device)

# Dataset lengths
train_data_size = len(train_data)
test_data_size = len(test_data)
# e.g. if train_data_size = 10, this prints "Training set size: 10"
print("Training set size: {}".format(train_data_size))
print("Test set size: {}".format(test_data_size))

# Load the datasets with DataLoader
train_dataloader = DataLoader(train_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)

# Create the network model
tudui = Tudui()
tudui = tudui.to(device)

# Loss function
loss_fn = nn.CrossEntropyLoss()
loss_fn = loss_fn.to(device)

# Optimizer
learning_rate = 0.01
optimizer = torch.optim.SGD(tudui.parameters(), lr=learning_rate)

# Training bookkeeping
# Number of training steps so far
total_train_step = 0
# Number of test rounds so far
total_test_step = 0
# Number of epochs
epoch = 10

# Add TensorBoard
writer = SummaryWriter("../logs_train")
start_time = time.time()

for i in range(epoch):
    print("------ Epoch {} training starts ------".format(i + 1))

    # Training phase
    tudui.train()  # put the network in training mode
    for data in train_dataloader:
        imgs, targets = data
        imgs = imgs.to(device)
        targets = targets.to(device)
        outputs = tudui(imgs)
        loss = loss_fn(outputs, targets)

        # Let the optimizer update the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step = total_train_step + 1
        if total_train_step % 100 == 0:
            end_time = time.time()
            print(end_time - start_time)  # seconds elapsed since training began
            print("Training step: {}, Loss: {}".format(total_train_step, loss.item()))
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Test phase
    tudui.eval()  # put the network in evaluation mode
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            imgs = imgs.to(device)
            targets = targets.to(device)
            outputs = tudui(imgs)
            loss = loss_fn(outputs, targets)
            total_test_loss = total_test_loss + loss.item()
            accuracy = (outputs.argmax(1) == targets).sum()
            total_accuracy = total_accuracy + accuracy

    print("Total loss on the test set: {}".format(total_test_loss))
    print("Accuracy on the test set: {}".format(total_accuracy / test_data_size))
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    writer.add_scalar("test_accuracy", total_accuracy / test_data_size, total_test_step)
    total_test_step = total_test_step + 1

    torch.save(tudui, "tudui_{}.pth".format(i + 1))
    torch.save(tudui.state_dict(), "tudui_{}".format(i + 1))
    print("Model saved!")

writer.close()
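The .to(device) pattern can be checked on any machine with a tiny stand-in model. The sketch below is an assumed minimal example, with nn.Linear replacing the Tudui network: it picks the GPU when one is available and falls back to the CPU otherwise, so the same script runs in both cases.

```python
import torch
from torch import nn

# Pick the GPU when available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = nn.Linear(8, 2).to(device)      # move the parameters to the device
batch = torch.ones((4, 8)).to(device)   # the data must live on the same device

with torch.no_grad():
    out = model(batch)

print(device, out.device, out.shape)
```

A model and its input on different devices raise a runtime error, which is why every batch in the training loop is moved before the forward pass.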

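20. A Complete Model Validation Routine

import torch
from PIL import Image
import torchvision
from torch import nn

image_path = "../images/dog.png"
image = Image.open(image_path)
print(image)
# PNG files may carry an alpha channel; keep only the three RGB channels
image = image.convert("RGB")

# Resize to the 32x32 input the network was trained on, then convert to a tensor
transform = torchvision.transforms.Compose([torchvision.transforms.Resize((32, 32)),
                                            torchvision.transforms.ToTensor()])
image = transform(image)
print(image.shape)


# The class definition must be available to load a model saved as a whole
class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Flatten(),
            nn.Linear(64 * 4 * 4, 64),
            nn.Linear(64, 10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


# map_location lets a model trained on the GPU be loaded on a CPU-only machine
# (recent PyTorch releases may also need weights_only=False for a whole pickled model)
model = torch.load("tudui_30.pth", map_location=torch.device('cpu'))
print(model)

# Add the batch dimension: (3, 32, 32) -> (1, 3, 32, 32)
image = torch.reshape(image, (1, 3, 32, 32))
model.eval()
with torch.no_grad():
    output = model(image)
print(output)

# Index of the class with the highest score
print(output.argmax(1))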
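The printed index only becomes meaningful once it is mapped to a class name. The sketch below uses the CIFAR10 class order as exposed by the dataset's classes attribute in torchvision; the ten scores are made-up numbers standing in for a real model output.

```python
# CIFAR10 class names in index order
cifar10_classes = ["airplane", "automobile", "bird", "cat", "deer",
                   "dog", "frog", "horse", "ship", "truck"]

# Made-up scores for one image, one value per class
scores = [0.1, -1.2, 0.3, 2.0, 0.0, 3.5, -0.4, 0.2, 0.1, -2.0]

# Same idea as output.argmax(1): the position of the largest score
predicted_index = max(range(len(scores)), key=scores.__getitem__)
predicted_name = cifar10_classes[predicted_index]

print(predicted_index, predicted_name)
```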

Learning links

- Code: https://github.com/xiaotudui/pytorch-tutorial

- Ants/bees practice dataset: https://pan.baidu.com/s/1jZoTmoFzaTLWh4lKBHVbEA (password: 5suq)

- Course resources: https://pan.baidu.com/s/1CvTIjuXT4tMonG0WltF-vQ?pwd=jnnp (extraction code: jnnp)

- PyTorch official website