强化学习笔记_7_策略学习中的Baseline

1. Policy Gradient with Baseline

1.1 Policy Gradient

-

policy network π ( a ∣ s ; θ ) \pi(a|s;\theta) π(a∣s;θ)

-

State-value function:

V π ( s ) = E A ∼ π [ Q π ( s , A ) ] = ∑ a π ( s ∣ s ; θ ) ⋅ Q π ( s , a ) \begin{aligned} V_\pi(s)&=E_{A\sim\pi}[Q_\pi(s,A)] \\&=\sum_a\pi(s|s;\theta)\cdot Q_\pi(s,a) \end{aligned} Vπ(s)=EA∼π[Qπ(s,A)]=a∑π(s∣s;θ)⋅Qπ(s,a) -

Policy gradient:

∂ V π ( s ) ∂ θ = E A ∼ π [ ∂ ln π ( A ∣ s ; θ ) ∂ θ ⋅ Q π ( s , A ) ] \frac{\partial V_\pi(s)}{\partial\theta}=E_{A\sim\pi}[\frac{\partial \ln\pi(A|s;\theta)}{\partial\theta}\cdot Q_\pi(s,A)] ∂θ∂Vπ(s)=EA∼π[∂θ∂lnπ(A∣s;θ)⋅Qπ(s,A)]

1.2. Baseline in Policy Gradient

-

Baseline 函数 b b b,不依赖于动作 A A A

-

性质:如果 b b b与 A A A无关,则 E A ∼ π [ b ⋅ ∂ ln π ( A ∣ s ; θ ) ∂ θ ] = 0 E_{A\sim\pi}[b\cdot \frac{\partial\ln\pi(A|s;\theta)}{\partial\theta}]=0 EA∼π[b⋅∂θ∂lnπ(A∣s;θ)]=0

E A ∼ π [ b ⋅ ∂ ln π ( A ∣ s ; θ ) ∂ θ ] = b ⋅ E A ∼ π [ ∂ ln π ( A ∣ s ; θ ) ∂ θ ] = b ⋅ ∑ a π ( a ∣ s ; θ ) ⋅ ∂ ln π ( a ∣ s ; θ ) ∂ θ = b ⋅ ∑ a π ( a ∣ s ; θ ) ⋅ 1 π ( a ∣ s ; θ ) ∂ π ( a ∣ s ; θ ) ∂ θ = b ⋅ ∑ a ∂ π ( a ∣ s ; θ ) ∂ θ = b ⋅ ∂ ∑ a π ( a ∣ s ; θ ) ∂ θ = b ⋅ 1 ∂ θ = 0 \begin{aligned} E_{A\sim\pi}[b\cdot \frac{\partial\ln\pi(A|s;\theta)}{\partial\theta}]&= b\cdot E_{A\sim\pi}[\frac{\partial\ln\pi(A|s;\theta)}{\partial\theta}] \\&=b\cdot \sum_a\pi(a|s;\theta)\cdot \frac{\partial\ln\pi(a|s;\theta)}{\partial\theta} \\&=b\cdot \sum_a\pi(a|s;\theta)\cdot \frac{1}{\pi(a|s;\theta)}\frac{\partial\pi(a|s;\theta)}{\partial\theta} \\&=b\cdot \sum_a\frac{\partial\pi(a|s;\theta)}{\partial\theta} \\&=b\cdot \frac{\partial\sum_a\pi(a|s;\theta)}{\partial\theta} \\&=b\cdot \frac{1}{\partial\theta} \\&=0 \end{aligned} EA∼π[b⋅∂θ∂lnπ(A∣s;θ)]=b⋅EA∼π[∂θ∂lnπ(A∣s;θ)]=b⋅a∑π(a∣s;θ)⋅∂θ∂lnπ(a∣s;θ)=b⋅a∑π(a∣s;θ)⋅π(a∣s;θ)1∂θ∂π(a∣s;θ)=b⋅a∑∂θ∂π(a∣s;θ)=b⋅∂θ∂∑aπ(a∣s;θ)=b⋅∂θ1=0 -

policy gradient

∂ V π ( s ) ∂ θ = E A ∼ π [ ∂ ln ( π ( A ∣ s ; θ ) ) ∂ θ ⋅ Q π ( s , A ) ] = E A ∼ π [ ∂ ln ( π ( A ∣ s ; θ ) ) ∂ θ ⋅ Q π ( s , A ) ] − E A ∼ π [ b ⋅ ∂ ln π ( A ∣ s ; θ ) ∂ θ ] = E A ∼ π [ ∂ ln ( π ( A ∣ s ; θ ) ) ∂ θ ⋅ ( Q π ( s , A ) − b ) ] \begin{aligned} \frac{\partial V_\pi(s)}{\partial\theta} &=E_{A\sim\pi}[\frac{\partial \ln(\pi(A|s;\theta))}{\partial\theta}·Q_\pi(s,A)] \\&=E_{A\sim\pi}[\frac{\partial \ln(\pi(A|s;\theta))}{\partial\theta}·Q_\pi(s,A)] -E_{A\sim\pi}[b\cdot \frac{\partial\ln\pi(A|s;\theta)}{\partial\theta}] \\&=E_{A\sim\pi}[\frac{\partial \ln(\pi(A|s;\theta))}{\partial\theta}·(Q_\pi(s,A)-b)] \end{aligned} ∂θ∂Vπ(s)=EA∼π[∂θ∂ln(π(A∣s;θ))⋅Qπ(s,A)]=EA∼π[∂θ∂ln(π(A∣s;θ))⋅Qπ(s,A)]−EA∼π[b⋅∂θ∂lnπ(A∣s;θ)]=EA∼π[∂θ∂ln(π(A∣s;θ))⋅(Qπ(s,A)−b)]

即:

∂ V π ( s t ) ∂ θ = E A ∼ π [ ∂ ln ( π ( A t ∣ s t ; θ ) ) ∂ θ ⋅ ( Q π ( s t , A t ) − b ) ] \begin{aligned} \frac{\partial V_\pi(s_t)}{\partial\theta} =E_{A\sim\pi}[\frac{\partial \ln(\pi(A_t|s_t;\theta))}{\partial\theta}·(Q_\pi(s_t,A_t)-b)] \end{aligned} ∂θ∂Vπ(st)=EA∼π[∂θ∂ln(π(At∣st;θ))⋅(Qπ(st,At)−b)]

在实际计算中常使用蒙特卡洛近似, b b b不会影响方差,但是会影响蒙特卡洛近似,合理取值可以减小蒙特卡洛的方差,使收敛更快。

1.3. Monte Carlo Approximation

g ( A t ) = ∂ ln ( π ( A t ∣ s t ; θ ) ) ∂ θ ⋅ ( Q π ( s t , A t ) − b ) ∂ V π ( s t ) ∂ θ = E A ∼ π [ ∂ ln ( π ( A t ∣ s t ; θ ) ) ∂ θ ⋅ ( Q π ( s t , A t ) − b ) ] = E A ∼ π [ g ( A t ) ] \begin{aligned} g(A_t)&=\frac{\partial \ln(\pi(A_t|s_t;\theta))}{\partial\theta}·(Q_\pi(s_t,A_t)-b) \\\frac{\partial V_\pi(s_t)}{\partial\theta} &=E_{A\sim\pi}[\frac{\partial \ln(\pi(A_t|s_t;\theta))}{\partial\theta}·(Q_\pi(s_t,A_t)-b)] \\&=E_{A\sim\pi}[g(A_t)] \end{aligned} g(At)∂θ∂Vπ(st)=∂θ∂ln(π(At∣st;θ))⋅(Qπ(st,At)−b)=EA∼π[∂θ∂ln(π(At∣st;θ))⋅(Qπ(st,At)−b)]=EA∼π[g(At)]

以概率密度函数 a t ∼ π ( ⋅ ∣ s t ; θ ) a_t\sim\pi(·|s_t;\theta) at∼π(⋅∣st;θ)抽样得到行动 a t a_t at,计算得到 g ( a t ) g(a_t) g(at)为其期望的蒙特卡洛近似,也是对策略梯度的一个无偏估计。

- Stochastic Policy Gradient(梯度上升)

g ( a t ) = ∂ ln ( π ( a t ∣ s t ; θ ) ) ∂ θ ⋅ ( Q π ( s t , a t ) − b ) θ ← θ + β ⋅ g ( a t ) \begin{aligned} g(a_t)&=\frac{\partial \ln(\pi(a_t|s_t;\theta))}{\partial\theta}·(Q_\pi(s_t,a_t)-b) \\\theta&\leftarrow \theta+\beta\cdot g(a_t) \end{aligned} g(at)θ=∂θ∂ln(π(at∣st;θ))⋅(Qπ(st,at)−b)←θ+β⋅g(at)

b b b与 A t A_t At无关,故不会影响 g ( A t ) g(A_t) g(At)的期望,但是会影响其方差。如果选取的 b b b很接近于 Q π Q_\pi Qπ,则方差会很小。

1.4. Choices of Baseline

-

Choice 1: b = 0 b=0 b=0,不使用Baseline

-

Choice 2: b b b is state-value, b = V π ( s t ) b=V_\pi(s_t) b=Vπ(st)

状态 s t s_t st是先于 A t A_t At被观测到的,于是和 A t A_t At无关。

(有点像Dueling network,使用优势函数)

2. Reinforce with Baseline

2.1. Policy Gradient

使用 V π ( s t ) V_\pi(s_t) Vπ(st)作为Baseline:

g ( A t ) = ∂ ln ( π ( A t ∣ s t ; θ ) ) ∂ θ ⋅ ( Q π ( s t , A t ) − V π ( s t ) ) ∂ V π ( s t ) ∂ θ = E A ∼ π [ ∂ ln ( π ( A t ∣ s t ; θ ) ) ∂ θ ⋅ ( Q π ( s t , A t ) − V π ( s t ) ) ] = E A ∼ π [ g ( A t ) ] \begin{aligned} g(A_t)&=\frac{\partial \ln(\pi(A_t|s_t;\theta))}{\partial\theta}·(Q_\pi(s_t,A_t)-V_\pi(s_t)) \\\frac{\partial V_\pi(s_t)}{\partial\theta} &=E_{A\sim\pi}[\frac{\partial \ln(\pi(A_t|s_t;\theta))}{\partial\theta}·(Q_\pi(s_t,A_t)-V_\pi(s_t))] \\&=E_{A\sim\pi}[g(A_t)] \end{aligned} g(At)∂θ∂Vπ(st)=∂θ∂ln(π(At∣st;θ))⋅(Qπ(st,At)−Vπ(st))=EA∼π[∂θ∂ln(π(At∣st;θ))⋅(Qπ(st,At)−Vπ(st))]=EA∼π[g(At)]

2.2. Approximation

-

随机抽样得到行动 a t ∼ π ( ⋅ ∣ s t ; θ ) a_t\sim\pi(·|s_t;\theta) at∼π(⋅∣st;θ),得到Stochastic Policy Gradient:

g ( a t ) = ∂ ln ( π ( a t ∣ s t ; θ ) ) ∂ θ ⋅ ( Q π ( s t , a t ) − V π ( s t ) ) g(a_t)=\frac{\partial \ln(\pi(a_t|s_t;\theta))}{\partial\theta}·(Q_\pi(s_t,a_t)-V_\pi(s_t)) g(at)=∂θ∂ln(π(at∣st;θ))⋅(Qπ(st,at)−Vπ(st)) -

对 Q π ( s t , a t ) Q_\pi(s_t,a_t) Qπ(st,at)近似

Q π ( s t , a t ) = E [ U t ∣ s t , a t ] Q_\pi(s_t,a_t)=E[U_t|s_t,a_t] Qπ(st,at)=E[Ut∣st,at]

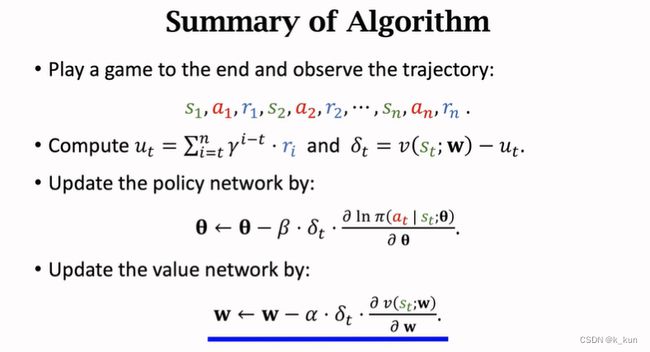

使用观测到的回报 u t u_t ut近似 Q π ( s t , a t ∼ u t ) Q_\pi(s_t,a_t\sim u_t) Qπ(st,at∼ut):- 观测到一条完整轨迹: s t , a t , r t , s t + 1 , a t + 1 , r t + 1 , ⋅ ⋅ ⋅ , s n , a n , r n s_t,a_t,r_t,s_{t+1},a_{t+1},r_{t+1},···,s_n,a_n,r_n st,at,rt,st+1,at+1,rt+1,⋅⋅⋅,sn,an,rn

- 计算回报: u t = ∑ i = t n γ i − t ⋅ r i u_t=\sum_{i=t}^n \gamma^{i-t}\cdot r_i ut=∑i=tnγi−t⋅ri

- 使用 u t u_t ut作为 Q π ( s t , a t ) Q_\pi(s_t,a_t) Qπ(st,at)的无偏估计

-

对 V π ( s t ) V_\pi(s_t) Vπ(st)近似,使用神经网络value network v ( s ; w ) v(s;w) v(s;w)近似

三次Approximation后得到的结果为:

∂ V π ( s t ) ∂ θ ≈ g ( a t ) ≈ ∂ ln ( π ( a t ∣ s t ; θ ) ) ∂ θ ⋅ ( u t − v ( s t , w ) ) \frac{\partial V_\pi(s_t)}{\partial\theta}\approx g(a_t) \approx \frac{\partial \ln(\pi(a_t|s_t;\theta))}{\partial\theta}·(u_t-v(s_t,w)) ∂θ∂Vπ(st)≈g(at)≈∂θ∂ln(π(at∣st;θ))⋅(ut−v(st,w))

2.3. Policy and Value Network

2.4. Reinforce with Baseline

-

Updating the policy network

Policy gradient: ∂ V π ( s t ) ∂ θ ≈ ∂ ln ( π ( a t ∣ s t ; θ ) ) ∂ θ ⋅ ( u t − v ( s t , w ) ) \frac{\partial V_\pi(s_t)}{\partial\theta}\approx \frac{\partial \ln(\pi(a_t|s_t;\theta))}{\partial\theta}·(u_t-v(s_t,w)) ∂θ∂Vπ(st)≈∂θ∂ln(π(at∣st;θ))⋅(ut−v(st,w))

Gradient ascent: θ ← θ + β ⋅ ∂ ln ( π ( a t ∣ s t ; θ ) ) ∂ θ ⋅ ( u t − v ( s t , w ) ) \theta\leftarrow\theta+\beta\cdot\frac{\partial \ln(\pi(a_t|s_t;\theta))}{\partial\theta}·(u_t-v(s_t,w)) θ←θ+β⋅∂θ∂ln(π(at∣st;θ))⋅(ut−v(st,w))

令

δ t = v ( s t ; w ) − u t \delta_t=v(s_t;w)-u_t δt=v(st;w)−ut

则Gradient ascent也可表示为:

θ ← θ − β ⋅ ∂ ln ( π ( a t ∣ s t ; θ ) ) ∂ θ ⋅ δ t \theta\leftarrow\theta-\beta\cdot\frac{\partial \ln(\pi(a_t|s_t;\theta))}{\partial\theta}·\delta_t θ←θ−β⋅∂θ∂ln(π(at∣st;θ))⋅δt -

Updating the value network

使 v ( s t ; w ) v(s_t;w) v(st;w)接近 V π ( s t ) = E [ U t ∣ s t ] V_\pi(s_t)=E[U_t|s_t] Vπ(st)=E[Ut∣st],使用观测值 u t u_t ut进行拟合。

- Prediction error: δ t = v ( s t , w ) − u t \delta_t=v(s_t,w)-u_t δt=v(st,w)−ut

- Gradient: ∂ δ t 2 / 2 ∂ w = δ t ⋅ ∂ v ( s t ; w ) ∂ w \frac{\partial\delta_t^2/2}{\partial w}=\delta_t\cdot\frac{\partial v(s_t;w)}{\partial w} ∂w∂δt2/2=δt⋅∂w∂v(st;w)

- Gradient descent: w ← w − α ⋅ δ t ⋅ ∂ v ( s t ; w ) ∂ w w\leftarrow w-\alpha\cdot\delta_t\cdot\frac{\partial v(s_t;w)}{\partial w} w←w−α⋅δt⋅∂w∂v(st;w)

3. Advantage Actor-Critic (A2C)

3.1. Actor and Critic

使用2.中相同结构的神经网络,但是训练方法不同。与之前Actor-Critic不同的是,这里使用状态价值而不是行动价值,状态价值只与当前状态相关,更容易训练。

3.2. Training of A2C

-

Observe a transition ( s t , a t , r t , s t + 1 ) (s_t,a_t,r_t,s_{t+1}) (st,at,rt,st+1)

-

TD target y t = r t + γ ⋅ v ( s t + 1 ; w ) y_t=r_t+\gamma\cdot v(s_{t+1};w) yt=rt+γ⋅v(st+1;w)

-

TD error δ t = v ( s t ; w ) − y t \delta_t=v(s_t;w)-y_t δt=v(st;w)−yt

-

Update the policy network (actor) by:

θ ← θ − β ⋅ δ t ⋅ ∂ ln π ( a t ∣ s t ; θ ) ∂ θ \theta\leftarrow\theta-\beta\cdot\delta_t\cdot\frac{\partial\ln\pi(a_t|s_t;\theta)}{\partial\theta} θ←θ−β⋅δt⋅∂θ∂lnπ(at∣st;θ) -

Update the value network (critic) by:

w ← w − α ⋅ δ t ⋅ ∂ v ( s t ; w ) ∂ w w\leftarrow w-\alpha\cdot\delta_t\cdot\frac{\partial v(s_t;w)}{\partial w} w←w−α⋅δt⋅∂w∂v(st;w)

3.3.算法的数学推导

-

Value functions

在TD算法中已经得到: Q π ( s t , a t ) = E S t + 1 , A t + 1 [ R t + γ ⋅ Q π ( S t + 1 , A t + 1 ) ] Q_\pi(s_t,a_t)=E_{S_{t+1},A_{t+1}}[R_t+\gamma·Q_\pi(S_{t+1},A_{t+1})] Qπ(st,at)=ESt+1,At+1[Rt+γ⋅Qπ(St+1,At+1)]

由于 R t R_t Rt与 S t + 1 S_{t+1} St+1相关而与 A t + 1 A_{t+1} At+1无关, Q π ( S t + 1 , A t + 1 ) Q_\pi(S_{t+1},A_{t+1}) Qπ(St+1,At+1)与两者都有关,于是继续得到:

Q π ( s t , a t ) = E S t + 1 [ R t + γ ⋅ E A t + 1 [ Q π ( S t + 1 , A t + 1 ) ] = E S t + 1 [ R t + γ ⋅ V π ( S t + 1 ) ] \begin{aligned} Q_\pi(s_t,a_t)&=E_{S_{t+1}}[R_t+\gamma·E_{A_{t+1}}[Q_\pi(S_{t+1},A_{t+1})] \\&=E_{S_{t+1}}[R_t+\gamma·V_\pi(S_{t+1})] \end{aligned} Qπ(st,at)=ESt+1[Rt+γ⋅EAt+1[Qπ(St+1,At+1)]=ESt+1[Rt+γ⋅Vπ(St+1)]- Theorem 1: Q π ( s t , a t ) = E S t + 1 [ R t + γ ⋅ V π ( S t + 1 ) ] Q_\pi(s_t,a_t)=E_{S_{t+1}}[R_t+\gamma·V_\pi(S_{t+1})] Qπ(st,at)=ESt+1[Rt+γ⋅Vπ(St+1)]

继续利用价值函数的定义 V π ( s t ) = E A t [ Q π ( s t , A t ) ] V_\pi(s_t)=E_{A_t}[Q_\pi(s_t,A_t)] Vπ(st)=EAt[Qπ(st,At)],得到

V π ( s t ) = E A t [ E S t + 1 [ R t + γ ⋅ V π ( S t + 1 ) ] ] V_\pi(s_t)=E_{A_t}[E_{S_{t+1}}[R_t+\gamma·V_\pi(S_{t+1})]] Vπ(st)=EAt[ESt+1[Rt+γ⋅Vπ(St+1)]]- Theorem 2: V π ( s t ) = E A t , S t + 1 [ R t + γ ⋅ V π ( S t + 1 ) ] V_\pi(s_t)=E_{A_t,S_{t+1}}[R_t+\gamma·V_\pi(S_{t+1})] Vπ(st)=EAt,St+1[Rt+γ⋅Vπ(St+1)]

-

Monte Carlo approximations

观测得到一个transition,对Theorem 1和Theorem 2进行蒙特卡洛近似:

Q π ( s t , a t ) = r t + γ ⋅ V π ( s t + 1 ) V π ( s t ) = r t + γ ⋅ V π ( s t + 1 ) \begin{aligned} Q_\pi(s_t,a_t)&=r_t+\gamma·V_\pi(s_{t+1}) \\V_\pi(s_t)&=r_t+\gamma·V_\pi(s_{t+1}) \end{aligned} Qπ(st,at)Vπ(st)=rt+γ⋅Vπ(st+1)=rt+γ⋅Vπ(st+1) -

Updating policy network

带有Baseline的策略梯度下降: g ( a t ) = ∂ ln ( π ( a t ∣ s t ; θ ) ) ∂ θ ⋅ ( Q π ( s t , a t ) − V π ( s t ) ) g(a_t)=\frac{\partial \ln(\pi(a_t|s_t;\theta))}{\partial\theta}·(Q_\pi(s_t,a_t)-V_\pi(s_t)) g(at)=∂θ∂ln(π(at∣st;θ))⋅(Qπ(st,at)−Vπ(st))

对 Q π ( s t , a t ) Q_\pi(s_t,a_t) Qπ(st,at)进行蒙特卡洛近似: g ( a t ) = ∂ ln ( π ( a t ∣ s t ; θ ) ) ∂ θ ⋅ r t + γ ⋅ V π ( s t + 1 ) − V π ( s t ) ) g(a_t)=\frac{\partial \ln(\pi(a_t|s_t;\theta))}{\partial\theta}·r_t+\gamma·V_\pi(s_{t+1})-V_\pi(s_t)) g(at)=∂θ∂ln(π(at∣st;θ))⋅rt+γ⋅Vπ(st+1)−Vπ(st))

使用value network v ( s ; w ) v(s;w) v(s;w)对 V π ( s t ) V_\pi(s_t) Vπ(st)进行拟合:

g ( a t ) = ∂ ln ( π ( a t ∣ s t ; θ ) ) ∂ θ ⋅ ( r t + γ ⋅ v ( s t + 1 ; w ) − v ( s t ; w ) ) g(a_t)=\frac{\partial \ln(\pi(a_t|s_t;\theta))}{\partial\theta}·(r_t+\gamma·v(s_{t+1};w)-v(s_t;w)) g(at)=∂θ∂ln(π(at∣st;θ))⋅(rt+γ⋅v(st+1;w)−v(st;w))

TD target: y t = r t + γ ⋅ v ( s t + 1 ; w ) y_t=r_t+\gamma\cdot v(s_{t+1};w) yt=rt+γ⋅v(st+1;w)

g ( a t ) = ∂ ln ( π ( a t ∣ s t ; θ ) ) ∂ θ ⋅ ( y t − v ( s t ; w ) ) g(a_t)=\frac{\partial \ln(\pi(a_t|s_t;\theta))}{\partial\theta}·(y_t-v(s_t;w)) g(at)=∂θ∂ln(π(at∣st;θ))⋅(yt−v(st;w))

梯度上升:

θ ← θ − β ⋅ δ t ⋅ ∂ ln π ( a t ∣ s t ; θ ) ∂ θ \theta\leftarrow\theta-\beta\cdot\delta_t\cdot\frac{\partial\ln\pi(a_t|s_t;\theta)}{\partial\theta} θ←θ−β⋅δt⋅∂θ∂lnπ(at∣st;θ) -

Updating value network

使用value network v ( s ; w ) v(s;w) v(s;w)对 V π ( s t ) V_\pi(s_t) Vπ(st)进行拟合:

V ( s t ; w ) ≈ r t + γ ⋅ V ( s t + 1 ; w ) = y t V(s_t;w)\approx r_t+\gamma·V(s_{t+1};w)=y_t V(st;w)≈rt+γ⋅V(st+1;w)=yt

TD error: δ t = v ( s t ; w ) − y t \delta_t=v(s_t;w)-y_t δt=v(st;w)−ytGradient: ∂ δ t 2 / 2 ∂ w = δ t ∂ v ( s t ; w ) ∂ w \frac{\partial \delta_t^2/2}{\partial w}=\delta_t\frac{\partial v(s_t;w)}{\partial w} ∂w∂δt2/2=δt∂w∂v(st;w)

Gradient descent: w ← w − α ⋅ δ t ⋅ ∂ v ( s t ; w ) ∂ w w\leftarrow w-\alpha\cdot\delta_t\cdot\frac{\partial v(s_t;w)}{\partial w} w←w−α⋅δt⋅∂w∂v(st;w)

4. ReinForce versus A2C

4.1 Policy and Value Networks

两种算法的网络结构相同,都包括价值网络和策略网络。Reinforce with Baseline中,价值网络仅作为Baseline以降低随机梯度造成的方差;A2C中的价值网络用于对actor进行评价(critic)。

从算法流程上看,两种算法仅在TD target和error的部分有差别。Reinforce使用了真实的观测值Return,而A2C使用了TD target,部分基于观测值,部分基于预测值。

对于Multi-Step TD target,在只计算一步的情况下,为one-step TD target;在计算所有步的情况下,则变为 u t = ∑ i = t n γ i − t ⋅ r i u_t=\sum_{i=t}^n\gamma^{i-t}\cdot r_i ut=∑i=tnγi−t⋅ri,A2C算法与Reinforce相同。Reinforce是A2C算法的一个特例。

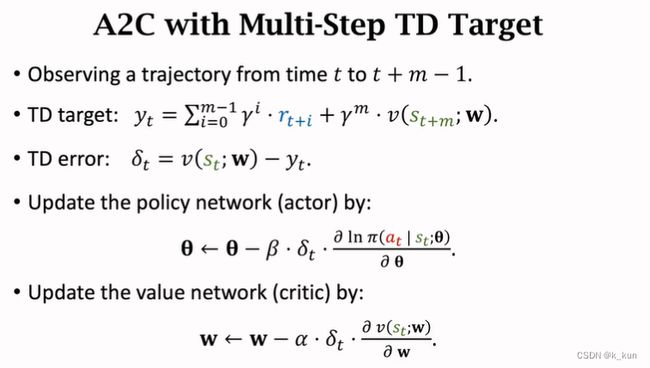

4.2 A2C with Multi-Step TD Target

one-Step TD Target:

y t = r t + γ ⋅ v ( s t + 1 ; w ) y_t=r_t+\gamma\cdot v(s_{t+1};w) yt=rt+γ⋅v(st+1;w)

m-Step TD Target:

y t = ∑ i = 0 m − 1 γ i ⋅ r t + i + γ m ⋅ v ( s t + m ; w ) y_t=\sum_{i=0}^{m-1}\gamma^i\cdot r_{t+i}+\gamma^m\cdot v(s_{t+m};w) yt=i=0∑m−1γi⋅rt+i+γm⋅v(st+m;w)