Summary of problems when generating HFiles with MapReduce and loading them into HBase

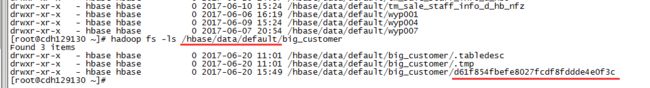

How HFiles are stored underneath an HBase table: by default they sit under the default namespace.

1、Can't get master address from ZooKeeper; znode data == null

hbase(main):001:0> list

TABLE

ERROR: Can't get master address from ZooKeeper; znode data == null

Here is some help for this command:

List all tables in hbase. Optional regular expression parameter could

be used to filter the output. Examples:

hbase> list

hbase> list 'abc.*'

hbase> list 'ns:abc.*'

hbase> list 'ns:.*'

2、LoadIncrementalHFiles:623 - Trying to load hfile=hdfs://

RpcRetryingCaller:132 - Call exception, tries=10, retries=20, started=696043

Caused by: org.apache.hadoop.security.AccessControlException: Permission denied: user=hbase, access=WRITE,

inode="/dop/persist/output/1_20170513232854/f1":hdfs:supergroup:drwxr-xr-x

Solution:

http://blog.csdn.net/opensure/article/details/51581460

In the code, explicitly set the permissions on the HFile output directory to 777.
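The denial can be read straight off the inode string in the log: the directory belongs to hdfs:supergroup with mode drwxr-xr-x, so a user who is neither the owner nor in the group falls under the "other" bits (r-x) and may not write. Below is a minimal plain-Java sketch of that rule. It only mimics the POSIX-style check, ignores the HDFS superuser, and assumes that hbase is not a member of supergroup; the class and method names are made up for illustration.

```java
import java.util.Collections;
import java.util.Set;

class HdfsModeCheck {
    /**
     * Returns true if the user may write to an inode with the given owner, group
     * and 10-character mode string (for example "drwxr-xr-x").
     */
    static boolean canWrite(String user, Set<String> userGroups,
                            String owner, String group, String mode) {
        // Index 0 is the file type; the write bits sit at index 2 (owner), 5 (group), 8 (other).
        int writeIndex;
        if (user.equals(owner)) {
            writeIndex = 2;
        } else if (userGroups.contains(group)) {
            writeIndex = 5;
        } else {
            writeIndex = 8;
        }
        return mode.charAt(writeIndex) == 'w';
    }

    public static void main(String[] args) {
        // Assumption: the hbase user only belongs to its own group.
        Set<String> hbaseGroups = Collections.singleton("hbase");
        // Mode from the log: write is denied.
        System.out.println(canWrite("hbase", hbaseGroups, "hdfs", "supergroup", "drwxr-xr-x"));
        // Mode 777: write is allowed.
        System.out.println(canWrite("hbase", hbaseGroups, "hdfs", "supergroup", "drwxrwxrwx"));
    }
}
```

With mode 777 every class of user has the write bit, which is why setting it on the output directory lets the hbase user move the HFiles.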

3、zookeeper.ZooKeeper: Client environment:java.class.path

4、Container id: container_1494491520677_0015_01_000006

Exit code: 1

Stack trace: ExitCodeException exitCode=1:

at org.apache.hadoop.util.Shell.runCommand(Shell.java:601)

at org.apache.hadoop.util.Shell.run(Shell.java:504)

at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:786)

at org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor.launchContainer(DefaultContainerExecutor.java:213)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:302)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:82)

at java.util.concurrent.FutureTask.run(FutureTask.java:262)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)

at java.lang.Thread.run(Thread.java:745)

Container exited with a non-zero exit code 1

http://blog.csdn.net/wjcquking/article/details/41242625

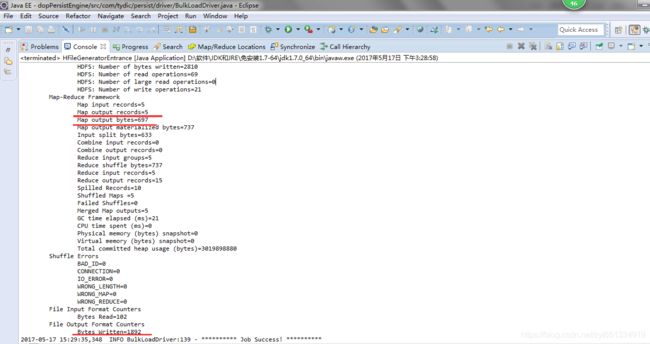

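5、The same program produces different results locally and on the Hadoop cluster

In Eclipse:

On Linux the values differ from those in Eclipse!

Solution: variables set in the main program (the driver) are not carried into the MapReduce tasks; values can only be passed to them through the job configuration (conf).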
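The reason is that map and reduce tasks run in other JVMs and only receive the serialized job configuration (job.xml), not the driver's fields. In a real job the hand-off is Configuration.set(key, value) in the driver and context.getConfiguration().get(key) in the task's setup(). The sketch below is a plain-Java stand-in for that mechanism, using java.util.Properties in place of the Hadoop Configuration; the key demo.delimiter and all class and method names are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.Properties;

class ConfHandoffSketch {
    // Set in the driver's main(); a task in another JVM never sees this assignment.
    static String delimiter = null;

    /** Driver side: serialize the settings, much like the job.xml shipped to each task. */
    static byte[] shipConf(Properties conf) {
        try {
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            conf.storeToXML(out, "job settings");
            return out.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Task side: rebuild the settings from the shipped bytes only. */
    static Properties loadConf(byte[] shipped) {
        try {
            Properties conf = new Properties();
            conf.loadFromXML(new ByteArrayInputStream(shipped));
            return conf;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        delimiter = ",";                          // stays behind in the driver JVM
        Properties conf = new Properties();
        conf.setProperty("demo.delimiter", ",");  // travels with the serialized conf
        Properties onTask = loadConf(shipConf(conf));
        System.out.println(onTask.getProperty("demo.delimiter"));
    }
}
```

Running inside Eclipse hides the problem because the local runner executes the tasks in the driver's own JVM, where the static field is still set.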

6、

17/05/19 10:40:25 INFO mapreduce.HFileOutputFormat2: Looking up current regions for table tel_rec5

org.apache.hadoop.hbase.client.RetriesExhaustedException: Failed after attempts=21, exceptions:

Fri May 19 10:41:13 CST 2017, null, java.net.SocketTimeoutException: callTimeout=60000, callDuration=68513: row 'tel_rec5,,00000000000000' on table 'hbase:meta' at region=hbase:meta,,1.1588230740, hostname=cdh129136,60020,1494926555927, seqNum=0

at org.apache.hadoop.hbase.client.RpcRetryingCallerWithReadReplicas.throwEnrichedException(RpcRetryingCallerWithReadReplicas.java:270)

at org.apache.hadoop.hbase.client.ScannerCallableWithReplicas.call(ScannerCallableWithReplicas.java:225)

at org.apache.hadoop.hbase.client.ScannerCallableWithReplicas.call(ScannerCallableWithReplicas.java:63)

at org.apache.hadoop.hbase.client.RpcRetryingCaller.callWithoutRetries(RpcRetryingCaller.java:200)

at org.apache.hadoop.hbase.client.ClientScanner.call(ClientScanner.java:314)

at org.apache.hadoop.hbase.client.ClientScanner.nextScanner(ClientScanner.java:289)

at org.apache.hadoop.hbase.client.ClientScanner.initializeScannerInConstruction(ClientScanner.java:161)

at org.apache.hadoop.hbase.client.ClientScanner.

at org.apache.hadoop.hbase.client.HTable.getScanner(HTable.java:888)

at org.apache.hadoop.hbase.client.MetaScanner.metaScan(MetaScanner.java:187)

at org.apache.hadoop.hbase.client.MetaScanner.metaScan(MetaScanner.java:89)

at org.apache.hadoop.hbase.client.MetaScanner.listTableRegionLocations(MetaScanner.java:334)

at org.apache.hadoop.hbase.client.HTable.listRegionLocations(HTable.java:702)

at org.apache.hadoop.hbase.client.HTable.getStartEndKeys(HTable.java:685)

at org.apache.hadoop.hbase.client.HTable.getStartKeys(HTable.java:668)

at org.apache.hadoop.hbase.mapreduce.HFileOutputFormat2.getRegionStartKeys(HFileOutputFormat2.java:297)

at org.apache.hadoop.hbase.mapreduce.HFileOutputFormat2.configureIncrementalLoad(HFileOutputFormat2.java:416)

at org.apache.hadoop.hbase.mapreduce.HFileOutputFormat2.configureIncrementalLoad(HFileOutputFormat2.java:386)

at com.tydic.persist.driver.BulkLoadDriver.run(Unknown Source)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at com.tydic.persist.driver.BulkLoadDriver.bulkLoadRun(Unknown Source)

at com.tydic.entrance.GetRuleConf.execute(Unknown Source)

at com.tydic.entrance.HFileGeneratorEntrance.main(Unknown Source)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

Caused by: java.net.SocketTimeoutException: callTimeout=60000, callDuration=68513: row 'tel_rec5,,00000000000000' on table 'hbase:meta' at region=hbase:meta,,1.1588230740, hostname=cdh129136,60020,1494926555927, seqNum=0

at org.apache.hadoop.hbase.client.RpcRetryingCaller.callWithRetries(RpcRetryingCaller.java:159)

at org.apache.hadoop.hbase.client.ResultBoundedCompletionService$QueueingFuture.run(ResultBoundedCompletionService.java:64)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)

at java.lang.Thread.run(Thread.java:745)

Caused by: org.apache.hadoop.hbase.exceptions.ConnectionClosingException: Call to cdh129136/192.168.129.136:60020 failed on local exception: org.apache.hadoop.hbase.exceptions.ConnectionClosingException: Connection to cdh129136/192.168.129.136:60020 is closing. Call id=9, waitTime=3

at org.apache.hadoop.hbase.ipc.RpcClientImpl.wrapException(RpcClientImpl.java:1252)

at org.apache.hadoop.hbase.ipc.RpcClientImpl.call(RpcClientImpl.java:1223)

at org.apache.hadoop.hbase.ipc.AbstractRpcClient.callBlockingMethod(AbstractRpcClient.java:216)

at org.apache.hadoop.hbase.ipc.AbstractRpcClient$BlockingRpcChannelImplementation.callBlockingMethod(AbstractRpcClient.java:300)

at org.apache.hadoop.hbase.protobuf.generated.ClientProtos$ClientService$BlockingStub.scan(ClientProtos.java:32651)

at org.apache.hadoop.hbase.client.ScannerCallable.openScanner(ScannerCallable.java:372)

at org.apache.hadoop.hbase.client.ScannerCallable.call(ScannerCallable.java:199)

at org.apache.hadoop.hbase.client.ScannerCallable.call(ScannerCallable.java:62)

at org.apache.hadoop.hbase.client.RpcRetryingCaller.callWithoutRetries(RpcRetryingCaller.java:200)

at org.apache.hadoop.hbase.client.ScannerCallableWithReplicas$RetryingRPC.call(ScannerCallableWithReplicas.java:371)

at org.apache.hadoop.hbase.client.ScannerCallableWithReplicas$RetryingRPC.call(ScannerCallableWithReplicas.java:345)

at org.apache.hadoop.hbase.client.RpcRetryingCaller.callWithRetries(RpcRetryingCaller.java:126)

... 4 more

Caused by: org.apache.hadoop.hbase.exceptions.ConnectionClosingException: Connection to cdh129136/192.168.129.136:60020 is closing. Call id=9, waitTime=3

at org.apache.hadoop.hbase.ipc.RpcClientImpl$Connection.cleanupCalls(RpcClientImpl.java:1042)

at org.apache.hadoop.hbase.ipc.RpcClientImpl$Connection.close(RpcClientImpl.java:849)

at org.apache.hadoop.hbase.ipc.RpcClientImpl$Connection.run(RpcClientImpl.java:568)

7、

[19 20:40:03,040 INFO ] org.apache.hadoop.hbase.mapreduce.HFileOutputFormat2 - Looking up current regions for table tel_rec5

[19 20:40:03,280 WARN ] org.apache.hadoop.security.UserGroupInformation - PriviledgedActionException as:hbase/cdh129130@MYCDH (auth:KERBEROS) cause:java.io.IOException: Failed to specify server's Kerberos principal name

[19 20:40:07,891 WARN ] org.apache.hadoop.security.UserGroupInformation - PriviledgedActionException as:hbase/cdh129130@MYCDH (auth:KERBEROS) cause:java.io.IOException: Failed to specify server's Kerberos principal name

[19 20:40:08,046 WARN ] org.apache.hadoop.security.UserGroupInformation - PriviledgedActionException as:hbase/cdh129130@MYCDH (auth:KERBEROS) cause:java.io.IOException: Failed to specify server's Kerberos principal name

[19 20:40:09,599 WARN ] org.apache.hadoop.security.UserGroupInformation - PriviledgedActionException as:hbase/cdh129130@MYCDH (auth:KERBEROS) cause:java.io.IOException: Failed to specify server's Kerberos principal name

[19 20:40:11,093 WARN ] org.apache.hadoop.security.UserGroupInformation - PriviledgedActionException as:hbase/cdh129130@MYCDH (auth:KERBEROS) cause:java.io.IOException: Failed to specify server's Kerberos principal name

[19 20:40:12,399 WARN ] org.apache.hadoop.security.UserGroupInformation - PriviledgedActionException as:hbase/cdh129130@MYCDH (auth:KERBEROS) cause:java.io.IOException: Failed to specify server's Kerberos principal name

[19 20:40:12,400 WARN ] org.apache.hadoop.hbase.ipc.AbstractRpcClient - Couldn't setup connection for hbase/cdh129130@MYCDH to null

[19 20:40:12,400 WARN ] org.apache.hadoop.security.UserGroupInformation - PriviledgedActionException as:hbase/cdh129130@MYCDH (auth:KERBEROS) cause:java.io.IOException: Couldn't setup connection for hbase/cdh129130@MYCDH to null

[19 20:40:14,467 WARN ] o

Solution:

configuration.set("hbase.regionserver.kerberos.principal", dynamicPrincipal);

configuration.set("hbase.master.kerberos.principal", dynamicPrincipal);

8、

[19 22:36:56,639 WARN ] org.apache.hadoop.security.UserGroupInformation - PriviledgedActionException as:hbase/cdh129130@MYCDH (auth:KERBEROS) cause:java.io.IOException: Can't get Master Kerberos principal for use as renewer

java.io.IOException: Can't get Master Kerberos principal for use as renewer

at org.apache.hadoop.mapreduce.security.TokenCache.obtainTokensForNamenodesInternal(TokenCache.java:133)

at org.apache.hadoop.mapreduce.security.TokenCache.obtainTokensForNamenodesInternal(TokenCache.java:100)

at org.apache.hadoop.mapreduce.security.TokenCache.obtainTokensForNamenodes(TokenCache.java:80)

at org.apache.hadoop.mapreduce.lib.output.FileOutputFormat.checkOutputSpecs(FileOutputFormat.java:142)

at org.apache.hadoop.mapreduce.JobSubmitter.checkSpecs(JobSubmitter.java:270)

at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:143)

at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1307)

at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1304)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1707)

at org.apache.hadoop.mapreduce.Job.submit(Job.java:1304)

at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:1325)

at com.tydic.persist.driver.BulkLoadDriver.run(BulkLoadDriver.java:204)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at com.tydic.persist.driver.BulkLoadDriver.bulkLoadRun(BulkLoadDriver.java:76)

at com.tydic.entrance.GetRuleConf.execute(GetRuleConf.java:99)

at com.tydic.entrance.HFileGeneratorEntrance.main(HFileGeneratorEntrance.java:31)

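Solution:

configuration.set("yarn.resourcemanager.principal", "yarn/_HOST@MYCDH");

Alternatively, add the YARN configuration file (presumably yarn-site.xml) so that the client reads the principal from it.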
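The _HOST part of the principal is a placeholder that Hadoop replaces with the server's host name when the principal is resolved. The following plain-Java sketch mimics that substitution so the resulting name is easier to picture. It is not Hadoop's own code; the class and method names are made up, and the host name used in main is taken from the tracking URL in item 9 purely as an example.

```java
import java.util.Locale;

class HostPrincipalSketch {
    /**
     * Replaces the _HOST placeholder in a "service/_HOST@REALM" pattern with the
     * lower-cased host name. Any other shape is returned unchanged.
     */
    static String resolve(String principalPattern, String hostname) {
        String[] parts = principalPattern.split("[/@]");
        if (parts.length != 3 || !parts[1].equals("_HOST")) {
            return principalPattern;
        }
        return parts[0] + "/" + hostname.toLowerCase(Locale.ROOT) + "@" + parts[2];
    }

    public static void main(String[] args) {
        // Example host only; the real ResourceManager host depends on the cluster.
        System.out.println(resolve("yarn/_HOST@MYCDH", "cdh129130"));
    }
}
```

Using the placeholder keeps one configuration value valid on every node instead of hard-coding a host per machine.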

9、

For more detailed output, check application tracking page:http://cdh129130:8088/proxy/application_1495209831244_0002/Then, click on links to logs of each attempt.

Diagnostics: Exception from container-launch.

Container id: container_1495209831244_0002_02_000001

Exit code: 1

Stack trace: ExitCodeException exitCode=1:

at org.apache.hadoop.util.Shell.runCommand(Shell.java:543)

at org.apache.hadoop.util.Shell.run(Shell.java:460)

at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:720)

at org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor.launchContainer(LinuxContainerExecutor.java:293)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:302)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:82)

at java.util.concurrent.FutureTask.run(FutureTask.java:262)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)

at java.lang.Thread.run(Thread.java:745)

Shell output: main : command provided 1

main : user is hbase

main : requested yarn user is hbase

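Solution: open the YARN web UI and check the execution log of the specific job; it turned out to be a job.xml parsing problem.

The HBase connection may also be at fault!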

10、

2017-05-20 11:38:48,560 FATAL [main] org.apache.hadoop.conf.Configuration: error parsing conf job.xml

org.xml.sax.SAXParseException; systemId: file:///data/yarn/nm/usercache/hbase/appcache/application_1495209831244_0016/container_1495209831244_0016_01_000001/job.xml; lineNumber: 71; columnNumber: 50; 字符引用 "&#

at com.sun.org.apache.xerces.internal.parsers.DOMParser.parse(DOMParser.java:257)

at com.sun.org.apache.xerces.internal.jaxp.DocumentBuilderImpl.parse(DocumentBuilderImpl.java:347)

at javax.xml.parsers.DocumentBuilder.parse(DocumentBuilder.java:150)

at org.apache.hadoop.conf.Configuration.parse(Configuration.java:2390)

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2459)

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2412)

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2319)

at org.apache.hadoop.conf.Configuration.get(Configuration.java:1146)

at org.apache.hadoop.mapreduce.v2.util.MRWebAppUtil.initialize(MRWebAppUtil.java:51)

at org.apache.hadoop.mapreduce.v2.app.MRAppMaster.main(MRAppMaster.java:1421)

2017-05-20 11:38:48,562 FATAL [main] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: Error starting MRAppMaster

java.lang.RuntimeException: org.xml.sax.SAXParseException; systemId: file:///data/yarn/nm/usercache/hbase/appcache/application_1495209831244_0016/container_1495209831244_0016_01_000001/job.xml; lineNumber: 71; columnNumber: 50; 字符引用 "&#

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2555)

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2412)

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2319)

at org.apache.hadoop.conf.Configuration.get(Configuration.java:1146)

at org.apache.hadoop.mapreduce.v2.util.MRWebAppUtil.initialize(MRWebAppUtil.java:51)

at org.apache.hadoop.mapreduce.v2.app.MRAppMaster.main(MRAppMaster.java:1421)

Caused by: org.xml.sax.SAXParseException; systemId: file:///data/yarn/nm/usercache/hbase/appcache/application_1495209831244_0016/container_1495209831244_0016_01_000001/job.xml; lineNumber: 71; columnNumber: 50; 字符引用 "&#

at com.sun.org.apache.xerces.internal.parsers.DOMParser.parse(DOMParser.java:257)

at com.sun.org.apache.xerces.internal.jaxp.DocumentBuilderImpl.parse(DocumentBuilderImpl.java:347)

at javax.xml.parsers.DocumentBuilder.parse(DocumentBuilder.java:150)

at org.apache.hadoop.conf.Configuration.parse(Configuration.java:2390)

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2459)

... 5 more

2017-05-20 11:38:48,563 INFO [main] org.apache.hadoop.util.ExitUtil: Exiting with status 1

Similar issue: https://github.com/elastic/elasticsearch-hadoop/issues/417

Solution: this is a job.xml format problem. The parameter was passed incorrectly as \001; it should be written as \\001.
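The parser error can be reproduced outside Hadoop. The truncated message in the log appears to be the localized form of the "character reference is an invalid XML character" error, which is what the JDK's XML parser raises when a value contains the control character U+0001 written as a character reference. The sketch below feeds the JDK parser two tiny documents; the property layout is only an imitation of job.xml, and the class name is made up.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.xml.sax.SAXException;

class JobXmlCharRefCheck {
    /** Returns true if the XML text parses, false if the parser rejects it. */
    static boolean parses(String xml) {
        try {
            DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            return true;
        } catch (SAXException e) {
            return false; // the parser rejected the document
        } catch (ParserConfigurationException | IOException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        // A value holding the raw control character, serialized as a character reference.
        System.out.println(parses("<property><value>&#1;</value></property>"));
        // The same delimiter passed as plain text: backslash, 0, 0, 1.
        System.out.println(parses("<property><value>\\001</value></property>"));
    }
}
```

The first document is rejected and the second one parses, which matches the advice to pass the delimiter as the text \\001 rather than as the character itself.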

11、

[20 15:59:13,273 WARN ] org.apache.hadoop.mapred.LocalJobRunner - job_local199199413_0001

java.lang.Exception: org.apache.hadoop.mapreduce.task.reduce.Shuffle$ShuffleError: error in shuffle in localfetcher#1

at org.apache.hadoop.mapred.LocalJobRunner$Job.runTasks(LocalJobRunner.java:462)

at org.apache.hadoop.mapred.LocalJobRunner$Job.run(LocalJobRunner.java:529)

Caused by: org.apache.hadoop.mapreduce.task.reduce.Shuffle$ShuffleError: error in shuffle in localfetcher#1

at org.apache.hadoop.mapreduce.task.reduce.Shuffle.run(Shuffle.java:134)

at org.apache.hadoop.mapred.ReduceTask.run(ReduceTask.java:376)

at org.apache.hadoop.mapred.LocalJobRunner$Job$ReduceTaskRunnable.run(LocalJobRunner.java:319)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:471)

at java.util.concurrent.FutureTask.run(FutureTask.java:262)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)

at java.lang.Thread.run(Thread.java:744)

Caused by: java.lang.ExceptionInInitializerError

at org.apache.hadoop.mapred.SpillRecord.

at org.apache.hadoop.mapred.SpillRecord.

at org.apache.hadoop.mapred.SpillRecord.

at org.apache.hadoop.mapreduce.task.reduce.LocalFetcher.copyMapOutput(LocalFetcher.java:124)

at org.apache.hadoop.mapreduce.task.reduce.LocalFetcher.doCopy(LocalFetcher.java:102)

at org.apache.hadoop.mapreduce.task.reduce.LocalFetcher.run(LocalFetcher.java:85)

Caused by: java.lang.RuntimeException: Secure IO is not possible without native code extensions.

at org.apache.hadoop.io.SecureIOUtils.

... 6 more

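Solution: the error is reported only when the job runs locally in Eclipse under LocalJobRunner; packaged as a jar and run on the Hadoop cluster, it works normally.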

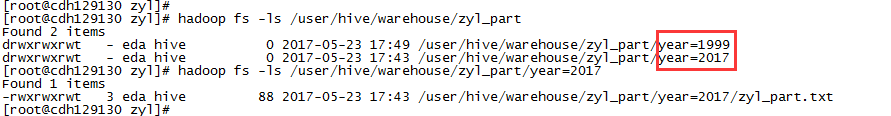

12、How to handle Hive partitioned tables in MapReduce

13、Wrong FS:

java.lang.IllegalArgumentException: Wrong FS: hdfs://cdh129130:8020/tmp/dop/persist/output/tel_rec_part_4/20170525091224, expected: file:///

at org.apache.hadoop.fs.FileSystem.checkPath(FileSystem.java:654)

at org.apache.hadoop.fs.RawLocalFileSystem.pathToFile(RawLocalFileSystem.java:80)

at org.apache.hadoop.fs.RawLocalFileSystem.deprecatedGetFileStatus(RawLocalFileSystem.java:529)

at org.apache.hadoop.fs.RawLocalFileSystem.getFileLinkStatusInternal(RawLocalFileSystem.java:747)

at org.apache.hadoop.fs.RawLocalFileSystem.getFileStatus(RawLocalFileSystem.java:524)

at org.apache.hadoop.fs.ChecksumFileSystem.delete(ChecksumFileSystem.java:534)

at com.tydic.utils.HdfsUtil.delete(HdfsUtil.java:452)

at com.tydic.persist.driver.BulkLoadDriver.deleteHdfsPath(BulkLoadDriver.java:210)

at com.tydic.persist.driver.BulkLoadDriver.run(BulkLoadDriver.java:147)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at com.tydic.persist.driver.BulkLoadDriver.bulkLoadRun(BulkLoadDriver.java:73)

at com.tydic.entrance.GetRuleConf.execute(GetRuleConf.java:86)

at com.tydic.entrance.HFileGeneratorEntrance.main(HFileGeneratorEntrance.java:29)

Solution: download the cluster's core configuration files, hdfs-site.xml and core-site.xml,

or specify the file system explicitly in the code:

Configuration config = new Configuration();

if(!"".equals(PropertiesUtil.getValue("fs.defaultFS"))){

config.set("fs.defaultFS", PropertiesUtil.getValue("fs.defaultFS"));

}
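The exception arises because the path carries the hdfs scheme while the client, lacking fs.defaultFS, falls back to the local file system (file:///). A simplified plain-Java sketch of that comparison is shown below. The real check in Hadoop also compares the authority (host and port); the class and method names here are made up for illustration.

```java
import java.net.URI;

class WrongFsCheck {
    /** Returns true if the path has no scheme or the same scheme as the default file system. */
    static boolean sameScheme(String path, String defaultFs) {
        String pathScheme = URI.create(path).getScheme();
        String fsScheme = URI.create(defaultFs).getScheme();
        return pathScheme == null || pathScheme.equalsIgnoreCase(fsScheme);
    }

    public static void main(String[] args) {
        String path = "hdfs://cdh129130:8020/tmp/dop/persist/output/tel_rec_part_4/20170525091224";
        // Without fs.defaultFS the client uses file:///, so the schemes differ.
        System.out.println(sameScheme(path, "file:///"));
        // With fs.defaultFS pointing at the NameNode the schemes agree.
        System.out.println(sameScheme(path, "hdfs://cdh129130:8020"));
    }
}
```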

14、

[03 19:46:29,270 INFO ] org.apache.hadoop.mapreduce.JobSubmitter - Kind: HBASE_AUTH_TOKEN, Service: 55f3581e-b84a-49b9-84b3-8998de8db847, Ident: (org.apache.hadoop.hbase.security.token.AuthenticationTokenIdentifier@29)

[Fatal Error] job.xml:65:50: 字符引用 "&#

[03 19:46:29,354 INFO ] org.apache.hadoop.mapreduce.JobSubmitter - Cleaning up the staging area file:/tmp/hadoop-hdfs/mapred/staging/eda1250935355/.staging/job_local1250935355_0001

java.lang.RuntimeException: org.xml.sax.SAXParseException; systemId: file:/tmp/hadoop-hdfs/mapred/staging/eda1250935355/.staging/job_local1250935355_0001/job.xml; lineNumber: 65; columnNumber: 50; 字符引用 "&#

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2656)

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2513)

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2409)

at org.apache.hadoop.conf.Configuration.get(Configuration.java:982)

at org.apache.hadoop.mapred.JobConf.checkAndWarnDeprecation(JobConf.java:2032)

at org.apache.hadoop.mapred.JobConf.

at org.apache.hadoop.mapred.LocalJobRunner$Job.

at org.apache.hadoop.mapred.LocalJobRunner.submitJob(LocalJobRunner.java:731)

at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:244)

at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1307)

at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1304)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1707)

at org.apache.hadoop.mapreduce.Job.submit(Job.java:1304)

at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:1325)

at com.tydic.persist.driver.BulkLoadDriver.run(BulkLoadDriver.java:172)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at com.tydic.persist.driver.BulkLoadDriver.bulkLoadRun(BulkLoadDriver.java:74)

at com.tydic.entrance.GetRuleConf.execute(GetRuleConf.java:104)

at com.tydic.entrance.HFileGeneratorEntrance.main(HFileGeneratorEntrance.java:34)

Caused by: org.xml.sax.SAXParseException; systemId: file:/tmp/hadoop-hdfs/mapred/staging/eda1250935355/.staging/job_local1250935355_0001/job.xml; lineNumber: 65; columnNumber: 50; 字符引用 "&#

at com.sun.org.apache.xerces.internal.parsers.DOMParser.parse(DOMParser.java:257)

at com.sun.org.apache.xerces.internal.jaxp.DocumentBuilderImpl.parse(DocumentBuilderImpl.java:347)

at javax.xml.parsers.DocumentBuilder.parse(DocumentBuilder.java:150)

at org.apache.hadoop.conf.Configuration.parse(Configuration.java:2491)

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2560)

... 20 more

Solution: this is a job.xml format problem. The parameter was passed incorrectly as \001; it should be written as \\001.
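In Java source, "\001" is a single control character (U+0001), while "\\001" is the four printable characters backslash, 0, 0, 1, which are safe inside an XML file. The escaped text still works as a delimiter if the task hands it to String.split, because the regular-expression engine reads \001 as an octal escape for U+0001. The sketch below shows both points; that the tasks use split is an assumption, and the class and method names are hypothetical.

```java
import java.util.Arrays;

class DelimiterAsTextSketch {
    /** Splits a record with a delimiter given as a regular expression, e.g. "\\001". */
    static String[] splitFields(String line, String delimiterRegex) {
        return line.split(delimiterRegex);
    }

    public static void main(String[] args) {
        String raw = "\001";      // one control character, U+0001
        String escaped = "\\001"; // four printable characters
        System.out.println(raw.length() + " " + escaped.length());

        // A record whose fields are separated by the U+0001 control character.
        String line = "a\u0001b\u0001c";
        System.out.println(Arrays.toString(splitFields(line, escaped)));
    }
}
```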

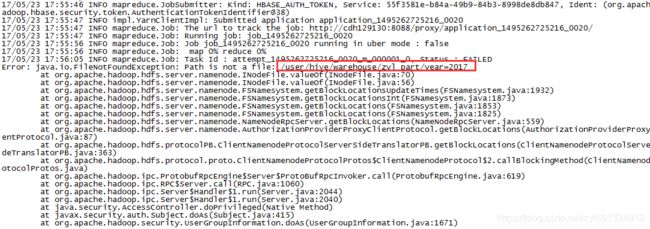

15、

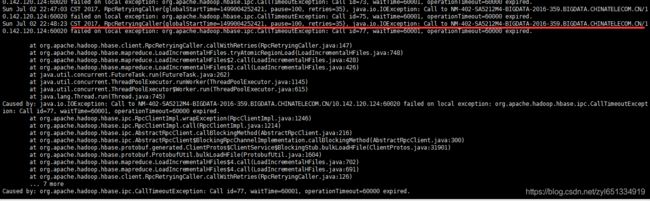

17/06/22 14:26:03 INFO client.RpcRetryingCaller: Call exception, tries=10, retries=20, started=68231 ms ago, cancelled=false, msg=row '' on table 'test' at region=test,,1498042202011.6ab53ace6e83876695e0458191c2d5e7., hostname=nm-304-sa5212m4-bigdata-219,60020,1495677223107, seqNum=2

17/06/22 14:26:23 INFO client.RpcRetryingCaller: Call exception, tries=11, retries=20, started=88394 ms ago, cancelled=false, msg=row '' on table 'test' at region=test,,1498042202011.6ab53ace6e83876695e0458191c2d5e7., hostname=nm-304-sa5212m4-bigdata-219,60020,1495677223107, seqNum=2

17/06/22 14:26:43 INFO client.RpcRetryingCaller: Call exception, tries=12, retries=20, started=108503 ms ago, cancelled=false, msg=row '' on table 'test' at region=test,,1498042202011.6ab53ace6e83876695e0458191c2d5e7., hostname=nm-304-sa5212m4-bigdata-219,60020,1495677223107, seqNum=2

17/06/22 14:27:03 INFO client.RpcRetryingCaller: Call exception, tries=13, retries=20, started=128617 ms ago, cancelled=false, msg=row '' on table 'test' at region=test,,1498042202011.6ab53ace6e83876695e0458191c2d5e7., hostname=nm-304-sa5212m4-bigdata-219,60020,1495677223107, seqNum=2

17/06/22 14:27:23 INFO client.RpcRetryingCaller: Call exception, tries=14, retries=20, started=148763 ms ago, cancelled=false, msg=row '' on table 'test' at region=test,,1498042202011.6ab53ace6e83876695e0458191c2d5e7., hostname=nm-304-sa5212m4-bigdata-219,60020,1495677223107, seqNum=2

17/06/22 14:27:44 INFO client.RpcRetryingCaller: Call exception, tries=15, retries=20, started=168968 ms ago, cancelled=false, msg=row '' on table 'test' at region=test,,1498042202011.6ab53ace6e83876695e0458191c2d5e7., hostname=nm-304-sa5212m4-bigdata-219,60020,1495677223107, seqNum=2

Caused by: org.apache.hadoop.ipc.RemoteException(java.io.FileNotFoundException): File does not exist: /user/tydic/persist/output/test/20170622103558/F1/78a234dbc4ce42d9a4120682c465a6f4

at org.apache.hadoop.hdfs.server.namenode.INodeFile.valueOf(INodeFile.java:66)

at org.apache.hadoop.hdfs.server.namenode.INodeFile.valueOf(INodeFile.java:56)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getBlockLocationsUpdateTimes(FSNamesystem.java:1932)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getBlockLocationsInt(FSNamesystem.java:1873)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getBlockLocations(FSNamesystem.java:1853)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getBlockLocations(FSNamesystem.java:1825)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getBlockLocations(NameNodeRpcServer.java:559)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.getBlockLocations(AuthorizationProviderProxyClientProtocol.java:87)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getBlockLocations(ClientNamenodeProtocolServerSideTranslatorPB.java:363)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:619)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1060)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2044)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2040)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1671)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2038)

at org.apache.hadoop.ipc.Client.call(Client.java:1468)

at org.apache.hadoop.ipc.Client.call(Client.java:1399)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:232)

at com.sun.proxy.$Proxy15.getBlockLocations(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getBlockLocations(ClientNamenodeProtocolTranslatorPB.java:254)

at sun.reflect.GeneratedMethodAccessor2.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:187)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)

at com.sun.proxy.$Proxy16.getBlockLocations(Unknown Source)

at sun.reflect.GeneratedMethodAccessor2.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.hbase.fs.HFileSystem$1.invoke(HFileSystem.java:279)

at com.sun.proxy.$Proxy17.getBlockLocations(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.callGetBlockLocations(DFSClient.java:1213)

... 25 more

at org.apache.hadoop.hbase.ipc.RpcClientImpl.call(RpcClientImpl.java:1219)

at org.apache.hadoop.hbase.ipc.AbstractRpcClient.callBlockingMethod(AbstractRpcClient.java:216)

at org.apache.hadoop.hbase.ipc.AbstractRpcClient$BlockingRpcChannelImplementation.callBlockingMethod(AbstractRpcClient.java:300)

at org.apache.hadoop.hbase.protobuf.generated.ClientProtos$ClientService$BlockingStub.bulkLoadHFile(ClientProtos.java:32663)

at org.apache.hadoop.hbase.protobuf.ProtobufUtil.bulkLoadHFile(ProtobufUtil.java:1607)

... 10 more

Solution:

Check the RegionServer log.

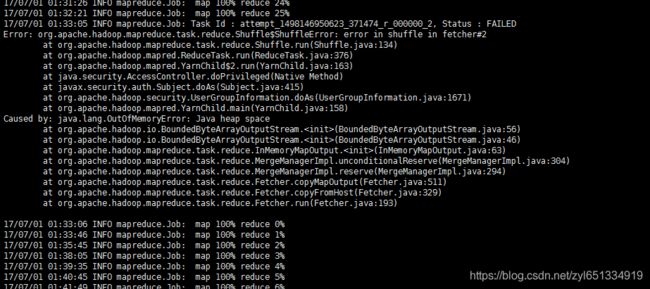

15、Out of memory

Solution:

Lower the mapreduce.reduce.shuffle.memory.limit.percent parameter.
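As a rough illustration of what the parameter controls: the reducer reserves a share of its heap for shuffle data (mapreduce.reduce.shuffle.input.buffer.percent), and a single map output is kept in memory only if it is smaller than that buffer multiplied by mapreduce.reduce.shuffle.memory.limit.percent; larger outputs are written to disk. The arithmetic below is a sketch under that assumption, with 0.70 and 0.25 used as the presumed defaults and a 1 GiB heap as an arbitrary example; the class and method names are made up.

```java
class ShuffleLimitSketch {
    /** Largest single map output (in bytes) that would still be buffered in memory. */
    static long maxSingleShuffleLimit(long reducerHeapBytes,
                                      double inputBufferPercent,
                                      double memoryLimitPercent) {
        long shuffleBuffer = (long) (reducerHeapBytes * inputBufferPercent);
        return (long) (shuffleBuffer * memoryLimitPercent);
    }

    public static void main(String[] args) {
        long heap = 1024L * 1024 * 1024; // example reducer heap of 1 GiB
        // Presumed defaults.
        System.out.println(maxSingleShuffleLimit(heap, 0.70, 0.25));
        // Lowered limit: more map outputs go straight to disk.
        System.out.println(maxSingleShuffleLimit(heap, 0.70, 0.10));
    }
}
```

Lowering the percentage shrinks the in-memory threshold, so fewer large map outputs compete for heap during the shuffle.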

16、Exception in thread "main" java.io.IOException: Trying to load more than 32 hfiles to one family of one region

at org.apache.hadoop.hbase.mapreduce.LoadIncrementalHFiles.doBulkLoad(LoadIncrementalHFiles.java:377)

at org.apache.hadoop.hbase.mapreduce.LoadIncrementalHFiles.run(LoadIncrementalHFiles.java:960)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.hadoop.hbase.mapreduce.LoadIncrementalHFiles.main(LoadIncrementalHFiles.java:967)

Solution:

1) You can change the program by adding the following line after setting the ZooKeeper client port.

configuration.setInt(LoadIncrementalHFiles.MAX_FILES_PER_REGION_PER_FAMILY,64);

2) You can add this property to the custom configs through Ambari and restart the cluster so that the new configs take effect.

3) You can add this property directly to /etc/conf/hbase-site.xml on the machine where you run the job, so that you need not change the program every time.

Or pass the property on the command line:

hbase org.apache.hadoop.hbase.mapreduce.LoadIncrementalHFiles -Dhbase.mapreduce.bulkload.max.hfiles.perRegion.perFamily=1024

17、hbase org.apache.hadoop.hbase.mapreduce.LoadIncrementalHFiles hdfs://NM-304-SA5212M4-BIGDATA-221:54310/tmp-dike/app_mbl_user_family_cir_info_m/20170702205018 app_mbl_user_family_cir_info_m

Solution: load the files into HBase one at a time.
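Loading one file at a time is the extreme case of splitting the HFiles into groups that stay under the per-family limit. The planning step can be sketched in plain Java as below. Only the grouping is shown; moving each group into its own directory and running LoadIncrementalHFiles on it is left out, and the file names, class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

class HFileBatchSketch {
    /** Splits the items into consecutive batches of at most maxPerBatch elements. */
    static <T> List<List<T>> batches(List<T> items, int maxPerBatch) {
        if (maxPerBatch < 1) {
            throw new IllegalArgumentException("maxPerBatch must be >= 1");
        }
        List<List<T>> result = new ArrayList<>();
        for (int i = 0; i < items.size(); i += maxPerBatch) {
            int end = Math.min(i + maxPerBatch, items.size());
            result.add(new ArrayList<>(items.subList(i, end)));
        }
        return result;
    }

    public static void main(String[] args) {
        // Placeholder names standing in for the HFiles of one column family.
        List<String> hfiles = new ArrayList<>();
        for (int i = 0; i < 70; i++) {
            hfiles.add("hfile-" + i);
        }
        // 32 is the limit quoted in the error message of item 16.
        for (List<String> batch : batches(hfiles, 32)) {
            System.out.println(batch.size() + " files, first: " + batch.get(0));
        }
    }
}
```

Calling batches(hfiles, 1) reproduces the one-file-at-a-time approach.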
