在真实游戏中使用机器学习代理工具包:初学者指南

My name is Alessia Nigretti and I am a Technical Evangelist for Unity. My job is to introduce Unity’s new features to developers. My fellow evangelist Ciro Continisio and I developed the first demo game that uses the new Unity Machine Learning Agents toolkit and showed it at DevGamm Minsk 2017. This post is based on our talk and explains what we learned making the demo. At the same time, we invite you to join the ML-Agents Challenge and show off your creative use-cases of the toolkit.

我叫Alessia Nigretti,我是Unity的技术传播者。 我的工作是向开发人员介绍Unity的新功能。 我和我的福音传教士Ciro Continisio一起开发了第一款使用新的Unity Machine Learning Agents工具包的演示游戏,并在DevGamm Minsk 2017上进行了演示。这篇文章基于我们的谈话,并解释了我们学习该演示的经验。 同时,我们邀请您参加ML-Agents挑战赛,并展示您的工具包的创意用例。

Last September, the Machine Learning team introduced the Machine Learning Agents toolkit with three demos and a blog post. The news ignited the interest of a huge number of developers who are either technical experts in the field of Artificial Intelligence or just interested in the ways Machine Learning is changing the world and the way we make and play games. As a consequence, the team has recently launched a new beta version (0.2) of the ML-Agents Toolkit and announced the very first community challenge dedicated to Unity Machine Learning Agents.

去年9月,机器学习团队推出了机器学习代理工具包,其中包含三个演示和一个 博客文章 。 该消息激发了众多开发人员的兴趣,这些开发人员要么是人工智能领域的技术专家,要么对机器学习正在改变世界的方式以及我们制作和玩游戏的方式感兴趣。 因此,该团队最近 发布了ML-Agents Toolkit的新测试版(0.2), 并宣布 了 针对Unity Machine Learning Agents的 第一个社区挑战 。

Ciro and I aren’t experts in Machine Learning, and we both wanted to highlight this during our talk. Since one of our main values at Unity is to democratise game development, we wanted to make sure that this new feature was available to everyone who felt like diving into it. First, we engaged with the Machine Learning team and produced some small demos to understand how this was working from the bottom. We had a lot of questions, and we figured those would be the same questions that a developer that is dealing with Machine Learning Agents for the first time would ask. So we wrote them all down and went through them during our talk. If you are reading this and you have no idea about what Machine Learning is or how it works, you can still find your way through the world of the Unity Machine Learning Agents Toolkit!

Ciro和我不是机器学习方面的专家,我们都希望在我们的演讲中强调这一点。 由于Unity的主要价值观之一就是使游戏开发民主化 ,因此我们希望确保希望加入该功能的每个人都可以使用此新功能。 首先,我们与机器学习团队合作,制作了一些小型演示,以从底层了解其工作原理。 我们有很多问题,我们认为这些问题与初次与机器学习代理打交道的开发人员会提出的问题相同。 因此,我们将它们全部写下来,并在谈话过程中进行了研究。 如果您正在阅读本文,但不知道什么是机器学习或它如何工作,您仍然可以在Unity Machine Learning Agents Toolkit的世界中找到自己的方式!

Our demo Machine Learning Roguelike is a 2D action game where the player has to fight ferocious smart slimes. These Machine Learning-controlled enemies will attack without giving our hero a break, and will run away when they feel like their life is in danger.

我们的演示机器学习Roguelike是一款2D动作游戏,玩家必须与凶猛的智能史莱姆作斗争。 这些由机器学习控制的敌人会在不中断我们英雄的情况下进行攻击,并在感到生命危险时逃跑。

什么是机器学习? (What is Machine Learning?)

Let’s start with an introduction to Machine Learning. Machine Learning is an application of artificial intelligence that provides a system with the ability of learning from data autonomously, rather than in an engineered way. This works by feeding the system with information and observations that can be used to find patterns and make predictions about future outcomes. More broadly, the system has to learn the desired input-output mapping. This way, the system will be able to choose the best action to perform next in order to optimise its outcome.

让我们从机器学习的介绍开始。 机器学习是人工智能的一种应用,它为系统提供了从数据中自动学习的能力,而无需以工程化的方式进行学习。 这通过向系统提供信息和观察结果来工作,这些信息和观察结果可用于发现模式并对未来结果进行预测。 更广泛地说,系统必须学习所需的输入-输出映射。 这样,系统将能够选择下一个要执行的最佳操作,以优化其结果。

There are different ways of doing this, that we can choose depending on what kind of observations we have available to feed our system. The one used in this context is Reinforcement Learning. Reinforcement Learning is a learning method based on not telling the system what to do, but only what is right and what is wrong. This means that we let the system perform random actions and we provide a reward or a punishment when we think that these actions are respectively right or wrong. Eventually, the system will understand that it has to perform certain actions in order to get a positive reward. We can think about it as teaching a dog how to sit: a dog will not understand what we mean, but if we feed it a treat when it does the right thing, it will eventually associate the action with the reward.

有多种方法可以执行此操作,我们可以根据提供给系统的观测值进行选择。 在这种情况下使用的是强化学习 。 强化学习是一种学习方法,其基础是不告诉系统要做什么,而只告诉对与错。 这意味着我们让系统执行随机动作,并且当我们认为这些动作是对还是错时,我们将提供奖励或惩罚。 最终,系统将了解必须执行某些操作才能获得积极的回报。 我们可以将其视为教狗如何坐着:狗不会理解我们的意思,但是如果我们在做正确的事时给它喂食,它最终将把动作与奖励联系起来。

We use Reinforcement Learning to train a Neural Network, which is a computer system modeled on the nervous system. Neural Networks are structured in units, or “neurons”. Neurons are divided into layers. The layer that interacts with the external environment and gathers all input information is the Input layer. Opposite to that, the Output layer contains all neurons that store information about the outcome of a certain input in the network. In the middle, the Hidden layers contain the neurons that perform all the calculations. They learn complex abstracted representations of the input data, which allow their outputs to ultimately be “intelligent”. Most layers are “fully connected”, which means that neurons in that layer are connected to all neurons in the previous layer. Each connection is defined by a “weight”, which is a numerical value that can either strengthen or weaken the link between the neurons.

我们使用强化学习来训练神经网络 ,这是一种以神经系统为模型的计算机系统。 神经网络以单位或“神经元”的形式构造。 神经元分为几层。 与外部环境交互并收集所有输入信息的层是输入层。 与此相反,输出层包含所有神经元,这些神经元存储有关网络中某些输入结果的信息。 在中间,隐藏层包含执行所有计算的神经元。 他们学习输入数据的复杂抽象表示,这使他们的输出最终成为“智能”的。 大多数层是“完全连接”的,这意味着该层中的神经元已连接到上一层中的所有神经元。 每个连接都由“权重”定义,“权重”是可以增强或减弱神经元之间链接的数值。

In Unity, our “agents” (the entities who perform the learning) use the Reinforcement Learning model. The agents perform actions on an environment. The actions cause a change in the environment, which in turn is fed back to the agent, together with some reward or punishment. The action happens in what we call the Learning Environment, which in practical terms corresponds to a regular Unity scene.

在Unity中,我们的“代理商”(执行学习的实体)使用强化学习模型。 代理在环境上执行操作。 这些行为会导致环境的变化,而环境的变化又会反馈给代理商,并带来一些奖励或惩罚。 该动作发生在我们所谓的学习环境中,该环境实际上与常规的Unity场景相对应。

Within the Learning Environment, we have an Academy, which is a script that defines the properties of the training (framerate, timescale…). The Academy passes these parameters to the Brains, which are entities that contain the model to train. Finally, the Agents are connected to the Brains, from which they get the actions and feed information back to facilitate the learning process. To perform the training, the system uses an extra module that allows the Brains to communicate with an external Python environment using the TensorFlow Machine Learning library. Once the training is complete, this environment will distill the learning process into a “model”, a binary file that gets reimported into Unity to be used as a trained Brain.

在学习环境中,我们有一所学院,它是一个脚本,用于定义培训的属性(帧速率,时间比例...)。 学院将这些参数传递给大脑,大脑是包含要训练的模型的实体。 最后,特工连接到大脑,他们从中获得动作并反馈信息以促进学习过程。 为了执行训练,系统使用了一个额外的模块,该模块允许Brains使用TensorFlow Machine Learning库与外部Python环境进行通信。 一旦训练完成,此环境将把学习过程提炼为“模型”,即一个二进制文件,该文件将重新导入到Unity中以用作受过训练的Brain。

将机器学习应用于真实游戏 (Applying Machine Learning to a real game)

After playing around with simple examples like 3D Ball and other small projects that we created in a few hours (see gifs), we were fascinated by the potential of this technology.

在玩了一些简单的示例(例如 3D Ball) 和我们在几个小时内创建的其他小项目(请参见gif)后,我们对这项技术的潜力着迷了。

|

|

|

|

|

|

We found a great application for it in a 2D Roguelike game. To build the scene, we also used new features such as 2D Tilemaps and Cinemachine 2D.

我们在2D Roguelike游戏中找到了很好的应用程序。 为了构建场景,我们还使用了2D Tilemaps和Cinemachine 2D等新功能。

The idea behind our Machine Learning Roguelike demo was to have a simple action game where all entities are Machine Learning agents. This way, we established a common interaction language that can be used both by the players and the enemies that they encounter. The goal is very simple: moving around and surviving enemy encounters.

我们的机器学习Roguelike演示背后的想法是要有一个简单的动作游戏,其中所有实体都是机器学习代理。 这样,我们建立了一种通用的交互语言,玩家和遇到的敌人都可以使用。 目标非常简单:四处走动并幸存下来。

设置培训 (Setting up the training)

Every good training starts with a high-level brainstorming session on the training algorithm. This is the code that runs every frame into the agent’s class, determining the effect of inputs on the agent and the rewards they generate. We started off by defining what the base actions of the game are (move, attack, heal…), how they connect with each other, what we wanted the agent to learn in respect to them, what we were going to reward or punish.

每次良好的培训都从针对培训算法的高级集体讨论开始。 这是运行在代理商类中每一帧的代码,确定输入对代理商的影响以及代理商产生的报酬。 我们首先定义游戏的基本动作是什么(移动,攻击,治愈……),它们如何相互联系,我们希望特工从中学习到有关它们的知识,我们将要奖励或惩罚的东西。

One of the first decisions to make is whether to use Discrete or Continuous space for States and Actions. Discrete means that the states and actions can only have one value at a time – it is a simplified version of a real environment, therefore the agents can associate actions with rewards more easily. In our example, we use Discrete actions, which we allow to have 6 values: 0: stay still / 1-4: move in one direction / 5: attack. Continuous means that there can be multiple states or actions, and they all have float values. However, they are harder to use as their variability can confuse the agent. In Roguelike, we use Continuous states to check for health, target, distance from target etc.

首先要做出的决定之一是对国家和行动使用离散空间还是连续空间。 离散意味着状态和动作一次只能具有一个值–这是真实环境的简化版本,因此代理可以更轻松地将动作与奖励相关联。 在我们的示例中,我们使用离散动作,该动作允许有6个值:0:保持静止/ 1-4:向一个方向移动/ 5:攻击。 连续表示可以有多个状态或动作,并且它们都具有浮点值。 但是,它们更难使用,因为它们的可变性会使代理混淆。 在Roguelike中,我们使用连续状态来检查健康状况,目标,距目标的距离等。

奖励功能 (The reward function)

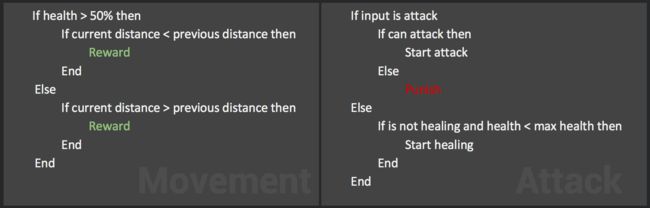

The initial algorithm we came up with was allowing the agent to earn reward if, when not in a situation of danger, its distance from the target was decreasing. Similarly, when in a situation of danger, it would earn reward if its distance from the target was increasing (the agent was “running away”). Additionally, the algorithm was including a punishment given to the agent in case it was attacking when not allowed to.

我们提出的最初算法是,如果不在危险情况下,代理与目标的距离在减小,则代理可以赢得奖励。 同样,在危险情况下,如果距目标的距离增加(特工“逃跑”),它将获得奖励。 此外,该算法还包括对代理的惩罚,以防万一它在不允许时发动攻击。

After days and days of testing, this initial algorithm changed a lot. What we learned is that the best way of reproducing the behaviour that we wanted to obtain, was to follow a simple pattern: try, fail, learn from your failure, loop. Like we do when we want the machine to learn from the observations we provide, we also want to learn from the observations that we make through trial and error. One of the things that we noticed, for example, is that the agent will always find a way to exploit the rewards: what happened in our case is that the agent started moving back and forth because for every time it would move forward again, it would get some reward. It basically found a way of taking advantage of our algorithm to get the most reward out of it!

经过数天的测试,这种初始算法发生了很大变化。 我们了解到,再现我们想要获得的行为的最佳方法是遵循一种简单的模式:尝试,失败,从失败中学习,循环。 就像我们希望机器从我们提供的观察结果中学习时一样,我们也希望从试验和错误中获得的观察结果中学习。 例如,我们注意到的一件事是,代理总是会找到一种利用奖励的方法:在我们的案例中,情况是代理开始来回移动,因为每次它再次向前移动时,会得到一些回报。 它基本上找到了一种利用我们的算法从中获得最大回报的方法!

我们学到了什么 (What we learned)

From our research for a suitable solution, we learned many different lessons that we managed to apply successfully to our final project. It is fundamental to keep in mind that rewards can be assigned at any moment of the gameplay, whenever we think that the agent is doing something right.

通过针对合适解决方案的研究,我们吸取了许多成功的经验教训,并成功地应用于了最终项目。 牢记最基本的一点是,只要我们认为代理人在做正确的事情,就可以在游戏的任何时刻分配奖励。

培训现场 (The training scene)

我们学到了什么 (What we learned)

From setting up the training scene, we learned that parallelizing training instead of just repeating the same situation several times makes the training more solid, as the agents have more data to work with and learn from. To do this, it is useful to set-up a few Heuristic agents to perform simple actions that help the agents learn, without leading them into making wrong assumptions. For instance, we set-up a simple Heuristic script to make our agents attack randomly, to provide our training agents with an example of what happens when they get receive damage. Once our environment is ready, we can launch the training. If we’re planning on launching a very long training, it is good to double-check that the logic of your algorithm makes sense. This isn’t about compile-time errors or whether you are a good programmer or not – as I mentioned, the agents will find a way of exploiting your algorithm, so make sure that there is no logic gap. To be even more sure, launch a 1x speed training to see what happens at each frame. Observe it and see if your agent is acting like you were expecting it to.

通过设置训练场景,我们了解到并行化训练(而不是仅仅重复几次相同的情况)会使训练更加扎实,因为座席有更多的数据可以使用和学习。 为此,设置一些启发式代理以执行有助于代理学习的简单操作非常有用,而不会导致他们做出错误的假设。 例如,我们设置了一个简单的启发式脚本来使我们的特工随机发动攻击,从而为我们的训练特工提供一个示例,说明他们受到伤害时会发生什么。 环境准备就绪后,我们就可以开始培训了。 如果我们计划进行很长的培训,最好再次检查算法的逻辑是否合理。 这不是关于编译时错误,也不是关于您是否是一名优秀的程序员,正如我所提到的,代理将找到一种利用算法的方法,因此请确保没有逻辑鸿沟。 更加确定的是,发起1倍速训练以查看每帧发生的情况。 观察它,看看您的代理人的行为是否与您期望的一样。

训练 (Training)

Once everything is ready for training, we need to create a build that will interact with the TensorFlow environment in Python. First, we set the Brain that has to be trained externally as “External”, then we build the game. Once this is done, we open the Python environment, set the hyperparameters and launch the training. In the Machine Learning Readme page you can find information on how to build for training and how to choose your hyperparameters.

一旦一切准备就绪,我们就需要创建一个构建版本,该构建版本将与Python中的TensorFlow环境进行交互。 首先,我们将必须在外部进行训练的大脑设置为“外部”,然后构建游戏。 完成此操作后,我们将打开Python环境,设置超参数并开始培训。 在机器学习 自述文件页面中, 您可以找到有关 如何构建训练 以及 如何选择超参数的信息 。

While we’re running the training, we can observe the mean reward that our agents are earning. This should be increasing slowly, until it stabilises – which should happen when the agents have stopped learning.

在进行培训时,我们可以观察到代理商所获得的平均报酬。 它应该缓慢增加,直到稳定为止,这应该在特工停止学习时发生。

When we’re happy with our model, we import it back into Unity and see what happens.

当我们对模型感到满意时,我们将其导入回Unity中,看看会发生什么。

The gif shows the result of ~1h training: as we wanted, our slime gets closer to the other agent to attack until it gets attacked back and loses most of its health. At that point, it runs away to give itself time to heal, then goes back to attack the opponent.

gif显示了大约1h训练的结果:根据我们的需要,我们的粘液会更靠近另一个代理进行攻击,直到它被攻击回来并失去大部分生命。 在那一点上,它消失了,给了自己时间来治愈,然后又回击了对手。

测试中 (Testing)

When we apply this model to our Roguelike game, we can see that it works the same way. Our slimes have learnt how to act intelligently and to adapt to scenarios that are potentially different from the ones where they have been trained in.

当我们将此模型应用于Roguelike游戏时,我们可以看到它的工作方式相同。 我们的史莱姆学会了如何聪明地行动并适应与他们所接受的培训方案可能不同的方案。

现在怎么办? (What now?)

This article was meant to provide a little demonstration of how much you can do with the Unity’s Machine Learning Agents API without necessarily having any specific technical knowledge about Machine Learning. If you want to take a look at this demo, it is available in the Evangelism Team repository.

本文旨在提供一些示范,说明您可以使用Unity的机器学习代理API进行多少操作,而不必具备有关机器学习的任何特定技术知识。 如果您想看一下这个演示,可以在Evangelism Team信息库中找到 。

There is a lot more that you can do to implement Machine Learning in a game that you have already started to work on: one example is to mix trained and heuristics, to hard-code behaviour that would be too complex to train, but also give some realism to your games using ML-Agents.

在已经开始工作的游戏中,您可以做很多事情来实现机器学习:一个例子是将训练有素的启发式方法混合在一起,对难以训练的行为进行硬编码,但也要给使用ML-Agents为您的游戏带来一些真实感。

If you want to add more finesse to the training, you can also put together information about the game style of real players, their strategies and intentions through a closed beta or internal playtesting. Once you have this data, you can further refine the training of your agents to get the next level of AI complexity. And if you feel adventurous, you can try building your own Machine Learning models and algorithm to obtain even more flexibility!

如果您想为培训增加更多技巧,您还可以通过封闭Beta或内部测试来汇总有关真实玩家的游戏风格,他们的策略和意图的信息。 获得这些数据后,您可以进一步完善对代理商的培训,以使AI复杂性再上一个台阶。 如果您喜欢冒险,可以尝试构建自己的机器学习模型和算法以获得更大的灵活性!

Now that you learned how to use ML-Agents, come and join the ML-Agents Challenge!

既然您已经学会了如何使用ML-Agent,那就来加入 ML-Agents Challenge !

Look forward to seeing you there!

期望在那里见到你!

Recommended Readings:

推荐读物:

Introducing ML-Agents Toolkit v0.2: Curriculum Learning, new environments, and more

引入ML-Agents工具包v0.2:课程学习,新环境等

Introducing: Unity Machine Learning Agents Toolkit

简介:Unity机器学习代理工具包

Unity AI – Reinforcement Learning with Q-Learning

Unity AI –通过Q学习进行强化学习

Unity AI-themed Blog Entries

Unity AI主题博客条目

翻译自: https://blogs.unity3d.com/2017/12/11/using-machine-learning-agents-in-a-real-game-a-beginners-guide/