Using bert-tensorflow for Text Classification

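1 Preface

This post is a record of learning to apply BERT. Plenty of code and introductions are already available online, so the focus here is on practical use. Of the many code sources consulted, the one listed here is Google's official notebook:

https://github.com/google-research/bert/blob/master/predicting_movie_reviews_with_bert_on_tf_hub.ipynb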

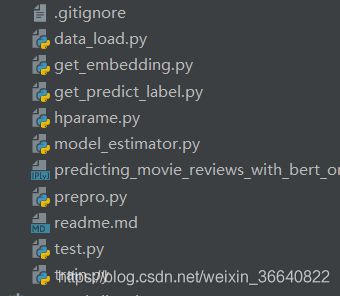

2 Project structure

3 Workflow

1. data_load.py

    import csv
    import os

    import pandas as pd
    import tensorflow as tf  # TF 1.x, which provides tf.gfile


    def load_directory_data(directory):
        """Load every file in `directory` into a DataFrame with sentence/polarity columns."""
        data = {}
        data["sentence"] = []
        # data['sentiment'] = []
        data["polarity"] = []
        for file_path in os.listdir(directory):
            with tf.gfile.GFile(os.path.join(directory, file_path), "r") as f:
                # txt
                # data["sentence"].append(f.read())
                # csv: column 0 is the sentence, column 1 is the integer label
                reader = csv.reader(f, delimiter=",")
                for line in reader:
                    data["sentence"].append(line[0])
                    # data['sentiment'].append(line[2])
                    data['polarity'].append(int(line[1]))
                # data["sentiment"].append(re.match("(\w+)\.csv", file_path).group(1))
        return pd.DataFrame.from_dict(data)

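This part loads the data. Reading from multiple txt files and from csv files are both implemented (the txt variant is commented out). The main columns are sentence and polarity; the latter serves as the label. The figure below shows the result of running it.

As can be seen, fine-tuning mainly operates on the sentence column, and the rows can be shuffled.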
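To make the expected file layout concrete, here is a minimal sketch that parses rows in the same shape (sentence in column 0, integer polarity in column 1) and then shuffles them. It uses only the standard library and an in-memory string instead of real files. `parse_rows`, `shuffle_rows` and the sample rows are hypothetical and not part of the project.

```python
import csv
import io
import random


def parse_rows(csv_text):
    """Parse csv text whose rows are `sentence,polarity` into a dict of columns."""
    data = {"sentence": [], "polarity": []}
    for row in csv.reader(io.StringIO(csv_text), delimiter=","):
        if not row:  # csv.reader yields [] for blank lines; skip them
            continue
        data["sentence"].append(row[0])
        data["polarity"].append(int(row[1]))
    return data


def shuffle_rows(data, seed=0):
    """Shuffle sentences and labels together so each pair stays aligned."""
    pairs = list(zip(data["sentence"], data["polarity"]))
    random.Random(seed).shuffle(pairs)
    sentences, labels = zip(*pairs)
    return {"sentence": list(sentences), "polarity": list(labels)}


# Made-up sample rows; a quoted field may contain the delimiter.
sample = 'a good movie,1\n"slow, dull and too long",0\nloved it,1\n'
data = parse_rows(sample)
shuffled = shuffle_rows(data)
```

With a pandas DataFrame, as returned by load_directory_data, the usual way to get the same effect is `df.sample(frac=1)`.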

2. hparame.py

    import argparse

    parser = argparse.ArgumentParser()

    # data preprocessing
    parser.add_argument('--DATA_COLUMN', default="sentence", help="data column")
    parser.add_argument('--LABEL_COLUMN', default="polarity", help="polarity")
    parser.add_argument('--label_list', default="0,1", help="label_list ")

    # This is a path to an uncased (all lowercase) version of BERT
    # parser.add_argument("--BERT_MODEL_HUB", default="https://tfhub.dev/google/bert_uncased_L-12_H-768_A-12/1")
    parser.add_argument("--BERT_INIT_CHKPNT", default="./bert_pretrain_model/bert_model.ckpt")
    parser.add_argument("--BERT_VOCAB", default="./bert_pretrain_model/vocab.txt")
    parser.add_argument("--BERT_CONFIG", default="./bert_pretrain_model/bert_config.json")

    # We'll set sequences to be at most 128 tokens long.
    parser.add_argument("--MAX_SEQ_LENGTH", default=128, type=int)

    # train hyper-parameters
    parser.add_argument("--BATCH_SIZE", default=32, type=int)
    parser.add_argument("--LEARNING_RATE", default=2e-5, type=float)
    parser.add_argument("--NUM_TRAIN_EPOCHS", default=3.0, type=float)

    # Warmup is a period of time where the learning rate
    # is small and gradually increases--usually helps training.
    parser.add_argument("--WARMUP_PROPORTION", default=0.1, type=float)

    # Model configs
    parser.add_argument("--SAVE_CHECKPOINTS_STEPS", default=500, type=int)
    parser.add_argument("--SAVE_SUMMARY_STEPS", default=100, type=int)

    # save model
    parser.add_argument("--OUTPUT_DIR", default="./save_model/")
    parser.add_argument("--model_output", default="bert_model")

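This file holds the parameters: the label list, the paths of the pretrained model that is read and of the model that is saved, the number of training epochs, and so on.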
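As a rough illustration of how these values are typically consumed, the sketch below parses a few of the arguments and derives the number of training and warmup steps. The step arithmetic follows the pattern used in Google's notebook and is an assumption about how model_estimator.py uses these values; `num_train_examples` is a made-up size.

```python
import argparse

parser = argparse.ArgumentParser()
parser.add_argument("--label_list", default="0,1")
parser.add_argument("--BATCH_SIZE", default=32, type=int)
parser.add_argument("--NUM_TRAIN_EPOCHS", default=3.0, type=float)
parser.add_argument("--WARMUP_PROPORTION", default=0.1, type=float)

# Passing an explicit list behaves like the command line: it overrides the defaults.
hp = parser.parse_args(["--BATCH_SIZE", "16"])

# "0,1" -> [0, 1], the same conversion prepro.py applies to label_list.
label_list = [int(i) for i in hp.label_list.split(",")]

num_train_examples = 5000  # hypothetical training-set size
num_train_steps = int(num_train_examples / hp.BATCH_SIZE * hp.NUM_TRAIN_EPOCHS)
num_warmup_steps = int(num_train_steps * hp.WARMUP_PROPORTION)
```

Keeping label_list as a comma-separated string means the same script can be reused for a task with more classes by passing, for example, `--label_list 0,1,2`.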

3. prepro.py

    import bert
    from bert import run_classifier, tokenization

    # Assumption: download_and_load_datasets lives in data_load.py (it is not shown in this post).
    from data_load import download_and_load_datasets


    def create_tokenizer_from_hub_module(hp):
        """
        create tokenizer
        :return:
        """
        # Check that the lower-casing flag is consistent with the checkpoint name.
        tokenization.validate_case_matches_checkpoint(True, hp.BERT_INIT_CHKPNT)
        return tokenization.FullTokenizer(vocab_file=hp.BERT_VOCAB, do_lower_case=True)


    def process_data(hp):
        tokenizer = create_tokenizer_from_hub_module(hp)
        train, test = download_and_load_datasets()
        # print(train)
        # train = train.sample(5000)
        # test = test.sample(5000)

        # Use the InputExample class from BERT's run_classifier code to create examples from the data
        train_InputExamples = train.apply(
            lambda x: bert.run_classifier.InputExample(
                guid=None,  # Globally unique ID for bookkeeping, unused in this example
                text_a=x[hp.DATA_COLUMN],
                text_b=None,
                label=x[hp.LABEL_COLUMN]),
            axis=1)
        test_InputExamples = test.apply(
            lambda x: bert.run_classifier.InputExample(
                guid=None,
                text_a=x[hp.DATA_COLUMN],
                text_b=None,
                label=x[hp.LABEL_COLUMN]),
            axis=1)
        # print(tokenizer.tokenize("This here's an example of using the BERT tokenizer"))

        # Convert our train and test features to InputFeatures that BERT understands.
        label_list = [int(i) for i in hp.label_list.split(",")]
        train_features = run_classifier.convert_examples_to_features(
            train_InputExamples, label_list, hp.MAX_SEQ_LENGTH, tokenizer)
        test_features = run_classifier.convert_examples_to_features(
            test_InputExamples, label_list, hp.MAX_SEQ_LENGTH, tokenizer)
        return train_features, test_features

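There are two main pieces here: BERT's run_classifier module, which is used for fine-tuning (here it wraps the rows as InputExample objects and converts them into input features), and create_tokenizer_from_hub_module, which builds the tokenizer.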
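To give a feel for what the feature conversion produces for a single sentence, here is a simplified sketch. It is not BERT's implementation: whitespace splitting stands in for WordPiece tokenization, and `to_features` and `toy_vocab` are hypothetical. The overall shape (add [CLS] and [SEP], truncate, then pad ids, mask and segment ids to MAX_SEQ_LENGTH) is the part it is meant to show.

```python
def to_features(text, vocab, max_seq_length):
    """Build input_ids, input_mask and segment_ids for a single sentence."""
    tokens = text.lower().split()          # stand-in for WordPiece tokenization
    tokens = tokens[: max_seq_length - 2]  # leave room for [CLS] and [SEP]
    tokens = ["[CLS]"] + tokens + ["[SEP]"]

    input_ids = [vocab.get(tok, vocab["[UNK]"]) for tok in tokens]
    input_mask = [1] * len(input_ids)      # 1 marks real tokens, 0 marks padding
    segment_ids = [0] * len(input_ids)     # single sentence, so every segment id is 0

    # Pad all three lists with zeros up to the fixed sequence length.
    padding = [0] * (max_seq_length - len(input_ids))
    return input_ids + padding, input_mask + padding, segment_ids + padding


# Tiny made-up vocabulary; a real vocab.txt has tens of thousands of entries.
toy_vocab = {"[PAD]": 0, "[UNK]": 1, "[CLS]": 2, "[SEP]": 3, "good": 4, "movie": 5}
ids, mask, segments = to_features("A good movie", toy_vocab, max_seq_length=8)
```

Here the word "a" is not in the toy vocabulary, so it maps to the [UNK] id, and the last three positions are padding with a mask of 0.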

4. model_estimator.py

    return {
        "eval_accuracy": accuracy,
        "f1_score": f1_score,
        "auc": auc,
        "precision": precision,
        "recall": recall,
        "true_positives": true_pos,
        "true_negatives": true_neg,
        "false_positives": false_pos,
        "false_negatives": false_neg
    }

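This is the main training file. Once the parameters in the previous parts are set, training can be run directly. It returns metrics including accuracy, recall and F1, and you can choose which ones to report.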
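The relationship between the returned counts and the derived metrics can be sketched in plain Python. This is only an illustration of the standard formulas, not the code in model_estimator.py; `classification_metrics` and the counts passed to it are hypothetical.

```python
def classification_metrics(true_pos, true_neg, false_pos, false_neg):
    """Derive accuracy, precision, recall and F1 from confusion-matrix counts."""
    total = true_pos + true_neg + false_pos + false_neg
    predicted_pos = true_pos + false_pos
    actual_pos = true_pos + false_neg

    accuracy = (true_pos + true_neg) / total if total else 0.0
    precision = true_pos / predicted_pos if predicted_pos else 0.0
    recall = true_pos / actual_pos if actual_pos else 0.0
    # F1 is the harmonic mean of precision and recall.
    denom = precision + recall
    f1_score = 2 * precision * recall / denom if denom else 0.0

    return {
        "eval_accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1_score": f1_score,
    }


# Made-up counts for a 100-example evaluation set.
metrics = classification_metrics(true_pos=40, true_neg=45, false_pos=5, false_neg=10)
```

Looking at the four raw counts alongside accuracy helps when the classes are imbalanced, since accuracy alone can hide a poor recall on the minority class.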

5. get_predict_label.py and get_embedding.py

    if use_sentence:
        output_layer = model.get_pooled_output()
    else:
        output_layer = model.get_sequence_output()
    return output_layer

[('i dont know why people think this is such a bad movie its got a pretty good plot some good action and the change of location for harry does not hurt either sure some of its offensive and gratuitous but this is not the only movie like that eastwood is in good form as dirty harry and i liked pat hingle in this movie as the small town cop if you liked dirty harry then you should see this one its a lot better than the dead pool', array([-0.21832937, -1.6289296 ], dtype=float32), '0'), ('i watched this video at a friends house im glad i did not waste money buying this one the video cover has a scene from the 1975 movie capricorn one the movie starts out with several clips of rocket blow-ups most not related to manned flight sibrels smoking gun is a short video clip of the astronauts preparing a video broadcast he edits in his own voice-over instead of letting us listen to what the crew had to say the video curiously ends with a showing of the zapruder film his claims about radiation shielding star photography and others lead me to believe is he extremely ignorant or has some sort of ax to grind against nasa the astronauts or american in general his science is bad and so is this video.', array([-0.6369945, -0.7526416], dtype=float32), '0'), ('this movie is full of references like mad max ii the wild one and many others the ladybug´s face it´s a clear reference or tribute to peter lorre this movie is a masterpiece we´ll talk much more about in the future', array([-1.7239381 , -0.19645582], dtype=float32), '1'), ('what happens when an army of wetbacks towelheads and godless eastern european commies gather their forces south of the border gary busey kicks their butts of course another laughable example of reagan-era cultural fallout bulletproof wastes a decent supporting cast headed by l q jones and thalmus rasulala', array([-1.4781466 , -0.25884843], dtype=float32), '1')]

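Finally, of course, we get the vectors we want, either word vectors or sentence vectors. model.get_pooled_output() returns one vector per sentence (pooled from the first [CLS] token), while model.get_sequence_output() returns one vector per token, i.e. the word vectors. To see the predicted class in a classification task, call get_predict_label.py. The result above contains, for each input, the output score for every label and the predicted label. The scores appear to be log-probabilities, since exponentiating each pair gives values that sum to roughly 1.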
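If the scores in the output above are log-probabilities, which is an assumption based on the values rather than something the code shown here confirms, they can be turned into probabilities and a label as in the following sketch. `to_probabilities` and `predict_label` are hypothetical helpers; the two score pairs are copied from the result above.

```python
import math


def to_probabilities(log_probs):
    """Exponentiate log-probabilities to recover probabilities."""
    return [math.exp(v) for v in log_probs]


def predict_label(log_probs, label_list):
    """Return the label whose score is highest."""
    best = max(range(len(log_probs)), key=lambda i: log_probs[i])
    return label_list[best]


labels = ["0", "1"]

first_scores = [-0.21832937, -1.6289296]   # first review in the output above
third_scores = [-1.7239381, -0.19645582]   # third review in the output above

first_probs = to_probabilities(first_scores)
first_label = predict_label(first_scores, labels)
third_label = predict_label(third_scores, labels)
```

Taking the argmax of the raw scores gives the same label as taking it over the probabilities, because exp is monotonic.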

The code will be uploaded once it has been cleaned up.