Kubernetes (k8s) Study Notes: Atguigu Course by Teacher Wang Ze, from Basics to Advanced

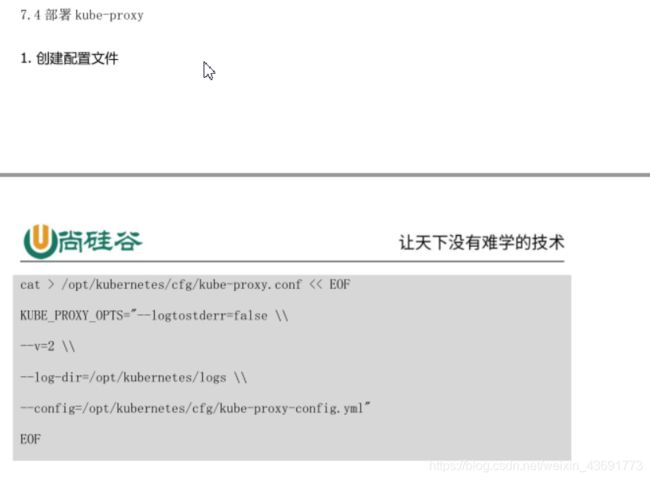

Kubernetes (k8s) Study Notes, Atguigu: the course by Teacher Wang Ze (not Wang Yang)

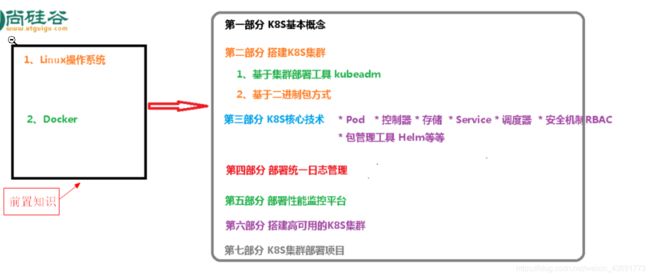

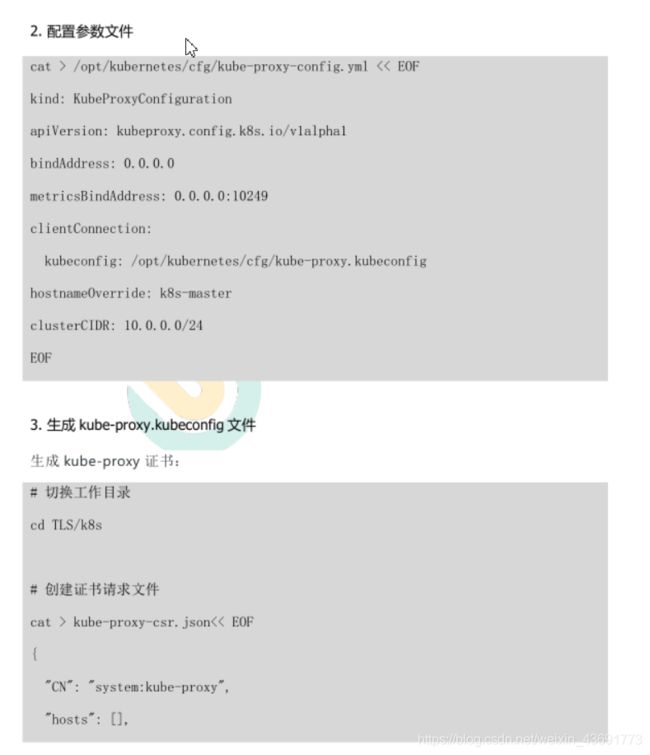

Table of Contents

- Kubernetes (k8s) Study Notes, Atguigu (Teacher Wang Ze)
- Introduction and Features of Kubernetes
- Introduction
- Kubernetes Functions and Architecture
- Overview
- K8s Functions

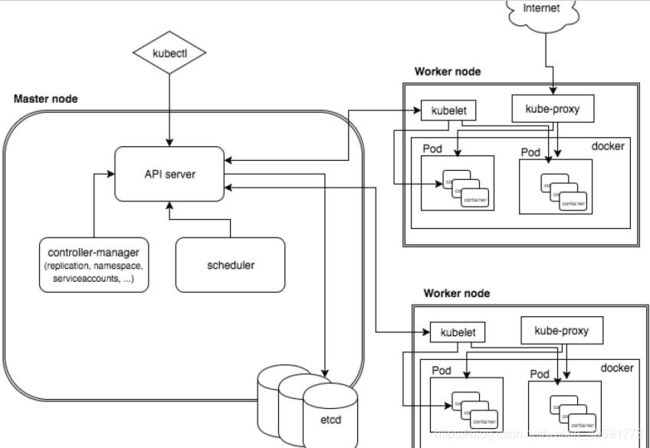

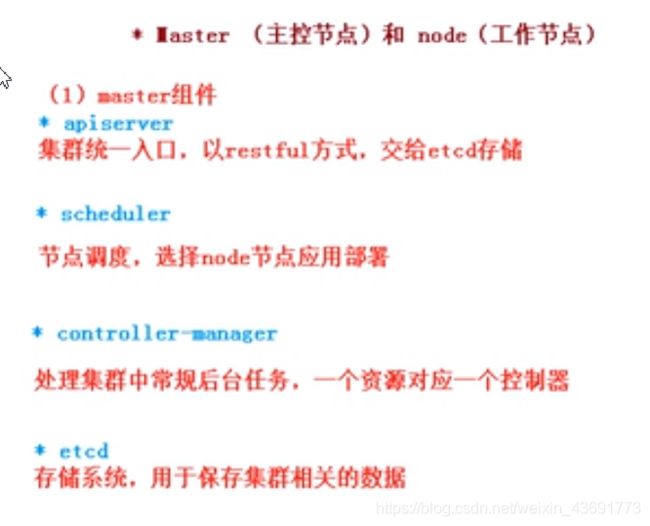

- K8s Cluster Architecture Components

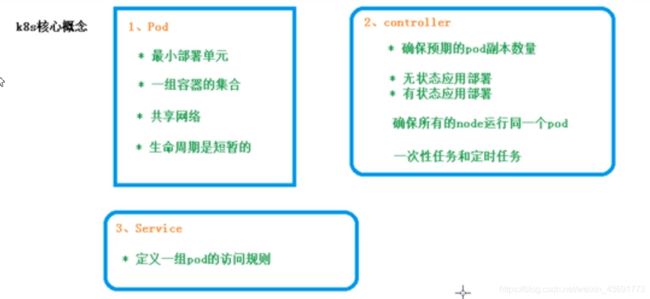

- K8s Core Concepts

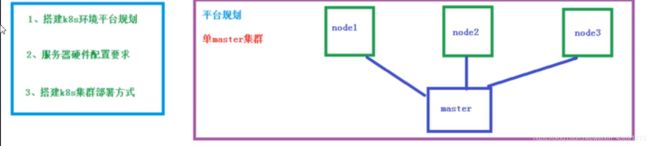

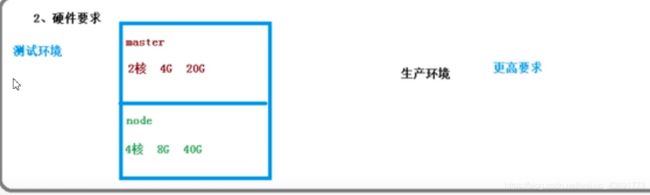

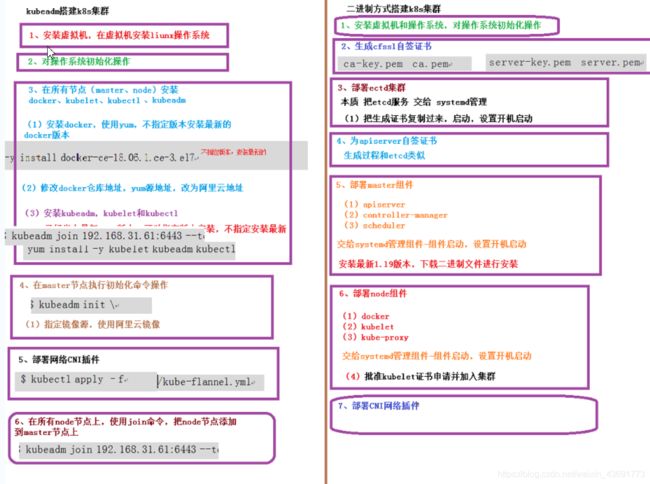

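- Setting Up a K8s Cluster
- The kubeadm Method
- Installing kubeadm (Single-Master Cluster)
- Deploying the Kubernetes Master
- Binary Installation (Single-Master Cluster)
- Installation
- Deploying the Etcd Cluster
- Summary of the Two Installation Methods
- Kubernetes Core Technologies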

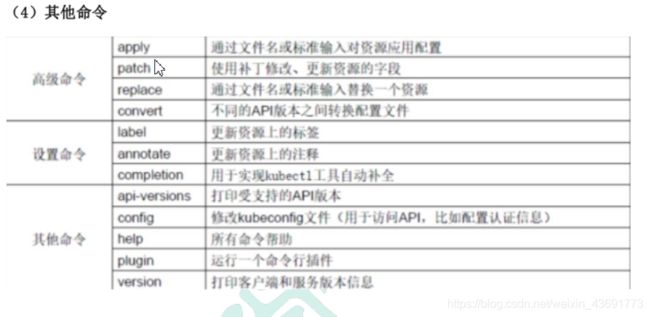

- Command-Line Tool (kubectl)
- Overview
- Command Syntax
- Resource Orchestration with YAML
- Introduction
- How to Write YAML

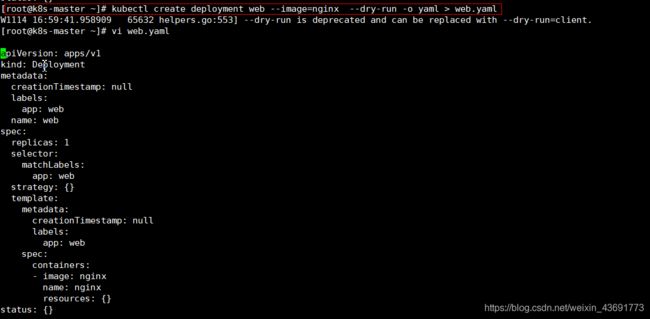

- Generating YAML with kubectl create
- Exporting YAML with kubectl get
- Pod

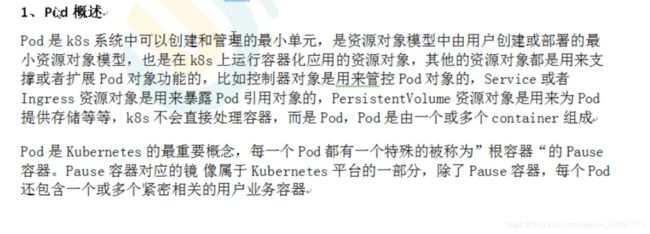

- Pod Overview and Why Pods Exist

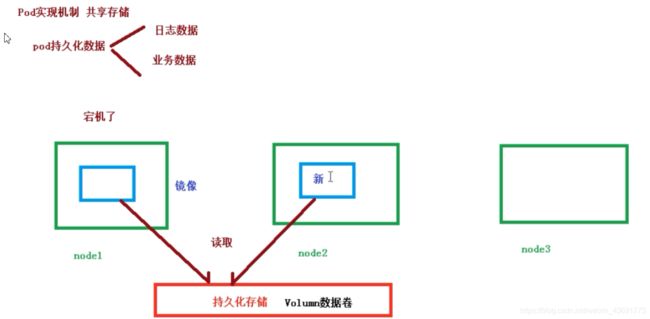

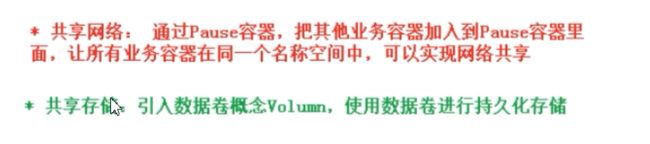

- Two Implementation Mechanisms of a Pod

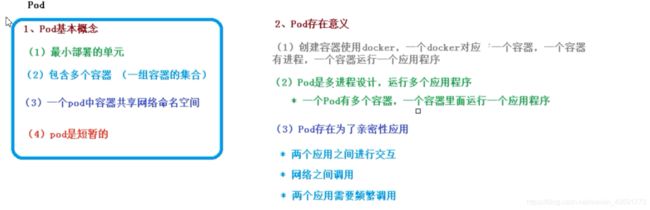

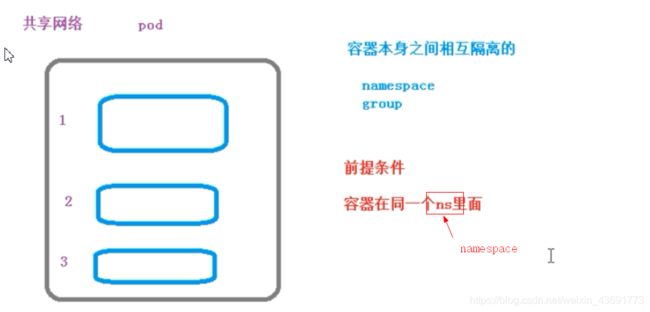

- Shared Network
- Shared Storage
- Summary of the Mechanisms

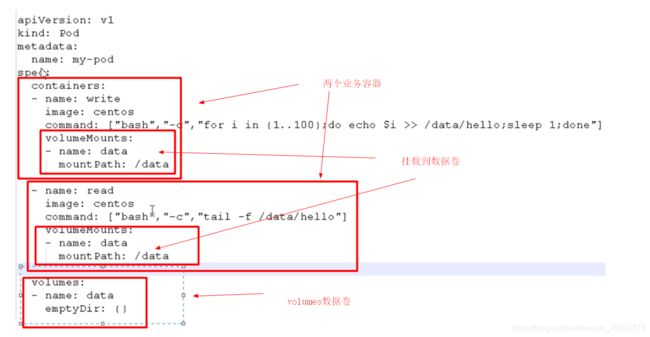

- Pod Image Pull Policy, Restart Policy, and Resource Limits
- Image Pull Policy

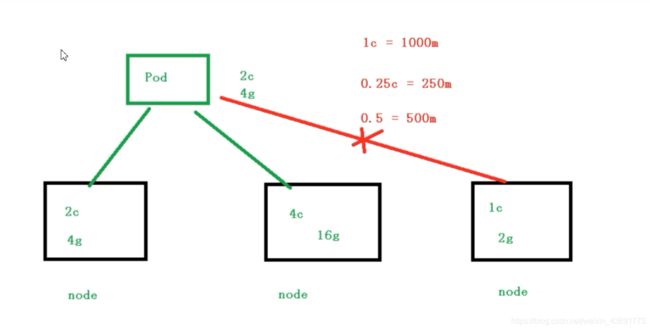

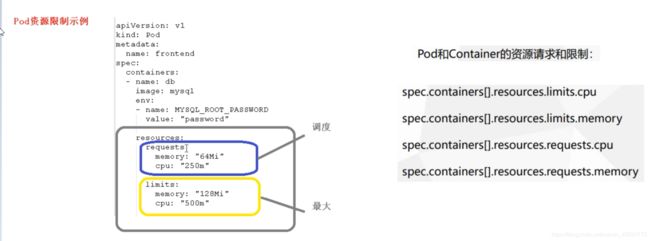

- Pod Resource Limits
- Pod Restart Policy

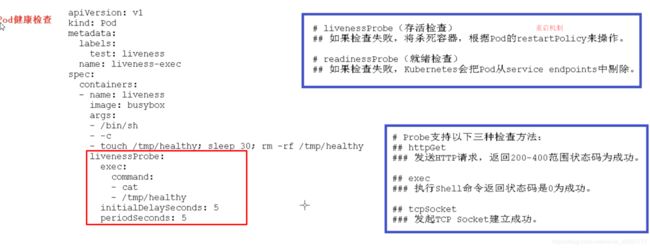

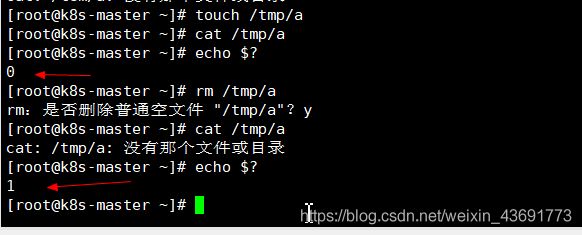

- Pod Health Checks
- Pod Scheduling

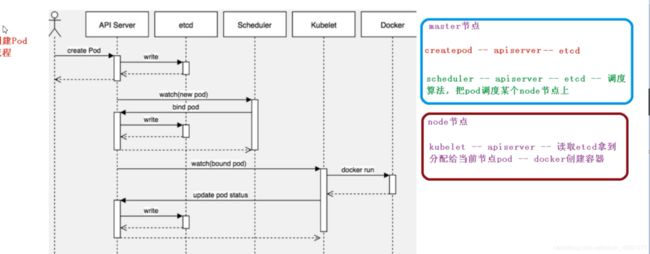

- Pod Creation Flow

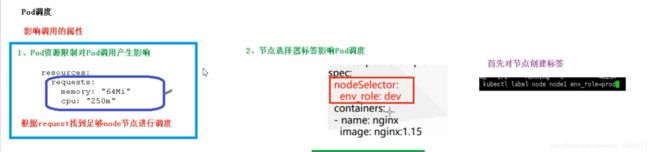

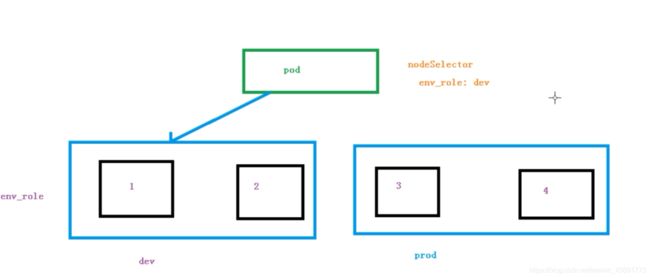

- Factors Affecting Pod Scheduling (Resource Limits and Node Selectors)

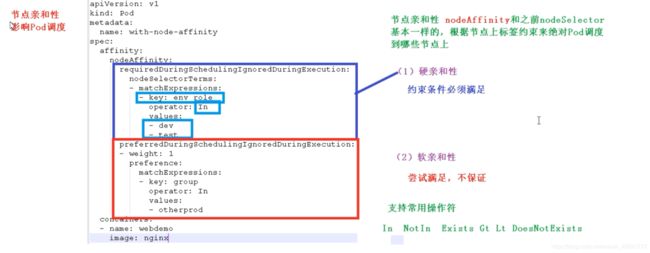

- Factors Affecting Pod Scheduling (Node Affinity)

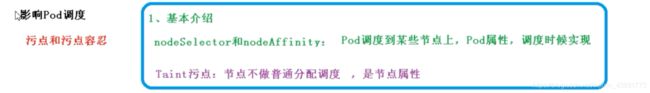

- Factors Affecting Pod Scheduling (Taints and Tolerations)

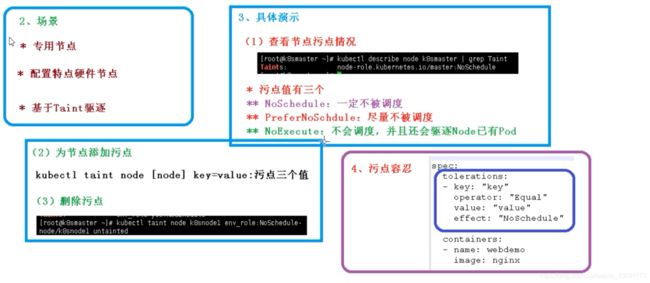

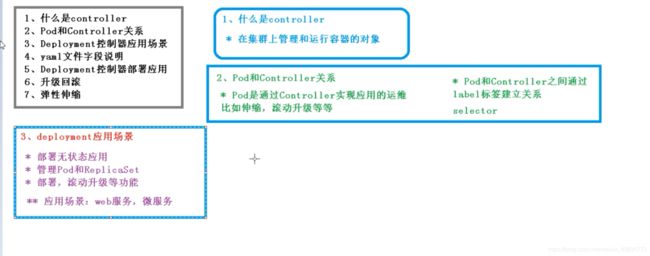

- Controller (Deployment)
- Overview and Use Cases
- Deploying an Application

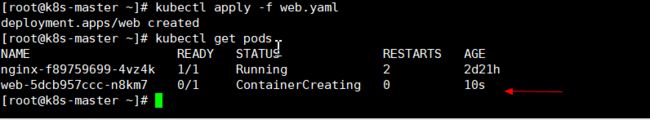

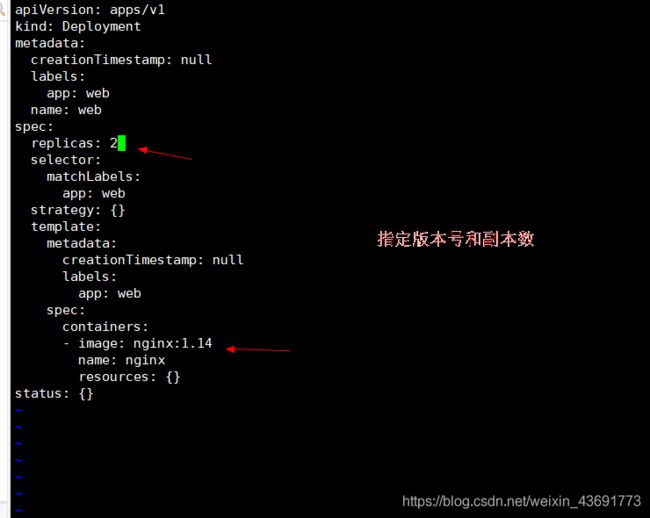

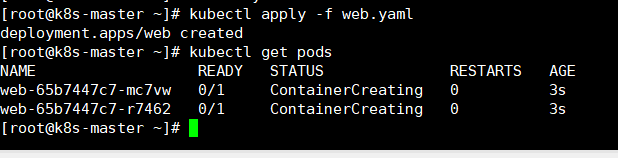

- Deploying an Application with a Deployment (YAML)
- Application Upgrade, Rollback, and Scaling

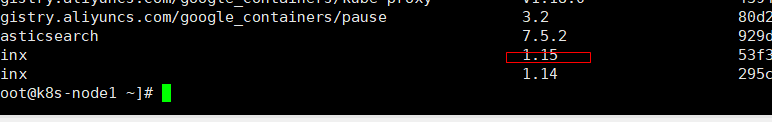

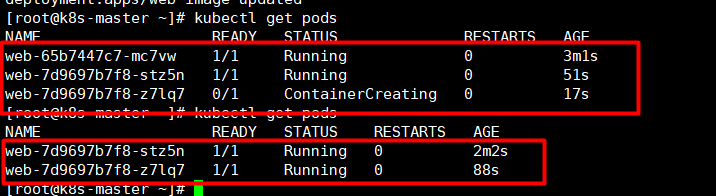

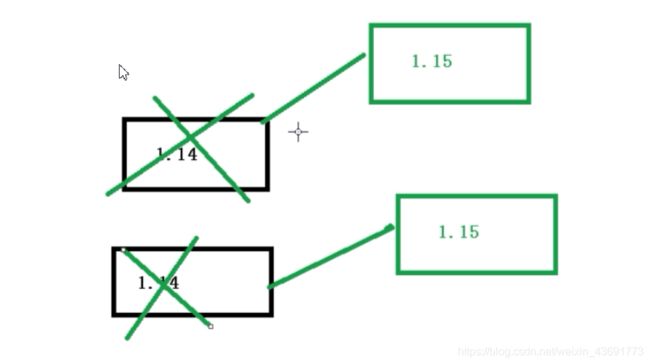

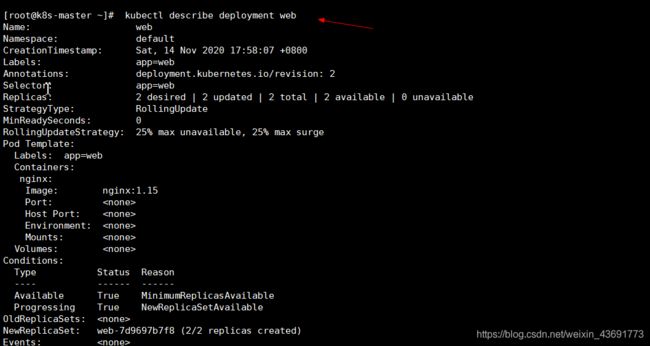

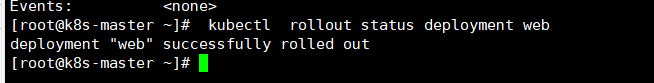

- Upgrade and Rollback
- Scaling
- Service

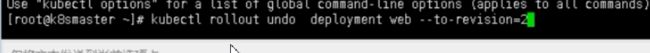

- Overview

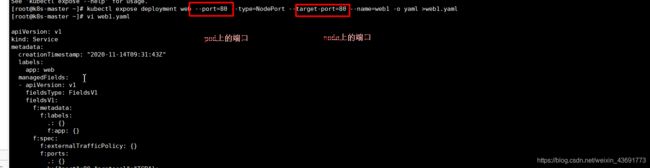

- The Three Service Types
- StatefulSet: Deploying Stateful Applications

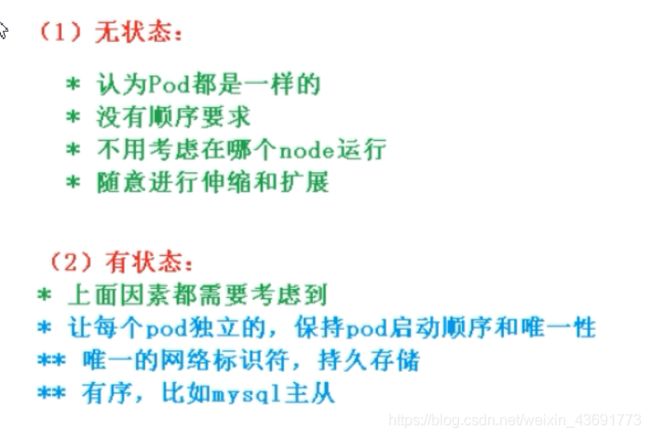

- Stateless vs. Stateful

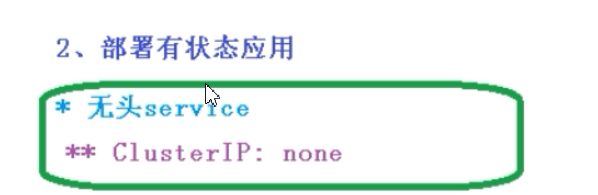

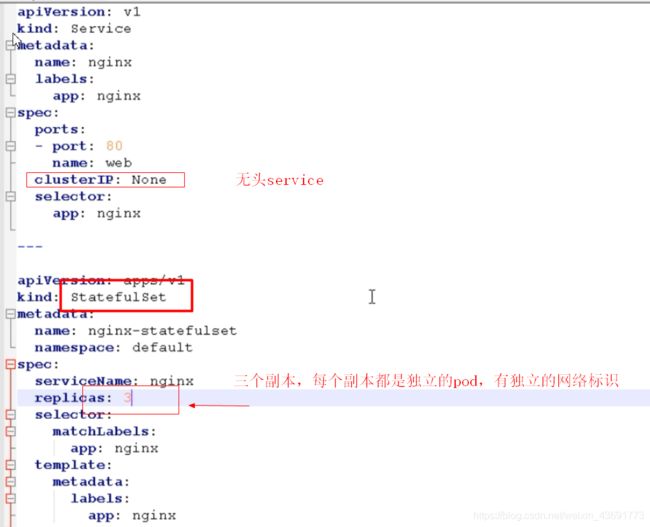

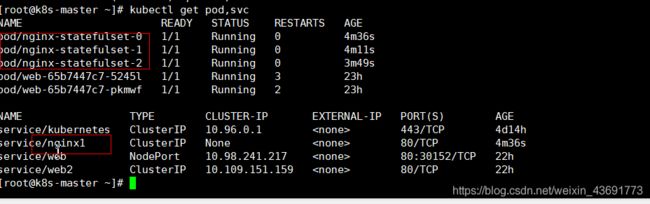

- Deploying
- Differences Between Deployment and StatefulSet
- DaemonSet: Deploying Daemons
- Deploying
- One-Off and Scheduled Tasks (Job and CronJob)

- One-Off Task

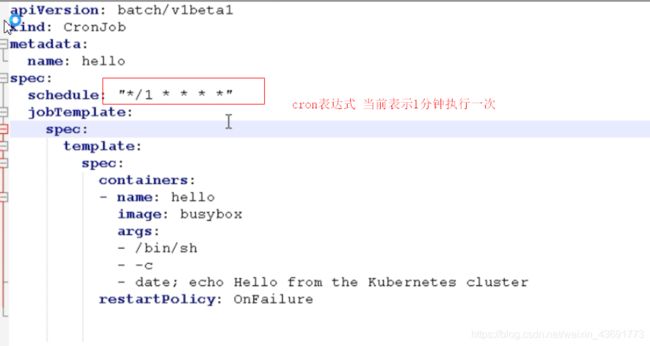

- Scheduled Task
- Configuration Management

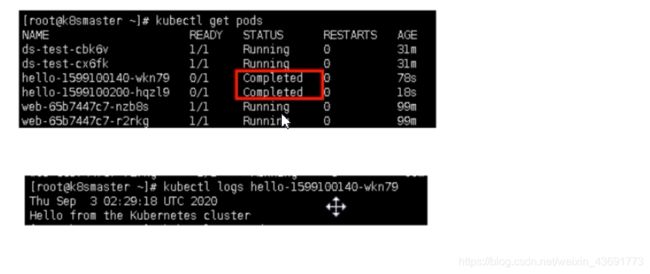

- Secret

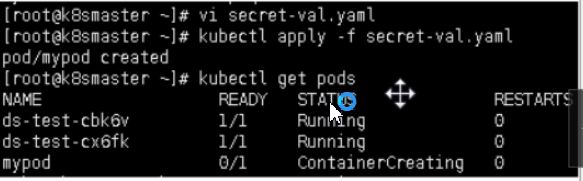

- Creating a Secret (base64-encoded data)
- Mounting into a Pod Container as Environment Variables

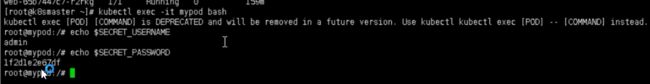

- Mounting into a Pod Container as a Volume
- ConfigMap

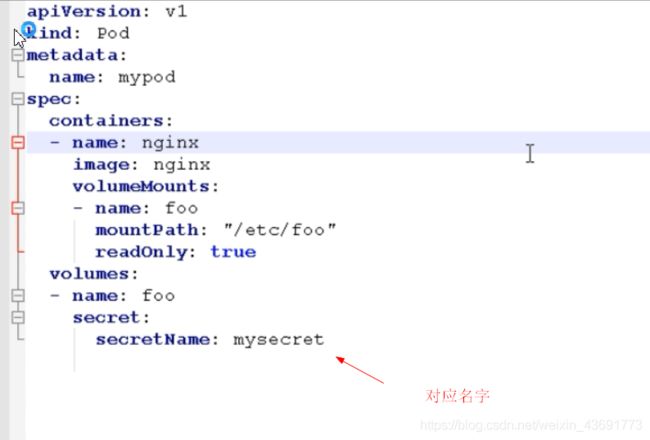

- Kubernetes Cluster Security
- Overview
- RBAC (Role-Based Access Control)
- Introduction
- Implementing Authorization

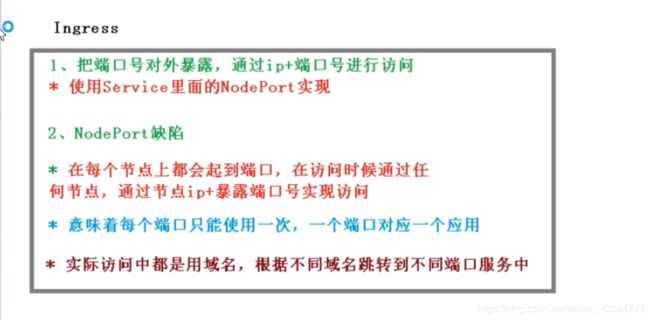

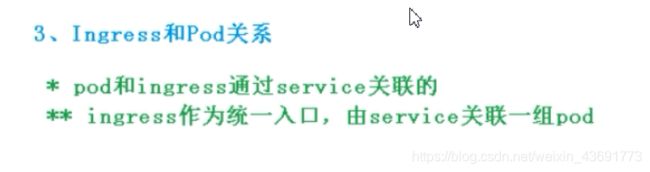

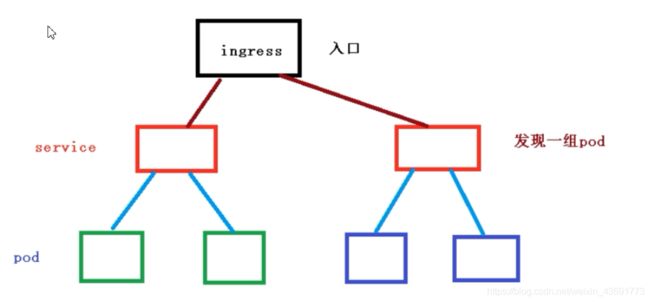

- Ingress
- Overview
- Exposing an Application with Ingress

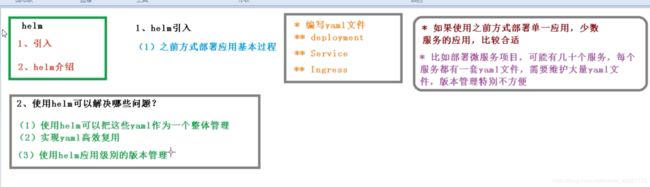

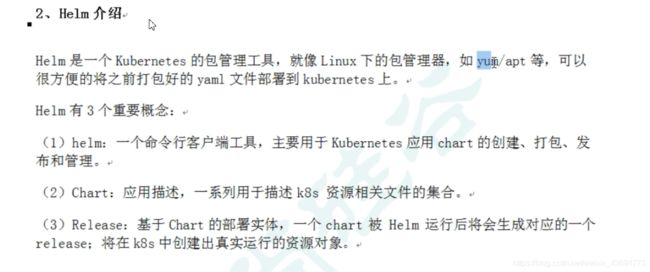

- Helm

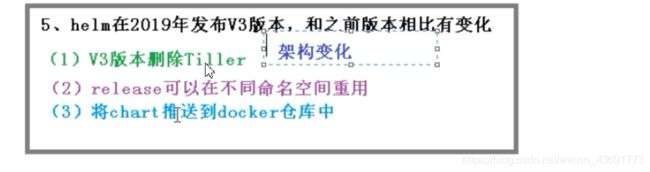

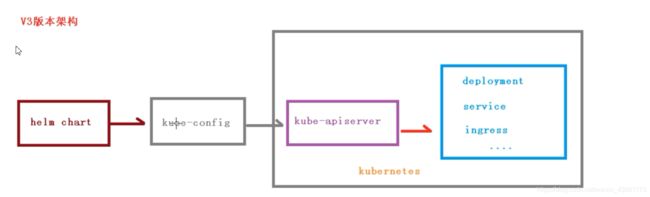

- Introduction

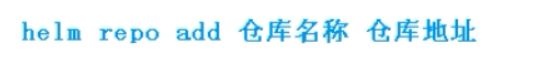

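- Installation and Repository Configuration
- Deploying Applications Quickly with Helm
- Deploying a Custom Chart
- Using Chart Templates
- Persistent Storage
- NFS Network Storage
- PV and PVC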

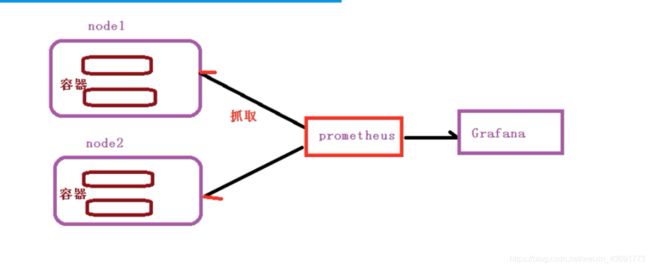

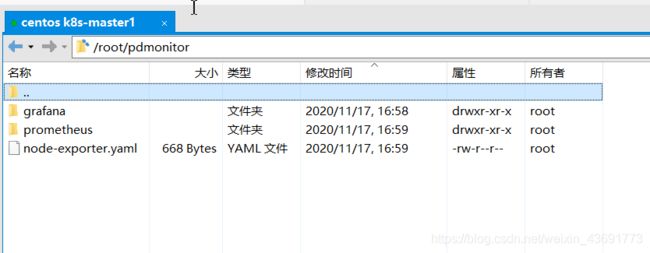

- Kubernetes Cluster Resource Monitoring
- Monitoring Metrics and Approach
- Building a Monitoring Platform

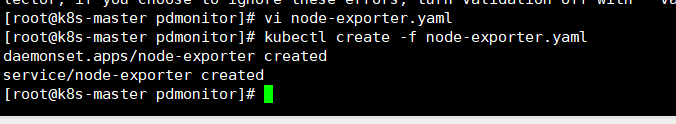

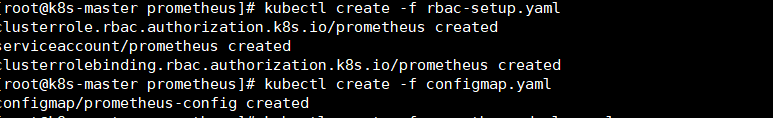

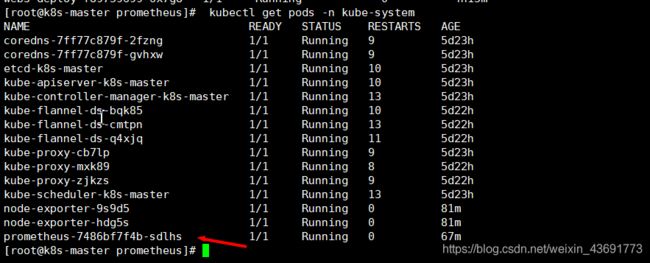

- Deploying Prometheus
- Deploying Grafana
- Opening Grafana, Configuring the Prometheus Data Source, and Importing a Dashboard Template

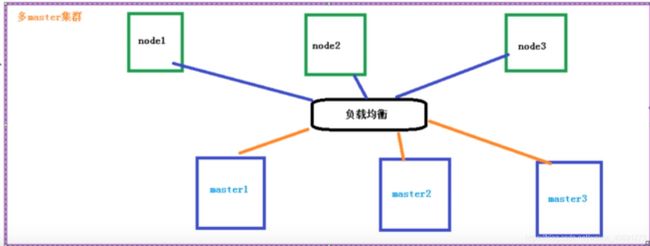

- Building a Highly Available K8s Cluster
- Initializing and Deploying keepalived

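Introduction and Features of Kubernetes

Introduction

Kubernetes, abbreviated K8s, is a numeronym in which "8" stands for the eight letters "ubernete". It is an open-source system for managing containerized applications across multiple hosts in a cloud platform. Its goal is to make deploying containerized applications simple and efficient (powerful), and it provides mechanisms for deploying, scheduling, updating, and maintaining applications.

The traditional way to deploy an application is to install it with plugins or scripts. The drawback is that the application's runtime, configuration, management, and entire lifecycle are tied to the current operating system, which makes upgrades, updates, and rollbacks difficult. Some of this can be achieved by running applications in virtual machines, but VMs are heavyweight and hurt portability.

The newer approach is to deploy containers. Containers are isolated from one another: each has its own file system, processes in different containers do not interfere with each other, and compute resources can be partitioned between them. Compared with virtual machines, containers deploy quickly, and because they are decoupled from the underlying infrastructure and the host file system, they can be migrated across clouds and across operating system versions.

Containers use few resources and start quickly. Each application can be packaged as a container image, and the one-to-one mapping between application and container brings further advantages: images can be built at build or release time, and because an application does not need to be combined with the rest of the application stack or depend on the production infrastructure, the environment stays consistent from development through testing to production. Containers are also lighter and more "transparent" than virtual machines, which makes them easier to monitor and manage.

Kubernetes is a container orchestration engine open-sourced by Google. It supports automated deployment, large-scale scalability, and management of containerized applications. When an application is deployed to production, several instances are usually run so that requests can be load-balanced across them.

In Kubernetes, we can create multiple containers, each running one application instance, and then use the built-in load-balancing mechanism to manage, discover, and access this group of instances, without operators having to do complex manual configuration.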

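Kubernetes Functions and Architecture

Overview

Kubernetes is a portable, extensible, open-source platform for managing containerized applications and services. With Kubernetes, applications can be deployed and scaled automatically. Kubernetes groups the containers that make up an application into logical units for easier management and discovery. It builds on 15 years of Google's experience running production workloads, combined with the best ideas and practices from the community.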

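K8s Functions:

(1) Automatic bin packing

Application containers are deployed automatically based on the resource requirements of their runtime environment.

(2) Self-healing

Containers that fail are restarted.

When a Node has problems, its containers are redeployed and rescheduled.

When a container fails its health check, it is shut down and does not serve traffic until it is running normally again.

(3) Horizontal scaling

Application containers can be scaled out or in with a simple command, through the UI, or automatically based on resource usage such as CPU.

(4) Service discovery

Service discovery and load balancing are provided by Kubernetes itself, with no need for an additional service discovery mechanism.

(5) Rolling updates

As an application changes, the applications running in its containers can be updated all at once or in batches.

(6) Rollback

Based on the deployment history, the application running in a container can be rolled back to an earlier version immediately.

(7) Secret and configuration management

Secrets and application configuration can be deployed and updated without rebuilding images, similar to hot deployment.

(8) Storage orchestration

Storage systems are mounted and used automatically, which is especially important for persisting the data of stateful applications. Storage can come from local directories, network storage (NFS, Gluster, Ceph, etc.), or public cloud storage services.

(9) Batch processing

One-off and scheduled tasks are supported, covering batch data processing and analysis scenarios.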

K8s Cluster Architecture Components

K8s Core Concepts

Setting Up a K8s Cluster

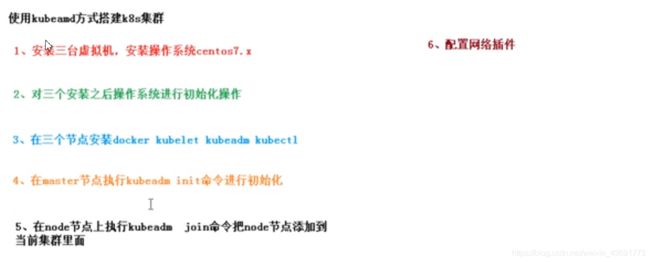

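The kubeadm Method

kubeadm is a tool from the official Kubernetes community for quickly deploying a Kubernetes cluster. A cluster can be deployed with two commands:

First, create a Master node: $ kubeadm init

Second, join each worker Node to the cluster: $ kubeadm join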

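Swap should be turned off permanently. If it has to stay enabled, configure kubelet so that it does not refuse to start when swap is on:

cat > /etc/sysconfig/kubelet << EOF
KUBELET_EXTRA_ARGS="--fail-swap-on=false"
EOF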

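Installing kubeadm (Single-Master Cluster)

Install Docker, kubeadm, and kubelet on all nodes.

For the Kubernetes version used here (1.18), the default CRI (container runtime) is Docker, so install Docker first.

(1) Install Docker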

$ wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

$ yum -y install docker-ce-18.06.1.ce-3.el7

$ systemctl enable docker && systemctl start docker

$ docker --version

(2) Add the Alibaba Cloud YUM repository

Configure a Docker registry mirror:

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]

}

EOF

Add the yum repository:

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

(3) Install kubeadm, kubelet, and kubectl

$ yum install -y kubelet kubeadm kubectl

# If the installed version is too new, downgrade

yum -y remove kubelet

yum -y install kubelet-1.18.1 kubeadm-1.18.1

$ systemctl enable kubelet # start on boot

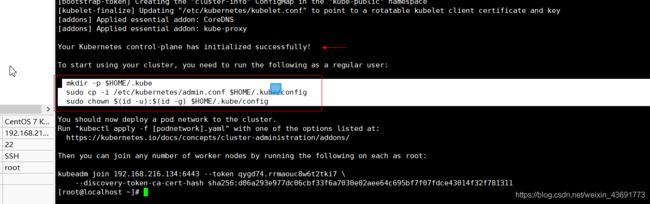

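Deploying the Kubernetes Master

Run the following on 192.168.216.134 (Master).

The default image registry, k8s.gcr.io, is not reachable from mainland China, so the Alibaba Cloud image repository is specified instead.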

kubeadm init \
--apiserver-advertise-address=192.168.216.134 \
--image-repository registry.aliyuncs.com/google_containers \
--kubernetes-version v1.18.0 \
--service-cidr=10.96.0.0/12 \
--pod-network-cidr=10.244.0.0/16

If it fails, reset the node before trying again:

kubeadm reset -f

Hopefully you never need it.

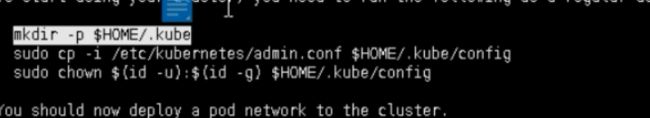

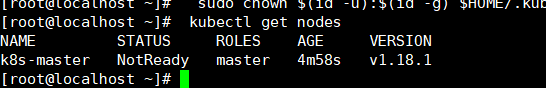

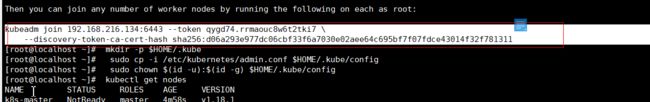

After it succeeds, follow the printed instructions to set up kubectl:

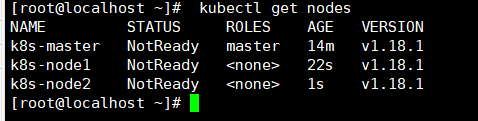

kubectl get nodes

Check whether it succeeded.
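Nodes usually show NotReady until a pod network add-on (flannel, below) is running. As a convenience, here is a sketch that reads `kubectl get nodes --no-headers` output on stdin and succeeds only when every node's STATUS is exactly `Ready`. The function name `all_nodes_ready` is hypothetical. It assumes the default column layout (NAME first, STATUS second), so a status such as `Ready,SchedulingDisabled` counts as not ready.

```shell
#!/bin/sh
# Reads `kubectl get nodes --no-headers` output on stdin.
# Succeeds only if at least one node is listed and every node's STATUS
# column (2nd field) is exactly "Ready"; prints the nodes that are not.
all_nodes_ready() {
  awk 'NF > 0 {
         total++
         if ($2 != "Ready") { print "not ready: " $1; bad++ }
       }
       END { exit (total == 0 || bad > 0) }'
}

# Usage on the master (polls every 5 seconds):
#   until kubectl get nodes --no-headers | all_nodes_ready; do sleep 5; done
```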

Continue following the prompt: on each Node (CentOS 7), join the node to the master.

Run this on the Node, not on the master!

kubeadm join 192.168.216.134:6443 --token qygd74.rrmaouc8w6t2tki7 --discovery-token-ca-cert-hash sha256:d06a293e977dc06cbf33f6a7030e02aee64c695bf7f07fdce43014f32f781311
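A join command copied between machines is easy to truncate. The token in it should have the bootstrap-token format: 6 characters, a dot, then 16 characters, all lowercase letters or digits. The helper `is_bootstrap_token` below is a hypothetical sketch. It checks only that format, not whether the token still exists on the master.

```shell
#!/bin/sh
# Checks that a string has the bootstrap-token format <6 chars>.<16 chars>,
# where every char is a lowercase letter or digit. It does not check that
# the token is still valid on the master.
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eqx '[a-z0-9]{6}\.[a-z0-9]{16}'
}

# Tokens created by `kubeadm init` expire after 24 hours by default. If the
# join is rejected, print a fresh join command on the master with:
#   kubeadm token create --print-join-command
```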

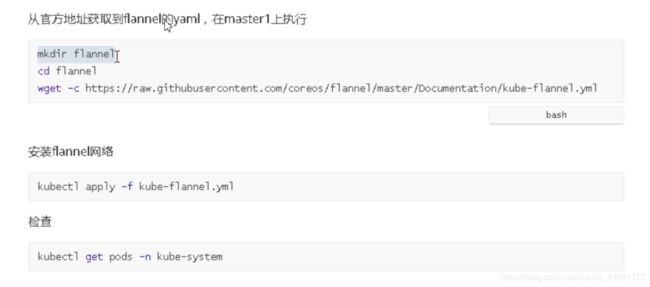

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

If it reports an error, see https://blog.csdn.net/weixin_38074756/article/details/109231865

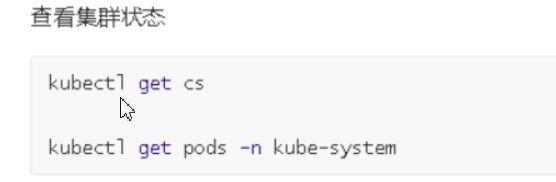

Success.

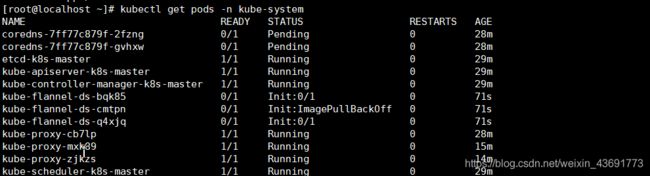

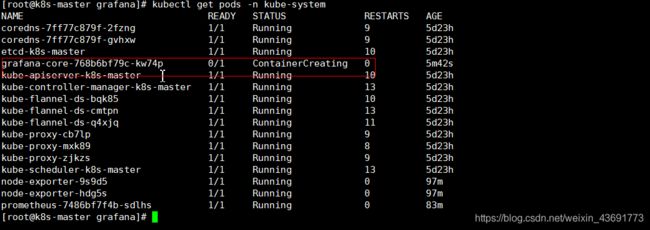

Check the pods:

kubectl get pods -n kube-system

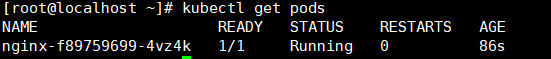

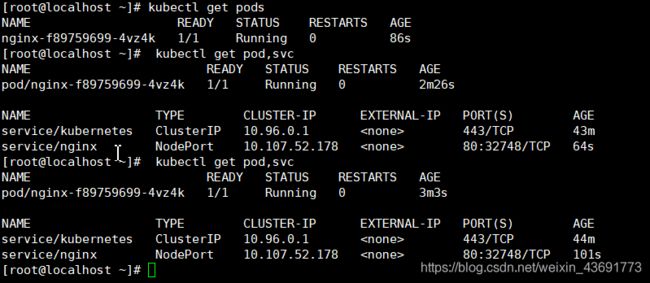

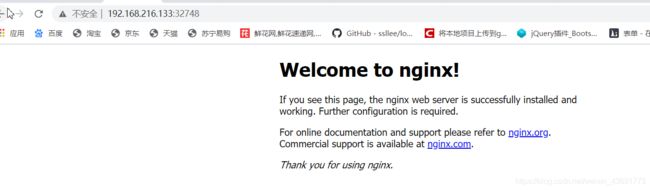

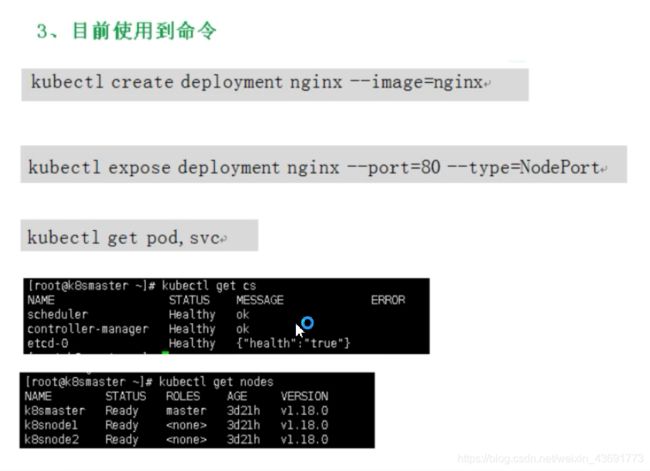

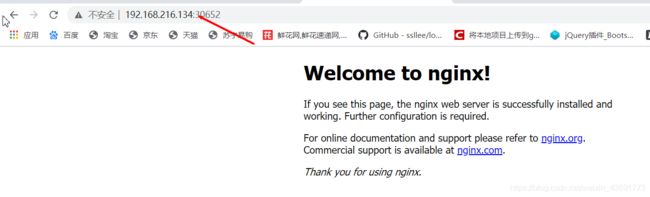

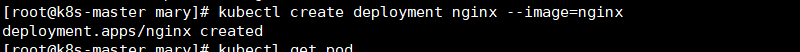

Create a pod to test the Kubernetes cluster

Create a pod in the Kubernetes cluster and verify that it runs normally.

Create an nginx deployment:

kubectl create deployment nginx --image=nginx

Expose port 80:

kubectl expose deployment nginx --port=80 --type=NodePort

Check the externally exposed port:

kubectl get pod,svc
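In the `kubectl get pod,svc` output, the nginx Service's PORT(S) column has the form `80:<nodePort>/TCP`. The application is then reachable at `http://<node-IP>:<nodePort>`. The sketch below extracts the NodePort from that cell. The function name is hypothetical, and 30521 in the comments and tests is only a sample value.

```shell
#!/bin/sh
# Prints the NodePort from a PORT(S) cell such as "80:30521/TCP".
# Returns non-zero for cells without a node port (e.g. ClusterIP "80/TCP").
nodeport_from_ports() (
  cell="${1%%/*}"                          # "80:30521/TCP" -> "80:30521"
  case "$cell" in
    *:*) printf '%s\n' "${cell##*:}" ;;    # keep the part after the last ":"
    *)   exit 1 ;;                         # no ":" means no NodePort
  esac
)

# Reading the field directly avoids parsing the table altogether:
#   kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'
```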

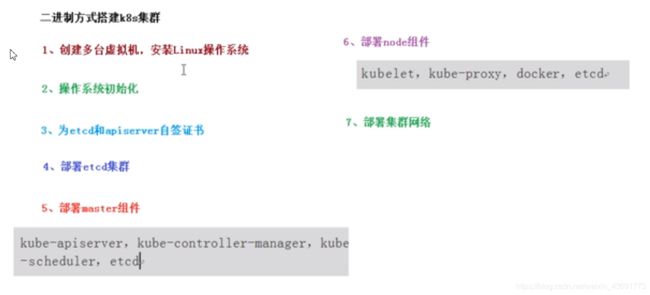

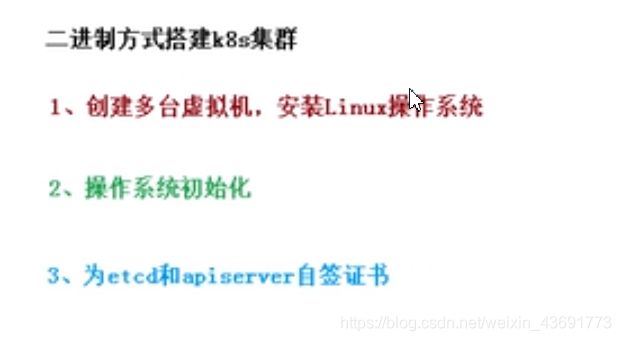

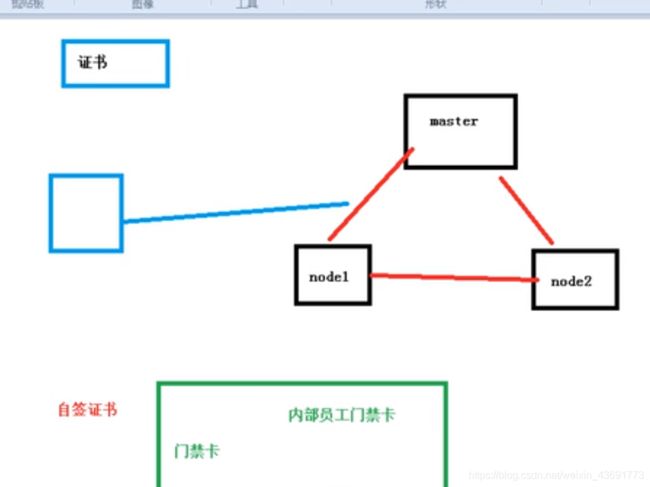

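Binary Installation (Single-Master Cluster)

Both internal and external access require certificates.

In production, certificates would be requested from a certificate authority; since this is for learning, we sign them ourselves.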

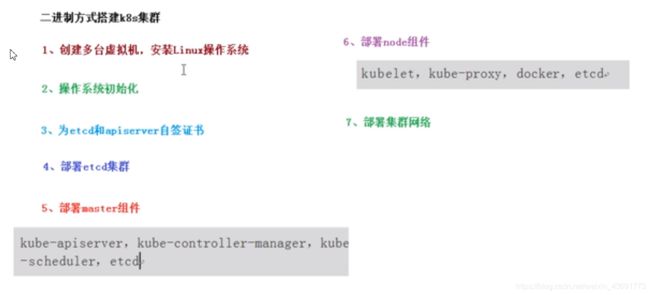

Overall steps

Installation

# Disable the firewall
systemctl stop firewalld
systemctl disable firewalld
# Disable SELinux
sed -i 's/enforcing/disabled/' /etc/selinux/config # permanent
setenforce 0 # temporary
# Disable swap
swapoff -a # temporary
sed -ri 's/.*swap.*/#&/' /etc/fstab # permanent
# Set the hostname according to your plan
hostnamectl set-hostname <hostname>
# Add hosts entries on the master
cat >> /etc/hosts << EOF
192.168.216.130 m1
192.168.216.135 n1
192.168.216.136 n2
EOF
# Pass bridged IPv4 traffic to the iptables chains
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system # apply
# Time synchronization
yum install ntpdate -y
ntpdate time.windows.com
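The fstab step comments out every line containing "swap", including lines that are already commented. Running it twice therefore produces "##...". Below is a hypothetical, slightly stricter variant. It assumes GNU sed (as on CentOS) and only touches uncommented lines that have a whitespace-delimited `swap` field.

```shell
#!/bin/sh
# Comments out active swap entries in an fstab-style file (GNU sed).
# Lines already starting with "#" are skipped, so re-running is harmless.
comment_out_swap() {
  sed -i '/^[[:space:]]*#/!s/^.*[[:space:]]swap[[:space:]].*$/#&/' "$1"
}

# Usage (keep a backup first):
#   cp /etc/fstab /etc/fstab.bak && comment_out_swap /etc/fstab && swapoff -a
```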

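Deploying the Etcd Cluster

Etcd is a distributed key-value store, and Kubernetes uses it for data storage, so an Etcd database is prepared first. To avoid a single point of failure, Etcd should be deployed as a cluster. Here, 3 machines form the cluster, which tolerates the failure of 1 machine; you can also use 5 machines, which tolerates 2 failures.

Note: to save machines, the Etcd members here reuse the K8s nodes. Etcd can also be deployed outside the k8s cluster, as long as the apiserver can reach it.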
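The "3 members tolerate 1 failure, 5 tolerate 2" rule comes from Raft's majority quorum. A write needs floor(N/2)+1 members to agree, so the cluster survives N minus that many failures. Here is a quick sketch of the arithmetic. The function names are illustrative.

```shell
#!/bin/sh
# Raft needs a majority (quorum) of members to agree: floor(N/2) + 1.
etcd_quorum() { echo $(( $1 / 2 + 1 )); }
# Failures a cluster survives while keeping quorum: N - quorum = floor((N-1)/2).
etcd_fault_tolerance() { echo $(( ($1 - 1) / 2 )); }

for n in 1 3 4 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

With 4 members the quorum is 3, so the cluster still tolerates only 1 failure. An even member count adds cost without adding fault tolerance, which is why odd sizes such as 3 or 5 are used.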

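Prepare the cfssl certificate tool

cfssl is an open-source certificate management tool that generates certificates from JSON files; it is more convenient than openssl.

Run this on any one server; the Master node is used here.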

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

Generating the Etcd certificates

(1) Self-signed certificate authority (CA)

Create the working directories:

mkdir -p ~/TLS/{etcd,k8s}

cd ~/TLS/etcd

Self-signed CA:

cat > ca-config.json << EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF

cat > ca-csr.json << EOF
{
  "CN": "etcd CA",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}
EOF

Generate the certificate:

# If you get a permission error, first run: chmod +x /usr/local/bin/cfssljson /usr/local/bin/cfssl

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

ls *pem

(2) Use the self-signed CA to issue the Etcd HTTPS certificate

Create the certificate signing request file:

cat > server-csr.json << EOF
{
  "CN": "etcd",
  "hosts": [
    "192.168.216.130",
    "192.168.216.135",
    "192.168.216.136"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing"
    }
  ]
}
EOF

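Note: the hosts field above must contain the internal cluster-communication IP of every etcd node; none may be missing! To make later scaling easier, you can add a few spare IPs in advance.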
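Because a missing IP in "hosts" breaks TLS for that member, one option is to generate the CSR from the list of member IPs rather than editing JSON by hand. The sketch below keeps the same CN, key, and names as the file above. The helper name `etcd_csr_json` and the spare IP in the usage comment are hypothetical.

```shell
#!/bin/sh
# Prints an etcd server-csr.json whose "hosts" list contains every IP passed
# as an argument, so no member (and no reserved spare IP) is left out.
etcd_csr_json() (
  hosts=""
  for ip in "$@"; do
    hosts="${hosts:+$hosts,}\"$ip\""    # comma-separate and quote each IP
  done
  cat << EOF
{
  "CN": "etcd",
  "hosts": [$hosts],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [ { "C": "CN", "L": "BeiJing", "ST": "BeiJing" } ]
}
EOF
)

# Usage (192.168.216.137 is a hypothetical spare IP reserved for scaling out):
#   etcd_csr_json 192.168.216.130 192.168.216.135 192.168.216.136 192.168.216.137 > server-csr.json
```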

Generate the certificate:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

Download the binaries from GitHub

https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz

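Deploying the Etcd cluster

The following is done on node 1. To keep things simple, all files generated on node 1 are later copied to nodes 2 and 3.

(1) Create the working directories and extract the binary package

mkdir -p /opt/etcd/{bin,cfg,ssl}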

tar zxvf etcd-v3.4.9-linux-amd64.tar.gz

mv etcd-v3.4.9-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/

(2) Create the etcd configuration file

cat > /opt/etcd/cfg/etcd.conf << EOF

#[Member]

ETCD_NAME="etcd-1"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.216.130:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.216.130:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.216.130:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.216.130:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.216.130:2380,etcd-2=https://192.168.216.135:2380,etcd-3=https://192.168.216.136:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

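Explanation:

ETCD_NAME: node name, unique within the cluster
ETCD_DATA_DIR: data directory
ETCD_LISTEN_PEER_URLS: listen address for cluster (peer) communication
ETCD_LISTEN_CLIENT_URLS: listen address for client access
ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster
ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients
ETCD_INITIAL_CLUSTER: addresses of all cluster members
ETCD_INITIAL_CLUSTER_TOKEN: cluster token
ETCD_INITIAL_CLUSTER_STATE: state when joining the cluster; new means a new cluster, existing means joining an existing cluster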

(3) Manage etcd with systemd

cat > /usr/lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/opt/etcd/cfg/etcd.conf
ExecStart=/opt/etcd/bin/etcd \
--cert-file=/opt/etcd/ssl/server.pem \
--key-file=/opt/etcd/ssl/server-key.pem \
--peer-cert-file=/opt/etcd/ssl/server.pem \
--peer-key-file=/opt/etcd/ssl/server-key.pem \
--trusted-ca-file=/opt/etcd/ssl/ca.pem \
--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \
--logger=zap
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

Note: etcd v3.4 reads the ETCD_* variables from the EnvironmentFile by itself and refuses to start if the same setting is also passed as a command-line flag, so only the TLS options are given on the command line. (Inside the unquoted EOF heredoc, references such as ${ETCD_NAME} would also have been expanded to empty strings when the file was written.)

(4) Copy the certificates generated earlier

Copy the certificates to the paths used in the configuration file:

cp ~/TLS/etcd/ca*pem ~/TLS/etcd/server*pem /opt/etcd/ssl/

(5) Start etcd and enable it at boot

systemctl daemon-reload

systemctl start etcd

systemctl enable etcd

(6) Copy all files generated on node 1 to nodes 2 and 3

scp -r /opt/etcd/ root@192.168.216.135:/opt/

scp /usr/lib/systemd/system/etcd.service root@192.168.216.135:/usr/lib/systemd/system/

scp -r /opt/etcd/ root@192.168.216.136:/opt/

scp /usr/lib/systemd/system/etcd.service root@192.168.216.136:/usr/lib/systemd/system/

Then, on nodes 2 and 3, change the node name and the current server IP in etcd.conf:

vi /opt/etcd/cfg/etcd.conf

#[Member]
ETCD_NAME="etcd-1" # change this: etcd-2 on node 2, etcd-3 on node 3
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.216.130:2380" # change to the current server's IP
ETCD_LISTEN_CLIENT_URLS="https://192.168.216.130:2379" # change to the current server's IP
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.216.130:2380" # change to the current server's IP
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.216.130:2379" # change to the current server's IP
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.216.130:2380,etcd-2=https://192.168.216.135:2380,etcd-3=https://192.168.216.136:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"

Finally, start etcd and enable it at boot, as above.
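Editing the copied etcd.conf by hand on every node is error-prone. An alternative sketch is a hypothetical helper `gen_etcd_conf` that prints the file for one member from its name and IP. ETCD_INITIAL_CLUSTER is passed in unchanged, so it stays identical on all members.

```shell
#!/bin/sh
# Prints /opt/etcd/cfg/etcd.conf for one member.
# $1 = member name, $2 = this member's IP,
# $3 = the full ETCD_INITIAL_CLUSTER value (the same on every member).
gen_etcd_conf() {
  cat << EOF
#[Member]
ETCD_NAME="$1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://$2:2380"
ETCD_LISTEN_CLIENT_URLS="https://$2:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://$2:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://$2:2379"
ETCD_INITIAL_CLUSTER="$3"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Usage on node 2 (IPs from this guide's three-node plan):
#   CLUSTER="etcd-1=https://192.168.216.130:2380,etcd-2=https://192.168.216.135:2380,etcd-3=https://192.168.216.136:2380"
#   gen_etcd_conf etcd-2 192.168.216.135 "$CLUSTER" > /opt/etcd/cfg/etcd.conf
```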

(7) Check the cluster status

ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.216.130:2379,https://192.168.216.135:2379,https://192.168.216.136:2379" endpoint health

If every endpoint is reported as healthy, the cluster was deployed successfully. If there is a problem, check the logs first:

/var/log/messages or journalctl -u etcd
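A hand-typed `--endpoints` list is easy to get wrong, for example with a dropped dot in an IP. As a small sketch, the value can be built from the member IPs instead. The helper name `etcd_endpoints` is hypothetical.

```shell
#!/bin/sh
# Joins member IPs into the comma-separated value for etcdctl --endpoints.
etcd_endpoints() (
  out=""
  for ip in "$@"; do
    out="${out:+$out,}https://$ip:2379"
  done
  printf '%s\n' "$out"
)

# Usage:
#   ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem \
#     --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem \
#     --endpoints="$(etcd_endpoints 192.168.216.130 192.168.216.135 192.168.216.136)" \
#     endpoint health
```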

Installing Docker

Download: https://download.docker.com/linux/static/stable/x86_64/docker-19.03.9.tgz

Run the following on all nodes. A binary installation is used here; installing with yum works just as well.

(1) Extract the binary package

tar zxvf docker-19.03.9.tgz

mv docker/* /usr/bin

(2) Manage docker with systemd

cat > /usr/lib/systemd/system/docker.service << EOF

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

EOF

(3) Create the configuration file

mkdir /etc/docker

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]

}

EOF

registry-mirrors: Alibaba Cloud registry mirror (image accelerator)

(4) Start Docker and enable it at boot

systemctl daemon-reload

systemctl start docker

systemctl enable docker

Deploying the Master Node

Generating the kube-apiserver certificates

(1) Self-signed certificate authority (CA)

cd ~/TLS/k8s

cat > ca-config.json << EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF

cat > ca-csr.json << EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

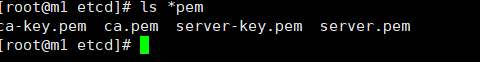

(2) Generate the certificate:

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

ls *pem

ca-key.pem ca.pem

(3) Use the self-signed CA to issue the kube-apiserver HTTPS certificate

Create the certificate signing request file:

cd ~/TLS/k8s

cat > server-csr.json << EOF
{
  "CN": "kubernetes",
  "hosts": [
    "10.0.0.1",
    "127.0.0.1",
    "192.168.31.71",
    "192.168.31.72",
    "192.168.31.73",
    "192.168.31.74",
    "192.168.31.81",
    "192.168.31.82",
    "192.168.31.88",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

Generate the certificate:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

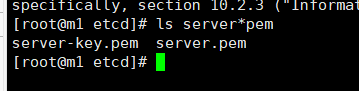

ls server*pem

server-key.pem server.pem

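Download the binaries from GitHub

Download address:

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.18.md#v1183

Note: the page lists many packages. Downloading the server package is enough, because it contains the binaries for both the Master and the Worker Nodes.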

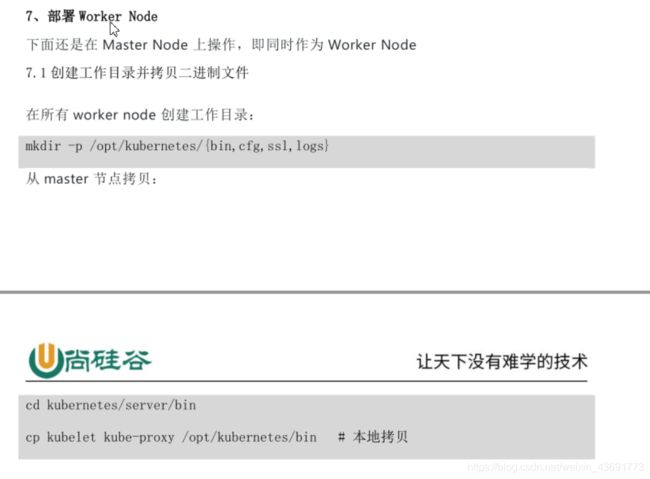

Extract the binary package

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

tar zxvf kubernetes-server-linux-amd64.tar.gz

cd kubernetes/server/bin

cp kube-apiserver kube-scheduler kube-controller-manager /opt/kubernetes/bin

cp kubectl /usr/bin/

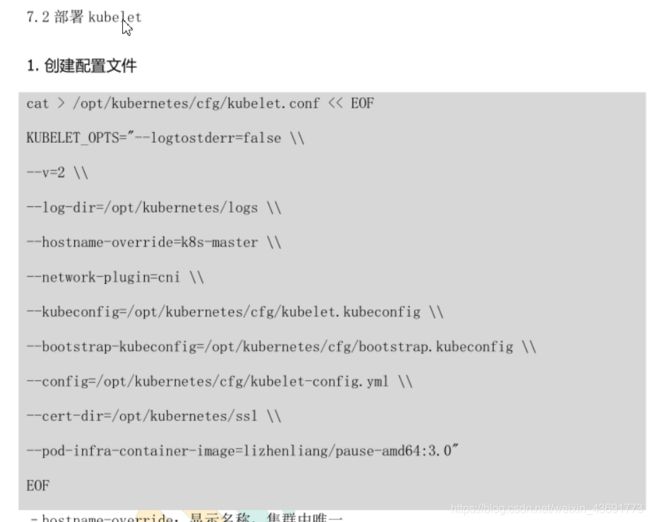

Deploying kube-apiserver

- Create the configuration file

cat > /opt/kubernetes/cfg/kube-apiserver.conf << EOF

KUBE_APISERVER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs \\

--etcd-servers=https://192.168.31.71:2379,https://192.168.31.72:2379,https://192.168.31.73:2379 \\

--bind-address=192.168.31.71 \\

--secure-port=6443 \\

--advertise-address=192.168.31.71 \\

--allow-privileged=true \\

--service-cluster-ip-range=10.0.0.0/24 \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\

--authorization-mode=RBAC,Node \\

--enable-bootstrap-token-auth=true \\

--token-auth-file=/opt/kubernetes/cfg/token.csv \\

--service-node-port-range=30000-32767 \\

--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \\

--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \\

--tls-cert-file=/opt/kubernetes/ssl/server.pem \\

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\

--client-ca-file=/opt/kubernetes/ssl/ca.pem \\

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--etcd-cafile=/opt/etcd/ssl/ca.pem \\

--etcd-certfile=/opt/etcd/ssl/server.pem \\

--etcd-keyfile=/opt/etcd/ssl/server-key.pem \\

--audit-log-maxage=30 \\

--audit-log-maxbackup=3 \\

--audit-log-maxsize=100 \\

--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"

EOF

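Note: in each \\ above, the first backslash escapes the second, so a literal \ (line continuation) is written to the file. The escape is needed to keep the line breaks when the file is generated through the EOF heredoc.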
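The escaping rule can be checked directly. In an unquoted heredoc, `\\` becomes a literal backslash and the newline after it is kept. A single `\` before a newline removes the newline and joins the two lines. The two functions below (names are illustrative) generate the same option string both ways.

```shell
#!/bin/sh
# "\\" at the end of a heredoc line: a literal "\" is written and the
# line break is kept, so the file keeps one flag per line.
with_double_backslash() {
cat << EOF
OPTS="--v=2 \\
--log-dir=/opt/kubernetes/logs"
EOF
}

# "\" at the end of a heredoc line: the shell removes backslash+newline,
# so both flags end up on a single line.
with_single_backslash() {
cat << EOF
OPTS="--v=2 \
--log-dir=/opt/kubernetes/logs"
EOF
}

with_double_backslash   # two lines; the first ends with a literal backslash
with_single_backslash   # one line: OPTS="--v=2 --log-dir=/opt/kubernetes/logs"
```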

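--logtostderr: whether to log to stderr (false here, so logs are written to --log-dir)

--v: log verbosity level

--log-dir: log directory

--etcd-servers: etcd cluster addresses

--bind-address: listen address

--secure-port: HTTPS secure port

--advertise-address: address advertised to the cluster

--allow-privileged: allow privileged containers

--service-cluster-ip-range: virtual IP range for Services

--enable-admission-plugins: admission control plugins

--authorization-mode: authorization modes; enables RBAC authorization and Node authorization (nodes manage themselves)

--enable-bootstrap-token-auth: enable the TLS bootstrap mechanism

--token-auth-file: bootstrap token file

--service-node-port-range: default port range allocated to NodePort Services

--kubelet-client-xxx: client certificate the apiserver uses to access kubelets

--tls-xxx-file: apiserver HTTPS certificate

--etcd-xxxfile: certificates for connecting to the Etcd cluster

--audit-log-xxx: audit logging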

- Copy the certificates generated earlier

Copy the certificates to the paths used in the configuration file:

cp ~/TLS/k8s/ca*pem ~/TLS/k8s/server*pem /opt/kubernetes/ssl/

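- Enable the TLS Bootstrapping mechanism

TLS Bootstrapping: once the Master apiserver has TLS authentication enabled, the kubelet and kube-proxy on each Node must present a valid certificate signed by the CA to communicate with kube-apiserver. With many Nodes, issuing these client certificates takes a lot of work and makes scaling the cluster more complex. To simplify this, Kubernetes introduced TLS bootstrapping to issue client certificates automatically: the kubelet requests a certificate from the apiserver as a low-privilege user, and the apiserver signs the kubelet's certificate dynamically. This approach is strongly recommended on Nodes. It is currently used mainly for the kubelet; for kube-proxy we still issue one certificate centrally.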

Create the token file referenced in the configuration above:

cat > /opt/kubernetes/cfg/token.csv << EOF
c47ffb939f5ca36231d9e3121a252940,kubelet-bootstrap,10001,"system:node-bootstrapper"
EOF

Format: token,username,UID,user group

You can also generate your own token and substitute it:

head -c 16 /dev/urandom | od -An -t x | tr -d ' '
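The pipeline above turns 16 random bytes into 32 hex characters. Below is a hedged sketch that wraps it to print a complete token.csv line. The helper name is hypothetical. The user, UID, and group are copied from the example line above.

```shell
#!/bin/sh
# Generates a 32-hex-character token from 16 random bytes and prints it as
# a token.csv line in the format token,user,UID,"group".
gen_token_csv_line() {
  token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')
  printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$token"
}

# Usage:
#   gen_token_csv_line > /opt/kubernetes/cfg/token.csv
```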

- Manage the apiserver with systemd

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf

ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

- Start it and enable it at boot

systemctl daemon-reload

systemctl start kube-apiserver

systemctl enable kube-apiserver

- Authorize the kubelet-bootstrap user to request certificates

kubectl create clusterrolebinding kubelet-bootstrap \

--clusterrole=system:node-bootstrapper \

--user=kubelet-bootstrap

Deploying kube-controller-manager

- Create the configuration file

cat > /opt/kubernetes/cfg/kube-controller-manager.conf << EOF

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs \\

--leader-elect=true \\

--master=127.0.0.1:8080 \\

--bind-address=127.0.0.1 \\

--allocate-node-cidrs=true \\

--cluster-cidr=10.244.0.0/16 \\

--service-cluster-ip-range=10.0.0.0/24 \\

--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\

--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--root-ca-file=/opt/kubernetes/ssl/ca.pem \\

--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--experimental-cluster-signing-duration=87600h0m0s"

EOF

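--master: connect to the apiserver through the local insecure port 8080.

--leader-elect: enable automatic leader election when multiple instances of this component run (HA).

--cluster-signing-cert-file/--cluster-signing-key-file: the CA used to issue kubelet certificates automatically; it must be the same CA as the apiserver's.

- Manage controller-manager with systemd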

cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf

ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

- Start it and enable it at boot

systemctl daemon-reload

systemctl start kube-controller-manager

systemctl enable kube-controller-manager

Deploying kube-scheduler

- Create the configuration file

cat > /opt/kubernetes/cfg/kube-scheduler.conf << EOF

KUBE_SCHEDULER_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/opt/kubernetes/logs \

--leader-elect \

--master=127.0.0.1:8080 \

--bind-address=127.0.0.1"

EOF

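--master: connect to the apiserver through the local insecure port 8080.

--leader-elect: enable automatic leader election when multiple instances of this component run (HA).

- Manage the scheduler with systemd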

cat > /usr/lib/systemd/system/kube-scheduler.service << EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf

ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

- Start it and enable it at boot

systemctl daemon-reload

systemctl start kube-scheduler

systemctl enable kube-scheduler

- Check the cluster status

All components have started. Use kubectl to check the status of the cluster components:

kubectl get cs

NAME                 STATUS    MESSAGE             ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-2               Healthy   {"health":"true"}
etcd-1               Healthy   {"health":"true"}
etcd-0               Healthy   {"health":"true"}

Summary of the Two Installation Methods

Kubernetes Core Technologies

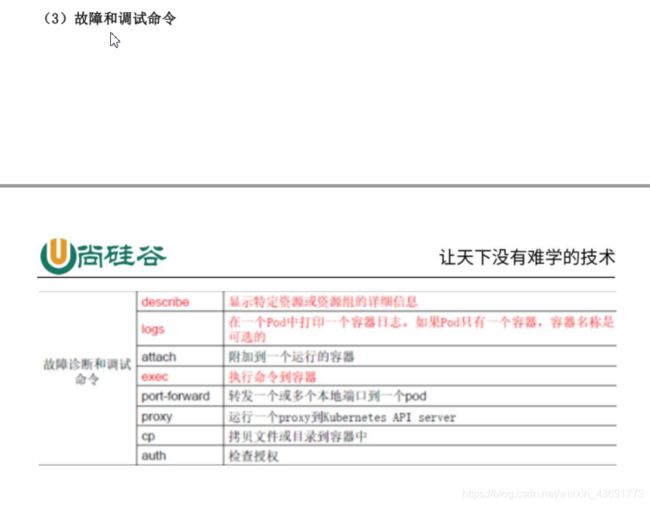

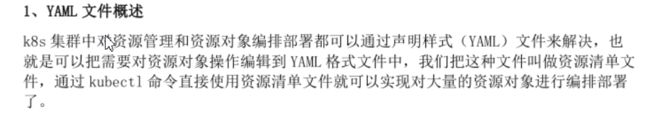

Command-Line Tool (kubectl)

Overview

kubectl is the command-line tool for Kubernetes clusters. With kubectl you can manage the cluster itself and install and deploy containerized applications on it.

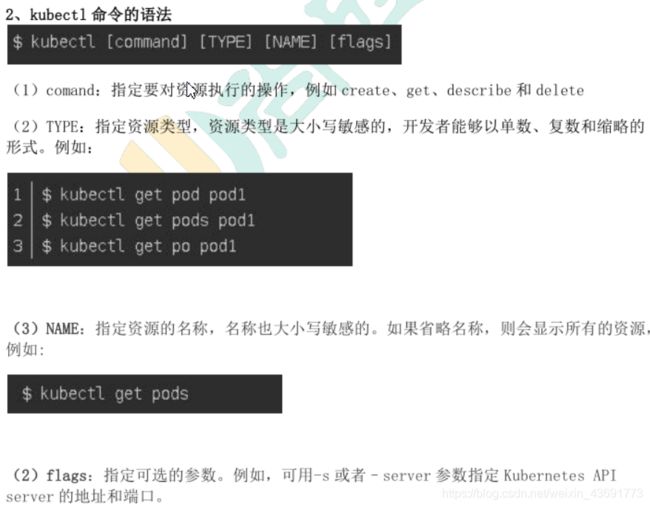

Command Syntax

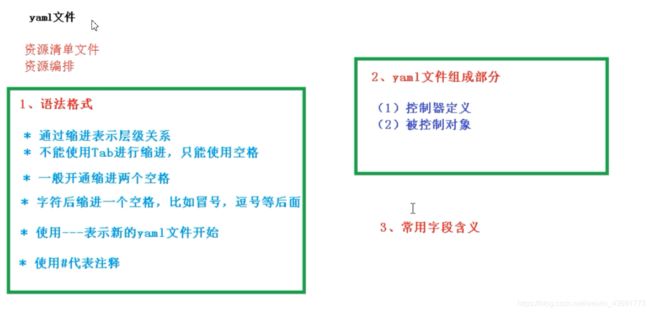

Resource Orchestration with YAML

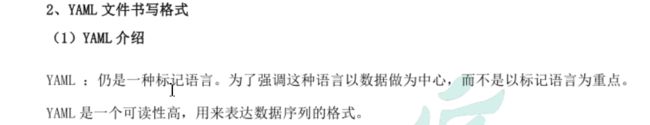

Introduction

How to Write YAML

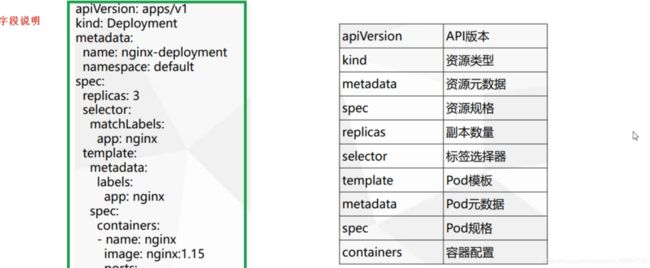

Generating YAML with kubectl create

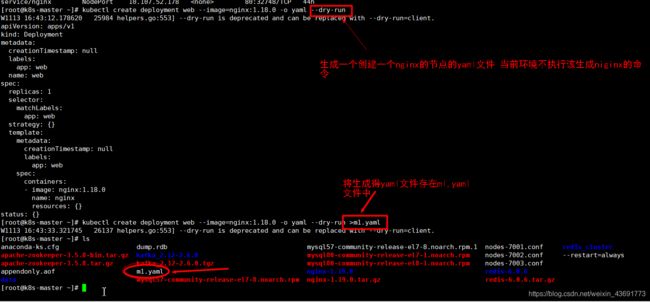

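Exporting YAML with kubectl get

Pod

Pod Overview and Why Pods Exist

Two Implementation Mechanisms of a Pod

Shared Network

Shared Storage

Summary of the Mechanisms

Pod Image Pull Policy, Restart Policy, and Resource Limits

Image Pull Policy

Pod Resource Limits

Pod Restart Policy

Pod Health Checks

Pod Scheduling

Pod Creation Flow

Factors Affecting Pod Scheduling (Resource Limits and Node Selectors)

Factors Affecting Pod Scheduling (Node Affinity)

Factors Affecting Pod Scheduling (Taints and Tolerations)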

Controller (Deployment)

Overview and Use Cases

Deploying an Application

Deploying an Application with a Deployment (YAML)

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: web
  name: web
spec:
  replicas: 1 # number of replicas
  selector:
    matchLabels:
      app: web # label selector of the controller
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: web # pod label; must match the controller's selector above
    spec:
      containers:
      - image: nginx
        name: nginx
        resources: {}
status: {}

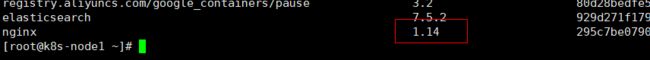

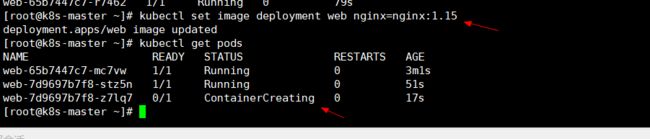

Application Upgrade, Rollback, and Scaling

Upgrade and Rollback

Scaling

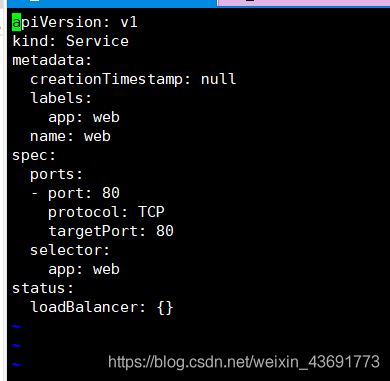

Service

Overview

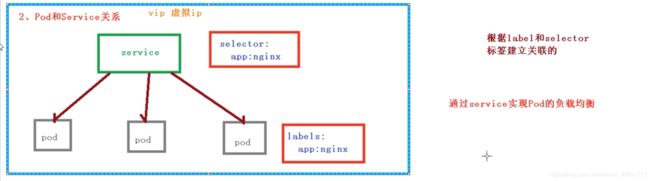

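When pods are upgraded or rolled back, their IP addresses change. A Service keeps clients from losing track of the pods (service discovery).

It also defines an access policy for a group of pods (load balancing).

Relationship between a Service and its pods

A Service exposes a virtual IP (VIP), through which it provides service discovery and load balancing.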

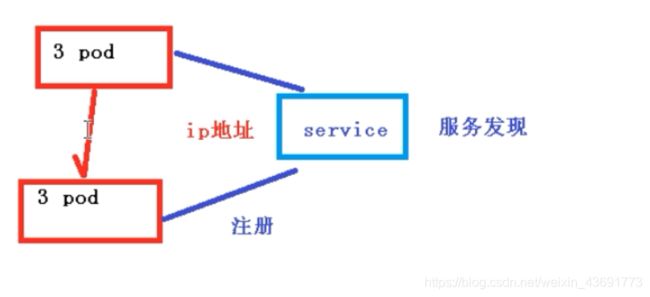

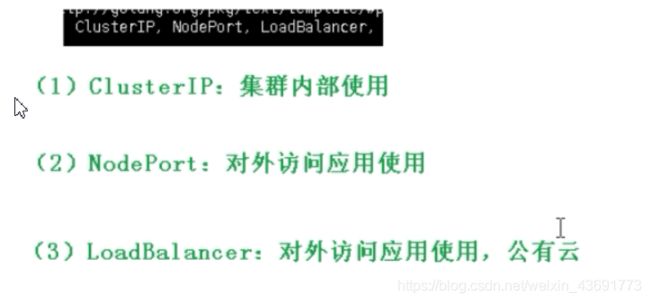

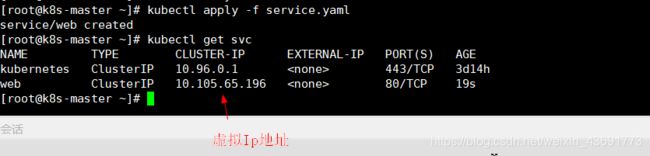

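The Three Service Types

Without special settings, a Service uses the ClusterIP type by default.

It is accessible only from inside the cluster.

Change the type

Deploy the Service so that the application can be accessed from outside the cluster.

The third type

StatefulSet: Deploying Stateful Applications

The applications deployed with Deployments so far are all stateless.

Stateless vs. Stateful

Deploying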

apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx
  name: nginx1
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: nginx

---

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: nginx-statefulset
  namespace: default
spec:
  serviceName: nginx1
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80
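Because `nginx1` is a headless Service (`clusterIP: None`), each StatefulSet pod gets a stable DNS name of the form `<pod-name>.<service>.<namespace>.svc.<cluster-domain>`. StatefulSet pods are named `<statefulset-name>-<ordinal>`. The sketch below lists the expected names. It assumes the default cluster domain `cluster.local`, and the function name is hypothetical.

```shell
#!/bin/sh
# Prints the stable DNS names of StatefulSet pods behind a headless Service:
#   <statefulset>-<ordinal>.<service>.<namespace>.svc.<cluster-domain>
statefulset_pod_dns() (
  sts=$1 svc=$2 ns=$3 replicas=$4 domain=${5:-cluster.local}
  i=0
  while [ "$i" -lt "$replicas" ]; do
    printf '%s-%d.%s.%s.svc.%s\n' "$sts" "$i" "$svc" "$ns" "$domain"
    i=$((i + 1))
  done
)

statefulset_pod_dns nginx-statefulset nginx1 default 3
```

Unlike Deployment pods, these names survive pod restarts, which is one of the properties that makes StatefulSets suitable for stateful applications.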

Differences Between Deployment and StatefulSet

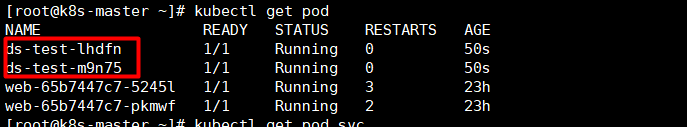

DaemonSet: Deploying Daemons

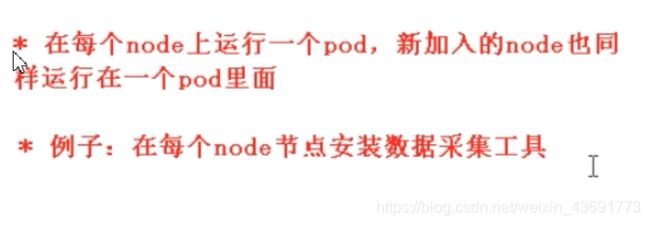

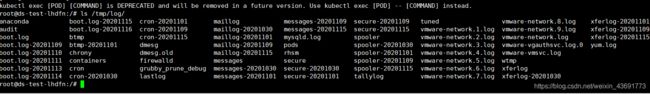

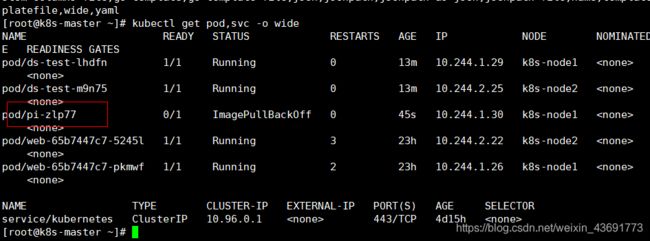

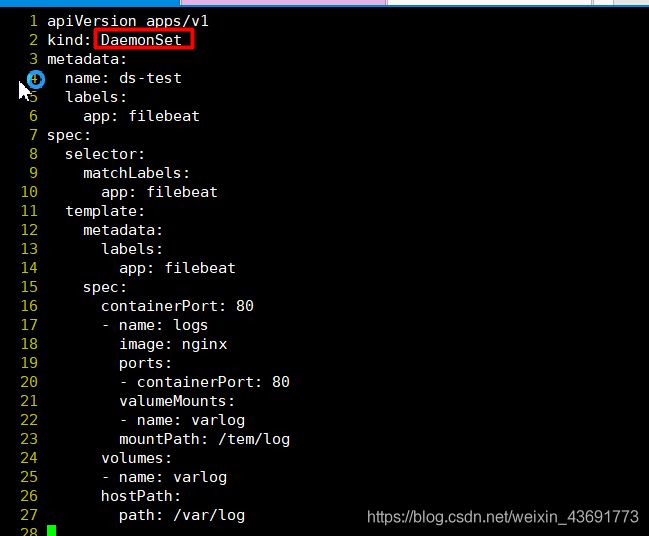

Deploying

Deploy a log-collection daemon: install a data-collection agent on every node.

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: ds-test
  labels:
    app: filebeat
spec:
  selector:
    matchLabels:
      app: filebeat
  template:
    metadata:
      labels:
        app: filebeat
    spec:
      containers:
      - name: logs
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - name: varlog
          mountPath: /tmp/log
      volumes:
      - name: varlog
        hostPath:
          path: /var/log

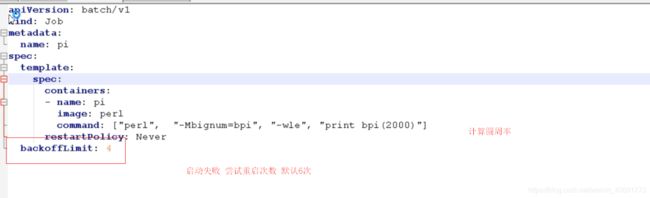

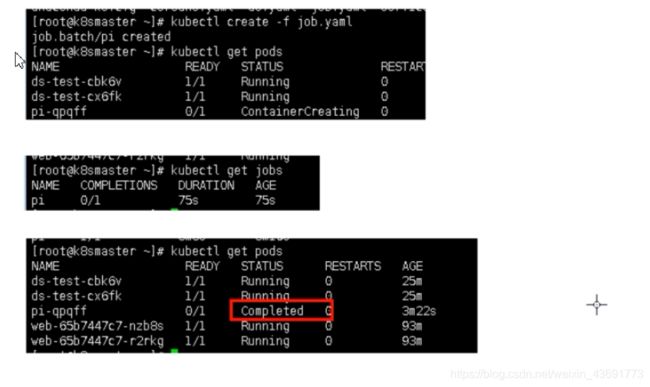

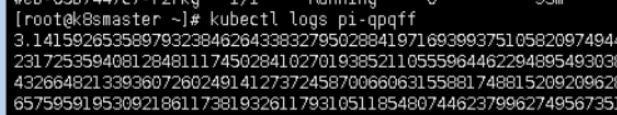

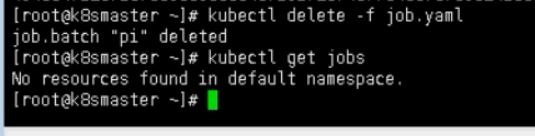

One-Off and Scheduled Tasks (Job and CronJob)

One-Off Task

apiVersion: batch/v1
kind: Job
metadata:
  name: pi
spec:
  template:
    spec:
      containers:
      - name: pi
        image: perl
        command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(2000)"]
      restartPolicy: Never
  backoffLimit: 4

Scheduled Task

Configuration Management

Secret

Creating a Secret (base64-encoded data)

apiVersion: v1
kind: Secret
metadata:
  name: mysecret
type: Opaque
data:
  username: YWRtaW4=
  password: MWYyZDFlMmU2N2Rm
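The values under `data` are base64-encoded, not encrypted, so anyone who can read the Secret can decode them. The sketch below encodes and decodes such values. It assumes GNU coreutils `base64` (as on CentOS), and the helper names are illustrative.

```shell
#!/bin/sh
# Secret "data" values are plain base64: encoding, not encryption.
encode_secret_value() { printf '%s' "$1" | base64; }     # printf adds no trailing newline
decode_secret_value() { printf '%s' "$1" | base64 -d; }  # GNU coreutils flag

encode_secret_value admin              # prints YWRtaW4=
decode_secret_value YWRtaW4=; echo     # prints admin
```

Using `printf '%s'` matters: `echo admin | base64` also encodes the trailing newline and yields `YWRtaW4K`, which would put a stray newline into the Secret value.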

Mounting into a Pod Container as Environment Variables

Mounting into a Pod Container as a Volume

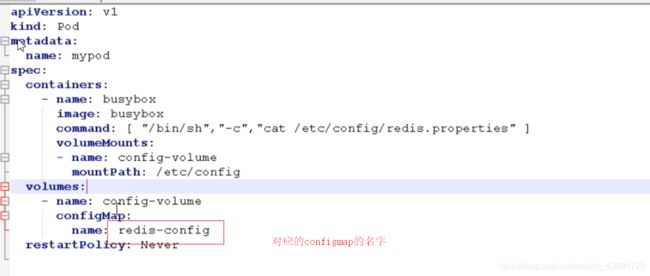

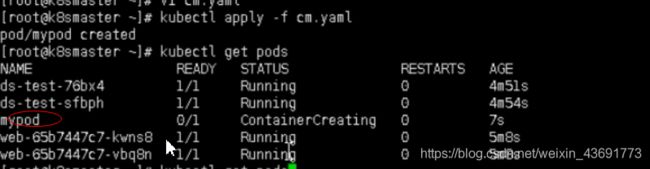

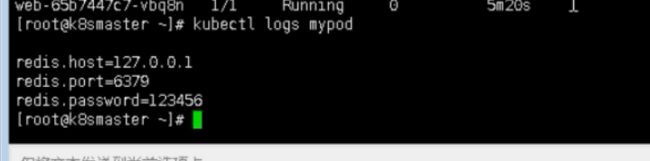

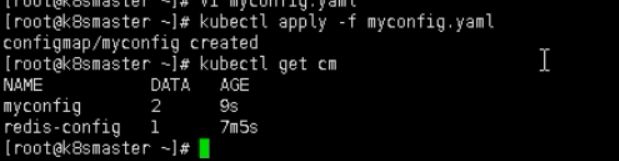

ConfigMap

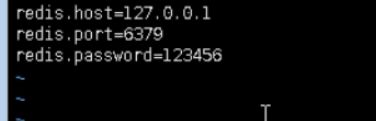

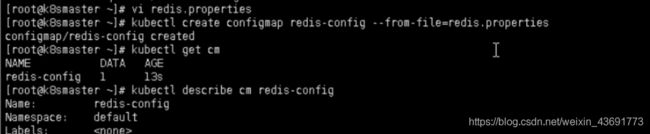

Create the configuration file redis.properties

Create a ConfigMap

Mount it into a pod container as a volume

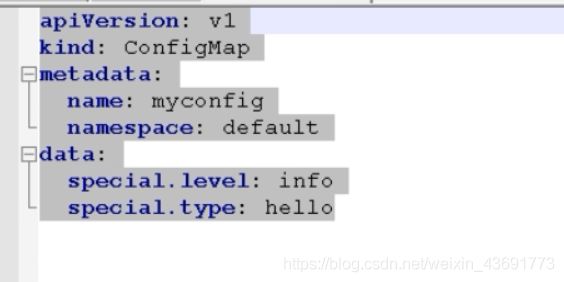

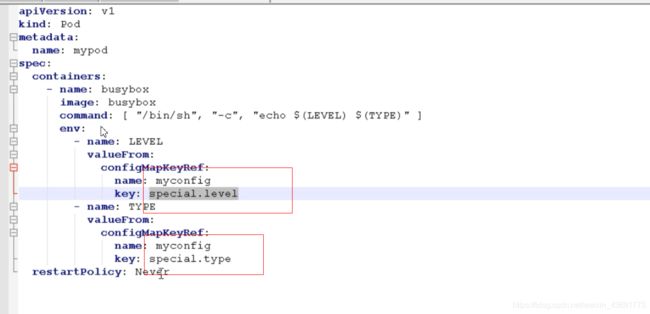

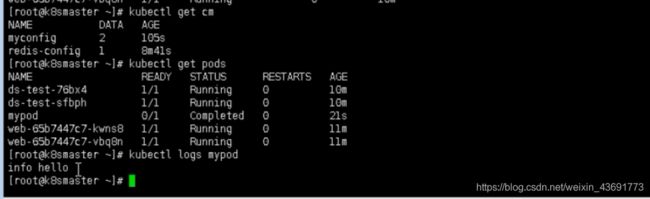

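Mount it into a pod container as environment variables

Create a YAML file that declares the variables, then create the ConfigMap

Kubernetes Cluster Security

Overview

RBAC (Role-Based Access Control)

Introduction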

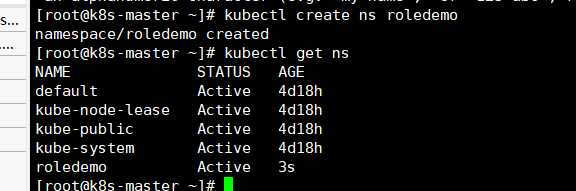

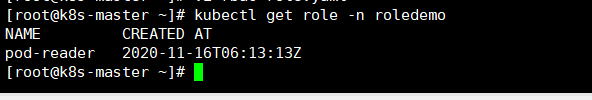

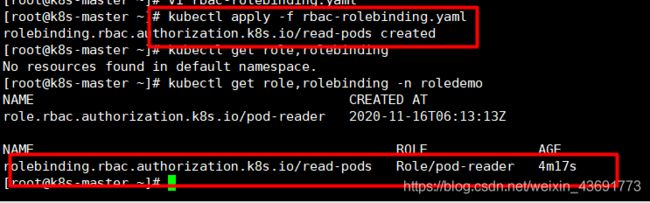

Implementing Authorization

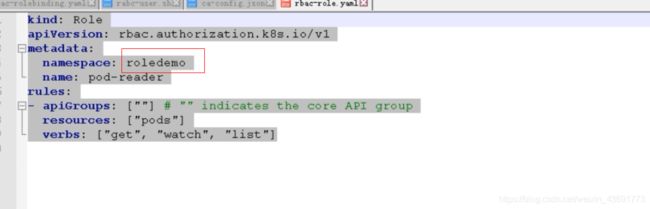

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: roledemo
  name: pod-reader
rules:
- apiGroups: [""] # "" indicates the core API group
  resources: ["pods"]
  verbs: ["get", "watch", "list"]

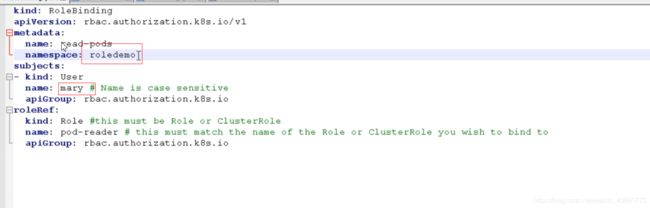

kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: read-pods
  namespace: roledemo
subjects:
- kind: User
  name: mary
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io

Create them and check the result

Identify the user with a certificate

Create a new directory and create rbac-user.sh in it:

cat > mary-csr.json << EOF ...

Ingress

Overview

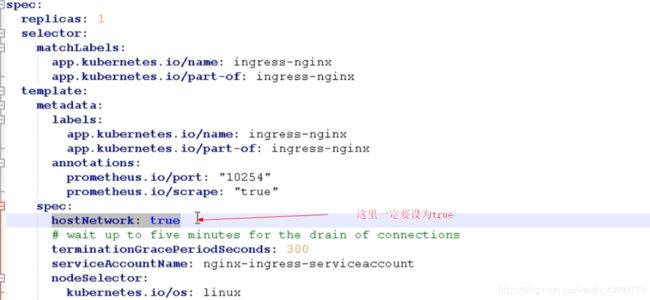

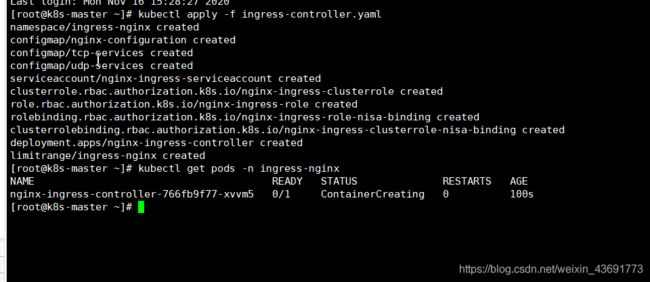

Exposing an Application with Ingress

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      hostNetwork: true
      # wait up to five minutes for the drain of connections
      terminationGracePeriodSeconds: 300
      serviceAccountName: nginx-ingress-serviceaccount
      nodeSelector:
        kubernetes.io/os: linux
      containers:
        - name: nginx-ingress-controller
          image: lizhenliang/nginx-ingress-controller:0.30.0
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            allowPrivilegeEscalation: true
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 101
            runAsUser: 101
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
---
apiVersion: v1
kind: LimitRange
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  limits:
  - min:
      memory: 90Mi
      cpu: 100m
    type: Container

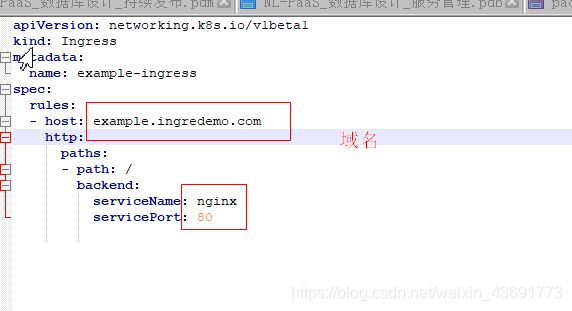

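```yaml
# networking.k8s.io/v1beta1 Ingress was removed in Kubernetes 1.22; newer clusters need networking.k8s.io/v1
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: example-ingress
spec:
  rules:
    - host: example.ingredemo.com
      http:
        paths:
          - path: /
            backend:
              serviceName: nginx
              servicePort: 80
```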
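On Kubernetes 1.22 and later the `networking.k8s.io/v1beta1` Ingress API is no longer served. The sketch below writes the same rule in the `networking.k8s.io/v1` schema, using a heredoc like the other config files in these notes. It rests on two assumptions not stated in the original. First, a newer ingress-nginx controller is in use (1.0 or later), because 0.30.0 predates v1 Ingress support. Second, that controller's IngressClass is named `nginx`. The output file name is also hypothetical.

```shell
# Write a networking.k8s.io/v1 version of example-ingress to a local file.
# Assumptions: ingress-nginx >= 1.0 with an IngressClass named "nginx".
# The quoted 'EOF' keeps the shell from expanding anything inside the manifest.
cat > example-ingress-v1.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
spec:
  ingressClassName: nginx
  rules:
    - host: example.ingredemo.com
      http:
        paths:
          - path: /
            pathType: Prefix        # required in v1
            backend:
              service:
                name: nginx         # replaces serviceName
                port:
                  number: 80        # replaces servicePort
EOF
```

Apply it with `kubectl apply -f example-ingress-v1.yaml`. The routing rule stays the same; only the backend fields moved under `service`.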

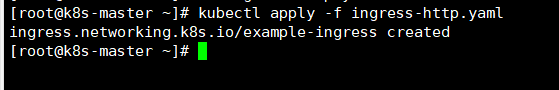

查看该应用部署到node2中

查看node 2

![]()

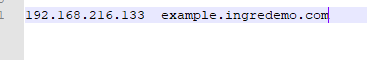

在windows系统hosts文件中添加域名访问规则
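A hosts entry is one line: an IP address, whitespace, then the host name. The small sketch below builds that line for this example. `NODE2_IP` is a hypothetical address; substitute node2's real IP. On Windows the file is `C:\Windows\System32\drivers\etc\hosts`, and editing it requires Administrator rights.

```shell
# Hypothetical IP of node2, where the ingress controller listens via hostNetwork
NODE2_IP=192.168.216.135
HOST_NAME=example.ingredemo.com

# One hosts-file line: IP address, whitespace, host name
HOSTS_LINE="${NODE2_IP} ${HOST_NAME}"
echo "$HOSTS_LINE"

# To test without editing any hosts file, curl can pin the name to the IP for one request:
#   curl --resolve "${HOST_NAME}:80:${NODE2_IP}" "http://${HOST_NAME}/"
```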

Helm

Introduction

Installation and repository configuration

Option 1: one-step installation with the official script

```shell
# get-helm-3 installs Helm 3; the older scripts/get installer is for Helm 2
curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3
chmod 700 get_helm.sh
./get_helm.sh
```

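Option 2: manual download and installation

```shell
# Download the latest release tarball from the official site: https://github.com/kubernetes/helm/releases
tar -zxvf helm-v3.3.1-linux-amd64.tar.gz   # extract the archive
# move the helm binary into the bin directory
cd linux-amd64
mv helm /usr/bin
helm help   # verify the installation
```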

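Configure Helm repositories

(1) Add repositories

```shell
# Microsoft (Azure China) mirror
helm repo add stable http://mirror.azure.cn/kubernetes/charts
# Alibaba Cloud mirror (China)
helm repo add aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
# list the configured repositories
helm repo list
# refresh the repository indexes
helm repo update
# remove a repository
helm repo remove aliyun
```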

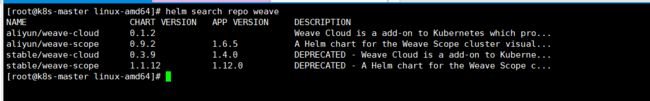

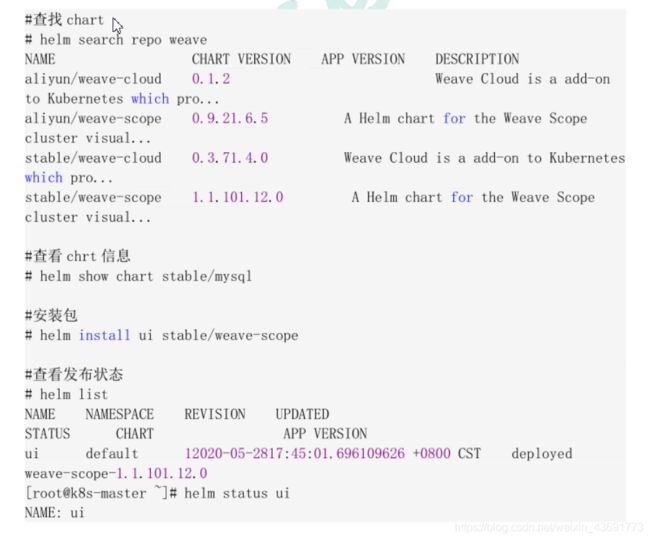

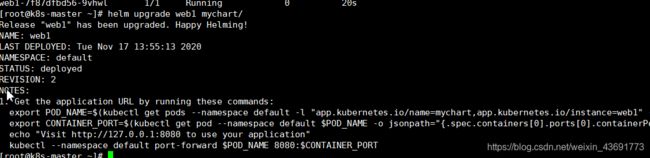

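Deploying an application quickly with Helm

Search for the application by name (weave in this example):

```shell
helm search repo weave
```

Install one of the charts found by the search:

```shell
helm install <release-name> <chart-name-from-search>
```

Check the result after installation:

```shell
# list installed releases
helm list
# show the status of a release
helm status <release-name>
```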

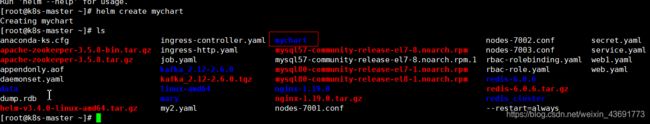

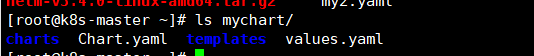

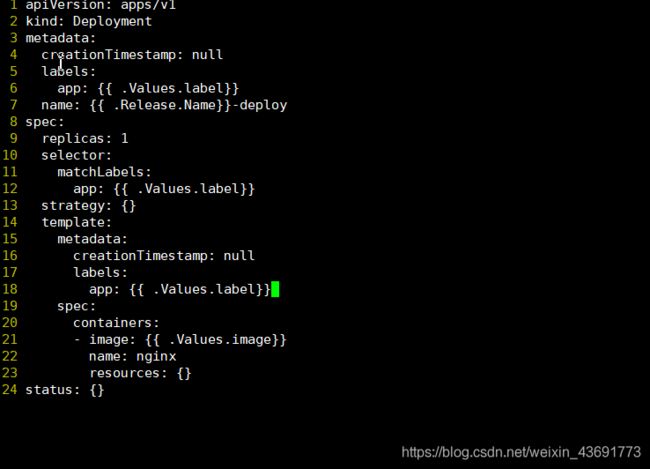

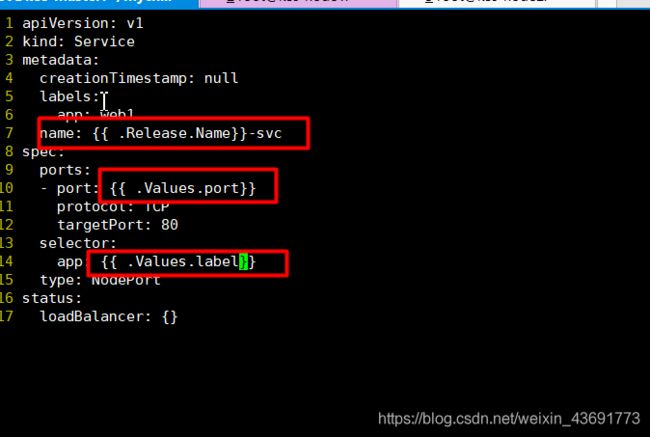

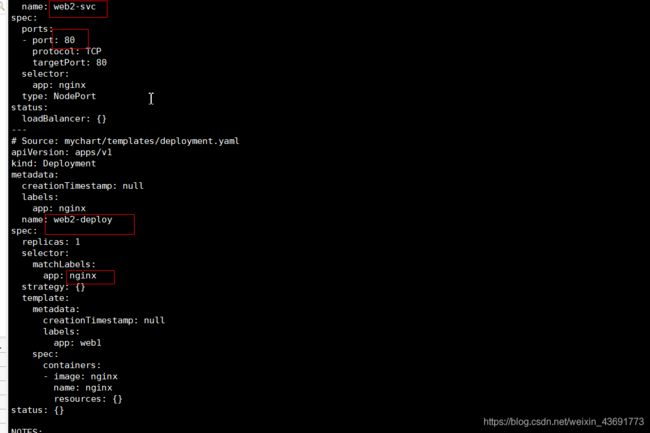

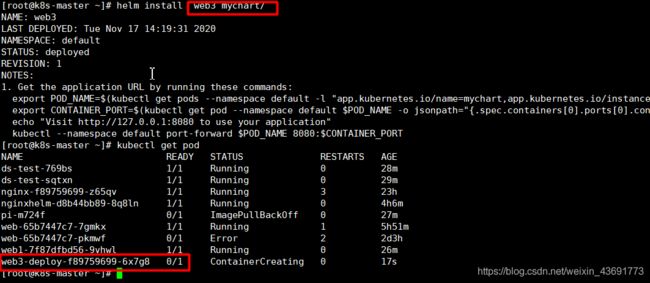

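Deploying a custom chart

A chart contains:

- `Chart.yaml`: the attributes (metadata) of the current chart
- `templates/`: the folder that holds the YAML files you write
- `values.yaml`: global variables that the templates can use

Create two YAML files in the `templates` folder:

- deployment.yaml
- service.yaml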
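`helm create mychart` generates a full chart skeleton, including its own sample templates. The hand-written sketch below shows the minimum layout described above instead. It assumes a Helm 3 chart (`apiVersion: v2`). The resource name `web1` and the plain nginx image are illustrative choices, not taken from the original.

```shell
# Minimal chart layout: mychart/Chart.yaml, mychart/values.yaml, mychart/templates/*.yaml
mkdir -p mychart/templates

# Chart.yaml: chart metadata (apiVersion v2 is the Helm 3 chart format)
cat > mychart/Chart.yaml <<'EOF'
apiVersion: v2
name: mychart
description: A minimal example chart
version: 0.1.0
EOF

# values.yaml: empty for now, filled in once the templates take parameters
: > mychart/values.yaml

# templates/deployment.yaml: a plain nginx Deployment without template variables
cat > mychart/templates/deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web1
  template:
    metadata:
      labels:
        app: web1
    spec:
      containers:
        - name: nginx
          image: nginx
EOF

# templates/service.yaml: exposes the Deployment's pods through a NodePort
cat > mychart/templates/service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: web1
spec:
  type: NodePort
  selector:
    app: web1
  ports:
    - port: 80
      targetPort: 80
EOF
```

With these files in place, `helm install web1 mychart/` installs the chart as a release named `web1`. The release name is also illustrative.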


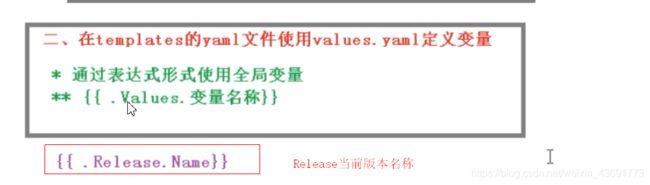

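Using chart templates

Purpose: reuse YAML files efficiently.

Pass parameters to render the templates dynamically, so the YAML content is generated from the values passed in.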
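The sketch below shows the parameterization. Values live in `values.yaml`, and templates refer to them as `{{ .Values.<key> }}`. `{{ .Release.Name }}` is the release name passed to `helm install`. The keys `image`, `tag`, `replicas` and `label` are illustrative names chosen here; Helm does not require them.

```shell
mkdir -p mychart/templates

# values.yaml: parameters consumed by the templates (key names are illustrative)
cat > mychart/values.yaml <<'EOF'
image: nginx
tag: latest
replicas: 1
label: web1
EOF

# templates/deployment.yaml: every field that varies is read from .Values or .Release
cat > mychart/templates/deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Release.Name }}-deploy
spec:
  replicas: {{ .Values.replicas }}
  selector:
    matchLabels:
      app: {{ .Values.label }}
  template:
    metadata:
      labels:
        app: {{ .Values.label }}
    spec:
      containers:
        - name: {{ .Values.label }}
          image: {{ .Values.image }}:{{ .Values.tag }}
EOF
```

`helm install --dry-run web2 mychart/` should then render a Deployment named `web2-deploy`. A single value can be overridden at install time, for example with `--set replicas=3`.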


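```shell
# render the templates for release web2 and print the result without installing anything
helm install --dry-run web2 mychart/
```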

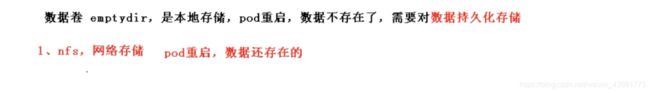

Persistent storage

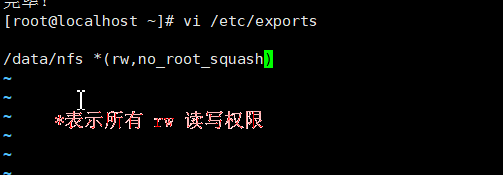

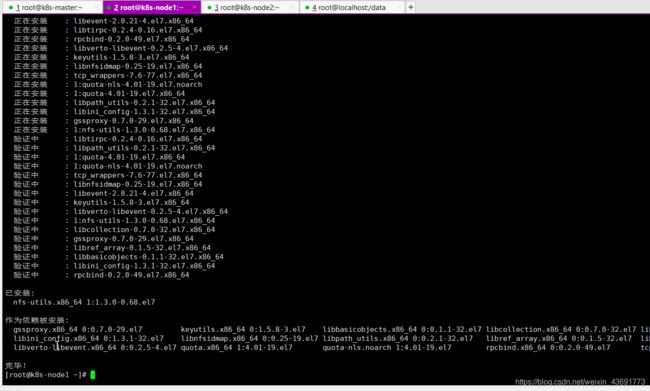

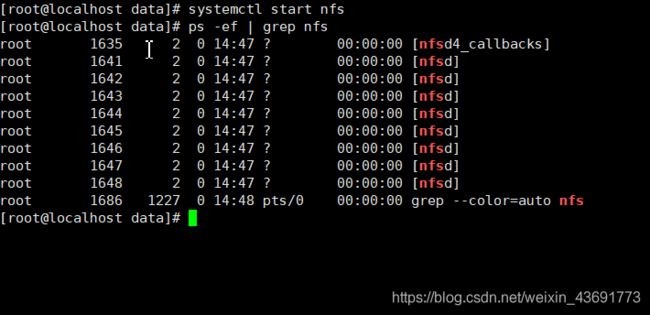

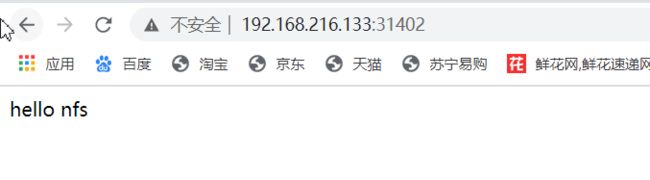

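NFS network storage

Install nfs-utils on the NFS server:

```shell
yum install -y nfs-utils
```

Configure the export path (the directory must be created first).

Install nfs-utils on the Kubernetes nodes as well.

Start the NFS service on the NFS server.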

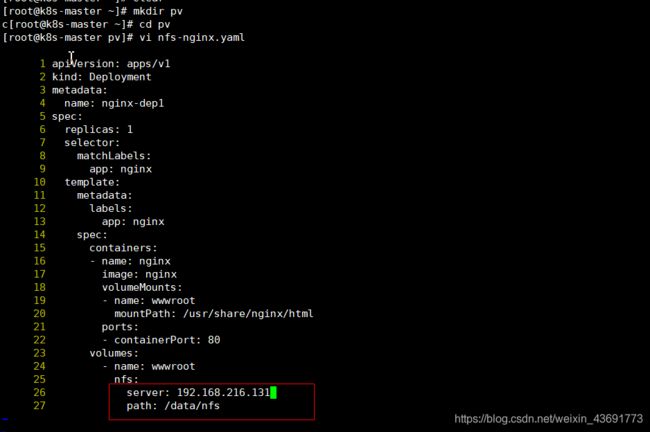
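The notes do not show the export configuration itself. On the NFS server, the export list lives in `/etc/exports`, one line per exported directory. The sketch below assumes the export path `/data/nfs` used by the manifests below. It also uses permissive lab-only options: `*` lets any client mount the share, and `rw,no_root_squash` allows root writes from the pods.

```shell
# Export path used by the nginx-dep1 and PersistentVolume manifests
EXPORT_DIR=/data/nfs
# Lab-only options: any client (*), read-write, root not squashed to nobody.
# There must be no space between the client spec and the parentheses.
EXPORT_OPTS='*(rw,no_root_squash)'
EXPORT_LINE="${EXPORT_DIR} ${EXPORT_OPTS}"
printf '%s\n' "$EXPORT_LINE"

# Applied as root on the NFS server, roughly:
#   mkdir -p /data/nfs
#   echo "/data/nfs *(rw,no_root_squash)" >> /etc/exports
#   systemctl start nfs     # the unit is named nfs-server on some distributions
#   exportfs -v             # confirm that the export is active
```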

Deploy an application in the Kubernetes cluster that uses NFS as persistent network storage

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-dep1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          volumeMounts:
            - name: wwwroot
              mountPath: /usr/share/nginx/html
          ports:
            - containerPort: 80
      volumes:
        - name: wwwroot
          nfs:
            server: 192.168.216.131
            path: /data/nfs
```

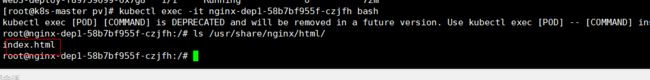

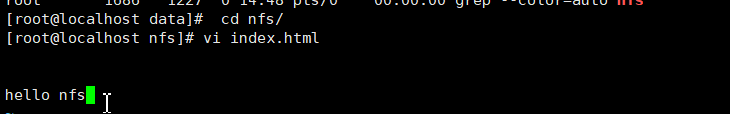

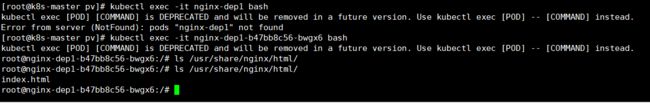

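Start the pod and exec into it, for example with `kubectl exec -it <pod-name> -- bash`.

On the NFS server, add an HTML file under /data/nfs.

Check again inside the pod: the file shows up under /usr/share/nginx/html, the mountPath of the NFS volume.

Expose a port for nginx-dep1, for example with `kubectl expose deployment nginx-dep1 --port=80 --target-port=80 --type=NodePort`.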

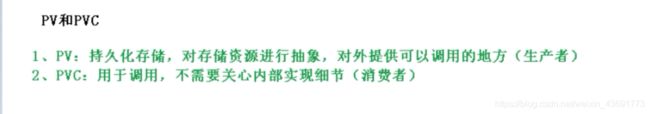

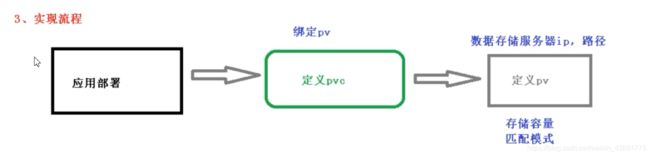

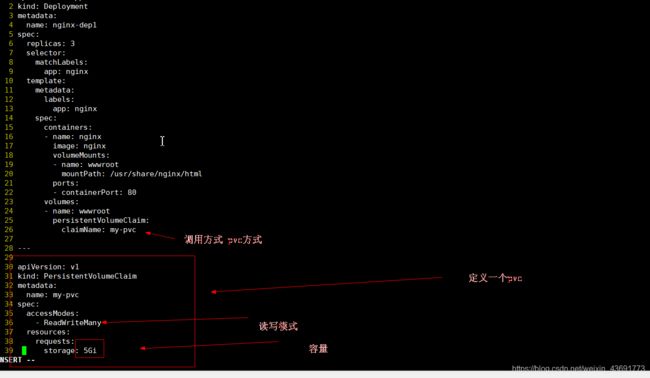

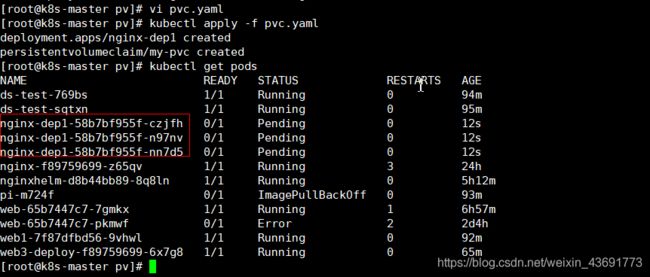

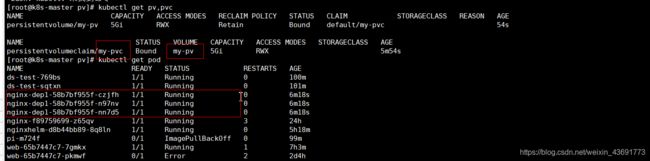

PV and PVC

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-dep1
spec:
  replicas: 3   # three replicas
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          volumeMounts:
            - name: wwwroot
              mountPath: /usr/share/nginx/html
          ports:
            - containerPort: 80
      volumes:
        - name: wwwroot
          persistentVolumeClaim:
            claimName: my-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
```

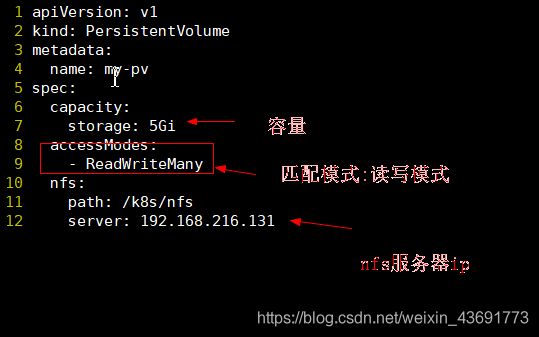

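The PersistentVolume backed by the NFS export. my-pvc binds to it because the capacity (5Gi) and access mode (ReadWriteMany) match:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  nfs:
    path: /data/nfs
    server: 192.168.216.131
```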

Kubernetes cluster resource monitoring

Monitoring metrics and solutions

Building the monitoring platform

Deploying Prometheus

```yaml
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: kube-system
  labels:
    k8s-app: node-exporter
spec:
  selector:
    matchLabels:
      k8s-app: node-exporter
  template:
    metadata:
      labels:
        k8s-app: node-exporter
    spec:
      containers:
        - image: prom/node-exporter
          name: node-exporter
          ports:
            - containerPort: 9100
              protocol: TCP
              name: http
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: node-exporter
  name: node-exporter
  namespace: kube-system
spec:
  ports:
    - name: http
      port: 9100
      nodePort: 31672
      protocol: TCP
  type: NodePort
  selector:
    k8s-app: node-exporter
```

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
  - apiGroups: [""]
    resources:
      - nodes
      - nodes/proxy
      - services
      - endpoints
      - pods
    verbs: ["get", "list", "watch"]
  - apiGroups:
      - extensions
    resources:
      - ingresses
    verbs: ["get", "list", "watch"]
  - nonResourceURLs: ["/metrics"]
    verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
  - kind: ServiceAccount
    name: prometheus
    namespace: kube-system
```

configmap.yaml

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-system
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      evaluation_interval: 15s
    scrape_configs:
    - job_name: 'kubernetes-apiservers'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https
    - job_name: 'kubernetes-nodes'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics
    - job_name: 'kubernetes-cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name
    # the next two jobs need a blackbox exporter; blackbox-exporter.example.com is a placeholder address
    - job_name: 'kubernetes-services'
      kubernetes_sd_configs:
      - role: service
      metrics_path: /probe
      params:
        module: [http_2xx]
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__address__]
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        target_label: kubernetes_name
    - job_name: 'kubernetes-ingresses'
      kubernetes_sd_configs:
      - role: ingress
      relabel_configs:
      - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
        regex: (.+);(.+);(.+)
        replacement: ${1}://${2}${3}
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_ingress_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_ingress_name]
        target_label: kubernetes_name
    - job_name: 'kubernetes-pods'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
```
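The `kubernetes-service-endpoints` and `kubernetes-pods` jobs rewrite `__address__` from the `prometheus.io/port` annotation. Prometheus joins the source label values with `;`, matches the whole string against `([^:]+)(?::\d+)?;(\d+)`, and on a match writes `$1:$2` to the target label. The shell sketch below mimics only that one rule, using `sed -E` with an equivalent POSIX regex. sed has no `(?:...)` or `\d`, so the optional `:port` becomes group 2 and the replacement uses groups 1 and 3. Prometheus itself uses RE2, so treat this as an illustration. The sample addresses are made up.

```shell
# Emulates one relabel rule (not Prometheus itself):
#   source_labels: [__address__, <prometheus.io/port annotation>]
#   regex: ([^:]+)(?::\d+)?;(\d+)   replacement: $1:$2   target_label: __address__
relabel_address() {
  # $1 = discovered __address__, $2 = annotation value ("" if absent)
  local joined="$1;$2"   # Prometheus joins source label values with ";"
  local out
  # the regex is fully anchored; -n with /p prints only when it matched
  out=$(printf '%s\n' "$joined" | sed -nE 's/^([^:]+)(:[0-9]+)?;([0-9]+)$/\1:\3/p')
  # no match: the target label keeps its old value
  printf '%s\n' "${out:-$1}"
}

relabel_address 10.244.1.5:80 9102   # annotated port replaces the container port
relabel_address 10.244.1.5 9102      # address without a port gets the annotated port
relabel_address 10.244.1.5:80 ""     # no annotation: address unchanged
```

All three calls print the address Prometheus would scrape. The first two print `10.244.1.5:9102`, and the last leaves `10.244.1.5:80` as it was.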

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    name: prometheus-deployment
  name: prometheus
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      containers:
        - image: prom/prometheus:v2.0.0
          name: prometheus
          command:
            - "/bin/prometheus"
          args:
            - "--config.file=/etc/prometheus/prometheus.yml"
            - "--storage.tsdb.path=/prometheus"
            - "--storage.tsdb.retention=24h"
          ports:
            - containerPort: 9090
              protocol: TCP
          volumeMounts:
            - mountPath: "/prometheus"
              name: data
            - mountPath: "/etc/prometheus"
              name: config-volume
          resources:
            requests:
              cpu: 100m
              memory: 100Mi
            limits:
              cpu: 500m
              memory: 2500Mi
      serviceAccountName: prometheus
      volumes:
        - name: data
          emptyDir: {}
        - name: config-volume
          configMap:
            # the prometheus-config ConfigMap from configmap.yaml
            name: prometheus-config
```

prometheus.svc.yml

```yaml
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: prometheus
  name: prometheus
  namespace: kube-system
spec:
  type: NodePort
  ports:
    - port: 9090
      targetPort: 9090
      nodePort: 30003
  selector:
    app: prometheus
```

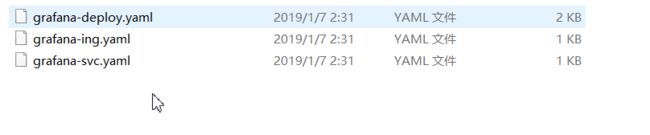

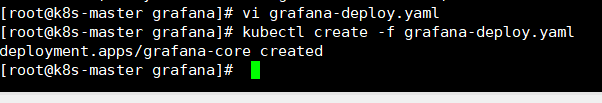

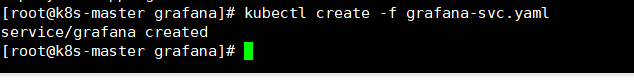

Deploying Grafana

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana-core
  namespace: kube-system
  labels:
    app: grafana
    component: core
spec:
  replicas: 1
  selector:
    matchLabels:
      app: grafana
      component: core
  template:
    metadata:
      labels:
        app: grafana
        component: core
    spec:
      containers:
        - image: grafana/grafana:4.2.0
          name: grafana-core
          imagePullPolicy: IfNotPresent
          # env:
          resources:
            # keep request = limit to keep this container in guaranteed class
            limits:
              cpu: 100m
              memory: 100Mi
            requests:
              cpu: 100m
              memory: 100Mi
          env:
            # The following env variables set up basic auth with the default admin user and admin password.
            - name: GF_AUTH_BASIC_ENABLED
              value: "true"
            - name: GF_AUTH_ANONYMOUS_ENABLED
              value: "false"
            # - name: GF_AUTH_ANONYMOUS_ORG_ROLE
            #   value: Admin
            # does not really work, because of template variables in exported dashboards:
            # - name: GF_DASHBOARDS_JSON_ENABLED
            #   value: "true"
          readinessProbe:
            httpGet:
              path: /login
              port: 3000
            # initialDelaySeconds: 30
            # timeoutSeconds: 1
          volumeMounts:
            - name: grafana-persistent-storage
              mountPath: /var
      volumes:
        - name: grafana-persistent-storage
          emptyDir: {}
```

```yaml
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: kube-system
  labels:
    app: grafana
    component: core
spec:
  type: NodePort
  ports:
    - port: 3000
  selector:
    app: grafana
    component: core
```


grafana-ing.yaml

```yaml
# extensions/v1beta1 Ingress was removed in Kubernetes 1.22; newer clusters need networking.k8s.io/v1
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: grafana
  namespace: kube-system
spec:
  rules:
    - host: k8s.grafana
      http:
        paths:
          - path: /
            backend:
              serviceName: grafana
              servicePort: 3000
```

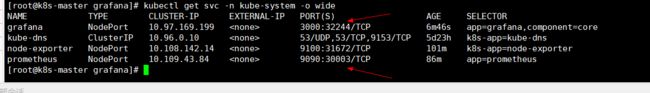

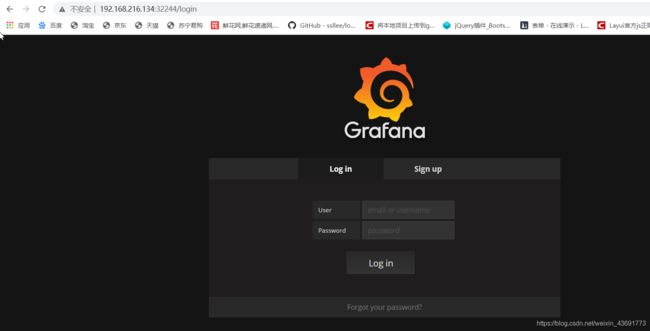

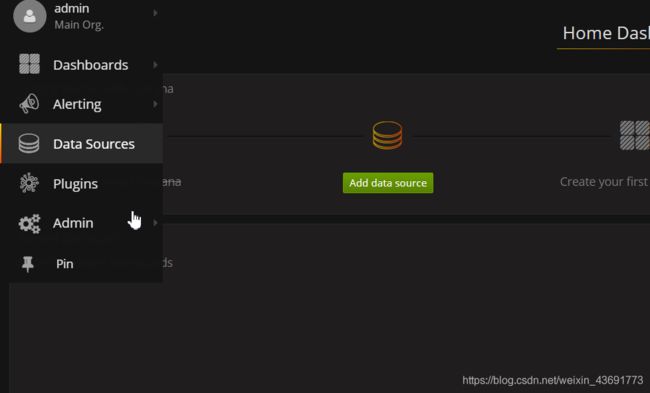

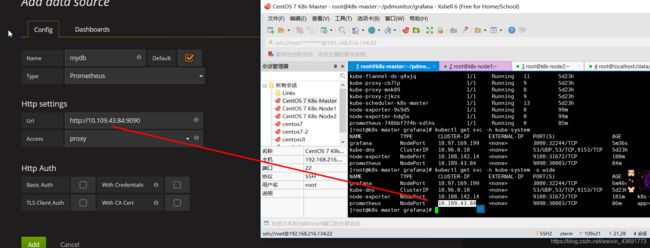

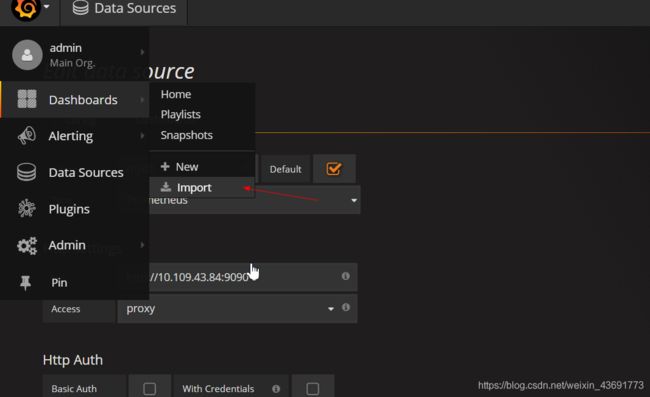

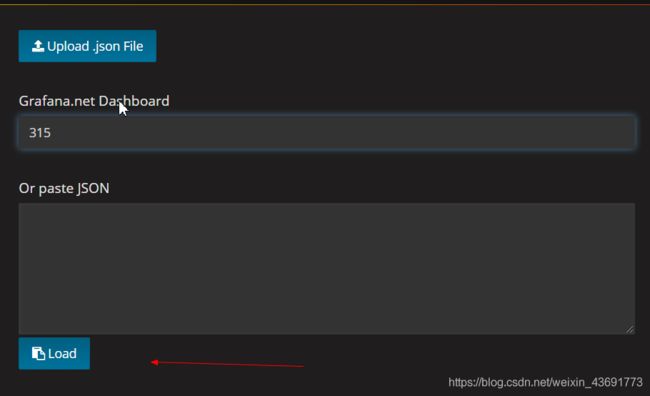

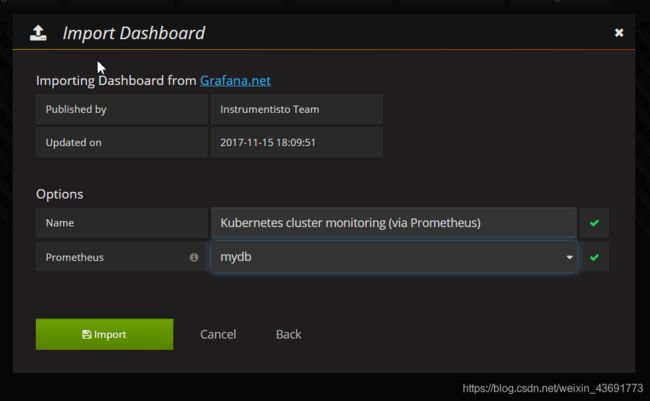

Open Grafana, configure Prometheus as a data source, and import a dashboard template.
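Inside the cluster, Grafana can reach Prometheus through its Service DNS name, which follows the pattern `<service>.<namespace>.svc.<cluster-domain>`. The sketch below builds the data-source URL from the `prometheus` Service in `kube-system` defined in prometheus.svc.yml. It assumes the default cluster domain `cluster.local` and Grafana's server-side ("proxy") access mode. From outside the cluster, the NodePort 30003 on any node IP is the alternative.

```shell
# Values from prometheus.svc.yml; CLUSTER_DOMAIN assumes the kubeadm default
SVC_NAME=prometheus
SVC_NAMESPACE=kube-system
SVC_PORT=9090
CLUSTER_DOMAIN=cluster.local

# In-cluster URL to enter as the Prometheus data source in Grafana
PROM_URL="http://${SVC_NAME}.${SVC_NAMESPACE}.svc.${CLUSTER_DOMAIN}:${SVC_PORT}"
echo "$PROM_URL"
```

Because Grafana runs in the same `kube-system` namespace, the short form `http://prometheus:9090` should resolve as well.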

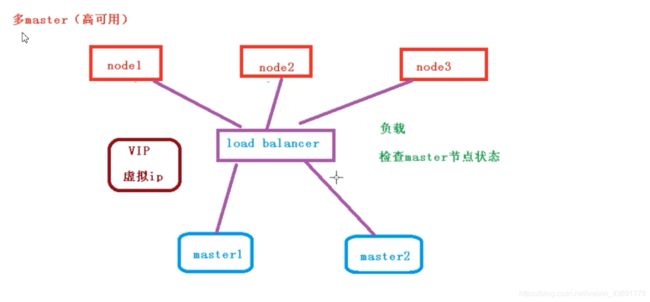

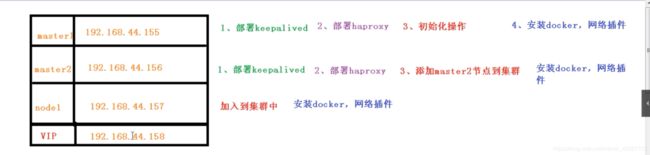

Building a highly available Kubernetes cluster

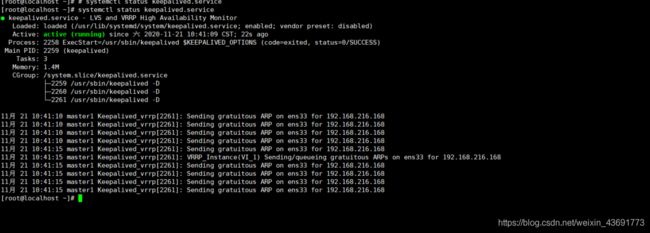

Initialize the machines, then deploy keepalived

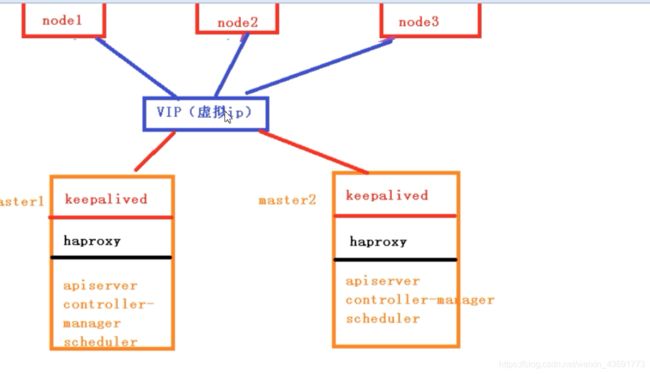

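keepalived provides the VIP (virtual IP) and checks the health of the nodes.

Install keepalived on all master nodes.

Install the required packages:

```shell
yum install -y conntrack-tools libseccomp libtool-ltdl
```

Install keepalived:

```shell
yum install -y keepalived
```

Configure the master nodes. Adjust the network interface name and the virtual IP to your environment: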

cat > /etc/keepalived/keepalived.conf <<EOF

! Configuration File for keepalived

global_defs {

router_id k8s

}

vrrp_script check_haproxy {

script "killall -0 haproxy"

interval 3

weight -2

fall 10

rise 2

}

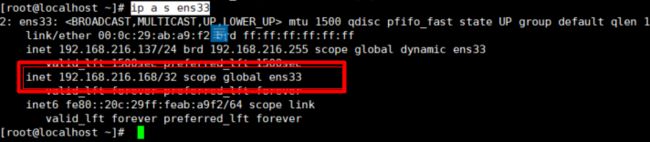

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 250

advert_int 1

authentication {

auth_type PASS

auth_pass ceb1b3ec013d66163d6ab

}

virtual_ipaddress {

192.168.216.168

}

track_script {

check-haproxy

}

}

EOF

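Start keepalived:

```shell
systemctl start keepalived.service
# enable it at boot
systemctl enable keepalived.service
# check that it started successfully
systemctl status keepalived.service
```

Only one machine holds the VIP at a time: one master has it and the other does not. When the master holding the VIP goes down, the VIP floats to the other one.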
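Whether the VIP moves depends on VRRP priorities. If the whole master goes down, it stops sending advertisements and the backup takes over. If only haproxy dies, `check_haproxy` fails after `fall 10` consecutive failed checks (about 30 s at `interval 3`). With a negative `weight`, keepalived then lowers the node's priority by the absolute value of the weight. The sketch below works through that arithmetic. It assumes the backup master uses priority 200, a hypothetical value because its config is not shown above.

```shell
# Hypothetical BACKUP priority; only the MASTER values (250, weight -2) appear above
MASTER_PRIORITY=250
BACKUP_PRIORITY=200
CHECK_WEIGHT=-2

# A failing tracked script with a negative weight lowers priority by |weight|
EFFECTIVE_MASTER=$((MASTER_PRIORITY + CHECK_WEIGHT))

# The node advertising the higher priority holds the VIP
# (ties are broken by IP address, which this sketch ignores)
if [ "$EFFECTIVE_MASTER" -gt "$BACKUP_PRIORITY" ]; then
  VIP_OWNER=master
else
  VIP_OWNER=backup
fi

echo "priority after haproxy check fails: ${EFFECTIVE_MASTER}; VIP held by: ${VIP_OWNER}"
```

With these numbers, a haproxy failure alone does not move the VIP (248 > 200). For that, the weight's magnitude would have to exceed the priority gap, here more than 50. `ip a s ens33` shows whether a node currently holds the VIP.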

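Deploying haproxy

Install:

```shell
yum install -y haproxy
```

Configuration file (the backend lists the apiservers of the two master nodes):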

```shell
cat > /etc/haproxy/haproxy.cfg << EOF
#---------------------------------------------------------------------
# Example configuration for a possible web application.  See the
# full configuration options online.
#
#   http://haproxy.1wt.eu/download/1.4/doc/configuration.txt
#
#---------------------------------------------------------------------

#---------------------------------------------------------------------
# Global settings
#---------------------------------------------------------------------
global
    # to have these messages end up in /var/log/haproxy.log you will
    # need to:
    #
    # 1) configure syslog to accept network log events.  This is done
    #    by adding the '-r' option to the SYSLOGD_OPTIONS in
    #    /etc/sysconfig/syslog
    #
    # 2) configure local2 events to go to the /var/log/haproxy.log
    #    file. A line like the following can be added to
    #    /etc/sysconfig/syslog
    #
    #    local2.*                       /var/log/haproxy.log
    #
    log         127.0.0.1 local2
    chroot      /var/lib/haproxy
    pidfile     /var/run/haproxy.pid
    maxconn     4000
    user        haproxy
    group       haproxy
    daemon

    # turn on stats unix socket
    stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------
# common defaults that all the 'listen' and 'backend' sections will
# use if not designated in their block
#---------------------------------------------------------------------
defaults
    mode                    http
    log                     global
    option                  httplog
    option                  dontlognull
    option http-server-close
    option forwardfor       except 127.0.0.0/8
    option                  redispatch
    retries                 3
    timeout http-request    10s
    timeout queue           1m
    timeout connect         10s
    timeout client          1m
    timeout server          1m
    timeout http-keep-alive 10s
    timeout check           10s
    maxconn                 3000

#---------------------------------------------------------------------
# main frontend which proxys to the backends
#---------------------------------------------------------------------
frontend kubernetes-apiserver
    mode                 tcp
    bind                 *:16443
    option               tcplog
    default_backend      kubernetes-apiserver

#frontend main *:5000
#    acl url_static       path_beg       -i /static /images /javascript /stylesheets
#    acl url_static       path_end       -i .jpg .gif .png .css .js
#
#    use_backend static          if url_static
#    default_backend             kubernetes-apiserver

#---------------------------------------------------------------------
# static backend for serving up images, stylesheets and such
#---------------------------------------------------------------------
#backend static
#    balance     roundrobin
#    server      static 127.0.0.1:4331 check

#---------------------------------------------------------------------
# round robin balancing between the various backends
#---------------------------------------------------------------------
backend kubernetes-apiserver
    mode        tcp
    balance     roundrobin
    server      master   192.168.216.134:6443 check
    server      master1  192.168.216.137:6443 check

#---------------------------------------------------------------------
listen stats
    bind                 *:1080
    stats auth           admin:awesomePassword
    stats refresh        5s
    stats realm          HAProxy\ Statistics
    stats uri            /admin?stats
EOF
```

Start haproxy, enable it at boot, and check its status:

```shell
systemctl start haproxy
systemctl enable haproxy
systemctl status haproxy
```

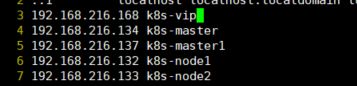

Deploying the masters

Run the following steps on the master that currently holds the VIP.

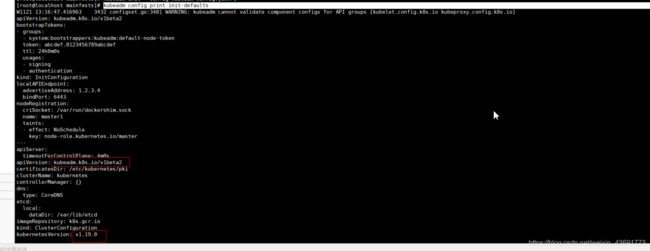

Run the following to view the default configuration recommended for the current version:

```shell
kubeadm config print init-defaults
```

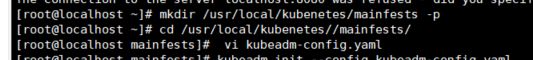

Create a new directory and, inside it, a YAML configuration file for kubeadm.

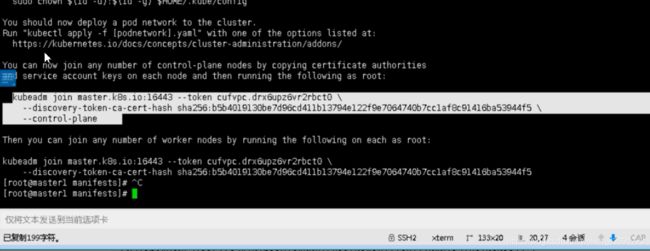
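The configuration file itself appears only in the screenshot. The sketch below writes a `kubeadm-config.yaml` for this layout with a heredoc. The field that makes the setup highly available is `controlPlaneEndpoint`. It points at the haproxy frontend port 16443 behind the VIP, matching the `master.k8s.io:16443` address used in the join commands later in these notes.

Several values are assumptions:

- the config API version `kubeadm.k8s.io/v1beta2` (accepted from kubeadm 1.15; newer releases use v1beta3)
- `kubernetesVersion`
- the Alibaba Cloud image mirror
- the `certSANs` entries
- the subnets

Compare them with what `kubeadm config print init-defaults` prints for your version.

```shell
# Assumptions: kubeadm accepts config API v1beta2, master.k8s.io resolves to the VIP
# 192.168.216.168, and flannel's default pod subnet is used; adjust every value.
cat > kubeadm-config.yaml <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.0
# HA entry point: the VIP host name plus the haproxy frontend port
controlPlaneEndpoint: "master.k8s.io:16443"
imageRepository: registry.aliyuncs.com/google_containers
apiServer:
  # names and IPs the apiserver certificate must be valid for
  certSANs:
    - master.k8s.io
    - 192.168.216.168
    - 192.168.216.134
    - 192.168.216.137
networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
EOF
```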

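Run:

```shell
kubeadm init --config kubeadm-config.yaml
```

Save the join commands printed at the end of the output; they are needed later when other nodes join the cluster.

Then run, one by one, the follow-up commands shown in your own successful init log, such as copying /etc/kubernetes/admin.conf to $HOME/.kube/config.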

Copy the certificates and related files from master1 to master2:

```shell
# replace <master2-ip> with master2's IP address
ssh root@<master2-ip> mkdir -p /etc/kubernetes/pki/etcd
scp /etc/kubernetes/admin.conf root@<master2-ip>:/etc/kubernetes
scp /etc/kubernetes/pki/{ca.*,sa.*,front-proxy-ca.*} root@<master2-ip>:/etc/kubernetes/pki
scp /etc/kubernetes/pki/etcd/ca.* root@<master2-ip>:/etc/kubernetes/pki/etcd
```

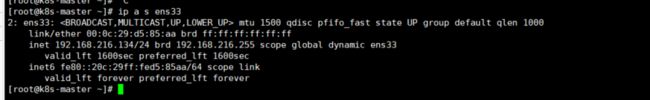

Joining master2 to the cluster

On master2, run the join command that master1 printed after `kubeadm init`. Add `--control-plane` so that master2 joins as a control-plane (master) node:

```shell
kubeadm join master.k8s.io:16443 --token ckf7bs.30576l0okocepg8b \
    --discovery-token-ca-cert-hash sha256:19afac8b11182f61073e254fb57b9f19ab4d798b70501036fc69ebef46094aba \
    --control-plane
```

Check the status:

```shell
kubectl get node
kubectl get pods --all-namespaces
```

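Adding Kubernetes worker nodes

Run the following on node1. To add a new node to the cluster, run the kubeadm join command printed by kubeadm init, without `--control-plane`:

```shell
kubeadm join master.k8s.io:16443 --token ckf7bs.30576l0okocepg8b \
    --discovery-token-ca-cert-hash sha256:19afac8b11182f61073e254fb57b9f19ab4d798b70501036fc69ebef46094aba
```

The bootstrap token is valid for 24 hours by default. If it has expired, `kubeadm token create --print-join-command` on a master prints a fresh join command.

Reinstall the cluster network, because new nodes were added.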