LSTM_CNN Text Classification and a TensorFlow Implementation

1. Introduction

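Earlier articles introduced text classification models such as RNN and CNN, and both performed well on a sentiment analysis task. Have you considered combining the two? That is certainly possible. This time we introduce a text classification model that fuses an LSTM with a CNN. It combines the strengths of RNNs and CNNs, and on many text classification tasks it outperforms either an RNN or a CNN used on its own. The paper is: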

- Paper: "Twitter Sentiment Analysis using combined LSTM-CNN Models"

Below we describe the model's structure in detail and implement it in TensorFlow.

2. The LSTM_CNN Model

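The original paper actually proposes two architectures, CNN_LSTM and LSTM_CNN, and the authors found that CNN_LSTM performs worse than LSTM_CNN. A likely reason is that putting the CNN layer first discards the sequential information in the sentence. Even with an LSTM layer afterwards, the model then cannot make full use of the LSTM's ability to encode sequences, so its results are only middling. We therefore do not cover CNN_LSTM here; interested readers can consult the original paper.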
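One concrete way a CNN stage can discard order is easy to check numerically. If the CNN ends with max-over-time pooling, as TextCNN does, the pooled vector is identical for any reordering of the feature-map rows, whereas a recurrent layer's final state is not. The NumPy sketch below assumes, for illustration only, that the CNN stage pools over time before the recurrent layer; the random feature map and the plain tanh RNN standing in for an LSTM are hypothetical, not the paper's layers.

```python
import numpy as np

rng = np.random.default_rng(1)
T, n_filters, hidden = 6, 3, 4

# Hypothetical convolution feature map: one row of filter responses per window position.
feature_map = rng.normal(size=(T, n_filters))
reversed_map = feature_map[::-1]  # same responses, opposite order

# Max-over-time pooling keeps only the largest response per filter, so order is lost.
pooled = feature_map.max(axis=0)
pooled_reversed = reversed_map.max(axis=0)
print(np.array_equal(pooled, pooled_reversed))  # True

# A simple tanh RNN (stand-in for an LSTM) reads the rows in order,
# so its final state depends on that order.
W = rng.normal(scale=0.5, size=(hidden, hidden))
U = rng.normal(scale=0.5, size=(hidden, n_filters))


def rnn_last_state(x):
    h = np.zeros(hidden)
    for x_t in x:
        h = np.tanh(W @ h + U @ x_t)
    return h


h_forward = rnn_last_state(feature_map)
h_reversed = rnn_last_state(reversed_map)
print(np.allclose(h_forward, h_reversed))
```

Once the sequence has been pooled into a single vector, a following LSTM has no order left to encode.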

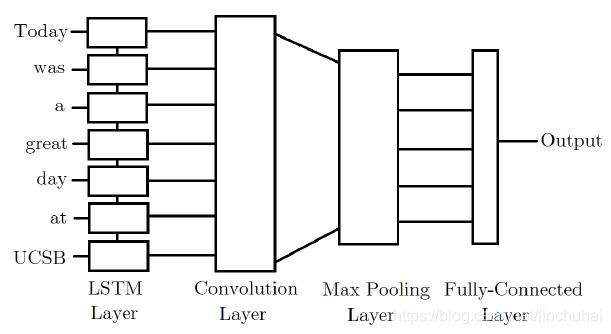

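As shown in Figure 1, the LSTM_CNN architecture is quite simple: it is the TextCNN architecture with an LSTM layer inserted between the embedding layer and the CNN layer. The word vectors of a sentence are first encoded by the LSTM, so the output at each time step contains not only the current word's features but also information from all preceding words, which makes each time step's feature vector more informative. The per-time-step LSTM outputs then play the role of TextCNN's embedding layer, and the rest of the computation is exactly the same as in TextCNN. Readers unfamiliar with TextCNN can refer to my earlier article "TextCNN Text Classification and TensorFlow Implementation"; it is not repeated here.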
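To make the data flow concrete, here is a minimal NumPy sketch of the LSTM_CNN forward pass for one sentence, using made-up toy sizes and random weights (all hypothetical, not the trained model). The LSTM's per-step outputs replace the word vectors as input to a TextCNN head: a full-width VALID convolution, ReLU, and max-over-time pooling for each filter size, followed by concatenation. The gate layout in `lstm_forward` is a generic textbook LSTM and is not guaranteed to match the internal weight ordering or default forget-gate bias of `tf.contrib.rnn.LSTMCell`.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_forward(x, W, b, hidden):
    """Textbook single-layer LSTM over x: [T, d_in] -> per-step outputs [T, hidden]."""
    h, c = np.zeros(hidden), np.zeros(hidden)
    outputs = np.zeros((x.shape[0], hidden))
    for t in range(x.shape[0]):
        z = np.concatenate([x[t], h]) @ W + b   # all four gates at once: [4 * hidden]
        i, f, g, o = np.split(z, 4)             # input, forget, candidate, output
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
        outputs[t] = h                          # h_t summarizes tokens 0..t
    return outputs


def text_cnn_head(features, filters, biases):
    """TextCNN on [T, d] features: VALID full-width conv + ReLU + max-over-time pooling."""
    T = features.shape[0]
    pooled = []
    for F, bias in zip(filters, biases):        # F: [filter_size, d, num_filters]
        k = F.shape[0]
        conv = np.stack([np.einsum('kd,kdn->n', features[s:s + k], F)
                         for s in range(T - k + 1)])              # [T - k + 1, num_filters]
        pooled.append(np.maximum(conv + bias, 0.0).max(axis=0))  # [num_filters]
    return np.concatenate(pooled)               # [num_filters * len(filters)]


# Toy sizes (hypothetical); the article's run uses lstm_dim = embedding_dim = 200.
rng = np.random.default_rng(0)
vocab_size, seq_length, embedding_dim, lstm_dim = 50, 10, 8, 8
num_filters, filter_sizes = 4, [2, 3, 4]

embedding = rng.uniform(-1.0, 1.0, (vocab_size, embedding_dim))
token_ids = rng.integers(0, vocab_size, seq_length)
W_lstm = rng.normal(0.0, 0.1, (embedding_dim + lstm_dim, 4 * lstm_dim))
b_lstm = np.zeros(4 * lstm_dim)
filters = [rng.normal(0.0, 0.1, (k, lstm_dim, num_filters)) for k in filter_sizes]
biases = [np.full(num_filters, 0.1) for _ in filter_sizes]

# Embedding lookup -> LSTM -> TextCNN head
lstm_out = lstm_forward(embedding[token_ids], W_lstm, b_lstm, lstm_dim)
sentence_vec = text_cnn_head(lstm_out, filters, biases)
print(lstm_out.shape, sentence_vec.shape)  # (10, 8) (12,)

# Changing only the first token also changes the LSTM output at the last step.
changed_ids = token_ids.copy()
changed_ids[0] = (token_ids[0] + 1) % vocab_size
changed_out = lstm_forward(embedding[changed_ids], W_lstm, b_lstm, lstm_dim)
print(np.array_equal(lstm_out[-1], changed_out[-1]))
```

The last check illustrates the point made above: every row of `lstm_out` carries information from all earlier tokens, so each convolution window sees more than just its own `filter_size` words.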

3. TensorFlow Implementation of LSTM_CNN

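Next, we implement the LSTM_CNN model with TensorFlow. As before, to make it easy to compare the models' predictions, this article uses the same dataset as the earlier article "FastText Text Classification and TensorFlow Implementation". The LSTM_CNN model code is as follows: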

import os

import numpy as np

import tensorflow as tf

from eval.evaluate import accuracy

from tensorflow.contrib import slim

from loss.loss import cross_entropy_loss

class LSTM_CNN(object):

def __init__(self,

num_classes,

seq_length,

vocab_size,

embedding_dim,

learning_rate,

learning_decay_rate,

learning_decay_steps,

epoch,

filter_sizes,

num_filters,

dropout_keep_prob,

l2_lambda,

lstm_dim

):

self.num_classes = num_classes

self.seq_length = seq_length

self.vocab_size = vocab_size

self.embedding_dim = embedding_dim

self.learning_rate = learning_rate

self.learning_decay_rate = learning_decay_rate

self.learning_decay_steps = learning_decay_steps

self.epoch = epoch

self.filter_sizes = filter_sizes

self.num_filters = num_filters

self.dropout_keep_prob = dropout_keep_prob

self.l2_lambda = l2_lambda

self.lstm_dim = lstm_dim

self.input_x = tf.placeholder(tf.int32, [None, self.seq_length], name='input_x')

self.input_y = tf.placeholder(tf.float32, [None, self.num_classes], name='input_y')

self.l2_loss = tf.constant(0.0)

self.model()

def model(self):

# embedding层

with tf.name_scope("embedding"):

self.embedding = tf.Variable(tf.random_uniform([self.vocab_size, self.embedding_dim], -1.0, 1.0),

name="embedding")

self.embedding_inputs = tf.nn.embedding_lookup(self.embedding, self.input_x)

# LSTM层

with tf.name_scope('lstm'):

lstm = tf.contrib.rnn.LSTMCell(self.lstm_dim)

lstm_cell = tf.contrib.rnn.MultiRNNCell([lstm])

self.lstm_out, self.lstm_state = tf.nn.dynamic_rnn(lstm_cell, self.embedding_inputs, dtype=tf.float32)

self.lstm_out_expanded = tf.expand_dims(self.lstm_out, -1)

# 卷积层 + 池化层

pooled_outputs = []

for i, filter_size in enumerate(self.filter_sizes):

with tf.name_scope("conv_{0}".format(filter_size)):

filter_shape = [filter_size, self.lstm_dim, 1, self.num_filters]

W = tf.Variable(tf.truncated_normal(filter_shape, stddev=0.1), name="W")

b = tf.Variable(tf.constant(0.1, shape=[self.num_filters]), name="b")

conv = tf.nn.conv2d(self.lstm_out_expanded, W, strides=[1, 1, 1, 1], padding="VALID", name="conv")

h = tf.nn.relu(tf.nn.bias_add(conv, b), name="relu")

pooled = tf.nn.max_pool(h, ksize=[1, self.seq_length - filter_size + 1, 1, 1], strides=[1, 1, 1, 1],

padding='VALID', name="pool")

pooled_outputs.append(pooled)

# 将每种尺寸的卷积核得到的特征向量进行拼接

num_filters_total = self.num_filters * len(self.filter_sizes)

h_pool = tf.concat(pooled_outputs, 3)

h_pool_flat = tf.reshape(h_pool, [-1, num_filters_total])

# 对最终得到的句子向量进行dropout

with tf.name_scope("dropout"):

h_drop = tf.nn.dropout(h_pool_flat, self.dropout_keep_prob)

# 全连接层

with tf.name_scope("output"):

W = tf.get_variable("W", shape=[num_filters_total, self.num_classes],

initializer=tf.contrib.layers.xavier_initializer())

b = tf.Variable(tf.constant(0.1, shape=[self.num_classes]), name="b")

self.l2_loss += tf.nn.l2_loss(W)

self.l2_loss += tf.nn.l2_loss(b)

self.logits = tf.nn.xw_plus_b(h_drop, W, b, name="scores")

self.pred = tf.argmax(self.logits, 1, name="predictions")

# 损失函数

self.loss = cross_entropy_loss(logits=self.logits, labels=self.input_y) + self.l2_lambda * self.l2_loss

# 优化函数

self.global_step = tf.train.get_or_create_global_step()

learning_rate = tf.train.exponential_decay(self.learning_rate, self.global_step,

self.learning_decay_steps, self.learning_decay_rate,

staircase=True)

optimizer = tf.train.AdamOptimizer(learning_rate)

update_ops = tf.get_collection(tf.GraphKeys.UPDATE_OPS)

self.optim = slim.learning.create_train_op(total_loss=self.loss, optimizer=optimizer, update_ops=update_ops)

# 准确率

self.acc = accuracy(logits=self.logits, labels=self.input_y)

def fit(self, train_x, train_y, val_x, val_y, batch_size):

# 创建模型保存路径

if not os.path.exists('./saves/lstmcnn'): os.makedirs('./saves/lstmcnn')

if not os.path.exists('./train_logs/lstmcnn'): os.makedirs('./train_logs/lstmcnn')

# 开始训练

train_steps = 0

best_val_acc = 0

# summary

tf.summary.scalar('val_loss', self.loss)

tf.summary.scalar('val_acc', self.acc)

merged = tf.summary.merge_all()

# 初始化变量

sess = tf.Session()

writer = tf.summary.FileWriter('./train_logs/lstmcnn', sess.graph)

saver = tf.train.Saver(max_to_keep=10)

sess.run(tf.global_variables_initializer())

for i in range(self.epoch):

batch_train = self.batch_iter(train_x, train_y, batch_size)

for batch_x, batch_y in batch_train:

train_steps += 1

feed_dict = {self.input_x: batch_x, self.input_y: batch_y}

_, train_loss, train_acc = sess.run([self.optim, self.loss, self.acc], feed_dict=feed_dict)

if train_steps % 1000 == 0:

feed_dict = {self.input_x: val_x, self.input_y: val_y}

val_loss, val_acc = sess.run([self.loss, self.acc], feed_dict=feed_dict)

summary = sess.run(merged, feed_dict=feed_dict)

writer.add_summary(summary, global_step=train_steps)

if val_acc >= best_val_acc:

best_val_acc = val_acc

saver.save(sess, "./saves/lstmcnn/", global_step=train_steps)

msg = 'epoch:%d/%d,train_steps:%d,train_loss:%.4f,train_acc:%.4f,val_loss:%.4f,val_acc:%.4f'

print(msg % (i, self.epoch, train_steps, train_loss, train_acc, val_loss, val_acc))

sess.close()

def batch_iter(self, x, y, batch_size=32, shuffle=True):

"""

生成batch数据

:param x: 训练集特征变量

:param y: 训练集标签

:param batch_size: 每个batch的大小

:param shuffle: 是否在每个epoch时打乱数据

:return:

"""

data_len = len(x)

num_batch = int((data_len - 1) / batch_size) + 1

if shuffle:

shuffle_indices = np.random.permutation(np.arange(data_len))

x_shuffle = x[shuffle_indices]

y_shuffle = y[shuffle_indices]

else:

x_shuffle = x

y_shuffle = y

for i in range(num_batch):

start_index = i * batch_size

end_index = min((i + 1) * batch_size, data_len)

yield (x_shuffle[start_index:end_index], y_shuffle[start_index:end_index])

def predict(self, x):

sess = tf.Session()

sess.run(tf.global_variables_initializer())

saver = tf.train.Saver(tf.global_variables())

ckpt = tf.train.get_checkpoint_state('./saves/lstmcnn/')

saver.restore(sess, ckpt.model_checkpoint_path)

feed_dict = {self.input_x: x}

logits = sess.run(self.logits, feed_dict=feed_dict)

y_pred = np.argmax(logits, 1)

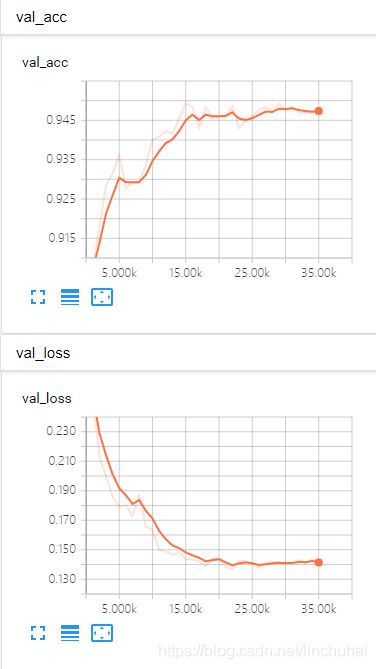

return y_pred对于数据集和模型的超参,保持与之前FastText和TextCNN中的一致,唯一的不同是LSTM_CNN由于引入了LSTM层,因此多了一个LSTM层隐藏单元数的参数,本文在实验时设置为200,这样的话得到的每个时间步的输出向量维度与词向量的维度一致,另外卷积核的数量改为36,其他参数与FastText和TextCNN相同。在验证集上的准确率和损失值如图2所示,在15000次迭代时验证集的准确率达到最高,为94.77%,笔者在3000个测试集上对模型进行测试,发现LSTM_CNN在测试集上的准确率达到97.67%,比TextCNN的准确率97.56%高0.11%。可以发现,引入LSTM后,模型的效果要比单纯的CNN模型效果要更好。
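With `lstm_dim = embedding_dim = 200` and 36 filters, a quick parameter count shows what the extra LSTM layer costs. This sketch assumes `tf.contrib.rnn.LSTMCell`'s default configuration (no peepholes, no projection), whose kernel has shape `[input_dim + num_units, 4 * num_units]` plus a bias of length `4 * num_units`. The filter sizes `[2, 3, 4]` are a hypothetical stand-in, since this article does not list the values carried over from TextCNN.

```python
def lstm_param_count(input_dim, num_units):
    # Kernel [input_dim + num_units, 4 * num_units] plus bias [4 * num_units]
    # (LSTMCell without peepholes or projection)
    return (input_dim + num_units) * 4 * num_units + 4 * num_units


def conv_param_count(filter_size, width, num_filters):
    # Filter [filter_size, width, 1, num_filters] plus bias [num_filters], as in model()
    return filter_size * width * num_filters + num_filters


embedding_dim = lstm_dim = 200
num_filters = 36
filter_sizes = [2, 3, 4]  # hypothetical: not stated in this article

lstm_params = lstm_param_count(embedding_dim, lstm_dim)
conv_params = sum(conv_param_count(k, lstm_dim, num_filters) for k in filter_sizes)
sentence_dim = num_filters * len(filter_sizes)  # length of h_pool_flat

print(lstm_params)   # 320800
print(conv_params)   # 64908
print(sentence_dim)  # 108
```

Under these assumptions the LSTM layer alone holds about five times as many parameters as all three convolution layers combined.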

4. Summary

Finally, a brief summary of the strengths and weaknesses of the LSTM_CNN model:

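- LSTM_CNN combines the strengths of RNNs and CNNs: it takes the sequential information in the sentence into account while also capturing key words in the sentence.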

- LSTM_CNN also inherits the RNN's weakness: its computation cannot be parallelized across time steps, so it trains much more slowly than FastText and TextCNN.
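The contrast behind the last point can be seen in a small NumPy sketch with toy sizes and random weights (hypothetical; a plain tanh RNN stands in for the LSTM). Each convolution window reads only `k` neighboring steps, so the windows are independent of one another and can be computed in one batched operation. The recurrent state `h_t` needs `h_{t-1}`, so the time steps must run one after another, and the last state depends on the very first input.

```python
import numpy as np

rng = np.random.default_rng(0)
T, d, hidden, k, num_filters = 12, 8, 8, 3, 4
x = rng.normal(size=(T, d))
F = rng.normal(size=(k, d, num_filters))
W = rng.normal(scale=0.3, size=(hidden, hidden))
U = rng.normal(scale=0.3, size=(hidden, d))


def conv_valid(x):
    # No window depends on another window's result, so all T - k + 1
    # outputs come from a single batched einsum.
    windows = np.stack([x[s:s + k] for s in range(x.shape[0] - k + 1)])  # [T-k+1, k, d]
    return np.einsum('skd,kdn->sn', windows, F)                          # [T-k+1, num_filters]


def rnn_states(x):
    # h_t = tanh(W h_{t-1} + U x_t): each step needs the previous one, so this loop is sequential.
    h, states = np.zeros(hidden), []
    for x_t in x:
        h = np.tanh(W @ h + U @ x_t)
        states.append(h)
    return np.stack(states)                                              # [T, hidden]


conv_out, rnn_out = conv_valid(x), rnn_states(x)

# Perturb only the first time step.
x_changed = x.copy()
x_changed[0] += 1.0
print(np.array_equal(conv_out[-1], conv_valid(x_changed)[-1]))  # True: last window never sees step 0
print(np.array_equal(rnn_out[-1], rnn_states(x_changed)[-1]))
```

On a GPU the independent windows map naturally onto parallel hardware, while the recurrence forces `T` dependent steps regardless of hardware.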