MaskRCNN Source Code Analysis 4: Network Heads

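MaskRCNN Source Code Analysis 1: Overall Structure

MaskRCNN Source Code Analysis 2: Feature Maps and Anchor Generation

MaskRCNN Source Code Analysis 3: RPN, ProposalLayer, DetectionTargetLayer

MaskRCNN Source Code Analysis 4-0: ROI Pooling and ROI Align Theory

MaskRCNN Source Code Analysis 4: Network Heads

MaskRCNN Source Code Analysis 5: Losses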

Contents

MaskRCNN Overview

C) Network Heads

1. PyramidROIAlign

2. fpn_classifier_graph(): classification and regression

3. build_fpn_mask_graph(): mask prediction

MaskRCNN Overview

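Mask R-CNN is a compact, flexible, general-purpose framework for object instance segmentation. It not only detects the objects in an image but also produces a high-quality segmentation mask for each of them. It extends Faster R-CNN [1] by adding a new branch that predicts an object mask in parallel with the existing bounding-box recognition branch. The network is also easy to extend to other tasks, such as human pose estimation, i.e. person keypoint detection. The framework achieved the best results on a series of COCO challenge tasks, including instance segmentation, bounding-box object detection and person keypoint detection.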


Source code analyzed:

https://github.com/matterport/Mask_RCNN

C) Network Heads

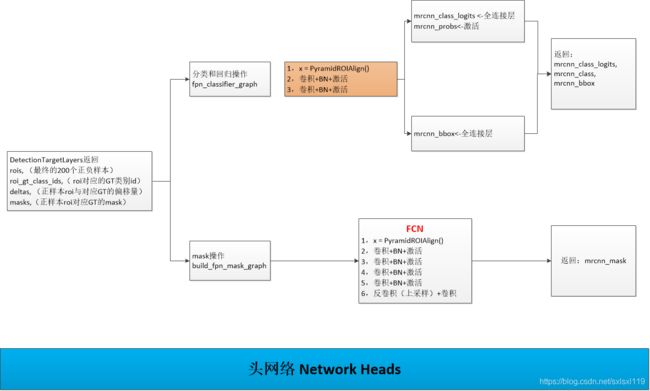

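The three main operations of Mask R-CNN, namely classification, bounding-box regression and mask prediction, all take place in the network heads; the processing flow is shown in the figure above.

The code that calls the heads is split into two steps: fpn_classifier_graph() performs classification and regression, and build_fpn_mask_graph() performs mask prediction. Both use PyramidROIAlign, which implements ROIAlign, one of the improvements proposed in the paper.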

```python
# ************************* 7. Network Heads *************************
# Network Heads
# TODO: verify that this handles zero padded ROIs

# Classification and bounding-box regression
mrcnn_class_logits, mrcnn_class, mrcnn_bbox = \
    fpn_classifier_graph(rois, mrcnn_feature_maps, input_image_meta,
                         config.POOL_SIZE, config.NUM_CLASSES,
                         train_bn=config.TRAIN_BN,
                         fc_layers_size=config.FPN_CLASSIF_FC_LAYERS_SIZE)

# Mask prediction
mrcnn_mask = build_fpn_mask_graph(rois, mrcnn_feature_maps,
                                  input_image_meta,
                                  config.MASK_POOL_SIZE,
                                  config.NUM_CLASSES,
                                  train_bn=config.TRAIN_BN)

# TODO: clean up (use tf.identity if necessary)
output_rois = KL.Lambda(lambda x: x * 1, name="output_rois")(rois)
```

1. PyramidROIAlign (to be analyzed in more detail)

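The theory behind this part, with worked examples, is covered in a separate article; to keep this post focused, it is not repeated here. See:

MaskRCNN Source Code Analysis 4-0: ROI Pooling and ROI Align Theory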

Each ROI is assigned to one pyramid level (P2 to P5) by the following formula (Equation 1 of the FPN paper):

$$k = \left\lfloor k_0 + \log_2\left(\frac{\sqrt{wh}}{224}\right) \right\rfloor$$

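In this formula, w and h are the ROI's width and height in pixels, k is the pyramid level the ROI is assigned to, and $k_0$ is the level a 224×224 ROI maps to, usually 4 (i.e. P4). The value 224 is commonly explained as the standard ImageNet image size. For example, a 112×112 ROI gives $k = 4 + \log_2(112/224) = 4 - 1 = 3$, i.e. level P3. Note that the Matterport code rounds instead of flooring and clamps k to the range [2, 5].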
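The level-assignment rule can be checked with a few lines of plain Python. This is a minimal sketch that mirrors what the PyramidROIAlign code below does (round rather than floor, then clamp to P2..P5); the function names are hypothetical. The second function shows why the code divides by `224 / sqrt(image_area)`: with normalized box sizes, that rescaling yields the same level as the pixel formula.

```python
import math


def assign_level_pixels(h_px, w_px, k0=4):
    """Pyramid level for an ROI given in pixels, clamped to P2..P5.

    Mirrors PyramidROIAlign: round (not floor) log2(sqrt(w*h) / 224), add k0,
    then clamp to [2, 5]. Python's round() and tf.round() both round half to even.
    """
    level = math.log2(math.sqrt(h_px * w_px) / 224.0)
    return min(5, max(2, k0 + round(level)))


def assign_level_normalized(h_norm, w_norm, image_h, image_w):
    """Same rule for normalized [0, 1] box sizes, as computed in the TF code."""
    image_area = image_h * image_w
    level = math.log2(math.sqrt(h_norm * w_norm) / (224.0 / math.sqrt(image_area)))
    return min(5, max(2, 4 + round(level)))


print(assign_level_pixels(112, 112))  # → 3 (P3)
print(assign_level_pixels(224, 224))  # → 4 (P4)
print(assign_level_pixels(32, 32))    # → 2 (clamped; unclamped value would be 1)
print(assign_level_normalized(112 / 1024, 112 / 1024, 1024, 1024))  # → 3
```

Rounding instead of flooring means a box is sent to the level whose canonical size is nearest in log scale; the clamp keeps very small and very large boxes on the levels that actually exist.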


Below is the implementation of PyramidROIAlign:

```python
import tensorflow as tf
import keras.engine as KE
# log2_graph() and parse_image_meta_graph() are helper functions defined
# elsewhere in mrcnn/model.py.

# PyramidROIAlign first decides, with the formula below, which pyramid level
# (P2 to P5) each ROI's features are taken from:
# k = floor(k0 + log2(sqrt(w*h) / 224)), where w and h are the box width and
# height, k is the level the ROI is assigned to, and k0 is the level a
# 224x224 ROI maps to. (The code rounds instead of flooring and clamps k to [2, 5].)
class PyramidROIAlign(KE.Layer):
    """Implements ROI Pooling on multiple levels of the feature pyramid.

    Params:
    - pool_shape: [pool_height, pool_width] of the output pooled regions. Usually [7, 7]

    Inputs:
    - boxes: [batch, num_boxes, (y1, x1, y2, x2)] in normalized
             coordinates. Possibly padded with zeros if not enough
             boxes to fill the array.
    - image_meta: [batch, (meta data)] Image details. See compose_image_meta()
    - feature_maps: List of feature maps from different levels of the pyramid.
                    Each is [batch, height, width, channels]

    Output:
    Pooled regions in the shape: [batch, num_boxes, pool_height, pool_width, channels].
    The width and height are those specified in the pool_shape in the layer
    constructor.
    """

    def __init__(self, pool_shape, **kwargs):
        super(PyramidROIAlign, self).__init__(**kwargs)
        self.pool_shape = tuple(pool_shape)

    def call(self, inputs):
        # Crop boxes [batch, num_boxes, (y1, x1, y2, x2)] in normalized coords
        boxes = inputs[0]

        # Image meta
        # Holds details about the image. See compose_image_meta()
        image_meta = inputs[1]

        # Feature Maps. List of feature maps from different level of the
        # feature pyramid. Each is [batch, height, width, channels]
        feature_maps = inputs[2:]

        # Assign each ROI to a level in the pyramid based on the ROI area.
        y1, x1, y2, x2 = tf.split(boxes, 4, axis=2)
        h = y2 - y1
        w = x2 - x1
        # Use shape of first image. Images in a batch must have the same size.
        image_shape = parse_image_meta_graph(image_meta)['image_shape'][0]
        # Equation 1 in the Feature Pyramid Networks paper. Account for
        # the fact that our coordinates are normalized here.
        # e.g. a 224x224 ROI (in pixels) maps to P4
        image_area = tf.cast(image_shape[0] * image_shape[1], tf.float32)
        roi_level = log2_graph(tf.sqrt(h * w) / (224.0 / tf.sqrt(image_area)))
        roi_level = tf.minimum(5, tf.maximum(
            2, 4 + tf.cast(tf.round(roi_level), tf.int32)))
        roi_level = tf.squeeze(roi_level, 2)

        # Loop through levels and apply ROI pooling to each. P2 to P5.
        pooled = []
        box_to_level = []
        for i, level in enumerate(range(2, 6)):
            ix = tf.where(tf.equal(roi_level, level))
            level_boxes = tf.gather_nd(boxes, ix)

            # Box indices for crop_and_resize.
            box_indices = tf.cast(ix[:, 0], tf.int32)

            # Keep track of which box is mapped to which level
            box_to_level.append(ix)

            # Stop gradient propagation to ROI proposals
            level_boxes = tf.stop_gradient(level_boxes)
            box_indices = tf.stop_gradient(box_indices)

            # Crop and Resize
            # From Mask R-CNN paper: "We sample four regular locations, so
            # that we can evaluate either max or average pooling. In fact,
            # interpolating only a single value at each bin center (without
            # pooling) is nearly as effective."
            #
            # Here we use the simplified approach of a single value per bin,
            # which is how it's done in tf.crop_and_resize()
            # Result: [batch * num_boxes, pool_height, pool_width, channels]
            pooled.append(tf.image.crop_and_resize(
                feature_maps[i], level_boxes, box_indices, self.pool_shape,
                method="bilinear"))

        # Pack pooled features into one tensor
        pooled = tf.concat(pooled, axis=0)

        # Pack box_to_level mapping into one array and add another
        # column representing the order of pooled boxes
        box_to_level = tf.concat(box_to_level, axis=0)
        box_range = tf.expand_dims(tf.range(tf.shape(box_to_level)[0]), 1)
        box_to_level = tf.concat([tf.cast(box_to_level, tf.int32), box_range],
                                 axis=1)

        # Rearrange pooled features to match the order of the original boxes
        # Sort box_to_level by batch then box index
        # TF doesn't have a way to sort by two columns, so merge them and sort.
        sorting_tensor = box_to_level[:, 0] * 100000 + box_to_level[:, 1]
        ix = tf.nn.top_k(sorting_tensor, k=tf.shape(
            box_to_level)[0]).indices[::-1]
        ix = tf.gather(box_to_level[:, 2], ix)
        pooled = tf.gather(pooled, ix)

        # Re-add the batch dimension
        shape = tf.concat([tf.shape(boxes)[:2], tf.shape(pooled)[1:]], axis=0)
        pooled = tf.reshape(pooled, shape)
        return pooled

    def compute_output_shape(self, input_shape):
        return input_shape[0][:2] + self.pool_shape + (input_shape[2][-1], )
```
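Because ROIs are pooled level by level and then concatenated, the pooled tensor is no longer in the original box order; the box_to_level, sorting_tensor and top_k steps above undo that. The NumPy sketch below reproduces the idea on toy data. `restore_box_order` is a hypothetical helper, `np.argsort` stands in for the `top_k(...)[::-1]` trick (both give ascending order for unique keys), and like the original it assumes fewer than 100000 boxes per image.

```python
import numpy as np


def restore_box_order(box_to_level_ix, pooled):
    """Put per-level pooled ROIs back into their original (batch, box) order.

    box_to_level_ix: int array [N, 2] of (batch_index, box_index), listed in
        the order the ROIs were pooled (all P2 boxes, then P3, ...), which is
        what concatenating the per-level tf.where() results produces.
    pooled: array [N, ...] of pooled features in that same order.
    """
    n = box_to_level_ix.shape[0]
    # Third column: position of each ROI inside the concatenated `pooled` array.
    box_to_level = np.concatenate([box_to_level_ix, np.arange(n)[:, None]], axis=1)
    # Merge (batch, box) into one sort key; assumes < 100000 boxes per image.
    sorting_key = box_to_level[:, 0] * 100000 + box_to_level[:, 1]
    # Ascending order; the TF code gets the same order from top_k(...)[::-1].
    order = np.argsort(sorting_key)
    return pooled[box_to_level[order, 2]]


# One image, four boxes: box 1 went to P2, boxes 0 and 2 to P3, box 3 to P4.
ix = np.array([[0, 1], [0, 0], [0, 2], [0, 3]])
pooled = np.array([1, 0, 2, 3])  # stand-in "features": each entry is its box index
print(restore_box_order(ix, pooled))  # → [0 1 2 3]
```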

2. fpn_classifier_graph(): classification and regression

This function builds the classification and bounding-box regression branch.

Inputs:

- rois: [batch, num_rois, (y1, x1, y2, x2)] Proposal boxes in normalized coordinates.

- feature_maps: List of feature maps from different layers of the pyramid, [P2, P3, P4, P5]. Each has a different resolution.

- image_meta: [batch, (meta data)] Image details. See compose_image_meta(). Its length is 1 + 3 + 3 + 4 + 1 + NUM_CLASSES (image ID, original image shape, image shape, window, scale, active class IDs), i.e. 93 for COCO's 81 classes (80 + background).

- pool_size: The width of the square feature map generated from ROI Pooling.

- num_classes: Number of classes, which determines the depth of the results.

- train_bn: Boolean. Train or freeze Batch Norm layers.

- fc_layers_size: Size of the 2 FC layers.

Returns:

- logits: [batch, num_rois, NUM_CLASSES] classifier logits (before softmax)

- probs: [batch, num_rois, NUM_CLASSES] classifier probabilities

- bbox_deltas: [batch, num_rois, NUM_CLASSES, (dy, dx, log(dh), log(dw))] Deltas to apply to the proposal boxes
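The bbox output holds one set of deltas per class, so an ROI is refined with the deltas of its own (predicted or ground-truth) class. Below is a NumPy sketch of that selection plus the usual (dy, dx, log(dh), log(dw)) decoding, assuming (y1, x1, y2, x2) boxes. The function name is hypothetical, and the repository additionally multiplies the deltas by `config.BBOX_STD_DEV` at detection time, which this sketch omits.

```python
import numpy as np


def refine_with_class_deltas(rois, class_ids, deltas):
    """Apply each ROI's class-specific deltas (sketch, no BBOX_STD_DEV scaling).

    rois:      [N, 4] boxes as (y1, x1, y2, x2)
    class_ids: [N] class chosen per ROI, e.g. np.argmax(probs, axis=1)
    deltas:    [N, NUM_CLASSES, 4] as (dy, dx, log(dh), log(dw))
    """
    # Select the 4 deltas predicted for each ROI's own class.
    d = deltas[np.arange(len(rois)), class_ids]
    h = rois[:, 2] - rois[:, 0]
    w = rois[:, 3] - rois[:, 1]
    center_y = rois[:, 0] + 0.5 * h
    center_x = rois[:, 1] + 0.5 * w
    # Shift the center by a fraction of the box size; scale h and w exponentially.
    center_y = center_y + d[:, 0] * h
    center_x = center_x + d[:, 1] * w
    h = h * np.exp(d[:, 2])
    w = w * np.exp(d[:, 3])
    return np.stack([center_y - 0.5 * h, center_x - 0.5 * w,
                     center_y + 0.5 * h, center_x + 0.5 * w], axis=1)


rois = np.array([[0.0, 0.0, 10.0, 10.0]])
deltas = np.zeros((1, 3, 4))                 # NUM_CLASSES = 3 in this toy example
deltas[0, 1] = [0.1, 0.0, np.log(2.0), 0.0]  # class 1: move down 10% of h, double h
# Result is approximately (y1, x1, y2, x2) = (-4, 0, 16, 10)
print(refine_with_class_deltas(rois, np.array([1]), deltas))
```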

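PyramidROIAlign first assigns each ROI to a pyramid level between P2 and P5 using $k = \lfloor k_0 + \log_2(\sqrt{wh}/224) \rfloor$, where w and h are the box width and height, k is the assigned level, and $k_0$ is the level a 224×224 ROI maps to. It then crops the corresponding region from that level's feature map, samples it with bilinear interpolation, and returns all ROIs resized to the same size.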
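The crop-and-resize step can be illustrated with a NumPy sketch of what `tf.image.crop_and_resize` computes for a single box. It assumes TensorFlow's coordinate mapping for that op (a normalized coordinate y maps to y * (H - 1), so the box edges land on the first and last samples; with a crop size of 1 the box center is sampled) and does not handle boxes that extend outside the map. Because this grid puts samples on the box boundary rather than at bin centers, it only approximates the paper's ROIAlign sampling. The helper names are hypothetical.

```python
import numpy as np


def _sample_grid(start, end, n, size):
    """Sample positions (in pixels) along one axis, as crop_and_resize places them."""
    if n == 1:
        return np.array([0.5 * (start + end) * (size - 1)])
    return start * (size - 1) + np.arange(n) * (end - start) * (size - 1) / (n - 1)


def crop_and_resize_one(feature_map, box, crop_size):
    """Bilinearly sample one normalized box (y1, x1, y2, x2) from [H, W, C].

    Only in-bounds boxes are handled; TF fills out-of-range samples with
    extrapolation_value (0 by default).
    """
    H, W, C = feature_map.shape
    ys = _sample_grid(box[0], box[2], crop_size[0], H)
    xs = _sample_grid(box[1], box[3], crop_size[1], W)
    out = np.empty((len(ys), len(xs), C))
    for i, y in enumerate(ys):
        top, frac_y = int(np.floor(y)), y - np.floor(y)
        bottom = min(top + 1, H - 1)
        for j, x in enumerate(xs):
            left, frac_x = int(np.floor(x)), x - np.floor(x)
            right = min(left + 1, W - 1)
            # Interpolate along x on the two neighbouring rows, then along y.
            upper = feature_map[top, left] * (1 - frac_x) + feature_map[top, right] * frac_x
            lower = feature_map[bottom, left] * (1 - frac_x) + feature_map[bottom, right] * frac_x
            out[i, j] = upper * (1 - frac_y) + lower * frac_y
    return out


fm = np.arange(25, dtype=float).reshape(5, 5, 1)  # value = 5 * row + col
# Rows come out as [0, 0.5, 1], [2.5, 3, 3.5], [5, 5.5, 6]
print(crop_and_resize_one(fm, (0.0, 0.0, 0.25, 0.25), (3, 3))[..., 0])
```

Since the toy feature map is linear in row and column, bilinear interpolation reproduces it exactly, which makes the sampled values easy to verify by hand.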

One detail to note: here PyramidROIAlign produces 7×7 feature maps (POOL_SIZE), whereas in build_fpn_mask_graph() it produces 14×14 maps (MASK_POOL_SIZE).
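The two "FC" layers of this head are implemented as Conv2D. The first one uses a pool_size × pool_size kernel with "valid" padding on a pool_size × pool_size input, so each ROI yields a 1×1×fc_layers_size output, which is exactly what a Dense layer on the flattened 7×7×C input would compute; the following 1×1 conv then acts as a second FC layer, and pool_squeeze removes the two singleton dimensions. A NumPy check of the first equivalence, with bias omitted and sizes shrunk for speed (the variable names are only illustrative):

```python
import numpy as np

rng = np.random.default_rng(0)
# Small sizes for the demo; the model uses 256 FPN channels and 1024 FC units.
pool_size, channels, fc_size = 7, 8, 16

x = rng.standard_normal((pool_size, pool_size, channels))                # one pooled ROI
kernel = rng.standard_normal((pool_size, pool_size, channels, fc_size))  # Conv2D weights

# A "valid" convolution whose kernel covers the whole 7x7 input has a single
# output position: the sum over all (row, col, channel) of input * weight.
conv_out = np.einsum("ijc,ijcf->f", x, kernel)

# The same weights, reshaped, used as a Dense layer on the flattened input.
dense_out = x.reshape(-1) @ kernel.reshape(-1, fc_size)

print(conv_out.shape, np.allclose(conv_out, dense_out))  # → (16,) True
```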

The implementation:

```python
import keras.backend as K
import keras.layers as KL
# BatchNorm (a thin wrapper around KL.BatchNormalization) and PyramidROIAlign
# are defined in mrcnn/model.py.


def fpn_classifier_graph(rois, feature_maps, image_meta,
                         pool_size, num_classes, train_bn=True,
                         fc_layers_size=1024):
    """Builds the computation graph of the feature pyramid network classifier
    and regressor heads.

    rois: [batch, num_rois, (y1, x1, y2, x2)] Proposal boxes in normalized
          coordinates.
    feature_maps: List of feature maps from different layers of the pyramid,
                  [P2, P3, P4, P5]. Each has a different resolution.
    image_meta: [batch, (meta data)] Image details. See compose_image_meta()
    pool_size: The width of the square feature map generated from ROI Pooling.
    num_classes: number of classes, which determines the depth of the results
    train_bn: Boolean. Train or freeze Batch Norm layers
    fc_layers_size: Size of the 2 FC layers

    Returns:
        logits: [batch, num_rois, NUM_CLASSES] classifier logits (before softmax)
        probs: [batch, num_rois, NUM_CLASSES] classifier probabilities
        bbox_deltas: [batch, num_rois, NUM_CLASSES, (dy, dx, log(dh), log(dw))]
                     Deltas to apply to proposal boxes
    """
    # ROI Pooling
    # Shape: [batch, num_rois, POOL_SIZE, POOL_SIZE, channels]
    # PyramidROIAlign assigns each ROI to a level P2..P5 with
    # k = floor(k0 + log2(sqrt(w*h) / 224)), where k0 is the level of a 224x224
    # ROI, crops the matching region from that level with bilinear
    # interpolation, and resizes every ROI to the same size.
    # Here the output is 7x7 (POOL_SIZE); in build_fpn_mask_graph() it is 14x14.
    x = PyramidROIAlign([pool_size, pool_size],
                        name="roi_align_classifier")([rois, image_meta] + feature_maps)
    # Two 1024 FC layers (implemented with Conv2D for consistency)
    # TimeDistributed applies the same layer (shared weights) to every ROI
    # independently along the num_rois axis.
    x = KL.TimeDistributed(KL.Conv2D(fc_layers_size, (pool_size, pool_size), padding="valid"),
                           name="mrcnn_class_conv1")(x)  # conv: 7x7 -> 1x1
    x = KL.TimeDistributed(BatchNorm(), name='mrcnn_class_bn1')(x, training=train_bn)  # BN
    x = KL.Activation('relu')(x)  # ReLU
    x = KL.TimeDistributed(KL.Conv2D(fc_layers_size, (1, 1)),
                           name="mrcnn_class_conv2")(x)  # 1x1 conv
    x = KL.TimeDistributed(BatchNorm(), name='mrcnn_class_bn2')(x, training=train_bn)  # BN
    x = KL.Activation('relu')(x)  # ReLU

    # Drop the two 1x1 spatial dims: [batch, num_rois, fc_layers_size]
    shared = KL.Lambda(lambda x: K.squeeze(K.squeeze(x, 3), 2),
                       name="pool_squeeze")(x)

    # Classifier head
    mrcnn_class_logits = KL.TimeDistributed(KL.Dense(num_classes),
                                            name='mrcnn_class_logits')(shared)  # FC
    mrcnn_probs = KL.TimeDistributed(KL.Activation("softmax"),
                                     name="mrcnn_class")(mrcnn_class_logits)  # softmax

    # BBox head
    # [batch, num_rois, NUM_CLASSES * (dy, dx, log(dh), log(dw))]
    x = KL.TimeDistributed(KL.Dense(num_classes * 4, activation='linear'),
                           name='mrcnn_bbox_fc')(shared)  # FC
    # Reshape to [batch, num_rois, NUM_CLASSES, (dy, dx, log(dh), log(dw))]
    s = K.int_shape(x)
    mrcnn_bbox = KL.Reshape((s[1], num_classes, 4), name="mrcnn_bbox")(x)

    return mrcnn_class_logits, mrcnn_probs, mrcnn_bbox
```

3. build_fpn_mask_graph(): mask prediction (the FCN part to be analyzed in more detail)

Fully Convolutional Network (FCN) Explained

```python
import keras.layers as KL
# BatchNorm and PyramidROIAlign are defined in mrcnn/model.py.


def build_fpn_mask_graph(rois, feature_maps, image_meta,
                         pool_size, num_classes, train_bn=True):
    """Builds the computation graph of the mask head of Feature Pyramid Network.

    rois: [batch, num_rois, (y1, x1, y2, x2)] Proposal boxes in normalized
          coordinates.
    feature_maps: List of feature maps from different layers of the pyramid,
                  [P2, P3, P4, P5]. Each has a different resolution.
    image_meta: [batch, (meta data)] Image details. See compose_image_meta()
    pool_size: The width of the square feature map generated from ROI Pooling.
    num_classes: number of classes, which determines the depth of the results
    train_bn: Boolean. Train or freeze Batch Norm layers

    Returns: Masks [batch, num_rois, 2 * MASK_POOL_SIZE, 2 * MASK_POOL_SIZE, NUM_CLASSES]
             (28x28 with the default MASK_POOL_SIZE = 14, after 2x upsampling)
    """
    # ROI Pooling
    # Shape: [batch, num_rois, MASK_POOL_SIZE, MASK_POOL_SIZE, channels]
    # Note: PyramidROIAlign outputs 14x14 here (MASK_POOL_SIZE), versus 7x7
    # (POOL_SIZE) in fpn_classifier_graph().
    x = PyramidROIAlign([pool_size, pool_size],
                        name="roi_align_mask")([rois, image_meta] + feature_maps)

    # Conv layers: four 3x3 conv + BN + ReLU blocks; spatial size is unchanged
    x = KL.TimeDistributed(KL.Conv2D(256, (3, 3), padding="same"),
                           name="mrcnn_mask_conv1")(x)
    x = KL.TimeDistributed(BatchNorm(), name='mrcnn_mask_bn1')(x, training=train_bn)
    x = KL.Activation('relu')(x)

    x = KL.TimeDistributed(KL.Conv2D(256, (3, 3), padding="same"),
                           name="mrcnn_mask_conv2")(x)
    x = KL.TimeDistributed(BatchNorm(), name='mrcnn_mask_bn2')(x, training=train_bn)
    x = KL.Activation('relu')(x)

    x = KL.TimeDistributed(KL.Conv2D(256, (3, 3), padding="same"),
                           name="mrcnn_mask_conv3")(x)
    x = KL.TimeDistributed(BatchNorm(), name='mrcnn_mask_bn3')(x, training=train_bn)
    x = KL.Activation('relu')(x)

    x = KL.TimeDistributed(KL.Conv2D(256, (3, 3), padding="same"),
                           name="mrcnn_mask_conv4")(x)
    x = KL.TimeDistributed(BatchNorm(), name='mrcnn_mask_bn4')(x, training=train_bn)
    x = KL.Activation('relu')(x)

    # Transposed convolution (deconvolution) upsamples 14x14 -> 28x28
    x = KL.TimeDistributed(KL.Conv2DTranspose(256, (2, 2), strides=2, activation="relu"),
                           name="mrcnn_mask_deconv")(x)
    # 1x1 conv + sigmoid: one mask per class
    x = KL.TimeDistributed(KL.Conv2D(num_classes, (1, 1), strides=1, activation="sigmoid"),
                           name="mrcnn_mask")(x)
    return x
```
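The mask head's upsampling step, `Conv2DTranspose(256, (2, 2), strides=2)` with the default "valid" padding, turns the 14×14 ROI map into 28×28, since output = (input - 1) × stride + kernel = (14 - 1) × 2 + 2 = 28. Because the kernel size equals the stride, every input position writes its own non-overlapping 2×2 output patch. The NumPy sketch below shows that structure; the function name and the [2, 2, C_in, C_out] kernel layout are this sketch's own (Keras stores Conv2DTranspose kernels with the output and input channel axes in the opposite order), and bias and ReLU are omitted.

```python
import numpy as np


def transposed_conv_2x2_stride2(x, kernel):
    """2x2, stride-2 transposed convolution for one ROI (no bias, no activation).

    x: [H, W, C_in]; kernel: [2, 2, C_in, C_out] (this sketch's own layout).
    Kernel size == stride, so output patches don't overlap: input pixel (r, c)
    writes the 2x2 block at rows 2r..2r+1, cols 2c..2c+1 of the output.
    """
    H, W, _ = x.shape
    out = np.zeros((2 * H, 2 * W, kernel.shape[3]))
    for dr in range(2):
        for dc in range(2):
            out[dr::2, dc::2] = x @ kernel[dr, dc]  # [H, W, C_out]
    return out


x = np.random.default_rng(0).standard_normal((14, 14, 4))  # 14x14 ROI features
k = np.random.default_rng(1).standard_normal((2, 2, 4, 3))
y = transposed_conv_2x2_stride2(x, k)
print(y.shape)  # → (28, 28, 3)
```

After this layer, the 1×1 sigmoid convolution yields one 28×28 mask per class, and only the channel belonging to the ROI's class is used, both in the mask loss and at inference.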
