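PyTorch basic operations

Table of contents

- 1 Basic building blocks of machine learning

- 2 Basic PyTorch concepts

- 3 How tensors relate to machine learning

- 4 Tensor creation: programming examples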

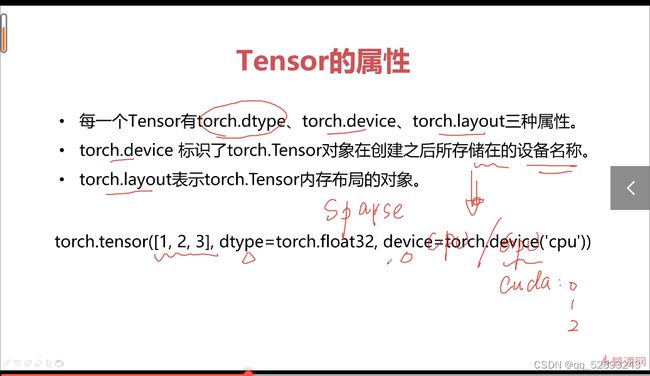

- 5 Tensor attributes

- 6 Sparse tensors in practice

- 7 Tensor arithmetic

- 8 Tensor arithmetic: programming examples

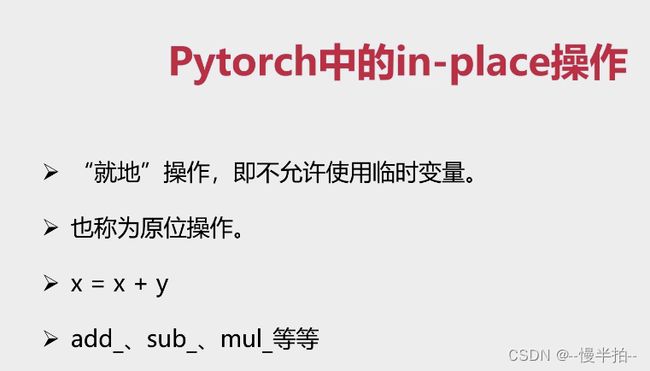

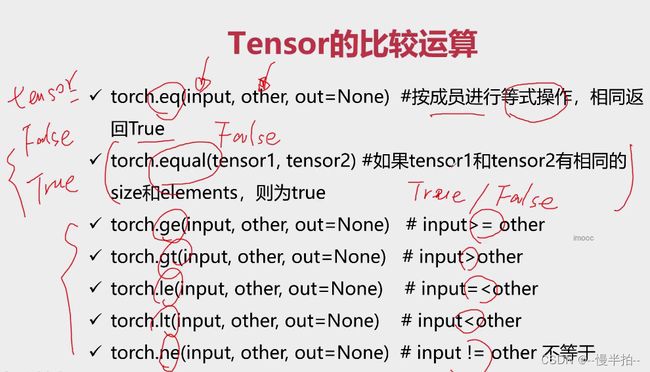

- 9 In-place operations and broadcasting

- 10 Rounding and remainder

- 11 Comparison and sorting

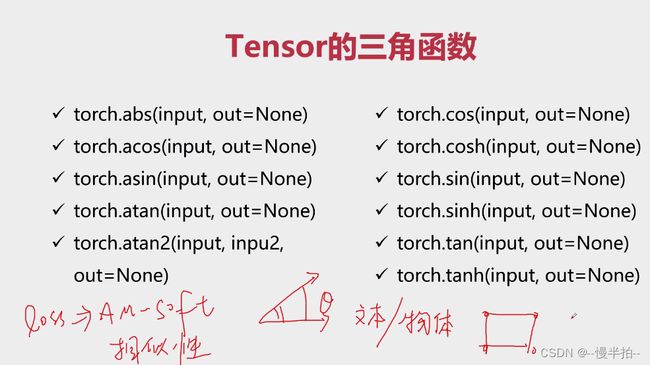

- 12 Trigonometric functions

- 13 Other math functions

- 14 PyTorch and statistics

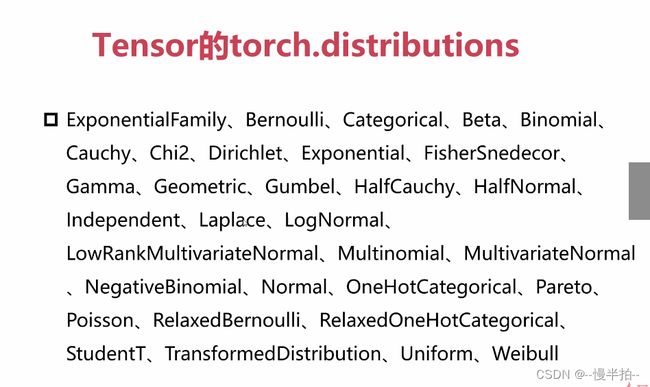

- 15 PyTorch and distribution functions

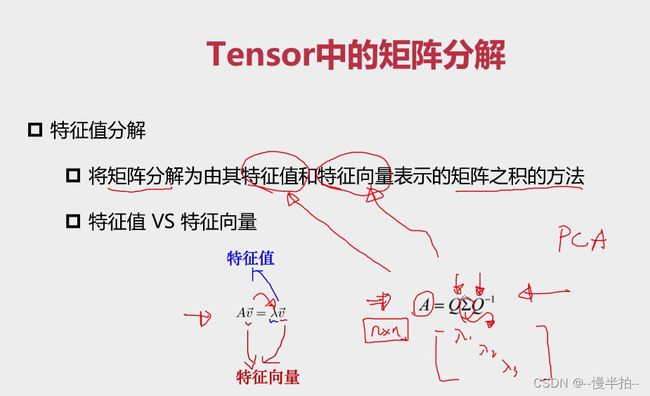

- 16 PyTorch and random sampling

- 17 PyTorch and linear algebra

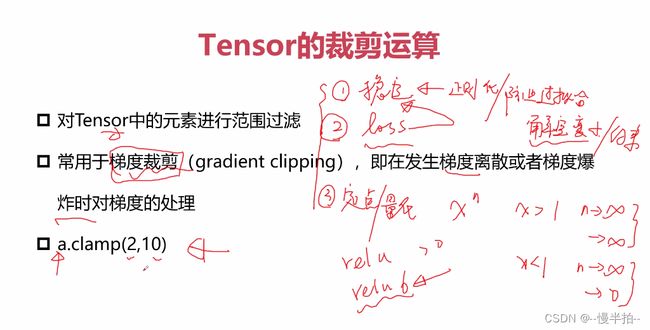

- 18 PyTorch and matrix decomposition: PCA

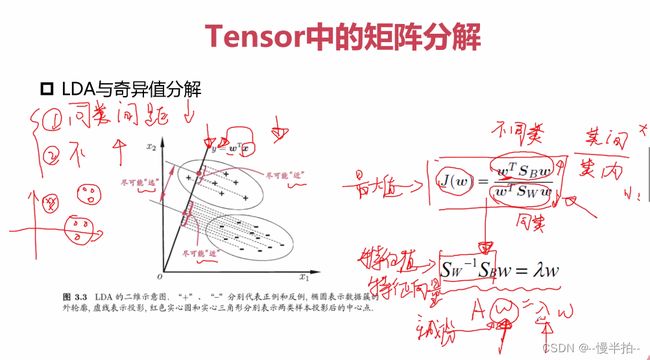

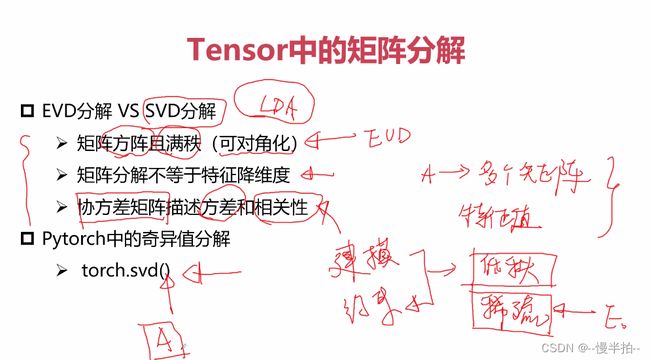

- 19 SVD decomposition: LDA

- 20 PyTorch and tensor clipping

- 21 Tensor indexing and data selection

- 22 Combining and concatenating tensors

1 Basic building blocks of machine learning

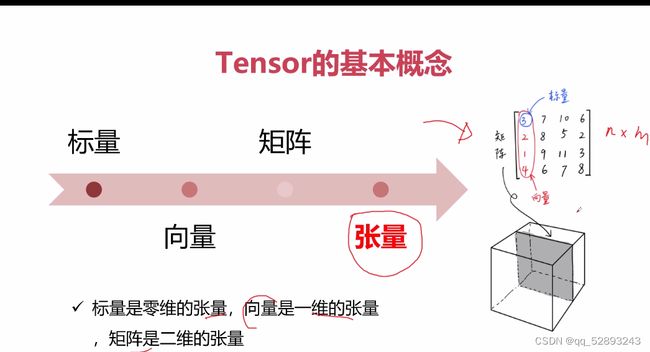

2 Basic PyTorch concepts

3 How tensors relate to machine learning

4 Tensor creation: programming examples

import torch

a = torch.Tensor([[1,2],[3,4]])

print(a)

print(a.type())

a=torch.Tensor(2,3) #shape (2,3); the values are uninitialized memory

print(a)

print(a.type())

'''A few special tensors'''

a=torch.ones(2,2)

print(a)

print(a.type())

a=torch.eye(2,2)

print(a)

print(a.type())

a=torch.zeros(2,2)

print(a)

print(a.type())

b = torch.Tensor(2,3)

b = torch.ones_like(b)

b = torch.zeros_like(b)

print(b)

print(b.type())

'''Random tensors'''

a= torch.rand(2,2)

print(a)

print(a.type())

a = torch.normal(mean=0.0, std=torch.rand(5)) #normal distribution: one scalar mean, one std per element

print(a)

print(a.type())

a = torch.normal(mean=torch.rand(5), std=torch.rand(5)) #normal distribution: one mean and one std per element

print(a)

print(a.type())

a = torch.Tensor(2,2).uniform_(-1,1) #uniform distribution on [-1, 1); the tensor size must be given first

print(a)

print(a.type())

a = torch.arange(0,10,1)

print(a)

print(a.type())

a = torch.linspace(2,10,4) #4 evenly spaced numbers from 2 to 10

print(a)

print(a.type())

a = torch.randperm(10)

print(a)

print(a.type())

################

import numpy as np

a = np.array([[1,2],[3,4]])

print(a)

b = np.eye(3)

print(b)

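5 Tensor attributes

Sparsity (the more zero elements a tensor has, the sparser it is):

① sparsity makes a model simpler

② it reduces memory overhead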
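The notes above only mention sparsity, but every tensor also carries a `dtype`, a `device` and a `layout`. A minimal sketch, assuming a recent PyTorch release with the default float32 dtype, that prints these attributes for a dense tensor and shows that a sparse COO tensor stores only its non-zero entries:

```python
import torch

# Every tensor carries three basic attributes: dtype, device and layout
dense = torch.zeros(4, 4)
print(dense.dtype, dense.device, dense.layout)  # torch.float32 cpu torch.strided

# A sparse COO tensor stores only the non-zero entries (indices + values)
indices = torch.tensor([[0, 1, 2], [0, 1, 2]])
values = torch.tensor([1.0, 2.0, 3.0])
sparse = torch.sparse_coo_tensor(indices, values, (4, 4))
print(sparse.layout)                        # torch.sparse_coo
print(sparse.coalesce().values().numel())   # 3 stored values instead of 16
print(sparse.to_dense())                    # back to an ordinary 4x4 tensor
```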

6 Sparse tensors in practice

import torch

dev = torch.device("cpu")

dev = torch.device("cuda" if torch.cuda.is_available() else "cpu") #fall back to the CPU when no GPU is available

a = torch.tensor([2,2],dtype=torch.float32,device=dev)

print(a)

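i = torch.tensor([[0,1,2],[0,1,2]]) #coordinates of the non-zero values: first row = row indices, second row = column indices

v = torch.tensor([1,2,3]) #the values stored at those coordinates

a= torch.sparse_coo_tensor(i,v,(4,4))

print(a) #a sparse tensor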

i = torch.tensor([[0,1,2],[0,1,2]])

v = torch.tensor([1,2,3])

a= torch.sparse_coo_tensor(i,v,(4,4),

dtype=torch.float32,

device=dev).to_dense() #device decides where the data is allocated: CPU or GPU

print(a) #converted to a dense tensor

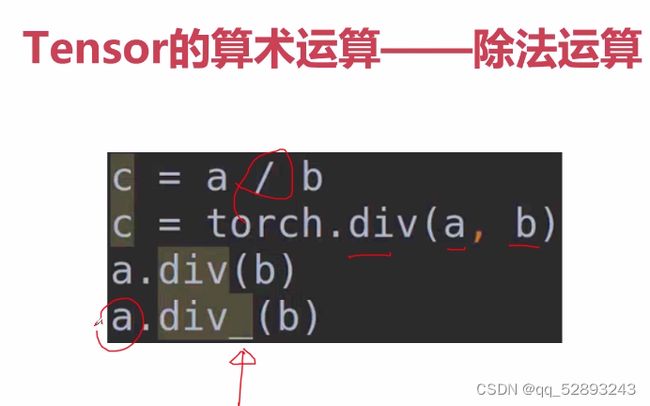

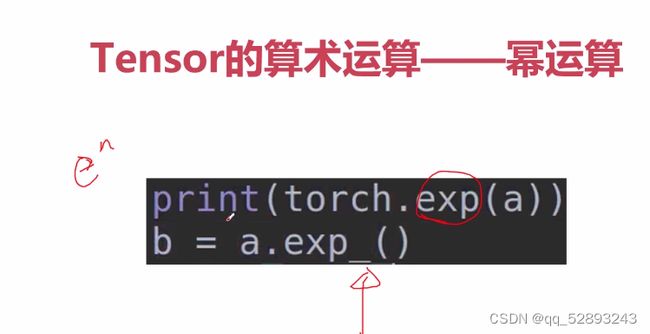

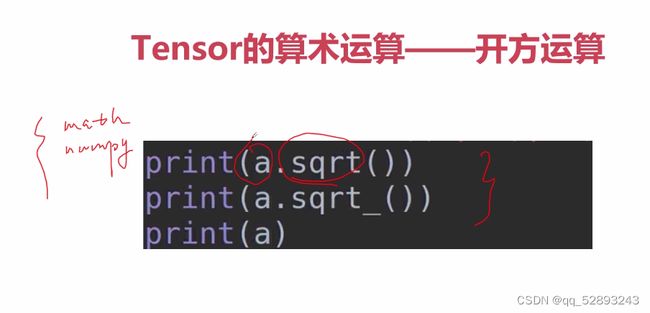

7 Tensor arithmetic

8 Tensor arithmetic: programming examples

import torch

#add

a=torch.rand(2,3)

b=torch.rand(2,3)

print(a)

print(b)

print(a+b)

print(a.add(b))

print(torch.add(a,b))

print(a)

print(a.add_(b)) #in-place: the trailing underscore writes the result back into a

print(a)

#sub

print("==== sub ====")

print(a-b)

print(a.sub(b))

print(torch.sub(a,b))

print(a)

print(a.sub_(b))

print(a)

#mul

print("==== mul ====")

print(a*b)

print(a.mul(b))

print(torch.mul(a,b))

print(a)

print(a.mul_(b))

print(a)

print("==== div ====")

print(a/b)

print(a.div(b))

print(torch.div(a,b))

print(a.div_(b))

print(a)

###matmul

a = torch.ones(2,1)

b = torch.ones(1,2)

print(a @ b)

print(a.matmul(b))

print(torch.matmul(a,b))

print(torch.mm(a,b))

print(a.mm(b))

###higher-dimensional tensors: matmul multiplies the last two dims, the leading dims act as batch dims

a = torch.ones(1,2,3,4)

b = torch.ones(1,2,4,3)

print(a.matmul(b).shape)

##pow

a = torch.tensor([1,2])

print(a)

print(torch.pow(a,3))

print(a.pow(3))

print(a**3)

print(a.pow_(3))

print(a)

#exp

a = torch.tensor([1,2],dtype=torch.float32)

print(torch.exp(a))

print(torch.exp_(a)) #in-place: a now holds exp(a), so the next two lines exponentiate the already-changed a

print(a.exp())

print(a.exp_())

###log

a = torch.tensor([10,2],dtype=torch.float32)

print(torch.log(a))

print(torch.log_(a))

print(a.log())

print(a.log_())

###sqrt

a = torch.tensor([10,2],dtype=torch.float32)

print(torch.sqrt(a))

print(torch.sqrt_(a))

print(a.sqrt())

print(a.sqrt_())

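9 In-place operations and broadcasting

In-place operations (methods ending in an underscore, such as add_) modify the tensor itself instead of returning a new one. Broadcasting lets tensors of different shapes be combined element-wise.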

import torch

a = torch.rand(2,1,1,3)

b = torch.rand(4,2,3)

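# broadcasting: align the shapes from the right; each pair of dims must be equal, or one of them must be 1 (missing dims count as 1)

# e.g. a: 2x1 and b: 1x2 give c: 2x2

# here a: 2x1x1x3 and b: 4x2x3 (treated as 1x4x2x3)

# so c: 2x4x2x3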

c = a+b

print(a)

print(b)

print(c)

print(c.shape)
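A small sketch, assuming PyTorch 1.8 or later for `torch.broadcast_shapes`, that checks the broadcast shape from the example above and shows the main restriction on in-place operations: they write into an existing tensor, so the broadcast result must already have that tensor's shape. The tensors `a`, `b` and `c` are illustrative.

```python
import torch

# Broadcasting: shapes are compared from the right; dims must match or be 1
print(torch.broadcast_shapes((2, 1, 1, 3), (4, 2, 3)))  # torch.Size([2, 4, 2, 3])

a = torch.ones(2, 1)
b = torch.ones(1, 2)
print((a + b).shape)  # torch.Size([2, 2]): out-of-place, a new tensor is created

# In-place ops (trailing underscore) write into the existing tensor,
# so the broadcast result must already have that tensor's shape.
c = torch.zeros(2, 2)
c.add_(a)             # fine: (2, 1) broadcasts to c's shape (2, 2)
try:
    a.add_(b)         # fails: the result would be (2, 2), but a is (2, 1)
except RuntimeError as err:
    print("RuntimeError:", err)
```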

10 Rounding and remainder
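The notes give no example for this section. A minimal sketch of the usual rounding and remainder functions; the selection of functions is an assumption, not taken from the original:

```python
import torch

a = torch.tensor([-2.5, -1.7, 1.2, 2.5, 3.7])

print(torch.floor(a))   # round down:                 [-3., -2., 1., 2., 3.]
print(torch.ceil(a))    # round up:                   [-2., -1., 2., 3., 4.]
print(torch.round(a))   # nearest, ties go to even:   [-2., -2., 1., 2., 4.]
print(torch.trunc(a))   # drop the fractional part:   [-2., -1., 1., 2., 3.]
print(torch.frac(a))    # fractional part, a - trunc(a)

b = torch.tensor([-7, 7])
print(b % 3)                  # Python-style remainder, takes the divisor's sign: [2, 1]
print(torch.remainder(b, 3))  # same as %
print(torch.fmod(b, 3))       # C-style remainder, takes the dividend's sign: [-1, 1]
```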

11 Comparison and sorting

import torch

a = torch.rand(2,3)

b = torch.rand(2,3)

print(a)

print(b)

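print(torch.eq(a,b)) #element-wise equal

print(torch.equal(a,b)) #a single bool: True only if both tensors have the same shape and elements

print(torch.ge(a,b)) #greater than or equal

print(torch.gt(a,b)) #greater than

print(torch.le(a,b)) #less than or equal

print(torch.lt(a,b)) #less than

print(torch.ne(a,b)) #not equal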

####

a = torch.tensor([1,4,4,3,5])

print(torch.sort(a))

print(torch.sort(a,descending=True))

b = torch.tensor([[1,4,4,3,5],

[2,3,1,3,5]])

print(b.shape)

print(torch.sort(b,dim=1,descending=False)) #dim=0 sorts along the size-2 dimension (down each column), dim=1 along the size-5 dimension (within each row)

#####topk

a= torch.tensor([[2,4,3,1,5],

[2,3,5,1,4]])

print(a.shape)

print(torch.topk(a,k=2,dim=1))

print(torch.topk(a,k=1,dim=0))

print(torch.kthvalue(a,k=2,dim=0)) #the 2nd smallest value in each column

print(torch.kthvalue(a,k=2,dim=1)) #the 2nd smallest value in each row

a = torch.rand(2,3)

print(a)

print(a/0)

print(torch.isfinite(a)) #check whether each element is finite

print(torch.isfinite(a/0))

print(torch.isinf(a/0)) #check whether each element is infinite

print(torch.isnan(a))

import numpy as np

b = torch.tensor([1,2,np.nan])

print(torch.isnan(b))

12 Trigonometric functions

import torch

a = torch.zeros(2,3)

b = torch.cos(a)

print(a)

print(b)

13 Other math functions
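No code is given for this section; as an assumption about what it covers, here is a short sketch of a few common element-wise functions:

```python
import torch

a = torch.tensor([-2.0, 0.0, 3.0])

print(torch.abs(a))      # absolute value: [2., 0., 3.]
print(torch.sign(a))     # sign of each element: [-1., 0., 1.]
print(torch.sigmoid(a))  # 1 / (1 + exp(-a)), squashes values into (0, 1); sigmoid(0) = 0.5
```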

14 PyTorch and statistics

import torch

a = torch.rand(2,2)

print(a)

print(torch.mean(a))

print(torch.sum(a))

print(torch.prod(a))

b = torch.rand(2,2)

print(b)

print(torch.mean(b,dim=0))

print(torch.sum(b,dim=0))

print(torch.prod(b,dim=0))

print(torch.argmax(a,dim=0))

print(torch.argmin(a,dim=0))

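print(torch.std(a)) #standard deviation (unbiased: divides by n-1 by default)

print(torch.var(a)) #variance (also unbiased by default)

print(torch.median(a)) #median (for an even count, the lower of the two middle values)

print(torch.mode(a)) #mode (most frequent value), computed along the last dimension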

a = torch.rand(2,2)*10

print(a)

print(torch.histc(a,6,0,0)) #6 bins; min=max=0 means the range is taken from the data's own min and max

a= torch.randint(0,10,[10])

print(a)

print(torch.bincount(a)) #bincount only accepts 1-D tensors of non-negative integers

#e.g. to count how many samples belong to each class

15 PyTorch and distribution functions
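For this section the notes contain only a figure. A minimal sketch using `torch.distributions.Normal`, assuming a standard normal N(0, 1) as the example distribution:

```python
import torch
from torch.distributions import Normal

# A standard normal distribution N(0, 1)
dist = Normal(loc=torch.tensor(0.0), scale=torch.tensor(1.0))

x = torch.tensor([-1.0, 0.0, 1.0])
print(dist.log_prob(x).exp())  # probability density at each x
print(dist.cdf(x))             # cumulative distribution function; cdf(0) = 0.5
print(dist.sample((3,)))       # draw 3 random samples
```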

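16 PyTorch and random sampling

import torch

torch.manual_seed(1) #fix the random seed so the results are reproducible

mean = torch.rand(1,2)

std = torch.rand(1,2)

print(torch.normal(mean,std)) #sample from normal distributions with these means and stds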
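As a short sketch of why `torch.manual_seed` matters, resetting the seed reproduces the same samples. `torch.randint` and `torch.multinomial` are shown as two other sampling helpers; their use here is illustrative:

```python
import torch

mean = torch.zeros(3)
std = torch.ones(3)

torch.manual_seed(1)
first = torch.normal(mean, std)

torch.manual_seed(1)               # reset the generator to the same seed
second = torch.normal(mean, std)

print(torch.equal(first, second))  # True: same seed, same samples

# Other sampling helpers
print(torch.randint(0, 10, (5,)))  # 5 integers drawn uniformly from [0, 10)
weights = torch.tensor([0.1, 0.3, 0.6])
print(torch.multinomial(weights, 4, replacement=True))  # 4 category indices drawn with these weights
```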

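17 PyTorch and linear algebra

The 0-norm of a vector is the number of its non-zero elements (strictly speaking, it is not a true norm).

The 1-norm is the sum of the absolute values of the elements.

Norm constraints (regularization terms) can be used to constrain a model's parameters.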
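The norms above can be computed with `torch.linalg.vector_norm`, assuming a recent PyTorch release. The L1 penalty at the end is a hypothetical illustration of a norm constraint on parameters, with a placeholder standing in for a real data loss:

```python
import torch

v = torch.tensor([3.0, 0.0, -4.0])

print(torch.linalg.vector_norm(v, ord=0))  # 0-"norm": number of non-zero elements = 2
print(torch.linalg.vector_norm(v, ord=1))  # 1-norm: |3| + |0| + |-4| = 7
print(torch.linalg.vector_norm(v, ord=2))  # 2-norm: sqrt(9 + 16) = 5

# Hypothetical norm constraint: an L1 penalty on the parameters w added to a loss
w = torch.randn(5, requires_grad=True)
data_loss = torch.tensor(0.0)  # placeholder for a real loss
loss = data_loss + 0.01 * torch.linalg.vector_norm(w, ord=1)
loss.backward()                # the penalty pushes every weight toward 0
print(w.grad)                  # 0.01 * sign(w)
```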

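18 PyTorch and matrix decomposition: PCA

Matrix decomposition is used when solving and analyzing optimization problems.

LU and QR decompositions are not used much here.

EVD (eigenvalue decomposition) and SVD (singular value decomposition) are used more often and are worth mastering. EVD corresponds to the PCA algorithm (unsupervised); SVD corresponds to the LDA algorithm (which performs dimensionality reduction for feature engineering and, because it learns from class labels, is supervised).

A square full-rank matrix is called non-singular, and a rank-deficient one is called singular. EVD needs a square (diagonalizable) matrix, while SVD works for any matrix, including singular and non-square ones.

The larger the variance along a direction, the more information it carries; lower correlation between features means less redundancy among them.

PCA drops the low-energy (low-variance) directions among the n dimensions and keeps the k highest-energy ones.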
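A minimal PCA sketch following the EVD route described above: center the data, build the covariance matrix, eigendecompose it with `torch.linalg.eigh`, and keep the k eigenvectors with the largest eigenvalues. The toy data (a third feature that nearly copies the first) and `k = 2` are assumptions chosen so that one direction carries almost no energy:

```python
import torch

torch.manual_seed(0)

# Toy data (an assumption): 100 samples, 3 features, the third almost a copy of the first
x = torch.randn(100, 3)
x[:, 2] = x[:, 0] + 0.01 * torch.randn(100)

# 1. center the data
xc = x - x.mean(dim=0)

# 2. covariance matrix (features x features)
cov = xc.T @ xc / (xc.shape[0] - 1)

# 3. eigenvalue decomposition; eigh returns eigenvalues in ascending order
eigvals, eigvecs = torch.linalg.eigh(cov)

# 4. keep the k directions with the largest eigenvalues (the highest "energy")
k = 2
order = torch.argsort(eigvals, descending=True)
components = eigvecs[:, order[:k]]  # shape (3, k)

# 5. project the data onto the principal components
x_reduced = xc @ components         # shape (100, k)
print(eigvals[order])               # the last eigenvalue is close to 0
print(x_reduced.shape)              # torch.Size([100, 2])
```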

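19 SVD decomposition: LDA

S_B is the between-class scatter (covariance) matrix, computed between different classes.

S_W is the within-class scatter (covariance) matrix, computed within each class.

LDA looks for a projection that keeps the within-class scatter small and the between-class scatter large.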
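For two classes, maximizing the ratio of between-class to within-class scatter, (wᵀ S_B w) / (wᵀ S_W w), gives a direction proportional to S_W⁻¹(m1 - m0), where m0 and m1 are the class means. A minimal sketch on hypothetical toy data; note that it solves a linear system rather than calling an SVD, so it illustrates the LDA objective, not the SVD-based route mentioned in the notes:

```python
import torch

torch.manual_seed(0)

# Hypothetical two-class data: class 0 around (0, 0), class 1 around (3, 3)
x0 = torch.randn(50, 2)
x1 = torch.randn(50, 2) + 3.0

m0, m1 = x0.mean(dim=0), x1.mean(dim=0)

# Within-class scatter S_W: scatter of each class around its own mean
sw = (x0 - m0).T @ (x0 - m0) + (x1 - m1).T @ (x1 - m1)

# Between-class scatter S_B (two classes): outer product of the mean difference
d = (m1 - m0).unsqueeze(1)  # shape (2, 1)
sb = d @ d.T

# Fisher direction: w is proportional to S_W^{-1} (m1 - m0)
w = torch.linalg.solve(sw, m1 - m0)
w = w / w.norm()

# Project both classes onto w: a 1-D feature that separates the classes
p0, p1 = x0 @ w, x1 @ w
print(p0.mean(), p1.mean())
```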

20 PyTorch and tensor clipping

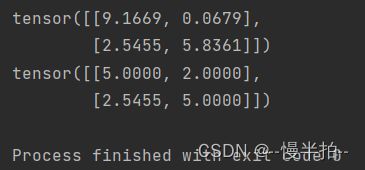

import torch

a = torch.rand(2,2)*10

print(a)

a = a.clamp(2,5) #values below 2 become 2, values above 5 become 5

print(a)

21 Tensor indexing and data selection

import torch

#torch.where

a = torch.rand(4,4)

b = torch.rand(4,4)

print(a)

print(b)

out = torch.where(a>0.5,a,b) #take a where a>0.5, otherwise take b

print(out)

'''

tensor([[0.4527, 0.6981, 0.1994, 0.2436],

[0.7594, 0.1886, 0.5046, 0.9406],

[0.9217, 0.2026, 0.1484, 0.1788],

[0.8292, 0.4543, 0.4000, 0.3469]])

tensor([[0.1317, 0.3827, 0.8856, 0.8149],

[0.1946, 0.1278, 0.1825, 0.3817],

[0.7441, 0.5222, 0.3295, 0.4972],

[0.3863, 0.8676, 0.9039, 0.1099]])

tensor([[0.1317, 0.6981, 0.8856, 0.8149],

[0.7594, 0.1278, 0.5046, 0.9406],

[0.9217, 0.5222, 0.3295, 0.4972],

[0.8292, 0.8676, 0.9039, 0.1099]])

'''

#torch.index_select

a = torch.rand(4,4)

print(a)

out = torch.index_select(a,dim=0,

index=torch.tensor([0,3,2])) #select rows 0, 3 and 2 of a, in that order

print(out)

'''

tensor([[0.1697, 0.1639, 0.6349, 0.1004],

[0.2946, 0.5318, 0.9958, 0.1493],

[0.3166, 0.2766, 0.5476, 0.7023],

[0.5456, 0.4693, 0.3431, 0.0805]])

tensor([[0.1697, 0.1639, 0.6349, 0.1004],

[0.5456, 0.4693, 0.3431, 0.0805],

[0.3166, 0.2766, 0.5476, 0.7023]])

'''

#torch.gather: with dim=0, out[i][j] = a[index[i][j]][j]

a = torch.linspace(1,16,16).view(4,4)

print(a)

out = torch.gather(a,dim=0,

index=torch.tensor([[0,1,1,1],

[0,1,2,2],

[0,1,3,3]]))

print(out)

'''

tensor([[ 1., 2., 3., 4.],

[ 5., 6., 7., 8.],

[ 9., 10., 11., 12.],

[13., 14., 15., 16.]])

tensor([[ 1., 6., 7., 8.],

[ 1., 6., 11., 12.],

[ 1., 6., 15., 16.]])

'''

#torch.masked_select: keep the elements where mask is True, returned as a 1-D tensor

a = torch.linspace(1,16,16).view(4,4)

mask = torch.gt(a,8)

print(a)

print(mask)

out = torch.masked_select(a,mask)

print(out)

'''

tensor([[ 1., 2., 3., 4.],

[ 5., 6., 7., 8.],

[ 9., 10., 11., 12.],

[13., 14., 15., 16.]])

tensor([[False, False, False, False],

[False, False, False, False],

[ True, True, True, True],

[ True, True, True, True]])

tensor([ 9., 10., 11., 12., 13., 14., 15., 16.])

'''

#torch.take: index into a as if it were flattened to 1-D

a = torch.linspace(1,16,16).view(4,4)

b = torch.take(a,index=torch.tensor([0,15,13,10]))

print(b)

'''

tensor([ 1., 16., 14., 11.])

'''

#torch.nonzero

a = torch.tensor([[0,1,2,0],[2,3,0,1]])

out = torch.nonzero(a)

print(out) #the indices of the non-zero elements (a sparse representation)

'''

tensor([[0, 1],

[0, 2],

[1, 0],

[1, 1],

[1, 3]])

'''

22 Combining and concatenating tensors

import torch

a = torch.zeros((2,4))

b = torch.ones((2,4))

out = torch.cat((a,b),dim=0)

print(out)

'''

tensor([[0., 0., 0., 0.],

[0., 0., 0., 0.],

[1., 1., 1., 1.],

[1., 1., 1., 1.]])

'''

out = torch.cat((a,b),dim=1)

print(out)

'''

tensor([[0., 0., 0., 0., 1., 1., 1., 1.],

[0., 0., 0., 0., 1., 1., 1., 1.]])

'''

#torch.stack

a = torch.linspace(1,6,6).view(2,3)

b = torch.linspace(7,12,6).view(2,3)

out = torch.stack((a,b),dim=0)

print(out)

print(out.shape)

'''

tensor([[[ 1., 2., 3.],

[ 4., 5., 6.]],

[[ 7., 8., 9.],

[10., 11., 12.]]])

torch.Size([2, 2, 3])

'''

out = torch.stack((a,b),dim=1)

print(out)

print(out.shape)

'''

tensor([[[ 1., 2., 3.],

[ 7., 8., 9.]],

[[ 4., 5., 6.],

[10., 11., 12.]]])

torch.Size([2, 2, 3])

'''

out = torch.stack((a,b),dim=2)

print(out)

print(out.shape)

'''

tensor([[[ 1., 7.],

[ 2., 8.],

[ 3., 9.]],

[[ 4., 10.],

[ 5., 11.],

[ 6., 12.]]])

torch.Size([2, 3, 2])

'''

cat is used quite often in deep learning.
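A short sketch of how the two functions relate: `stack` adds a new dimension, so it matches `cat` applied to tensors that were first unsqueezed along that dimension, while `cat` only needs the sizes to agree outside the concatenation dimension:

```python
import torch

a = torch.linspace(1, 6, 6).view(2, 3)
b = torch.linspace(7, 12, 6).view(2, 3)

# stack inserts a new dimension; it equals cat on tensors unsqueezed along that dim
stacked = torch.stack((a, b), dim=0)                          # shape (2, 2, 3)
via_cat = torch.cat((a.unsqueeze(0), b.unsqueeze(0)), dim=0)
print(torch.equal(stacked, via_cat))  # True

# cat only requires matching sizes outside the concatenation dim
c = torch.ones(1, 3)
print(torch.cat((a, c), dim=0).shape)  # torch.Size([3, 3]); torch.stack((a, c)) would fail
```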