Machine Learning in Action: the k-Nearest Neighbors Algorithm (with Datasets)


Environment: the code runs in a Jupyter Notebook under Anaconda, with Python 3.6.6.

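Datasets: Improving matches on a dating site with the k-nearest neighbors algorithm (extraction code: vcpy)

Digit recognition system (extraction code: 7aqx)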

1. Introduction

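k-nearest neighbors (kNN) is a basic method for classification and regression. Its input is the feature vector of an instance, which corresponds to a point in feature space; its output is the class of the instance, and there may be more than two classes. kNN assumes a training data set in which the class of every instance is already known. To classify a new instance, it looks at the classes of the k training instances nearest to it and predicts by majority vote or a similar rule. kNN therefore has no explicit learning phase (the training cost is essentially zero). The choice of k, the distance metric, and the classification decision rule are the three basic elements of the method.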

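Advantages: high accuracy, insensitive to outliers, no assumptions about the input data.

Disadvantages: computationally expensive and memory-intensive.

Works with: numeric and nominal values.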

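The k-nearest neighbors algorithm (kNN) works as follows:

We have a collection of sample data, also called the training set, in which every item is labeled, so we know which class each item belongs to. When new, unlabeled data arrives, each of its features is compared with the corresponding features of the samples, and the algorithm extracts the class labels of the most similar samples (the nearest neighbors). In general only the k most similar samples are considered, which is where the k in k-nearest neighbors comes from; k is usually an integer no greater than 20. Finally, the class that occurs most often among those k samples becomes the class of the new data.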
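The final voting step can be sketched on its own. This is a minimal illustration, not the code used later in this post: `majority_vote` is a hypothetical helper name, and the labels passed to it are made up.

```python
from collections import Counter

def majority_vote(neighbor_labels):
    """Hypothetical helper: return the label that occurs most often among the k neighbors."""
    counts = Counter(neighbor_labels)
    # most_common(1) returns a one-element list holding the (label, count) pair with the highest count
    label, _ = counts.most_common(1)[0]
    return label

# Labels of the k = 3 nearest neighbors of some new point (made-up values)
print(majority_vote(['A', 'B', 'A']))  # A
```

How ties are broken is a design choice this sketch leaves open; choosing an odd k avoids ties when there are only two classes.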

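General workflow of the k-nearest neighbors algorithm

- (1) Collect data: any method can be used.

- (2) Prepare data: numeric values are needed for the distance calculation, preferably in a structured data format.

- (3) Analyze data: any method can be used.

- (4) Train the algorithm: this step does not apply to kNN.

- (5) Test the algorithm: compute the error rate.

- (6) Use the algorithm: first supply the sample data and a structured output format, then run kNN to decide which class each input belongs to, and finally carry out any follow-up processing on the computed classes.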

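The classify0 function in section 1.2 uses kNN to assign each data point to a class. Its pseudocode is:

For every point in the data set whose class is unknown, do the following:

- (1) Compute the distance between each point in the labeled data set and the current point;

- (2) Sort the distances in ascending order;

- (3) Take the k points closest to the current point;

- (4) Count how often each class occurs among those k points;

- (5) Return the most frequent class among those k points as the predicted class of the current point.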
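Step (1) needs a concrete distance. The implementation in section 1.2 uses the Euclidean distance, which for two points can be sketched as below. `euclidean_distance` is a hypothetical helper name and the points are made up for illustration.

```python
import math

def euclidean_distance(a, b):
    """Hypothetical helper: square root of the summed squared coordinate differences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# (3 - 0)^2 + (4 - 0)^2 = 25, and sqrt(25) = 5
print(euclidean_distance((0, 0), (3, 4)))  # 5.0
```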

1.1 Preparation: importing data with Python

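# The star import provides tile, zeros, shape and array, which later cells call without a prefix
from numpy import *
import numpy as np
import operator

def createDataSet():
    # Four 2-D training points and their class labels
    group = np.array([[1.0,1.1],[1.0,1.0],[0,0],[0,0.1]])
    labels = ['A','A','B','B']
    return group,labels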

group,labels = createDataSet()

group

array([[1. , 1.1],

[1. , 1. ],

[0. , 0. ],

[0. , 0.1]])

labels

['A', 'A', 'B', 'B']

1.2 Implementing the kNN algorithm

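"""
Takes four arguments:
inX is the input vector to classify, dataSet is the training sample matrix, labels is the label vector, and k is the number of nearest neighbors to use.
Note: labels must have as many elements as dataSet has rows.
The function uses the Euclidean distance.
"""
def classify0(inX, dataSet, labels, k):
    # 1. Compute the distances
    dataSetSize = dataSet.shape[0]
    # Repeat inX so it has the same shape as the training matrix, then subtract the training samples
    diffMat = tile(inX,(dataSetSize,1)) - dataSet
    sqDiffMat = diffMat**2
    sqDistances = sqDiffMat.sum(axis=1)
    distance = sqDistances**0.5
    # Indices that sort the distances in ascending order (nearest first)
    sortedDistIndicies = distance.argsort()
    # 2. Pick the k nearest points
    classCount = {}
    for i in range(k):
        voteIlabel = labels[sortedDistIndicies[i]]
        # dict.get(key, default) returns the value for key, or default (0 here) if key is missing; this counts the votes per label
        classCount[voteIlabel] = classCount.get(voteIlabel,0)+1
    # 3. Sort and return the most frequent label
    # dict.items() returns an iterable view of (key, value) pairs
    sortedClassCount = sorted(classCount.items(),key=operator.itemgetter(1),reverse=True)
    # Return the label with the highest count
    return sortedClassCount[0][0]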

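Syntax: sorted(iterable,*,key=None,reverse=False)

- key=operator.itemgetter(1) sorts by the element at index 1 of each (label, count) pair, that is, by the count. reverse defaults to False (ascending); True is used here to sort by frequency from highest to lowest.

- sort is a method of list, whereas sorted works on any iterable.

- sort sorts the list in place, whereas sorted returns a new list.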

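Syntax: operator.itemgetter(item) # returns a callable that fetches the element at the given index

- Note: operator.itemgetter does not return a value; it defines a function, and the value is obtained only when that function is applied to an object.

- Example: import operator

- a=[1,2,3]

- b=operator.itemgetter(1)

- b(a)

- Output: 2 <the element at index 1>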
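The notes on sorted, sort and itemgetter can be checked with a small self-contained example. The vote counts below are made up; only standard-library calls are used.

```python
import operator

# Made-up vote counts in the same shape as classCount in classify0
class_count = {'A': 2, 'B': 5, 'C': 1}

# itemgetter(1) picks the count out of each (label, count) pair
by_count = sorted(class_count.items(), key=operator.itemgetter(1), reverse=True)
print(by_count)        # [('B', 5), ('A', 2), ('C', 1)]
print(by_count[0][0])  # B

# sorted() returns a new list and leaves its input alone; list.sort() works in place
nums = [3, 1, 2]
ordered = sorted(nums)
print(nums, ordered)   # [3, 1, 2] [1, 2, 3]
nums.sort()
print(nums)            # [1, 2, 3]
```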

classify0([0,0],group,labels,2)

classify0([1,1.2],group,labels,2)

'A'
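classify0 builds the difference matrix with tile. As an alternative sketch, NumPy broadcasting gives the same distances without copying the input vector, and collections.Counter can do the vote counting. `knn_classify` is a hypothetical name, not part of the original code, and k=3 is chosen here only to avoid a tie.

```python
import numpy as np
from collections import Counter

def knn_classify(query, samples, labels, k):
    """Hypothetical broadcasting-based variant of classify0 (Euclidean distance, majority vote)."""
    samples = np.asarray(samples, dtype=float)
    query = np.asarray(query, dtype=float)
    # Broadcasting subtracts the query from every row, so np.tile is not needed
    distances = np.sqrt(((samples - query) ** 2).sum(axis=1))
    # Indices of the k smallest distances
    nearest = np.argsort(distances)[:k]
    votes = Counter(labels[i] for i in nearest)
    return votes.most_common(1)[0][0]

# Same toy data as createDataSet
group = np.array([[1.0, 1.1], [1.0, 1.0], [0, 0], [0, 0.1]])
labels = ['A', 'A', 'B', 'B']
print(knn_classify([0, 0], group, labels, 3))    # B
print(knn_classify([1, 1.2], group, labels, 3))  # A
```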

2. Example: improving matches on a dating site with kNN

2.1 Preparing the data: parsing it from a text file

"""
Preparing the data: parse it from a text file.
Input: the file name (path) as a string.
Returns: the training sample matrix returnMat and the class label vector classLabelVector.
"""
def file2matrix(filename):
    # Read all lines; the with block closes the file afterwards
    with open(filename) as fr:
        arrayOfLines = fr.readlines()
    numbersOfLines = len(arrayOfLines)
    returnMat = zeros((numbersOfLines,3))
    classLabelVector = []
    index = 0
    for line in arrayOfLines:
        # str.strip([chars]) returns a copy with the given leading and trailing characters removed (whitespace by default)
        line = line.strip()
        # str.split(sep, maxsplit) splits on sep; the fields in this file are separated by tabs
        listFromLine = line.split('\t')
        # The first three fields are the features
        returnMat[index,:] = listFromLine[0:3]
        # The last field is the label; map it to an integer class
        if listFromLine[-1] == 'didntLike':
            classLabelVector.append(1)
        elif listFromLine[-1] == 'smallDoses':
            classLabelVector.append(2)
        elif listFromLine[-1] == 'largeDoses':
            classLabelVector.append(3)
        # classLabelVector.append(listFromLine[-1])
        index += 1
    return returnMat,classLabelVector

datingDataMat,datelabels = file2matrix('datingTestSet2.txt')

datingDataMat

array([[4.0920000e+04, 8.3269760e+00, 9.5395200e-01],

[1.4488000e+04, 7.1534690e+00, 1.6739040e+00],

[2.6052000e+04, 1.4418710e+00, 8.0512400e-01],

...,

[2.6575000e+04, 1.0650102e+01, 8.6662700e-01],

[4.8111000e+04, 9.1345280e+00, 7.2804500e-01],

[4.3757000e+04, 7.8826010e+00, 1.3324460e+00]])

datelabels[:20]

[3, 2, 1, 1, 1, 1, 3, 3, 1, 3, 1, 1, 2, 1, 1, 1, 1, 1, 2, 3]

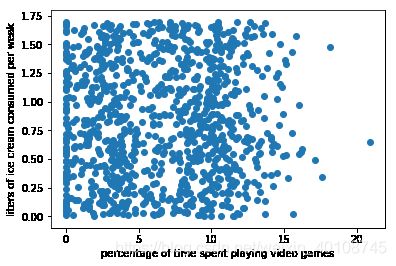

2.2 Analyzing the data: creating scatter plots with Matplotlib

import matplotlib.pyplot as plt

fig = plt.figure()

ax = fig.add_subplot(111)

plt.xlabel('percentage of time spent playing video games')

plt.ylabel('liters of ice cream consumed per week')

ax.scatter(datingDataMat[:,1],datingDataMat[:,2])

plt.show()

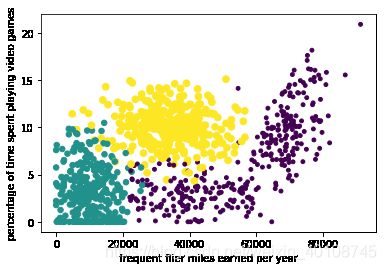

import matplotlib.pyplot as plt

fig = plt.figure()

ax = fig.add_subplot(111)

plt.xlabel('percentage of time spent playing video games')

plt.ylabel('liters of ice cream consumed per week')

ax.scatter(datingDataMat[:,1],datingDataMat[:,2],15.0*array(datelabels),15.0*array(datelabels))

plt.show()

import matplotlib.pyplot as plt

fig = plt.figure()

ax = fig.add_subplot(111)

plt.xlabel('frequent flier miles earned per year')

plt.ylabel('percentage of time spent playing video games')

ax.scatter(datingDataMat[:,0],datingDataMat[:,1],15.0*array(datelabels),15.0*array(datelabels))

plt.show()

2.3 Preparing the data: normalizing values

Why normalize: it removes the effect of attributes having different orders of magnitude.
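To see why the magnitudes matter, the sketch below takes the first two rows of datingDataMat as printed above and measures how much each feature contributes to their squared Euclidean distance. The variable names are illustrative.

```python
import numpy as np

# First two rows of datingDataMat as printed above: miles, game time %, ice cream liters
a = np.array([40920.0, 8.326976, 0.953952])
b = np.array([14488.0, 7.153469, 1.673904])

squared = (a - b) ** 2
# Fraction of the squared distance contributed by each feature
share = squared / squared.sum()
print(share)
```

The first entry of share is almost exactly 1: without normalization the frequent flier miles alone decide which samples count as neighbors, and the other two features are effectively ignored.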

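"""
Input: the data set.
Returns: the normalized data set, the per-column range (maximum minus minimum), and the per-column minimum. classifyPerson later uses the last two to normalize new input.
"""
def autoNorm(dataSet):
    # Column-wise minimum and maximum
    minVals = dataSet.min(0)
    maxVals = dataSet.max(0)
    ranges = maxVals - minVals
    m = dataSet.shape[0]
    # newValue = (oldValue - min) / (max - min), applied element-wise
    normDataSet = dataSet - tile(minVals,(m,1))
    normDataSet = normDataSet/tile(ranges,(m,1))
    return normDataSet,ranges,minVals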

autoNorm(datingDataMat)

(array([[0.44832535, 0.39805139, 0.56233353],

[0.15873259, 0.34195467, 0.98724416],

[0.28542943, 0.06892523, 0.47449629],

...,

[0.29115949, 0.50910294, 0.51079493],

[0.52711097, 0.43665451, 0.4290048 ],

[0.47940793, 0.3768091 , 0.78571804]]),

array([9.1273000e+04, 2.0919349e+01, 1.6943610e+00]),

array([0. , 0. , 0.001156]))
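The returned range and minimum matter because a new sample has to be scaled with the training statistics, not its own. A minimal sketch on made-up data, using broadcasting instead of tile; `min_max_normalize` is a hypothetical name.

```python
import numpy as np

def min_max_normalize(data):
    """Hypothetical broadcasting-based variant of autoNorm."""
    data = np.asarray(data, dtype=float)
    min_vals = data.min(axis=0)
    ranges = data.max(axis=0) - min_vals
    # Caveat: a constant column has range 0 and would cause a division by zero
    return (data - min_vals) / ranges, ranges, min_vals

# Made-up training data with two features on different scales
toy = np.array([[10.0, 0.5], [20.0, 1.5], [40.0, 1.0]])
norm, ranges, min_vals = min_max_normalize(toy)
print(norm)  # every column now lies in [0, 1]

# A new sample is scaled with the training minimum and range
new_sample = np.array([25.0, 1.0])
scaled = (new_sample - min_vals) / ranges
print(scaled)
```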

2.4 Testing the algorithm: validating the classifier as a complete program

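"""
Testing the algorithm: check how accurate the classifier is.
Prints every prediction and the overall classification error rate.
"""
def datingClassTest():
    hoRate = 0.1 # fraction of the data held out as the test set
    datingDataMat,datelabels = file2matrix('datingTestSet2.txt')
    normMat, ranges,minVals = autoNorm(datingDataMat)
    m = normMat.shape[0]
    numTestVecs = int(m*hoRate)
    errorCount = 0
    for i in range(numTestVecs):
        # The first numTestVecs rows are the test set; the remaining rows are the training set
        classifierResult = classify0(normMat[i,:],normMat[numTestVecs:m,:],datelabels[numTestVecs:m],1)
        print('the classifierResult is %d,the real answer is %d' %(classifierResult, datelabels[i]))
        if (classifierResult != datelabels[i]):
            errorCount += 1
    # Report the error rate once, after all test samples have been classified
    print("the total error rate is: %f " %(errorCount/numTestVecs))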

datingClassTest()

the classifierResult is 1,the real answer is 1

the classifierResult is 3,the real answer is 3

the classifierResult is 2,the real answer is 3

the classifierResult is 1,the real answer is 1

the classifierResult is 2,the real answer is 2

...

the classifierResult is 1,the real answer is 1

the classifierResult is 3,the real answer is 3

the classifierResult is 3,the real answer is 3

the classifierResult is 2,the real answer is 2

the classifierResult is 2,the real answer is 1

the classifierResult is 1,the real answer is 1

the total error rate is: 0.080000
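datingClassTest takes the first 10% of the rows as the test set, which gives a fair estimate only if the rows of the file are in no particular order. That ordering is not stated here, so a shuffled hold-out split is one way to stay on the safe side. The sketch below is an assumption-laden illustration: `holdout_split` is a hypothetical helper and the fixed seed is there only to make the split reproducible.

```python
import numpy as np

def holdout_split(n_samples, test_ratio=0.1, seed=0):
    """Hypothetical helper: shuffle the row indices and return (test_idx, train_idx)."""
    rng = np.random.RandomState(seed)
    order = rng.permutation(n_samples)
    n_test = int(n_samples * test_ratio)
    return order[:n_test], order[n_test:]

test_idx, train_idx = holdout_split(50, test_ratio=0.1)
print(len(test_idx), len(train_idx))  # 5 45
```

The index arrays can then select rows, for example normMat[train_idx] for the training matrix and [datelabels[i] for i in train_idx] for the matching labels.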

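"""
Using the algorithm: build a complete, usable system.
Enter a person's details and the program predicts how much she will like that person.
"""
def classifyPerson():
    resultList = ['not at all','somewhat charming','very charming']
    percentTats = float(input('percentage of time spent playing video games: '))
    ffMiles = float(input('frequent flier miles earned per year: '))
    iceCream = float(input('liters of ice cream consumed per week: '))
    datingDataMat,datelabels = file2matrix('datingTestSet2.txt')
    normMat,ranges,minVals = autoNorm(datingDataMat)
    # Same feature order as the columns of the data file
    intArr = array([ffMiles,percentTats,iceCream])
    # Normalize the new sample with the training minimum and range before classifying
    classifierResult = classify0((intArr-minVals)/ranges,normMat,datelabels,3)
    print('You will probably like this person:',resultList[classifierResult-1])

classifyPerson()

percentage of time spent playing video games: 10

frequent flier miles earned per year: 12345

liters of ice cream consumed per week: 1

You will probably like this person: somewhat charming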

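3. Example: a digit recognition system

3.1 Collecting the data

The digits are provided as text files, each 32×32 characters in size.

3.2 Preparing the data: convert each image into a one-dimensional vector that the classifier above can use, from 32×32 to 1×1024

To reuse the classifier from the previous two examples, each image has to be formatted as a vector. We convert the 32×32 binary image matrix into a 1×1024 vector, so that the classifier from the previous sections can process the digit images.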
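The 32×32 to 1×1024 conversion is a row-major flattening: pixel (i, j) ends up at position 32*i + j. A minimal sketch on an in-memory, made-up image, using reshape rather than an explicit double loop; `lines_to_vector` is a hypothetical name.

```python
import numpy as np

def lines_to_vector(lines, size=32):
    """Hypothetical helper: turn `size` text lines of 0/1 characters into a 1 x size*size vector."""
    rows = [[int(ch) for ch in line[:size]] for line in lines[:size]]
    # reshape flattens row by row, so pixel (i, j) lands at index size*i + j
    return np.array(rows, dtype=float).reshape(1, size * size)

# Made-up image: the left half of every row is 0, the right half is 1
lines = ['0' * 16 + '1' * 16 for _ in range(32)]
vec = lines_to_vector(lines)
print(vec.shape)                  # (1, 1024)
print(vec[0, 15], vec[0, 16])     # 0.0 1.0
```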

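def image2Vector(filename):
    returnVect = zeros((1,1024))
    # The with block closes the file once all 32 lines have been read
    with open(filename) as fr:
        for i in range(32):
            linestr = fr.readline()
            for j in range(32):
                # Pixel (i, j) goes to position 32*i+j of the flat vector
                returnVect[0,32*i+j] = int(linestr[j])
    return returnVect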

testVector = image2Vector('testDigits/0_12.txt')

testVector[0,0:31]

array([0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.,

1., 1., 1., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.])

testVector[0,32:63]

array([0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 1., 1., 1.,

1., 1., 1., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.])

from os import listdir

def handwritingClassTest():
    # 1. List the contents of the training directory
    hwlabels = []
    trainFileList = listdir('trainingDigits')
    m = len(trainFileList)
    trainingMat = zeros((m,1024))
    # 2. Parse the class digit from each file name and load the image as one row
    for i in range(m):
        fileNameStr = trainFileList[i]
        fileStr = fileNameStr.split('.')[0]
        classNumStr = int(fileStr.split('_')[0])
        hwlabels.append(classNumStr)
        trainingMat[i,:] = image2Vector('trainingDigits/%s' %(fileNameStr))
    # 3. Classify every test image and count the errors
    testFileList = listdir('testDigits')
    m_test = len(testFileList)
    error_count = 0
    for i in range(m_test):
        fileNameStr = testFileList[i]
        fileStr = fileNameStr.split('.')[0]
        classNumStr = int(fileStr.split('_')[0])
        vectorUnderTest = image2Vector('testDigits/%s' %(fileNameStr))
        classfierResult = classify0(vectorUnderTest,trainingMat,hwlabels,3)
        print('the classfierResult is %d,the real answer is %d' %(classfierResult,classNumStr))
        if (classfierResult!=classNumStr):
            error_count += 1
    print('the total number of error is %d' % error_count)
    print('the error rate is %f' %(error_count/m_test))

handwritingClassTest()

the classfierResult is 0,the real answer is 0

the classfierResult is 0,the real answer is 0

the classfierResult is 0,the real answer is 0

the classfierResult is 0,the real answer is 0

...

the classfierResult is 9,the real answer is 9

the classfierResult is 9,the real answer is 9

the classfierResult is 9,the real answer is 9

the total number of error is 10

the error rate is 0.010571
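handwritingClassTest reads the true label from each file name. The sketch below isolates that step, assuming the naming scheme suggested by the path testDigits/0_12.txt used earlier, that is, the digit, an underscore, an index, then .txt. `label_from_filename` is a hypothetical helper.

```python
def label_from_filename(file_name):
    """Hypothetical helper: '0_12.txt' -> 0, assuming names look like <digit>_<index>.txt."""
    stem = file_name.split('.')[0]    # '0_12'
    return int(stem.split('_')[0])    # the part before the underscore is the digit

print(label_from_filename('0_12.txt'))   # 0
print(label_from_filename('9_3.txt'))    # 9
```

Files that do not follow this pattern, such as hidden files that listdir may return, would raise a ValueError here, so filtering the directory listing first may be worthwhile.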