ts12_Multi-step Forecast_sktime_bold_Linear Regress_sMAPE MASE_warn_plotly acf vlines_season_summary

In this chapter, you will explore different machine learning (ML) algorithms for time series forecasting. Machine learning algorithms can be grouped into supervised learning, unsupervised learning, and reinforcement learning. This chapter will focus on supervised machine learning. Preparing time series for supervised machine learning is an important phase that you will be introduced to in the first recipe.

Furthermore, you will explore two machine learning libraries: scikit-Learn and sktime. scikit-learn is a popular machine learning library in Python that offers a wide range of algorithms for supervised and unsupervised learning and a plethora/ ˈpleθərə /过多;过剩 of tools for data preprocessing, model evaluation, and selection. Keep in mind that scikit-learn, or sklearn , is a generic ML library and not specific to time series data. On the other hand, the sktime library, from the Alan Turing Institute, is a dedicated machine learning library for time series data.

In this chapter, we will cover the following recipes:

- • Preparing time series data for supervised learning

- • One-step forecasting using linear regression models with scikit-learn

- • Multi-step forecasting using linear regression models with scikit-learn

- • Forecasting using non-linear models with sktime

- • Optimizing a forecasting model with hyperparameter tuning

- • Forecasting with exogenous variables and ensemble learning

Technical requirements

You will be working with the sktime library, described as "a unified framework for machine learning with time series". Behind the scenes, sktime is a wrapper to other popular ML and time series libraries, including scikit-learn. It is recommended to create a new virtual environment for Python so that you can install all the required dependencies without any conflicts or issues with your current environment. ts11_pmdarima_edgecolor_bokeh plotly_Prophet_Fourier_VAR_endog exog_Granger causality_IRF_Garch vola_LIQING LIN的博客-CSDN博客

The following instructions will show how to create a virtual environment using conda . You can call the environment any name you like. For the following example, we will name the environment sktime :

conda create -n sktime python=3.9 -yconda activate sktime

conda install -c conda-forge sktime-all-extrasTo make the new sktime environment visible within Jupyter, you can run the following code:

pip install ipykernel![]()

python -m ipykernel install --name sktime --display-name "sktime"then 'Run As Administrator' to launch Anaconda Navigator, to install notebook

![]()

pip install jupyter notebookconda install -c pyviz hvplot![]()

pip install plotlyYou will be working with three CSV fles in this chapter: Monthly Air Passenger, Monthly Energy Consumption, and Daily Temperature data. Start by importing the common libraries:

import pandas as pd

import matplotlib.pyplot as plt

import numpy as np

from pathlib import PathLoad air_passenger.csv , energy_consumption.csv , and daily_weather.csv as pandas DataFrames:

path = 'https://raw.githubusercontent.com/PacktPublishing/Time-Series-Analysis-with-Python-Cookbook/main/datasets/Ch12/'

daily_temp = pd.read_csv(path+'daily_weather.csv',

index_col='DateTime',

parse_dates=True

)

daily_tempdaily_temp.columns=['y'] # rename 'Temperature' to 'y'

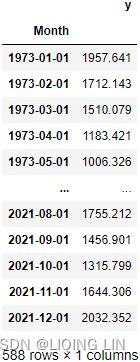

daily_tempenergy = pd.read_csv(path+'energy_consumption.csv',

index_col='Month',

parse_dates=True

)

energy.columns = ['y'] # rename 'Total Energy Consumed by the Residential Sector' to 'y'

energyair = pd.read_csv(path+'air_passenger.csv',

index_col='date',

parse_dates=True

)

air.columns = ['y'] # rename 'passengers' to 'y'

air ![]()

Then, add the proper frequency for each DataFrame:

daily_temp.index.freq = 'D'

energy.index.freq = 'MS'

air.index.freq = 'M'

air.index

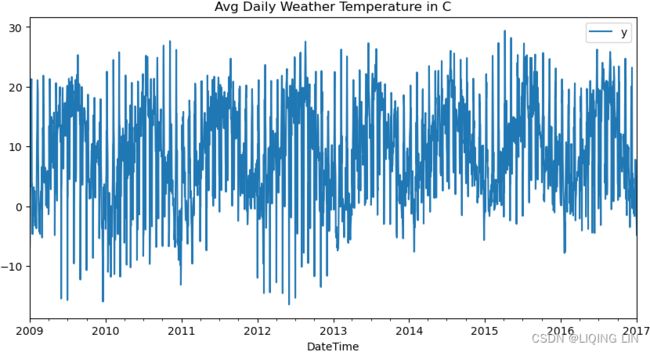

You can plot the three DataFrames to gain an understanding of how they differ:

daily_temp.plot( title='Avg Daily Weather Temperature in C',

figsize=(10,5)

)

energy.plot( title='Monthly Energy Consumption',

figsize=(10,5)

)

air.plot( title='Monthly Passengers',

figsize=(10,5)

)

plt.show()plotly_ts( ts_df=daily_temp,

title='Avg Daily Weather Temperature in C',

x_title='Date', y_title='Temperature in C'

)

Avg Daily Weather Temperature in C seem no trend, but exists repeating seasonality (every summer)

plotly_ts( ts_df=energy,

title='Total Energy Consumed by the Residential Sector',

x_title='Month', y_title='Total Energy Consumed'

) The time series plot for the energy DataFrame shows a positive (upward) trend and a repeating seasonality (every winter)![]() . The energy_consumption data is recorded monthly from January 1973 to December 2021 (588 months). The seasonal magnitudes and variations over time seem to be unsteady,

. The energy_consumption data is recorded monthly from January 1973 to December 2021 (588 months). The seasonal magnitudes and variations over time seem to be unsteady,![]() indicating an multiple nature. Having a seasonal decomposition that specifes the level, trend, and season of an additive model will reflect this as well. For more insight on seasonal decomposition, please review the Decomposing time series data recipe in https://blog.csdn.net/Linli522362242/article/details/127737895, Exploratory Data Analysis and Diagnosis.

indicating an multiple nature. Having a seasonal decomposition that specifes the level, trend, and season of an additive model will reflect this as well. For more insight on seasonal decomposition, please review the Decomposing time series data recipe in https://blog.csdn.net/Linli522362242/article/details/127737895, Exploratory Data Analysis and Diagnosis.

#############################

import plotly.graph_objects as go

def plotly_ts( ts_df, title, x_title='', y_title='' ):

#hvplot.extension('plotly') # 'matplotlib' # 'bokeh' # holoviews

# air.index[0] ==> Timestamp('1949-01-31 00:00:00', freq='M')

start = pd.DatetimeIndex( ts_df.index ).year[0]

end = pd.DatetimeIndex( ts_df.index ).year[-1]

# https://stackoverflow.com/questions/59953431/how-to-change-plotly-figure-size

layout=go.Layout(width=1000, height=500,

title=f'{title} {start}-{end}',

title_x=0.5, title_y=0.9,

xaxis=dict(title=x_title, color='green', tickangle=30),

yaxis=dict(title=y_title, color='blue')

)

fig = go.Figure(layout=layout)

precision = 3

fig.add_trace( go.Scatter( name='y',

mode ='lines',

line=dict(shape = 'linear', color = 'blue', #'rgb(100, 10, 100)',

width = 2, #dash = 'dashdot'

),

x=ts_df.index, y=ts_df.y,

#hovertemplate = '%{xaxis_title}: %{x|%Y-%m-%d}

%{yaxis_title}: %{y:%.1f}',

hovertemplate='

'.join([ x_title + ': %{x|%Y-%m-%d}',

y_title + f": %{{y:.{precision}f}}",

'Figure 9.13 – The Air Passengers dataset showing trend and increasing seasonal variation

The air passenger data shows a long-term linear (upward) trend and seasonality. However, the seasonality fluctuations seem to be increasing as well, indicating a multiplicative model(A multiplicative model is suitable when the seasonal variation fluctuates over time. OR When the variation in the seasonal pattern, or the variation around the trend-cycle, appears to be proportional to the level of the time series与时间序列的水平成正比时, then a multiplicative decomposition is more appropriate. ). ==>

==>

#############################

When plotting the datasets, observe how each time series exhibits different characteristics(Trend,Seasonality, stationary). This initial insight will be helpful as you as proceed with the recipes in the chapter. In addition, you will realize how an algorithm's performance will vary when applied to different time series data.

Understanding supervised machine learning

In supervised machine learning, the data used for training contains known past outcomes, referred to as dependent or target variable(s). These are the variables you want your machine learning (ML) model to predict. The ML algorithm learns from the data using all other variables, known as independent or predictor variables, to determine how they are used to estimate the target variable. For example, the target variable is the house price in the house pricing prediction problem. The other variables, such as the number of bedrooms, number of bathrooms, total square footage/ˈfʊtɪdʒ/尺码长度, and city, are the independent variables used to train the model. You can think of the ML model as a mathematical model for making predictions on unobserved outcomes.

On the other hand, in unsupervised machine learning, the data contains no labels or outcomes to train on (unknown or unobserved). Unsupervised algorithms are used to find patterns in the data, such as the case with clustering, for example, customer segmentation, anomaly detection, or recommender systems.

Generally, there are two types of supervised machine learning: classification and regression.

- In classifcation, the goal is to predict which class (or label) a particular observation belongs to. In other words, you are predicting a discrete value,

- for example, whether an email is spam or not spam or whether a transaction is fraudulent or not. The two examples represent a binary classification problem, but you can have a multi-class classification problem, such as the case with image classification.

- Some popular classification algorithms include Logistic Regression, Random Forests, K-Nearest Neighbors, and Support Vector Machines, to name a few.

- In regression, you predict a continuous variable, such as the price of a house or a person's height. In regression,

- you can have a simple linear regression problem with one independent variable and one target variable,

- a multiple regression problem with more than one independent variable and one target variable,

- or a multivariate multiple regression problem with more than one independent variable and more than one dependent variable.

- Some popular linear regression algorithms include Linear Regression, Lasso Regression, and Elastic Net Regression. These examples are considered linear algorithms that assume a linear relationship between the variables.

Interestingly, several of the classification algorithms mentioned earlier can be used for regression; for example, you can have a Random Forest Regression, K-Nearest Neighbors Regression, and Support Vector Machines Regression. These regressors can capture non-linear relationships and produce more complex models.

Preparing time series data for supervised learning

In supervised ML, you must specify the independent variables (predictor variables) and the dependent variable (target variable). For example, in scikit-learn, you will use the fit(X, y) method for fitting a model, where X refers to the independent variable and y to the target variable.

Generally, preparing the time series data is similar to what you have done in previous chapters. However, additional steps will be specific to supervised ML, which is what this recipe is about. The following highlights the overall steps:

- 1. Inspect your time series data to ensure there are no significant gaps没有明显的差距, such as missing data, in your time series. If there are gaps, evaluate the impact and consider some of the imputation and interpolation techniques discussed in https://blog.csdn.net/Linli522362242/article/details/128422412, Handling Missing Data.

- 2. Understand any stationarity assumptions in the algorithm before fitting the model. If stationarity is an assumption before training, then transform the time series using the techniques discussed in the Detecting time series stationarity section in https://blog.csdn.net/Linli522362242/article/details/127737895, Exploratory Data Analysis and Diagnosis.

- 3. Transform your time series to contain independent and dependent variables. To do this, you will define a sliding window to convert the data into a window of inputs. For example, if you decide that the past five periods (lags) should be used to predict the current period (the sixth), then you will create a sliding window of five periods. This will transform a univariate time series into a tabular format. A univariate time series has only one dependent variable and no independent variables. For example, a five-period sliding window will produce five independent variables

,

,  ,

,  ,

,  ,

,  , which are lagged versions of the dependent variable. This representation of multiple inputs (a sequence) to one output is referred to as a one-step forecast. This will be the focus of this recipe. Figure 12.1 illustrates this concept.

, which are lagged versions of the dependent variable. This representation of multiple inputs (a sequence) to one output is referred to as a one-step forecast. This will be the focus of this recipe. Figure 12.1 illustrates this concept. - 4. Before training a model, split that data into training and test sets. Sometimes, you may need to split it into training, validation, and test sets, as you will explore in Chapter 13, Deep Learning for Time Series Forecasting.

- 5. Depending on the algorithm, you may need to scale your data; for example, in scikit-learn, you can leverage the StandardScaler

(cp4 Training Sets Preprocessing_StringIO_dropna_categorical_feature_Encode_Scale_L1_L2_bbox_to_ancho_LIQING LIN的博客-CSDN博客center the feature columns at mean

(cp4 Training Sets Preprocessing_StringIO_dropna_categorical_feature_Encode_Scale_L1_L2_bbox_to_ancho_LIQING LIN的博客-CSDN博客center the feature columns at mean  with standard deviation

with standard deviation  so that the feature columns takes the form of a normal distribution

so that the feature columns takes the form of a normal distribution ) or the MinMaxScaler

) or the MinMaxScaler (this normalization refers to the rescaling of the features to a range of [0, 1]) class When making your predictions, you will need to inverse the transform to restore the results to their original scale, for example, you can use the inverse_transform method from scikit-learn.

(this normalization refers to the rescaling of the features to a range of [0, 1]) class When making your predictions, you will need to inverse the transform to restore the results to their original scale, for example, you can use the inverse_transform method from scikit-learn.

In this recipe, you will prepare time series data for supervised learning by creating independent variables from a univariate time series. The following illustrates how a sliding window of five periods creates the dependent (target) variable at a time (t) and five independent variables (![]() ,

, ![]() ,

, ![]() ,

, ![]() ,

, ![]() , which are lagged versions of the dependent variable). In the daily temperature data, this means a five-day sliding window.

, which are lagged versions of the dependent variable). In the daily temperature data, this means a five-day sliding window. ![]()

Figure 12.1 – Example of a five-day sliding window for daily temperature data

Figure 12.1 – Example of a five-day sliding window for daily temperature data

Since you will be transforming all three DataFrames, you will create functions to simplify the process:

1. Inspect for missing data in the DataFrames. If there is missing data, then perform a simple fill forward imputation. First, make a copy so you do not change the original

air_copy = air.copy(deep=True)

energy_copy = energy.copy(deep=True)

daily_temp_copy = daily_temp.copy(deep=True)Create the handle_missing_data function:

def handle_missing_data(df, ifreport=False):

n = int( df.isna().sum() )

if n>0:

df.ffill(inplace=True)

print( f'found\033[1m {n} missing\033[0m observations...',

end="" if ifreport else '\n'

)

if ifreport:

return TruePass each DataFrame to the handle_missing_data function:

for name, df in {'air_copy':air_copy,

'energy_copy':energy_copy,

'daily_temp_copy':daily_temp_copy

}.items():

if handle_missing_data(df, True):

print(f'in \033[1m{name}\033[0m')![]()

Only the daily_weather DataFrame had two NaN (missing values).

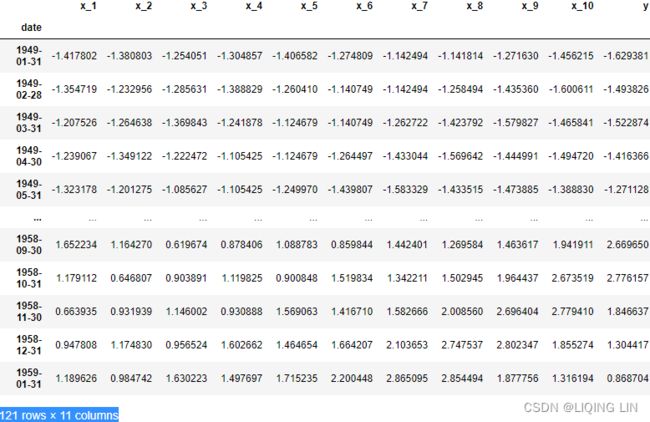

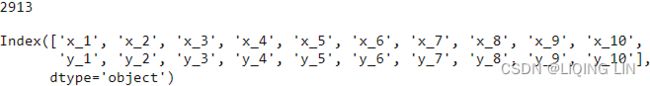

daily_temp_copy.isna().sum()2. Create the create_lagXs_y function, which returns a DataFrame with a specified number of independent variables (columns) and a target variable (column). The total number of columns returned is based on the sliding window parameter (number of columns = sliding window + 1). This is illustrated in Figure 12.2. This technique was described in Machine Learning Strategies for Time Series Forecasting, Lecture Notes in Business Information Processing. Berlin, Heidelberg: Springer Berlin Heidelberg (https: //doi.org/10.1007/978-3-642-36318-4_3) . Create the function using the following:

The create_lagXs_y function, you produced additional columns to represent independent variables used in model training. The new columns are referred to as features. The process of engineering these new features, as you did earlier, is called feature engineering. In this, you create new features (columns) that were not part of the original data to improve your model's performance.

def create_lagXs_y( df, lag_window ):

n = len(df)

ts = df.values

Xs = []

idx = df.index[:-lag_window]

# slice and draw

for start in range( n-lag_window ):

end = start + lag_window

Xs.append( ts[start:end] ) # [x_0, x_1, x_2, x_3, x_4]

cols = [ f'x_{i}'

for i in range(1, 1+lag_window)

]# columnName:[x_1, x_2, x_3, x_4, x_5]

Xs = np.array(Xs).reshape(n-lag_window, -1) #==>shape:(n-lag_window, 5)

y = df.iloc[lag_window:].values

df_Xs = pd.DataFrame( Xs, columns=cols, index=idx )

df_y = pd.DataFrame( y.reshape(-1), columns=['y'], index=idx )

return pd.concat([df_Xs, df_y],

axis=1

).dropna()The create_lagXs_y function will transform a time series with a specifed number of steps (the sliding window size).

For simplicity, transform all three DataFrames with the same sliding window size of five periods, lag_window=5 . Recall, the weather data is daily, so one period represents one day, while for the air passenger and energy consumption datasets, a period is equivalent to one month:

air_5_1 = create_lagXs_y( air_copy, 5 )

energy_5_1 = create_lagXs_y( energy_copy, 5 )

daily_temp_5_1 = create_lagXs_y( daily_temp_copy, 5 )

print( air_5_1.shape )

print( energy_5_1.shape )

print( daily_temp_5_1.shape )air_5_1

3. You will need to split the data into training and test sets. You could use the train_test_split function from scikit-learn with shuffle=False . An alternative is to create the split_data function to split the data:

def split_data( df, test_split=0.15 ):

n = int( len(df) * test_split )

train, test = df[:-n], df[-n:]

return train, testThe following shows how to use the split_data function:

train, test = split_data( air_5_1 )

print( f'Train: {len(train)} Test: {len(test)}' ) ![]()

4. Certain algorithms benefit from scaling the data. You can use the StandardScaler class from scikit-learn. In this recipe, you will create the Standardize class with three methods: the fit_transform method, will fit on the training set and then transforms both the training and test sets, the inverse method is used to return a DataFrame to its original scale and the inverse_y method to inverse the target variable (or a specific column and not the entire DataFrame):

class Standardize:

def __init__( self, split=0.15 ):

self.split = split

def _transform( self, df ):

return (df - self.mu)/self.sigma ###

def split_data( self, df ):

n = int( len(df) * test_split )

train, test = df[:-n], df[-n:]

return train, test

def fit_transform( self, train, test ):

self.mu = train.mean() ###

self.sigma = train.std() ###

train_s = self._transform(train)

test_s = self._transform(test)

return train_s, test_s

def transform( self, df ):

return self._transform( df )

def inverse( self, df ):

return (df*self.sigma)+self.mu

def inverse_y( self, df ):

return ( df*self.sigma[-1] )+self.mu[-1]The following shows how you can use the Standardize class:

scaler = Standardize()

train_s, test_s = scaler.fit_transform( train, test )

train_strain_original = scaler.inverse(train_s)

train_originaly_train_original = scaler.inverse_y( train_s['y'] )

y_train_originalThe Standardize class also has additional methods, such as split_data for convenience.

You will be leveraging these functions in the recipes of this chapter for data preparation.

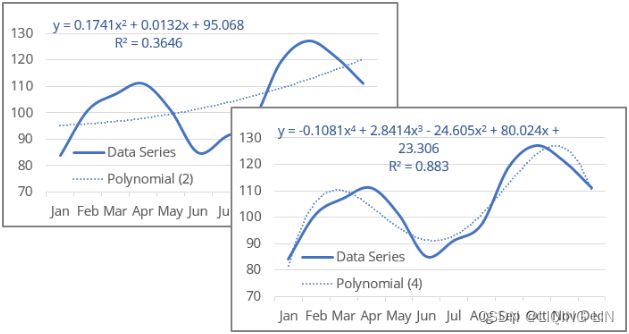

Preparing time series data for supervised learning is summarized in Figure 12.1 and Figure 12.2. For example, in a regression problem, you are essentially transforming a univariate time series into a multiple regression problem. You will explore this concept further in the following One-step forecasting using linear regression models with scikit-learn recipe.

The lag_window parameter can be adjusted to fit your need. In the recipe, we used a split window of five (5) periods, and you should experiment with different window sizes.

The sliding window(lag_window) is one technique to create new features based on past observations. Other techniques can be used to extract features from time series data to prepare it for supervised machine learning. For example, you could create new columns based on the date column, such as day of the week, year, month, quarter, season, and other date-time features.

The following is an example of engineering date time related features using pandas:

df = daily_temp.copy(deep=False)

dfdf = daily_temp.copy(deep=False)

df['day_of_week'] = df.index.dayofweek

df['days_in_month'] = df.index.days_in_month

df['month_end'] = df.index.is_month_end.astype(int)

df['is_leap'] = df.index.is_leap_year.astype(int)

df['month'] = df.index.month

df

![]()

Even though you will be using the create_lagXs_y function throughout the chapter, you should explore other feature engineering techniques and experiment with the different algorithms introduced in this chapter.

One-step forecasting using linear regression models with scikit-learn

In ts10_Univariate TS模型_circle mark pAcf_ETS_unpack product_darts_bokeh band interval_ljungbox_AIC_BIC_LIQING LIN的博客-CSDN博客ts10_2Univariate TS模型_pAcf_bokeh_AIC_BIC_combine seasonal_decompose twinx ylabel_bold partial title_LIQING LIN的博客-CSDN博客_first-order diff, Building Univariate Time Series Models Using Statistical Methods, you were introduced to statistical models such as AutoRegressive (AR  or

or![]() ) type models. These statistical models are considered linear models, where the independent variable(s) are lagged versions of the target (dependent) variable. In other words, the variable you want to predict is based on past values of itself at some lag.

) type models. These statistical models are considered linear models, where the independent variable(s) are lagged versions of the target (dependent) variable. In other words, the variable you want to predict is based on past values of itself at some lag.

In this recipe, you will move from statistical models into ML models. More specifically, you will be training different linear models, such as

- Linear Regression

https://blog.csdn.net/Linli522362242/article/details/111307026

Simple linear regression : The goal of simple (univariate) linear regression is to model the relationship between a single feature (explanatory variable, x) and a continuous-valued target (response variable, y).

The goal of simple (univariate) linear regression is to model the relationship between a single feature (explanatory variable, x) and a continuous-valued target (response variable, y).

Multiple linear regression :

OR

Here, is the y axis intercept with

is the y axis intercept with  = 1.

= 1. or

or  is the predicted variable,

is the predicted variable, or

or  or

or  is the intercept,

is the intercept, are the features or independent variables, and

are the features or independent variables, and or

or  are the coefficients for each of the independent variables.

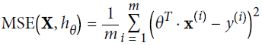

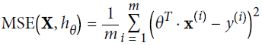

are the coefficients for each of the independent variables. - Mean Squared Error cost function:

note

note

- Huber Regression https://blog.csdn.net/Linli522362242/article/details/107294292

Huber loss :

Suppose you want to train a regression model, but your training set is a bit noisy. Of course, you start by trying to clean up your dataset by removing or fixing the outliers, but that turns out to be insufficient; the dataset is still noisy. Which loss function should you use?

The Mean Squared Error might penalize large errors too much and cause your model to be imprecise.

The Mean Absolute Error would not penalize outliers as much不会对异常值造成太大的惩罚, but training might take a while to converge, and the trained model might not be very precise. This is probably a good time to use the Huber loss

would not penalize outliers as much不会对异常值造成太大的惩罚, but training might take a while to converge, and the trained model might not be very precise. This is probably a good time to use the Huber loss

The Huber loss is quadratic when the error is smaller than a threshold δ (typically 1) but linear when the error is larger than δ. The linear part makes it less sensitive to outliers than the Mean Squared Error, and the quadratic part allows it to converge faster and be more precise than the Mean Absolute Error ) instead of the good old MSE. The Huber loss is not currently part of the official Keras API, but it is available in tf.keras (just use an instance of the keras.losses.Huber class).

) instead of the good old MSE. The Huber loss is not currently part of the official Keras API, but it is available in tf.keras (just use an instance of the keras.losses.Huber class). - The three regression models, ElasticNet, Lasso, and Ridge, add a regularization (penalization) term to the objective function(cost function or loss function) that we want to minimize.

Regularization helps avoid overfitting during training and allows the model to better

generalize. Additionally, L1 regularization can be used for feature selection.

we can think of regularization as adding a penalty term to the cost function to encourage smaller weights; or, in other words, we penalize large weights.

Thus, by increasing the regularization strength via the regularization parameter , we shrink the weights towards zero and decrease the dependence of our model on the training data.

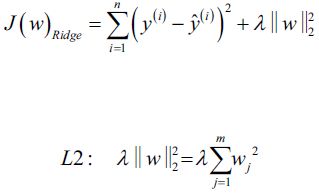

, we shrink the weights towards zero and decrease the dependence of our model on the training data. - Ridge Regression

Equation 4-8. Ridge Regression cost function vs

vs

use RSS(Residuals of Sum Squares) instead of MSE is for convenient computation, we should remove it for Practical application

is for convenient computation, we should remove it for Practical application

L2 norm:

OR

OR  e.g.

e.g.

In Ridge Regression, the regularization term is referred to as L2 regularization and can shrink the coefficients of the least important features but does not eliminate them (no zero coefficients).

Under the penalty constraint, our best effort is to choose the point where the L2 ball intersects with the contours of the unpenalized cost function. The larger the value of the regularization parameter gets, the faster the penalized cost function

gets, the faster the penalized cost function grows, which leads to a narrower L2 ball

grows, which leads to a narrower L2 ball . For example, if we increase the regularization parameter

. For example, if we increase the regularization parameter  towards infinity, the weight coefficients will become effectively zero, denoted by the center of the L2 ball. To summarize the main message of the example: our goal is to minimize the sum of the unpenalized cost function plus the penalty term, which can be understood as adding bias and preferring a simpler model to reduce the variance(try to underfit) in the absence of sufficient training data to fit the model.

towards infinity, the weight coefficients will become effectively zero, denoted by the center of the L2 ball. To summarize the main message of the example: our goal is to minimize the sum of the unpenalized cost function plus the penalty term, which can be understood as adding bias and preferring a simpler model to reduce the variance(try to underfit) in the absence of sufficient training data to fit the model.

https://blog.csdn.net/Linli522362242/article/details/108230328

cp4 Training Sets Preprocessing_StringIO_dropna_categorical_feature_Encode_Scale_L1_L2_bbox_to_ancho_LIQING LIN的博客-CSDN博客

Equation 4-9. Ridge Regression closed-form solution

Note: Normal Equation(a closed-form equation)

Here is how to perform Ridge Regression with Scikit-Learn using a closed-form solution (a variant of Equation 4-9 using a matrix factorization technique by André-Louis Cholesky):04_TrainingModels_Normal Equation(正态方程,正规方程) Derivation_Gradient Descent_Polynomial Regression_LIQING LIN的博客-CSDN博客

- Lasso Regression

Equation 4-10. Lasso Regression cost function vs

vs

use RSS(Residuals of Sum Squares) instead of MSE

L1 norm: OR

OR e.g.

e.g.

In Lasso Regression, the regularization term can reduce the coefficient (the or

or  in the objective function) of the least important features (independent variables) to zero, and thus eliminating them.

in the objective function) of the least important features (independent variables) to zero, and thus eliminating them.

This added penalization term is called L1 regularization.

In the preceding figure, we can see that the contour of the cost function touches the L1 diamond at . Since the contours of an L1 regularized system are sharp, it is more likely that the optimum—that is, the intersection between the ellipses of the cost function and the boundary of the L1 diamond—is located on the axes, which encourages sparsity. The mathematical details of why L1 regularization can lead to sparse solutions are beyond the scope of this book. If you are interested, an excellent section on L2 versus L1 regularization can be found in section 3.4 of The Elements of Statistical Learning, Trevor Hastie, Robert Tibshirani, and Jerome Friedman, Springer.

. Since the contours of an L1 regularized system are sharp, it is more likely that the optimum—that is, the intersection between the ellipses of the cost function and the boundary of the L1 diamond—is located on the axes, which encourages sparsity. The mathematical details of why L1 regularization can lead to sparse solutions are beyond the scope of this book. If you are interested, an excellent section on L2 versus L1 regularization can be found in section 3.4 of The Elements of Statistical Learning, Trevor Hastie, Robert Tibshirani, and Jerome Friedman, Springer.

The Lasso cost function is not differentiable无法进行微分运算 at (for i = 1, 2, ⋯, n), but Gradient Descent still works fine if you use a subgradient vector g instead when any

(for i = 1, 2, ⋯, n), but Gradient Descent still works fine if you use a subgradient vector g instead when any  . Equation 4-11 shows a subgradient vector equation you can use for Gradient Descent with the Lasso cost function.

. Equation 4-11 shows a subgradient vector equation you can use for Gradient Descent with the Lasso cost function.

Equation 4-11. Lasso Regression subgradient vector

04_TrainingModels_02_regularization_L2_cost_Ridge_Lasso_Elastic Net_Early Stopping_LIQING LIN的博客-CSDN博客 - Elastic Net Regression

Elastic Net is a middle ground between Ridge Regression and Lasso Regression. The regularization term is a simple mix of both Ridge and Lasso’s regularization terms, and you can control the mix ratio r. When r = 0, Elastic Net is equivalent to Ridge Regression, and when r = 1, it is equivalent to Lasso Regression (see Equation 4-12).

Equation 4-12. Elastic Net cost function

ElasticNet Regression, on the other hand, is a hybrid between the two by combining L1 and L2 regularization terms. - other loss function:mpf11_Learning rate_Activation_Loss_Optimizer_Quadratic Program_NewtonTaylor_L-BFGS_Nesterov_Hessian_LIQING LIN的博客-CSDN博客_standardscaler activation function

These are considered linear regression models and assume a linear relationship between the variables.

In the previous recipe, you transformed a univariate time series into a multiple regression problem with five independent variables and one dependent variable (a total of six columns), as shown in the following diagram: Figure 12.2 – Transforming time series for supervised ML

Figure 12.2 – Transforming time series for supervised ML

For the representation in Figure 12.2, the multiple linear regression equation would be as follows:![]()

Where ![]() is the estimated (predicted) value, (

is the estimated (predicted) value, (![]() ,

,![]() ,...,

,...,![]() ) are the coefficients for each

) are the coefficients for each

independent variable (![]() ,

, ![]() ,

, ![]() ,

, ![]() ,

, ![]() ),

), ![]() is the intercept, and ϵ is the residual or error

is the intercept, and ϵ is the residual or error

term. Remember, the independent variables that were created (![]() ,

, ![]() ,

, ![]() ,

, ![]() ,

, ![]() ) are lagged

) are lagged

versions of the dependent variable ( ![]() ) created using a sliding window. You can simplify

) created using a sliding window. You can simplify

the equation in matrix notation by adding an ![]() term, which is a constant value of one (

term, which is a constant value of one (![]() ). This will give us the following equation:

). This will give us the following equation:

![]()

In linear regression, you want to minimize the errors (loss), which is the difference between the actual value, ![]() , and the estimated value,

, and the estimated value, ![]() . More specifically, it is the square loss at each data point. If we take the sum of these squared losses or errors, you get the Residual Sum of Square (RSS). The cost function (RSS) is what you want to minimize. This results in our objective function being written as follows:

. More specifically, it is the square loss at each data point. If we take the sum of these squared losses or errors, you get the Residual Sum of Square (RSS). The cost function (RSS) is what you want to minimize. This results in our objective function being written as follows:

Sometimes, you will see the objective function as minimizing the Mean Squared Error (MSE), which is obtained by dividing the RSS by the degrees of freedom (for simplicity, you can think of it as the number of observations, where N represents the number of elements. By default ddof is zerocp4 Training Sets Preprocessing_StringIO_dropna_categorical_feature_Encode_Scale_L1_L2_bbox_to_ancho_LIQING LIN的博客-CSDN博客).

Once a time series is prepared for supervised ML, you can use any regression algorithm to train a model. This is summarized in Figure 12.2. The function for transforming the time series is create_lagXs_y( df, lag_window ), to remind you that we are preparing the data so that a sequence of inputs is given (the independent variables) to produce a single output (one-step forecast).

In this recipe, you will continue using the three DataFrames you loaded from the Technical requirements section of this chapter. You will leverage the functions and classes created in the previous Preparing time series data for supervised learning recipe.

Start by loading the necessary classes and functions for this recipe. Make a copy of the DataFrames to ensure they do not get overwritten:

from sklearn.linear_model import ( LinearRegression,

ElasticNet,

Ridge,

Lasso,

HuberRegressor

)

air_cp = air.copy(deep=True)

en_cp = energy.copy(deep=True)

dw_cp = daily_temp.copy(deep=True)The following steps will use the energy consumption data for demonstration. You should use the same steps on all three DataFrames, as shown in the accompanying Jupyter notebook:

1. Use the handle_missing_data function to ensure there are no missing values:

for name, df in {'air_copy':air_cp,

'energy_copy':en_cp,

'daily_temp_copy':dw_cp

}.items():

if handle_missing_data(df, True):

print(f'in \033[1m{name}\033[0m')![]()

dw_cp.isna().sum()2. Use create_lagXs_y to convert the time series DataFrames into a supervised learning problem with 10 steps (windows):

air_10_1 = create_lagXs_y( air_cp, 10 )

energy_10_1 = create_lagXs_y( en_cp, 10 )

daily_temp_10_1 = create_lagXs_y( dw_cp, 10 )

print( air_10_1.shape )

print( energy_10_1.shape )

print( daily_temp_10_1.shape )![]() Feel free to change the window size.

Feel free to change the window size.

3. Split and scale the data using the split_data function and the Standardize class. Later, you can use the class instance to inverse the scaling:

train_air, test_air = split_data( air_10_1, test_split=0.10 )

scaler_air = Standardize()

train_air_s, test_air_s = scaler_air.fit_transform( train_air, test_air )

train_air_strain_en, test_en = split_data( energy_10_1, test_split=0.10 )

scaler_en = Standardize()

train_en_s, test_en_s = scaler_en.fit_transform( train_en, test_en )

train_en_strain_dw, test_dw = split_data( daily_temp_10_1, test_split=0.10 )

scaler_dw = Standardize()

train_dw_s, test_dw_s = scaler_dw.fit_transform( train_dw, test_dw )

train_dw_sCommon error metrics used in regression are Mean Squared Error(MSE) or Root Mean Squared Error(RMSE). These are scale-dependent, so if you experiment with different model configurations, for example, scale your data using the Standardize class function, this will impact the scores and make it difficult to compare.

MAPE(Mean Absolute Percentage Error)

Another popular error metric in forecasting is Mean Absolute Percentage Error(MAPE), which is more intuitive to interpret since it is expressed as a percentage and is scale-independent与数据的比例无关. For certain problems, MAPE may not be suitable. For example, with the daily temperature data, MAPE puts a heavier penalty on negative errors (you can have negative Celsius). With MAPE, you cannot divide by zero (Celsius at zero can be problematic). Additionally, measuring temperature as a percentage may not make sense in this case.

The Mean Absolute Percentage Error(MAPE), also known as Mean Absolute Percentage Deviation (MAPD), is a measure of prediction accuracy of a forecasting method in statistics. It usually expresses the accuracy as a ratio defined by the formula: where

where ![]() is the actual value and

is the actual value and ![]() is the forecast value. Their difference is divided by the actual value

is the forecast value. Their difference is divided by the actual value ![]() . The absolute value of this ratio is summed for every forecasted point in time and divided by the number of fitted points n.

. The absolute value of this ratio is summed for every forecasted point in time and divided by the number of fitted points n.

It's a good practice to capture different metrics, and in this case, you will capture both RMSE and MAPE by importing them from sktime . Note that sklearn does support both MSE and MAPE. The third metric, which has been proposed as an alternative to the shortcoming of MAPE, is the Mean Absolute Scaled Error (MASE) metric.

def _percentage_error(y_true, y_pred, symmetric=True):

"""Percentage error.

Parameters

----------

y_true : pd.Series, pd.DataFrame or np.array of shape (fh,) or (fh, n_outputs) \

where fh is the forecasting horizon

Ground truth (correct) target values.

y_pred : pd.Series, pd.DataFrame or np.array of shape (fh,) or (fh, n_outputs) \

where fh is the forecasting horizon

Forecasted values.

symmetric : bool, default = False

Whether to calculate symmetric percentage error.

Returns

-------

percentage_error : float

References

----------

Hyndman, R. J and Koehler, A. B. (2006). "Another look at measures of \

forecast accuracy", International Journal of Forecasting, Volume 22, Issue 4.

"""

if symmetric:

# Alternatively could use np.abs(y_true + y_pred) in denom

# Results will be different if y_true and y_pred have different signs

percentage_error = (

2

* np.abs(y_true - y_pred)

/ np.maximum( np.abs(y_true) + np.abs(y_pred), EPS )

) # note : EPS = np.finfo(np.float64).eps

else:

percentage_error = (y_true - y_pred) / np.maximum(np.abs(y_true), EPS)

return percentage_error

def mean_absolute_percentage_error(

y_true,

y_pred,

horizon_weight=None,

multioutput="uniform_average",

symmetric=True,

**kwargs,

):

#https://github.com/sktime/sktime/blob/4e06cb0231cdabb74bf88d0cb4f2b721fc863fe3/sktime/performance_metrics/forecasting/_functions.py#L1447

output_errors = np.average(

np.abs(_percentage_error(y_true, y_pred, symmetric=symmetric)),

weights=horizon_weight, # default None

axis=0,

)SMAPE(Symmetric Mean Absolute Percentage Error)

Symmetric Mean Absolute Percentage Error (SMAPE or sMAPE) is an accuracy measure based on percentage (or relative) errors. It is usually defined[citation needed] as follows: sktime

The absolute difference between ![]() and

and ![]() is divided by half the sum of absolute values of the actual value

is divided by half the sum of absolute values of the actual value ![]() and the forecast value

and the forecast value ![]() . The value of this calculation is summed for every fitted point

. The value of this calculation is summed for every fitted point ![]() and divided again by the number of fitted points

and divided again by the number of fitted points ![]() .

.

The earliest reference to similar formula appears to be Armstrong (1985, p. 348) where it is called "adjusted MAPE" and is defined without the absolute values in denominator. It has been later discussed, modified and re-proposed by Flores (1986).

Armstrong's original definition is as follows:

The problem is that it can be negative (if ![]() ) or even undefined (if

) or even undefined (if ![]() ). Therefore the currently accepted version of SMAPE assumes the absolute values in the denominator(sktime solved them by using EPS).

). Therefore the currently accepted version of SMAPE assumes the absolute values in the denominator(sktime solved them by using EPS).

In contrast to the (MAPE) mean absolute percentage error, SMAPE has both a lower bound and an upper bound. Indeed, the formula above provides a result between 0% and 200%. However a percentage error between 0% and 100% is much easier to interpret. That is the reason why the formula below is often used in practice (i.e. no factor 0.5 OR ![]() in denominator):

in denominator):

In the above formula, if ![]() , then the t'th term in the summation is 0, since the percent error between the two is clearly 0 两者之间的百分比误差显然为 0 and the value of

, then the t'th term in the summation is 0, since the percent error between the two is clearly 0 两者之间的百分比误差显然为 0 and the value of  is undefined.

is undefined.

One supposed problem with SMAPE is that it is not symmetric since over- and under-forecasts are not treated equally. This is illustrated by the following example by applying the second SMAPE formula:

- Over-forecasting: At = 100 and Ft = 110 give SMAPE = 4.76%

- Under-forecasting: At = 100 and Ft = 90 give SMAPE = 5.26%.

However, one should only expect this type of symmetry for measures which are entirely difference-based and not relative (such as mean squared error and mean absolute deviation). 但是,对于完全基于差异而非相对的度量(例如均方误差和平均绝对偏差),应该只期望这种类型的对称性。

There is a third version of SMAPE, which allows to measure the direction of the bias in the data by generating a positive and a negative error on line item level. Furthermore it is better protected against outliers and the bias effect更好地防止异常值和偏差效应 mentioned in the previous paragraph than the two other formulas. The formula is:

A limitation to SMAPE is that if the actual value or forecast value is 0, the value of error will boom up to the upper-limit of error. (200% for the first formula and 100% for the second formula).

Provided the data are strictly positive, a better measure of relative accuracy can be obtained based on the log of the accuracy ratio: log(Ft / At) This measure is easier to analyse statistically, and has valuable symmetry and unbiasedness properties. When used in constructing forecasting models the resulting prediction corresponds to the geometric mean (Tofallis, 2015).

MASE

The mean absolute scaled error has the following desirable properties:[3]

- Scale invariance: The Mean Absolute Scaled Error is independent of the scale of the data与数据的比例无关, so can be used to compare forecasts across data sets with different scales.

MASE is independent of the scale of the forecast since it is defined using ratio of errors in the forecast . This means MASE values will be similar if we are forecasting high valued time series like number of internet traffic packet crossing a router hourly when compared to forecasting number of pedestrians crossing a busy traffic light every hour.

. This means MASE values will be similar if we are forecasting high valued time series like number of internet traffic packet crossing a router hourly when compared to forecasting number of pedestrians crossing a busy traffic light every hour. - Predictable behavior as

or

or  : Percentage forecast accuracy measures such as the Mean absolute percentage error (MAPE

: Percentage forecast accuracy measures such as the Mean absolute percentage error (MAPE ) rely on division of

) rely on division of  , skewing the distribution of the MAPE for values of

, skewing the distribution of the MAPE for values of  near or equal to 0. This is especially problematic for data sets whose scales do not have a meaningful 0, such as temperature in Celsius or Fahrenheit, and for intermittent/ˌɪntərˈmɪtənt/间歇的,断断续续的 demand data sets, where

near or equal to 0. This is especially problematic for data sets whose scales do not have a meaningful 0, such as temperature in Celsius or Fahrenheit, and for intermittent/ˌɪntərˈmɪtənt/间歇的,断断续续的 demand data sets, where  occurs frequently.

occurs frequently.

MASE is immune to the problem不受...问题的影响 faced by Mean Absolute Percentage Error (MAPE) when actual time series output or

or  or

or  at any time step is zero(since the denominator is the mean absolute error of the one-step "naive forecast method" on the training set). In this situation MAPE gives an infinite output, which is not meaningful. However, it is noted that for a time series with all values equal to zero at all steps, MASE output will also be not defined but such time series are not realistic.

at any time step is zero(since the denominator is the mean absolute error of the one-step "naive forecast method" on the training set). In this situation MAPE gives an infinite output, which is not meaningful. However, it is noted that for a time series with all values equal to zero at all steps, MASE output will also be not defined but such time series are not realistic. - Symmetry: The Mean Absolute Scaled Error

penalizes positive and negative forecast errors equally, and

penalizes errors in large forecasts and small forecasts equally. In contrast, the MAPE and median absolute percentage error (MdAPE) fail both of these criteria, while the "symmetric" sMAPE and sMdAPE[4] fail the second criterion. - Interpretability: The Mean Absolute Scaled Error can be easily interpreted, as values greater than one(>1) indicate that in-sample one-step forecasts from the naïve method perform better than the forecast values under consideration.

Mean Absolute Error (MAE) for the predictions from the algorithm

: actual value,

: actual value,  : predction

: predction  Its value greater than one (1) indicates the algorithm is performing poorly compared to the naïve forecast.

Its value greater than one (1) indicates the algorithm is performing poorly compared to the naïve forecast. - Asymptotic normality of the MASE: The Diebold-Mariano test for one-step forecasts is used to test the statistical significance of the difference between two sets of forecasts.[5][6][7] To perform hypothesis testing with the Diebold-Mariano test statistic, it is desirable for

, where

, where  is the value of the test statistic. The DM statistic for the MASE has been empirically shown to approximate this distribution, while the Mean Relative Absolute Error (MRAE), MAPE and sMAPE do not.

is the value of the test statistic. The DM statistic for the MASE has been empirically shown to approximate this distribution, while the Mean Relative Absolute Error (MRAE), MAPE and sMAPE do not.

Non seasonal time series

For a non-seasonal time series,[8] the Mean Absolute Scaled Error is estimated by

where the numerator ![]() is the forecast error for a given period (with

is the forecast error for a given period (with ![]() , the number of forecasts), defined as the actual value (

, the number of forecasts), defined as the actual value (![]() ) minus the forecast value (

) minus the forecast value (![]() ) for that period:

) for that period: ![]() , and the denominator is the mean absolute error of the one-step "naive forecast method" on the training set (here defined as t = 1..T),[8] which uses the actual value from the prior period as the forecast:

, and the denominator is the mean absolute error of the one-step "naive forecast method" on the training set (here defined as t = 1..T),[8] which uses the actual value from the prior period as the forecast: ![]()

#########

1. Calculate Mean Absolute Error (MAE) for the predictions from the algorithm

![]() : actual value

: actual value

Consider a time series with output(forecast or prediction) for N steps given as y1, y2, y3,…yn![]()

(the denominator )Naïve forecast error at different time steps is given by:

(the denominator )Mean Absolute Error for naïve forecast over entire duration is defined as

2. MASE is given by the ratio of MAE for algorithm and MAE of naïve forecast.

#########

Seasonal time series

For a seasonal time series, the Mean Absolute Scaled Error is estimated in a manner similar to the method for non-seasonal time series:

The main difference with the method for non-seasonal time series, is that the denominator is the mean absolute error of the one-step "seasonal naive forecast method" on the training set,[8] which uses the actual value from the prior season as the forecast: ![]() ,[9] where m is the seasonal period.

,[9] where m is the seasonal period.

This scale-free error metric "can be used to compare forecast methods on a single series and also to compare forecast accuracy between series. This metric is well suited to intermittent-demand series[clarification needed] because it never gives infinite or undefined values[1] except in the irrelevant case where all historical data are equal.

When comparing forecasting methods, the method with the lowest MASE is the preferred method.

Seasonal variation in the forecast is captured by equating the current forecast to the actual output from the period in last season corresponding to current period, e.g. prediction of demand of a product in holiday season is made equal to the actual demand of the product from last holiday season.

(the denominator )Mean Absolute Error for naïve forecast over entire duration is defined as![]()

Non-time series data

For non-time series data, the mean of the data (![]() ) can be used as the "base" forecast.

) can be used as the "base" forecast.In this case the MASE is the Mean absolute error divided by the Mean Absolute Deviation.

You will use MASE from the sktime library as well:

from sktime.performance_metrics.forecasting import( MeanSquaredError,

MeanAbsolutePercentageError,

MeanAbsoluteScaledError

)Create an instance of each of the classes to use later in the recipe:

mse = MeanSquaredError()

mape = MeanAbsolutePercentageError()

mase = MeanAbsoluteScaledError() Note, you will be calculating RMSE as the square root of MSE, for example, using

np.sqrt( mse(y_actual – y_hat) ) .

4. Create the train_model function that takes the training and test sets, then fits the model on the train set and evaluates the model using the test set using

MAPE( ),

),

MASE(), and RMSE(

![]() ). The function will return a dictionary with additional model information:

). The function will return a dictionary with additional model information:

def train_model( train, test, regressor, reg_name ):

X_train, y_train = train.drop( columns=['y'] ), train['y']

X_test, y_test = test.drop( columns=['y'] ), test['y']

print( f'training {reg_name} ...' )

regressor.fit( X_train, y_train )

yhat = regressor.predict( X_test )

rmse_test = np.sqrt( mse(y_test, yhat) )

mape_test = mape( y_test, yhat )

mase_test = mase( y_test, yhat, y_train=y_train )

residuals = y_test.values - yhat

model_metadata = {'Model Name': reg_name, 'Model': regressor,

'RMSE': rmse_test,

'MAPE': mape_test,

'MASE': mase_test,

'yhat': yhat,

'resid': residuals,

'actual': y_test.values

}

return model_metadataThe function returns the model and evaluation metrics against the test data, the forecast, and the residuals.

5. Create a dictionary that contains the regression algorithms and their names (keys) to use in a loop. This makes it easier later to update the dictionary with additional regressors:

regressors = { 'Linear Regression': LinearRegression(fit_intercept=False),

# alpha:Constant that multiplies the penalty terms. and l1_ratio=0.5 Ridge + Lasso

'Elastic Net': ElasticNet(alpha=0.5, fit_intercept=False), # False, the data is assumed to be already centered

'Ridge Regression': Ridge(alpha=0.5, fit_intercept=False),

'Lasso Regression': Lasso(alpha=0.5, fit_intercept=False),

'Huber Regression': HuberRegressor(fit_intercept=False)

}The three regressors, Ridge, Lasso, and ElasticNet, add a regularization (penalization) term to the objective function. All three take an alpha ( α ) parameter, which determines the penalization factor for the coefficients. This is a hyperparameter you can experiment with; for now, you will use the value ( 0.5 ).

6. The train_model function fits and evaluates one model at a time. Create another function, train_different_models , which can loop through the dictionary of regressors and calls the train_model function. The function will return the results from each regressor as a list:

def train_different_models( train, test, regressors):

results = []

for reg_name, regressor in regressors.items():

results.append( train_model( train,

test,

regressor,

reg_name

)

)

return resultsPass the dictionary of regressors along with the training and test sets to the train_different_models function and store the results:

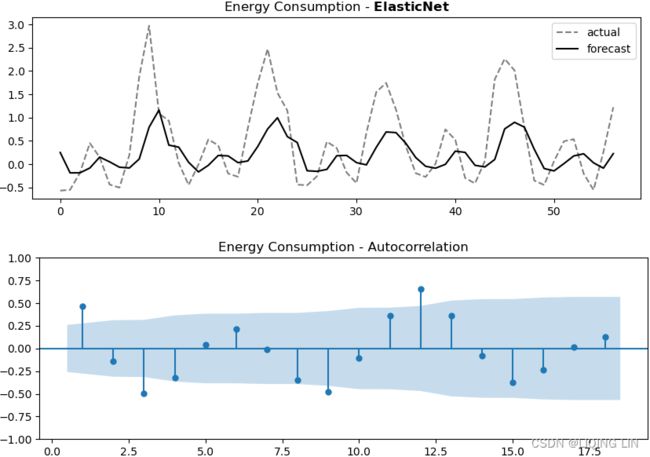

air_results = train_different_models( train_air_s, test_air_s, regressors )

en_results = train_different_models( train_en_s, test_en_s, regressors )

dw_results = train_different_models( train_dw_s, test_dw_s, regressors )import warnings

warnings.filterwarnings('ignore')

air_results = train_different_models( train_air_s, test_air_s, regressors )

en_results = train_different_models( train_en_s, test_en_s, regressors )

dw_results = train_different_models( train_dw_s, test_dw_s, regressors )en_results![]()

7. You can convert the results into a DataFrame to view the scores and model name:

cols = ['Model Name', 'RMSE', 'MAPE', 'MASE']

air_results = pd.DataFrame( air_results )

air_results[cols].sort_values('MASE') The preceding code should produce the following results:![]()

en_results = pd.DataFrame( en_results )

en_results[cols].sort_values('MASE')  Figure 12.3 – Results of all five regression models on the energy consumption data

Figure 12.3 – Results of all five regression models on the energy consumption data

dw_results = pd.DataFrame( dw_results )

dw_results[cols].sort_values('MASE')

You can update the sort_values method to use RMSE or MAPE and observe any changes in the ranking. Note that you did not reset the index since the order (row ID) is aligned with the order from the regressors dictionary.

cols = ['yhat', 'resid', 'actual', 'Model Name']

for row in en_results[cols].iterrows():

print(row)![]()

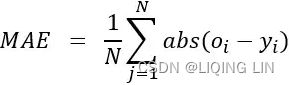

8. The en_results list contains the actual test results ( actual ), the forecast value ( yhat ), and the residuals ( resid ). You can use these to visualize the model's performance. Create a plot_results function to help diagnose the models:

from statsmodels.graphics.tsaplots import plot_acf

def plot_results( cols, results, data_name ):

for row in results[cols].iterrows():

yhat, resid, actual, name = row[1] # row[0] : index

#plt.figure(figsize=(10,6))

plt.rcParams["figure.figsize"] = [10, 3] ##

plt.title( r'{} - $\bf{}$'.format(data_name, name) )

plt.plot( actual, 'k--', alpha=0.5 )

plt.plot( yhat, 'k' )

plt.legend( ['actual', 'forecast'] )

plot_acf(resid, zero=False,

title=f'{data_name} - Autocorrelation'

)

plt.show()Notice the use of the plot_acf function from statsmodels to evaluate the residuals. The following is an example of using the plot_results function on the energy consumption data:

cols = ['yhat', 'resid', 'actual', 'Model Name']

plot_results(cols, en_results, 'Energy Consumption') The preceding code should produce two plots for each of the five models (a total of 10 plots for the energy consumption data). For each model, the function will output a line plot comparing out-of-sample data (test data) against the forecast (predicted values) and a residual autocorrelation plot.

Figure 12.4 – Hubber Regression plots

Figure 12.4 – Hubber Regression plots

Observe from the plots how the models rank and behave differently on different time series processes.

From Figure 12.4, the Hubber Regression model seems to perform well with a potential for further tuning. Later in this chapter, you will explore hyperparameter tuning in the Optimizing a forecasting model with hyperparameter tuning recipe.

Later, in the Forecasting using non-linear models with sktime recipe, you will explore more regressors (linear and non-linear) and how to deal with trend and seasonality.

from statsmodels.graphics.tsaplots import plot_acf

def plot_resultMix( cols, results, data_name ):

fig, ax = plt.subplots( figsize=(12,6) )

color_list = ['orange', 'cyan', 'red', 'magenta', 'blue']

alpha_list = [0.8, 1, 1, 0.5, 1]

ls_list = [ 'o', ':', '--', ':', '--']

for row in results[cols].iterrows():

idx, (yhat, resid, actual, name) = row # row[0] : index

# print(name, color_list[idx], idx)

if idx==0:

ax.plot( actual,

'k--', alpha=0.5,

label='actual',

)

ax.plot( yhat, color=color_list[idx], alpha=alpha_list[idx], marker=ls_list[idx],

label=name

)

else:

ax.plot( yhat, color=color_list[idx], alpha=alpha_list[idx], ls=ls_list[idx],

label=name

)

plt.rcParams["figure.figsize"] = [12, 2] ##

plot_acf(resid, zero=False,

#title=f'{name} Residual - Autocorrelation'

title = r' $\bf{}$ Residual - Autocorrelation'.format(name)

)

ax.set_title(r'$\bf{}$'.format(data_name))

ax.autoscale(enable=True, axis='x', tight=True)### ### Align both ends

ax.legend(fancybox=True, framealpha=0)

plt.show()

cols = ['yhat', 'resid', 'actual', 'Model Name']

plot_resultMix(cols, en_results, 'Energy Consumption')  the Hubber Regression model seems to perform well with a potential for further tuning.

the Hubber Regression model seems to perform well with a potential for further tuning.

##############

plotly acf vlines

import plotly.graph_objects as go

def plotly_resultMix( cols, results, data_name, x_title='', y_title='' ):

# https://stackoverflow.com/questions/59953431/how-to-change-plotly-figure-size

layout=go.Layout(width=1000, height=600,

title=f'{data_name}',

title_x=0.5, title_y=0.9,

#xaxis=dict(title=x_title, color='green', tickangle=30),

#yaxis=dict(title=y_title, color='blue')

)

fig = go.Figure(layout=layout)

color_list = ['orange', 'red', 'blue', 'magenta', 'black']

#alpha_list = [0.8, 1, 1, 0.5, 1]

lws = [ 6, 2, 4, 2, 2]

ls_list =[ 'dot', 'dot','dash', 'dot', None]

for row in results[cols].iterrows():

idx, (yhat, resid, actual, name) = row # row[0] : index

#print(name, color_list[idx], idx)

if idx == 0 :

fig.add_trace( go.Scatter( name='actual',

mode ='lines',

line=dict(shape = 'linear', color = 'firebrick', #'rgb(100, 10, 100)',

width = 1,

dash = 'dash'

),

y=actual,

)

)

fig.add_trace( go.Scatter( name=name,

mode ='lines',

line=dict(shape = 'linear', color = color_list[idx], #'rgb(100, 10, 100)',

width = lws[idx],

dash = ls_list[idx],

),

y=yhat,

)

)

fig.update_xaxes(showgrid=False, ticklabelmode="period", gridcolor='grey', griddash='dash')

fig.update_yaxes(showgrid=False, ticklabelmode="instant", gridcolor='grey', griddash='dash')

fig.update_layout( title_font_family="Times New Roman", title_font_size=30,

hoverlabel=dict( font_color='white',

#bgcolor="black"

),

legend=dict( x=0.83,y=1,

bgcolor='rgba(0,0,0,0)',#None

),

plot_bgcolor='white',#"LightSteelBlue",#'rgba(0,0,0,0)',

#paper_bgcolor="LightSteelBlue",

)

fig.show()

cols = ['yhat', 'resid', 'actual', 'Model Name']

plotly_resultMix( cols,

results=en_results,

data_name='Energy Consumption',

# x_title='Date', y_title='#Passenger'

)import plotly.graph_objects as go

from statsmodels.tsa.stattools import acf

from plotly.subplots import make_subplots

def plotly_resultMix( cols, results, data_name, x_title='', y_title='' ):

# https://stackoverflow.com/questions/59953431/how-to-change-plotly-figure-size

layout=go.Layout(width=1000, height=600,

title=f'{data_name}',

title_x=0.5, title_y=0.9,

#xaxis=dict(title=x_title, color='green', tickangle=30),

#yaxis=dict(title=y_title, color='blue')

)

fig = go.Figure(layout=layout)

color_list = ['orange', 'red', 'blue', 'magenta', 'black']

#alpha_list = [0.8, 1, 1, 0.5, 1]

lws = [ 6, 2, 4, 2, 2]

ls_list =[ 'dot', 'dot','dash', 'dot', None]

##########

# https://plotly.com/python-api-reference/generated/plotly.subplots.make_subplots.html

acf_plots = make_subplots(rows=len(en_results), cols=1,

shared_xaxes=True,

vertical_spacing=0.05,

subplot_titles=results['Model Name'],

#column_widths=[1000]*len(results),

row_heights=[1000]*len(results),

)

##########

for row in results[cols].iterrows():

idx, (yhat, resid, actual, name) = row # row[0] : index

#print(name, color_list[idx], idx)

if idx == 0 :

fig.add_trace( go.Scatter( name='actual',

mode ='lines',

line=dict(shape = 'linear', color = 'firebrick', #'rgb(100, 10, 100)',

width = 1,

dash = 'dash'

),

y=actual,

)

)

fig.add_trace( go.Scatter( name=name,

mode ='lines',

line=dict(shape = 'linear', color = color_list[idx], #'rgb(100, 10, 100)',

width = lws[idx],

dash = ls_list[idx],

),

y=yhat,

)

)

##########

acf_x, confint_interval, _, _ =acf( resid, nlags=18, alpha=0.05,

fft=False, qstat=True,

#bartlett_confint=True,

#adjusted=False,

missing='none',

)

#lags=np.array(range(18))

lags = np.arange(start=0, stop=acf_x.shape[0], dtype='float')

#.scatter(x=xlabel, y=acf_value, c='red')

acf_plots.add_trace( go.Scatter( name=name,

mode='markers',

x=lags[1:],

y=acf_x[1:],

line=dict(color=color_list[idx])

),

row=idx+1, col=1,

)

acf_plots.add_hline( y=0,

line_width=1,

row=idx+1, col=1,

)

# plot multiple verical lines

# print( np.repeat( np.array( lags[1:] ), 2 ).reshape(-1,2) )

xx = np.repeat( np.array( lags[1:] ), 2 ).reshape(-1,2)

# print( np.concatenate( ( np.zeros( len(acf_x[1:]) )[:, np.newaxis], np.array(acf_x[1:])[:, np.newaxis] ),

# axis=1

# )

# )

yy = np.concatenate( ( np.zeros( len(acf_x[1:]) )[:, np.newaxis],

np.array(acf_x[1:])[:, np.newaxis]

),

axis=1

)

for i in range(len(xx)):

acf_plots.add_trace( go.Scatter( x=xx[i], y=yy[i],

mode='lines',

line=dict(color=color_list[idx])

),

row=idx+1, col=1,

)

lags[1]-=0.5,

lags[-1]+=0.5,

acf_plots.add_trace( go.Scatter( x=lags[1:], y=confint_interval[1:,1]- acf_x[1:],

mode='lines',

fill='tozeroy', fillcolor='rgba(13, 180, 185,0.5)',

line=dict(color='white')

),

row=idx+1, col=1,

)

acf_plots.add_trace( go.Scatter( x=lags[1:], y=confint_interval[1:,0]- acf_x[1:],

mode='lines',

fill='tozeroy', fillcolor='rgba(13, 180, 185,0.5)',

line=dict(color='white')

),

row=idx+1, col=1,

)

##########

fig.update_xaxes(showgrid=False, ticklabelmode="period", gridcolor='grey', griddash='dash')

fig.update_yaxes(showgrid=False, ticklabelmode="instant", gridcolor='grey', griddash='dash')

fig.update_layout( title_font_family="Times New Roman", title_font_size=30,

hoverlabel=dict( font_color='white',

#bgcolor="black"

),

legend=dict( x=0.83,y=1,

bgcolor='rgba(0,0,0,0)',#None

),

plot_bgcolor='white',#"LightSteelBlue",#'rgba(0,0,0,0)',

#paper_bgcolor="LightSteelBlue",

)

fig.show()

##########

acf_plots.update_layout( title_font_family="Times New Roman", title_font_size=30,

hoverlabel=dict( font_color='white',

#bgcolor="black"

),

showlegend=False,

plot_bgcolor='white',#"LightSteelBlue",#'rgba(0,0,0,0)',

height=1000, width=1000,

)

acf_plots.show()

##########

cols = ['yhat', 'resid', 'actual', 'Model Name']

plotly_resultMix( cols,

results=en_results,

data_name='Energy Consumption',

# x_title='Date', y_title='#Passenger'

)You can inspect the coefficients to observe the effects, as shown in the following code block:

cols = ['Model Name', 'Model']

en_models = en_results.iloc[0:4][cols] # [0:4] : exclude Huber Regression

for row in en_models.iterrows():

print( row[1][0] ) # Model Name

print( row[1][1].coef_ ) # .intercept_ :[0.0, 0.0, 0.0, 0.0]

The energy consumption data has 10 features.

- Observe how the Linear Regression model estimated higher coefficient values (weights) for the last two features.

- On the other hand, the ElasticNet Regression model(Ridge(L2 norm) +Lasso(L1 norm)) eliminated the first 8 features by estimating the coefficients at zero.

- The Ridge Regression model produced similar results as the Linear Regression model, reducing the weights of the least significant features (thus shrinking their effect)减少了最不重要特征的权重(从而缩小了它们的影响).

- Lastly, the Lasso Regression model(L1 norm) only deemed the last feature as significant by eliminating the rest (with zero coefficients).

Recall that these features were engineered and represent lags or the past values of the dependent variable (y). The coefficients from the four models suggest that the 10th feature (or lag, or the last value) is alone significant in making a future prediction.

train_en_s[:11]![]()

air_results[cols].sort_values('MASE'):

![]()

cols = ['yhat', 'resid', 'actual', 'Model Name']

plot_resultMix(cols, air_results, 'Air Passengers')

dw_results[cols].sort_values('MASE')

cols = ['yhat', 'resid', 'actual', 'Model Name']

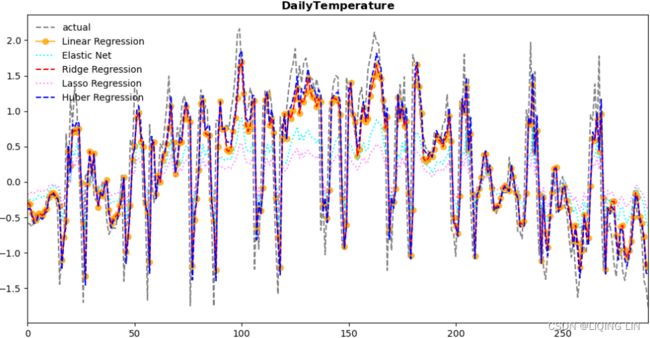

plot_resultMix(cols, dw_results, 'Daily Temperature')Regression Coefficients and Feature Selection

Let's examine this concept and see whether one feature is sufficient for the energy consumption dataset. Retrain the models using only the 10th feature, as in the following:

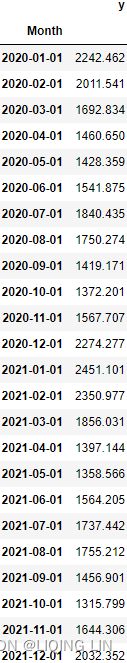

energy_10_1[-11:]en_10 = energy_10_1[ ['y', 'x_10'] ] # at time t, y=x_t, X=x_t-1

train_en10, test_en10 = split_data( en_10, test_split=0.10 )

scaler_en10 = Standardize()

train_en10_s, test_en10_s = scaler_en10.fit_transform( train_en10, test_en10 )

train_en10_s# regressors = { 'Linear Regression': LinearRegression(fit_intercept=False),

# # alpha:Constant that multiplies the penalty terms. and l1_ratio=0.5 Ridge + Lasso

# 'Elastic Net': ElasticNet(alpha=0.5, fit_intercept=False), # False, the data is assumed to be already centered

# 'Ridge Regression': Ridge(alpha=0.5, fit_intercept=False),

# 'Lasso Regression': Lasso(alpha=0.5, fit_intercept=False),

# 'Huber Regression': HuberRegressor(fit_intercept=False)

# }

en_10_results = train_different_models(train_en10_s, test_en10_s, regressors)

pd.DataFrame(en_10_results)cols = ['Model Name', 'RMSE', 'MAPE', 'MASE']

en_10_results = pd.DataFrame(en_10_results)

en_10_results[cols].sort_values('MASE')different number of lag variables(as features) but with the same number of instances

![]()

If you rank the models by the scores and plot the results, you will notice that the performance from using just one feature (![]() ==

==![]() ) produces similar results obtained from using all 10 features (

) produces similar results obtained from using all 10 features (![]() ,

, ![]() , … ,

, … , ![]() )==(

)==(![]() ,

, ![]() , … ,

, … , ![]() )

)

and you also notice the impact to the performance from different regularization terms used.

#########

en_1_1 = create_lagXs_y( en_cp, 1 )

en_1_1 ![]()

en_1 = energy_1_1[ ['y', 'x_1'] ] # at time t, y=x_t, X=x_t-1

train_en1, test_en1 = split_data( en_1, test_split=0.10 )

scaler_en1 = Standardize()

train_en1_s, test_en1_s = scaler_en1.fit_transform( train_en1, test_en1 )

en_1_results = train_different_models(train_en1_s, test_en1_s, regressors)

cols = ['Model Name', 'RMSE', 'MAPE', 'MASE']

en_1_results = pd.DataFrame(en_1_results)

en_1_results[cols].sort_values('MASE')different number of instances but with the same number of lag variables(as features)

train_en1_stest_en1_s.shape, test_en10_s.shape ![]()

#########

cols = ['yhat', 'resid', 'actual', 'Model Name']

plot_resultMix(cols, en_10_results, 'Energy Consumption') lag_window = 10 is better than lag_window = 1 or en_1_1[ ['y', 'x_1'] ] Proved!

To learn more about the different regression models available in the scikit-learn library, visit the main regression documentation here: https://scikit-learn.org/stable/supervised_learning.html.

To learn more about how different ML algorithms for time series forecasting compare, you can reference the following research paper:

Ahmed, Nesreen K., Amir F. Atiya, Neamat El Gayar, and Hisham El-Shishiny.

An Empirical Comparison of Machine Learning Models for Time Series Forecasting.

Econometric Reviews 29, no. 5–6 (August 30, 2010): 594–621.

https: //doi.org/10.1080/07474938.2010.481556 .

cols = ['Model Name', 'Model']

en_models = en_10_results.iloc[0:4][cols] # [0:4] : exclude Huber Regression

for row in en_models.iterrows():

print(row[1][0]) # Model Name

print(row[1][1].coef_) # .intercept_ :[0.0, 0.0, 0.0, 0.0] In the next recipe, you will explore multi-step forecasting techniques.

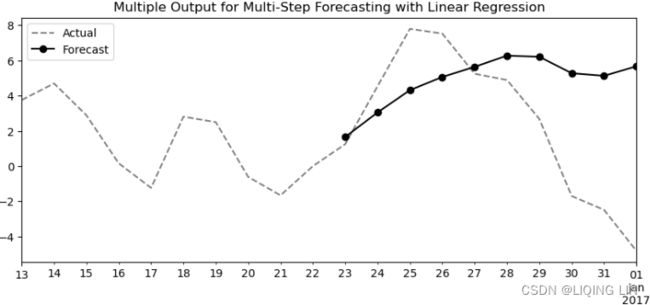

Multi-step forecasting using linear regression models with scikit-learn

In the One-step forecasting using linear regression models with scikit-learn recipe, you

implemented a one-step forecast; you provide a sequence of values for the past 10 periods

(![]() ,

, ![]() , … ,

, … , ![]() )==(

)==(![]() ,

, ![]() , … ,

, … , ![]() ) and the linear model will forecast the next period (

) and the linear model will forecast the next period (![]() ), which is referred to as

), which is referred to as ![]() . This is called one-step forecasting.

. This is called one-step forecasting.

For example, in the case of energy consumption, to get a forecast for December 2021 you need to provide data for the past 10 months (February to November). This can be reasonable for monthly data, or quarterly data, but what about daily or hourly? In the daily temperature data, the current setup means you need to provide temperature values for the past 10 days to obtain a one-day forecast (just one day ahead). This may not be an efficient approach since you have to wait until the next day to observe a new value to feed to the model to get another one-day forecast.

What if you want to predict more than one future step? For example, you want three months into the future ( ![]() ,

, ![]() ,

, ![]() )==(

)==(![]() ,

, ![]() ,

, ![]() ) based on a sequence of 10 months (

) based on a sequence of 10 months (![]() ,

, ![]() , … ,

, … , ![]() )==(

)==(![]() ,

, ![]() , … ,

, … , ![]() ). This concept is called a multi-step forecast. In the Preparing time series data for supervised learning recipe, we referenced the paper Machine Learning Strategies for Time Series Forecasting for preparing time series data for supervised ML. The paper also discusses four strategies for multi-step forecasting, such as the Recursive strategy, the Direct strategy, DirRec (Direct-Recursive) strategy, and Multiple Output strategies.

). This concept is called a multi-step forecast. In the Preparing time series data for supervised learning recipe, we referenced the paper Machine Learning Strategies for Time Series Forecasting for preparing time series data for supervised ML. The paper also discusses four strategies for multi-step forecasting, such as the Recursive strategy, the Direct strategy, DirRec (Direct-Recursive) strategy, and Multiple Output strategies.

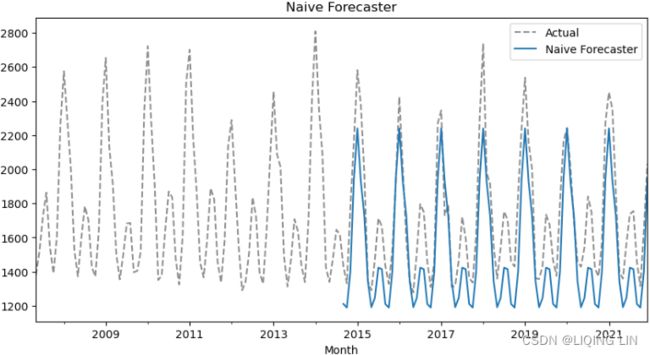

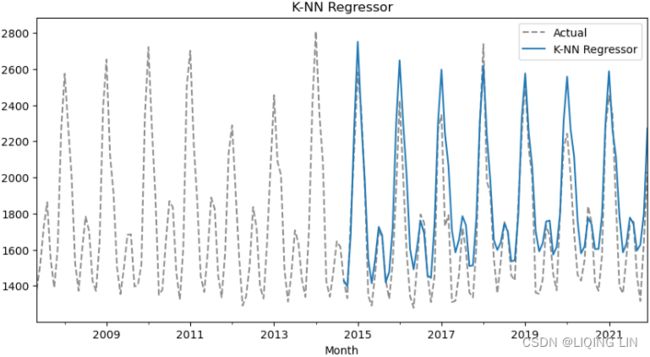

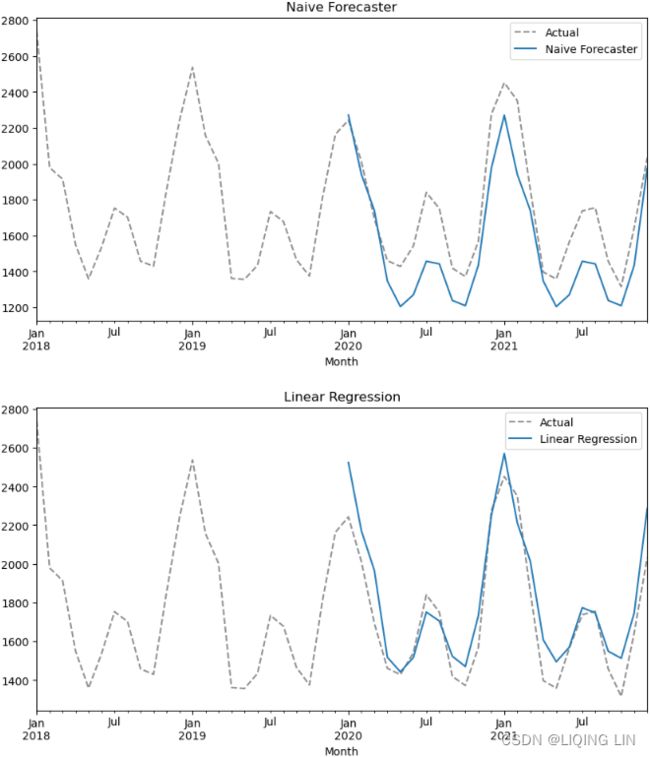

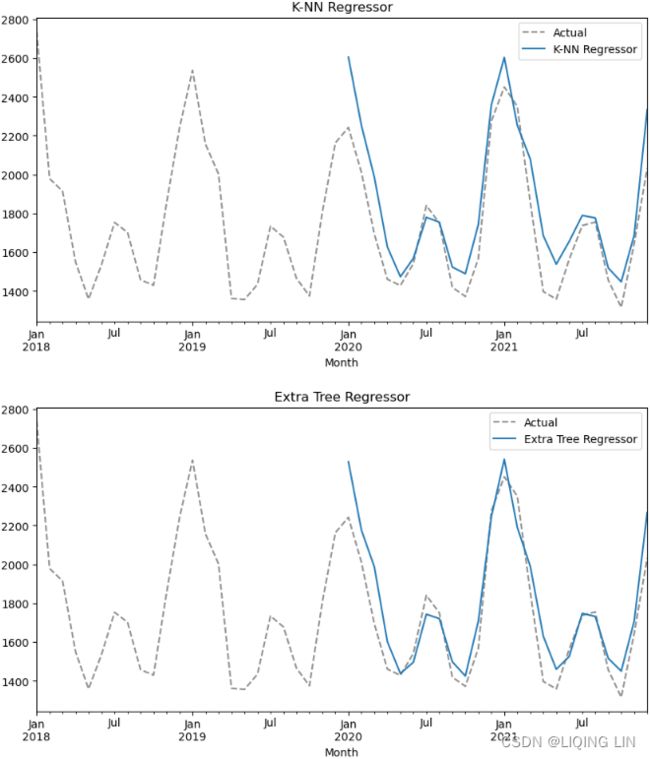

In this recipe, you will implement a Recursive forecasting strategy. This will help you gain an idea of what a multi-step forecasting is all about. This is useful when you want to forecast further into the future beyond the out-of-sample (test) data that you have at hand.

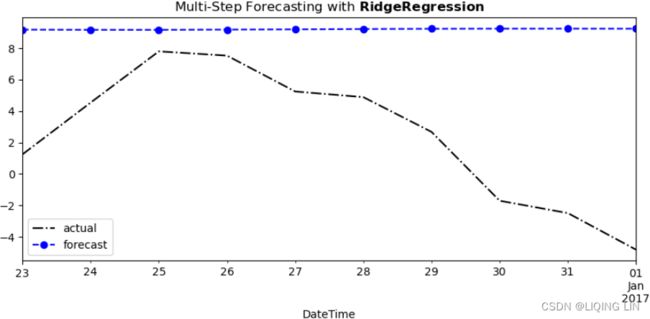

The following illustrates the idea behind the recursive strategy. It is still based on one-step forecasts that are reused (recursively) to make the next one-step prediction, and the process continues (think of a loop) until you get all the future steps, known as future horizons, produced.

Figure 12.5 – Sliding window (five periods) with multi-step forecasts of daily temperature

Figure 12.5 – Sliding window (five periods) with multi-step forecasts of daily temperature

At each step in Figure 12.5, you are still performing a one-step forecast.

- The gray boxes represent the actual observed values, and

- the black boxes represent estimated or forecasted values.

If you want to forecast into the future, let's say five periods ahead, and your actual observed data ends on 2017-01-01, you will need to provide five past periods from 2016-12-28 to 2017-01-01 to get a one-step forecast for 2017-01-02. The estimated value on 2017-01-02 is used as an input to estimate the next one-step to forecast for 2017-01-03. This recursive behavior continues until all five future steps (horizons) are estimated.

In this recipe, you will be using the models obtained from the previous One-step forecasting using linear regression models with scikit-learn recipe. A recursive multi-step strategy is used in the forecasting (prediction) phase:

1. From the previous recipe, you should have three DataFrames ( air_results, dw_results, and en_results ) that contain the results from the trained models. The following steps will use dw_results for demonstration (daily weather), you should be able to apply the same process on the remaining DataFrames (as demonstrated in the accompanying Jupyter Notebook).

air_results = train_different_models(train_air_s, test_air_s, regressors)

en_results = train_different_models(train_en_s, test_en_s, regressors)

# train_dw, test_dw = split_data( daily_temp_10_1, test_split=0.10 )

# scaler_dw = Standardize()

# train_dw_s, test_dw_s = scaler_dw.fit_transform( train_dw, test_dw )

dw_results = train_different_models(train_dw_s, test_dw_s, regressors)

air_results = pd.DataFrame(air_results)

en_results = pd.DataFrame(en_results)

dw_results = pd.DataFrame(dw_results)

dw_results

Extract the model and the model's name. Recall that there are five trained models:

models_dw = dw_results[['Model Name', 'Model']]

models_dw2. Create the multi_step_forecast function, which consists of a for loop that makes a one-step future forecast (estimate) using the model's predict method. On each iteration or step, the estimated value is used as input to produce the next one-step estimate for another future step:

def multi_step_forecast( input_window, model, steps=10 ):

forecast = []

for i in range( steps ):

one_step_pred = model.predict( np.array(input_window).reshape(1,-1) )[0]

forecast.append( one_step_pred )